Biomimetic Approaches for Human Arm Motion Generation: Literature Review and Future Directions

Abstract

1. Introduction

2. Classification of Biomimetics Literature

2.1. Outline of the Literature Review

2.2. Data Collection Methods

2.3. Robot Manipulators and Simulation Software

3. Research Framework

3.1. Study Methodology

- A vital attribute of these robots is their high number of degrees of freedom (DOFs). The resulting redundancy provides flexibility, allowing the robot to compensate quickly for lost control and to adapt to new dynamics. However, in cognitive robotics, controlling multiple DOFs simultaneously in a predictive, purposeful manner can be computationally challenging.

- Generating an optimal end-effector trajectory can avoid collisions between the robot's own body and objects in the environment.

- Optimizing various performance criteria can generate joint-angle trajectories that mimic human movements.
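To make the role of redundancy concrete, the following is a minimal sketch of one common way to exploit it: a gradient projection step that tracks an end-effector target while a secondary criterion acts only in the null space of the Jacobian. Everything specific here is an assumption for illustration and is not taken from the reviewed studies: the planar three-joint arm, the link lengths in `LINKS`, the gains, and the secondary criterion (staying close to a hypothetical preferred posture `q_rest`, used as a stand-in for a human-likeness measure).

```python
import math

LINKS = (0.30, 0.25, 0.20)  # hypothetical link lengths in metres


def forward(q):
    """End-effector (x, y) of a planar arm with three revolute joints."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y


def jacobian(q):
    """2x3 Jacobian of forward() with respect to the joint angles."""
    cum, a = [], 0.0
    for qi in q:
        a += qi
        cum.append(a)
    J = [[0.0] * 3, [0.0] * 3]
    for j in range(3):
        # Joint j moves every link from j to the tip.
        J[0][j] = -sum(LINKS[k] * math.sin(cum[k]) for k in range(j, 3))
        J[1][j] = sum(LINKS[k] * math.cos(cum[k]) for k in range(j, 3))
    return J


def pinv(J, damping=1e-6):
    """Damped right pseudo-inverse J^T (J J^T + damping I)^-1, size 3x2."""
    a = sum(v * v for v in J[0]) + damping
    b = sum(J[0][k] * J[1][k] for k in range(3))
    d = sum(v * v for v in J[1]) + damping
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    return [[J[0][i] * inv[0][c] + J[1][i] * inv[1][c] for c in range(2)]
            for i in range(3)]


def step(q, target, q_rest, gain=0.5, k_null=0.2):
    """One gradient projection update: task motion plus null-space motion."""
    x, y = forward(q)
    err = (target[0] - x, target[1] - y)
    J = jacobian(q)
    Jp = pinv(J)
    # Primary task: move the end-effector toward the target.
    dq_task = [gain * (Jp[i][0] * err[0] + Jp[i][1] * err[1]) for i in range(3)]
    # Secondary criterion (assumed): descend 0.5 * ||q - q_rest||^2.
    z = [k_null * (q_rest[i] - q[i]) for i in range(3)]
    # Project z into the null space: (I - J^+ J) z.
    Jz = [sum(J[r][k] * z[k] for k in range(3)) for r in range(2)]
    dq_null = [z[i] - (Jp[i][0] * Jz[0] + Jp[i][1] * Jz[1]) for i in range(3)]
    return [q[i] + dq_task[i] + dq_null[i] for i in range(3)]


def solve(target, q0, q_rest, iters=200):
    """Iterate step() from q0; returns the final joint angles."""
    q = list(q0)
    for _ in range(iters):
        q = step(q, target, q_rest)
    return q


if __name__ == "__main__":
    target = (0.4, 0.3)
    for rest in ([0.0, 0.8, 0.8], [-0.4, 1.2, 1.0]):
        q = solve(target, [0.2, 0.9, 0.9], rest)
        print([round(v, 3) for v in q], forward(q))
```

Running the sketch with two different preferred postures should give two different joint configurations that place the end-effector at the same target, which is the flexibility the first bullet describes. Replacing the posture term with another differentiable criterion changes which of the redundant solutions is selected without changing the end-effector task.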

3.2. Robot Manipulators

3.3. Test Environments

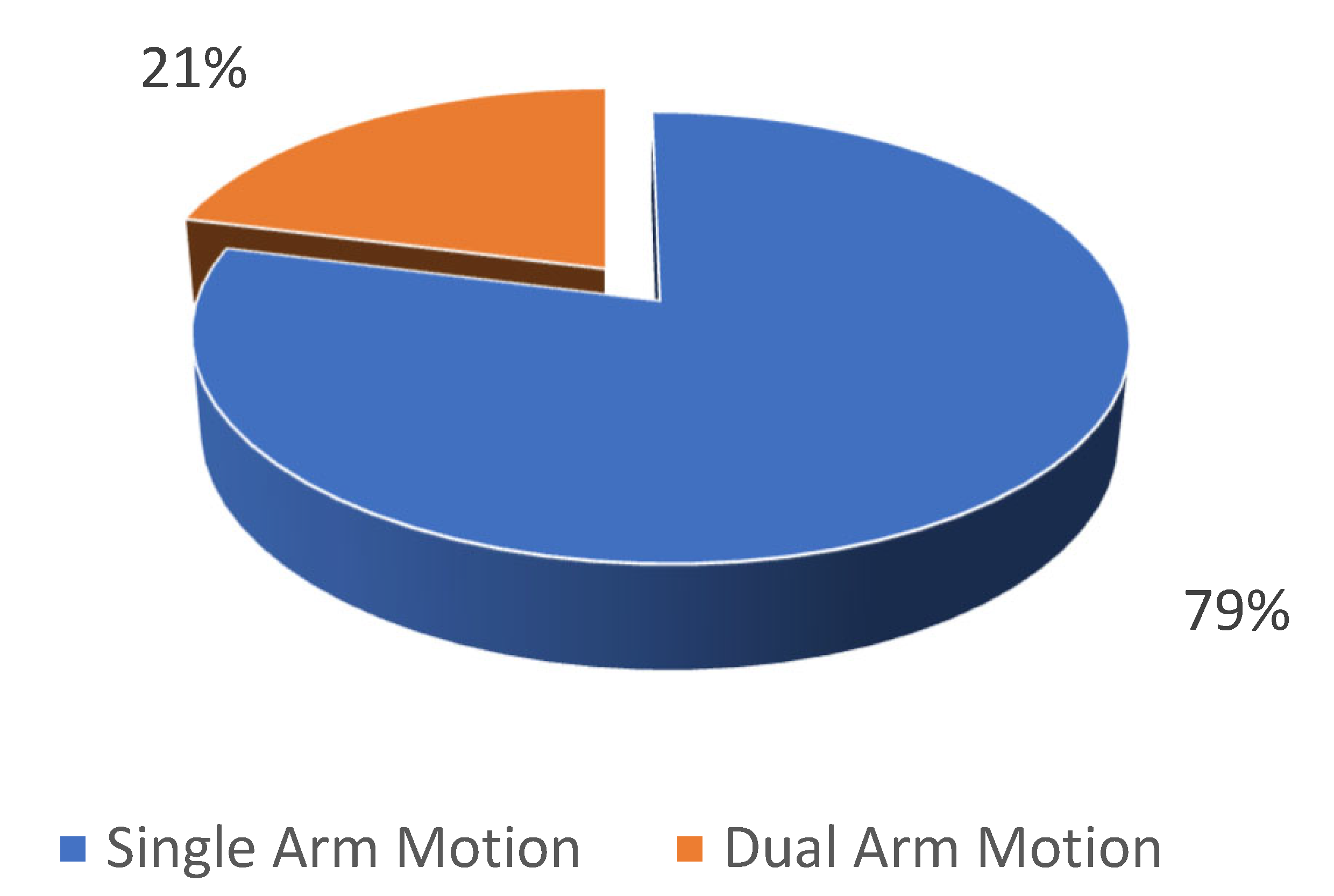

3.4. Single/Dual-Arm Motion

4. Redundancy Resolution Methods

4.1. Closed-Form Solutions

4.2. Gradient Projection Methods

4.3. Weighted Least-Norm Solution

4.4. Constrained Optimization

4.5. Machine Learning, Neural Networks, and Reinforcement Learning

5. Discussion and Future Works

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Technology | Advantages | Disadvantages |
|---|---|---|
| Optical tracking system using passive markers | Precision < 1 mm. Reflective markers without any wires or battery packs. Reliable and flexible sensor arrangement. | Occlusion. Post-processing required. Not for outdoor use. |
| Optical tracking system using active markers | Precision < 1 mm. Higher precision compared to passive markers. | Occlusion. Post-processing required. Presence of wires and battery packs makes it cumbersome. Not for outdoor use. |
| Optical tracking using a markerless vision-based system | Wireless. Moderate reliability. Can be used in indoor and outdoor setups. No markers, wires, or battery packs. Contextual information. | Occlusion. High noise. Sensitive to lighting. |
| Inertial sensors tracking system | Wireless. Precision < 1 mm with fast calibration. Can be used in indoor and outdoor setups. | Sensitive to magnetic field. Post-processing required. High noise. |
| Magnetic marker tracking system | Wireless. Moderate reliability. Can be used in indoor and outdoor setups. | Sensitive to magnetic field. Post-processing required. High noise. Limited range. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Trivedi, U.; Menychtas, D.; Alqasemi, R.; Dubey, R. Biomimetic Approaches for Human Arm Motion Generation: Literature Review and Future Directions. Sensors 2023, 23, 3912. https://doi.org/10.3390/s23083912
