Sound Source Localization Method Based on Time Reversal Operator Decomposition in Reverberant Environments

Abstract

1. Introduction

2. SSL Model in Reverberant Environments

2.1. DORT Process

2.2. Proposed ISTR-MUSIC

3. Simulations and Analysis

1. The region of interest is divided into n grids with grid spacing dd, and the sound source is assumed to lie at the center of a grid;

2. The microphone positions are arranged, and the sound pressure Q received by the array from each grid point is calculated;

3. The signal y received by the array is converted to the frequency domain to obtain Y, phase-conjugated, and then sent back into the medium. The signal received by the virtual array is Z(ω);

4. The covariance matrix RX of Z(ω) is calculated, and the eigenvectors EN spanning the noise subspace are obtained by singular value decomposition;

5. The final spatial spectrum is calculated according to Equation (21), and the position of its maximum value is taken as the position of the sound source.
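The steps above can be illustrated with a minimal sketch. It is not the ISTR-MUSIC method or Equation (21), neither of which is reproduced in this section. It is a conventional MUSIC pseudo-spectrum, and everything concrete in it is an assumption made for illustration: a free-field Green's function as the propagation model, a single source, randomly placed microphones, a 1 kHz tone, and the helper names `transfer_matrix`, `music_spectrum` and `demo`. The phase-conjugation and re-emission of step 3 is omitted, so the snapshots `Y` are used directly in place of Z(ω).

```python
import numpy as np


def transfer_matrix(mics, grid, k):
    """Free-field Green's functions from every grid point to every microphone.

    Returns Q with shape (n_mics, n_grid). Assumed propagation model only;
    a reverberant room would need a room transfer function instead.
    """
    r = np.linalg.norm(mics[:, None, :] - grid[None, :, :], axis=2)
    return np.exp(-1j * k * r) / (4.0 * np.pi * r)


def music_spectrum(Y, Q, n_sources=1):
    """Conventional MUSIC pseudo-spectrum for snapshots Y (n_mics, n_snapshots)."""
    # Sample covariance matrix of the frequency-domain snapshots.
    R = Y @ Y.conj().T / Y.shape[1]
    # SVD of the Hermitian covariance; columns of U are sorted by
    # decreasing singular value, so the trailing columns span the noise subspace.
    U, _, _ = np.linalg.svd(R)
    E_n = U[:, n_sources:]
    # Normalize each candidate steering vector, then project onto the noise subspace.
    steering = Q / np.linalg.norm(Q, axis=0, keepdims=True)
    proj = np.linalg.norm(E_n.conj().T @ steering, axis=0) ** 2
    # A small projection means the candidate is nearly orthogonal to the noise subspace.
    return 1.0 / (proj + 1e-12)


def demo(true_index=27, noise_std=1e-4, seed=0):
    """Simulate one source on a grid and return the index MUSIC picks."""
    rng = np.random.default_rng(seed)
    k = 2.0 * np.pi * 1000.0 / 343.0  # wavenumber at 1 kHz, c = 343 m/s (assumed)

    # Step 1: 2 m x 2 m area, 0.25 m spacing, candidates at grid-cell centers, z = 1 m.
    spacing = 0.25
    centers = np.arange(spacing / 2, 2.0, spacing)
    gx, gy = np.meshgrid(centers, centers, indexing="ij")
    grid = np.column_stack([gx.ravel(), gy.ravel(), np.ones(gx.size)])

    # Step 2: eight microphones in the plane z = 0 and the grid-to-array responses Q.
    mics = np.column_stack([rng.uniform(0.0, 2.0, (8, 2)), np.zeros(8)])
    Q = transfer_matrix(mics, grid, k)

    # Simulated frequency-domain snapshots: one source plus weak sensor noise.
    n_snap = 200
    s = rng.standard_normal(n_snap) + 1j * rng.standard_normal(n_snap)
    noise = noise_std * (
        rng.standard_normal((8, n_snap)) + 1j * rng.standard_normal((8, n_snap))
    )
    Y = np.outer(Q[:, true_index], s) + noise

    # Steps 4 and 5: noise subspace, spatial spectrum, location of the maximum.
    spectrum = music_spectrum(Y, Q)
    return int(np.argmax(spectrum)), grid


if __name__ == "__main__":
    estimate, grid = demo()
    print("estimated grid index:", estimate, "position:", grid[estimate])
```

The candidate steering vectors are normalized so that grid points closer to the array do not dominate the spectrum purely through their larger amplitude; this is a design choice of the sketch, not something stated in the text.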

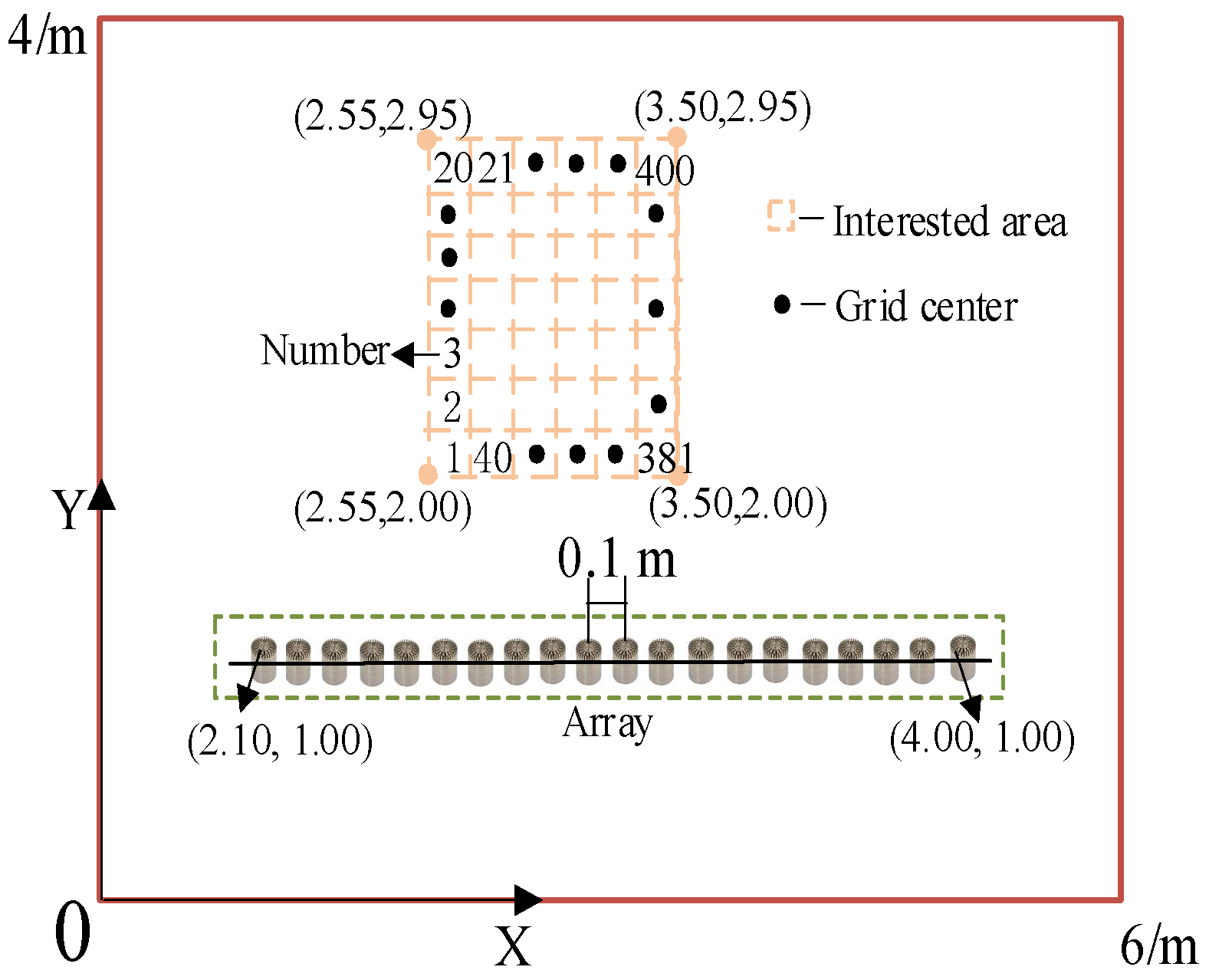

3.1. Simulation Condition and Evaluation Index

3.1.1. Simulation Condition

3.1.2. Evaluation Index (EI)

- SSL error, represented by e. When the localization result is accurate, the error is 0. Otherwise, with la the position obtained by the SSL algorithm and ls the actual position, the error is the distance between them, e = ‖la − ls‖. It intuitively reflects the spatial resolution of the SSL algorithm. The smaller e is, the better the algorithm performs.

- Accuracy, represented by A. The total number of experiments is t, and the number of accurate results is c; thus, A = c/t × 100%. SSL accuracy serves as an indicator of the reliability of SSL results: higher accuracy implies more reliable results.

- Root mean square error (RMSE). It is an important index for measuring localization accuracy, representing the average deviation between observed values and true values. A lower RMSE indicates better performance, since the estimates lie closer to the true values on average.

- Ratio of peak values, represented by P. The peak value of the correlation coefficient is p1 and the second peak value is p2, so P = p2/p1. This index reflects the correlation between observed values and true values. A smaller value indicates better performance of the algorithm.
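The four indices above can be written down as a short sketch. The function names are hypothetical, positions are assumed to be coordinate tuples in metres, and the RMSE formula (root of the mean squared Euclidean error over trials) is the usual definition rather than one stated explicitly in this section. `peak_ratio` assumes the caller has already extracted the peak values from the spectrum.

```python
import math


def ssl_error(estimated, actual):
    """Distance e between the estimated position la and the actual position ls."""
    return math.dist(estimated, actual)


def accuracy(n_correct, n_total):
    """Accuracy A = c / t * 100, in percent."""
    return n_correct / n_total * 100.0


def rmse(estimated_positions, actual_positions):
    """Root mean square of the per-trial localization errors (assumed definition)."""
    squared = [
        ssl_error(est, act) ** 2
        for est, act in zip(estimated_positions, actual_positions)
    ]
    return math.sqrt(sum(squared) / len(squared))


def peak_ratio(peaks):
    """Ratio P = p2 / p1 of the second-highest peak to the highest peak."""
    ordered = sorted(peaks, reverse=True)
    return ordered[1] / ordered[0]


if __name__ == "__main__":
    print(ssl_error((0.0, 0.0), (3.0, 4.0)))
    print(accuracy(45, 50))
    print(rmse([(0.0, 0.0), (0.0, 0.0)], [(3.0, 4.0), (0.0, 0.0)]))
    print(peak_ratio([0.2, 1.0, 0.5]))
```

A value of P close to 1 means the second peak is nearly as high as the main one, so the maximum is less clearly separated from a competing location.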

3.2. Comparison with Different Methods

3.3. Studies in Different Situations

3.3.1. Different Number of Microphones

3.3.2. Different SNRs

3.3.3. Different Reverberation Time

3.3.4. Different Frequencies

3.3.5. Multiple Sound Sources

4. Real-Data Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| f/Hz | RMSE/m |
|---|---|
| 125 | 0.2953 |
| 250 | 0.2915 |
| 500 | 0.2110 |
| 1000 | 0.1173 |

| f/Hz | RMSE/m |
|---|---|
| 125 | 0.41 |
| 250 | 0.34 |
| 500 | 0.24 |
| 1000 | 0.17 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, H.; Shang, T.; Li, G.; Li, Z. Sound Source Localization Method Based on Time Reversal Operator Decomposition in Reverberant Environments. Electronics 2024, 13, 1782. https://doi.org/10.3390/electronics13091782
