Early Eye Disengagement Is Regulated by Task Complexity and Task Repetition in Visual Tracking Task

Abstract

1. Introduction

2. Methods

2.1. Apparatus

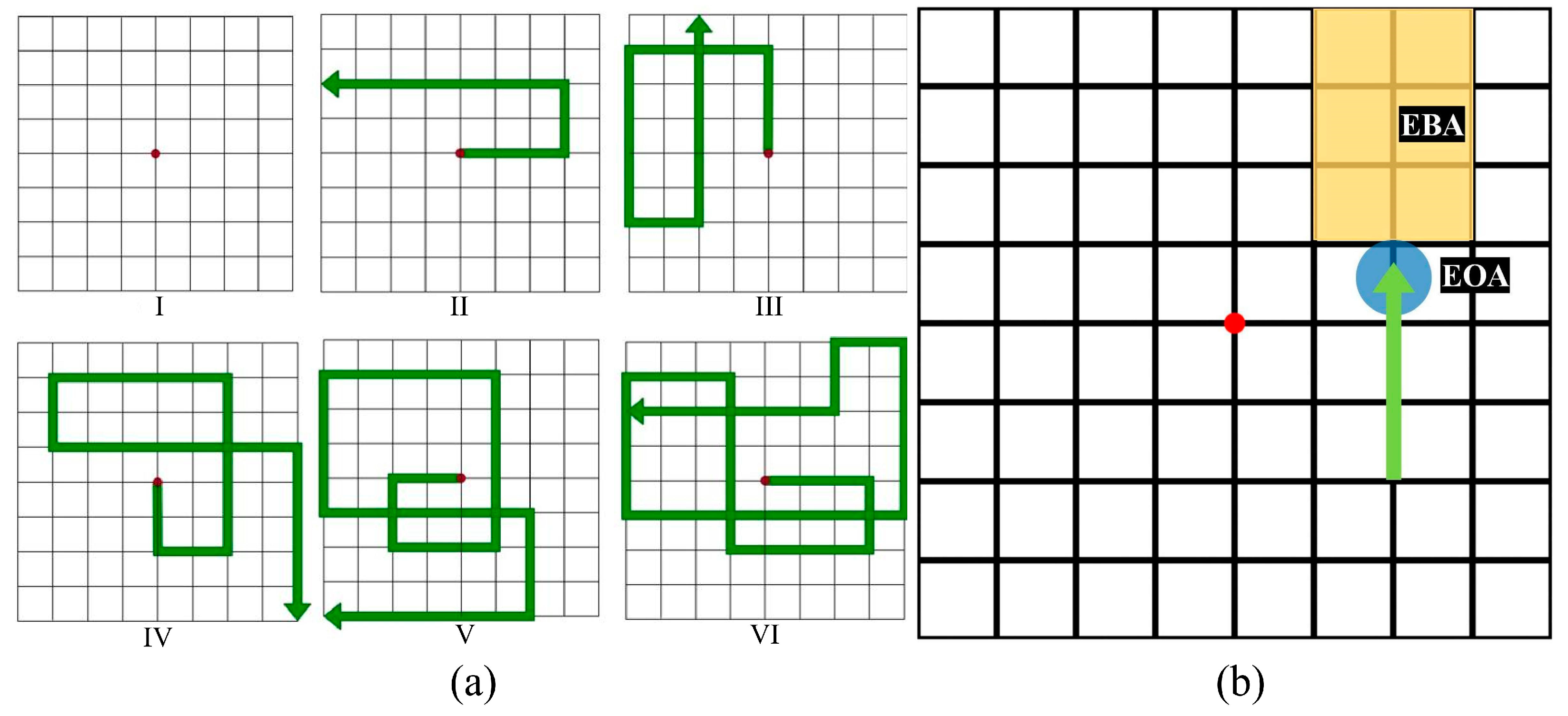

2.2. Movement Pattern Design

2.3. Tracking Task Procedure

2.4. Measures

2.4.1. Recall Accuracy Score (RAS)

2.4.2. Eye-Tracking Metrics

2.4.3. Pre-Processing of Eye-Tracking Data

2.4.4. NASA-TLX

2.5. Data Analysis

3. Results

3.1. Participants

3.2. RAS

3.3. NASA-TLX Score

3.4. Eye on Arrow

3.4.1. EOA-FixDura

3.4.2. EOA-FixFreq

3.5. Eye before Arrow

3.5.1. EBA-FixDura

3.5.2. EBA-FixFreq

3.6. Pearson Correlation Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Y.; Zhang, Z.; Aghazadeh, F.; Zheng, B. Early Eye Disengagement Is Regulated by Task Complexity and Task Repetition in Visual Tracking Task. Sensors 2024, 24, 2984. https://doi.org/10.3390/s24102984
