Integrating AI in Lipedema Management: Assessing the Efficacy of GPT-4 as a Consultation Assistant

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Prompting

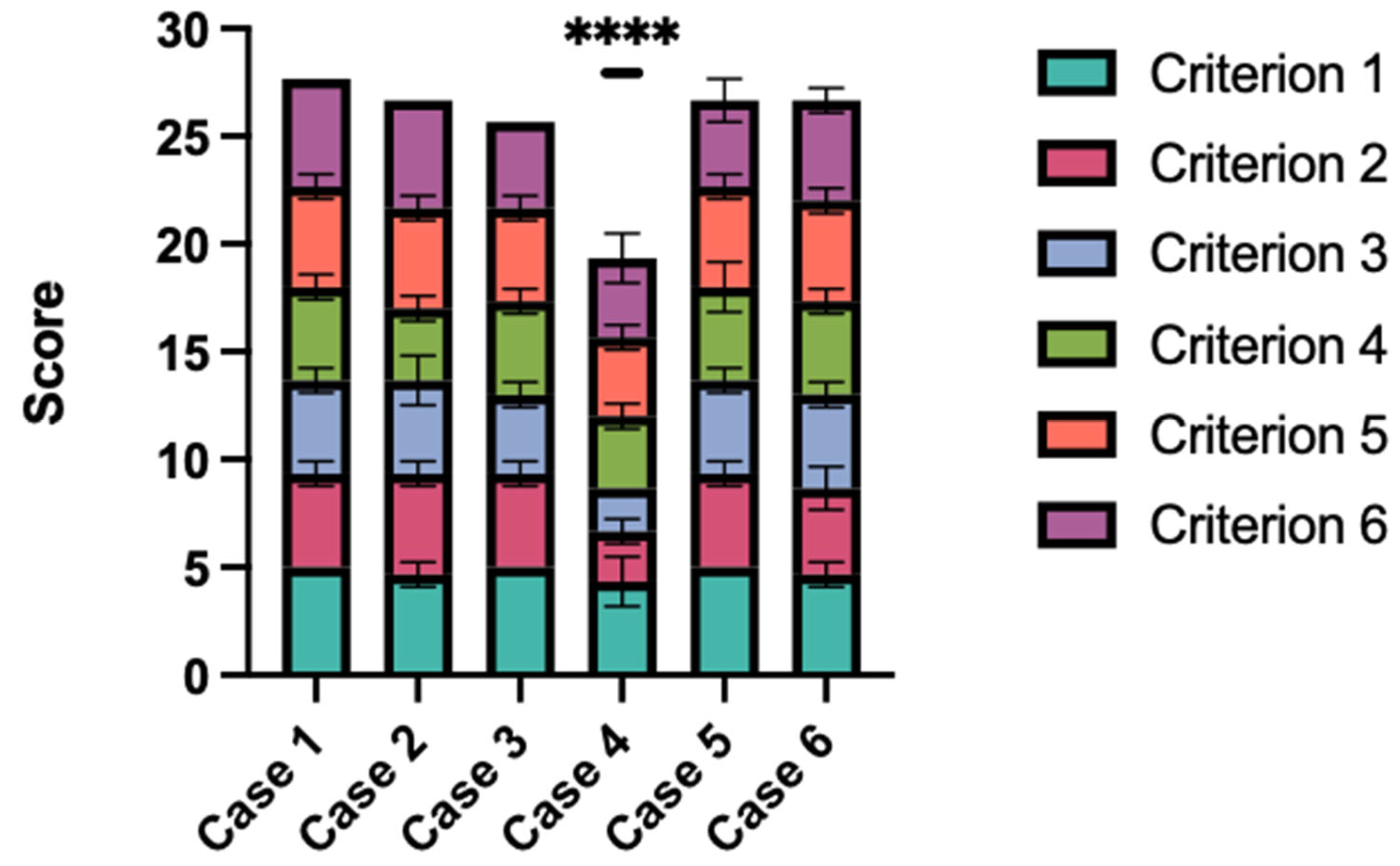

- The large language model (LLM) correctly understood and captured the issue.

- The LLM correctly stated the most likely diagnosis.

- The LLM mentioned the correct recommendations for further diagnostic steps.

- The LLM correctly assessed whether surgery is indicated.

- The LLM summarized the case well and presented it satisfactorily and clearly to the doctor.

- The LLM did the patient history just as well as I would have done it.
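This section lists the six grading criteria but does not say how the ratings were aggregated. The sketch below is only an illustration. It encodes the criteria and the five-point scale from the grading-scale table (1 = Strongly Disagree to 5 = Strongly Agree) and computes per-criterion summaries. The function name `summarize`, the one-list-of-six-scores-per-case input layout, and the choice of median, mean and share of 4-or-5 ratings are assumptions, not the authors' analysis.

```python
from statistics import mean, median

# Five-point Likert scale as given in the grading-scale table.
LIKERT = {
    1: "Strongly Disagree",
    2: "Disagree",
    3: "Neither Agree nor Disagree",
    4: "Agree",
    5: "Strongly Agree",
}

# The six grading criteria, in the order listed in the article.
CRITERIA = (
    "The LLM correctly understood and captured the issue.",
    "The LLM correctly stated the most likely diagnosis.",
    "The LLM mentioned the correct recommendations for further diagnostic steps.",
    "The LLM correctly assessed whether surgery is indicated.",
    "The LLM summarized the case well and presented it satisfactorily and clearly to the doctor.",
    "The LLM did the patient history just as well as I would have done it.",
)


def summarize(ratings: list[list[int]]) -> list[dict]:
    """Hypothetical helper: summarize rating sheets (one list of six scores per case).

    Returns, for each criterion, the median, the mean (rounded to two places)
    and the share of ratings that are 4 or 5 ("agree" or "strongly agree").
    """
    if not ratings:
        raise ValueError("at least one rating sheet is required")
    for sheet in ratings:
        if len(sheet) != len(CRITERIA):
            raise ValueError(f"expected {len(CRITERIA)} scores, got {len(sheet)}")
        for score in sheet:
            # Reject anything that is not an integer on the 1-5 scale.
            if not isinstance(score, int) or score not in LIKERT:
                raise ValueError(f"score {score!r} is not on the 1-5 scale")

    summary = []
    for i, text in enumerate(CRITERIA):
        scores = [sheet[i] for sheet in ratings]  # all ratings for criterion i
        summary.append({
            "criterion": i + 1,
            "text": text,
            "median": median(scores),
            "mean": round(mean(scores), 2),
            "agree_share": sum(s >= 4 for s in scores) / len(scores),
        })
    return summary
```

The sketch reports the median alongside the mean because Likert ratings are ordinal, and the distance between neighbouring scale points is not guaranteed to be equal.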

3. Results

4. Discussion

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Meskó, B. The Impact of Multimodal Large Language Models on Health Care’s Future. J. Med. Internet Res. 2023, 25, e52865.

- Wójcik, S.; Rulkiewicz, A.; Pruszczyk, P.; Lisik, W.; Poboży, M.; Domienik-Karłowicz, J. Beyond ChatGPT: What does GPT-4 add to healthcare? The dawn of a new era. Cardiol. J. 2023, 30, 1018–1025.

- OpenAI. GPT-4. 2023. Available online: https://openai.com/research/gpt-4 (accessed on 18 February 2024).

- Blease, C.; Torous, J. ChatGPT and mental healthcare: Balancing benefits with risks of harms. BMJ Ment. Health 2023, 26, 1–3.

- Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

- Bajaj, S.; Gandhi, D.; Nayar, D. Potential Applications and Impact of ChatGPT in Radiology. Acad. Radiol. 2023, 31, 1256–1261.

- Wilhelm, T.I.; Roos, J.; Kaczmarczyk, R. Large Language Models for Therapy Recommendations Across 3 Clinical Specialties: Comparative Study. J. Med. Internet Res. 2023, 25, e49324.

- Gupta, R.; Pande, P.; Herzog, I.; Weisberger, J.; Chao, J.; Chaiyasate, K.; Lee, E.S. Application of ChatGPT in Cosmetic Plastic Surgery: Ally or Antagonist? Aesthetic Surg. J. 2023, 43, NP587–NP590.

- Gupta, R.; Park, J.B.; Bisht, C.; Herzog, I.; Weisberger, J.; Chao, J.; Chaiyasate, K.; Lee, E.S. Expanding Cosmetic Plastic Surgery Research with ChatGPT. Aesthetic Surg. J. 2023, 43, 930–937.

- Najafali, D.; Reiche, E.; Camacho, J.M.; Morrison, S.D.; Dorafshar, A.H. Let’s Chat About Chatbots: Additional Thoughts on ChatGPT and Its Role in Plastic Surgery Along With Its Ability to Perform Systematic Reviews. Aesthetic Surg. J. 2023, 43, NP591–NP592.

- Xie, Y.; Seth, I.; Rozen, W.M.; Hunter-Smith, D.J. Evaluation of the Artificial Intelligence Chatbot on Breast Reconstruction and Its Efficacy in Surgical Research: A Case Study. Aesthetic Plast. Surg. 2023, 47, 2360–2369.

- Sun, Y.X.; Li, Z.M.; Huang, J.Z.; Yu, N.Z.; Long, X. GPT-4: The Future of Cosmetic Procedure Consultation? Aesthetic Surg. J. 2023, 43, NP670–NP672.

- Copeland-Halperin, L.R.; O’Brien, L.; Copeland, M. Evaluation of Artificial Intelligence-generated Responses to Common Plastic Surgery Questions. Plast. Reconstr. Surg. Glob. Open 2023, 11, e5226.

- Meskó, B. Prompt Engineering as an Important Emerging Skill for Medical Professionals: Tutorial. J. Med. Internet Res. 2023, 25, e50638.

- Almeida, L.C.; Farina, E.; Kuriki, P.E.A.; Abdala, N.; Kitamura, F.C. Performance of ChatGPT on the Brazilian Radiology and Diagnostic Imaging and Mammography Board Examinations. Radiol. Artif. Intell. 2024, 6, e230103.

- Hu, Y.; Chen, Q.; Du, J.; Peng, X.; Keloth, V.K.; Zuo, X.; Zhou, Y.; Li, Z.; Jiang, X.; Lu, Z.; et al. Improving large language models for clinical named entity recognition via prompt engineering. J. Am. Med. Inform. Assoc. 2024, 1–10.

- Savage, T.; Nayak, A.; Gallo, R.; Rangan, E.; Chen, J.H. Diagnostic reasoning prompts reveal the potential for large language model interpretability in medicine. NPJ Digit. Med. 2024, 7, 20.

- Leypold, T.; Schäfer, B.; Boos, A.; Beier, J.P. Can AI Think Like a Plastic Surgeon? Evaluating GPT-4’s Clinical Judgment in Reconstructive Procedures of the Upper Extremity. Plast. Reconstr. Surg. Glob. Open 2023, 11, e5471.

- Reich-Schupke, S.; Schmeller, W.; Brauer, W.J.; Cornely, M.E.; Faerber, G.; Ludwig, M.; Lulay, G.; Miller, A.; Rapprich, S.; Ure, C.; et al. S1 guidelines: Lipedema. JDDG J. Der Dtsch. Dermatol. Ges. 2017, 15, 758–767.

- Forner-Cordero, I.; Forner-Cordero, A.; Szolnoky, G. Update in the management of lipedema. Int. Angiol. 2021, 40, 345–357.

- OpenAI. Introducing GPTs. 2023. Available online: https://openai.com/blog/introducing-gpts (accessed on 18 February 2024).

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering in Large Language Models: A comprehensive review. arXiv 2023, arXiv:2310.14735.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Wu, S.; Shen, E.M.; Badrinath, C.; Ma, J.; Lakkaraju, H. Analyzing chain-of-thought prompting in large language models via gradient-based feature attributions. arXiv 2023, arXiv:2307.13339.

- Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic chain of thought prompting in large language models. arXiv 2022, arXiv:2210.03493.

- Zhou, Y.; Muresanu, A.I.; Han, Z.; Paster, K.; Pitis, S.; Chan, H.; Ba, J. Large language models are human-level prompt engineers. arXiv 2022, arXiv:2211.01910.

- Wei, J.; Bosma, M.; Zhao, V.Y.; Guu, K.; Yu, A.W.; Lester, B.; Du, N.; Dai, A.M.; Le, Q.V. Finetuned language models are zero-shot learners. arXiv 2021, arXiv:2109.01652.

- Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 2022, 35, 22199–22213.

- Duarte, F. Number of ChatGPT Users (November 2023). 2023. Available online: https://explodingtopics.com/blog/chatgpt-users (accessed on 18 February 2024).

- OpenAI. ChatGPT Can Now See, Hear, and Speak. 2023. Available online: https://openai.com/blog/chatgpt-can-now-see-hear-and-speak (accessed on 18 February 2024).

- OpenAI. DALL·E 3 is Now Available in ChatGPT Plus and Enterprise. 2023. Available online: https://openai.com/blog/dall-e-3-is-now-available-in-chatgpt-plus-and-enterprise (accessed on 18 February 2024).

- Truhn, D.; Weber, C.D.; Braun, B.J.; Bressem, K.; Kather, J.N.; Kuhl, C.; Nebelung, S. A pilot study on the efficacy of GPT-4 in providing orthopedic treatment recommendations from MRI reports. Sci. Rep. 2023, 13, 20159.

- Bužančić, I.; Belec, D.; Držaić, M.; Kummer, I.; Brkic, J.; Fialová, D.; Hadžiabdić, M.O. Clinical decision making in benzodiazepine deprescribing by HealthCare Providers vs AI-assisted approach. Br. J. Clin. Pharmacol. 2023, 90, 662–674.

- Stoneham, S.; Livesey, A.; Cooper, H.; Mitchell, C. Chat GPT vs Clinician, challenging the diagnostic capabilities of A.I. in dermatology. Clin. Exp. Dermatol. 2023, ahead of print.

- Miao, J.; Thongprayoon, C.; Suppadungsuk, S.; Valencia, O.A.G.; Cheungpasitporn, W. Integrating Retrieval-Augmented Generation with Large Language Models in Nephrology, Advancing Practical Applications. Medicina 2024, 60, 445.

- Garcia Valencia, O.A.; Suppadungsuk, S.; Thongprayoon, C.; Miao, J.; Tangpanithandee, S.; Craici, I.M.; Cheungpasitporn, W. Ethical Implications of Chatbot Utilization in Nephrology. J. Pers. Med. 2023, 13, 1363.

Grading Scale

| Criteria | Statement | 1 Strongly Disagree | 2 Disagree | 3 Neither Agree nor Disagree | 4 Agree | 5 Strongly Agree |
|---|---|---|---|---|---|---|
| Criterion 1 | The large language model (LLM) correctly understood and captured the issue. | | | | | |
| Criterion 2 | The LLM correctly stated the most likely diagnosis. | | | | | |
| Criterion 3 | The LLM mentioned the correct recommendations for further diagnostic steps. | | | | | |
| Criterion 4 | The LLM correctly assessed whether surgery is indicated. | | | | | |
| Criterion 5 | The LLM summarized the case well and presented it satisfactorily and clearly to the doctor. | | | | | |
| Criterion 6 | The LLM did the patient history just as well as I would have done it. | | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Leypold, T.; Lingens, L.F.; Beier, J.P.; Boos, A.M. Integrating AI in Lipedema Management: Assessing the Efficacy of GPT-4 as a Consultation Assistant. Life 2024, 14, 646. https://doi.org/10.3390/life14050646


Chicago/Turabian Style: Leypold, Tim, Lara F. Lingens, Justus P. Beier, and Anja M. Boos. 2024. "Integrating AI in Lipedema Management: Assessing the Efficacy of GPT-4 as a Consultation Assistant." Life 14, no. 5: 646. https://doi.org/10.3390/life14050646