Different Senses of Entropy—Implications for Education

Abstract

1. Introduction

Physics is often called an “exact science,” and for good reason. At our best, we are precise in our measurements, equations, and claims. We do not seem to be at our best, however, when we write and talk about physics to introductory students. Language usage which presents few problems when used among ourselves because of shared assumptions, is potentially misleading or uninformative when used with the uninitiated.

- What are the distinct senses of the word entropy and how are these senses of entropy related logically and historically?

- What are the educational implications of the answer to the question above regarding teaching and learning the scientific senses of entropy?

2. Methods

2.1. Data Collection

2.2. Data Analysis

2.2.1. Principled Polysemy

- The meaning criterion: Featuring a different meaning, not apparent in any other senses.

- The concept elaboration criterion: Featuring unique or highly distinct patterns of language use across contexts. Patterns can relate to modifications of words by an adjective, e.g., “a short time” or typical verb phrases, e.g., “The time sped by”.

- The grammatical criterion: Featuring unique grammatical constructions, e.g., distinguishing between ‘time’ as a count noun, mass noun and proper noun.

- Earliest attested meaning (originating sense).

- Predominance in the semantic network, in the sense of type-frequency.

- Predictability regarding other senses.

- Lived human experience, i.e., experiences at the phenomenological level.

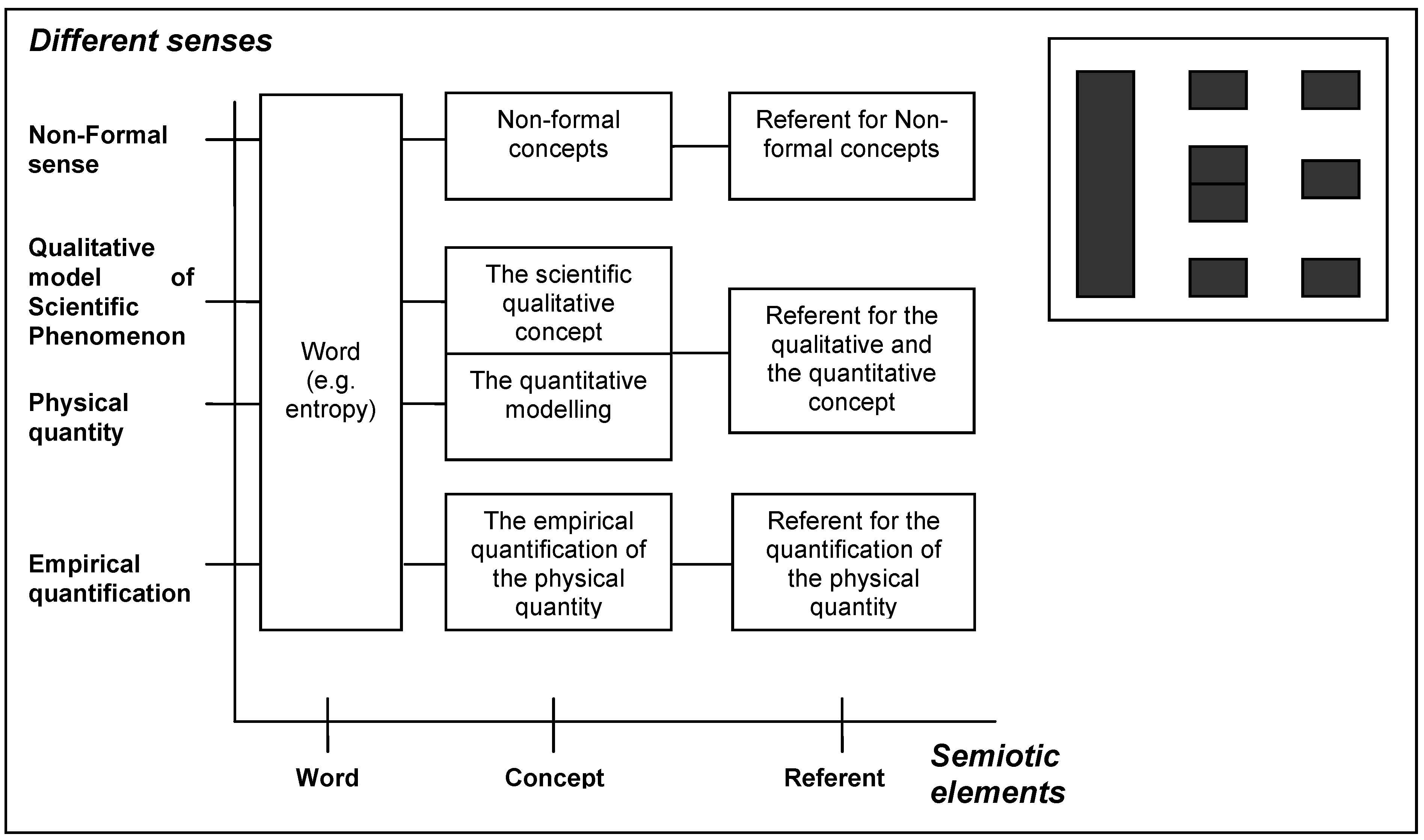

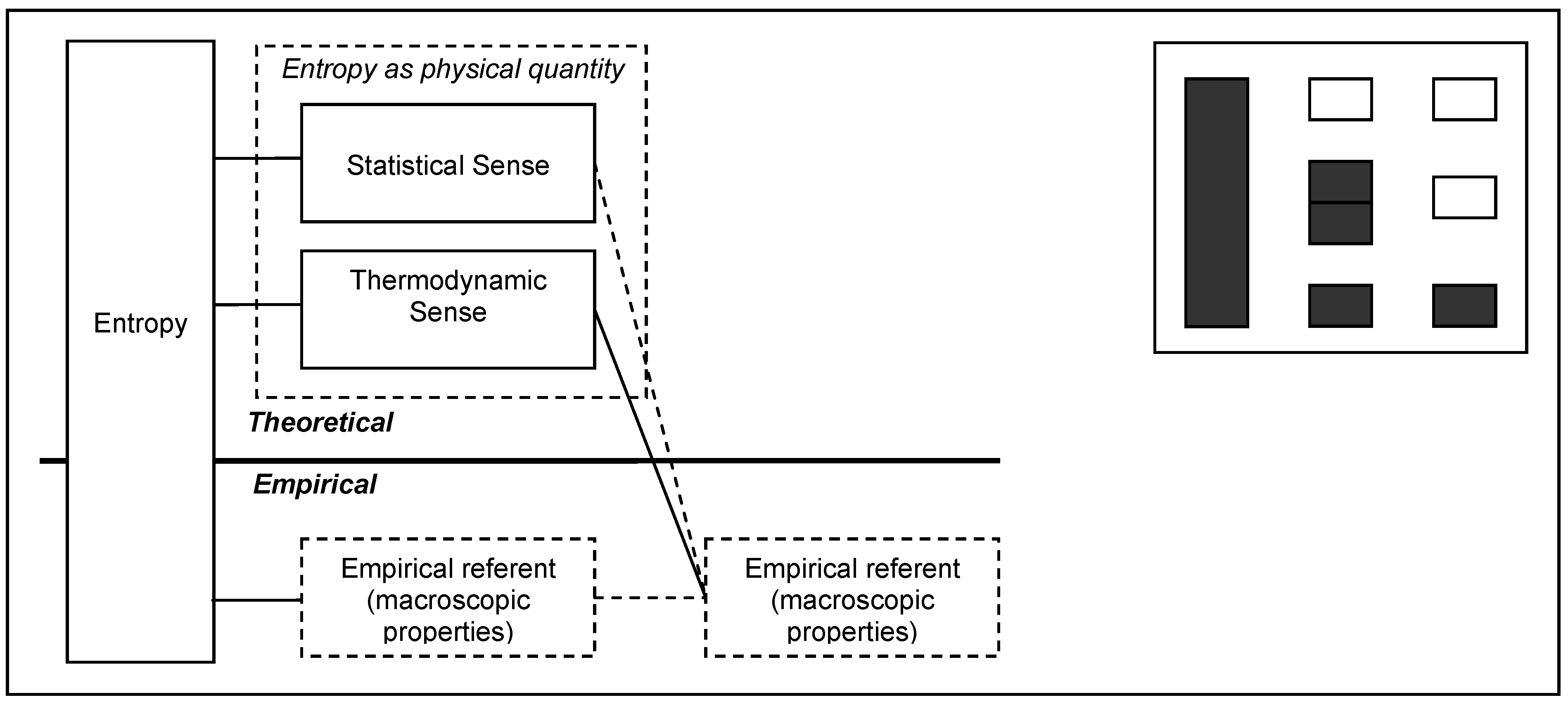

2.2.2. Two-Dimensional Semiotic/Semantic Analysing Schema

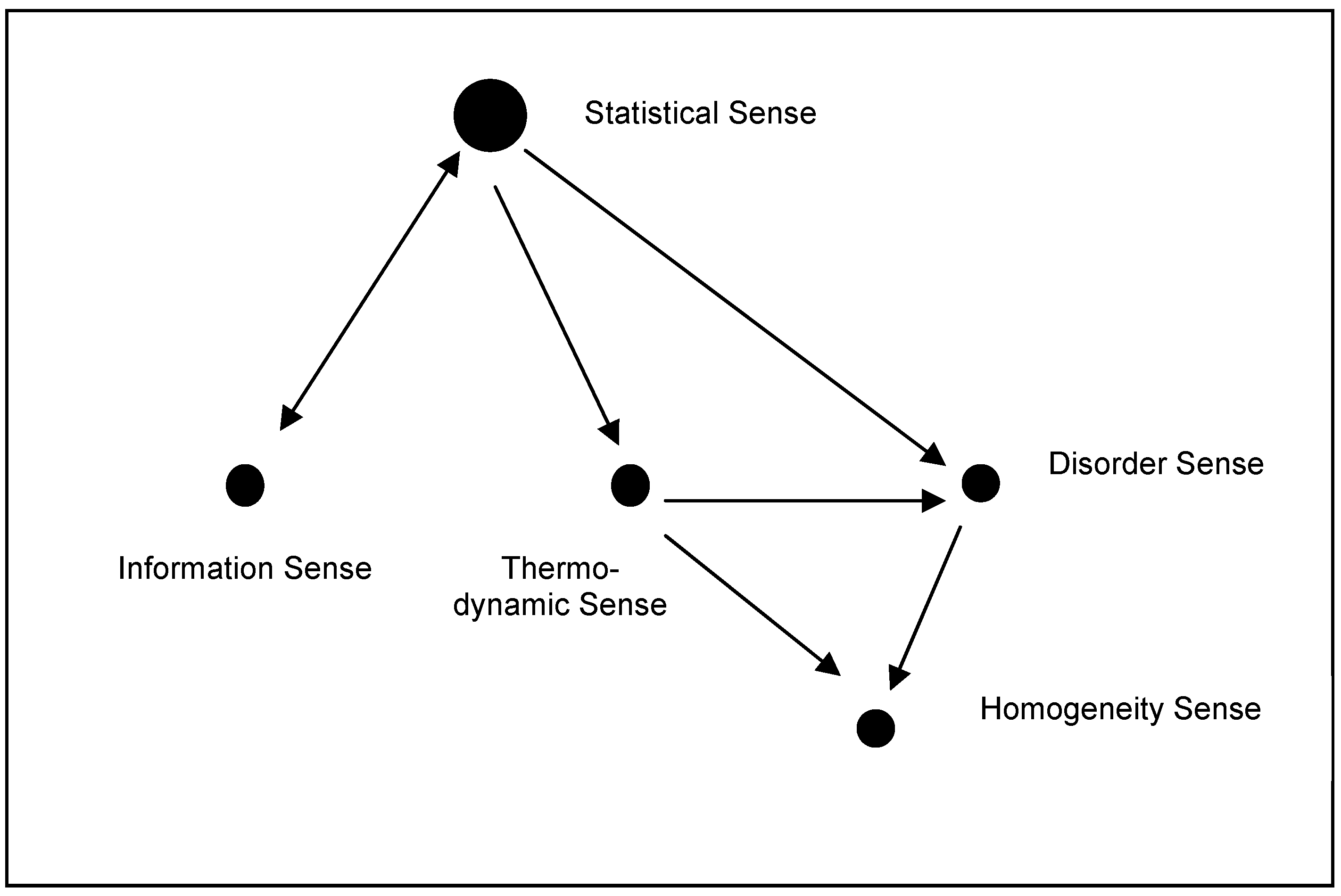

3. Principled Polysemy Analysis of Entropy

- Thermodynamic Sense

- Statistical Sense

- Disorder Sense

- Information Sense

- Homogeneity Sense

3.1. Thermodynamic Sense

When a physical process occurs, such as a piston-and-cylinder cycle in a steam engine, it is possible to compute how much entropy is produced as a result. In a closed system the total entropy cannot go down. Nor will it go on rising without limit. There will be a state of maximum entropy or maximum disorder, which is referred to as thermodynamic equilibrium; once the system has reached that state it is stuck there.

a function of thermodynamic variables, as temperature, pressure, or composition, that is a measure of the energy that is not available for work during a thermodynamic process. A closed system evolves toward a state of maximum entropy.

a hypothetical tendency for the universe to attain a state of maximum homogeneity in which all matter is at a uniform temperature (heat death).
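The claim in the quotations above, that a closed system evolves toward a state of maximum entropy, can be illustrated numerically. The following is a minimal sketch rather than anything taken from the sources quoted. It assumes two identical bodies with constant heat capacity that exchange heat only with each other, and it takes the entropy of each body as C ln T up to an additive constant. The function names, the temperatures and the size of the heat parcels are hypothetical choices made for illustration.

```python
import math


def total_entropy(t_hot, t_cold, heat_capacity=1.0):
    """Total entropy (up to an additive constant) of two identical bodies.

    Assumption: each body has a constant heat capacity C, so S = C * ln(T).
    """
    return heat_capacity * (math.log(t_hot) + math.log(t_cold))


def relax(t_hot, t_cold, heat_capacity=1.0, step=0.5, tol=1e-9):
    """Move heat from the hot body to the cold body in small parcels.

    Returns the final temperatures and the total entropy recorded
    before the first parcel and after every parcel.
    """
    history = [total_entropy(t_hot, t_cold, heat_capacity)]
    while t_hot - t_cold > tol:
        # Cap the parcel so the temperatures meet without overshooting.
        dq = min(step, heat_capacity * (t_hot - t_cold) / 2)
        t_hot -= dq / heat_capacity
        t_cold += dq / heat_capacity
        history.append(total_entropy(t_hot, t_cold, heat_capacity))
    return t_hot, t_cold, history


if __name__ == "__main__":
    final_hot, final_cold, entropies = relax(400.0, 200.0)
    print(final_hot, final_cold)
    print(entropies[0], entropies[-1])
```

In this sketch the total entropy never decreases from one parcel to the next, and it stops changing once both bodies have reached the same temperature, which corresponds to the equilibrium state described in the quotation.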

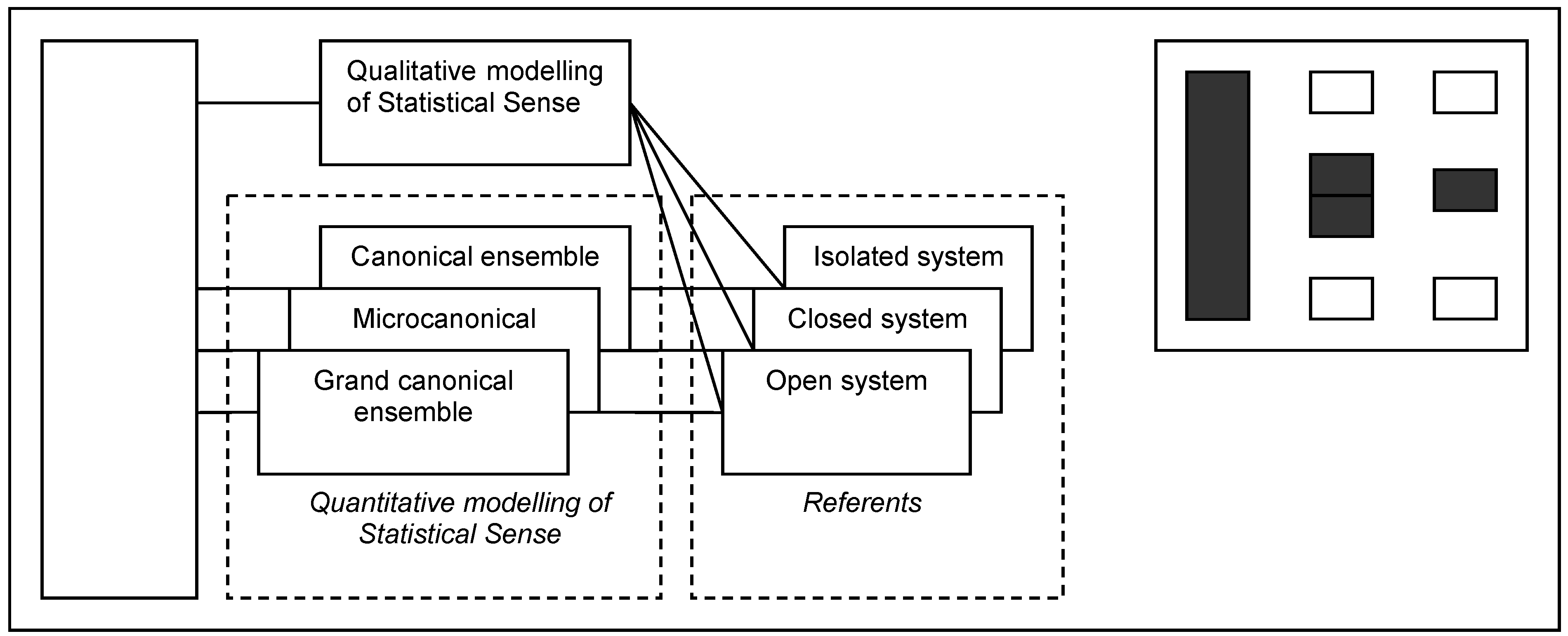

3.2. Statistical Sense

3.2.1. Statistical Sense—Meaning Criterion

3.2.2. Statistical Sense—Concept Elaboration Criterion

3.2.3. Statistical Sense—Grammatical Criterion

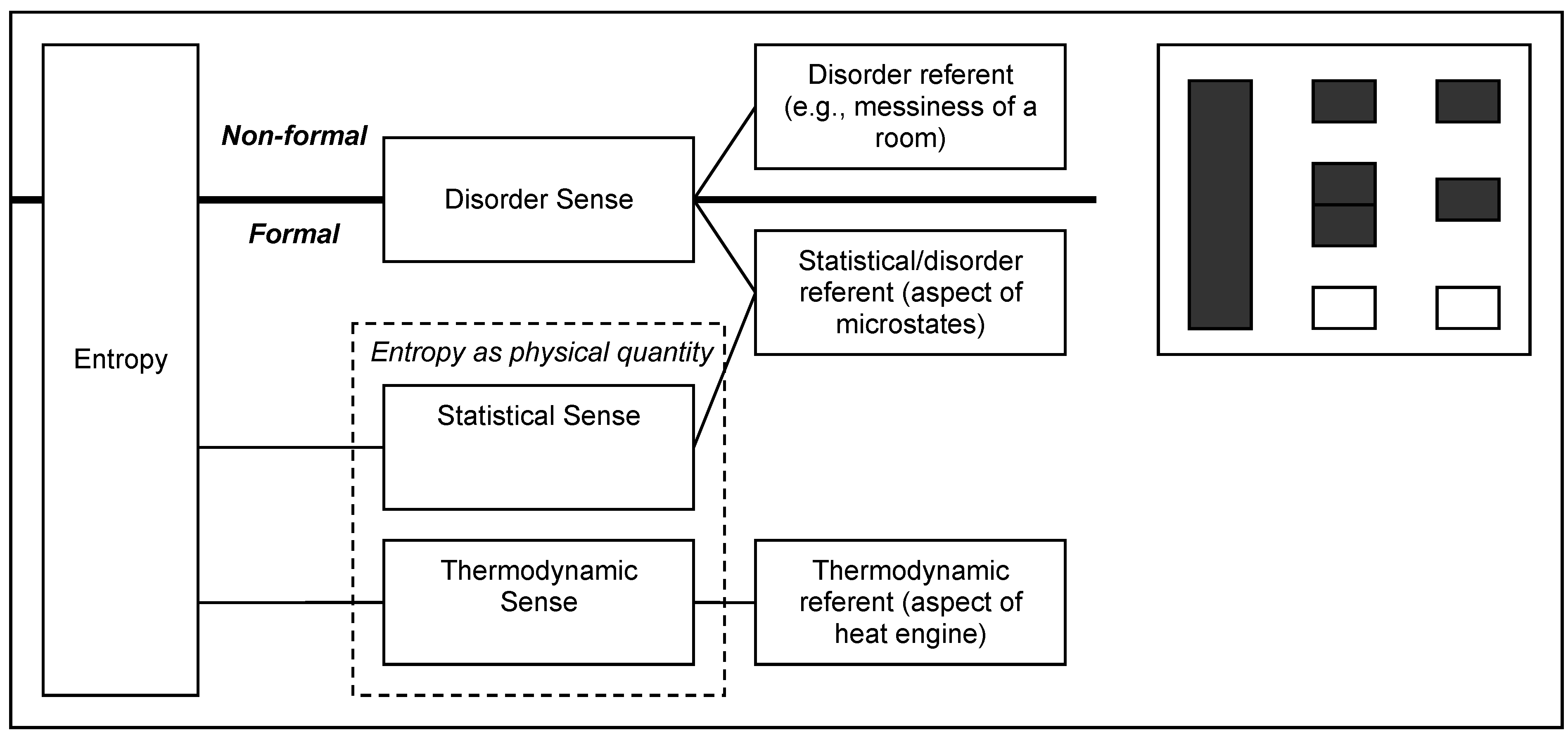

3.3. Disorder Sense

Entropy provides a quantity measure of disorder [26].

Disorder is designated by a quantity called entropy, which is denoted S [27].

[The entropy struck after four windows]. In effect, it all starts with a vision to cheat the entropy of decomposition. Get the mouldering windows to be like new again. But the journey is full of surprising events [12].

3.3.1. Disorder Sense—Meaning Criterion

The entropy is a measure of the disorder in a system. If I empty a box of Lego pieces on the floor, there is disorder among the Lego pieces. They are randomly scattered over the floor. The entropy of the Lego pieces is higher when they are scattered than when they are arranged in the box [31].

3.3.2. Disorder Sense—Concept Elaboration Criterion

3.3.3. Disorder Sense—Grammatical Criterion

3.4. Information Sense

Call it entropy. It is already in use under that name and besides, it will give you a great edge in debates because nobody knows what entropy is anyway [33].

3.4.1. Information Sense—Meaning Criterion

If a source can produce only one particular message its entropy is zero, and no channel is required. For example, a computing machine set up to calculate the successive digits of π produces a definite sequence with no chance element [32].
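The statement quoted above, that a source with only one possible message has zero entropy, follows directly from Shannon's formula for a discrete source, H = Σ p log2(1/p). The following is a minimal sketch of that calculation; the function name and the example distributions are hypothetical and serve only as illustration.

```python
import math


def shannon_entropy(probabilities):
    """Entropy in bits of a discrete source with the given message probabilities.

    Messages with zero probability contribute nothing to the sum.
    """
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1.0 / p) for p in probabilities if p > 0)


if __name__ == "__main__":
    print(shannon_entropy([1.0]))         # a source with one certain message
    print(shannon_entropy([0.5, 0.5]))    # a fair coin
    print(shannon_entropy([0.25] * 4))    # four equally likely messages
```

A source that always emits the same message, such as the machine computing the digits of π, corresponds to the first case: there is no chance element, so the entropy is zero. The entropy grows as the number of equally likely messages grows.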

3.4.2. Information Sense—Concept Elaboration Criterion

[W]e describe how we have applied maximum entropy modeling to predict the French translation of an English word in context… A maximum entropy model that incorporates this constraint will predict the translations of in in a manner consistent with whether or not the following word is several [35].

3.4.3. Information Sense—Grammatical Criterion

3.5. Homogeneity Sense

[A] three ton bitumen cube that is cubic at first, but after a while slowly settles into a flat lump of asphalt. It creates a powerful image of how the form of matter is smoothed out into entropy. Also my body is a kind of hydrocarbon lump without a return ticket to the origin [12].

He was interested in people, both children and adults, and received a warm response, an entropy, still remaining even though he himself has passed away. [NN] was engaged and well-informed in many areas [12].

3.5.1. Homogeneity Sense—Meaning Criterion

3.5.2. Homogeneity Sense—Concept Elaboration Criterion

3.5.3. Homogeneity Sense—Grammatical Criterion

3.6. Sanctioning Sense and the Semantic Network of Entropy

3.6.1. Earliest Attested Meaning

3.6.2. Predominance in the Semantic Network

3.6.3. Predictability Regarding Other Senses

Grab the handle and pull the drawer back and forth. Eventually, everything will be mixed, like a smooth gruel, you see, no concentrations anywhere but everything is equally thick. Entropy she called it. The state of the desk drawer, is, however, unlike the Earth’s reversible [12].

Still, he claims that ‘growth is the natural state’! He seems to suggest that it is only a matter of technology to avoid entropy. Well, of course you could say that whether an industry is polluting or not can be decided by seeing if the smoke goes out of or into the chimney [12].

Human technology will certainly not be totally free from entropy, but the degree of entropy can vary significantly between different technologies [12].

3.6.4. Lived Human Experience

3.6.5. Conclusion on Sanctioning Sense

4. Classification of Senses in 2-D SAS

5. Educational Implications

The analogy I like to use to show the connection [between the interpretations of entropy in macroscopic thermodynamics and statistical mechanics] is that of sneezing in a busy street or in a quiet library. A sneeze is like a disorderly input of energy, very much like energy transferred as heat. It should be easy to accept that the bigger the sneeze, the greater the disorder introduced in the street or in the library. That is the fundamental reason why the ‘energy supplied as heat’ appears in the numerator of Clausius’s expression, for the greater the energy supplied as heat, the greater the increase in disorder and therefore the greater the increase in entropy. The presence of the temperature in the denominator fits with this analogy too, with its implication that for a given supply of heat, the entropy increases more if the temperature is low than if it is high. A cool object, in which there is little thermal motion, corresponds to a quiet library. A sudden sneeze will introduce a lot of disturbance, corresponding to a big rise in entropy. A hot object, in which there is a lot of thermal motion already present, corresponds to a busy street. Now a sneeze of the same size as in the library has relatively little effect, and the increase in entropy is small.
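The reasoning in the quotation above rests on Clausius's expression, in which the entropy change equals the energy supplied as heat divided by the temperature. The following is a minimal sketch of that arithmetic, assuming that the temperature of the object stays effectively constant while the heat is supplied. The function name and the numerical values for the "library" and the "street" are hypothetical and chosen only to make the comparison concrete.

```python
def entropy_change(heat_joules, temperature_kelvin):
    """Entropy change (J/K) when heat is supplied at a fixed absolute temperature.

    Assumption: the temperature does not change noticeably during the transfer.
    """
    if temperature_kelvin <= 0:
        raise ValueError("absolute temperature must be positive")
    return heat_joules / temperature_kelvin


if __name__ == "__main__":
    sneeze = 100.0  # hypothetical amount of energy supplied as heat, in joules
    print(entropy_change(sneeze, 200.0))  # cool object, the "quiet library"
    print(entropy_change(sneeze, 400.0))  # hot object, the "busy street"
```

With these illustrative numbers the same supply of heat produces twice the entropy increase in the cooler object, which is the point the analogy makes about the temperature in the denominator.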

6. Discussion

Entropy is not disorder. Entropy is not a measure of disorder or chaos. Entropy is not a driving force. Energy’s diffusion, dissipation, or dispersion in a final state compared to an initial state is the driving force in chemistry. Entropy is the index of that dispersal within a system and between the system and its surroundings.

Acknowledgements

References

- Williams, H.T. Semantics in teaching introductory physics. Am. J. Phys. 1999, 67, 670–680.

- Baierlein, R. Entropy and the second law: A pedagogical alternative. Am. J. Phys. 1994, 62, 15–26.

- Reif, F. Thermal physics in the introductory physics course: Why and how to teach it from a unified atomic perspective. Am. J. Phys. 1999, 67, 1051–1062.

- Cochran, M.J.; Heron, P.R.L. Development and assessment of research-based tutorials on heat engines and the second law of thermodynamics. Am. J. Phys. 2006, 74, 734–741.

- Kautz, C.H.; Heron, P.R.L.; Shaffer, P.S.; McDermott, L.C. Student understanding of the ideal gas law, Part II: A microscopic perspective. Am. J. Phys. 2005, 73, 1064–1071.

- Lambert, F.L. Disorder—A cracked crutch for supporting entropy discussions. J. Chem. Educ. 2002, 79, 187–192.

- Tyler, A.; Evans, V. The Semantics of English Prepositions: Spatial Scenes, Embodied Meaning and Cognition; Cambridge University Press: Cambridge, UK, 2003.

- Evans, V. The meaning of time: polysemy, the lexicon and conceptual structure. J. Ling. 2005, 41, 33–75.

- Strömdahl, H. Discernment of referents—An essential aspect of conceptual change. In NARST Annual International Conference (In NARST 2009 CD Proceedings), Garden Grove, CA, USA, April 17–21, 2009.

- Strömdahl, H. The challenge of polysemy: On discerning critical elements, relationships and shifts in attaining scientific terms. submitted.

- Gries, S.T.; Divjak, D. Behavioral profiles: A corpus-based approach to cognitive semantic analysis. In New Directions in Cognitive Linguistics; Evans, V., Pourcel, S., Eds.; John Benjamins Pub Co: Amsterdam, the Netherlands, 2009; pp. 57–75.

- Språkbanken homepage. Available online: http://spraakbanken.gu.se/ (accessed on May 18, 2009).

- Lakoff, G. Women, Fire, and Dangerous Things: What Categories Reveal about the Mind; University of Chicago Press: Chicago, IL, USA, 1987.

- Evans, V.; Green, M. Cognitive linguistics. An introduction; Edinburgh University Press: Edinburgh, UK, 2006.

- Posner, G.J.; Strike, K.A.; Hewson, P.W.; Gertzog, W.A. Accommodation of a scientific conception: Toward a theory of conceptual change. Sci. Educ. 1982, 66, 211–227.

- Putnam, H. Meaning and reference. J. Phil. 1973, 70, 699–711.

- Andersen, H.; Nersessian, N. Nomic concepts, frames and conceptual change. Philos. Sci. 2000, 67, 224–241.

- Andersen, H. Reference and resemblance. Philos. Sci. 2001, 68, 50–61.

- Clausius, R. The Mechanical Theory of Heat, with its Applications to the Steam-Engine and to the Physical Properties of Bodies; John van Voorst: London, UK, 1867.

- Pogliani, L.; Berberan-Santos, M.N. Constantin Carathéodory and the axiomatic thermodynamics. J. Math. Chem. 2000, 28, 313–324.

- Sklar, L. Physics and Change: Philosophical Issues in the Foundations of Statistical Mechanics; Cambridge University Press: Cambridge, UK, 1993.

- Tolman, R.C.; Fine, P.C. On the irreversible production of entropy. Rev. Mod. Phys. 1948, 20, 51–77.

- Davies, P. The 5th Miracle. The Search for the Origin and Meaning of Life; Simon & Schuster Paperbacks: New York, NY, USA, 1999.

- Dictionary.com. http://dictionary.reference.com/ (accessed on May 18, 2009).

- Lebowitz, J.L. Statistical mechanics: A selective review of two central issues. Rev. Mod. Phys. 1999, 71, 346–357.

- Young, H.D.; Freedman, R.A.; Sears, F.W. Sears and Zemansky's University Physics: With Modern Physics, 11th edition; Pearson Education: San Francisco, CA, USA, 2003.

- Henriksson, A. Kemi. Kurs A., 1st edition; Gleerup: Malmö, Sweden, 2001.

- Atkins, P. Galileo's Finger. The Ten Great Ideas of Science; Oxford University Press: Oxford, UK, 2003.

- Viard, J. Using the history of science to teach thermodynamics at the university level: The case of the concept of entropy. In Eighth International History, Philosophy, Sociology & Science Teaching Conference, Leeds, UK, July 15–18, 2005.

- Saslow, W.M. An economic analogy to thermodynamics. Am. J. Phys. 1999, 67, 1239–1247.

- Ekstig, B. Naturen, Naturvetenskapen och Lärandet; Studentlitteratur: Lund, Sweden, 2002.

- Shannon, C.E. A mathematical theory of communication. Mob. Comput. Commun. Rev. 2001, 5, 3–55.

- Müller, I. A History of Thermodynamics. The Doctrine of Energy and Entropy; Springer: Berlin, Germany, 2007.

- Lambert, F.L. Entropy is simple—If we avoid the briar patches! Available online: http://www.entropysimple.com/content.htm (accessed on November 28, 2008).

- Berger, A.L.; Pietra, V.J.D.; Pietra, S.A.D. A maximum entropy approach to natural language processing. Comput. Linguist. 1996, 22, 39–71.

- Rodewald, B. Entropy and homogeneity. Am. J. Phys. 1990, 58, 164–168.

- Baierlein, R.; Gearhart, C.A. The disorder metaphor (Letters to the editor). Am. J. Phys. 2003, 71, 103.

- Perelman, G. The entropy formula for the Ricci flow and its geometric applications. Available online: http://arxiv.org/abs/math/0211159v1 (accessed on August 20, 2009).

- Joslyn, C. On the semantics of entropy measures of emergent phenomena. Cybernetics Syst. 1990, 22, 631–640.

- Jaynes, E.T. Information theory and statistical mechanics. Phys. Rev. 1957, 106, 620–630.

- Wittgenstein, L. Philosophical Investigations, 3rd edition; Prentice Hall: London, UK, 1999.

- Falk, G. Entropy, a resurrection of the caloric—a look at the history of thermodynamics. Eur. J. Phys. 1985, 6, 108–115.

- Norwich, K.H. Physical entropy and the senses. Acta Biother. 2005, 53, 167–180.

- Brissaud, J.-B. The meanings of entropy. Entropy 2005, 7, 68–96.

- Lambert, F.L. Configurational entropy revisited. J. Chem. Educ. 2007, 84, 1548–1550.

© 2010 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Haglund, J.; Jeppsson, F.; Strömdahl, H. Different Senses of Entropy—Implications for Education. Entropy 2010, 12, 490-515. https://doi.org/10.3390/e12030490