Information Theory and Dynamical System Predictability

Abstract

1. Introduction

2. Relevant Information Theoretic Functionals and Their Properties

3. Time Evolution of Entropic Functionals

4. Information Flow

4.1. Theory

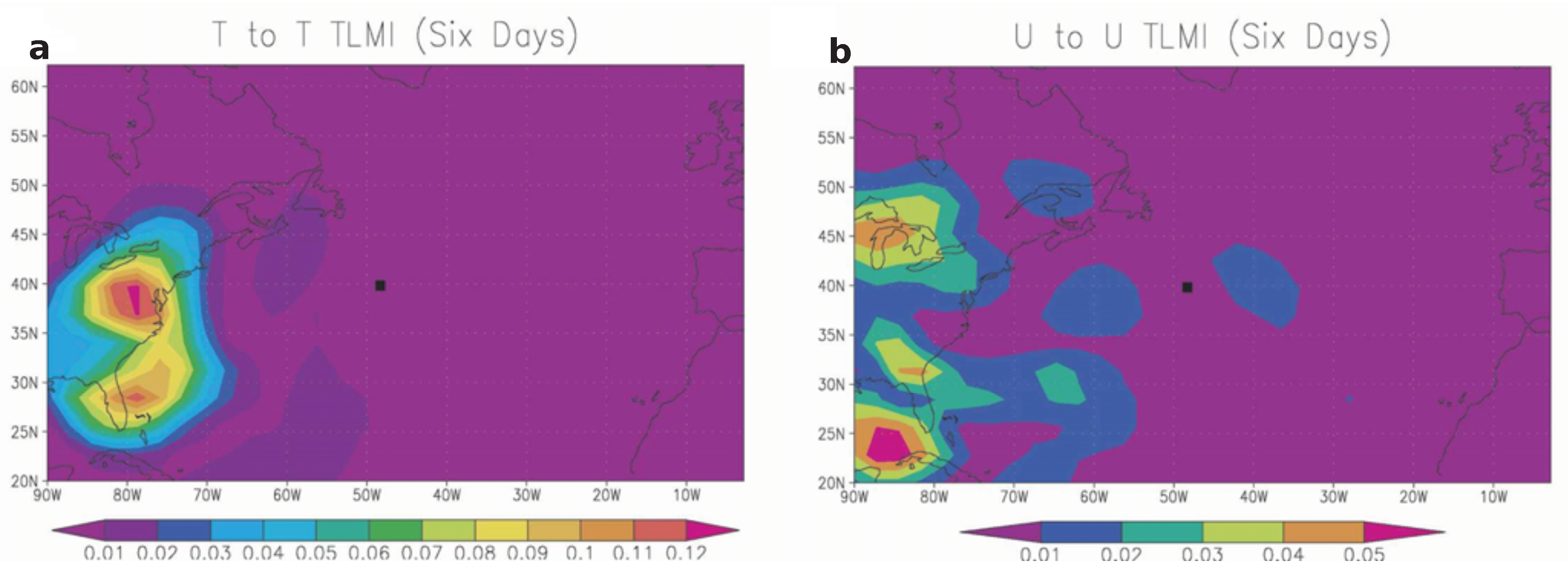

4.2. Applications

5. Predictability

5.1. Introduction

5.2. Error Growth Approaches

5.3. Statistical Prediction

5.4. Proposed Functionals

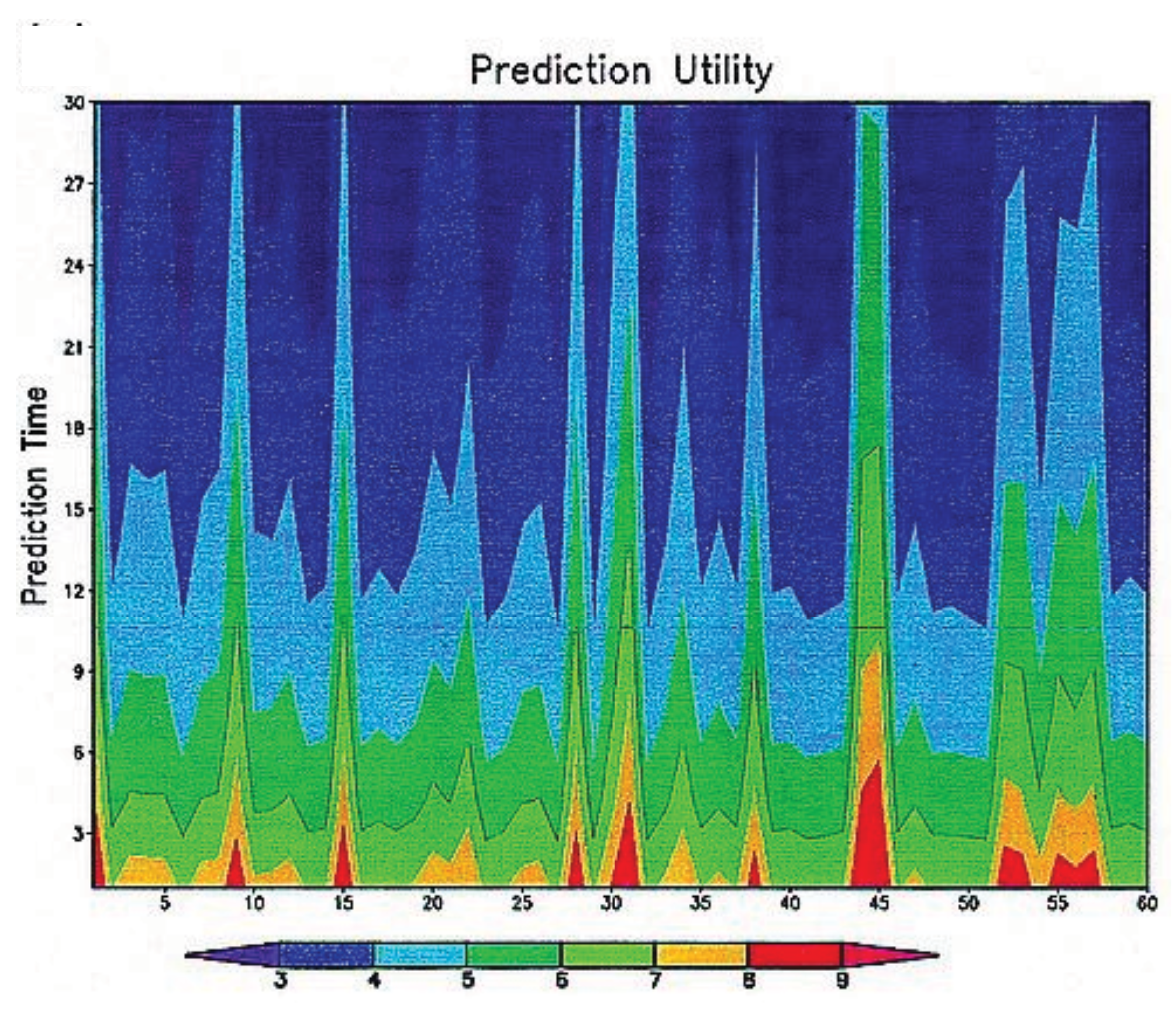

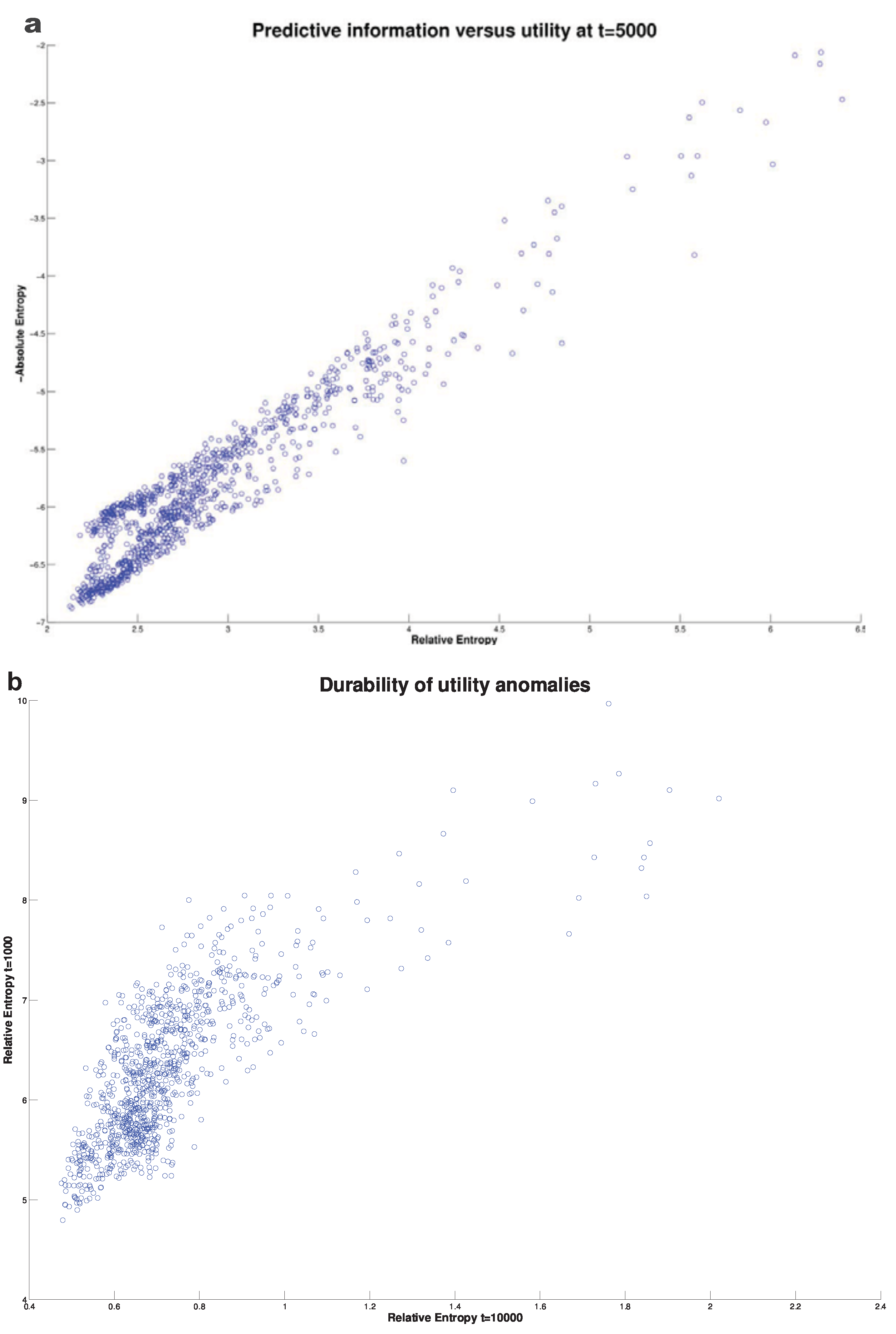

5.5. Applications

- (1) In a generic sense, how does predictability decay in time?

- (2) How does predictability vary from one prediction to the next?

5.5.1. Multivariate Ornstein Uhlenbeck (OU) Stochastic Processes

5.5.2. Low Order Chaotic Systems

5.5.3. Idealized Models of Turbulence

- (1) A truncated version of the Burgers equation, detailed in [78]. This system is a one-dimensional inviscid turbulence model with a set of conserved variables that permit a conventional Gibbs Gaussian equilibrium distribution. Predictability issues relevant to the present discussion can be found in [73,79].

- (2) The one-dimensional Lorenz 1996 model of mid-latitude atmospheric turbulence, detailed in [40]. This model exhibits a variety of behaviors depending on the parameters chosen. For some settings strongly regular wave-like behavior is observed, while for others a more irregular pattern occurs that bears some resemblance to atmospheric turbulence. The most unstable linear modes of the system tend to have most of their weight at a particular wavenumber, which is consistent with the observed atmosphere. Predictability issues from the present perspective can be found in [73].

- (3) Two-dimensional barotropic quasi-geostrophic turbulence, detailed in, for example, [80]. Barotropic models are systems with no vertical degrees of freedom. Quasi-geostrophic models describe rotating fluids and filter out fast waves (both gravity and sound) in order to focus on low-frequency variability. The barotropic versions aim to simulate very low-frequency variability and exclude by design the variability associated with mid-latitude storms/eddies, which typically have a shorter timescale. Predictability issues are discussed in [75] in the context of a global model with an inhomogeneous background state.

- (4) Baroclinic quasi-geostrophic turbulence, as discussed in, for example, [81]. This system is similar to the previous one except that a simple vertical structure is incorporated, which allows the fluid to draw energy from a vertical mean shear (baroclinic instability). These systems therefore represent higher-frequency mid-latitude storms/eddies. This latter variability is sometimes treated as an approximately stochastic forcing of the low-frequency barotropic variability, although the turbulence spectrum is undoubtedly more complicated. Predictability issues from the present perspective were discussed in [82] for a model with a homogeneous background shear. They are discussed from a more conventional predictability viewpoint in [83].
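As an illustration of the second model in the list above, the following is a minimal sketch of the Lorenz 1996 system in its commonly quoted form, dx_i/dt = (x_{i+1} - x_{i-2}) x_{i-1} - x_i + F with cyclic indices, together with a twin experiment that tracks how a small initial difference evolves. The text above does not state the equations or parameters, so the choices here (40 variables, F = 8, a fourth-order Runge-Kutta step of 0.01, and the helper names `lorenz96_tendency`, `rk4_step` and `twin_error`) are assumptions made for illustration only.

```python
import math

def lorenz96_tendency(x, forcing):
    """Right-hand side (x_{i+1} - x_{i-2}) * x_{i-1} - x_i + F, cyclic in i."""
    n = len(x)
    # Negative list indices wrap around in Python, which gives the cyclic boundary.
    return [(x[(i + 1) % n] - x[i - 2]) * x[i - 1] - x[i] + forcing
            for i in range(n)]

def rk4_step(x, dt, forcing):
    """Advance the state by one classical fourth-order Runge-Kutta step."""
    def shifted(k, scale):
        return [xi + scale * ki for xi, ki in zip(x, k)]
    k1 = lorenz96_tendency(x, forcing)
    k2 = lorenz96_tendency(shifted(k1, 0.5 * dt), forcing)
    k3 = lorenz96_tendency(shifted(k2, 0.5 * dt), forcing)
    k4 = lorenz96_tendency(shifted(k3, dt), forcing)
    return [xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def twin_error(n_vars=40, forcing=8.0, dt=0.01, n_steps=500, eps=1e-6):
    """RMS distance between two runs whose initial states differ by eps in x_0.

    All default values are illustrative assumptions, not taken from the text.
    """
    control = [forcing] * n_vars
    control[0] += 0.01          # move off the fixed point x_i = F
    perturbed = list(control)
    perturbed[0] += eps         # small initial-condition error
    errors = []
    for _ in range(n_steps):
        control = rk4_step(control, dt, forcing)
        perturbed = rk4_step(perturbed, dt, forcing)
        sq = sum((a - b) ** 2 for a, b in zip(control, perturbed))
        errors.append(math.sqrt(sq / n_vars))
    return errors

if __name__ == "__main__":
    errs = twin_error()
    for step in (0, 100, 200, 300, 400, 499):
        print(step, errs[step])
```

Changing `forcing` is one way to explore the different regimes mentioned in the text; an error curve of this kind only describes a single pair of trajectories and is not itself one of the information theoretic functionals discussed in this review.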

5.5.4. Realistic Global Primitive Equation Models

5.5.5. Other Recent Applications

6. Conclusions and Outlook

- (1) The mechanisms responsible for variations in statistical predictability have been clarified. Traditionally it has been assumed that these are associated with variations in the instability of the initial conditions. The information theoretical analysis reveals that other mechanisms are possible. One, which has been referred to here as signal, results from variations in the “distance” of the initial conditions from the equilibrium mean state of the system. Such excursions often take considerable time to “erode” and so are responsible for considerable practical predictability. Preliminary calculations show that this second effect may indeed be more important than the first in certain weather and climate applications. Note that this is not simply the effect of persistence of anomalies, since the signal can transfer between the components of the state vector of a system. From the numerical studies conducted, it appears that this second effect may be important when there is a strong separation of time scales within the system. These results deserve further theoretical and numerical investigation.

- (2) It has been shown that ideas from information transfer allow us to address in generality the question of improving predictions using targeted additional observations. The methods discussed above apply in the long-range, non-linear regime, for which previous methods are likely inapplicable.

- (3) The physical mechanism causing the decline in mid-latitude weather predictability has been studied over the full temporal range of predictions. Numerical experiments have shown the central role of vertical mean shear in controlling the equilibration/loss of predictability.
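The signal mechanism in item (1) can be made concrete for Gaussian distributions, where the relative entropy of a prediction distribution with respect to the equilibrium distribution splits exactly into a term depending on the difference of the means (the signal) and a term depending only on the covariances (often called dispersion in the literature). The sketch below assumes diagonal covariance matrices so that it needs only the standard library; the function name and argument layout are hypothetical and are not taken from the paper.

```python
import math

def gaussian_relative_entropy(mean_pred, var_pred, mean_eq, var_eq):
    """Signal and dispersion parts of D(prediction || equilibrium), in nats.

    Assumes both distributions are Gaussian with diagonal covariance, so each
    argument is a list with one entry per state-vector component.
    """
    # Signal: squared mean displacement measured in equilibrium variance units.
    signal = 0.5 * sum((mp - mq) ** 2 / vq
                       for mp, mq, vq in zip(mean_pred, mean_eq, var_eq))
    # Dispersion: depends only on how the prediction spread compares with
    # the equilibrium spread.
    dispersion = 0.5 * sum(math.log(vq / vp) + vp / vq - 1.0
                           for vp, vq in zip(var_pred, var_eq))
    return signal, dispersion

if __name__ == "__main__":
    # A prediction displaced from the equilibrium mean but with equilibrium
    # spread has non-zero signal and zero dispersion.
    print(gaussian_relative_entropy([1.0, 0.0], [1.0, 1.0],
                                    [0.0, 0.0], [1.0, 1.0]))
```

In this Gaussian setting the total relative entropy is the sum of the two returned values. For non-Gaussian predictions the decomposition is less clean, as the note on the generalized signal in the reference list explains.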

Acknowledgements

Appendix

Theorem 2

Theorem 3

Theorem 4

Theorem 5

Relationship of the Three Predictability Functionals

References and Notes

- [1] Sussman, G.; Wisdom, J. Chaotic evolution of the solar system. Science 1992, 257, 56.

- [2] Knopoff, L. Earthquake prediction: The scientific challenge. Proc. Nat. Acad. Sci. USA 1996, 93, 3719.

- [3] DelSole, T. Predictability and Information Theory. Part I: Measures of Predictability. J. Atmos. Sci. 2004, 61, 2425–2440.

- [4] Rasetti, M. Uncertainty, predictability and decidability in chaotic dynamical systems. Chaos Soliton. Fractal. 1995, 5, 133–138.

- [5] Jaynes, E.T. Macroscopic prediction. In Complex Systems and Operational Approaches in Neurobiology, Physics and Computers; Haken, H., Ed.; Springer: New York, NY, USA, 1985; pp. 254–269.

- [6] Eyink, G.; Kim, S. A maximum entropy method for particle filtering. J. Stat. Phys. 2006, 123, 1071–1128.

- [7] Castronovo, E.; Harlim, J.; Majda, A. Mathematical test criteria for filtering complex systems: Plentiful observations. J. Comp. Phys. 2008, 227, 3678–3714.

- [8] Cover, T.; Thomas, J. Elements of Information Theory, 2nd ed.; Wiley-Interscience: New York, NY, USA, 2006.

- [9] Amari, S.; Nagaoka, H. Methods of Information Geometry. Translations of Mathematical Monographs, AMS; Oxford University Press: New York, NY, USA, 2000.

- [10] Risken, H. The Fokker-Planck Equation, 2nd ed.; Springer Verlag: Berlin, Germany, 1989.

- [11] Daems, D.; Nicolis, G. Entropy production and phase space volume contraction. Phys. Rev. E 1999, 59, 4000–4006.

- [12] Garbaczewski, P. Differential entropy and dynamics of uncertainty. J. Stat. Phys. 2006, 123, 315–355.

- [13] Ruelle, D. Positivity of entropy production in nonequilibrium statistical mechanics. J. Stat. Phys. 1996, 85, 1–23.

- [14] Lebowitz, J.; Bergmann, P. Irreversible gibbsian ensembles. Ann. Phys. 1957, 1, 1–23.

- [15] Gardiner, C.W. Handbook of Stochastic Methods for Physics, Chemistry and the Natural Sciences. In Springer Series in Synergetics; Springer: Berlin, Germany, 2004; Volume 13.

- [16] Misra, B.; Prigogine, I.; Courbage, M. From deterministic dynamics to probabilistic descriptions. Physica A 1979, 98, 1–26.

- [17] Goldstein, S.; Penrose, O. A nonequilibrium entropy for dynamical systems. J. Stat. Phys. 1981, 24, 325–343.

- [18] Courbage, M.; Nicolis, G. Markov evolution and H-theorem under finite coarse graining in conservative dynamical systems. Europhys. Lett. 1990, 11, 1.

- [19] Castiglione, P.; Falcioni, M.; Lesne, A.; Vulpiani, A. Chaos and Coarse Graining in Statistical Mechanics; Cambridge University Press: New York, NY, USA, 2008.

- [20] van Kampen, N. Stochastic Processes in Physics and Chemistry, 3rd ed.; North Holland: Amsterdam, The Netherlands, 2007.

- [21] Carnevale, G.; Frisch, U.; Salmon, R. H theorems in statistical fluid dynamics. J. Phys. A 1981, 14, 1701.

- [22] Carnevale, G. Statistical features of the evolution of two-dimensional turbulence. J. Fluid Mech. 1982, 122, 143–153.

- [23] Carnevale, G.F.; Holloway, G. Information decay and the predictability of turbulent flows. J. Fluid Mech. 1982, 116, 115–121.

- [24] Orszag, S. Analytical theories of turbulence. J. Fluid Mech. 1970, 41, 363–386.

- [25] Salmon, R. Lectures on Geophysical Fluid Dynamics; Oxford Univ. Press: New York, NY, USA, 1998.

- [26] Kaneko, K. Lyapunov analysis and information flow in coupled map lattices. Physica D 1986, 23, 436.

- [27] Vastano, J.A.; Swinney, H.L. Information transport in spatiotemporal systems. Phys. Rev. Lett. 1988, 60, 1773.

- [28] Schreiber, T. Spatiotemporal structure in coupled map lattices: Two point correlations versus mutual information. J. Phys. A 1990, 23, L393–L398.

- [29] Schreiber, T. Measuring information transfer. Phys. Rev. Lett. 2000, 85, 461–464.

- [30] Liang, X.S.; Kleeman, R. Information transfer between dynamical system components. Phys. Rev. Lett. 2005, 95, 244101.

- [31] Liang, X.S.; Kleeman, R. A rigorous formalism of information transfer between dynamical system components I. Discrete maps. Physica D 2007, 231, 1–9.

- [32] Liang, X.S.; Kleeman, R. A rigorous formalism of information transfer between dynamical system components II. Continuous flow. Physica D 2007, 227, 173–182.

- [33] Majda, A.J.; Harlim, J. Information flow between subspaces of complex dynamical systems. Proc. Nat. Acad. Sci. USA 2007, 104, 9558–9563.

- [34] Zubarev, D.; Morozov, V.; Ropke, G. Statistical Mechanics of Nonequilibrium Processes. Vol. 1: Basic Concepts, Kinetic Theory; Akademie Verlag: Berlin, Germany, 1996.

- [35] Palmer, T.N.; Gelaro, R.; Barkmeijer, J.; Buizza, R. Singular vectors, metrics, and adaptive observations. J. Atmos. Sci. 1998, 55, 633–653.

- [36] Bishop, C.; Etherton, B.; Majumdar, S. Adaptive sampling with the ensemble transform Kalman filter. Part I: Theoretical aspects. Mon. Weath. Rev. 2001, 129, 420–436.

- [37] Kleeman, R. Information flow in ensemble weather predictions. J. Atmos. Sci. 2007, 64, 1005–1016.

- [38] Palmer, T. Predicting uncertainty in forecasts of weather and climate. Rep. Progr. Phys. 2000, 63, 71–116.

- [39] Kalnay, E. Atmospheric Modeling, Data Assimilation, and Predictability; Cambridge Univ. Press: Cambridge, UK, 2003.

- [40] Lorenz, E. Predictability: A problem partly solved. In Proceedings of the Seminar on Predictability, ECMWF, Shinfield Park, Reading, England; 1996; Volume 1, pp. 1–18.

- [41] Oseledec, V. A multiplicative ergodic theorem. Lyapunov characteristic numbers for dynamical systems. English transl. Trans. Moscow Math. Soc. 1968, 19, 197–221.

- [42] Pesin, Y. Lyapunov characteristic exponents and ergodic properties of smooth dynamical systems with an invariant measure. Sov. Math. Dokl. 1976, 17, 196–199.

- [43] Eckmann, J.; Ruelle, D. Ergodic theory of chaos and strange attractors. Rev. Mod. Phys. 1985, 57, 617–656.

- [44] Toth, Z.; Kalnay, E. Ensemble forecasting at NCEP and the breeding method. Mon. Weath. Rev. 1997, 125, 3297–3319.

- [45] Aurell, E.; Boffetta, G.; Crisanti, A.; Paladin, G.; Vulpiani, A. Growth of noninfinitesimal perturbations in turbulence. Phys. Rev. Lett. 1996, 77, 1262–1265.

- [46] Boffetta, G.; Cencini, M.; Falcioni, M.; Vulpiani, A. Predictability: A way to characterize complexity. Phys. Rep. 2002, 356, 367–474.

- [47] Frederiksen, J. Singular vectors, finite-time normal modes, and error growth during blocking. J. Atmos. Sci. 2000, 57, 312–333.

- [48] Gaspard, P.; Wang, X. Noise, chaos, and (ϵ,τ)-entropy per unit time. Phys. Rep. 1993, 235, 291–343.

- [49] Vannitsem, S.; Nicolis, C. Lyapunov vectors and error growth patterns in a T21L3 quasigeostrophic model. J. Atmos. Sci. 1997, 54, 347–361.

- [50] Houtekamer, P.L.; Lefaivre, L.; Derome, J.; Ritchie, H.; Mitchell, H.L. A system simulation approach to ensemble prediction. Mon. Weath. Rev. 1996, 124, 1225–1242.

- [51] Lyapunov exponents of order $10^{-6}$ to $10^{-7}$ (years)$^{-1}$ have been noted in realistic model integrations, which sets an analogous timescale for loss of predictability in the solar system (see [1]).

- [52] Jolliffe, I.; Stephenson, D. Forecast Verification: A Practitioner’s Guide in Atmospheric Science; Wiley: Chichester, UK, 2003.

- [53] Leung, L.Y.; North, G.R. Information theory and climate prediction. J. Clim. 1990, 3, 5–14.

- [54] It can be important in this context to be careful about how a (non-linear) stochastic model is formulated, since results can depend on whether the Ito or Stratonovich continuum limit is assumed. A careful discussion of these issues from the viewpoint of geophysical modeling can be found, for example, in [92].

- [55] Schneider, T.; Griffies, S. A conceptual framework for predictability studies. J. Clim. 1999, 12, 3133–3155.

- [56] We shall refer to this functional henceforth with this terminology.

- [57] We are using the conventional definition of entropy here, whereas [55] use one which differs by a factor of m, the state-space dimension. Shannon’s axioms only define entropy up to an unfixed multiplicative factor. The result shown here therefore differs from that of the original paper by this factor. This is done to facilitate clarity within the context of a review.

- [58] Kleeman, R. Measuring dynamical prediction utility using relative entropy. J. Atmos. Sci. 2002, 59, 2057–2072.

- [59] Roulston, M.S.; Smith, L.A. Evaluating probabilistic forecasts using information theory. Mon. Weath. Rev. 2002, 130, 1653–1660.

- [60] Bernardo, J.; Smith, A. Bayesian Theory; John Wiley and Sons: Chichester, UK, 1994.

- [61] Kleeman, R.; Moore, A.M. A new method for determining the reliability of dynamical ENSO predictions. Mon. Weath. Rev. 1999, 127, 694–705.

- [62] Hasselmann, K. Stochastic climate models. Part I. Theory. Tellus A 1976, 28, 473–485.

- [63] Kleeman, R.; Power, S. Limits to predictability in a coupled ocean-atmosphere model due to atmospheric noise. Tellus A 1994, 46, 529–540.

- [64] Penland, C.; Sardeshmukh, P. The optimal growth of tropical sea surface temperature anomalies. J. Clim. 1995, 8, 1999–2024.

- [65] Kleeman, R. Spectral analysis of multi-dimensional stochastic geophysical models with an application to decadal ENSO variability. J. Atmos. Sci. 2011, 68, 13–25.

- [66] Saravanan, R.; McWilliams, J. Advective ocean-atmosphere interaction: An analytical stochastic model with implications for decadal variability. J. Clim. 1998, 11, 165–188.

- [67] DelSole, T.; Farrell, B.F. A stochastically excited linear system as a model for quasigeostrophic turbulence: Analytic results for one- and two-layer fluids. J. Atmos. Sci. 1995, 52, 2531–2547.

- [68] Lorenz, E.N. Deterministic non-periodic flows. J. Atmos. Sci. 1963, 20, 130–141.

- [69] Lorenz, E.N. Irregularity: A fundamental property of the atmosphere. Tellus A 1984, 36, 98–110.

- [70] Nayfeh, A.; Balachandran, B. Applied Nonlinear Dynamics; Wiley Online Library, 1995; Volume 2, p. 685.

- [71] Mead, L.R.; Papanicolaou, N. Maximum entropy in the problem of moments. J. Math. Phys. 1984, 25, 2404–2417.

- [72] Majda, A.J.; Kleeman, R.; Cai, D. A framework of predictability through relative entropy. Meth. Appl. Anal. 2002, 9, 425–444.

- [73] Abramov, R.; Majda, A. Quantifying uncertainty for non-Gaussian ensembles in complex systems. SIAM J. Sci. Stat. Comput. 2005, 26, 411–447.

- [74] Haven, K.; Majda, A.; Abramov, R. Quantifying predictability through information theory: Small sample estimation in a non-Gaussian framework. J. Comp. Phys. 2005, 206, 334–362.

- [75] Abramov, R.; Majda, A.; Kleeman, R. Information theory and predictability for low frequency variability. J. Atmos. Sci. 2005, 62, 65–87.

- [76] Abramov, R. A practical computational framework for the multidimensional moment-constrained maximum entropy principle. J. Comp. Phys. 2006, 211, 198–209.

- [77] Kleeman, R. Statistical predictability in the atmosphere and other dynamical systems. Physica D 2007, 230, 65–71.

- [78] Majda, A.J.; Timofeyev, I. Statistical mechanics for truncations of the Burgers-Hopf equation: A model for intrinsic stochastic behavior with scaling. Milan J. Math. 2002, 70, 39–96.

- [79] Kleeman, R.; Majda, A.J.; Timofeyev, I. Quantifying predictability in a model with statistical features of the atmosphere. Proc. Nat. Acad. Sci. USA 2002, 99, 15291–15296.

- [80] Selten, F. An efficient description of the dynamics of barotropic flow. J. Atmos. Sci. 1995, 52, 915–936.

- [81] Salmon, R. Baroclinic instability and geostrophic turbulence. Geophys. Astrophys. Fluid Dyn. 1980, 15, 167–211.

- [82] Kleeman, R.; Majda, A.J. Predictability in a model of geostrophic turbulence. J. Atmos. Sci. 2005, 62, 2864–2879.

- [83] Vallis, G.K. On the predictability of quasi-geostrophic flow: The effects of beta and baroclinicity. J. Atmos. Sci. 1983, 40, 10–27.

- [84] Strictly speaking there are three contributions. One involves the prediction means, another the higher-order prediction moments alone, and a third is a cross term involving both. The authors cited in the text group this last term with the first and call it the generalized signal.

- [85] Kleeman, R. Limits, variability and general behaviour of statistical predictability of the mid-latitude atmosphere. J. Atmos. Sci. 2008, 65, 263–275.

- [86] Tang, Y.; Kleeman, R.; Moore, A. Comparison of information-based measures of forecast uncertainty in ensemble ENSO prediction. J. Clim. 2008, 21, 230–247.

- [87] Shukla, J.; DelSole, T.; Fennessy, M.; Kinter, J.; Paolino, D. Climate model fidelity and projections of climate change. Geophys. Res. Lett. 2006, 33, L07702.

- [88] Majda, A.; Gershgorin, B. Quantifying uncertainty in climate change science through empirical information theory. Proc. Nat. Acad. Sci. USA 2010, 107, 14958.

- [89] Branstator, G.; Teng, H. Two limits of initial-value decadal predictability in a CGCM. J. Clim. 2010, 23, 6292–6311.

- [90] Teng, H.; Branstator, G. Initial-value predictability of prominent modes of North Pacific subsurface temperature in a CGCM. Clim. Dyn. 2010.

- [91] Bitz, C.M.; University of Washington, Seattle, WA, USA. Private communication to author, 2010.

- [92] Hansen, J.; Penland, C. Efficient approximate techniques for integrating stochastic differential equations. Mon. Weath. Rev. 2006, 134, 3006–3014.

© 2011 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Kleeman, R. Information Theory and Dynamical System Predictability. Entropy 2011, 13, 612–649. https://doi.org/10.3390/e13030612