How Can We Fully Use Noiseless Feedback to Enhance the Security of the Broadcast Channel with Confidential Messages

Abstract

1. Introduction

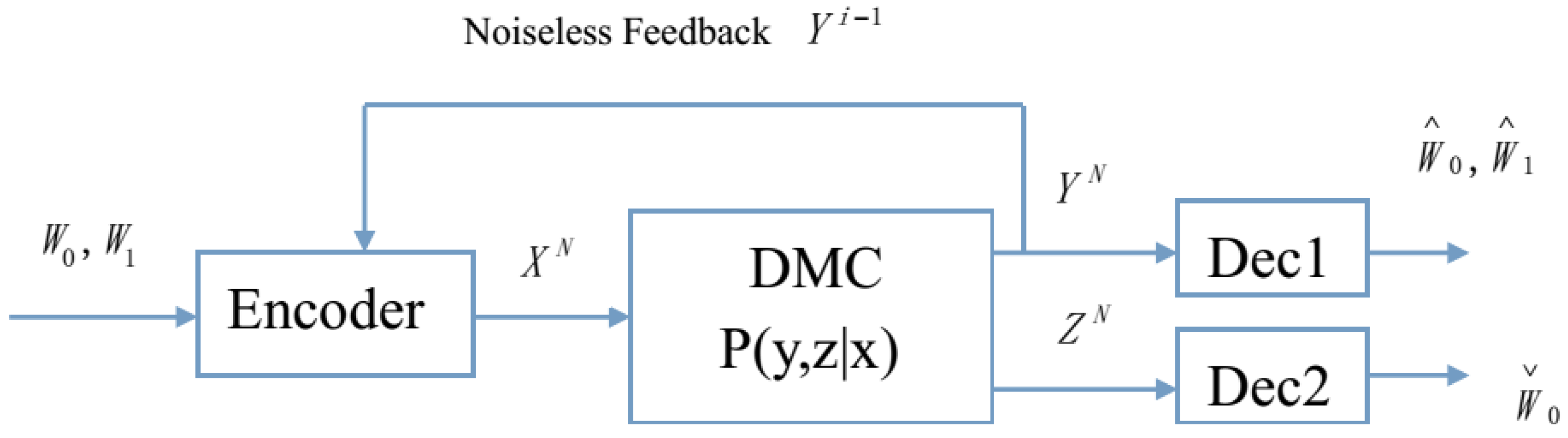

2. Problem Formulation and New Result

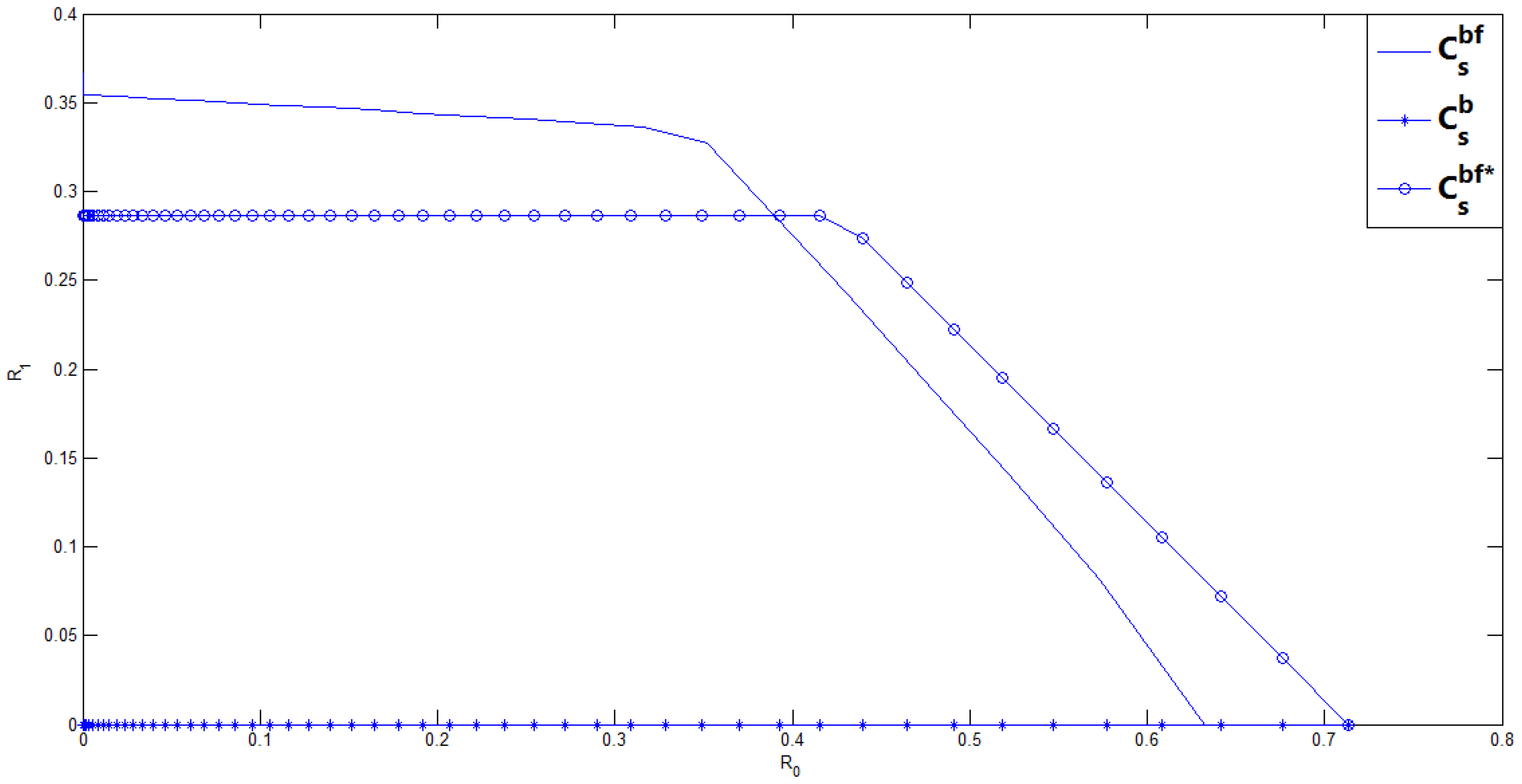

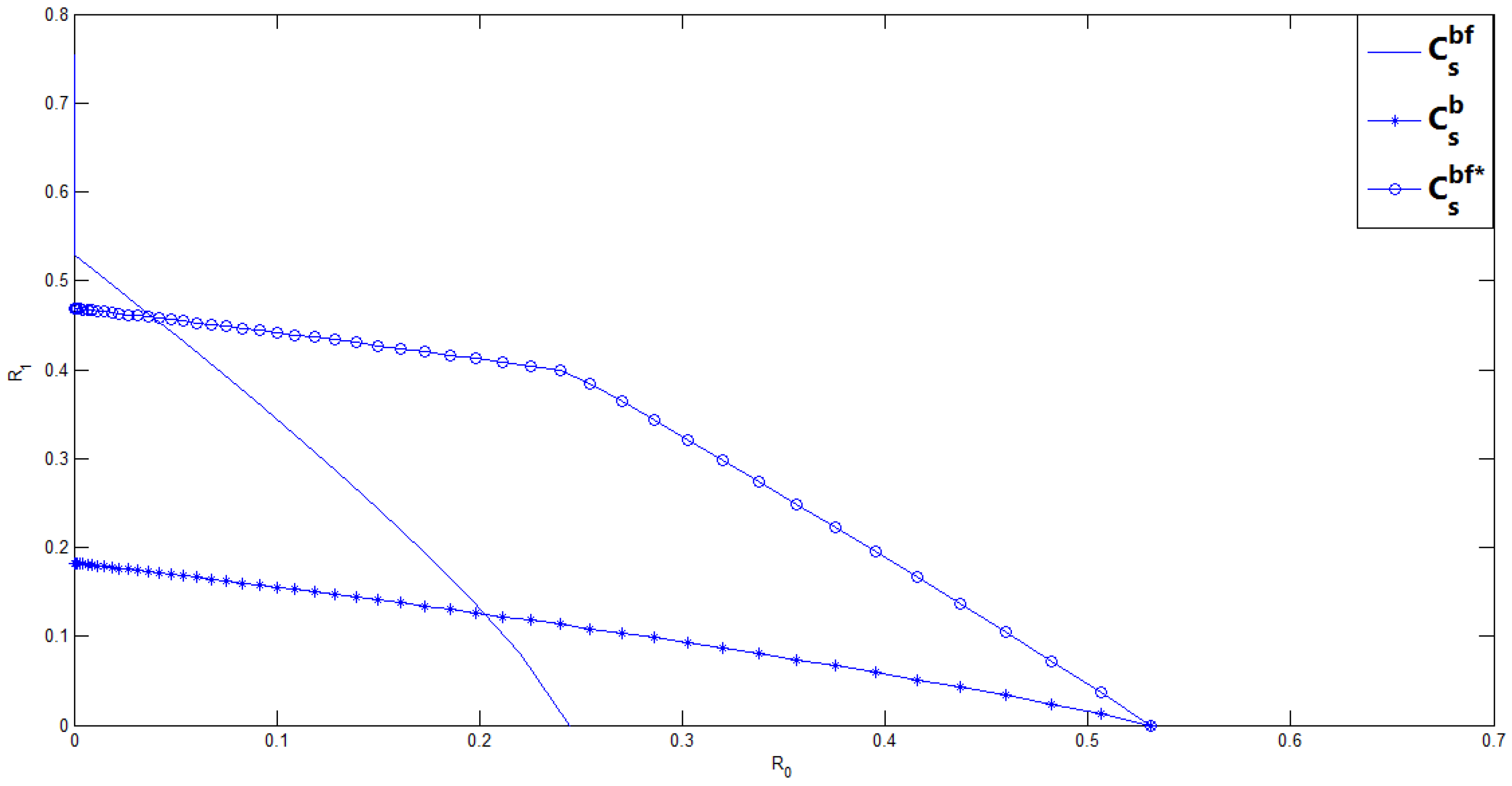

- In general, it is not known which is larger: our new inner bound or Ahlswede and Cai’s earlier inner bound. In the next section, we consider a binary case of the BC-CM with noiseless feedback and compute both inner bounds for it. This binary example shows that the maximum achievable secrecy rate (the transmission rate of the confidential message under the perfect secrecy constraint) in our new inner bound is larger than that in Ahlswede and Cai’s inner bound. However, this enhancement comes at the cost of a lower transmission rate for the common message.

- Note that in our new inner bound, the auxiliary random variable U represents the encoded sequence for the common message, and V represents the encoded sequence for both the common and confidential messages. A further auxiliary random variable serves as the estimate of U shared by the legitimate receiver and the wiretapper, and its index is related to the update information generated from the noiseless feedback. Two other auxiliary random variables are, respectively, the legitimate receiver’s and the wiretapper’s estimates of V, and both of their indexes are also related to the update information. The inner bound is constructed by using the feedback in two ways: to generate a secret key shared between the transmitter and the legitimate receiver, and to generate update information from which estimates of the transmitted sequences U and V are constructed. These estimates of U and V help the legitimate receiver and the wiretapper improve their respective received symbols Y and Z.
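To illustrate the secret-key role of the feedback, the following Python sketch checks the one-time-pad property on which feedback-based key schemes rely. It assumes the key is uniform over k-bit strings and independent of everything the wiretapper observes (an idealization), and that encryption is modulo-2 addition; the function name `ciphertext_distribution` is hypothetical. Under these assumptions, the ciphertext distribution is the same for every confidential message, so the wiretapper learns nothing from the encrypted part.

```python
from collections import Counter
from itertools import product


def ciphertext_distribution(message, key_len):
    """Distribution of C = M xor K when K is uniform over all key_len-bit strings."""
    counts = Counter()
    for key in product((0, 1), repeat=key_len):
        cipher = tuple(m ^ k for m, k in zip(message, key))
        counts[cipher] += 1
    total = 2 ** key_len
    return {c: n / total for c, n in counts.items()}


k = 3
all_messages = list(product((0, 1), repeat=k))
dists = [ciphertext_distribution(m, k) for m in all_messages]

# The ciphertext distribution does not depend on the message (perfect secrecy),
# and it is uniform over the 2**k possible ciphertexts.
print(all(d == dists[0] for d in dists))      # True
print(len(dists[0]), set(dists[0].values()))  # 8 {0.125}
```

For each fixed message, the map from key to ciphertext is a bijection, which is why every ciphertext appears with probability 2^-k. Shannon's perfect-secrecy argument also requires the key to carry at least as many uniform bits as the message it protects.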

3. Binary Example of the BC-CM with Noiseless Feedback

- First, note that in the following explanation, quantities of the i-th block (1 ≤ i ≤ n) carry the block index i. This holds for the channel input, and the same convention applies to the other per-block sequences. For each block, the transmitted message is composed of a common message, a confidential message, a dummy message, and update information.

- (Encoding): In the i-th block, after the transmitter receives the feedback channel output , he generates a secret key from it and uses this key to encrypt the confidential message of the i-th block. In addition, since , the transmitter also knows the legitimate receiver’s channel noise at the i-th block; he therefore chooses as an estimate of , as the legitimate receiver’s estimate of , and as the wiretapper’s estimate of . Note that and the update information is part of the indexes of , and .

- (Decoding at the legitimate receiver): The legitimate receiver performs backward decoding, i.e., decoding starts from the last block. In block n, the legitimate receiver applies Ahlswede and Cai’s decoding scheme [3] to obtain his update information for block n. Then, using the channel output as side information, he applies the Wyner-Ziv decoding scheme [8] to obtain and . Since , the legitimate receiver knows his own channel noise for block , and thus he computes to obtain and the corresponding transmitted message for block . Repeating this procedure block by block, the legitimate receiver obtains all the transmitted messages (both confidential and common) for all blocks, and since he also knows the secret keys, he can decrypt the actual messages.

- (Decoding at the wiretapper): The wiretapper also performs backward decoding. In block n, the wiretapper receives , and he applies Ahlswede and Cai’s decoding scheme [3] to obtain his update information for block n. Then, using the channel output as side information, he applies the Wyner-Ziv decoding scheme [8] to obtain and . Since , the wiretapper knows the common message for block . Repeating this procedure, the wiretapper finally obtains the common messages of all blocks.
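The encoding and backward-decoding steps of the binary example can be mimicked in a toy Python simulation. It is a sketch under several assumptions: a modulo-2 additive noise model Y = X xor N for the legitimate receiver (consistent with the transmitter learning the noise from the feedback, though the exact equations are not shown here); a hypothetical key mapping `key_map` that keeps the first bits of the previous block's fed-back output; and idealized update information, where the noise of block i is assumed to be recovered without error from block i+1, so the Ahlswede and Cai and Wyner-Ziv coding steps are not modeled. It shows only the bookkeeping (removing the noise by XOR, the backward order, decryption with the shared key). It does not show the rates, the wiretapper, or the secrecy of the actual scheme.

```python
import random


def xor(a, b):
    """Component-wise modulo-2 sum of two equal-length bit lists."""
    return [u ^ v for u, v in zip(a, b)]


def key_map(y, key_len):
    """HYPOTHETICAL key mapping: the first key_len bits of a fed-back output."""
    return y[:key_len]


def run(num_blocks=4, common_len=3, key_len=4, p=0.1, seed=1):
    rng = random.Random(seed)

    def bits(k):
        return [rng.randint(0, 1) for _ in range(k)]

    common = [bits(common_len) for _ in range(num_blocks)]
    secret = [bits(key_len) for _ in range(num_blocks)]
    secret[0] = [0] * key_len  # assumption: block 1 has no feedback key, so no confidential bits

    Y, noise, update_info = [], [], [None] * num_blocks
    for i in range(num_blocks):
        # Key for block i comes from the feedback of block i-1 (none for the first block).
        key = key_map(Y[i - 1], key_len) if i > 0 else [0] * key_len
        x = common[i] + xor(secret[i], key)
        n = [1 if rng.random() < p else 0 for _ in x]  # legitimate receiver's noise
        y = xor(x, n)                                  # Y_i = X_i xor N_i
        assert xor(x, y) == n  # with y fed back, the transmitter learns the noise
        if i > 0:
            update_info[i] = noise[i - 1]  # block i describes the noise of block i-1 (idealized)
        Y.append(y)
        noise.append(n)

    # Backward decoding at the legitimate receiver.
    known_noise = {num_blocks - 1: noise[-1]}  # idealization: last block decoded directly
    decoded = [None] * num_blocks
    for i in reversed(range(num_blocks)):
        x_hat = xor(Y[i], known_noise[i])  # remove the noise: Y xor N = X
        c_hat, cipher = x_hat[:common_len], x_hat[common_len:]
        key = key_map(Y[i - 1], key_len) if i > 0 else [0] * key_len
        decoded[i] = (c_hat, xor(cipher, key))
        if i > 0:
            known_noise[i - 1] = update_info[i]  # unlocks the previous block
    return common, secret, decoded


common, secret, decoded = run()
print(all(decoded[i] == (common[i], secret[i]) for i in range(len(common))))  # True
```

Because the noise of block i becomes available only after block i+1 has been processed, the decoding loop must run from the last block to the first. This is the reason for the backward decoding described above.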

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| BC-CM | broadcast channel with confidential messages |

Appendix A. Proof of Theorem 1

Appendix A.1. Preliminary

Appendix A.2. Code Construction

- Transmission takes place over n blocks, and each block is of length N. Define the confidential message by , where () is for block i and takes values in . Further divide into , where () takes values in , and .

- Define the common message by , where () is for block i and takes values in .

- Let be a randomly generated dummy message transmitted over all blocks, and it is denoted by , where () is for block i and it takes values in .

- Let and be update information transmitted over all blocks, and they are respectively denoted by and , where and () are for block i and take values in and , respectively. Further divide into , where () takes values in , and . Moreover, further divide into , where () takes values in , and .

- Let , , , , , , and be the random vectors for block i (). Define , and a similar convention applies to , , , , , and . Specific values of these random vectors are denoted by lowercase letters.

- In each block i (), randomly produce i.i.d. sequences according to the probability , and index them as , where , , and . Here note that .

- For a given , randomly produce i.i.d. sequences according to the conditional probability , and index them as , where , , , and . Here note that and .

- The sequence is generated i.i.d. according to a new discrete memoryless channel (DMC) with transition probability . The inputs and output of this new DMC are , and , respectively.

- In each block i (), generate in two ways: the first way is to produce i.i.d. sequences according to the probability , and index them as , where 1 represents the first way to define , , and ; the second way is to produce i.i.d. sequences according to the probability , and index them as , where 2 represents the second way to define , , and .

- In each block i (), produce i.i.d. sequences according to the probability , and index them as , where , and .

- In each block i (), produce i.i.d. sequences according to the probability , and index them as , where and .

- In block 1, the transmitter chooses and to transmit.

- In block i (), the transmitter receives the feedback , and he tries to select a pair of sequences such that are jointly typical sequences. If there is more than one such pair, he chooses one at random; if there is no such pair, an error is declared. Based on Lemma A1, the error probability of this step goes to 0 if the corresponding rate condition holds.

  Moreover, the transmitter also tries to select a pair of sequences such that are jointly typical sequences. If there is more than one such pair, he chooses one at random; if there is no such pair, an error is declared. Based on Lemma A1, the error probability of this step goes to 0 if (A3) and the corresponding rate condition hold. Once the transmitter has selected such pairs and , he chooses to transmit.

  Before choosing the transmitted codeword , produce a mapping . Furthermore, define as a random variable uniformly distributed over , independent of all the random vectors and messages of block i. Note that is the secret key known by the transmitter and the legitimate receiver, and is a specific value of . Reveal the mapping to the transmitter, the legitimate receiver, and the wiretapper. Once the transmitter finds a pair such that are jointly typical sequences, and a pair such that are jointly typical sequences, he chooses to transmit.

- In block n, the transmitter receives , and he finds a pair such that are jointly typical sequences. Moreover, he also finds a pair such that are jointly typical sequences. Then he chooses and to transmit.
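The random-coding steps of this construction can be sketched in Python on binary alphabets. The sketch covers an i.i.d. codebook, a superposition codebook generated around each first-layer codeword, a symbol-by-symbol DMC mapping, and the transmitter's search for a codeword that is jointly typical with the fed-back output (random choice among several candidates, error if there are none). It uses robust typicality in the sense of El Gamal and Kim [9]. It does not reproduce Lemma A1, the paper's distributions, or its indexing. All function names, probabilities, and sizes are illustrative assumptions.

```python
import random
from collections import Counter


def is_jointly_typical(a, b, pmf, eps):
    """Robust typicality: |pi(x,y) - p(x,y)| <= eps * p(x,y) for every pair (x, y)."""
    n = len(a)
    counts = Counter(zip(a, b))
    pairs = set(pmf) | set(counts)
    return all(abs(counts[xy] / n - pmf.get(xy, 0.0)) <= eps * pmf.get(xy, 0.0)
               for xy in pairs)


def iid_codebook(num_words, length, p_one, rng):
    """num_words binary sequences with i.i.d. Bernoulli(p_one) symbols."""
    return [[int(rng.random() < p_one) for _ in range(length)] for _ in range(num_words)]


def superposition_codebook(u_words, num_v, p_v1_given_u, rng):
    """For each cloud centre u, draw num_v sequences with V_j ~ P(V | U = u_j)."""
    return [[[int(rng.random() < p_v1_given_u[s]) for s in u] for _ in range(num_v)]
            for u in u_words]


def through_dmc(u, v, p_x1_given_uv, rng):
    """Generate x symbol by symbol through a DMC with P(X = 1 | u_j, v_j)."""
    return [int(rng.random() < p_x1_given_uv[(a, b)]) for a, b in zip(u, v)]


def covering_search(codebook, y, pmf, eps, rng):
    """Index of a codeword jointly typical with y (random tie-break), or None on error."""
    hits = [j for j, u in enumerate(codebook) if is_jointly_typical(u, y, pmf, eps)]
    return rng.choice(hits) if hits else None


rng = random.Random(0)
N = 8
u_book = iid_codebook(64, N, 0.5, rng)
v_book = superposition_codebook(u_book, 4, {0: 0.3, 1: 0.7}, rng)
x = through_dmc(u_book[0], v_book[0][0],
                {(0, 0): 0.1, (0, 1): 0.6, (1, 0): 0.4, (1, 1): 0.9}, rng)
print(len(v_book), len(v_book[0]), len(x))  # 64 4 8

y = [0, 1] * (N // 2)  # stands in for the fed-back channel output
uniform = {(a, b): 0.25 for a in (0, 1) for b in (0, 1)}
j = covering_search(u_book, y, uniform, 0.5, rng)
print(j is None or is_jointly_typical(u_book[j], y, uniform, 0.5))  # True
```

In the actual proof, the codebook sizes grow exponentially in N, and Lemma A1 supplies the rate conditions under which such a search succeeds with high probability. The toy sizes used here are far too small to support these asymptotic statements.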

Appendix A.3. Equivocation Analysis

References

- [1] Wyner, A.D. The wire-tap channel. Bell Syst. Tech. J. 1975, 54, 1355–1387.

- [2] Csiszár, I.; Körner, J. Broadcast channels with confidential messages. IEEE Trans. Inf. Theory 1978, 24, 339–348.

- [3] Ahlswede, R.; Cai, N. Transmission, identification and common randomness capacities for wire-tap channels with secure feedback from the decoder. Gen. Theory Inf. Trans. Comb. 2006, 258–275.

- [4] Ardestanizadeh, E.; Franceschetti, M.; Javidi, T.; Kim, Y.-H. Wiretap channel with secure rate-limited feedback. IEEE Trans. Inf. Theory 2009, 55, 5353–5361.

- [5] Lai, L.; El Gamal, H.; Poor, H.V. The wiretap channel with feedback: Encryption over the channel. IEEE Trans. Inf. Theory 2008, 54, 5059–5067.

- [6] Yin, X.; Xue, Z.; Dai, B. Capacity-equivocation regions of the DMBCs with noiseless feedback. Math. Probl. Eng. 2013, 2013, 102069.

- [7] Dai, B.; Han Vinck, A.J.; Luo, Y.; Zhuang, Z. Capacity region of non-degraded wiretap channel with noiseless feedback. In Proceedings of the 2012 IEEE International Symposium on Information Theory (ISIT), Cambridge, MA, USA, 1–6 July 2012.

- [8] Wyner, A.; Ziv, J. The rate-distortion function for source coding with side information at the decoder. IEEE Trans. Inf. Theory 1976, 22, 1–10.

- [9] El Gamal, A.; Kim, Y.-H. Information measures and typicality. In Network Information Theory; Cambridge University Press: Cambridge, UK, 2011; pp. 17–37.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, X.; Dai, B.; Ma, Z. How Can We Fully Use Noiseless Feedback to Enhance the Security of the Broadcast Channel with Confidential Messages. Entropy 2017, 19, 529. https://doi.org/10.3390/e19100529