Variational Characterization of Free Energy: Theory and Algorithms

Abstract

1. Introduction

Outline

2. Certainty Equivalence

2.1. Donsker–Varadhan Variational Principle
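A compact statement of the variational principle named in this heading follows. The notation is an assumption of this sketch, not necessarily the paper's: P is a reference probability measure, W is a random variable bounded below (a work or cost functional), β > 0 is an inverse temperature, and KL denotes relative entropy.

```latex
% Donsker–Varadhan principle in free-energy form (assumes W bounded below)
F = -\beta^{-1}\log \mathbb{E}_P\!\left[e^{-\beta W}\right]
  = \min_{Q \ll P}\Big\{ \mathbb{E}_Q[W] + \beta^{-1}\,\mathrm{KL}(Q\,\|\,P) \Big\},
\qquad
\frac{dQ^*}{dP} = \frac{e^{-\beta W}}{\mathbb{E}_P\!\left[e^{-\beta W}\right]} .
```

At the minimizer Q*, the reweighted quantity e^{-βW} dP/dQ* equals the constant E_P[e^{-βW}] almost surely, so Q* is also the zero-variance importance sampling distribution.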

Importance Sampling
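A minimal sketch of how the variational principle and importance sampling fit together, in a hypothetical one-dimensional toy case chosen so that everything is known in closed form: P = N(0, 1), W(x) = x, β = 1, so F = -log E_P[e^{-W}] = -1/2. Restricting Q to Gaussian shifts N(m, 1) gives the objective E_Q[W] + KL(Q‖P) = m + m²/2, minimized at m = -1; sampling from that Q and reweighting with the Gaussian likelihood ratio yields an estimator with zero variance. Function names and the toy model are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy setting: P = N(0, 1), W(x) = x, beta = 1, so that
# E_P[exp(-W)] = exp(1/2) and the free energy is F = -log E_P[exp(-W)] = -1/2.

def dv_objective(m):
    """E_Q[W] + KL(Q || P) for the Gaussian proposal Q = N(m, 1): equals m + m**2 / 2."""
    return m + 0.5 * m**2

def free_energy_plain(n, rng):
    """Standard Monte Carlo: sample X ~ P and average exp(-W(X))."""
    x = rng.standard_normal(n)
    return -np.log(np.mean(np.exp(-x)))

def free_energy_is(n, rng, m):
    """Importance sampling: sample Y ~ N(m, 1), reweight by dP/dQ(y) = exp(-m*y + m**2/2)."""
    y = m + rng.standard_normal(n)
    log_ratio = -m * y + 0.5 * m**2
    return -np.log(np.mean(np.exp(-y + log_ratio)))

grid = np.linspace(-3.0, 1.0, 401)
m_star = grid[np.argmin(dv_objective(grid))]  # approximately -1, objective approximately -1/2
rng = np.random.default_rng(0)
print(m_star, dv_objective(m_star))
print(free_energy_plain(100_000, rng))        # near -0.5, with sampling noise
print(free_energy_is(10, rng, m_star))        # -0.5 up to rounding: zero-variance proposal
```

The minimum of the variational objective equals the free energy, and the minimizing proposal needs only a handful of samples.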

2.2. Computational Issues

Comparison with the Standard Monte Carlo Estimator

- (a) the speed of convergence towards the stationary distribution and

- (b) the (asymptotic) variance of the estimator.
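For independent samples, criterion (a) drops out and only (b) remains. The sketch below makes (b) concrete in a hypothetical Gaussian toy model (P = N(0, 1), W(y) = y, proposal Q_m = N(m, 1)), where the single-sample variance of the importance sampling estimator has the closed form e·(exp((m + 1)²) - 1). The model and function names are assumptions of this sketch.

```python
import numpy as np

# Hypothetical toy setting: P = N(0, 1), W(y) = y, proposal Q_m = N(m, 1).
# Each i.i.d. sample of the importance sampling estimator is
#   Z = exp(-W(Y)) * dP/dQ_m(Y) = exp(-(1 + m) * Y + m**2 / 2),  Y ~ Q_m,
# and E[Z] = exp(1/2) for every m; only the variance depends on m.

def is_samples(n, m, rng):
    y = m + rng.standard_normal(n)
    return np.exp(-(1.0 + m) * y + 0.5 * m**2)

def exact_variance(m):
    """Closed-form single-sample variance: e * (exp((m + 1)**2) - 1)."""
    return np.e * (np.exp((m + 1.0) ** 2) - 1.0)

rng = np.random.default_rng(0)
for m in (0.0, -0.5, -1.0, -2.0):
    z = is_samples(1_000_000, m, rng)
    # With i.i.d. samples the variance of the sample mean is var(Z) / n.
    print(f"m = {m:+.1f}: empirical var {z.var():.4f}, exact var {exact_variance(m):.4f}")
```

Here m = 0 is the standard Monte Carlo estimator, m = -1 gives zero variance, and overshooting to m = -2 is exactly as bad as not shifting at all.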

3. Certainty Equivalence in Path Space

3.1. Donsker–Varadhan Variational Principle in Path Space

3.1.1. Likelihood Ratio of Path Space Measures

3.1.2. Importance Sampling in Path Space
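A minimal sketch of importance sampling in path space via Girsanov's theorem, for a hypothetical toy case: the reference process is a standard Brownian motion B on [0, T] and the work functional is W = αB_T, so F = -log E[e^{-αB_T}] = -α²T/2. Paths of the controlled process dX = u(t) dt + dB are simulated and reweighted by the discretized likelihood ratio dP/dQ = exp(-∫u dB - ½∫u² dt). Only deterministic, time-dependent controls are covered; the function name and parameters are illustrative assumptions.

```python
import numpy as np

def path_is_estimate(u, alpha=1.0, T=1.0, n_steps=100, n_paths=10_000, seed=0):
    """Estimate F = -log E_P[exp(-alpha * B_T)] for standard Brownian motion B.

    Paths of dX = u(t) dt + dB are simulated with an Euler scheme, and each path is
    reweighted by the discretised Girsanov factor exp(-sum u dB - 0.5 * sum u^2 dt).
    Returns the free-energy estimate and the standard deviation of the weighted samples.
    """
    rng = np.random.default_rng(seed)
    dt = T / n_steps
    x = np.zeros(n_paths)
    log_ratio = np.zeros(n_paths)          # log dP/dQ accumulated along each path
    for k in range(n_steps):
        u_k = u(k * dt)                    # deterministic, time-dependent control
        db = np.sqrt(dt) * rng.standard_normal(n_paths)
        x += u_k * dt + db
        log_ratio += -u_k * db - 0.5 * u_k**2 * dt
    samples = np.exp(-alpha * x + log_ratio)
    return -np.log(samples.mean()), samples.std()

# Exact value for alpha = T = 1: F = -alpha^2 T / 2 = -0.5.
print(path_is_estimate(lambda t: 0.0))    # no control: plain Monte Carlo, noisy
print(path_is_estimate(lambda t: -1.0))   # constant control u = -alpha: zero variance up to rounding
```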

3.2. Revisiting Jarzynski’s Identity
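Jarzynski's identity, E[e^{-βW}] = e^{-βΔF}, can be checked numerically on a hypothetical toy model: overdamped Langevin dynamics in a harmonic trap V(x, λ) = k(x - λ)²/2 whose centre is dragged at constant speed, λ(t) = vt, starting from equilibrium. Translating the trap leaves the partition function unchanged, so ΔF = 0 exactly, while the mean work is positive. The model, parameters and function name are assumptions of this sketch, and the Euler–Maruyama discretization adds a small O(Δt) bias.

```python
import numpy as np

def dragged_trap_work(n_paths=10_000, k=1.0, v=1.0, beta=1.0, T=1.0, dt=1e-3, seed=0):
    """Nonequilibrium work for overdamped Langevin dynamics in the moving trap
    V(x, lam) = k/2 (x - lam)^2 with protocol lam(t) = v t (hypothetical toy model)."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(T / dt))
    # Start in equilibrium at lam = 0: X_0 ~ N(0, 1 / (beta k)).
    x = rng.standard_normal(n_paths) / np.sqrt(beta * k)
    work = np.zeros(n_paths)
    lam = 0.0
    for _ in range(n_steps):
        lam_new = lam + v * dt
        # Work increment: change of V when lam is switched at fixed x.
        work += 0.5 * k * ((x - lam_new) ** 2 - (x - lam) ** 2)
        lam = lam_new
        # Euler–Maruyama step of dX = -dV/dx dt + sqrt(2 / beta) dB.
        x += -k * (x - lam) * dt + np.sqrt(2.0 * dt / beta) * rng.standard_normal(n_paths)
    return work

beta = 1.0
work = dragged_trap_work(beta=beta)
delta_f_jarzynski = -np.log(np.mean(np.exp(-beta * work))) / beta
print("mean work:", work.mean())                 # positive (dissipation)
print("Jarzynski estimate:", delta_f_jarzynski)  # exact value is 0 for a translated trap
```

For k = v = T = β = 1 the continuous-time mean work is e^{-1} ≈ 0.37, while the exponential average recovers ΔF = 0.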

Optimized Protocols by Adaptive Importance Sampling

4. Algorithms: Gradient Descent, Cross-Entropy Minimization and Beyond

4.1. Gradient Descent

Algorithm 1 Gradient descent
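A finite-dimensional stand-in for gradient descent on the variational objective, under assumptions of this sketch: P = N(0, 1), proposals Q_m = N(m, 1) (so KL(Q_m‖P) = m²/2), and a hypothetical work function W(x) = x + x²/4, for which J(m) = E_{Q_m}[W] + m²/2 is minimized at m* = -2/3. The gradient of E_{Q_m}[W] is estimated by writing Y = m + Z (the reparametrization estimator); this is one possible choice and not necessarily the gradient estimator used in Algorithm 1.

```python
import numpy as np

def dW(x):
    """Derivative of the hypothetical work function W(x) = x + x**2 / 4."""
    return 1.0 + 0.5 * x

def gradient_descent_shift(m0=0.0, step=0.5, n=1_000, iters=50, seed=0):
    """Minimise J(m) = E_{Q_m}[W] + KL(Q_m || P), P = N(0, 1), Q_m = N(m, 1),
    using Y = m + Z with Z ~ N(0, 1), so that dJ/dm = E[W'(m + Z)] + m."""
    rng = np.random.default_rng(seed)
    m = m0
    for _ in range(iters):
        z = rng.standard_normal(n)
        grad = np.mean(dW(m + z)) + m   # Monte Carlo gradient estimate
        m -= step * grad
    return m

m_opt = gradient_descent_shift()
print(m_opt)  # close to the exact minimiser -2/3
```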

4.2. Cross-Entropy Minimization

Algorithm 2 Simple cross-entropy method
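A minimal sketch of a simple cross-entropy iteration in a hypothetical Gaussian toy model: P = N(0, 1), proposals Q_m = N(m, 1), and W(x) = x + x²/4. The target Q* with dQ*/dP ∝ e^{-W} is then N(-2/3, 2/3), so minimizing KL(Q*‖Q_m) over m gives m = E_{Q*}[Y] = -2/3. Each iteration estimates that mean by self-normalized importance sampling from the current proposal. Model, parameters and function names are assumptions of this sketch.

```python
import numpy as np

def W(x):
    """Hypothetical work function for this toy example."""
    return x + 0.25 * x**2

def cross_entropy_shift(m0=0.0, n=10_000, iters=10, seed=0):
    """Cross-entropy updates for the mean m of Q_m = N(m, 1) with P = N(0, 1).
    Each step minimises KL(Q* || Q_m) using samples of the current Q_m; for a
    Gaussian mean family the minimiser is the reweighted sample mean."""
    rng = np.random.default_rng(seed)
    m = m0
    for _ in range(iters):
        y = m + rng.standard_normal(n)
        log_w = -W(y) - m * y + 0.5 * m**2    # log of exp(-W) * dP/dQ_m
        w = np.exp(log_w - log_w.max())       # subtract max for numerical stability
        m = np.sum(w * y) / np.sum(w)
    return m

print(cross_entropy_shift())  # close to -2/3
```

Unlike gradient descent, each update solves the inner minimization in closed form, which is possible here because the proposal family is Gaussian.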

4.3. Other Monte Carlo-Based Methods

4.3.1. Approximate Policy Iteration

4.3.2. Least-Squares Monte Carlo
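The core step of least-squares Monte Carlo replaces a conditional expectation by a least-squares regression onto a finite set of basis functions. The sketch below shows only that regression step, not a full backward scheme for BSDEs, in a hypothetical toy case where the answer is known: for Brownian motion X, E[X_T² | X_t = x] = x² + (T - t). Basis, sample size and function name are assumptions.

```python
import numpy as np

def lsmc_conditional_expectation(t=0.5, T=1.0, n=100_000, seed=0):
    """One regression step of least-squares Monte Carlo (toy example):
    approximate x -> E[g(X_T) | X_t = x] for Brownian motion X and g(y) = y**2
    by least-squares projection onto the basis {1, x, x**2}."""
    rng = np.random.default_rng(seed)
    x_t = np.sqrt(t) * rng.standard_normal(n)
    x_T = x_t + np.sqrt(T - t) * rng.standard_normal(n)
    basis = np.column_stack([np.ones(n), x_t, x_t**2])
    coef, *_ = np.linalg.lstsq(basis, x_T**2, rcond=None)
    return coef

print(lsmc_conditional_expectation())  # exact coefficients: (T - t, 0, 1) = (0.5, 0, 1)
```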

5. Illustrative Examples

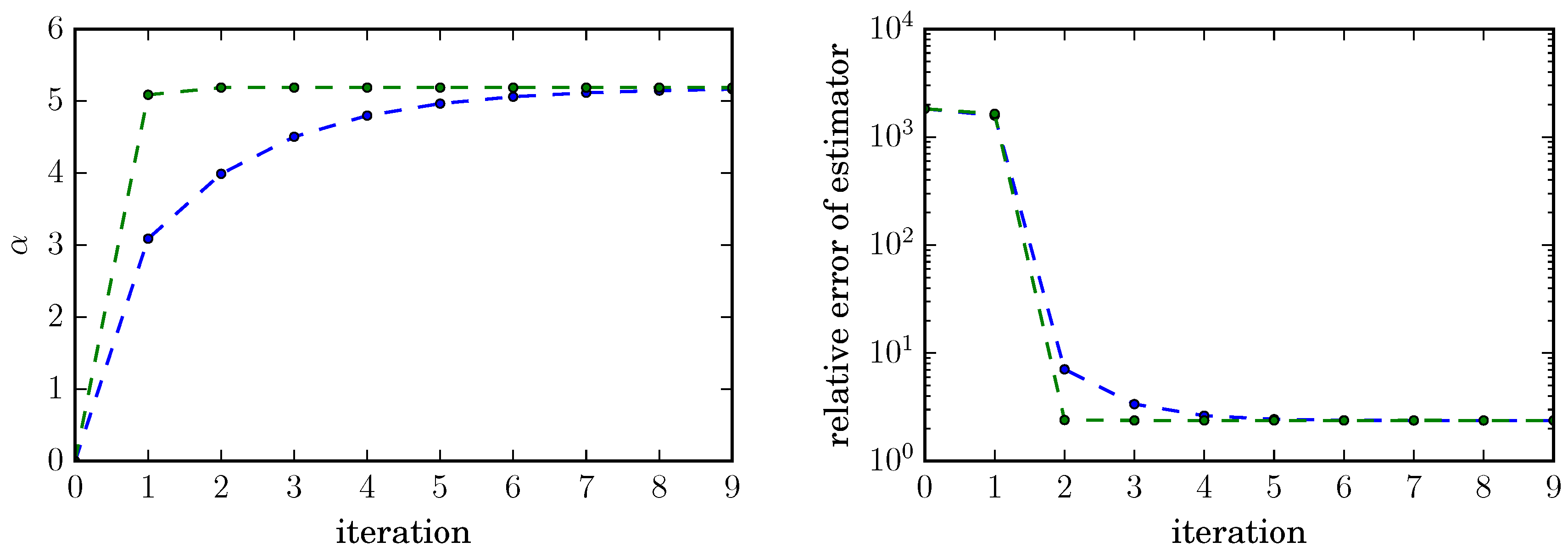

5.1. Example 1 (Moment Generating Function)

5.2. Example 2 (Rare Event Probabilities)
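The following toy sketch, which is not the example used in the paper, shows why importance sampling matters for rare events: p = P(X > a) for X ~ N(0, 1) is estimated by sampling from the shifted Gaussian N(a, 1) and reweighting with dP/dQ(y) = exp(-ay + a²/2). For a = 4 (p ≈ 3.2 × 10⁻⁵), plain Monte Carlo with 10⁵ samples sees only a few hits, whereas the shifted estimator reaches a relative error below one percent. Parameters and function names are illustrative assumptions.

```python
import math
import numpy as np

def tail_prob_is(a, n=100_000, seed=0):
    """Estimate p = P(X > a), X ~ N(0, 1), by sampling Y ~ N(a, 1) and reweighting
    with dP/dQ(y) = exp(-a y + a**2 / 2). Returns (estimate, relative standard error)."""
    rng = np.random.default_rng(seed)
    y = a + rng.standard_normal(n)
    weights = np.where(y > a, np.exp(-a * y + 0.5 * a**2), 0.0)
    p_hat = weights.mean()
    rel_err = weights.std(ddof=1) / (math.sqrt(n) * p_hat)
    return p_hat, rel_err

def tail_prob_plain(a, n=100_000, seed=0):
    """Plain Monte Carlo: fraction of standard normal samples above a."""
    rng = np.random.default_rng(seed)
    return np.mean(rng.standard_normal(n) > a)

a = 4.0
exact = 0.5 * math.erfc(a / math.sqrt(2.0))
p_is, rel = tail_prob_is(a)
print(exact, p_is, rel)
print(tail_prob_plain(a))  # based on only a handful of hits, possibly none
```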

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Yet Another Certainty Equivalence

Appendix B. Ratio Estimators

Appendix B.1. The Delta Method

Appendix B.2. Asymptotic Properties of Ratio Estimators
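Ratio estimators appear whenever a self-normalized average such as Σwf/Σw is used. A minimal sketch of the delta-method standard error for R̂ = f̄/ḡ, based on the linearization R̂ - R ≈ (f̄ - Rḡ)/E[g], which gives Var(R̂) ≈ Var(f - Rg)/(n E[g]²). The uniform test case and the function name are hypothetical.

```python
import numpy as np

def ratio_estimator(f_vals, g_vals):
    """Ratio estimator R_hat = mean(f) / mean(g) with a delta-method standard error:
    Var(R_hat) is approximately Var(f - R g) / (n * E[g]**2)."""
    n = len(f_vals)
    r_hat = f_vals.mean() / g_vals.mean()
    resid = f_vals - r_hat * g_vals
    se = np.sqrt(resid.var(ddof=1) / n) / abs(g_vals.mean())
    return r_hat, se

# Hypothetical example: X ~ Uniform(1, 2), f(x) = x**2, g(x) = x,
# so R = E[X^2] / E[X] = (7/3) / (3/2) = 14/9.
rng = np.random.default_rng(0)
x = rng.uniform(1.0, 2.0, size=100_000)
r_hat, se = ratio_estimator(x**2, x)
print(r_hat, se, 14 / 9)
```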

Appendix C. Finite-Dimensional Change of Measure Formula

Appendix C.1. Gaussian Change of Measure

Appendix C.2. Reweighting
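For reference, the Gaussian case of the finite-dimensional change of measure and the resulting reweighting identity, under the assumption (of this sketch) that P = N(0, Σ) and Q = N(μ, Σ) on ℝ^d share a symmetric positive definite covariance Σ and that f is P-integrable:

```latex
% Assumed setting: P = N(0, \Sigma), Q = N(\mu, \Sigma) on R^d
\frac{dP}{dQ}(x) = \exp\!\Big(-\mu^{\top}\Sigma^{-1}x + \tfrac{1}{2}\,\mu^{\top}\Sigma^{-1}\mu\Big),
\qquad
\mathbb{E}_P[f(X)] = \mathbb{E}_Q\!\Big[f(Y)\,\frac{dP}{dQ}(Y)\Big].
```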

Appendix D. Proof of Theorem 2

References

- Hammersley, J.M.; Morton, K.W. Poor Man’s Monte Carlo. J. R. Stat. Soc. Ser. B 1954, 16, 23–38.

- Rosenbluth, M.N.; Rosenbluth, A.W. Monte Carlo Calculations of the Average Extension of Molecular Chains. J. Chem. Phys. 1955, 23, 356–359.

- Deuschel, J.D.; Stroock, D.W. Large Deviations; Academic Press: New York, NY, USA, 1989.

- Dai Pra, P.; Meneghini, L.; Runggaldier, W.J. Connections between stochastic control and dynamic games. Math. Control Signals Syst. 1996, 9, 303–326.

- Delle Site, L.; Ciccotti, G.; Hartmann, C. Partitioning a macroscopic system into independent subsystems. J. Stat. Mech. Theory Exp. 2017, 2017, 83201.

- Boué, M.; Dupuis, P. A variational representation for certain functionals of Brownian motion. Ann. Probab. 1998, 26, 1641–1659.

- Hartmann, C.; Banisch, R.; Sarich, M.; Badowski, T.; Schütte, C. Characterization of rare events in molecular dynamics. Entropy 2014, 16, 350–376.

- Fleming, W.H.; Soner, H.M. Controlled Markov Processes and Viscosity Solutions; Springer: New York, NY, USA, 2006.

- Hartmann, C.; Schütte, C. Efficient rare event simulation by optimal nonequilibrium forcing. J. Stat. Mech. Theory Exp. 2012, 2012.

- Jarzynski, C. Nonequilibrium equality for free energy differences. Phys. Rev. Lett. 1997, 78, 2690–2693.

- Sivak, D.A.; Crooks, G.E. Thermodynamic Metrics and Optimal Paths. Phys. Rev. Lett. 2012, 109, 190602.

- Oberhofer, H.; Dellago, C. Optimum bias for fast-switching free energy calculations. Comput. Phys. Commun. 2008, 179, 41–45.

- Rotskoff, G.M.; Crooks, G.E. Optimal control in nonequilibrium systems: Dynamic Riemannian geometry of the Ising model. Phys. Rev. E 2015, 92, 60102.

- Vaikuntanathan, S.; Jarzynski, C. Escorted Free Energy Simulations: Improving Convergence by Reducing Dissipation. Phys. Rev. Lett. 2008, 100, 109601.

- Zhang, W.; Wang, H.; Hartmann, C.; Weber, M.; Schütte, C. Applications of the cross-entropy method to importance sampling and optimal control of diffusions. SIAM J. Sci. Comput. 2014, 36, A2654–A2672.

- Dupuis, P.; Wang, H. Importance sampling, large deviations, and differential games. Stoch. Int. J. Probab. Stoch. Proc. 2004, 76, 481–508.

- Dupuis, P.; Wang, H. Subsolutions of an Isaacs equation and efficient schemes for importance sampling. Math. Oper. Res. 2007, 32, 723–757.

- Vanden-Eijnden, E.; Weare, J. Rare Event Simulation of Small Noise Diffusions. Commun. Pure Appl. Math. 2012, 65, 1770–1803.

- Roberts, G.O.; Tweedie, R.L. Exponential convergence of Langevin distributions and their discrete approximations. Bernoulli 1996, 2, 341–363.

- Glasserman, P. Monte Carlo Methods in Financial Engineering; Springer: New York, NY, USA, 2004.

- Lelièvre, T.; Stoltz, G. Partial differential equations and stochastic methods in molecular dynamics. Acta Numer. 2016, 25, 681–880.

- Bennett, C.H. Efficient estimation of free energy differences from Monte Carlo data. J. Comput. Phys. 1976, 22, 245–268.

- Øksendal, B. Stochastic Differential Equations: An Introduction with Applications; Springer: Berlin, Germany, 2003.

- Lapeyre, B.; Pardoux, E.; Sentis, R. Méthodes de Monte Carlo Pour les Équations de Transport et de Diffusion; Springer: Berlin, Germany, 1998. (In French)

- Sivak, D.A.; Chodera, J.D.; Crooks, G.E. Using Nonequilibrium Fluctuation Theorems to Understand and Correct Errors in Equilibrium and Nonequilibrium Simulations of Discrete Langevin Dynamics. Phys. Rev. X 2013, 3, 11007.

- Darve, E.; Rodriguez-Gomez, D.; Pohorille, A. Adaptive biasing force method for scalar and vector free energy calculations. J. Chem. Phys. 2008, 128, 144120.

- Lelièvre, T.; Rousset, M.; Stoltz, G. Computation of free energy profiles with parallel adaptive dynamics. J. Chem. Phys. 2007, 126, 134111.

- Lelièvre, T.; Rousset, M.; Stoltz, G. Long-time convergence of an adaptive biasing force method. Nonlinearity 2008, 21, 1155–1181.

- Hartmann, C.; Schütte, C.; Zhang, W. Model reduction algorithms for optimal control and importance sampling of diffusions. Nonlinearity 2016, 29, 2298–2326.

- Zhang, W.; Hartmann, C.; Schütte, C. Effective dynamics along given reaction coordinates, and reaction rate theory. Faraday Discuss. 2016, 195, 365–394.

- Hartmann, C.; Latorre, J.C.; Pavliotis, G.A.; Zhang, W. Optimal control of multiscale systems using reduced-order models. J. Comput. Nonlinear Dyn. 2014, 1, 279–306.

- Hartmann, C.; Schütte, C.; Weber, M.; Zhang, W. Importance sampling in path space for diffusion processes with slow-fast variables. Probab. Theory Relat. Fields 2017.

- Lie, H.C. On a Strongly Convex Approximation of a Stochastic Optimal Control Problem for Importance Sampling of Metastable Diffusions. Ph.D. Thesis, Department of Mathematics and Computer Science, Freie Universität Berlin, Berlin, Germany, 2016.

- Richter, L. Efficient Statistical Estimation Using Stochastic Control and Optimization. Master’s Thesis, Department of Mathematics and Computer Science, Freie Universität Berlin, Berlin, Germany, 2016.

- Nocedal, J.; Wright, S.J. Numerical Optimization; Springer: New York, NY, USA, 1999.

- Banisch, R.; Hartmann, C. A sparse Markov chain approximation of LQ-type stochastic control problems. Math. Control Relat. Fields 2016, 6, 363–389.

- Schütte, C.; Winkelmann, S.; Hartmann, C. Optimal control of molecular dynamics using Markov state models. Math. Program. Ser. B 2012, 134, 259–282.

- Bertsekas, D.P. Approximate policy iteration: A survey and some new methods. J. Control Theory Appl. 2011, 9, 310–355.

- El Karoui, N.; Hamadène, S.; Matoussi, A. Backward stochastic differential equations and applications. Appl. Math. Optim. 2008, 27, 267–320.

- Bender, C.; Steiner, J. Least-Squares Monte Carlo for BSDEs. In Numerical Methods in Finance; Carmona, R., Del Moral, P., Hu, P., Oudjane, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 257–289.

- Gobet, E.; Turkedjiev, P. Adaptive importance sampling in least-squares Monte Carlo algorithms for backward stochastic differential equations. Stoch. Proc. Appl. 2017, 127, 1171–1203.

- Hartmann, C.; Kebiri, O.; Neureither, L. Importance sampling of rare events using least squares Monte Carlo. 2018; in preparation.

- Papaspiliopoulos, O.; Roberts, G.O. Importance sampling techniques for estimation of diffusion models. In Centre for Research in Statistical Methodology; Working Papers, No. 28; University of Warwick: Coventry, UK, 2009.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hartmann, C.; Richter, L.; Schütte, C.; Zhang, W. Variational Characterization of Free Energy: Theory and Algorithms. Entropy 2017, 19, 626. https://doi.org/10.3390/e19110626
