Leveraging Receiver Message Side Information in Two-Receiver Broadcast Channels: A General Approach †

Abstract

1. Introduction

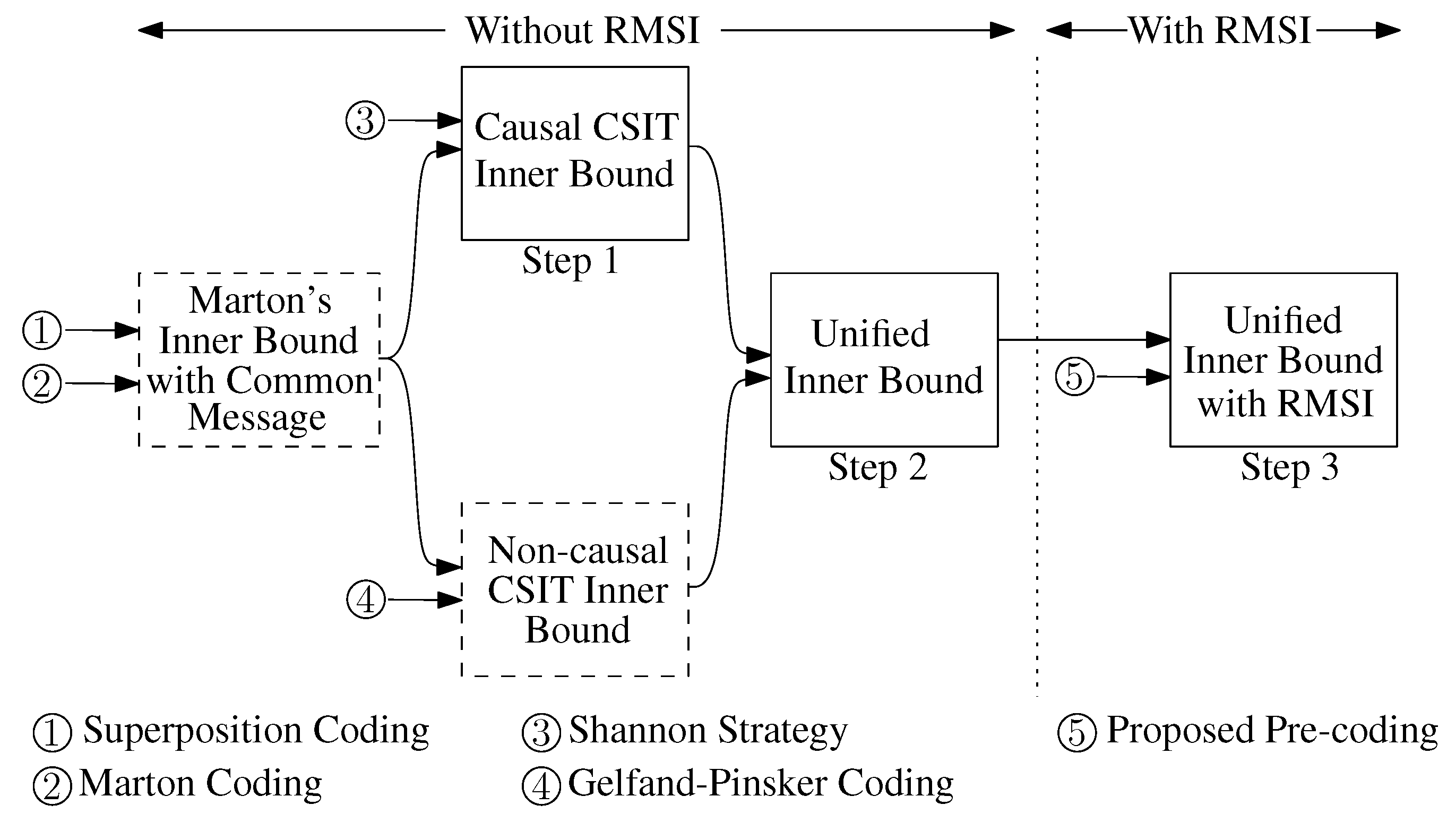

1.1. Broadcast Channel without RMSI

1.1.1. Without State

1.1.2. With Causal CSIT

1.1.3. With Non-Causal CSIT

1.2. Broadcast Channel with RMSI

1.2.1. Without State

1.2.2. With Causal CSIT

1.2.3. With Non-Causal CSIT

2. Summary of the Main Results

- For the channel with RMSI, without state, we establish the capacity region of two new cases, namely the deterministic channel and the more capable channel. Concurrently with the preliminary version of this work [23], Bracher and Wigger [24] established the capacity region of the semideterministic channel without state, for which our inner bound is also tight.

- For the channel with RMSI and causal CSIT, we establish the capacity region of the degraded broadcast channel where the channel state is available causally at: (i) only the transmitter; (ii) the transmitter and the non-degraded receiver; or (iii) the transmitter and both receivers.

- For the channel with RMSI and non-causal CSIT, we establish the capacity region of the degraded broadcast channel where the channel state is available non-causally at: (i) the transmitter and the non-degraded receiver; or (ii) the transmitter and both receivers.
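The benefit of RMSI is easiest to see in the extreme case of complementary side information, where each receiver already knows the whole message intended for the other receiver. The Python sketch below illustrates that special case only; it is not the general scheme of this paper. For a fixed input distribution p(x), it evaluates the known bounds for a two-receiver deterministic broadcast channel with Y1 = f1(X) and Y2 = f2(X). Without RMSI these are R1 ≤ H(Y1), R2 ≤ H(Y2) and R1 + R2 ≤ H(Y1, Y2) [6,8]. With complementary RMSI only R1 ≤ H(Y1) and R2 ≤ H(Y2) remain, which is the result of [15] specialised to deterministic outputs. In both cases the capacity region is the union of these rate sets over p(x). The helper names (`entropy`, `output_pmf`, `rate_bounds`) and the two toy channels are hypothetical.

```python
from collections import defaultdict
from math import log2


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def output_pmf(p_x, f):
    """Push the input pmf p(x) through a deterministic channel map y = f(x)."""
    pmf = defaultdict(float)
    for x, p in p_x.items():
        pmf[f(x)] += p
    return dict(pmf)


def rate_bounds(p_x, f1, f2):
    """Hypothetical helper: rate bounds of a deterministic two-receiver BC
    for one fixed input distribution p(x).

    Without RMSI:          R1 <= H(Y1), R2 <= H(Y2), R1 + R2 <= H(Y1, Y2).
    Complementary RMSI
    (receiver 1 knows M2,
     receiver 2 knows M1): R1 <= H(Y1), R2 <= H(Y2) only.
    """
    h1 = entropy(output_pmf(p_x, f1))
    h2 = entropy(output_pmf(p_x, f2))
    h12 = entropy(output_pmf(p_x, lambda x: (f1(x), f2(x))))
    return {
        "R1_max": h1,
        "R2_max": h2,
        # Largest sum rate: the joint-entropy constraint caps it without RMSI.
        "sum_rate_no_rmsi": min(h1 + h2, h12),
        # With complementary RMSI the sum-rate constraint is absent.
        "sum_rate_complementary_rmsi": h1 + h2,
    }


if __name__ == "__main__":
    # Toy channel 1: both receivers observe the same binary symbol, Y1 = Y2 = X.
    print(rate_bounds({0: 0.5, 1: 0.5}, lambda x: x, lambda x: x))
    # Toy channel 2: X in {0, 1, 2, 3}; receiver 1 sees the low bit, receiver 2 the high bit.
    print(rate_bounds({x: 0.25 for x in range(4)}, lambda x: x % 2, lambda x: x // 2))
```

For the first toy channel with uniform p(x), the maximum sum rate rises from H(Y1, Y2) = 1 to H(Y1) + H(Y2) = 2 bits per channel use, the familiar gain of transmitting the XOR of the two message bits. For the second, H(Y1, Y2) = H(Y1) + H(Y2) = 2 bits, so complementary RMSI gives no sum-rate gain under this input. In general, for a fixed p(x) the gain is H(Y1) + H(Y2) - H(Y1, Y2) = I(Y1; Y2), which is zero when the two outputs are independent.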

3. System Model

4. Broadcast Channel without RMSI

4.1. With Causal CSIT

4.2. With Non-Causal CSIT

4.3. A Unified Inner Bound

5. Broadcast Channel with RMSI

5.1. Moving from without RMSI to with RMSI

5.2. A Unified Inner Bound

6. New Capacity Results

6.1. With RMSI, without State

6.2. With RMSI, with Causal CSIT

6.3. With RMSI, with Non-Causal CSIT

6.4. Discussion on Prior Known Results

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

- .

- For sufficiently large n,

- If , , then, for sufficiently large n,

  Note that in this item, we have also used the fact that , which results in .

- , as n tends to infinity.

Appendix B

Appendix C

Appendix D

References

- Cover, T.M. Broadcast channels. IEEE Trans. Inf. Theory 1972, 18, 2–14.

- Ong, L.; Kellett, C.M.; Johnson, S.J. On the equal-rate capacity of the AWGN multiway relay channel. IEEE Trans. Inf. Theory 2012, 58, 5761–5769.

- Gallager, R.G. Capacity and coding for degraded broadcast channels. Probl. Inf. Transm. 1974, 10, 3–14.

- El Gamal, A.; Kim, Y.H. Network Information Theory; Cambridge University Press: Cambridge, UK, 2011.

- El Gamal, A. The capacity of a class of broadcast channels. IEEE Trans. Inf. Theory 1979, 25, 166–169.

- Pinsker, M.S. Capacity of noiseless broadcast channels. Probl. Inf. Transm. 1978, 14, 28–34.

- Han, T.S. The capacity region for the deterministic broadcast channel with a common message. IEEE Trans. Inf. Theory 1981, 27, 122–125.

- Marton, K. A coding theorem for the discrete memoryless broadcast channel. IEEE Trans. Inf. Theory 1979, 25, 306–311.

- Körner, J.; Marton, K. General broadcast channels with degraded message sets. IEEE Trans. Inf. Theory 1977, 23, 60–64.

- Steinberg, Y. Coding for the degraded broadcast channel with random parameters, with causal and noncausal side information. IEEE Trans. Inf. Theory 2005, 51, 2867–2877.

- Shannon, C. Channels with side information at the transmitter. IBM J. Res. Dev. 1958, 2, 289–293.

- Lapidoth, A.; Wang, L. The state-dependent semideterministic broadcast channel. IEEE Trans. Inf. Theory 2013, 59, 2242–2251.

- Khosravi-Farsani, R.; Marvasti, F. Capacity bounds for multiuser channels with non-causal channel state information at the transmitters. In Proceedings of the IEEE Information Theory Workshop (ITW), Paraty, Brazil, 16–20 October 2011.

- Gel’fand, S.I.; Pinsker, M.S. Coding for channel with random parameters. Probl. Control Inf. Theory 1980, 9, 19–31.

- Oechtering, T.J.; Schnurr, C.; Bjelakovic, I.; Boche, H. Broadcast capacity region of two-phase bidirectional relaying. IEEE Trans. Inf. Theory 2008, 54, 454–458.

- Tuncel, E. Slepian–Wolf coding over broadcast channels. IEEE Trans. Inf. Theory 2006, 52, 1469–1482.

- Kramer, G.; Shamai, S. Capacity for classes of broadcast channels with receiver side information. In Proceedings of the IEEE Information Theory Workshop (ITW), Lake Tahoe, CA, USA, 2–6 September 2007.

- Oechtering, T.J.; Wigger, M.; Timo, R. Broadcast capacity regions with three receivers and message cognition. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Cambridge, MA, USA, 1–6 July 2012.

- Khormuji, M.N.; Oechtering, T.J.; Skoglund, M. Capacity region of the bidirectional broadcast channel with causal channel state information. In Proceedings of the Tenth International Symposium on Wireless Communication Systems (ISWCS), Ilmenau, Germany, 27–30 August 2013.

- Oechtering, T.J.; Skoglund, M. Bidirectional broadcast channel with random states noncausally known at the encoder. IEEE Trans. Inf. Theory 2013, 59, 64–75.

- Kramer, G. Information networks with in-block memory. IEEE Trans. Inf. Theory 2014, 60, 2105–2120.

- Jafar, S. Capacity with causal and noncausal side information: A unified view. IEEE Trans. Inf. Theory 2006, 52, 5468–5474.

- Asadi, B.; Ong, L.; Johnson, S.J. A unified scheme for two-receiver broadcast channels with receiver message side information. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Hong Kong, China, 14–19 June 2015.

- Bracher, A.; Wigger, M. Feedback and partial message side-information on the semideterministic broadcast channel. In Proceedings of the IEEE International Symposium on Information Theory (ISIT), Hong Kong, China, 14–19 June 2015.

- Nair, C.; El Gamal, A. An outer bound to the capacity region of the broadcast channel. IEEE Trans. Inf. Theory 2007, 53, 350–355.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Asadi, B.; Ong, L.; Johnson, S.J. Leveraging Receiver Message Side Information in Two-Receiver Broadcast Channels: A General Approach †. Entropy 2017, 19, 138. https://doi.org/10.3390/e19040138
