1. Introduction

The general goal of this paper is to investigate the possibility of integrating the embodied and computational approaches to cognition. The relationship between these two approaches is more common and less obvious than may be suspected [1,2,3,4,5]. Here, I start from remarks on embodied cognition and, in spite of contemporary criticism [6,7], I try to defend it as an interesting theory. In this defense, I integrate it with contemporary studies of computational cognition. Hence, I concentrate on the possibility that cognitive processing is realized via computations performed by nonneural body parts.

Although there is no general agreement on this matter, one influential characterization of embodied cognition was proposed by Wilson and Foglia [8]:

“Many features of cognition are embodied in that they are deeply dependent upon characteristics of the physical body of an agent, such that the agent’s beyond-the-brain body plays a significant causal role, or a physically constitutive role, in that agent’s cognitive processing.”

This account, although convincing and straightforward, is not without problems. The most problematic, and at the same time most interesting, element of this definition is the notion of “deep dependency” between cognitive processing and the physical body of an agent. We can call this the “body-role problem”. This relation, which Wilson and Foglia [8] describe in terms of two roles played by the body, “significant causal” and “physically constitutive”, is the subject of ongoing controversy [4,6]. In the context of embodied cognition, there is no obvious answer to the question of what makes the body causally significant, or physically constitutive, for cognitive processing. Although Wilson and Foglia [8] provide some answers to the question of this dependence (they differentiate three roles of the body in cognition: as a constraint, distributor, and regulator), I want to explore this issue in a different way. In this paper, I assume that the body or its part is causally significant, or physically constitutive, for cognition if, and only if, it is a part of the system instantiating cognition. I suppose that there are two possible approaches to the inclusion of non-neuronal parts of the body as responsible for cognition. The first refers to a mutual manipulability criterion and was developed by Kaplan [9]; I will rely on this criterion in the next section of this paper. The second refers to the specific causal role of the body in cognition. However, only studies on causal specificity per se exist [10]; there is actually no work on the causal specificity of the body in the context of embodied cognition. I want to pursue the latter project and show that the body, especially non-neural body parts, realizes cognitively relevant computations. A more theoretically satisfactory account of the role of the body in cognition still has to be delivered, but, in this paper, I focus on research covering computational cognition and comparative biology (visual ecology and ethology) that suggests how such a solution can be achieved.

A good starting point for our endeavor is to describe cognition, more precisely, minimal cognition [11,12], as a kind of computational process of problem-solving. Therefore, I assume that:

Cognition is a computational process of problem-solving embedded in the sensorimotor organization of the system.

For the sake of clarity, we also need to say what we mean by computation:

Computation is information processing. As Miłkowski writes: “Information-processing systems are systems capable of computation in the broadest sense (...). A computational process is one that transforms a stream of input information to produce a stream of information at the output. During the transformation, the process may also rely on information that is part of the selfsame process (internal states of the computational process)” [13]. Or, as Ladyman puts it: “Computation is a kind of flow of information, in at least the most general sense of the latter idea: any computation takes as input some distribution of possibilities in a state space and determines, by physical processes, some other distribution consistent with that input” [14].

A similar approach is found in [15] and in studies integrating computation and embodied cognition [1,2]. Given this assumption, the role of the body can be characterized in two ways: as the enabling role of the body (ERB) or as the constraining role of the body (CRB). We can elaborate this distinction as follows:

CRB—the body is a constraining (limiting, blurring) and noise-generating three-dimensional world-agent boundary—a boundary that the cognitive system should learn about (or which constitutes a problem to be solved for the cognitive system) before it starts learning about what is beyond the boundary;

ERB—the body is a filtering, enabling, preprocessing device, thanks to which the cognitive system can easily and efficiently deal with its problems.

This distinction sets the context for my inquiry. In dealing with the previously mentioned problem of the role played by the body in cognition, I will concentrate on contemporary work dedicated to morphological computations (MC). This is why I focus here on the enabling role of the body: research on MC concentrates on the positive role of the body in cognitive processing. The choice of MC is justified, firstly, by the fact that it is often pointed out as the most promising solution to the body-role problem and, secondly, because it is the most obvious example of a connection between embodied and computational cognition. Last, but not least, MC motivates looking at the enabling role of the body; as Müller and Hoffmann [16] write, “the contribution of body morphology should be that the physical processes do not need to be modelled, but can be used directly.” Therefore, if the non-neural body parts realize cognitive processing and are part of the problem-solving system, this will be enough for a successful integration of the embodied and computational approaches to cognition.

At first glance, this idea seems convincing, but it is not without problems. Of course, the body is part of many solutions; using our hands, we open jars, hammer nails or catch a ball, but these are not interesting problems for a theory of embodied cognition. Our problem is to show how we can say that nonneural body parts play a role in cognitive processing via computations. In his book, The Mind Incarnate, Shapiro [17] considers the relation between minds and bodies, or more precisely, the possibility of inferring body properties from mind properties and vice versa:

“Suppose […] that, […], the extraterrestrials have minds just like human minds. What do they look like? Can their bodies consist in amorphous puddinglike blobs of slimy material? Can they have masses of tentacles surrounding a large, hexagonally shaped eye? Might they be whale-sized, carrying themselves about on hundreds of legs, like centipedes? Or, if they think like human beings, must they have bodies like human beings?”

Therefore, if we conceptualize cognition as computational problem solving, we can ask: if the above-mentioned extraterrestrials have “hexagonally shaped eyes” and “hundreds of legs” and are “whale-sized”, do they solve the problems they face in the same way as humans? Do they plan and choose actions in the same way as humans? Of course, if their neurons are similar to human neurons, some kind of similarity is possible between these systems, but as Shapiro [17] argues, it is reasonable to suppose that, depending on body morphology, the problem-solving of organisms will vary (more arguments for this claim will follow). Some problems that are solvable for one organism will be unsolvable for others (e.g., hunting at night or hunting distant prey, planning, spatial vision, or simultaneously monitoring the whole surroundings). It is possible that, in Marr’s [18] terminology, systems with different morphologies realize the same computations while using different algorithms [15]. However, I suppose that the sameness of computations can be upheld only at the level of abstract descriptions. Humans, mantis shrimp and Sabellidae all realize vision, but assuming that they realize the same computation would be misleading at the least, because the environmental factors are vastly different and the way vision works in these organisms varies dramatically. I will describe this relation as an example of “deep dependency”. We appeal to this kind of deep dependency in the context of the contemporary debate on morphological computations. Naturally, we should remember that the body in the cognitive system is, at the same time, enabling and constraining. Therefore, a full account of the role of the body should describe both roles and the relation between them. However, for the purposes of this paper, I will focus only on the enabling role of the body.

In searching for a solution, I will focus here on studies of morphological computations, the most evident example of research on the computational powers of nonneural body parts. However, we cannot ignore the problems concerning MC. First, there are few proper cases of MC. For a long time, the XOR-robot constructed by Paul [19] (a robot controlled by two networks which compute A OR B and A AND B, and which is able to display the exclusive disjunction, the XOR function, in its actions) was the only example of proper MC [16]. Second, some argue that only some MC are genuine computations; according to Müller and Hoffmann, the body described as a computational reservoir (I explain this later) is the only case of genuinely computational MC [16,17,20]. Third, others argue that, even if MC are computational, they are not genuinely cognitive (they are often described as non-cognitive). Finally, still others argue that, even though the body plays some computational role, it cannot be considered a proper part of the cognitive system [6,16]. These points amount to a serious challenge. Embodied cognition is a theory of cognition, so if MC do not play any cognitive role, then they are useless for any theory of cognition. However, if we could describe the cognitive role of MC, we would provide an answer to the body-role problem. Despite these difficulties, I will try to defend the claim that morphological computations play a cognitive role, but that this role is mostly not autonomous. A system that can compute independently of any other system is called computationally autonomous, and a system that cannot do so is computationally non-autonomous. Therefore, morphological computations require integration with some nonmorphological kind of computation.

2. Embodied Cognition: Some Problems and Proposed Solutions

The intuitiveness of the embodied cognition approach is increasingly being called into question for conceptual [6] and empirical [7] reasons. This is accompanied by constant controversy over what exactly the claim that cognition is embodied means. Is there a solution? As indicated above, the most controversial and most promising claim is that the body has a constitutive role in cognition. It amounts to the claim that non-neuronal parts of the body (rather than the environment) constitute cognitive processing. It means, in short, that a change in non-neuronal parts of the body should lead to changes in cognition. The loss of body parts should lead to deterioration of cognitive processing; however, the degree of deterioration may vary. The relation between bodily processes and cognitive ones should, at least according to some, be described in the non-causal terms of compositional explanations [21].

However, should we describe the relation between the realization base and the realized cognitive processing in such a binary way? Naturally, if the whole base that realizes a particular cognitive process is absent, the realized process should also be absent, but no one assumes that the non-neural body itself constitutes the whole base realizing a particular cognitive process. Therefore, problems with some cognitively relevant non-neural body parts should change or distort the cognitive process, but not wholly remove it [6]. Aizawa [6] argues that there is no evidence for embodiment even in the case of such an intuitively embodied cognitive process as perception. This conclusion is supposed to stem from research on the visual system. In particular, even subjects with total paralysis of their visual system can see. Therefore, eye movement does not constitute vision itself, but no doubt the paralysis changes perception [20]. Thus, the basic question is: even if paralysis does not lead to blindness, is the paralyzed visual system still functional? I am convinced that there is evidence, even in Aizawa’s [6] own paper, that such a system is no doubt working but is at least partially useless: vision becomes unstable and blurred, and objects are seen in displaced locations [22]. Therefore, the visual system cannot exploit eye movement for perception or fulfill its function in whole-body movement coordination. I am also convinced that, in this case, the mutual manipulability criteria for being a part of the cognitive system, proposed by Kaplan [9], are fulfilled. Kaplan [9] insists that “mechanism or system boundaries are determined by relationships of mutual manipulability between the properties and activities of putative components and the overall behavior of the mechanism in which they figure”. He follows Craver [23] and defines mutual manipulability as follows:

“(M1) When u is set to the value u1 in an (ideal) intervention, then w takes on the value f (u1) [or some probability distribution of values f (u1)]. (M2) When w is set to the value w1 in an (ideal) intervention, then u takes on the value f (w1) [or some probability distribution of values f (w1)].”

Kaplan uses the example of the relation between the internal dynamics of the musculoskeletal system and the neural control of this system. In our case, we have a relation between the visual system and the muscles involved in the coordination of eye movement. This is enough for us to achieve our goal, because the body does act differently, even if this difference is not always as powerful as was suspected.
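The logic of (M1) and (M2) can be illustrated with a toy Python check. This is only a sketch under strong assumptions: the dependency functions, their linear form, and the names `varies`, `mutually_manipulable`, `muscles_to_vision`, `vision_to_muscles` and `hair_to_vision` are hypothetical and are not taken from Kaplan or Craver. In this toy, a candidate part counts as a component only if intervening on it changes the overall behavior and intervening on the overall behavior changes it.

```python
def varies(dependency, interventions, tol=1e-9):
    """Return True if the dependent value changes across the intervention values."""
    outcomes = [dependency(value) for value in interventions]
    return max(outcomes) - min(outcomes) > tol


def mutually_manipulable(bottom_up, top_down, interventions):
    """Toy test of (M1) and (M2).

    bottom_up: maps an intervention on the component u to the behavior w.
    top_down:  maps an intervention on the behavior w to the component u.
    """
    return varies(bottom_up, interventions) and varies(top_down, interventions)


# Hypothetical dependencies, chosen only for illustration.
def muscles_to_vision(u):
    return 0.8 * u  # eye-muscle activity makes a difference to visual stability


def vision_to_muscles(w):
    return 0.5 * w  # visual task demands make a difference to eye-muscle activity


def hair_to_vision(u):
    return 1.0  # a body part that makes no difference to vision


interventions = [0.0, 0.5, 1.0]
eye_muscles_included = mutually_manipulable(
    muscles_to_vision, vision_to_muscles, interventions
)
hair_included = mutually_manipulable(
    hair_to_vision, vision_to_muscles, interventions
)
```

Under these assumptions, the eye muscles pass both conditions and the second candidate fails the first one, which is the pattern the criterion is meant to capture.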

However, is there enough evidence for such an extension of the cognitive system to the body? I must admit that one of the most astounding facts about embodied cognition is that, although theoretical research often appeals to the key role of the body in cognition, actual cases show quite a limited impact of the body on cognitive processing. There is evidence of the role played in cognitive processes by brain regions involved in action control [24,25] or interoception [26], and of the role of posture and gesturing in perception and thinking [27]. However, there is actually little evidence of the role of non-neural body parts in cognitive processing. Some examples of non-neural processing are found in [28]. First, there is research on morphological computations, more precisely on the possibility of realizing XOR-gates using robot morphology [19] (I will return to this example later); however, this research has limited application to embodied cognition. Second, there are the studies presented by Thompson [29] on the special role of hardware in processing, more precisely, on the role of “time delays, parasitic capacities, cross-talk, meta-stability” [28] in processing. Such states, normally described as malfunctions caused by hardware problems, can stabilize processing and make it more efficient. There are also neuroethological studies, not cited by Clark [28], focused on exotic sensory systems and presented by MacIver [30] (see: [4,8,31]). Nevertheless, this is not much, and the question of how we can develop this line into a fuller, more satisfying account of the body’s role in computational processing remains open.

We can start by assuming that the neuroethological studies mentioned above indicate that various morphologies and senses, and their placement, determine differing solutions to environmental problems. This approach is compatible with Keijzer and Arnellos’ [32] research on the evolution of the nervous system. Differing morphologies lead to differing kinds of perception and action control or, in Keijzer and Arnellos’ [32] terminology, differing animal sensorimotor organization (I refer to this organization in the definition of cognition introduced above), and can be informative about various kinds of embodied computational cognition (I should note that, despite my references to the work of Keijzer and his colleagues, he defends an embodied but not computational approach to cognition [11]). Keijzer and colleagues [33,34] study the evolution of the nervous system, mainly following Pantin [35], describing the basic neuronal unit as a multicellular, neuromuscular sheet from which all more complex nervous systems evolved. This neuromuscular unit learns through self-organization to act using some basic contractions and to exploit its own morphological properties. It is intriguing that this research is compatible with soft robotics studies on the exploitation of material properties in sensing and action control, in other words, in making the body a part of the controller.

Keijzer [34] describes this unit (the neuromuscular sheet) as sensing despite its lack of any specific sensory system. This basic system copes with environmental problems as a whole, without differentiation between the central and peripheral system. Therefore, when we want to know the function of the periphery of such a system (if we differentiate such a periphery), we need to understand the functions of the central system and vice versa. In such primitive organisms, the role of the morphology of non-neural body parts in coping with environmental problems is beyond doubt. Therefore, in the spirit of these considerations, we can show that morphology here plays both an enabling role (ERB) and a constraining role (CRB). However, is this the case for more complex systems? We can say that, in any case, organisms phylo- and ontogenetically learn their own morphology, their possibilities and constraints, and then exploit them in their actions and perceptions. We can observe this in octopuses [36] to the same degree as in human infants [37]. I believe that this research can be the first step to solving the body-role problem. Therefore, the body plays a significant causal role in cognitive processing. We can say that it is reasonable to conceptualize central and more peripheral, morphological properties as mutually related and dependent.

3. Computations with Morphology

As mentioned earlier, the main example of the role of the physical body in cognitive processing is morphological computations, which are determined or even realized by morphological features such as the shape or material properties of the body of a natural or artificial system. In Section 1, I mentioned problems with embodied cognition and with MC. The starting problem is whether MC are genuinely computational, and what kind of computations they are. The first problem is related to descriptions of morphological computations as physical processes. Thus, in Marr’s [18] terminology, we deal with the implementation level rather than the computational one. Indeed, when we look more carefully at papers on MC, we see that they concentrate on the implementation level because, in most cases, they describe media (e.g., mass-spring systems in [38]) that are not usually considered an implementation basis for computations. However, they differentiate implementation (e.g., a mass-spring system) from computation (e.g., an emulated Volterra series, a model of the nonlinear behavior of a system with “fading memory”; the output of this model depends not only on the input at a particular time, but on inputs at all other times); therefore, there is no room for confusion [38]. Another prima facie problem is that, in most cases, morphological computations are analog computations [39], that is, they are based on transformations of continuous variables. This becomes evident when morphological computations are explained in terms of reservoir computing [38,40,41,42,43]. However, even if MC are analog, this should not be a problem for the approach defended in this paper. I leave open the question of whether any morphological computations are “computable by a conventional Turing machine” [44], or require some kind of a hyper-Turing machine, as Siegelmann suggests.

Now, we can focus on MC. The concept of morphology refers to the shape, size and material properties of the bodies of animals and robots. These features are crucial to the performance or realization of computations, as Füchslin and colleagues [44] argue. They [44] also distinguish between morphological computation and morphological control, and argue that morphological control is achieved thanks to morphological computations. Therefore, in both cases, we deal with morphological computations, but the latter computations are related to control issues. However, Müller and Hoffmann [16], in their recent paper, argue that the main difference between morphological computation and morphological control is that no computations are involved in the latter, which they describe as a process of purely mechanical movement coordination. On this point, I follow Müller and Hoffmann [16].

However, Müller and Hoffmann [16] go further, and argue that not every example of morphological computation deserves to be called computation at all, mainly because not every example of MC present in the literature fulfills the criteria for being a computation.

While defining computation, Müller and Hoffmann [16] impose two conditions that must be fulfilled by any computation. The first condition is called the “usability constraint”. Piccinini argues that “if a physical process is a computation, it can be used by a finite observer to obtain the desired values of a function” [45]. In the same place, he adds that “If nervous systems are computing systems, then observers in this extended sense are the bodies of organisms whose behavior is influenced by their own neural computations.” However, is the reverse situation possible? I think that there is no substantial reason to deny that the body can be a computational system, and the brain its finite observer. The second condition requires that a computational system be able to perform multiple computations. Nevertheless, I adopt the view that a computational system need not perform multiple types of computation; it is enough that it performs any computation whatsoever [31]. Thus, it is enough for there to be only one computation in a system. Now, I can present three kinds of MC.

In the philosophical literature, the most discussed case of morphological computations is the XOR-robot constructed by Paul [19]. This robot consists of two perceptrons and one hiding wheel, and the description of its action is consistent with the description of an XOR-gate [13,15,28,46]. Unfortunately, this example is rarely mentioned in more recent studies of morphological computations. Naturally, it is not an example of analog computation, but it could be related to some form of analog computation in simpler organisms such as bacteria, in which there could exist mechanisms that play a role similar to that of the hiding wheel in the XOR-robot. Paul’s [19] paper is analyzed in detail by Symons and Calvo [15]. They indicate that the main significance of the XOR-robot is that it can realize a linearly non-separable function such as XOR, despite the fact that its control structures (perceptrons) cannot compute any such function, and that it achieves this by virtue of its own physical, morphological features. Casacuberta and colleagues [46] develop this line of argument and propose further architectures similar to Paul’s robot.
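The point made by Symons and Calvo can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that one perceptron computes OR and drives the wheel, that the other computes AND and takes the wheel out of contact with the ground, and that the robot moves only when the wheel is driven and in contact. The weights, biases and function names are hypothetical and are not taken from Paul's paper.

```python
def perceptron(weights, bias, inputs):
    """Single threshold unit: outputs 1 when the weighted sum plus bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def or_unit(a, b):
    # Linearly separable: fires when at least one input is 1.
    return perceptron((1.0, 1.0), -0.5, (a, b))


def and_unit(a, b):
    # Linearly separable: fires only when both inputs are 1.
    return perceptron((1.0, 1.0), -1.5, (a, b))


def robot_moves(a, b):
    """Behavior of the whole robot (assumed mechanics, see the lead-in)."""
    wheel_driven = or_unit(a, b)   # controller 1 turns the wheel
    wheel_hidden = and_unit(a, b)  # controller 2 removes the wheel from the ground
    # The combination is done by the body, not by either perceptron.
    return 1 if wheel_driven and not wheel_hidden else 0


truth_table = {(a, b): robot_moves(a, b) for a in (0, 1) for b in (0, 1)}
```

Neither unit computes XOR on its own; in this sketch the exclusive disjunction appears only in `robot_moves`, where the assumed mechanics of the wheel combine the two outputs.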

Even though this example is intriguing, it is also limited. There is no easy route from these results to an interesting account of cognitive processes. It merely shows that there is genuine MC. However, what we need is an argument that MC can be genuinely cognitive, so we need to extend the study of MC to more complex, sophisticated systems. It is difficult to expect any interesting generalization from Paul’s robot to, for example, the cognitive role of vertebrate bodies. Even if we have convincing examples of the cognitive role of the body in the simplest organisms, it is not obvious that this approach will generalize and remain useful for explaining cognition in more complex organisms such as vertebrates.

Therefore, in the rest of this paper, I focus on two other forms of morphological computing. The first is the self-structuring of information, whereby morphological features of the agent’s body impose a structure on incoming information, mainly through spatiotemporal coincidence or the ordering of received information. This property makes incoming information easier to cope with and could be exploited for dealing with environmental challenges. I argue, in contrast to Müller and Hoffmann [16], that the self-structuring of information is a genuine kind of morphological computation; that is, it is truly computational.

The second form of morphological computing is an integration of research on reservoir computing [47] with MC [16,38,40,41,42,43,48]. A reservoir is a concept “where any high-dimensional dynamic system with the right dynamic properties can be used as temporal ‘kernel’, ‘basis’ or ‘code’ to pre-process the data that it can be easily processed using linear techniques” [48]. Therefore, if we could treat the physical body as a computational reservoir (there is some preliminary evidence that we can [40,41,42,43]), we would have strong support for the claim that the body is engaged in cognitive processing. The body as a reservoir need only be sufficiently complex (nonlinear, and with “fading memory”), and thus it “can be utilized for ‘computational’ tasks” [16]. According to Hauser and colleagues, “fading memory is a continuity property of filters. It requires that for any input function u(·) ∈ U, the output (Fu)(0) can be approximated by the outputs (Fv)(0) for any other input function v(·) ∈ U that approximated u(·) on a sufficiently long time interval [−T, 0] in the past” [38]. Through this property, the body can perform, for example, temporal integration.
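The fading memory property can be made concrete with a small Python sketch. It uses a leaky integrator as a stand-in filter; this choice, the leak value and the names `leaky_filter`, `long_gap` and `short_gap` are assumptions made for illustration and are not the mass-spring systems studied by Hauser and colleagues. Two input histories that disagree in the distant past but agree on a recent window yield outputs that get closer as the shared window gets longer.

```python
def leaky_filter(inputs, leak=0.2, state=0.0):
    """Run a leaky integrator over the inputs and return the final state.

    Each step mixes the old state with the new input, so the influence of
    an input shrinks by a factor (1 - leak) at every later step.
    """
    for u in inputs:
        state = (1.0 - leak) * state + leak * u
    return state


def output_gap(shared_steps):
    """Output difference for two inputs that agree only on the last steps."""
    recent = [0.5] * shared_steps
    u = [1.0] * 100 + recent    # distant past at +1
    v = [-1.0] * 100 + recent   # distant past at -1
    return abs(leaky_filter(u) - leaky_filter(v))


short_gap = output_gap(5)   # short shared window: outputs still differ clearly
long_gap = output_gap(50)   # long shared window: outputs nearly coincide
```

The same filter also performs a simple form of temporal integration, since its state is a weighted average of recent inputs.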

4. Bodily Processing through Self-Structuring

Lungarella and Sporns [49] (see also: [50,51]) focus on the process of “… the self-structuring of sensory inputs through embodied action as a key principle for learning and development […].” They also emphasize that research on self-structuring information makes possible “a quantitative characterization of the relationship between environmental regularities and neural processing […]” [49] (p. 25). Embodied cognitive systems learn thanks to the “coordinated and dynamic interaction with environment” [50]. This key concept in work on morphological computations, which is important for the description of the sensorimotor interaction between system and environment, is described by Lungarella and colleagues in information-theoretic terms [50,51]. They describe an embodied agent as using bodily actions to reduce uncertainty and the dimensionality of information. They argue that the structure and placement of sensors, as well as possible movements, are determined by the morphology and degrees of freedom of an agent’s body and by its “complex yet balanced interplay across multiple time scales” [50] during the execution of an action. These actions affect the statistical structure of the available information: an acting system with a particular morphology shapes, or structures, the incoming information. Therefore, embodied cognitive processing is described in terms of entropy reduction, mutual information (between sensory and motor variables) [51], as well as complexity reduction and integration of information.
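To make the information-theoretic vocabulary concrete, the following Python sketch estimates mutual information between a motor variable and a sensory variable from paired discrete samples. The data sets and the names `mutual_information`, `coupled` and `uncoupled` are hypothetical; the sketch shows only the standard plug-in estimate and is not the measurement procedure used by Lungarella and colleagues.

```python
from collections import Counter
from math import log2


def mutual_information(pairs):
    """Plug-in estimate of I(M; S) in bits from (motor, sensor) samples."""
    n = len(pairs)
    joint = Counter(pairs)
    motor = Counter(m for m, _ in pairs)
    sensor = Counter(s for _, s in pairs)
    total = 0.0
    for (m, s), count in joint.items():
        p_joint = count / n
        p_indep = (motor[m] / n) * (sensor[s] / n)
        total += p_joint * log2(p_joint / p_indep)
    return total


# Hypothetical agent whose action structures its input: sensor follows motor.
coupled = [(0, 0), (1, 1)] * 50

# Hypothetical agent whose sensor readings are unrelated to its movement.
uncoupled = [(m, s) for m in (0, 1) for s in (0, 1)] * 25

mi_coupled = mutual_information(coupled)
mi_uncoupled = mutual_information(uncoupled)
```

In the coupled case, knowing the motor command removes all uncertainty about the binary sensor value, so the estimate is one bit; in the uncoupled case it is zero.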

Even if this issue is not fully elaborated in the contemporary literature, varying morphologies will impose varying structures on available information. No doubt, the human eye will not structure information in the same way as the eye of a housefly [52]. The compound eye of the latter, with its dense placement of facets, makes motion identification more efficient and less computationally demanding. In humans, a similarly important feature is, for example, the exploitation of multimodal dependencies during synchronous visuo-tactile stimulation when manipulating an object [37]. Müller and Hoffmann object that self-structuring does not deserve to be called truly computational [16], because it meets neither the usability condition nor the multiple-computation condition. Nevertheless, I argue that the self-structuring of information is computational. On the one hand, we can defend the claim that the system meets the usability condition, although in a subjective rather than an objective form: it is used for computations by the system of which it is a part. On the other hand, even if the body, in the case of self-structuring information, does not perform differing computations, it still performs some computation.

The self-structuring of information not only meets those conditions in this form; the morphological features of the fly’s eye are also an integral part of information processing in the fly: the system uses these features to perform computations. We can call this process the preprocessing of information, or the enabling of efficient computations. There is no doubt that, if we separate morphological from non-morphological processing in the fly’s case, it will be hard to understand what is going on in the fly’s central processing. This leads us to the second and no less important issue related to information self-structuring. Not only morphological but also central parts of the system are involved in self-structuring. Lungarella and Sporns [50,51], in their work, integrate some morphological features with features of the neural network, which is in this case a kind of central controller, because a reduction of entropy and an increase in mutual information (between sensory and motor variables) require not only a particular morphology but also an appropriate control system. Lungarella and Sporns demonstrate that “…information flow in sensorimotor networks is (a) quantifiable and variable in magnitude; (b) temporally specific, […]; (c) spatially specific, […]; (d) modifiable with experience […]; (e) dependent upon morphology” [51]. These ideas are quite new in bio-inspired robotics, the field in which Lungarella and Sporns [50,51] work, but similar ideas were developed in ethology thirty years ago.

Wehner proposes the theory of so-called “matched filters” [53,54]. The sensory system is described in terms of filters tailored to specific information from the environment, so researchers focus on “morphological design and physiological properties of the sensory organs”. They also point to “specific neural response to a crucial but complex natural stimulus” [54]. The concept points to the evolutionary relationship between the morphology of the senses and the nervous system: “From the morphological design and physiological properties of the sensory organs to the neural circuits that process sensory information in the brain, matched filtering has constituted a major evolutionary strategy across the animal kingdom” [54]. Thanks to this strategy, the body has adapted to the most demanding challenges of the environment and has reduced the energetic cost of coping with these challenges, so not only does it reduce entropy and increase mutual information, but it also facilitates efficient coping in the environment.

As we see in the account of self-structuring information, the morphology of a system and its central organization are integral parts of information processing, so it is reasonable not to exclude any part of the system. Müller and Hoffmann [

16] say that, in this case, morphology only pre-processes data, and should be described as “morphology facilitating perception” that is not truly computational. They say that morphology cannot truly compute in this case because it cannot explicitly use computations, and performs only one kind of processing. However, from the arguments presented, we conclude that this is not the case, and that such conclusions are misleading in the case of natural systems. At the same time, we also see that self-structuring is computationally non-autonomous.

5. Computing with Compliant Bodies as Reservoirs

Many contemporary cases of MC refer to studies of reservoir computations in explaining MC [

14]. As described in the previous part of this paper, a reservoir is (a) high-dimensional; (b) nonlinear; and (c) endowed with fading memory. Reservoirs should be engaged “as a nonlinear kernel in the spatiotemporal transformation of incoming data”. However, a reservoir also needs a classical “read-out mechanism” [

16]. As actual research indicates, this kind of system is suitable for “classification and prediction of time series” [

48]. Therefore, contrary to studies of information self-structuring, we deal here not with special processing realized by the body of some particular morphology, but with the use of any sufficiently complex dynamical system for emulation of some computations. In their recent work, Hauser and colleagues [

38,

40,

41,

42,

43] apply reservoir computing to a nonlinear mass-spring system without [

38] and with feedback [

40], and to a soft silicone arm, similar to an eel or to the arm of an octopus [

42,

43]. These simulations and experiments show that the more complex the body, the less complex the read-out system needed to perform computations, and vice versa. In the case of Hauser’s study, adding feedback reduces the need for “fading memory” in the body emulating the computations [

40].
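The notions of fading memory and of a separate read-out can be made concrete with a minimal sketch. It is not Hauser and colleagues’ mass-spring model: the five leaky nonlinear nodes, the weights `W_IN`, the constants `COUPLING` and `LEAK`, and the input sequences are all assumptions chosen only so that the dynamics contract. The reservoir itself is fixed; only the read-out weights would be adapted in a real application.

```python
import math

# Hypothetical, hand-picked input weights; nothing inside the reservoir is trained.
W_IN = [0.9, -0.7, 0.5, -0.3, 0.1]
COUPLING = 0.4  # weak neighbor coupling keeps the dynamics contracting
LEAK = 0.5      # how strongly each node is pulled toward its new drive


def run_reservoir(inputs):
    """Drive the fixed nonlinear reservoir with an input sequence; return the final state."""
    n = len(W_IN)
    state = [0.0] * n
    for u in inputs:
        # Each node mixes its old value with a nonlinear function of the
        # current input and the previous value of its ring neighbor.
        state = [
            (1 - LEAK) * state[i]
            + LEAK * math.tanh(W_IN[i] * u + COUPLING * state[(i + 1) % n])
            for i in range(n)
        ]
    return state


def readout(state, weights):
    """Linear read-out: the only part that would be trained for a given task."""
    return sum(w * s for w, s in zip(weights, state))


def max_gap(a, b):
    """Largest node-wise difference between two reservoir states."""
    return max(abs(x - y) for x, y in zip(a, b))


shared = [0.3, -0.2, 0.8, 0.1, -0.6] * 6  # 30 inputs common to both runs

# Inputs that differ only in the distant past leave almost no trace ...
old_gap = max_gap(run_reservoir([1.0] + shared), run_reservoir([-1.0] + shared))
# ... whereas inputs that differ in the most recent step clearly do.
recent_gap = max_gap(run_reservoir(shared + [1.0]), run_reservoir(shared + [-1.0]))

# Two different read-outs attached to the same body state give different outputs.
final_state = run_reservoir(shared)
out_a = readout(final_state, [1.0, 0.0, 0.0, 0.0, 0.0])
out_b = readout(final_state, [0.0, 1.0, 0.0, 0.0, 0.0])
```

With these assumed constants each update shrinks differences between states by a factor of at most 0.7, so the influence of an old input fades geometrically, while the choice of read-out weights decides which function of the recent input history is reported.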

In reservoir computing, the body is a kind of dynamical system, but one that is time-invariant. Such a system can realize quite sophisticated computations (e.g., emulate Volterra series, see: [

38]), perform temporal integration and combination of incoming information, reduce dimensionality and stabilize pressed information. Nevertheless, it cannot optimize its own performance in changing circumstances. At the same time, the whole system can perform different computations depending on the type of the read-out system connected to the reservoir. We can speculate that, depending on the neural projections of the same body to different neural structures, the body could be used for various computations.
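For orientation, the two ingredients mentioned above can be written out in standard textbook form. The notation is an assumption introduced here and is not taken from the cited work: $u$ is the input signal, $h_k$ are the Volterra kernels, $x_i(t)$ are the state variables of the body, and $w_i$ are the read-out weights.

```latex
% Volterra series, written out to second order:
y(t) = h_0
     + \int_0^{\infty} h_1(\tau)\, u(t-\tau)\, d\tau
     + \int_0^{\infty}\!\!\int_0^{\infty} h_2(\tau_1,\tau_2)\,
       u(t-\tau_1)\, u(t-\tau_2)\, d\tau_1\, d\tau_2 + \dots

% Linear read-out over the N state variables of the reservoir:
\hat{y}(t) = \sum_{i=1}^{N} w_i\, x_i(t)
```

On this reading, the body supplies the nonlinear, history-dependent terms, while the read-out only combines the states linearly. Changing the weights $w_i$ changes the computation reported by the whole system without changing the body.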

In Hauser and colleagues’ paper [

38], some advantages and limitations of this account are evident. On the one hand, the body is genuinely computational, in that it can perform various computations [

38], but it is quite unspecific [

38]—there is no explicit connection between a type of body and the kind of realized computation, and it cannot optimize in response to the changing environment. This kind of unspecificity is an unwanted feature in the context of the proposal in this paper of the ERB and CRB. Therefore, the body performs some computation but doesn’t perform any specific computations related to any specific kind of body, or any specific kind of morphology, and the optimization lies on the side of a read-out system or controller.

Therefore, we have a complex system analyzed as a kind of sophisticated computational device, thanks to which we can say that the body computes, but at the same time, we don’t have any specific account of morphology-type specific computations. Thus, the application of this research in favor of embodied cognition is less evident than it might seem because, in the theory of embodied cognition, connections between some type of body and some type of embodiment and cognition have to be detailed. Describing the body only as sufficiently complex is insufficient as a solution to the body-role problem proposed in the introduction of this paper. If the body type determines the way of coping with a problem, describing the body only as sufficiently complex doesn’t give enough possibilities for differentiation between bodies.

We can agree that the mechanical properties of passive-dynamic walkers (purely mechanical devices, which enable efficient walking on slopes [

55,

56]), not connected to any read-out system, are without any doubt non-computational. In addition, it is not certain that we can make this kind of system mobile and complex enough to perform reservoir computing. For these reasons, we exclude it as a possible computational system (in accordance with the usability constraint). Therefore, passive-dynamic walkers are not computational at all. However, in the case of self-structuring, we have part of the computational system, but it is non-autonomously computational, so some read-out system is required. In the case described by Lungarella and Sporns [

50,

51], it is a neural network. Earlier remarks on matched filters reinforce our claim that the body can be described as a preprocessing and filtering device. We know, thanks to the examples mentioned, that the body can compute (the case of reservoir computing by the compliant body). However, as we can see, this computational power is always tied to some reading device connected to the compliant body as a reservoir. The universality of this kind of morphological computation is read-out system-dependent. Therefore, a part of the body is computational, but always as part of some larger system.

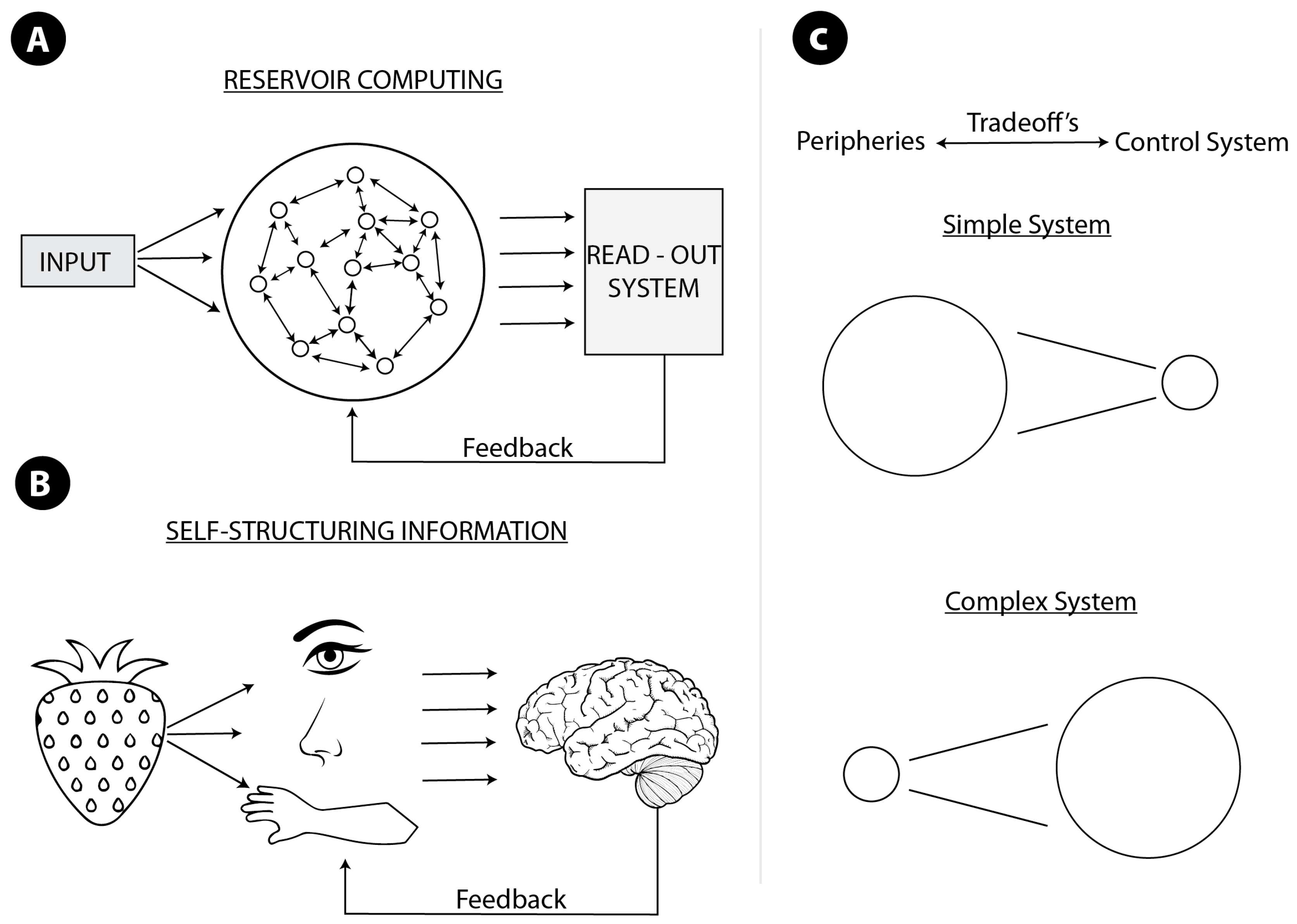

From these remarks, we see more similarities between the body as self-structuring and the body as a reservoir (see:

Figure 1A,B). In addition, what is no less interesting, theorists of reservoir computations describe reservoirs as preprocessing systems [

47], just as Müller and Hoffmann [

16] describe the body as self-structuring. As mentioned earlier, there is no reason to doubt that morphological computation (self-structuring information, and the body as a computational reservoir) can be consistent with usability constraints, despite Müller and Hoffmann’s [

16] criticism. I will show, in the last section of this paper, that there is a possible view of computational systems compatible with the arguments presented: the mechanistic approach to the wide computational mechanism. Now, we can focus on the interesting issue of tradeoffs between central and morphological computations.

6. Tradeoffs in Systems with Morphological Computations

Many researchers study tradeoffs between sensory systems and forms of more central processing (see:

Figure 1C). Researchers working on morphological computations argue that we can call MC a computation because, thanks to the specific morphology, some tasks can be solved using less demanding central computations. Thus, if some computations are needed to solve some problem, e.g., grasping a cup, then thanks to the design and material properties of a hand, the program controlling grasping can be simplified. If this is true, and if we assume that grasping always involves the same number of computations, we can conclude that the hand performs the remaining computations. Even if we follow Müller and Hoffmann [

57] in arguing that, although some morphological features of the body, e.g., the viscosity of the hand, can reduce the number of computations needed for task realization, e.g., cup grasping, we should not conclude that a viscous hand performs some computations, we can still see that there is an implicit tradeoff between the role of the body (even if this role is not computational) and the role of the central system in movement control and other issues. In accordance with these remarks, I defend the claim that MC is genuinely computational, but it is not autonomous, and its computational nature depends on the connection with some kind of central system. All examples of MC are related to some non-morphological computations. Therefore, we can suppose that if we deal with morphological computations, then there is, in most cases, some kind of tradeoff between the role of morphological and non-morphological computations. The connection between the two is close: MC always comes with non-MC. Moreover, there is a continuum of tradeoffs from systems based mostly on morphological computations to systems based mostly on non-morphological computations. We can also indicate a relation between the body and control system co-evolution, showing that successful modeling needs to model not only a control or controlled system but also the relation between them [

57,

58].

Of course, in our situation, each tradeoff can be analyzed. Here we differentiate (a) task-related tradeoffs; and (b) hardwired tradeoffs. In the former case, tasks force or enable a system/organism to allocate the processing of a solution more to central or more to peripheral systems. In the latter case, the anatomy of the system determines that all solutions to some kind of problem are based more on the central or more on the peripheral system. Here, we concentrate on the latter, which determines the former.

I assume that tradeoffs need at least two components between which a tradeoff is accomplished. Without one of the components, there is merely non-morphological computation or no computations at all. I base my argument on contemporary work about the tradeoff between central and bodily processing. This idea was present at the start of research on MC [

58], but more advanced research has only just started, and the idea is gaining attention amongst researchers [

57,

59,

60]. They show that, in systems with specific morphologies, more demanding tasks can be realized using simpler (cheaper) controls. In each system, the role of the non-neural body parts in computation may vary. Tradeoffs are observed, as we said earlier, in the matched filters theory. In this theory, as well as in previous ones, it is clear that there is a strong relationship between peripheral and more central processing. More complex and more tailored processing methods on the periphery reduce the complexity of central processing, but also vice versa, as we see in work on morphological computations with compliant bodies as reservoirs [

38,

40]. Systems without feedback need more complex bodies, with fading memory, but systems with more complex controllers/readouts can perform equally sophisticated computations with simpler bodies, without fading memory. In the next section, I will illustrate my argument using the example of a mantis shrimp’s visual system.

7. Mantis Shrimp Color Vision and Tradeoffs

Some authors in the context of MC concentrate on visual systems in a housefly [

52] or blowfly [

54]; others on locomotion and coordination in octopuses [

61], others work on electric eels or bats [

4,

30]. We here direct our attention to the visual system of a mantis shrimp.

Here, I choose the case of vision, which is present only in highly organized systems. Keijzer and Arnellos [

32] describe vision as a sensory sensitivity available only to a multicellular organism, a characteristic of multicellular embodiment:

“While sensitivity to environmental features at a […] unicellular scale is essential to maintain any living organization, in animals with active bodies an organization is present that has such sensitivity at the multicellular level. True (image forming) eyes are a good example. Here the spatial visual structure is somehow taken up by the animal, in a way that is not organized within any individual cell but spread out across several cells where the position of these cells with respect to one another becomes central. In this case, the individual cells are no longer the single level at which the organism interacts with ‘the’ environment but the multicellular organization brings in its own additional organization allowing the environment to expand into the macroscopic configurations that we ourselves inhabit.”

The visual system, its anatomy, and its functionality are nontrivially related to the multicellular structure of organisms whose bodies actively explore the environment. In this context, I want to make general comparisons between color vision in humans and in crustaceans, more specifically, the mantis shrimp. We will see here tradeoffs between receptors and the processing of available data.

There is no doubt that the environment is important for understanding the perceptual abilities of various animals, but the internal organization shouldn’t be omitted. The unique anatomy of a mantis shrimp’s visual system is well known [

62,

63]. Of course, we cannot omit the fact that a mantis shrimp is a marine creature. Thus, the mantis shrimp’s environment is quite different from the human environment, with some differences, for example in light refraction. However, mantis shrimps are also very different from other crustaceans [

63]. This creature lives in shallow waters, in a rich environment with very high light variance. While human color perception is trichromatic (three kinds of photoreceptor are involved in vision), it is known that the mantis shrimp’s color vision is dodeca-chromatic. As Marshall and Arikawa elucidate [

63], mantis shrimps have a variety of receptors in their visual system: “twelve for color, six for polarization, and two with an overlapping function for luminance tasks”. Therefore, as Thoen and colleagues [

64] note, they should be excellent in a test of wavelength discrimination, but the opposite is true. Mantis shrimps are very poor performers in wavelength comparisons. This rules out the possibility that their central processing in the case of color perception can be similar to color processing in many other organisms, especially primates. Two hypotheses about possible mechanisms of color vision in mantis shrimps were proposed: “(i) multiple dichromatic color-opponent systems […]; or (ii) binning of colors in 12 separate channels without any between-channel comparisons” [

64]. These hypotheses were verified using the following test: “test colors were presented together with the trained colors at varying wavelength intervals to determine at what point the animal could no longer discriminate between the two stimuli (i.e., when the success rate dropped to 50%)” [

64]. It turned out that “when the interval between the trained and test wavelengths was reduced to between 25 and 12 nm (mantis shrimps perform this task well when this interval is larger), the success rates dropped to around 50%, and it was clear that the stomatopods could no longer distinguish test from trained stimuli” [

64]. The authors argue that the mechanism from the first tested hypothesis “predicts very fine discrimination between 1 to 5 nm through the spectrum. Such fine spectral discrimination would be expected in the visual system that made analog comparisons between adjacent spectral sensitivities” [

64]. However, the results are quite the opposite, so they support the mechanism from the second of the proposed hypotheses.
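The difference between recognizing a color by binning and discriminating colors by comparison can be sketched in a deliberately crude toy model. Everything specific in it is an assumption made for illustration: the twelve evenly spaced channel peaks in `PEAKS_NM` are hypothetical and are not measured stomatopod sensitivities, and real receptors have broad, overlapping sensitivity curves rather than sharp bins.

```python
# Hypothetical channel peaks: 12 values, 25 nm apart (400, 425, ..., 675 nm).
PEAKS_NM = [400 + 25 * i for i in range(12)]


def bin_color(wavelength_nm):
    """Recognize a color as the index of the nearest channel; no channels are compared."""
    return min(range(len(PEAKS_NM)), key=lambda i: abs(PEAKS_NM[i] - wavelength_nm))


def distinguishable(a_nm, b_nm):
    """Two stimuli differ for this model only if they land in different channels."""
    return bin_color(a_nm) != bin_color(b_nm)


close_pair = distinguishable(500, 505)  # 5 nm apart, same channel
far_pair = distinguishable(500, 550)    # 50 nm apart, different channels
```

The point of the toy is only qualitative: a read-out that reports which channel fired is cheap, since it needs no between-channel arithmetic, but its resolution is bounded by the channel spacing, whereas an opponent scheme that compares neighboring channels could in principle resolve much finer differences at the cost of more central processing.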

Additionally, the uniqueness of a mantis shrimp’s eyes is not reduced to a multiplicity of receptors; it also performs extraordinary scanning eye movements. Thoen and colleagues [

64] propose that “scanning eye movements may generate a temporal signal for each spectral sensitivity, enabling them to recognize colors instead of discriminating them […]. This system is comparable to spectral linear analyzers”. This way of processing available data “enables the stomatopod to make quick and reliable determinations of color, without the processing delay required for multidimensional color space”. This is not insignificant, in the context of the predatory activity of mantis shrimps in their colorful environment.

As we can see, an organism with fewer photoreceptors can compute color information through a more computationally demanding process of multiple comparisons, as in the human case, whereas an organism with a color system as complex as the mantis shrimp’s must reduce the complexity of internal processing. This is an excellent example of the tradeoffs described above. A system based more on peripheral, morphological processing can (or sometimes even must) reduce the complexity of internal processing and exploit the periphery. Systems with simpler morphology, relying less on the periphery, must instead use more complex central processing. We are convinced that this is an example not of the irrelevance of morphology in an organism with a more central way of dealing with problems, but of constant tradeoffs between central and peripheral processing. In less complex organisms and artificial systems, the body could be mainly the solution to the problem; it plays mainly an enabling role. Nevertheless, in more complex systems, the body is often also the problem, and plays a constraining role. Therefore, more complex systems, before exploiting properties of their own bodies (perhaps to some limited degree), should learn these properties, because more central control units have to be properly calibrated to their complex processing. This is necessary for coping reliably with environmental problems.

Naturally, mantis shrimps are not the only good example. There are studies on octopuses, bats, electric eels, and geckos that also provide fuel for further research on embodied computational cognition. As Miłkowski [

65] notices, each periphery determines its own individual central solutions, which are successful only in connection with a particular kind of periphery. Human eyes with the central processing of a mantis shrimp would yield extremely poor vision, and a mantis shrimp’s eye with human central processing would be computationally intractable. Miłkowski [

65] also presents an interesting relation between humans and octopuses, where octopuses with human eyes would be almost blind because they have not developed the internal processing necessary for dealing with the blind spot present in human eyes. Now, we can move to the last issue of this paper, the account of MC as genuinely computational.

8. Wide Mechanisms and Non-Autonomous Computations

The main goal of this paper is to show that the body can play a “significant causal” and “physically constitutive” role in cognitive processing. Defending such an approach, I argue that morphological computations can be described as non-autonomous (central system-dependent) computations. This means that they are not autonomously computational, but play a genuinely computational role in connection with some read-out or control systems, and at the same time, they are cognitively important, but only in the context of the whole system. Without the context of tradeoffs, we cannot show the real importance of morphological computations in cognition. In the case of self-structuring information, morphologies determine what information is available for further stages of processing, how, and in what order, and generate the specific dependencies and ordering of available information. Therefore, in this processing (or computation), not only the sensory system but also the motor system is engaged. We call this, like Müller and Hoffmann [

16], preprocessing. The preprocessing system determines the kind of more central processes that are involved, and constitutes an integral part of the processing system. In the case of the body as a reservoir, the body is computing, but there is always a need for a read-out or control system.

Therefore, we know that the body could compute and make a difference. Some contemporary studies integrate computation and the mechanistic view with the work on embodied and extended cognition, which can provide support for our endeavor. There are at least four such studies: Miłkowski [

13] proposes an interpretation of morphological computations in terms of a computational mechanism; Kersten [

31] proposes mechanistic interpretations of Wilson’s [

3] wide computationalism; Roe and Baumgaertner [

66] propose an account of extended mechanistic explanations; and Kaplan [

9], in his mechanistic research on the boundaries of cognition, extends them beyond the neural system. Despite evident differences, these authors argue for an extension of mechanism beyond the nervous system, which may include morphological computational parts. For the sake of clarity, I present a few remarks on these differences.

Kaplan [

9] defends the mechanistic approach to cognition, while Miłkowski [

13] and Piccinini [

67] integrate mechanistic and computational approaches to cognition. However, they differ on the relation between computation and information processing (this is not the only difference, but I leave the rest aside). On this point, I follow Miłkowski [

13] in assuming that functional information processing is sufficient for computation. However, like all of these authors, I believe that the best explanation in cognitive science is mechanistic, so I look for the possibility to apply the mechanistic approach to MC. Kaplan’s remarks are helpful because he indicates the possibility of integration of embodied cognition with mechanistic explanations.

In this endeavor, I differ slightly from Miłkowski, and believe that MC need special treatment in the context of embodied cognition. For this reason, I follow Kersten [

31], and his work on wide computational mechanism (Roe and Baumgaertner [

66] propose an alternative extension of mechanistic explanations). Miłkowski [

13] is interested in indicating the similarities between morphological and non-morphological computations; my aim is to indicate differences between them, and to argue that MC are computations despite these differences.

I concentrate here on Kersten’s paper, in which he states that some physical computing cognitive systems might be entirely ensconced within the body, but some might as easily spread out over the brain, body, and the world [

31]. In his analysis, Kersten [

31] describes examples of the bat sonar system and blind human spatial navigation. Kersten [

31], following Piccinini [

67], enumerates features of the computational mechanism. First, it must be a kind of functional mechanism. Second, it must compute some mathematical function. Third, it must compute its function via the manipulation of medium-independent vehicles. Kersten [

31] argues that the sonar navigation system in bats extends not only through the body but also to the environment. It is functional and realizes the function of object detection. However, medium independence is achieved by using, in this analysis, Gibson’s [

68] idea of structural invariances, some “structural regularities in the environment […] picked up by organism’s perceptual system”. Such invariances are vehicles of computations, according to Kersten.

I believe that in the context of our previous remarks, it is evident that a system performing morphological computations is a kind of functional mechanism and realizes at least one computational function, from movement detection in a housefly, or the detection of a female in a blowfly [

53,

54], to a whole number of functions in the case of the body as a reservoir. Perhaps some controversy surrounds the possibility of medium independence. Because some authors describe MC as physical processes [

69], in such cases, substrate neutrality seems to be questionable. It should be remembered that this is a computational process realized by a physical system. However, Kersten’s [

31] proposal to use Gibson’s idea of invariance fits well with our view. It seems quite natural to interpret morphological properties as kinds of structural invariances and to describe them as vehicles of computations.

Thanks to the application of Kersten’s [

31] solution, we can say that MC are really computational. We can omit here the reference to the usability constraint for computation. Of course, this solution can only be a starting point for further detailed analysis of this issue. For example, more research is needed on the integration of a mechanistic approach to morphological computations with the claim that they are a kind of analog computation. Although some work has been done, there is still no full-blown mechanistic account of analog computations [

64], nor of the integration of analog and digital computations in this context, even though there are some arguments regarding the benefits of combining these two kinds of computations [

70].