1. Introduction

Small systems are continually bombarded by noise from their surroundings. Sometimes this noise is helpful; thermal and chemical fluctuations are the fuel that powers biological molecular motors [1]. More often, though, noise is a nuisance. Fluctuations in gene expression or transcription can lead to errors in downstream macromolecules, like RNA, that can be detrimental to a cell’s function [2]. Noise can also interfere with the functioning of artificial mesoscopic devices, such as micromechanical [3] and nanomechanical resonators [4,5]. In all these situations, an ancillary control mechanism can be employed to suppress fluctuations. This can take the form of a kinetic proofreading scheme [2] or the addition of an auxiliary control device that employs active feedback, as in a Maxwell’s demon [6,7,8,9,10,11,12].

No matter the control mechanism, the effect is to force the system into a statistical state distinct from its noisy equilibrium, where it will inevitably dissipate energy. Thus, maintaining a system away from equilibrium comes with an energetic cost. Attempts at predicting the properties of such nonequilibrium states by minimizing the energy dissipation have a long history, starting with Prigogine and coworkers [13] within linear irreversible thermodynamics (see also [14]). However, it seems no such general variational principle exists beyond the linear regime [14,15,16]. As such, our goal in this work is not to characterize the nonequilibrium state through a thermodynamic variational principle. Instead, we aim to characterize the energetic requirement to hold an originally equilibrium system in a prescribed out-of-equilibrium state using an additional external control system that does not alter the original system’s properties. Indeed, previously in Refs. [17,18], we showed that for specific classes of externally imposed controls, this minimum energetic cost could be expressed simply in terms of the system’s underlying equilibrium dynamics. In this Article, we expand this program to include new control mechanisms, and in the process offer a unifying perspective on these previous results. In particular, we demonstrate that for various control mechanisms the minimum entropy production (or dissipation) to keep a mesoscopic system in a specified nonequilibrium distribution can be expressed as a time derivative of the relative entropy between the target distribution and the uncontrolled equilibrium Boltzmann distribution. This information-theoretic characterization quantifies the intuitive notion that the farther a system is from equilibrium, the more energy must be dissipated to maintain it.

2. Setup

We have in mind a small mesoscopic system making random transitions among a set of discrete mesostates, or configurations,

, each with (free) energy

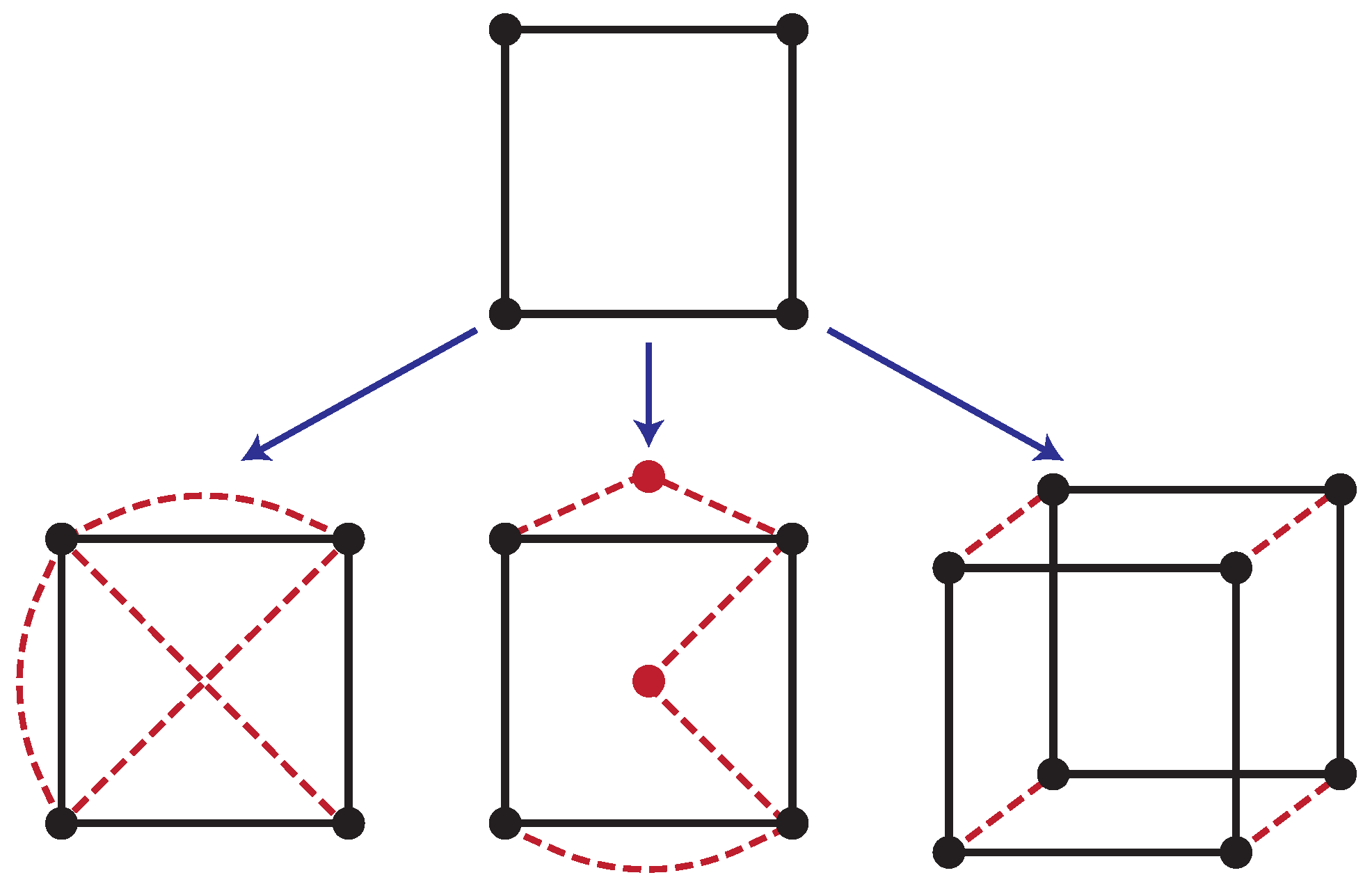

. We can visualize this dyanmics occurring on a graph (or network) like in

Figure 1, where each configuration is assigned a vertex (or node), and possible transitions are represented by edges (or links).

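The dynamical evolution is modeled as a Markov jump process on our graph according to transition rates $W_{ij}$ from $j$ to $i$, with $W_{ij} > 0$ only when $W_{ji} > 0$, so that every transition has a reverse. As such, the system’s time-dependent probability distribution $p_i(t)$ evolves according to the Master Equation [19]

$$\partial_t p_i(t) = \sum_j \left[ W_{ij} p_j(t) - W_{ji} p_i(t) \right] = \sum_j J_{ij}(t), \tag{1}$$

which constitutes a probability conservation equation with probability currents

$$J_{ij}(t) = W_{ij} p_j(t) - W_{ji} p_i(t).$$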

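Now, in the absence of any external control, we assume that our system relaxes to a thermal equilibrium steady state at inverse temperature $\beta$, given by the Boltzmann distribution

$$\pi_i = e^{-\beta(\varepsilon_i - F)}, \tag{2}$$

with equilibrium free energy $F = -\beta^{-1} \ln \sum_i e^{-\beta \varepsilon_i}$. To guarantee this, we impose detailed balance on the transition rates [19,…]:

$$W_{ij}\,\pi_j = W_{ji}\,\pi_i \quad \Longleftrightarrow \quad \frac{W_{ij}}{W_{ji}} = e^{-\beta(\varepsilon_i - \varepsilon_j)}. \tag{3}$$

In equilibrium, each transition is counterbalanced by its reverse. Our goal is then to maintain the system in a predetermined target nonequilibrium steady state $q_i$, and to characterize the minimum dissipation necessary.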
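To make the setup concrete, here is a minimal numerical sketch, assuming NumPy and a hypothetical four-state ring with energies chosen only for illustration. The rate parametrization $W_{ij} = e^{-\beta(\varepsilon_i - \varepsilon_j)/2}$ on each edge is our own illustrative choice; any rates obeying detailed balance (3) would serve. The sketch builds the generator of the Master Equation (1), checks that the Boltzmann distribution is stationary, and relaxes an arbitrary initial state toward it.

```python
import numpy as np

def boltzmann(eps, beta):
    """Equilibrium distribution pi_i = exp(-beta*(eps_i - F))."""
    w = np.exp(-beta * (eps - eps.min()))  # a constant shift of eps leaves pi unchanged
    return w / w.sum()

def db_rates(eps, beta, adjacency):
    """Rates W[i, j] for the jump j -> i obeying detailed balance.

    Illustrative (assumed) choice: W_ij = exp(-beta*(eps_i - eps_j)/2) on every
    edge of `adjacency`, so that W_ij / W_ji = exp(-beta*(eps_i - eps_j)).
    """
    de = eps[:, None] - eps[None, :]          # de[i, j] = eps_i - eps_j
    return adjacency * np.exp(-beta * de / 2)

def generator(W):
    """Matrix L with dp/dt = L @ p, i.e. the Master Equation."""
    return W - np.diag(W.sum(axis=0))         # column sums are the escape rates

# Four configurations on a ring graph (a hypothetical example network).
eps = np.array([0.0, 0.5, 1.0, 0.2])
beta = 1.0
adjacency = np.array([[0, 1, 0, 1],
                      [1, 0, 1, 0],
                      [0, 1, 0, 1],
                      [1, 0, 1, 0]], dtype=float)
W = db_rates(eps, beta, adjacency)
L = generator(W)
pi = boltzmann(eps, beta)

# Relax an arbitrary initial state with small explicit Euler steps.
p = np.array([1.0, 0.0, 0.0, 0.0])
dt = 0.01
for _ in range(10000):                        # total time t = 100
    p = p + dt * (L @ p)

print(np.round(p, 4), np.round(pi, 4))
```

Because the columns of the generator sum to zero, each Euler step conserves total probability, and the Boltzmann distribution is an exact fixed point of the update.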

3. Minimum Dissipation to Suppress Fluctuations

We are interested in pushing our system into, and holding it in, a statistical state distinct from the equilibrium Boltzmann distribution. One could imagine a variety of schemes to accomplish this goal. Perhaps the simplest applies if we have complete control over the system’s energy function $\varepsilon_i$. We could then hold the system in the statistical state $q_i$ by shifting all the energy levels to $\tilde\varepsilon_i = -\beta^{-1}\ln q_i$, thereby making the target state the new equilibrium state: $q_i = e^{-\beta\tilde\varepsilon_i}$. After an initial transient relaxation, the system would then remain in $q_i$ indefinitely, as it is in equilibrium. While there is a one-time energetic cost to vary the energy levels equal to the free energy difference [20], the system can be held in $q_i$ for free. Implementing such a protocol, however, generically requires very fine control over all the individual energies, which is often prohibitive [20]. As a result, there are a number of situations where this is not possible or not desirable. For example, nature does not utilize this control mechanism; in cells, where the free energies of molecules are fixed, noise reduction is implemented by coupling together various driven chemical reactions that constantly burn energy [2,21,22]. Likewise, fluctuations in quantum mesoscopic devices are often suppressed by coupling an auxiliary device that continually and coherently extracts noise through feedback [4,23,24,25].
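As an illustration of the energy-shifting protocol, the following sketch assumes NumPy, a hypothetical four-level system and target distribution, and illustrative complete-graph rates $W_{ij} = e^{-\beta(\varepsilon_i - \varepsilon_j)/2}$. It shifts the levels to $\tilde\varepsilon_i = -\beta^{-1}\ln q_i$ and checks that the Boltzmann distribution of the shifted levels is exactly $q_i$, so the shifted dynamics produce no entropy in $q_i$, whereas the original dynamics evaluated in $q_i$ produce entropy at a positive rate.

```python
import numpy as np

def boltzmann(eps, beta):
    w = np.exp(-beta * (eps - eps.min()))
    return w / w.sum()

def db_rates(eps, beta):
    """Assumed complete-graph rates W_ij = exp(-beta*(eps_i - eps_j)/2), i != j."""
    W = np.exp(-beta * (eps[:, None] - eps[None, :]) / 2)
    np.fill_diagonal(W, 0.0)
    return W

def link_entropy_production(W, p):
    """(1/2) sum_ij (W_ij p_j - W_ji p_i) ln(W_ij p_j / W_ji p_i)."""
    a = W * p[None, :]
    m = W > 0
    return 0.5 * float(np.sum((a - a.T)[m] * np.log(a[m] / a.T[m])))

beta = 2.0
eps = np.array([0.0, 0.3, 0.7, 1.1])
q = np.array([0.1, 0.4, 0.3, 0.2])          # hypothetical target distribution

eps_shifted = -np.log(q) / beta              # shifted energy levels
print(link_entropy_production(db_rates(eps, beta), q))          # positive
print(link_entropy_production(db_rates(eps_shifted, beta), q))  # zero up to rounding
```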

Motivated by this observation, we analyze control mechanisms where we cannot alter the internal energies $\varepsilon_i$. Instead, the statistical state of our system is manipulated by introducing additional pathways. In particular, the scenarios we address, depicted in Figure 1, are: (i) Edge control, in which additional driven transitions (or edges) are added with transition rates $V_{ij}$, modeling the coupling of additional thermodynamic reservoirs; (ii) Node control, in which additional configurations are incorporated and coupled to the original network through driven transitions with rates $V_{ij}$, allowing for ancillary intermediate configurations, such as in dissipative catalysis; (iii) Auxiliary control, in which an entirely new system is coupled to the controlled system, as in feedback control; and finally (iv) Chemical control, in which new chemical reactions are included. Though ostensibly a special case of edge control, chemical control adds new complications due to the possibility of breaking conservation laws. The addition of such controllers alters the system’s dynamics, leading to a modified Master Equation (cf. (1))

$$\partial_t p_i(t) = \sum_j \left[ W_{ij} p_j(t) - W_{ji} p_i(t) \right] + \sum_j \left[ V_{ij} p_j(t) - V_{ji} p_i(t) \right], \tag{4}$$

where, when control adds new states, the indices range over all configurations of the system plus controller.

Yet no matter which control mechanism is employed, we assume the net effect is to push our target system into the nonequilibrium steady state $q_i$. While designing such control is generically a challenging problem, we take it as given and instead focus on the minimum cost.

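Our only assumption is that the additional control transition rates satisfy a local detailed balance relation connecting them to the entropy flow into the thermodynamic reservoir that mediates the transition [26,27]:

$$\ln\frac{V_{ij}}{V_{ji}} = s_{ij}, \tag{5}$$

where $s_{ij} = -s_{ji}$ is the entropy flow (in units of $k_{\mathrm B}$) into that reservoir during the jump $j \to i$. For example, if we implement control by coupling a thermal reservoir at a different inverse temperature $\beta'$, then we require $s_{ij} = \beta'(\varepsilon_j - \varepsilon_i)$, which is proportional to the heat flow into the environment.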

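The local detailed balance relation implies that our super-system, composed of the system of interest and the controller, with rates $R^\nu_{ij}$, where $\nu$ specifies an uncontrolled ($R^{0}_{ij} = W_{ij}$) or controlled ($R^{c}_{ij} = V_{ij}$) transition, satisfies the second law of thermodynamics. Namely, the (irreversible) entropy production is nonnegative,

$$\dot\Sigma = \frac{1}{2}\sum_{i,j,\nu}\left(R^\nu_{ij}p_j - R^\nu_{ji}p_i\right)\ln\frac{R^\nu_{ij}p_j}{R^\nu_{ji}p_i} \ge 0, \tag{6}$$

which is typically split, $\dot\Sigma = dS/dt + \dot S_e$, into the derivative of the Shannon entropy $S = -\sum_i p_i \ln p_i$,

$$\frac{dS}{dt} = \frac{1}{2}\sum_{i,j,\nu}\left(R^\nu_{ij}p_j - R^\nu_{ji}p_i\right)\ln\frac{p_j}{p_i}, \tag{7}$$

and the entropy flow

$$\dot S_e = \frac{1}{2}\sum_{i,j,\nu}\left(R^\nu_{ij}p_j - R^\nu_{ji}p_i\right)\ln\frac{R^\nu_{ij}}{R^\nu_{ji}}. \tag{8}$$

Our goal will be to bound the entropy production (or dissipation) over all controls that fix the steady-state distribution to be $q_i$. Due to the local detailed balance relations (3) and (5), we can always connect the dissipation to the underlying energetics. Thus, our bound on the entropy production can always be reframed as a minimum energetic cost.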
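The decomposition of the entropy production (6) can be checked numerically. In this sketch, which assumes NumPy, the four-level energies, the two reservoirs at $\beta = 1$ and $\beta' = 0.25$, and the placement of the control edges are all hypothetical. Each reservoir $\nu$ contributes a rate matrix obeying $\ln(R^\nu_{ij}/R^\nu_{ji}) = \beta_\nu(\varepsilon_j - \varepsilon_i)$, so the entropy flow should equal each reservoir’s inverse temperature times the heat it absorbs.

```python
import numpy as np

def rates(eps, beta, mask):
    """Assumed rates with ln(R_ij / R_ji) = beta*(eps_j - eps_i) on the edges in `mask`."""
    de = eps[:, None] - eps[None, :]
    return mask * np.exp(-beta * de / 2)

def entropy_budget(channels, p):
    """Return (entropy production, dS/dt, entropy flow) in units of k_B per time.

    channels: list of rate matrices R^nu, with R^nu[i, j] the rate of j -> i.
    Every nonzero rate must have a nonzero reverse.
    """
    sigma = dS = flow = 0.0
    logp = np.log(p)
    dlog = logp[None, :] - logp[:, None]          # ln(p_j / p_i)
    for R in channels:
        a = R * p[None, :]
        J = a - a.T                               # currents J^nu_ij
        m = R > 0
        s = np.log(R[m] / R.T[m])                 # ln(R_ij / R_ji)
        sigma += 0.5 * np.sum(J[m] * (s + dlog[m]))
        dS += 0.5 * np.sum(J[m] * dlog[m])
        flow += 0.5 * np.sum(J[m] * s)
    return sigma, dS, flow

eps = np.array([0.0, 0.4, 0.9, 1.5])
full = 1.0 - np.eye(4)                            # system links: complete graph
pairs = np.zeros((4, 4))
pairs[0, 2] = pairs[2, 0] = pairs[1, 3] = pairs[3, 1] = 1.0
beta, beta_c = 1.0, 0.25                          # system bath and a hotter control bath
W = rates(eps, beta, full)
V = rates(eps, beta_c, pairs)
p = np.array([0.1, 0.2, 0.3, 0.4])                # an arbitrary (non-stationary) state

sigma, dS, flow = entropy_budget([W, V], p)
print(sigma, dS, flow)
```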

3.1. Edge Control

We begin our investigation with the edge control scheme, where we add a collection of additional edges to the graph, corresponding to new transitions mediated by additional thermal or chemical reservoirs. This analysis was originally carried out in [17]. We briefly review it here, as this control scheme is the simplest and all the following developments will build on it.

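In this scenario the super-system produces entropy in the controlled steady state $q_i$ at a rate

$$\dot\Sigma = \frac{1}{2}\sum_{i,j}\left(W_{ij}q_j - W_{ji}q_i\right)\ln\frac{W_{ij}q_j}{W_{ji}q_i} + \frac{1}{2}\sum_{i,j}\left(V_{ij}q_j - V_{ji}q_i\right)\ln\frac{V_{ij}q_j}{V_{ji}q_i}. \tag{9}$$

We now wish to bound this sum solely in terms of properties of the system’s environment, as codified by the $W_{ij}$, and the target distribution $q_i$.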

To this end, we observe that not only is the total entropy production positive, but link by link the entropy production is positive [12],

$$\left(R^\nu_{ij}p_j - R^\nu_{ji}p_i\right)\ln\frac{R^\nu_{ij}p_j}{R^\nu_{ji}p_i} \ge 0,$$

which follows readily from the inequality $(a - b)\ln(a/b) \ge 0$ for $a, b > 0$. Thus, each control edge only contributes additional dissipation, implying that the only unavoidable dissipation occurs along the system’s original links:

$$\dot\Sigma \ge \frac{1}{2}\sum_{i,j}\left(W_{ij}q_j - W_{ji}q_i\right)\ln\frac{W_{ij}q_j}{W_{ji}q_i}. \tag{10}$$

No matter how control is implemented, the system will inevitably make jumps along the original links, and those will on average dissipate free energy into the environment when the system is held in the target state $q_i$.

We now offer some physical insight into the meaning of (10). To this end, we recognize that the right-hand side of (10) is the entropy production rate of the equilibrium dynamics when the statistical state is the target state $q_i$. In other words, it represents the instantaneous entropy production we would observe if we turned off the control and allowed $q_i$ to begin to relax to equilibrium. An enlightening reformulation of this observation is offered by recalling the intimate connection between the time derivative of the relative entropy, $D(p\|\pi) = \sum_i p_i \ln(p_i/\pi_i)$ [28], and the entropy production rate:

$$-\frac{d}{dt}D\big(p(t)\|\pi\big) = \frac{1}{2}\sum_{i,j}\left(W_{ij}p_j - W_{ji}p_i\right)\ln\frac{W_{ij}p_j}{W_{ji}p_i}, \tag{11}$$

valid when $p(t)$ evolves under the uncontrolled Master Equation (1), which is a direct consequence of detailed balance (3). As such, we immediately recognize that the minimum dissipation (10) can be equivalently formulated as

$$\dot\Sigma \ge -\frac{d}{dt}D(q\|\pi), \tag{12}$$

where the derivative $d/dt$ should be understood to operate on $q$ as if it were evolving under the uncontrolled equilibrium dynamics. As the relative entropy is an information-theoretic measure of distinguishability [28], Equation (12) quantifies precisely the intuitive fact that it costs more to control a system the farther it is from equilibrium.
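A quick numerical check that the link-by-link bound (10) equals $-\frac{d}{dt}D(q\|\pi)$, with $D(q\|\pi) = \sum_i q_i\ln(q_i/\pi_i)$, as used in (12). The sketch assumes NumPy, a hypothetical three-level system with illustrative complete-graph rates $W_{ij} = e^{-\beta(\varepsilon_i-\varepsilon_j)/2}$, and a central finite difference for the time derivative, with $q$ evolved by the uncontrolled generator only.

```python
import numpy as np

def db_rates(eps, beta):
    """Assumed complete-graph rates W_ij = exp(-beta*(eps_i - eps_j)/2)."""
    W = np.exp(-beta * (eps[:, None] - eps[None, :]) / 2)
    np.fill_diagonal(W, 0.0)
    return W

def link_entropy_production(W, p):
    a = W * p[None, :]
    m = W > 0
    return 0.5 * float(np.sum((a - a.T)[m] * np.log(a[m] / a.T[m])))

def relative_entropy(p, r):
    return float(np.sum(p * np.log(p / r)))

def minus_dD_dt(W, q, pi, h=1e-6):
    """-d/dt D(q||pi), with q evolved by the *uncontrolled* dynamics (central difference)."""
    L = W - np.diag(W.sum(axis=0))
    qdot = L @ q
    forward = relative_entropy(q + h * qdot, pi)
    backward = relative_entropy(q - h * qdot, pi)
    return -(forward - backward) / (2 * h)

beta = 1.0
eps = np.array([0.0, 0.5, 1.2])
pi = np.exp(-beta * eps)
pi /= pi.sum()
W = db_rates(eps, beta)
q = np.array([0.2, 0.5, 0.3])                   # hypothetical target state

bound = link_entropy_production(W, q)           # right-hand side of (10)
print(bound, minus_dD_dt(W, q, pi))             # the two numbers agree
```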

We note that this analysis immediately offers the condition under which we saturate the minimum. As our bound originates in setting aside the extraneous entropy production due to the control transitions, the bound is saturated when this additional entropy production vanishes, which happens when the control transitions operate thermodynamically reversibly. This requires them to operate much faster than the system dynamics, so that at any instant the system is locally detailed balanced with respect to the control transitions on a link-by-link basis. In other words, the optimal transition rates must verify

$$\frac{V_{ij}}{V_{ji}} \simeq \frac{q_i}{q_j}, \qquad V_{ij} \gg W_{ij}. \tag{13}$$

Indeed, this implies the optimal dissipation on each driven link (cf. (5)) should be

$$s_{ij} = \ln\frac{q_i}{q_j}. \tag{14}$$
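The saturation condition can be illustrated with a toy controller. The construction below is our own hypothetical choice, not a prescribed design: on every system link we add one parallel driven edge with $V_{ij}q_j = k + \max(-J_{ij}, 0)$, where $J_{ij} = W_{ij}q_j - W_{ji}q_i$ is the system current in the target distribution $q_i$. The control current then cancels the system current link by link, so $q$ is stationary for any speed $k > 0$, while the control links contribute $\sum |J_{ij}|\ln(1 + |J_{ij}|/k)$ (summed over unordered links), which vanishes as $k \to \infty$. The sketch assumes NumPy and illustrative complete-graph rates $W_{ij} = e^{-\beta(\varepsilon_i-\varepsilon_j)/2}$.

```python
import numpy as np

def db_rates(eps, beta):
    W = np.exp(-beta * (eps[:, None] - eps[None, :]) / 2)
    np.fill_diagonal(W, 0.0)
    return W

def link_entropy_production(R, p):
    a = R * p[None, :]
    m = R > 0
    return 0.5 * float(np.sum((a - a.T)[m] * np.log(a[m] / a.T[m])))

def stationary(L):
    """Normalized null vector of the generator L (solve L p = 0 with sum(p) = 1)."""
    n = L.shape[0]
    A = np.vstack([L, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    return np.linalg.lstsq(A, b, rcond=None)[0]

def control_rates(W, q, k):
    """Hypothetical edge controller: one driven edge parallel to each system link.

    V_ij q_j = k + max(-J_ij, 0), so the control current on every link cancels
    the system current J_ij(q). Larger k means faster control: V_ij/V_ji -> q_i/q_j.
    """
    a = W * q[None, :]
    J = a - a.T
    flux = (W > 0) * (k + np.maximum(-J, 0.0))
    return flux / q[None, :]

beta = 1.0
eps = np.array([0.0, 0.5, 1.2, 0.3])
W = db_rates(eps, beta)
q = np.array([0.1, 0.2, 0.4, 0.3])          # hypothetical target, far from equilibrium
bound = link_entropy_production(W, q)       # minimum dissipation, as in (10) and (12)

for k in [1.0, 10.0, 100.0, 1000.0]:
    V = control_rates(W, q, k)
    total = bound + link_entropy_production(V, q)   # system links + control links
    print(f"k = {k:7.1f}  total EP = {total:.6f}  bound = {bound:.6f}")
```

The total entropy production decreases toward the bound as the control edges speed up, consistent with the reversible-control condition in the text.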

3.2. Node Control

Beyond its fundamental interest, the preceding analysis outlines an approach for characterizing the minimum dissipation to hold a system out of equilibrium. We now carry out this analysis again in a new scenario, but allow the addition of C extra nodes in the network and edges connecting them (Figure 1).

When we add additional nodes, the system plus controller will have $N + C$ configurations, with a steady-state distribution $P_i$ ($i = 1, \dots, N + C$) over the super-system. Now, in this case control will be successful when, in the resulting steady state, the relative likelihood of the N original states is given by the target distribution $q_i$; that is, $P_i = P_S q_i$ for $i = 1, \dots, N$, where $P_S = \sum_{i=1}^{N} P_i$. Unfortunately, this is not sufficient to fix the dissipation rate on the original set of links, as the currents are left undetermined. Indeed, it is possible to have the subset of N system nodes in the target distribution $q_i$, but have $P_S$ small, leading to small currents on the uncontrolled links, $W_{ij}P_j - W_{ji}P_i$, and negligible dissipation (cf. (6)). Thus, to arrive at a sensible bound we must also fix the probability currents $J_{ij} = W_{ij}P_j - W_{ji}P_i$ on the original uncontrolled links, or equivalently $P_S$, the total probability to be in the original configurations. In effect, we are maintaining the function of the system, as the currents represent different possible tasks for the system; e.g., they are the rate of production of a molecule or the rate at which heat flux is converted into useful work.

With this setup, the minimum entropy production rate is again given by the entropy production on the original undriven links in the global steady state

$$\dot\Sigma \ge \frac{1}{2}\sum_{i,j=1}^{N}\left(W_{ij}P_j - W_{ji}P_i\right)\ln\frac{W_{ij}P_j}{W_{ji}P_i}.$$

To make this an expression that only depends on $q_i$ and $P_S$, we substitute $P_i = P_S q_i$ to find

$$\dot\Sigma \ge -P_S\,\frac{d}{dt}D(q\|\pi).$$

Again, the minimum dissipation is dictated by how different the target state is from equilibrium, but here weighted by the total probability $P_S$, which fixes the system’s currents. Similarly, optimality is reached when the additional edges that connect the control nodes are very fast, minimizing their contribution to the entropy production.
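The substitution step is short enough to write out. Assuming, as in the text, that $P_i = P_S q_i$ on the $N$ original configurations, the factor $P_S$ cancels inside each logarithm and survives once in each current; the last equality is the same relative-entropy identity that turns (10) into (12), applied to $q$ under the uncontrolled dynamics:

$$
\begin{aligned}
\frac{1}{2}\sum_{i,j=1}^{N}\left(W_{ij}P_j - W_{ji}P_i\right)\ln\frac{W_{ij}P_j}{W_{ji}P_i}
&= \frac{1}{2}\sum_{i,j=1}^{N} P_S\left(W_{ij}q_j - W_{ji}q_i\right)\ln\frac{P_S\,W_{ij}q_j}{P_S\,W_{ji}q_i} \\
&= P_S\,\frac{1}{2}\sum_{i,j=1}^{N}\left(W_{ij}q_j - W_{ji}q_i\right)\ln\frac{W_{ij}q_j}{W_{ji}q_i}
= -P_S\,\frac{d}{dt}D(q\|\pi).
\end{aligned}
$$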

3.3. Auxiliary Control

Another scheme for control is the addition of an entirely new system, which we call the auxiliary. This scheme was originally analyzed in [18] for quantum mesoscopic devices modeled by a Markovian quantum Master Equation. In an effort to unify various results, we recapitulate this argument here, translated into classical language.

We now amend our state space with the addition of an auxiliary control system with states $\alpha$, so that each configuration of the super-system is labeled by the pair $(i, \alpha)$. Transition rates of the original system are assumed unaltered, but the new transitions between auxiliary states, with rates $V^{i}_{\alpha\alpha'}$, must depend on the system state $i$ in order to implement the feedback control. Such a structure is called bipartite [10,29,30,31], and is captured in the graph structure by the absence of diagonal links where the system and auxiliary transition simultaneously (Figure 1). Here, control is successful when the steady-state distribution $P_{i\alpha}$ has a marginal distribution on the system that is the target distribution, $\sum_\alpha P_{i\alpha} = q_i$.

Again we can bound the total entropy production in the system plus auxiliary with the entropy production on just the system links

$$\dot\Sigma \ge \frac{1}{2}\sum_{\alpha}\sum_{i,j}\left(W_{ij}P_{j\alpha} - W_{ji}P_{i\alpha}\right)\ln\frac{W_{ij}P_{j\alpha}}{W_{ji}P_{i\alpha}}.$$

At this point the lower bound still depends on the full distribution $P_{i\alpha}$ over the entire super-system. However, if we coarse-grain over the auxiliary, we can use the monotonicity of the relative entropy under coarse-graining [28] to weaken the bound to

$$\dot\Sigma \ge -\frac{d}{dt}D(q\|\pi).$$

This result was originally derived in the context of quantum mesoscopic devices [18]. Here we have reframed it in classical language.
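The coarse-grained bound can be probed numerically. The sketch below assumes NumPy, a hypothetical three-state system with illustrative rates $W_{ij} = e^{-\beta(\varepsilon_i-\varepsilon_j)/2}$, and a four-state auxiliary. It draws random joint distributions $P_{i\alpha}$ and confirms that the system-link entropy production evaluated on the joint distribution never falls below the same quantity evaluated on the marginal $q_i = \sum_\alpha P_{i\alpha}$, with equality when the auxiliary is uncorrelated with the system, $P_{i\alpha} = q_i m_\alpha$. This is a spot check rather than a proof; the underlying inequality is the joint convexity of $(a-b)\ln(a/b)$.

```python
import numpy as np

def db_rates(eps, beta):
    W = np.exp(-beta * (eps[:, None] - eps[None, :]) / 2)
    np.fill_diagonal(W, 0.0)
    return W

def link_entropy_production(W, p):
    """Also valid for unnormalized p (homogeneous of degree one in p)."""
    a = W * p[None, :]
    m = W > 0
    return 0.5 * float(np.sum((a - a.T)[m] * np.log(a[m] / a.T[m])))

def system_link_ep(W, P):
    """Entropy production on system links only, for a joint state P[i, alpha]."""
    return sum(link_entropy_production(W, P[:, a]) for a in range(P.shape[1]))

rng = np.random.default_rng(0)
W = db_rates(np.array([0.0, 0.6, 1.3]), beta=1.0)

gaps = []
for _ in range(1000):
    P = rng.random((3, 4))                  # random joint state: 3 system x 4 auxiliary
    P /= P.sum()
    q = P.sum(axis=1)                       # system marginal
    gaps.append(system_link_ep(W, P) - link_entropy_production(W, q))
print(min(gaps))                            # never negative in these trials
```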

3.4. Controlling Chemical Reaction Networks

As a final scenario, we turn to the control of a chemical reaction network. This scenario adds an additional complication: the incorporation of additional control reactions can break an underlying conservation law of the equilibrium dynamics [32,33]. For example, adding a chemostat that exchanges matter breaks the conservation of particle number or mass. This observation requires a slight modification of (12).

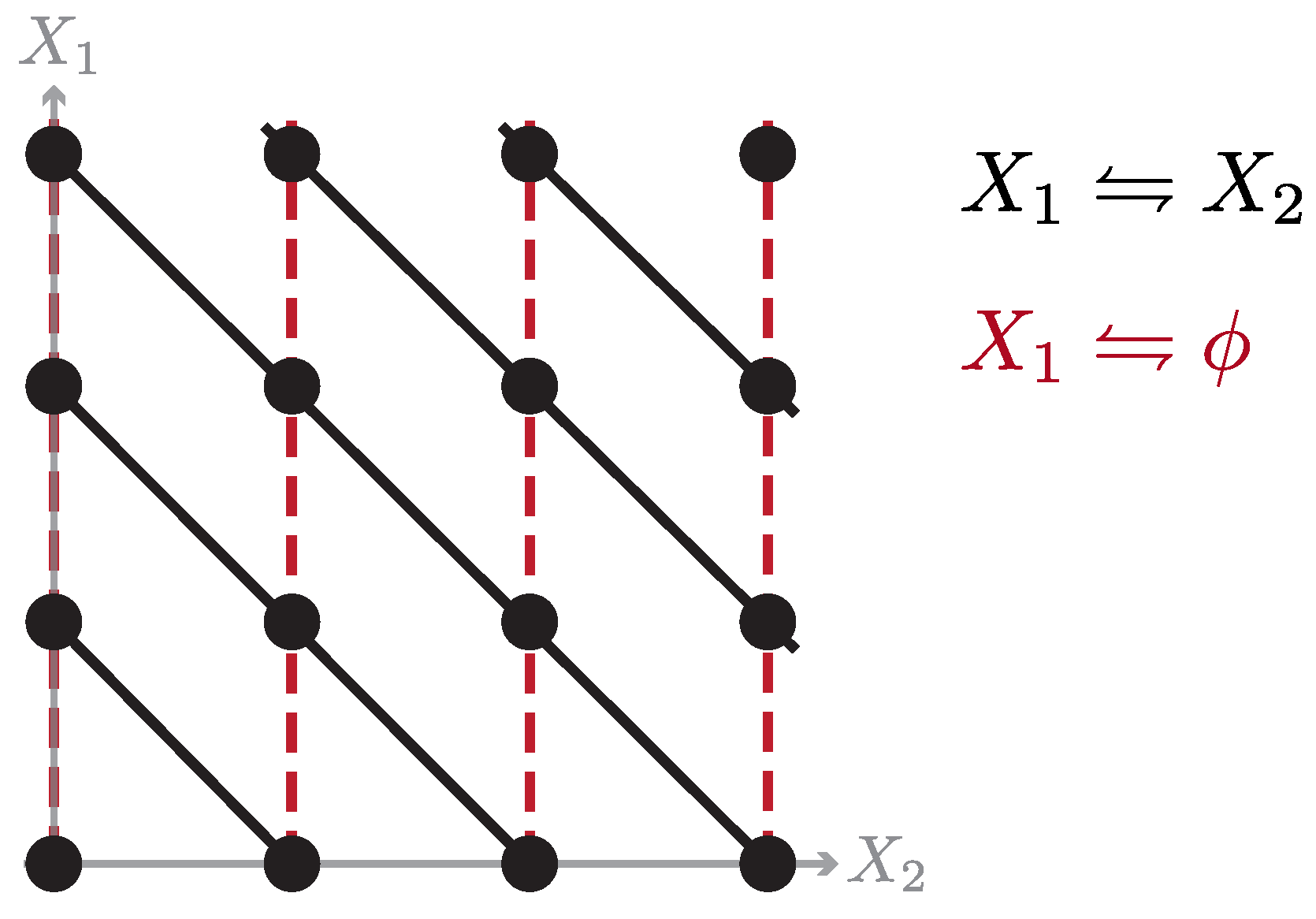

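To set the stage, consider a chemical reaction network with configurations specified by the vector of chemical species numbers $\mathbf n = (n_1, \dots, n_M)$. Transitions then correspond to chemical reactions that change the value of $\mathbf n$ subject to system-specific constraints; an illustrative example is pictured in Figure 2. For simplicity, we take the only constraint to be the total particle number $\mathcal N = \sum_a n_a$. In this case, the equilibrium steady state is a Poisson distribution constrained to the manifold of fixed particle number, $\pi(\mathbf n|\mathcal N) \propto \prod_a \lambda_a^{n_a}/n_a!$ for $\sum_a n_a = \mathcal N$, with positive constants $\lambda_a$ [19]; and detailed balance respects the constraints as well:

$$W_{\mathbf n\mathbf n'}\,\pi(\mathbf n'|\mathcal N) = W_{\mathbf n'\mathbf n}\,\pi(\mathbf n|\mathcal N), \qquad W_{\mathbf n\mathbf n'} = 0 \ \text{unless}\ \textstyle\sum_a n_a = \sum_a n'_a = \mathcal N.$$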

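Now, for control we add new reactions that maintain the system in a fixed target distribution $q(\mathbf n)$ over chemical space, which may not respect our particle number constraint; i.e., it may have support on configurations $\mathbf n$ with different total particle numbers (cf. Figure 2). To make this explicit, we split the target distribution into two controllable pieces, $q(\mathbf n) = q(\mathbf n|\mathcal N)\,Q(\mathcal N)$: the conditional probability given the total particle number, $q(\mathbf n|\mathcal N)$, and the probability to have $\mathcal N$ particles, $Q(\mathcal N) = \sum_{\mathbf n:\,\sum_a n_a = \mathcal N} q(\mathbf n)$. With this splitting, the minimum dissipation to maintain $q$ is again due only to the entropy produced in the original reactions

$$\dot\Sigma \ge \frac{1}{2}\sum_{\mathbf n,\mathbf n'}\left[W_{\mathbf n\mathbf n'}q(\mathbf n') - W_{\mathbf n'\mathbf n}q(\mathbf n)\right]\ln\frac{W_{\mathbf n\mathbf n'}q(\mathbf n')}{W_{\mathbf n'\mathbf n}q(\mathbf n)}.$$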

An information-theoretic interpretation is provided by recalling that the equilibrium transitions $W_{\mathbf n\mathbf n'}$ conserve particle number, to find

$$\dot\Sigma \ge -\sum_{\mathcal N} Q(\mathcal N)\,\frac{d}{dt}D\big(q(\cdot|\mathcal N)\,\big\|\,\pi(\cdot|\mathcal N)\big).$$

In a chemical system, the minimum dissipation depends only on how different the target distribution is from the equilibrium distribution on the conserved sectors, whereas shifting only the number distribution $Q(\mathcal N)$ can in principle be accomplished for free. This conclusion should remain true when there are additional conservation laws as well. Note that when the target distribution conserves particle number, $Q(\mathcal N) = \delta_{\mathcal N\mathcal N_0}$ for some fixed $\mathcal N_0$, and we recover (12).
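A toy example of the sector decomposition, with all specifics hypothetical: two species A and B interconvert through the unimolecular reaction A ⇌ B with mass-action rates $k_+ n_A$ and $k_- n_B$, which conserves $\mathcal N = n_A + n_B$. Within each sector the equilibrium distribution of $n_B$ is binomial, which is the particle-number-constrained Poisson distribution for this network. The sketch (assuming NumPy) checks two statements from the text: a target that is at conditional equilibrium in every sector costs nothing, however the number distribution $Q(\mathcal N)$ (the probability to have $\mathcal N$ particles) is chosen; and for a generic target, the full link sum equals the $Q(\mathcal N)$-weighted sum of per-sector contributions.

```python
import numpy as np
from math import comb

def sector_states(n_max):
    """All configurations (N, m) with N = n_A + n_B total particles and m = n_B."""
    return [(N, m) for N in range(n_max + 1) for m in range(N + 1)]

def reaction_rates(states, k_plus, k_minus):
    """Hypothetical reaction A <-> B; it conserves N, so W is block diagonal.

    A -> B: (N, m) -> (N, m + 1) at rate k_plus * (N - m)
    B -> A: (N, m) -> (N, m - 1) at rate k_minus * m
    """
    index = {s: i for i, s in enumerate(states)}
    W = np.zeros((len(states), len(states)))
    for (N, m), j in index.items():
        if m < N:
            W[index[(N, m + 1)], j] = k_plus * (N - m)
        if m > 0:
            W[index[(N, m - 1)], j] = k_minus * m
    return W

def link_entropy_production(W, p):
    a = W * p[None, :]
    mask = W > 0
    return 0.5 * float(np.sum((a - a.T)[mask] * np.log(a[mask] / a.T[mask])))

def conditional_equilibrium(states, k_plus, k_minus):
    """pi(n | N): binomial in m = n_B with success probability k_plus / (k_plus + k_minus)."""
    r = k_plus / (k_plus + k_minus)
    return np.array([comb(N, m) * r**m * (1 - r) ** (N - m) for N, m in states])

states = sector_states(4)
k_plus, k_minus = 2.0, 1.0
W = reaction_rates(states, k_plus, k_minus)
pi_cond = conditional_equilibrium(states, k_plus, k_minus)
sector = np.array([N for N, _ in states])

# Equilibrium within each sector, arbitrary number distribution Q(N): no dissipation.
Q = np.array([0.05, 0.1, 0.5, 0.25, 0.1])
q_free = pi_cond * Q[sector]
print(link_entropy_production(W, q_free))

# A generic target: full link sum versus the Q(N)-weighted per-sector sum.
rng = np.random.default_rng(1)
q = rng.random(len(states)) + 0.1
q /= q.sum()
Q_q = np.bincount(sector, weights=q)
per_sector = 0.0
for N in range(len(Q_q)):
    idx = sector == N
    q_cond = q[idx] / Q_q[N]                 # q(n | N)
    per_sector += Q_q[N] * link_entropy_production(W[np.ix_(idx, idx)], q_cond)
print(link_entropy_production(W, q), per_sector)
```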

4. Discussion

We investigated the minimum entropy production or free energy dissipation needed to maintain a system in a target nonequilibrium distribution using ancillary control. We found that in a variety of scenarios this minimum cost can be formulated using the information-theoretic relative entropy as a measure of how distinguishable the target nonequilibrium state is from equilibrium. Our analysis further revealed that the minimum is reached when the driven control transitions operate reversibly.

As in previous analyses of nonequilibrium thermodynamics, the relative entropy [34,35,36,37,38,39] appeared as a key tool in characterizing dissipation. In these previous works, however, the relative entropy compared the true evolution of the system to the underlying stationary state. By contrast, here we find that when using external control the cost is characterized by the time variation of the relative entropy under a fictitious uncontrolled equilibrium dynamics, evaluated against the unperturbed equilibrium state.

Looking ahead, we note that while we had autonomous control in mind throughout the paper, nonautonomous control through reversible hidden pumps offers an intriguing alternative to saturate our energetic bound [40]. Additionally, we have focused on the average or typical behavior of the state of our system, but a number of recent predictions, collectively known as thermodynamic uncertainty relations, relate the dissipation to fluctuations in currents or flows [41,42,43,44,45]. Such work suggests that it would be intriguing to understand how our lower bound is modified when one wants to use external control to constrain not just the typical state, but fluctuations as well. Our hope is that the approach developed here offers the possibility of quantifying the minimum energetic cost of nonequilibrium states in other, more general scenarios.