Robust Automatic Modulation Classification Technique for Fading Channels via Deep Neural Network

Abstract

1. Introduction

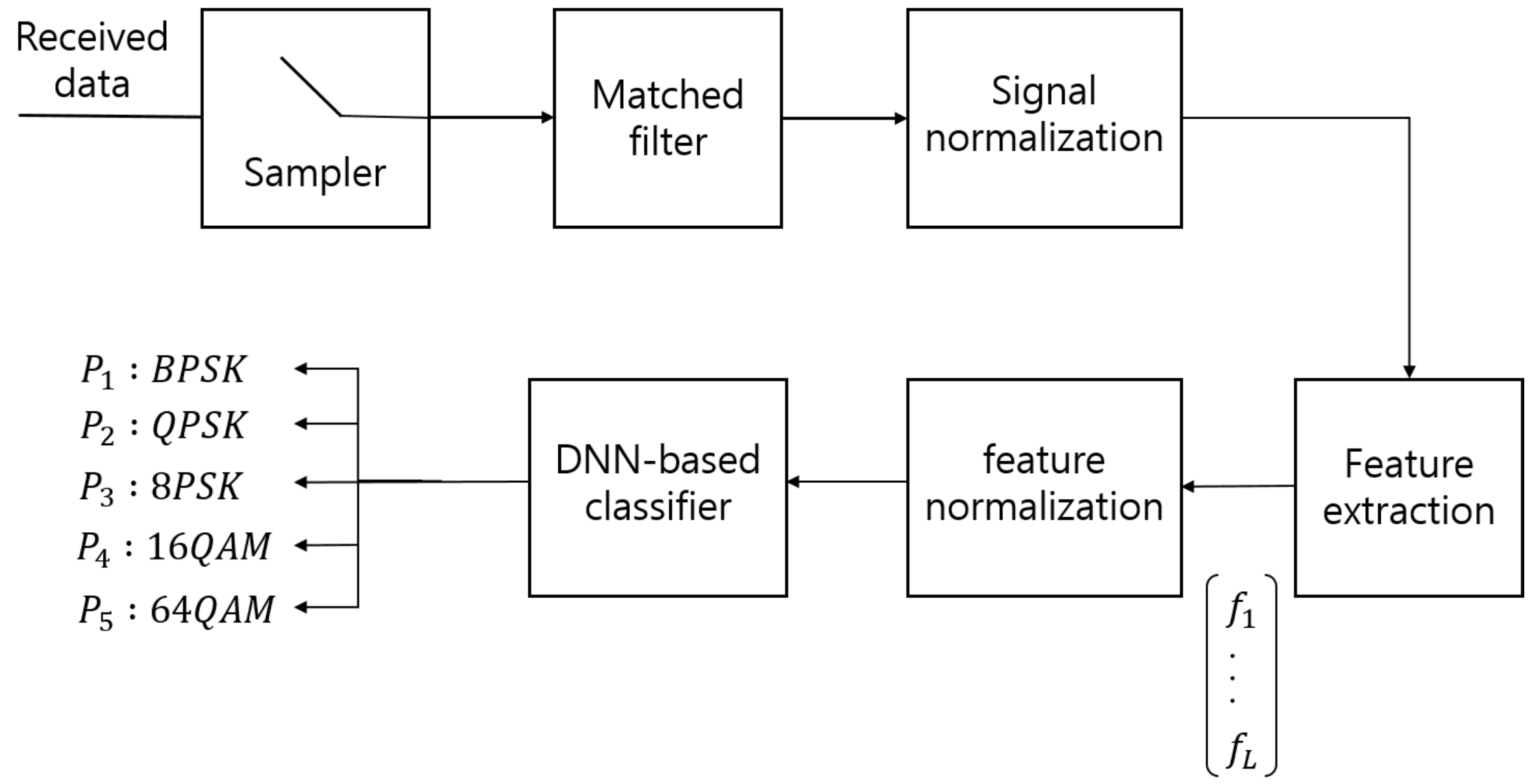

2. System Model

3. Feature Extraction for AMC


- Scenario 1. AWGN channel, signal-to-noise power ratio (SNR) = 5 dB

- Scenario 2. Rayleigh flat fading channel, SNR = 5 dB, Doppler frequency = 50 Hz

- Scenario 3. Rayleigh flat fading channel, SNR = 5 dB, Doppler frequency = 100 Hz

- Scenario 4. Rayleigh flat fading channel with unknown SNR and Doppler frequency: the SNR is uniformly distributed over [, 15] dB, and the Doppler frequency lies in [50, 100] Hz.
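The four scenarios can be reproduced approximately for experimentation. The sketch below is not the authors' simulator. It rests on several assumptions:

- The Rayleigh flat fading gains come from a sum-of-sinusoids approximation of Clarke's isotropic-scattering model (32 paths by default).
- SNR is measured against the average power of the faded block.
- In Scenario 4, one SNR and one Doppler frequency are drawn per block, with the Doppler frequency uniform on [50, 100] Hz.
- The sampling rate is a caller-supplied parameter.

The lower SNR bound of Scenario 4 is missing from the text, so the hypothetical `snr_range_db` argument must be given explicitly. All function names are illustrative.

```python
import cmath
import math
import random


def rayleigh_flat_fading(num_samples, doppler_hz, sample_rate_hz, num_paths=32, rng=None):
    """Complex fading gains h[n] from a sum-of-sinusoids (Clarke-type) model.

    Each path gets a random arrival angle and phase; the 1/sqrt(num_paths)
    scaling gives unit average power, E|h|^2 = 1, over the random phases.
    """
    if rng is None:
        rng = random.Random()
    angles = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_paths)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_paths)]
    shifts = [doppler_hz * math.cos(a) for a in angles]  # per-path Doppler shift (Hz)
    scale = 1.0 / math.sqrt(num_paths)
    gains = []
    for n in range(num_samples):
        t = n / sample_rate_hz
        h = sum(cmath.exp(1j * (2.0 * math.pi * f * t + p)) for f, p in zip(shifts, phases))
        gains.append(scale * h)
    return gains


def add_awgn(signal, snr_db, rng=None):
    """Add circularly symmetric complex Gaussian noise at the given SNR (dB)."""
    if rng is None:
        rng = random.Random()
    power = sum(abs(s) ** 2 for s in signal) / len(signal)  # average signal power
    noise_var = power / 10.0 ** (snr_db / 10.0)
    std = math.sqrt(noise_var / 2.0)  # noise power split evenly between I and Q
    return [s + complex(rng.gauss(0.0, std), rng.gauss(0.0, std)) for s in signal]


def simulate_scenario(symbols, scenario, sample_rate_hz, rng, snr_range_db=None):
    """Pass one block of complex baseband symbols through Scenario 1, 2, 3 or 4."""
    if scenario == 1:
        return add_awgn(symbols, 5.0, rng)  # AWGN only, SNR = 5 dB
    if scenario == 2:
        snr_db, doppler_hz = 5.0, 50.0
    elif scenario == 3:
        snr_db, doppler_hz = 5.0, 100.0
    elif scenario == 4:
        if snr_range_db is None:
            raise ValueError("Scenario 4 needs snr_range_db=(low, 15.0)")
        snr_db = rng.uniform(*snr_range_db)  # uniform SNR, as stated
        doppler_hz = rng.uniform(50.0, 100.0)  # uniform Doppler: an assumption
    else:
        raise ValueError("scenario must be 1, 2, 3 or 4")
    gains = rayleigh_flat_fading(len(symbols), doppler_hz, sample_rate_hz, rng=rng)
    faded = [h * s for h, s in zip(gains, symbols)]  # flat fading: one gain per sample
    return add_awgn(faded, snr_db, rng)  # SNR relative to the faded signal power
```

For example, `simulate_scenario(block, 3, 1e4, random.Random(0))` fades a block with a maximum Doppler frequency of 100 Hz at an assumed 10 kHz sampling rate, then adds noise at 5 dB SNR. A sum-of-sinusoids generator was chosen because it needs no filtering library. A Doppler-filtered Gaussian generator would serve equally well.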

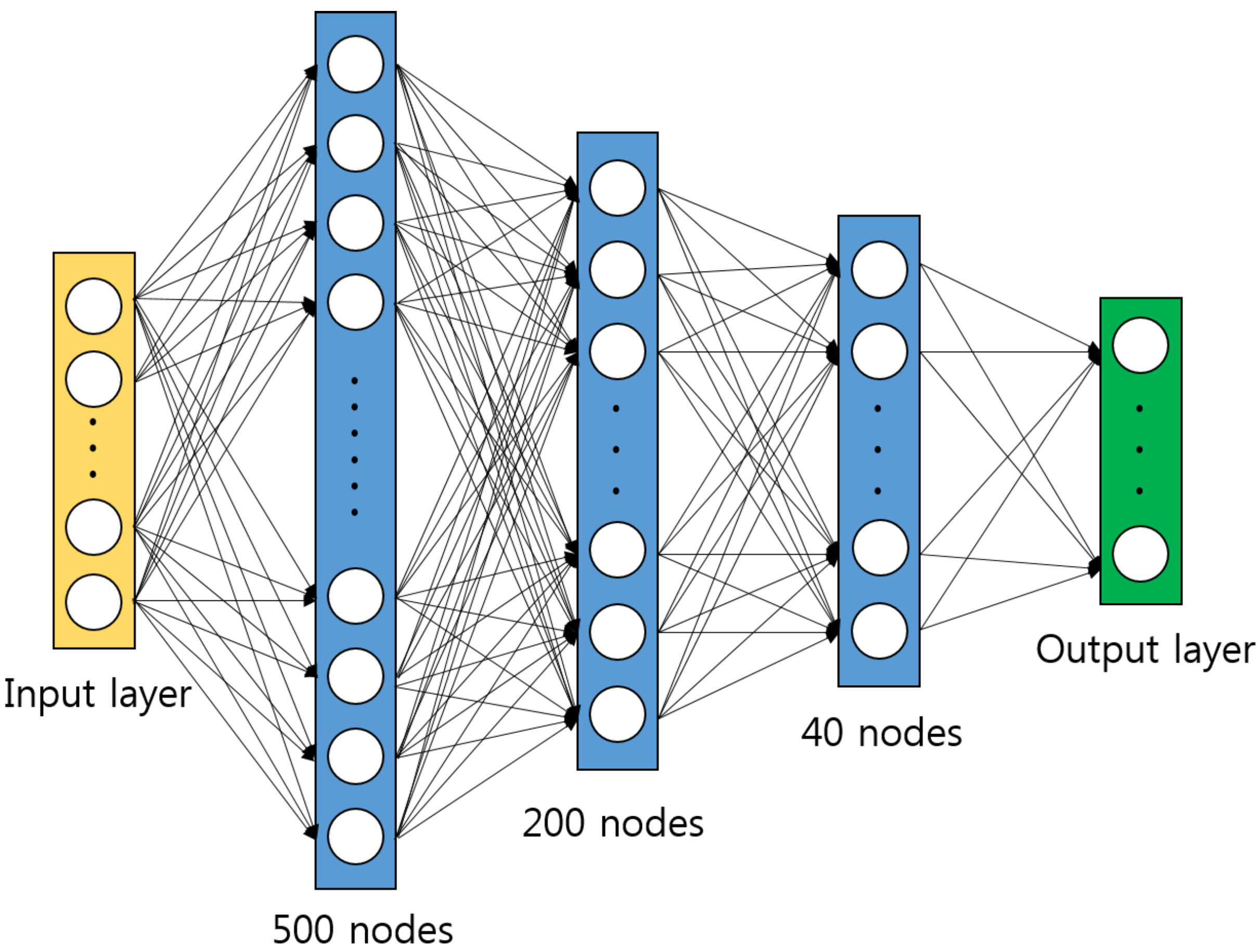

4. DNN-Based AMC Method

4.1. DNN Structure

4.2. Training

4.3. Test

5. Experiments

5.1. Simulation Setup

5.2. Simulation Results

- Artificial neural network algorithm 1 (ANN1) [12]: 10 features (, , , , , , , , , ) are used with a feedforward neural network with a single hidden layer.

- Artificial neural network algorithm 2 (ANN2) [13]: 6 features (, , , , , the mean of the signal magnitude) are used with a feedforward neural network with a single hidden layer.

- Support vector machine algorithm (SVM) [10]: 4 features (, , , ) are used with an SVM classifier.

- Hierarchical classification scheme (HCS) [9]: 3 features (, , ) are used with a decision tree classifier.
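The titles of [9] and [10] indicate that the HCS and SVM baselines rely on higher-order cumulants of the received baseband samples. As an illustration only, the sketch below estimates the commonly used cumulants C20, C21, C40, C41 and C42 from sample moments. The fourth-order values are normalized by C21² so that they do not depend on signal power. It assumes a complex baseband input and removes the sample mean first. It does not reproduce the exact feature sets of [9] or [10], whose symbols are lost in this text.

```python
import cmath
import math


def cumulant_features(x):
    """Sample estimates of C20, C21 and the normalized C40, C41, C42.

    Uses the zero-mean moment-to-cumulant relations, with
    Mpq = E[x^(p-q) * conj(x)^q]:
      C40 = M40 - 3*M20^2
      C41 = M41 - 3*M20*M21
      C42 = M42 - |M20|^2 - 2*M21^2
    """
    n = len(x)
    mu = sum(complex(v) for v in x) / n
    z = [complex(v) - mu for v in x]  # remove the sample mean
    m20 = sum(v * v for v in z) / n
    m21 = sum(abs(v) ** 2 for v in z) / n  # average power
    m40 = sum(v ** 4 for v in z) / n
    m41 = sum(v ** 3 * v.conjugate() for v in z) / n
    m42 = sum(abs(v) ** 4 for v in z) / n
    c40 = m40 - 3 * m20 ** 2
    c41 = m41 - 3 * m20 * m21
    c42 = m42 - abs(m20) ** 2 - 2 * m21 ** 2
    return {
        "C20": m20,
        "C21": m21,
        "C40": c40 / m21 ** 2,
        "C41": c41 / m21 ** 2,
        "C42": c42 / m21 ** 2,
    }


if __name__ == "__main__":
    eight_psk = [cmath.exp(2j * math.pi * k / 8) for k in range(8)]
    for name, symbols in [("BPSK", [1, -1]), ("QPSK", [1, 1j, -1, -1j]), ("8PSK", eight_psk)]:
        f = cumulant_features(symbols)
        print(name, "C40 =", f["C40"], "C42 =", f["C42"])
```

For the ideal, equiprobable constellations BPSK {±1}, QPSK {±1, ±j} and 8PSK, the normalized C40 evaluates to −2, 1 and 0, and C42 to −2, −1 and −1. This spread is why fourth-order cumulants help separate PSK orders in noise-free conditions.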

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- [1] Zhu, Z.; Nandi, A.K. Automatic Modulation Classification: Principles, Algorithms and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2014.

- [2] Dobre, O.A.; Abdi, A.; Bar-Ness, Y.; Su, W. Blind modulation classification: A concept whose time has come. In Proceedings of the IEEE/Sarnoff Symposium on Advances in Wired and Wireless Communication, Princeton, NJ, USA, 18–19 April 2005; pp. 223–228.

- [3] Hazza, A.M.; Alshebeili, S.; Fahad, A. An overview of feature-based methods for digital modulation classification. In Proceedings of the 1st International Conference on Communications, Signal Processing, and Their Applications, Sharjah, UAE, 12–14 February 2013; pp. 1–6.

- [4] Azzouz, E.; Nandi, A.K. Automatic Modulation Recognition of Communication Signals; Springer Science & Business Media: New York, NY, USA, 2013.

- [5] Dobre, O.A.; Abdi, A.; Bar-Ness, Y.; Su, W. The classification of joint analog and digital modulations. In Proceedings of the IEEE Military Communications Conference, Atlantic City, NJ, USA, 17–21 October 2005; pp. 3010–3015.

- [6] An, N.; Li, B.; Huang, M. Modulation classification of higher order MQAM signals using mixed-order moments and Fisher criterion. In Proceedings of the 2nd International Conference on Computer and Automation Engineering, Singapore, 26–28 February 2010; pp. 150–153.

- [7] Huang, F.; Zhong, Z.; Xu, Y.; Ren, G. Modulation recognition of symbol shaped digital signals. In Proceedings of the International Conference on Communications, Circuits and Systems, Xiamen, China, 25–27 May 2008; pp. 328–332.

- [8] Xin, Z.; Ying, W.; Bin, Y. Signal classification method based on support vector machine and high-order cumulants. Wirel. Sens. Netw. 2010, 2, 48.

- [9] Swami, A.; Sadler, B.M. Hierarchical digital modulation classification using cumulants. IEEE Trans. Commun. 2000, 48, 416–429.

- [10] Li, P.; Zhang, Z.; Wang, X.; Xu, N.; Xu, Y. Modulation recognition of communication signals based on high order cumulants and support vector machine. J. China Univ. Posts Telecommun. 2012, 19, 61–65.

- [11] Han, G.; Li, J.; Lu, D. Study of modulation recognition based on HOCs and SVM. In Proceedings of the IEEE 59th Vehicular Technology Conference, Milan, Italy, 17–19 May 2004; pp. 898–902.

- [12] Popoola, J.J.; van Olst, R. Automatic recognition of analog modulated signals using artificial neural networks. Comput. Technol. Appl. 2013, 2, 29–35.

- [13] Popoola, J.J.; van Olst, R. Effect of training algorithms on performance of a developed automatic modulation classification using artificial neural network. In Proceedings of the IEEE AFRICON, Pointe-Aux-Piments, Mauritius, 9–12 September 2013; pp. 1–6.

- [14] Xi, S.; Wu, H.C. Robust automatic modulation classification using cumulant features in the presence of fading channels. In Proceedings of the IEEE Wireless Communications and Networking Conference, Las Vegas, NV, USA, 3–6 April 2006; pp. 2094–2099.

- [15] LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- [16] Goldsmith, A. Wireless Communications; Cambridge University Press: Cambridge, UK, 2005.

- [17] Tse, D.; Viswanath, P. Fundamentals of Wireless Communication; Cambridge University Press: Cambridge, UK, 2005.

- [18] Orlic, V.D.; Dukic, M.L. Automatic modulation classification: Sixth-order cumulant features as a solution for real-world challenges. In Proceedings of the 20th Telecommunications Forum, Belgrade, Serbia, 20–22 November 2012; pp. 392–399.

- [19] Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

- [20] Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- [21] Bishop, C.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2009.

| Feature |
|---|
| Kurtosis |
| Skewness |
| Peak-to-average ratio |
| Peak-to-RMS ratio |
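The four statistical features in the table above can be computed in a few lines. The paper's own formulas are not recoverable from this text. The sketch below therefore assumes the features are taken over the instantaneous amplitude |x[n]| of the complex baseband block, using the textbook definitions of kurtosis and skewness. It takes PAR as peak over mean amplitude and PRR as peak over RMS amplitude. These choices, and the function name, are assumptions.

```python
import math


def amplitude_features(x):
    """Kurtosis, skewness, peak-to-average ratio (PAR) and peak-to-RMS ratio (PRR).

    Assumed definitions, with a[n] = |x[n]|:
      kurtosis = E[(a - mean)^4] / var^2
      skewness = E[(a - mean)^3] / var^1.5
      PAR      = max(a) / mean(a)
      PRR      = max(a) / sqrt(mean(a^2))
    Kurtosis and skewness are returned as NaN for a constant envelope
    (zero amplitude variance), e.g. noise-free PSK.
    """
    a = [abs(v) for v in x]
    n = len(a)
    mean = sum(a) / n
    var = sum((v - mean) ** 2 for v in a) / n
    if var > 0:
        kurt = sum((v - mean) ** 4 for v in a) / n / var ** 2
        skew = sum((v - mean) ** 3 for v in a) / n / var ** 1.5
    else:
        kurt = skew = math.nan
    peak = max(a)
    rms = math.sqrt(sum(v * v for v in a) / n)
    return {"kurtosis": kurt, "skewness": skew, "par": peak / mean, "prr": peak / rms}
```

For the amplitude sequence 1, 2, 3, 4, 5, this gives a kurtosis of 1.7, a skewness of 0, PAR = 5/3 and PRR = 5/√11.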

| Scenario | Features |
|---|---|
| 1 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 2 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 3 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 4 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |

| Scenario | Features |
|---|---|
| 1 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 2 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 3 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |
| 4 | , , , , , , , , , , , , , , , , , , , , , , , , , , , |

| Parameter | Value |
|---|---|
| Number of data samples converted to a feature vector | 20,000 |
| Number of feature vectors in the training set | 30,000 |
| Number of feature vectors in the validation set | 6,000 |
| Initial learning rate | |
| Mini-batch size | 50 |
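The settings in the table above fix the data bookkeeping of training. Each feature vector summarizes 20,000 received samples, so the 30,000 training vectors correspond to 600,000,000 samples. With a mini-batch size of 50, one pass over the training set takes 30,000 / 50 = 600 mini-batches.

The sketch below makes this concrete. It assumes non-overlapping sample blocks (a trailing partial block is dropped) and a fresh shuffle of the training vectors every epoch. Neither detail is stated in the surviving text, and the helper names are hypothetical. The initial learning rate is left out because its value is missing from the table.

```python
import random

# Values from the simulation-setup table.
SAMPLES_PER_FEATURE_VECTOR = 20_000
NUM_TRAIN_VECTORS = 30_000
NUM_VALIDATION_VECTORS = 6_000
MINI_BATCH_SIZE = 50


def split_into_blocks(samples, block_len=SAMPLES_PER_FEATURE_VECTOR):
    """Cut a sample stream into non-overlapping blocks, one per feature vector.

    A trailing partial block is dropped (an assumption).
    """
    return [samples[i:i + block_len]
            for i in range(0, len(samples) - block_len + 1, block_len)]


def minibatches(num_items, batch_size, rng):
    """Shuffle item indices once per epoch and cut them into mini-batches."""
    order = list(range(num_items))
    rng.shuffle(order)
    return [order[i:i + batch_size] for i in range(0, num_items, batch_size)]


if __name__ == "__main__":
    batches = minibatches(NUM_TRAIN_VECTORS, MINI_BATCH_SIZE, random.Random(0))
    print(len(batches), "mini-batches per epoch")  # 600
    print(NUM_TRAIN_VECTORS * SAMPLES_PER_FEATURE_VECTOR, "training samples")  # 600000000
```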

| Method | BPSK | QPSK | 8PSK | 16QAM | 64QAM |
|---|---|---|---|---|---|
| DNN-AMC1 | 99.98 | 93.75 | 97.58 | 72.16 | 74.64 |
| DNN-AMC2 (14) | 99.98 | 90.17 | 95.22 | 63.61 | 69.29 |
| ANN1 | 97.88 | 86.16 | 91.75 | 57.59 | 61.93 |
| ANN2 | 99.69 | 96.14 | 40.00 | 57.34 | 66.09 |
| SVM | 81.9 | 62.70 | 74.20 | 25.40 | 28.60 |
| HCS | 19.22 | 40.10 | 65.07 | 31.38 | 28.27 |

| Method | BPSK | QPSK | 8PSK | 16QAM | 64QAM |
|---|---|---|---|---|---|
| DNN-AMC1 | 99.97 | 90.37 | 95.66 | 69.23 | 70.85 |
| DNN-AMC2 (14) | 99.98 | 86.79 | 92.22 | 67.14 | 67.7 |
| ANN1 | 96.65 | 84.10 | 90.55 | 56.32 | 61.71 |
| ANN2 | 99.90 | 63.62 | 33.27 | 56.1 | 69.14 |
| SVM | 76.3 | 65.9 | 74.4 | 26.4 | 39.1 |
| HCS | 23.37 | 41.00 | 77.71 | 25.55 | 49.6 |

| Method | BPSK | QPSK | 8PSK | 16QAM | 64QAM |
|---|---|---|---|---|---|
| DNN-AMC1 | 96.61 | 78.62 | 85.42 | 60.22 | 66.35 |
| DNN-AMC2 (14) | 95.58 | 76.87 | 83.94 | 52.96 | 64.83 |
| ANN1 | 92.39 | 72.24 | 84.40 | 42.97 | 58.82 |
| ANN2 | 84.62 | 44.02 | 50.81 | 45.57 | 65.90 |
| SVM | 67.26 | 53.7 | 58.26 | 26.86 | 33.92 |
| HCS | 18.04 | 41.28 | 50.81 | 35.09 | 29.24 |
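The per-class accuracies in the three results tables can be collapsed into one number per method for a quick comparison. The sketch below averages each row over the five modulations and ranks the methods. This assumes equally likely classes, which the surviving text does not state. The values are copied from the three tables in order; which scenario each table belongs to is not recoverable here, so they are simply numbered.

```python
# Accuracy values copied from the three results tables, in order of appearance.
# Columns: BPSK, QPSK, 8PSK, 16QAM, 64QAM.
ACCURACY = {
    "table 1": {
        "DNN-AMC1": [99.98, 93.75, 97.58, 72.16, 74.64],
        "DNN-AMC2 (14)": [99.98, 90.17, 95.22, 63.61, 69.29],
        "ANN1": [97.88, 86.16, 91.75, 57.59, 61.93],
        "ANN2": [99.69, 96.14, 40.00, 57.34, 66.09],
        "SVM": [81.9, 62.70, 74.20, 25.40, 28.60],
        "HCS": [19.22, 40.10, 65.07, 31.38, 28.27],
    },
    "table 2": {
        "DNN-AMC1": [99.97, 90.37, 95.66, 69.23, 70.85],
        "DNN-AMC2 (14)": [99.98, 86.79, 92.22, 67.14, 67.7],
        "ANN1": [96.65, 84.10, 90.55, 56.32, 61.71],
        "ANN2": [99.90, 63.62, 33.27, 56.1, 69.14],
        "SVM": [76.3, 65.9, 74.4, 26.4, 39.1],
        "HCS": [23.37, 41.00, 77.71, 25.55, 49.6],
    },
    "table 3": {
        "DNN-AMC1": [96.61, 78.62, 85.42, 60.22, 66.35],
        "DNN-AMC2 (14)": [95.58, 76.87, 83.94, 52.96, 64.83],
        "ANN1": [92.39, 72.24, 84.40, 42.97, 58.82],
        "ANN2": [84.62, 44.02, 50.81, 45.57, 65.90],
        "SVM": [67.26, 53.7, 58.26, 26.86, 33.92],
        "HCS": [18.04, 41.28, 50.81, 35.09, 29.24],
    },
}


def rank_by_mean(table):
    """Return (method, mean accuracy) pairs, best first, assuming equal class priors."""
    means = {method: sum(row) / len(row) for method, row in table.items()}
    return sorted(means.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    for name, table in ACCURACY.items():
        best, mean = rank_by_mean(table)[0]
        print(f"{name}: best = {best} ({mean:.2f})")
```

Under this equal-prior average, DNN-AMC1 ranks first in all three tables (about 87.6, 85.2 and 77.4), followed by DNN-AMC2 (14).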

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lee, J.H.; Kim, J.; Kim, B.; Yoon, D.; Choi, J.W. Robust Automatic Modulation Classification Technique for Fading Channels via Deep Neural Network. Entropy 2017, 19, 454. https://doi.org/10.3390/e19090454


Lee, Jung Hwan, Jaekyum Kim, Byeoungdo Kim, Dongweon Yoon, and Jun Won Choi. 2017. "Robust Automatic Modulation Classification Technique for Fading Channels via Deep Neural Network." Entropy 19, no. 9: 454. https://doi.org/10.3390/e19090454