k-Same-Net: k-Anonymity with Generative Deep Neural Networks for Face Deidentification †

Abstract

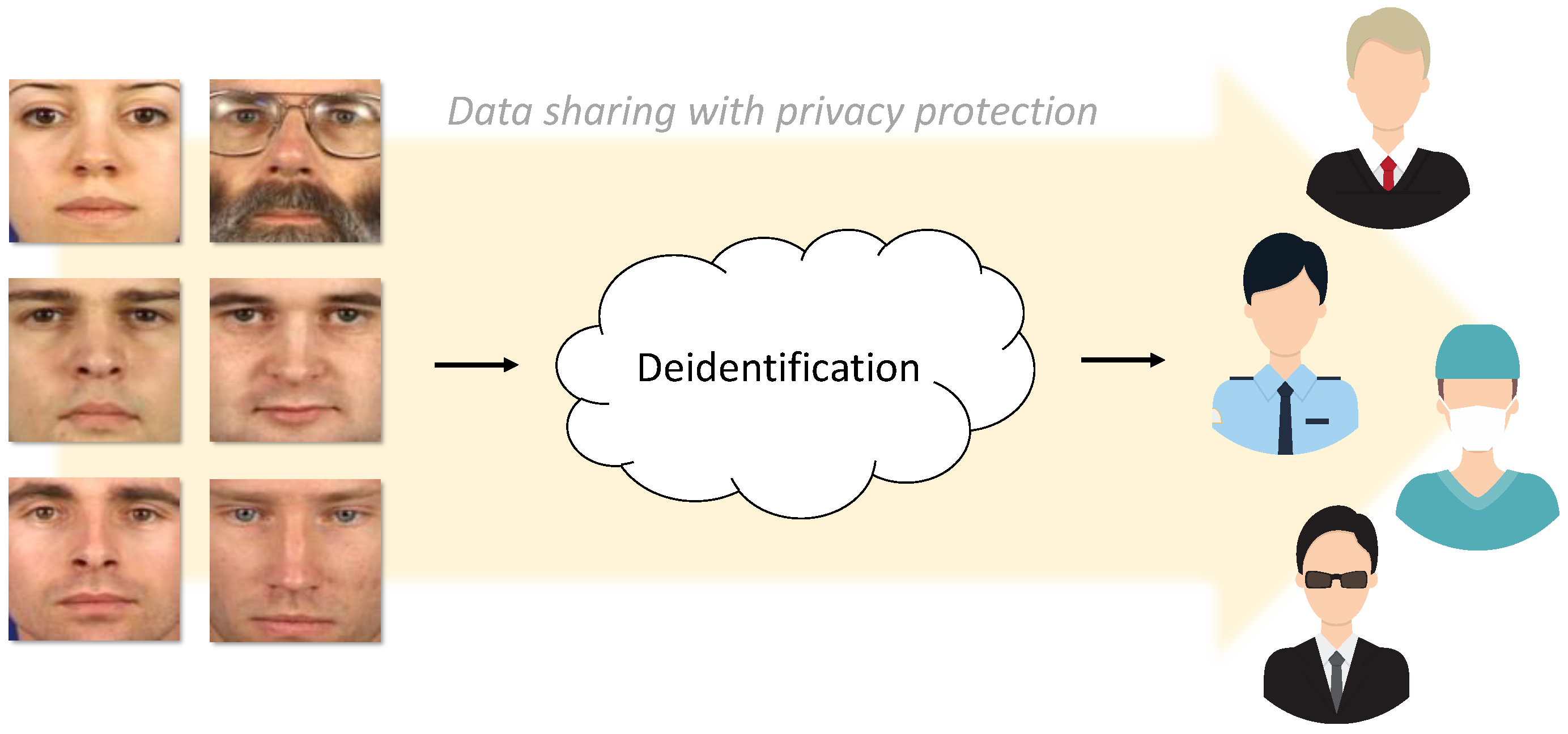

1. Introduction

- We introduce a novel face-deidentification algorithm, called k-Same-Net, that builds on a formal privacy protection scheme (k-Anonymity) and relies on generative deep neural networks (GNNs).

- We introduce an additional (proxy) image set that can be used to train the model of the deidentification approach while preserving the formal guarantees that come with k-Anonymity-based privacy protection schemes.

- We present an in-depth analysis of the proposed approach centered on reidentification and facial expression recognition experiments.
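The formal scheme referred to above is k-Anonymity as operationalized by the k-Same family [1]: the gallery is partitioned into groups of at least k images, and all images in a group are replaced by the same surrogate, which Newton et al. [1] show limits correct recognition to at most 1/k. The sketch below covers only that grouping step. It assumes each face is already a feature vector, uses greedy nearest-neighbour grouping with Euclidean distance, and keeps any leftover faces together in the last group; the function names are hypothetical and this is not the authors' implementation.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equally long feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_same_groups(features: Sequence[Sequence[float]], k: int) -> List[List[int]]:
    """Greedily partition face indices into groups of at least k members.

    Hypothetical helper: every index in a group would later be mapped to
    one shared surrogate face.
    """
    if k < 1 or len(features) < k:
        raise ValueError("need k >= 1 and at least k faces")
    remaining = list(range(len(features)))
    groups: List[List[int]] = []
    while remaining:
        if len(remaining) < 2 * k:
            # Fewer than 2k faces left: keep them together so that no
            # group ends up with fewer than k members.
            groups.append(remaining)
            break
        anchor = remaining[0]
        # The anchor and its k-1 nearest remaining neighbours form a group
        # (the anchor sorts first: distance 0 and a stable sort).
        by_distance = sorted(remaining, key=lambda j: euclidean(features[anchor], features[j]))
        group = by_distance[:k]
        groups.append(group)
        remaining = [j for j in remaining if j not in group]
    return groups


faces = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [0.0, 0.2], [5.0, 5.2]]
print(k_same_groups(faces, k=3))  # [[0, 1, 4], [2, 3, 5]]
```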

2. Background and Related Work

3. Neural-Network-Based Deidentification

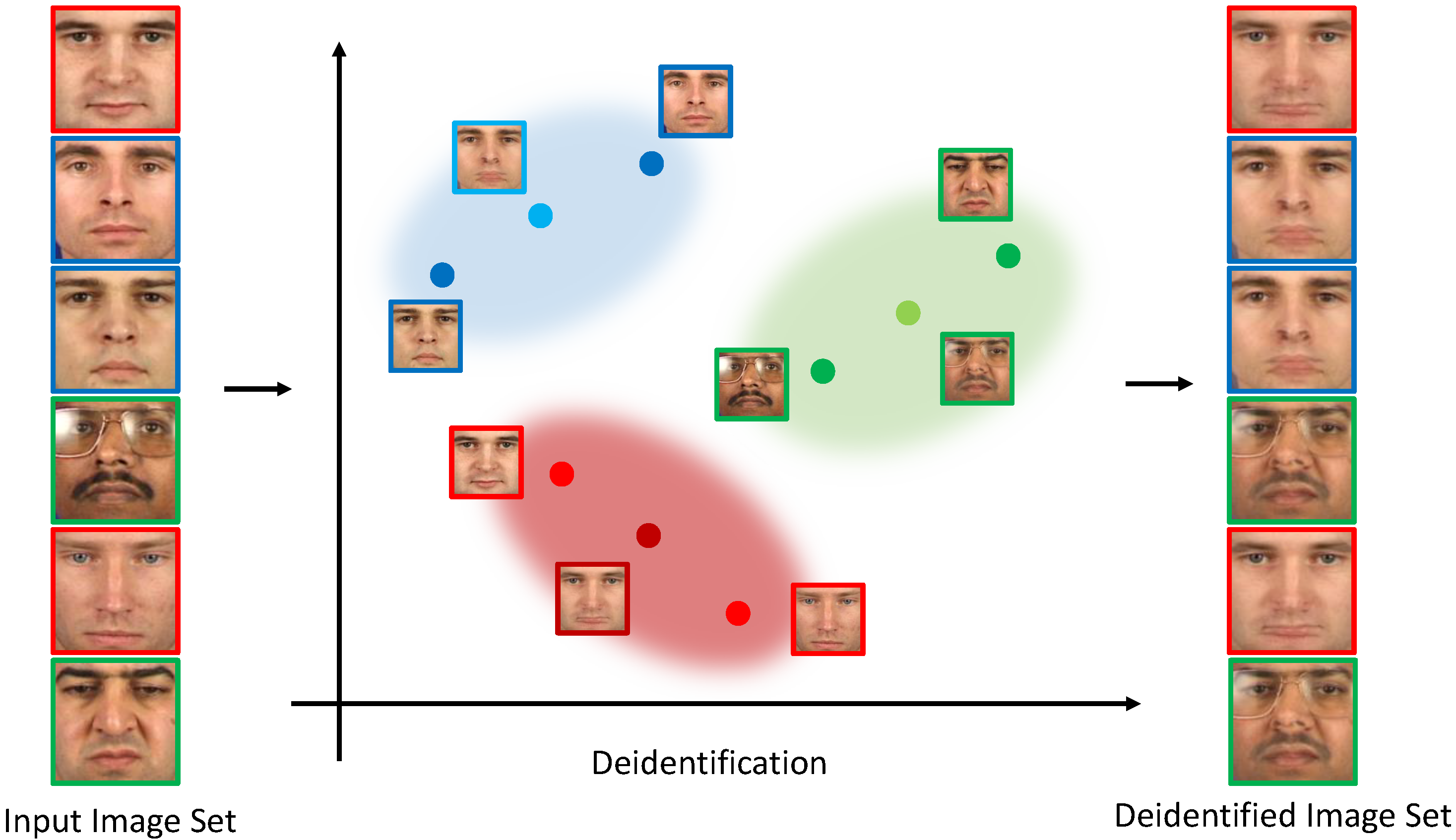

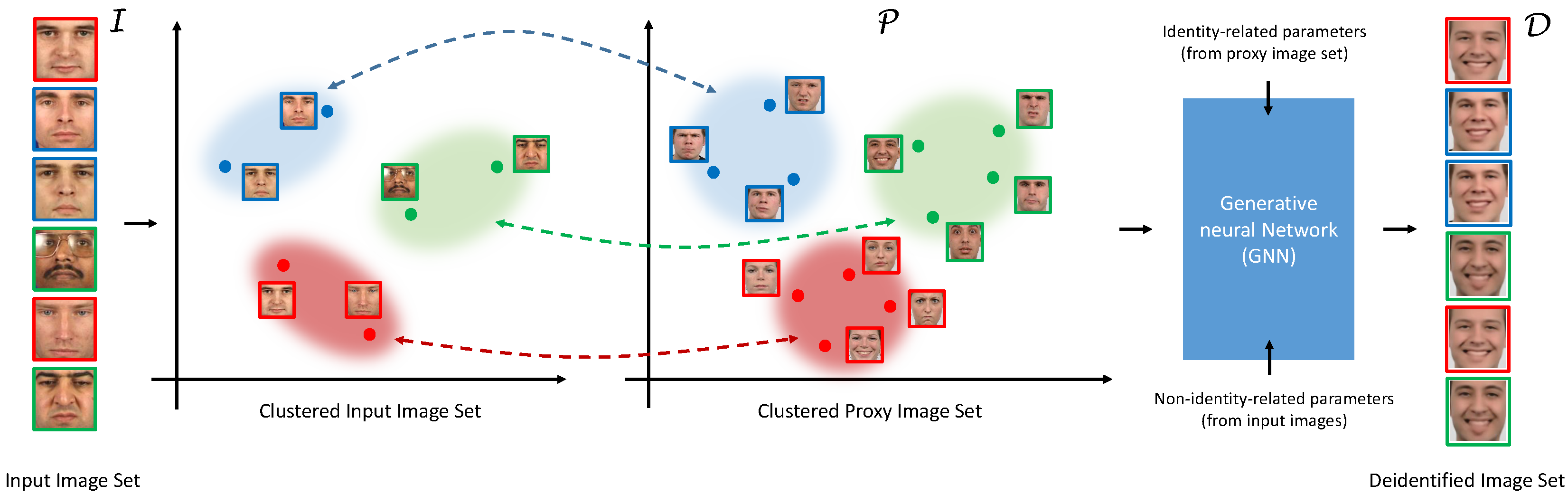

3.1. k-Same-Net Overview

3.2. Deidentification with k-Same-Net

3.3. k-Same-Net and Data Utility

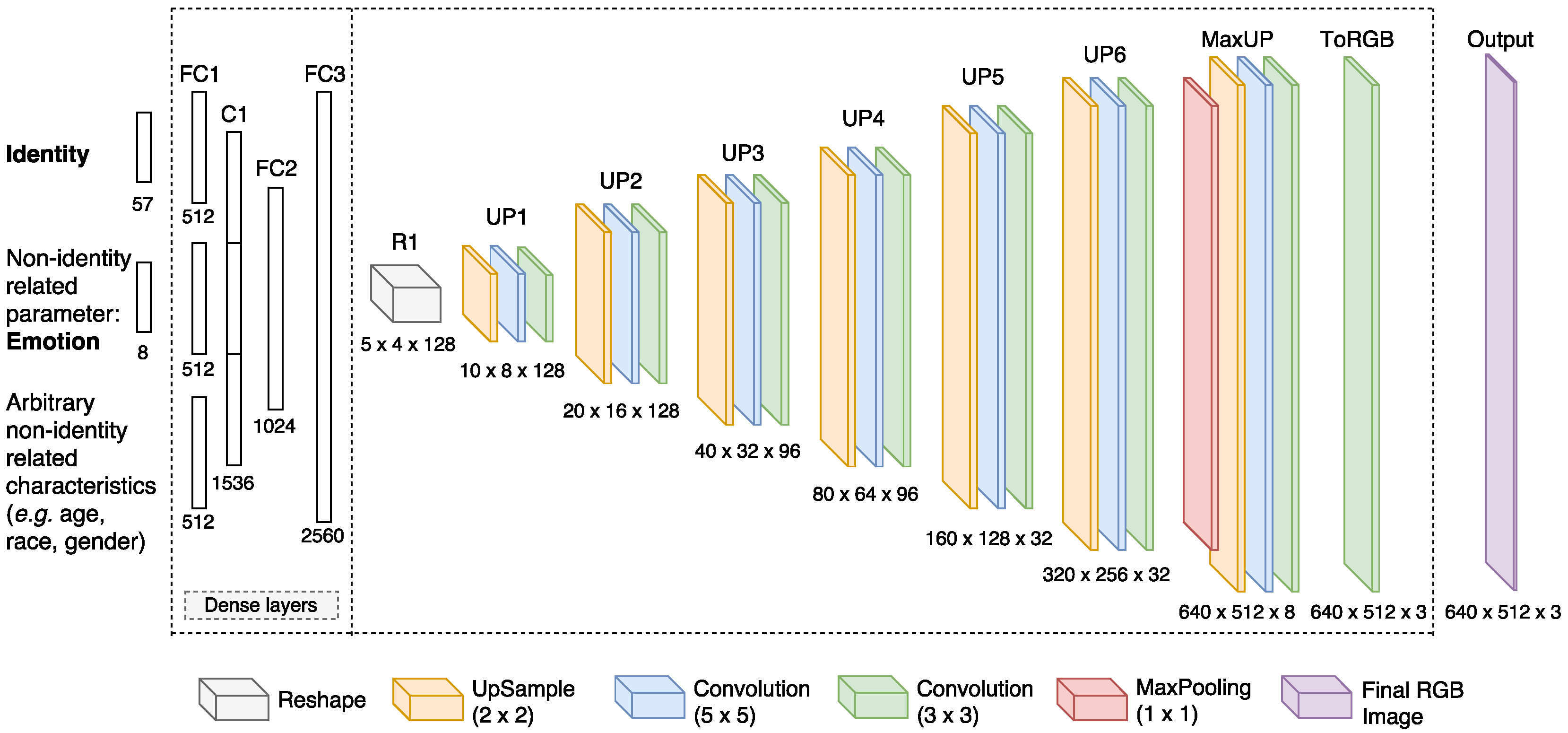

3.4. GNN Architecture and Algorithm Summary

Algorithm 1: k-Same-Net.
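The contributions describe surrogate faces that are generated from a proxy set rather than from the original images. One way such a generator could be conditioned is sketched below under explicit assumptions. The generator is taken to accept an identity vector over the proxy identities plus a one-hot expression code. Every image in a group receives the same identity vector, obtained by mixing a few chosen proxy identities with equal weights, while the expression part follows each original image so that this attribute can be retained. The vector layout, the equal weights, the given `proxy_choice`, and all function names are hypothetical. The trained generator network itself is not shown.

```python
from typing import Dict, List, Sequence


def identity_code(num_proxy_ids: int, chosen: Sequence[int]) -> List[float]:
    """Equal-weight mix of the chosen proxy identities (assumed encoding)."""
    if not chosen:
        raise ValueError("choose at least one proxy identity")
    code = [0.0] * num_proxy_ids
    for pid in chosen:
        code[pid] += 1.0 / len(chosen)
    return code


def expression_code(label: str, labels: Sequence[str]) -> List[float]:
    """One-hot vector for the expression that should be retained."""
    return [1.0 if name == label else 0.0 for name in labels]


def generator_inputs(
    groups: Sequence[Sequence[int]],
    expressions: Sequence[str],
    proxy_choice: Sequence[Sequence[int]],
    num_proxy_ids: int,
    labels: Sequence[str],
) -> Dict[int, List[float]]:
    """Hypothetical conditioning vector for every original image index."""
    inputs: Dict[int, List[float]] = {}
    for g, members in enumerate(groups):
        # One surrogate identity per group: all members share it.
        ident = identity_code(num_proxy_ids, proxy_choice[g])
        for i in members:
            # The expression part follows each original image.
            inputs[i] = ident + expression_code(expressions[i], labels)
    return inputs


labels = ["neutral", "happy", "surprised"]  # hypothetical label set
inputs = generator_inputs(
    groups=[[0, 1], [2, 3]],
    expressions=["happy", "neutral", "neutral", "surprised"],
    proxy_choice=[[0, 3], [1, 2]],
    num_proxy_ids=4,
    labels=labels,
)
print(inputs[0])  # [0.5, 0.0, 0.0, 0.5, 0.0, 1.0, 0.0]
```

Because the identity part depends only on the group, the members of a group cannot be told apart by identity, whatever expression each generated face shows.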

4. Experiments and Results

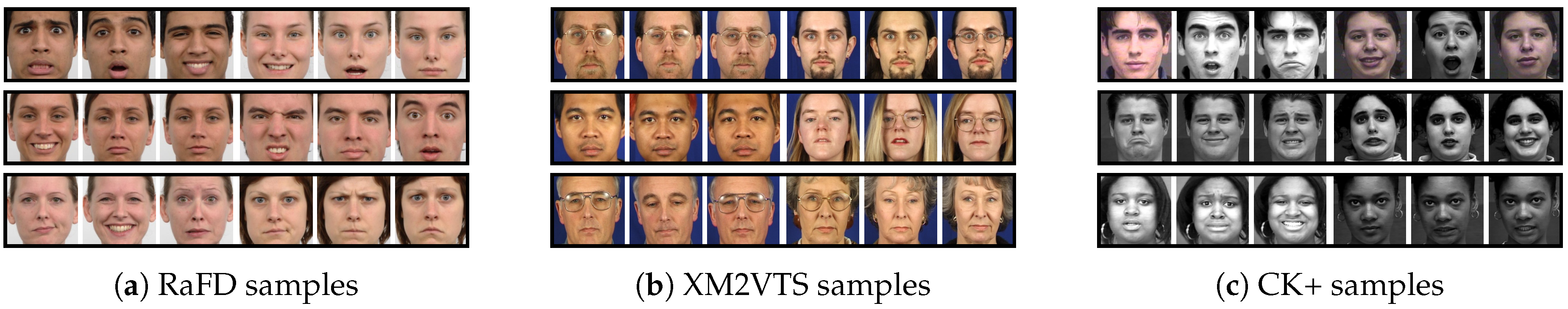

4.1. Datasets

4.2. Network Training

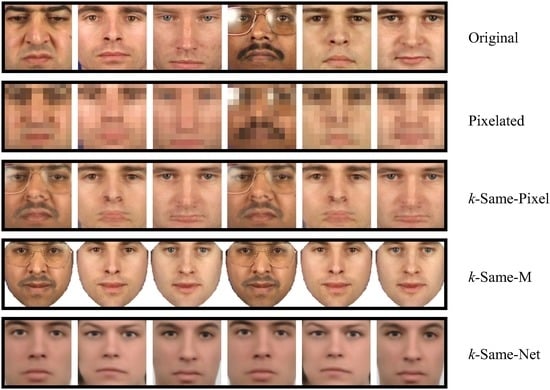

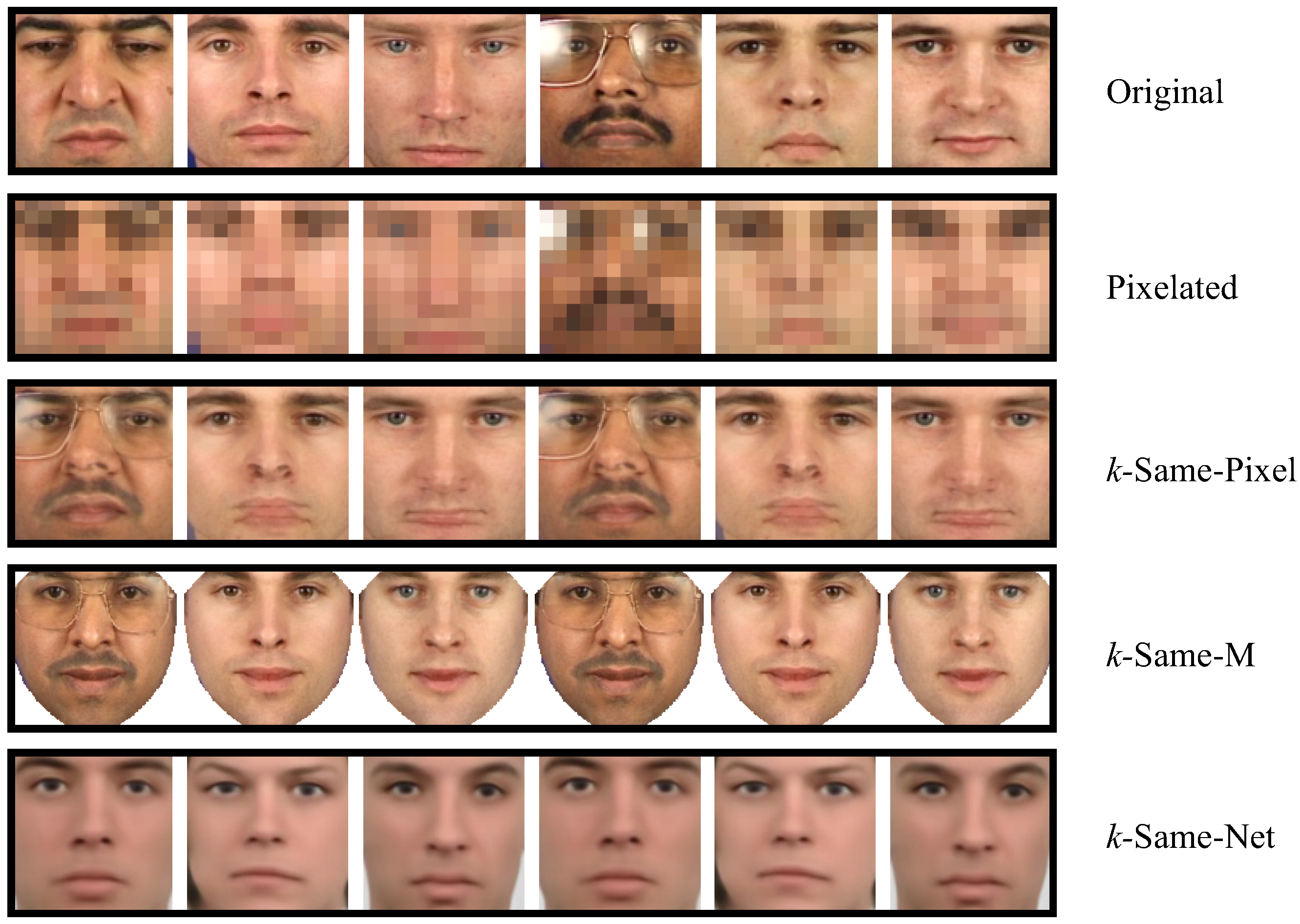

4.3. Qualitative Evaluation

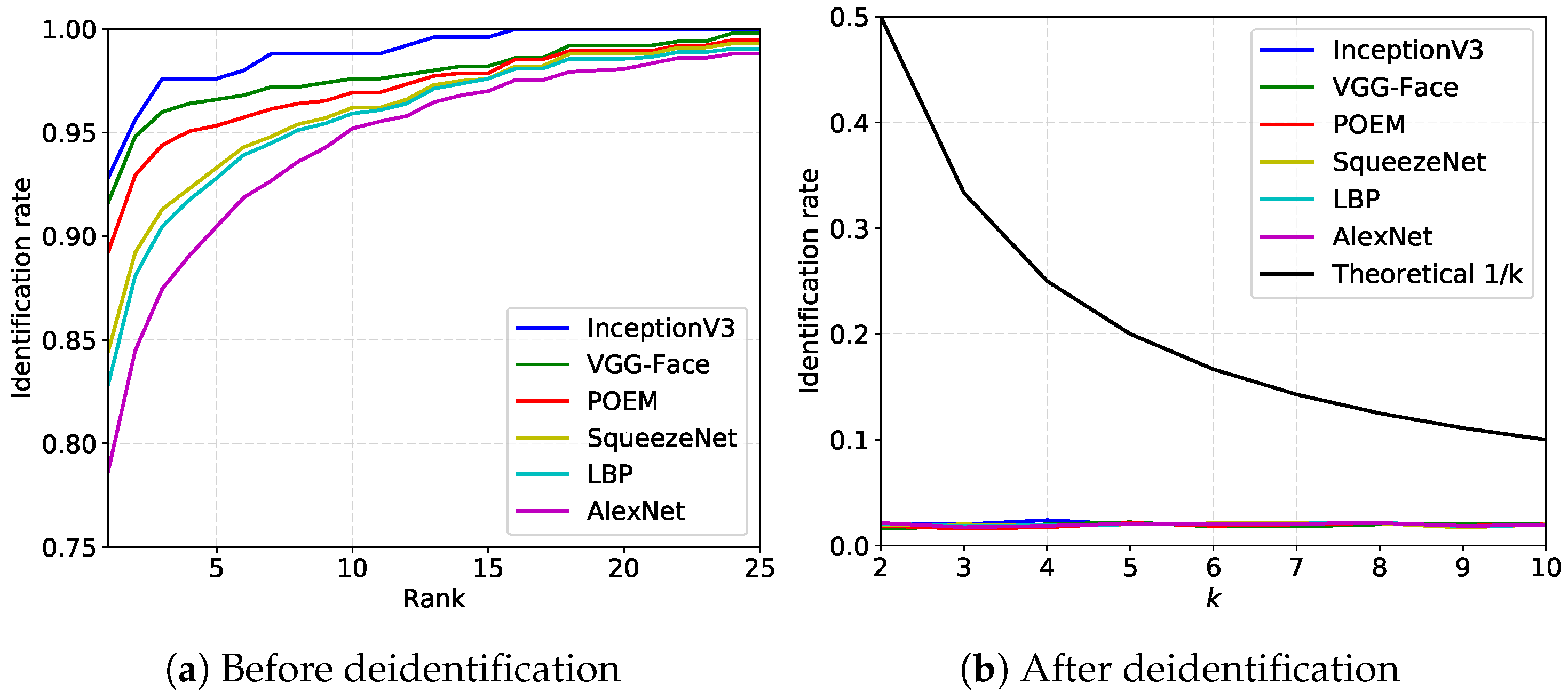

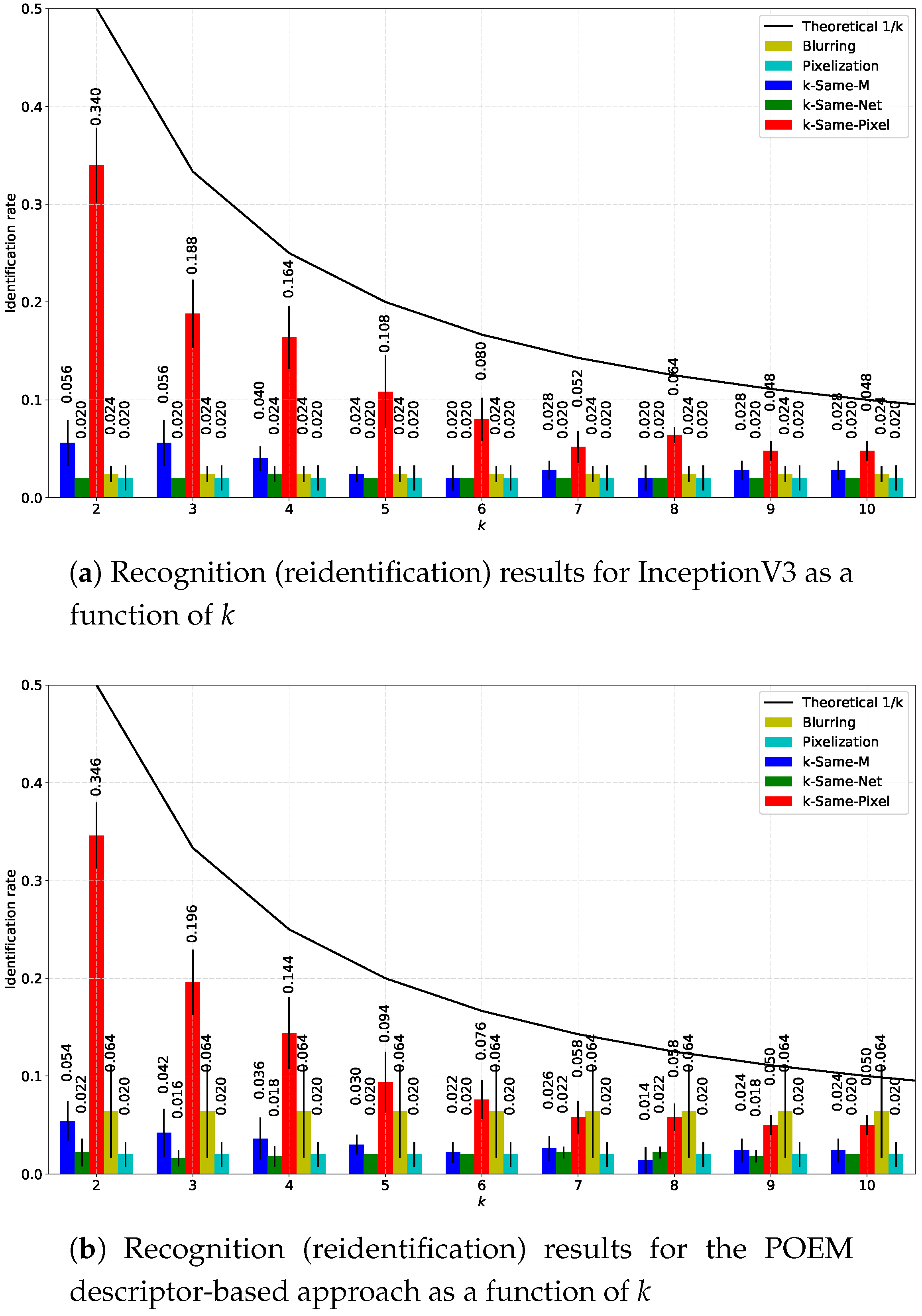

4.4. Recognition and Reidentification Experiments with k-Same-Net

4.5. Comparison with Competing Deidentification Techniques

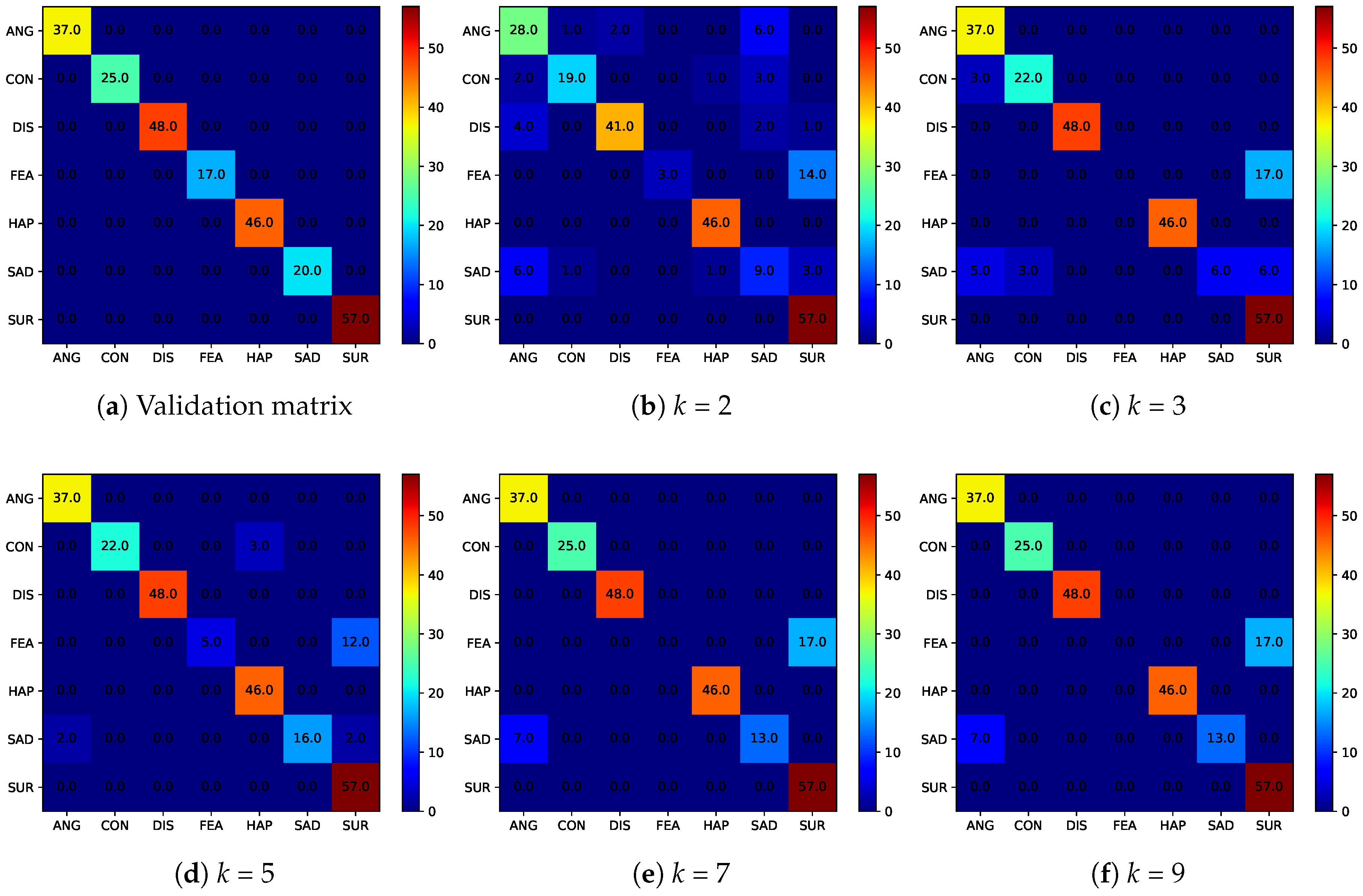

4.6. Utility Preservation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Newton, E.M.; Sweeney, L.; Malin, B. Preserving privacy by de-identifying face images. IEEE Trans. Knowl. Data Eng. 2005, 17, 232–243.

- Gross, R.; Airoldi, E.; Malin, B.; Sweeney, L. Integrating Utility into Face De-identification. In Proceedings of the 5th International Conference on Privacy Enhancing Technologies (PET’05), Cambridge, UK, 28–30 June 2006; Springer: Berlin, Heidelberg, 2006; pp. 227–242.

- Meden, B.; Mallı, R.C.; Fabijan, S.; Ekenel, H.K.; Štruc, V.; Peer, P. Face deidentification with generative deep neural networks. IET Signal Process. 2017, 11, 1046–1054.

- Sweeney, L. K-Anonymity: A Model for Protecting Privacy. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2002, 10, 557–570.

- Machanavajjhala, A.; Kifer, D.; Gehrke, J.; Venkitasubramaniam, M. L-diversity: Privacy Beyond K-Anonymity. ACM Trans. Knowl. Discov. Data 2007, 1, 1–12.

- Li, N.; Li, T.; Venkatasubramanian, S. t-Closeness: Privacy Beyond k-Anonymity and l-Diversity. In Proceedings of the IEEE 23rd International Conference on Data Engineering, Istanbul, Turkey, 15–20 April 2007; pp. 106–115.

- Meden, B.; Emersic, Z.; Struc, V.; Peer, P. κ-Same-Net: Neural-Network-Based Face Deidentification. In Proceedings of the IEEE International Conference and Workshop on Bioinspired Intelligence (IWOBI), Funchal, Portugal, 10–12 July 2017; pp. 1–7.

- Agrawal, D.; Aggarwal, C.C. On the Design and Quantification of Privacy Preserving Data Mining Algorithms. In Proceedings of the Twentieth ACM SIGMOD-SIGACT-SIGART Symposium on Principles of Database Systems (PODS ’01), Santa Barbara, CA, 21–23 May 2001; ACM: New York, NY, USA, 2001; pp. 247–255.

- Aggarwal, C.C.; Yu, P.S. A General Survey of Privacy-Preserving Data Mining Models and Algorithms. In Privacy-Preserving Data Mining; Aggarwal, C.C., Yu, P.S., Eds.; Springer: Berlin, Germany, 2008; pp. 11–52.

- Kovač, J.; Peer, P. Human skeleton model based dynamic features for walking speed invariant gait recognition. Math. Probl. Eng. 2014, 2014.

- Martinel, N.; Das, A.; Micheloni, C.; Roy-Chowdhury, A.K. Re-Identification in the Function Space of Feature Warps. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1656–1669.

- Kovač, J.; Štruc, V.; Peer, P. Frame-based classification for cross-speed gait recognition. Multimedia Tools Appl. 2017, 1–23.

- Wu, L.; Wang, Y.; Gao, J.; Li, X. Deep adaptive feature embedding with local sample distributions for person re-identification. Pattern Recognit. 2018, 73, 275–288.

- Miller, J.; Campan, A.; Truta, T.M. Constrained k-Anonymity: Privacy with generalization boundaries. In Proceedings of the Practical Privacy-Preserving Data Mining, Atlanta, GA, USA, 26 April 2008; p. 30.

- Campan, A.; Truta, T.M.; Cooper, N. P-Sensitive K-Anonymity with Generalization Constraints. Trans. Data Priv. 2010, 3, 65–89.

- Hellani, H.; Kilany, R.; Sokhn, M. Towards internal privacy and flexible K-Anonymity. In Proceedings of the 2015 International Conference on Applied Research in Computer Science and Engineering (ICAR), Beirut, Lebanon, 8–9 October 2015; pp. 1–2.

- Kilany, R.; Sokhn, M.; Hellani, H.; Shabani, S. Towards Flexible K-Anonymity. In Proceedings of the 7th International Conference on Knowledge Engineering and Semantic Web (KESW), Prague, Czech Republic, 21–23 September 2016; Ngonga Ngomo, A.C., Křemen, P., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 288–297.

- Dufaux, F.; Ebrahimi, T. A framework for the validation of privacy protection solutions in video surveillance. In Proceedings of the IEEE International Conference on Multimedia and Expo, Suntec City, Singapore, 19–23 July 2010; pp. 66–71.

- Bhattarai, B.; Mignon, A.; Jurie, F.; Furon, T. Puzzling face verification algorithms for privacy protection. In Proceedings of the IEEE International Workshop on Information Forensics and Security (WIFS), Atlanta, GA, USA, 3–5 December 2014; pp. 66–71.

- Letournel, G.; Bugeau, A.; Ta, V.T.; Domenger, J.P. Face de-identification with expressions preservation. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec, QC, Canada, 27–30 September 2015; pp. 4366–4370.

- Alonso, V.E.; Enríquez-Caldera, R.A.; Sucar, L.E. Foveation: An alternative method to simultaneously preserve privacy and information in face images. J. Electron. Imaging 2017, 26.

- Garrido, P.; Valgaerts, L.; Rehmsen, O.; Thormaehlen, T.; Perez, P.; Theobalt, C. Automatic Face Reenactment. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014; pp. 4217–4224.

- Thies, J.; Zollhöfer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 2387–2395.

- Martínez-ponte, I.; Desurmont, X.; Meessen, J.; François Delaigle, J. Robust human face hiding ensuring privacy. In Proceedings of the International Workshop on Image Analysis for Multimedia Interactive Services (WIAMIS), Melbourne, Australia, 24–26 October 2011.

- Zhang, W.; Cheung, S.S.; Chen, M. Hiding privacy information in video surveillance system. In Proceedings of the IEEE International Conference on Image Processing, Genova, Italy, 14 September 2005; Volume 3.

- Korshunov, P.; Ebrahimi, T. Using warping for privacy protection in video surveillance. In Proceedings of the 18th International Conference on Digital Signal Processing (DSP), Fira, Greece, 1–3 July 2013; pp. 1–6.

- Rahman, S.M.M.; Hossain, M.A.; Mouftah, H.; El Saddik, A.; Okamoto, E. Chaos-cryptography based privacy preservation technique for video surveillance. Multimedia Syst. 2012, 18, 145–155.

- Agrawal, P.; Narayanan, P.J. Person De-identification in Videos. In Proceedings of the 9th Asian Conference on Computer Vision (ACCV), Xi’an, China, 23–27 September 2009; Revised Selected Papers, Part III. Zha, H., Taniguchi, R.i., Maybank, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 266–276.

- Korshunov, P.; Araimo, C.; Simone, F.D.; Velardo, C.; Dugelay, J.L.; Ebrahimi, T. Subjective study of privacy filters in video surveillance. In Proceedings of the IEEE 14th International Workshop on Multimedia Signal Processing (MMSP), Banff, AB, Canada, 17–19 September 2012; pp. 378–382.

- Samarzija, B.; Ribaric, S. An approach to the de-identification of faces in different poses. In Proceedings of the 37th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 26–30 May 2014; pp. 1246–1251.

- Mosaddegh, S.; Simon, L.; Jurie, F. Photorealistic Face De-Identification by Aggregating Donors’ Face Components. In Proceedings of the 12th Asian Conference on Computer Vision (ACCV), Singapore, Singapore, 1–5 November 2014; Revised Selected Papers, Part III. Cremers, D., Reid, I., Saito, H., Yang, M.H., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 159–174.

- Farrugia, R.A. Reversible De-Identification for lossless image compression using Reversible Watermarking. In Proceedings of the 37th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 26–30 May 2014; pp. 1258–1263.

- Brkić, K.; Hrkać, T.; Kalafatić, Z.; Sikirić, I. Face, hairstyle and clothing colour de-identification in video sequences. IET Signal Process. 2017, 11, 1062–1068.

- Brkic, K.; Sikiric, I.; Hrkac, T.; Kalafatic, Z. I Know that Person: Generative Full Body and Face De-Identification of People in Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Las Vegas, NV, USA, 26 June–1 July 2016.

- Gross, R.; Sweeney, L.; de la Torre, F.; Baker, S. Semi-supervised learning of multi-factor models for face de-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Gross, R.; Sweeney, L.; de la Torre, F.; Baker, S. Model-Based Face De-Identification. In Proceedings of the IEEE Computer Vision and Pattern Recognition Workshop (CVPRW’06), New York, NY, USA, 17–22 June 2006; p. 161.

- Meng, L.; Sun, Z.; Ariyaeeinia, A.; Bennett, K.L. Retaining expressions on de-identified faces. In Proceedings of the 37th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 26–30 May 2014; pp. 1252–1257.

- Sun, Z.; Meng, L.; Ariyaeeinia, A. Distinguishable de-identified faces. In Proceedings of the 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 4, pp. 1–6.

- Meng, L.; Shenoy, A. Retaining Expression on De-identified Faces. In Proceedings of the 19th International Conference of Speech and Computer (SPECOM), Hatfield, UK, 12–16 September 2017; Karpov, A., Potapova, R., Mporas, I., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 651–661.

- Du, L.; Yi, M.; Blasch, E.; Ling, H. GARP-face: Balancing privacy protection and utility preservation in face de-identification. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8.

- Ribaric, S.; Ariyaeeinia, A.; Pavesic, N. De-identification for privacy protection in multimedia content: A survey. Signal Process. Image Commun. 2016, 47, 131–151.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv, 2014; arXiv:1406.2661.

- Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. arXiv, 2014; arXiv:1411.1784.

- Sricharan, K.; Bala, R.; Shreve, M.; Ding, H.; Saketh, K.; Sun, J. Semi-supervised Conditional GANs. ArXiv, 2017; arXiv:1708.05789.

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv, 2015; arXiv:1511.06434.

- Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein GAN. arXiv, 2017; arXiv:1701.07875.

- Nowozin, S.; Cseke, B.; Tomioka, R. f-GAN: Training Generative Neural Samplers Using Variational Divergence Minimization. arXiv, 2016; arXiv:1606.00709.

- Tran, L.; Yin, X.; Liu, X. Representation Learning by Rotating Your Faces. arXiv, 2017; arXiv:1705.11136.

- Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv, 2017; arXiv:1710.10196.

- Dosovitskiy, A.; Springenberg, J.T.; Tatarchenko, M.; Brox, T. Learning to Generate Chairs, Tables and Cars with Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 692–705.

- Kingma, D.P.; Welling, M. Auto-Encoding Variational Bayes. arXiv, 2013; arXiv:1312.6114.

- Chen, X.; Duan, Y.; Houthooft, R.; Schulman, J.; Sutskever, I.; Abbeel, P. InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets. arXiv, 2016; arXiv:1606.03657.

- Driessen, B.; Dürmuth, M. Achieving Anonymity against Major Face Recognition Algorithms. In Proceedings of the 14th IFIP TC 6/TC 11 International Conference of Communications and Multimedia Security (CMS), Magdeburg, Germany, 25–26 September 2013; De Decker, B., Dittmann, J., Kraetzer, C., Vielhauer, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 18–33.

- Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.; Hawk, S.; van Knippenberg, A. Presentation and validation of the Radboud Faces Database. Cognit. Emot. 2010, 24, 1377–1388.

- Messer, K.; Kittler, J.; Sadeghi, M.; Marcel, S.; Marcel, C.; Bengio, S.; Cardinaux, F.; Sanderson, C.; Czyz, J.; Vandendorpe, L.; et al. Face Verification Competition on the XM2VTS Database. In Proceedings of the 4th International Conference on Audio- and Video-based Biometric Person Authentication (AVBPA’03), Guildford, UK, 9–11 June 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 964–974.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended cohn-kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Kanade, T.; Cohn, J.F.; Tian, Y. Comprehensive database for facial expression analysis. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000; pp. 46–53.

- Ekman, P.; Friesen, W.V.; Hager, J.C. FACS investigator’s guide. In A Human Face; Architectural Nexus: Salt Lake City, UT, USA, 2002; p. 96.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. Imagenet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS’12), Lake Tahoe, NV, USA, 3–6 December 2012; Volume 1, pp. 1097–1105.

- Parkhi, O.; Vedaldi, A.; Zisserman, A. Deep Face Recognition. In Proceedings of the British Machine Vision Association (BMVC), Dundee, UK, 29 August–2 September 2015; Volume 1, p. 6.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. Comput. Vis. Pattern Recognit. 2016, 2818–2826.

- Iandola, F.; Han, S.; Moskewicz, M.; Ashraf, K.; Dally, W.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and 0.5 MB model size. arXiv, 2016; arXiv:1602.07360.

- Ahonen, T.; Hadid, A.; Pietikäinen, M. Face recognition with local binary patterns. In Proceedings of the 8th European Conference on Computer Vision (ECCV), Prague, Czech Republic, 11–14 May 2004; pp. 469–481.

- Vu, N.S.; Dee, H.M.; Caplier, A. Face recognition using the POEM descriptor. Pattern Recognit. 2012, 45, 2478–2488.

- Grm, K.; Štruc, V.; Artiges, A.; Caron, M.; Ekenel, H.K. Strengths and weaknesses of deep learning models for face recognition against image degradations. IET Biom. 2017.

- Emersic, Z.; Struc, V.; Peer, P. Ear Recognition: More Than a Survey. Neurocomputing 2017, 255, 1–22.

- Kazemi, V.; Sullivan, J. One Millisecond Face Alignment with an Ensemble of Regression Trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

- Zhang, Z.; Luo, P.; Loy, C.C.; Tang, X. Facial Landmark Detection by Deep Multi-task Learning. In Proceedings of the 13th European Conference on Computer Vision—ECCV, Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014. Part VI. pp. 94–108.

- Ren, S.; Cao, X.; Wei, Y.; Sun, J. Face Alignment at 3000 FPS via Regressing Local Binary Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1685–1692.

- Peng, X.; Zhang, S.; Yang, Y.; Metaxas, D.N. PIEFA: Personalized Incremental and Ensemble Face Alignment. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 3880–3888.

- Jourabloo, A.; Liu, X. Large-pose Face Alignment via CNN-based Dense 3D Model Fitting. In Proceedings of the IEEE Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Zhu, X.; Lei, Z.; Liu, X.; Shi, H.; Li, S.Z. Face Alignment Across Large Poses: A 3D Solution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

- Jung, H.; Lee, S.; Yim, J.; Park, S.; Kim, J. Joint Fine-Tuning in Deep Neural Networks for Facial Expression Recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Los Alamitos, CA, USA, 7–13 December 2015.

- Dapogny, A.; Bailly, K.; Dubuisson, S. Pairwise Conditional Random Forests for Facial Expression Recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Mollahosseini, A.; Hasani, B.; Salvador, M.J.; Abdollahi, H.; Chan, D.; Mahoor, M.H. Facial Expression Recognition from World Wild Web. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 27–30 June 2016.

- Afshar, S.; Ali Salah, A. Facial Expression Recognition in the Wild Using Improved Dense Trajectories and Fisher Vector Encoding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 27–30 June 2016.

- Peng, X.; Xia, Z.; Li, L.; Feng, X. Towards Facial Expression Recognition in the Wild: A New Database and Deep Recognition System. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 27–30 June 2016.

- Gajšek, R.; Štruc, V.; Dobrišek, S.; Mihelič, F. Emotion recognition using linear transformations in combination with video. In Proceedings of the Tenth Annual Conference of the International Speech Communication Association, Brighton, UK, 6–10 September 2009; pp. 1967–1970.

- Zhu, Y.; Li, Y.; Mu, G.; Guo, G. A Study on Apparent Age Estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ranjan, R.; Zhou, S.; Cheng Chen, J.; Kumar, A.; Alavi, A.; Patel, V.M.; Chellappa, R. Unconstrained Age Estimation With Deep Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Huo, Z.; Yang, X.; Xing, C.; Zhou, Y.; Hou, P.; Lv, J.; Geng, X. Deep Age Distribution Learning for Apparent Age Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 27–30 June 2016.

- Niu, Z.; Zhou, M.; Wang, L.; Gao, X.; Hua, G. Ordinal Regression With Multiple Output CNN for Age Estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 27–30 June 2016.

- Konda, J.; Peer, P. Estimating people’s age from face images with convolutional neural networks. In Proceedings of the 25th International Electrotechnical and Computer Science Conference, Portoroz, Slovenia, 19–21 September 2016.

- Jia, S.; Cristianini, N. Learning to classify gender from four million images. Pattern Recognit. Lett. 2015, 58, 35–41.

- Mansanet, J.; Albiol, A.; Paredes, R. Local Deep Neural Networks for gender recognition. Pattern Recognit. Lett. 2016, 70, 80–86.

- Levi, G.; Hassner, T. Age and Gender Classification Using Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Ranjan, R.; Patel, V.M.; Chellappa, R. HyperFace: A Deep Multi-Task Learning Framework for Face Detection, Landmark Localization, Pose Estimation, and Gender Recognition. arXiv, 2016; arXiv:1603.01249.

- Burkert, P.; Trier, F.; Afzal, M.Z.; Dengel, A.; Liwicki, M. DeXpression: Deep Convolutional Neural Network for Expression Recognition. arXiv, 2015; arXiv:1509.05371.

| Year | Algorithm | Domain | Assumptions |
|---|---|---|---|
| 2005 | k-Same-Pixel [1] | Pixel | Client-specific image set. |
| 2005 | k-Same-Eigen [1] | PCA space | Client-specific image set. |
| 2005 | k-Same-Select [2] | AAM, PCA space | Need to specify selection criteria prior to deidentification. |
| 2008 | k-Same-Model (or k-Same-M for short) [35] (also known as the ()-map algorithm) | AAM | Model parameters obtained during fitting are not unique due to ambiguities. |
| 2013 | Driessen–Dürmuth's algorithm [53] | PCA space, Gabor wavelets | Not achieving very strong k-Anonymity; human recognition is still possible. |
| 2014 | k-Same-furthest-FET [37] | AAM, PCA space | Neutral emotion not available explicitly; FET not satisfying k-Anonymity; efficacy experimentally proven. |
| 2014 | GARP-Face (Gender, Age, Race Preservation) [40] | AAM | Utility-specific AAMs for ethnicity, gender, expression. |
| 2015 | k-Diff-furthest [38] | AAM | Distinguishable client-specific image set. |
| 2017 | k-SameClass-Eigen [39] | PCA, LDA space | Depends on LDA classifier accuracy; may fail on unknown faces. |
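The first entry in the table, k-Same-Pixel [1], works directly in the pixel domain: each group of similar faces is replaced by their pixel-wise average, and that average is shared by every member of the group. The sketch below shows only the averaging step for aligned, equally sized grayscale images stored as flat lists of intensities. The group membership is assumed to be given, and `k_same_pixel` is a hypothetical name.

```python
from typing import Dict, List, Sequence


def k_same_pixel(
    images: Sequence[Sequence[float]], groups: Sequence[Sequence[int]]
) -> Dict[int, List[float]]:
    """Map every image index to the pixel-wise mean of its group."""
    out: Dict[int, List[float]] = {}
    for members in groups:
        n_pixels = len(images[members[0]])
        mean = [sum(images[i][p] for i in members) / len(members) for p in range(n_pixels)]
        for i in members:
            out[i] = list(mean)  # the same surrogate for every member
    return out


images = [[0.0, 10.0], [20.0, 30.0], [100.0, 100.0], [200.0, 100.0]]
deid = k_same_pixel(images, groups=[[0, 1], [2, 3]])
print(deid[0], deid[3])  # [10.0, 20.0] [150.0, 100.0]
```

Averaging the intensities of different faces is also why imperfectly aligned inputs tend to produce the ghosting artifacts associated with pixel-domain methods.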

| Type | Method | Pros | Cons |
|---|---|---|---|
| Naive | Pixelization [1] | Easy to implement. Training not required. | No formal privacy guarantees. |
| attack | Blurring [1] | | |
| Formal | k-Same-Pixel [1] | Easy to implement. | Ghosting effect, visible artifacts. |
| | k-Same-M [35] | Visually convincing. | Training required. |
| | k-Same-Net (ours) | Visually convincing. Preserves data utility. | |
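The naive baselines in the table need no training. Pixelization, for instance, can be sketched as replacing each non-overlapping b-by-b block of an image with its mean intensity. The block size `b`, the grayscale list-of-rows representation and the function name are assumptions of this sketch. Nothing in the procedure bounds how often a recognizer can still match the output, which is the missing formal guarantee noted in the table.

```python
from typing import List


def pixelize(image: List[List[float]], b: int) -> List[List[float]]:
    """Replace each b-by-b block (edge blocks may be smaller) with its mean."""
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, h, b):
        for left in range(0, w, b):
            rows = range(top, min(top + b, h))
            cols = range(left, min(left + b, w))
            block_mean = sum(image[r][c] for r in rows for c in cols) / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = block_mean
    return out


img = [[0, 2, 4, 6], [2, 4, 6, 8], [10, 10, 20, 20], [10, 10, 20, 20]]
print(pixelize(img, 2)[0])  # [2.0, 2.0, 6.0, 6.0]
```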

| Feature Type | Method | Rank-1 () before deid. | Rank-1 (), after deid. |
|---|---|---|---|
| Learned | Inception V3 [61] | | |
| | VGG-Face [60] | | |
| | SqueezeNet [62] | | |
| | AlexNet [59] | | |
| Hand-crafted | LBP [63] | | |
| | POEM [64] | | |
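The table reports rank-1 recognition rates. The sketch below shows how such a rate is computed: each probe feature vector is matched against a labelled gallery, and the function counts how often the most similar gallery entry carries the probe's identity. Cosine similarity and the toy two-dimensional vectors are assumptions, since the extractors in the table would supply the real features. For k-Same-style deidentification, Newton et al. [1] show that correct recognition against the original gallery is limited to at most 1/k.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank1_rate(
    probes: Sequence[Sequence[float]],
    probe_ids: Sequence[str],
    gallery: Sequence[Sequence[float]],
    gallery_ids: Sequence[str],
) -> float:
    """Fraction of probes whose most similar gallery vector has the same identity."""
    hits = 0
    for vec, true_id in zip(probes, probe_ids):
        best = max(range(len(gallery)), key=lambda j: cosine(vec, gallery[j]))
        if gallery_ids[best] == true_id:
            hits += 1
    return hits / len(probes)


gallery = [[1.0, 0.0], [0.0, 1.0]]
probes = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
rate = rank1_rate(probes, ["A", "B", "B"], gallery, ["A", "B"])
print(round(rate, 3))  # 0.667
```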

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Meden, B.; Emeršič, Ž.; Štruc, V.; Peer, P. k-Same-Net: k-Anonymity with Generative Deep Neural Networks for Face Deidentification. Entropy 2018, 20, 60. https://doi.org/10.3390/e20010060