Content Adaptive Lagrange Multiplier Selection for Rate-Distortion Optimization in 3-D Wavelet-Based Scalable Video Coding

Abstract

1. Introduction

2. Related Work

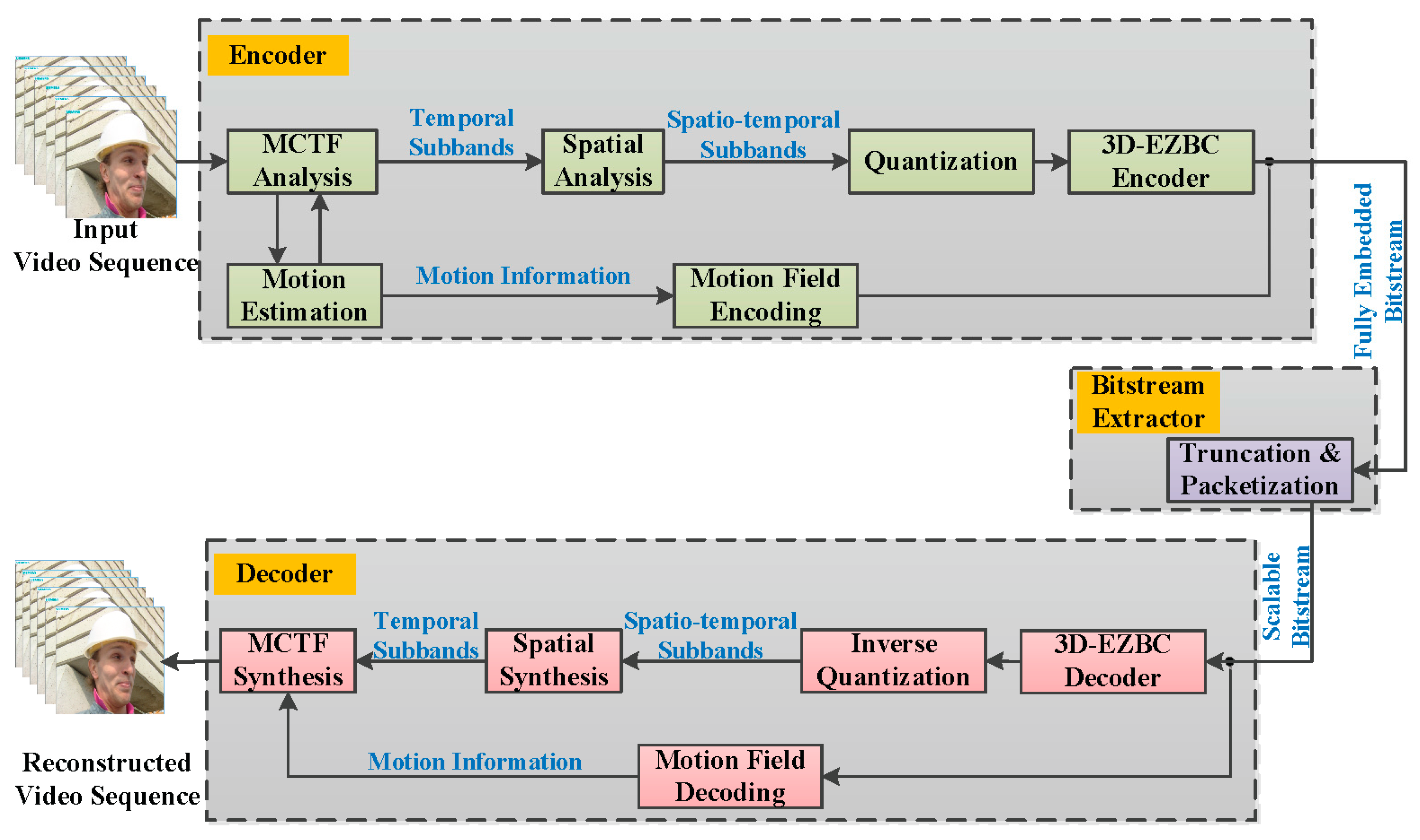

2.1. System Model

2.2. Lagrange Optimization and Lagrange Multiplier Selection
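As a generic illustration of Lagrangian rate-distortion optimization (not the authors' code), each candidate coding option is scored with the cost J = D + λR and the option with the smallest J is chosen. The candidate names reuse the frame modes from the MCTF mode table, but the distortion and rate numbers are made-up values. The `Candidate` type and the helper functions are hypothetical. They only show how a larger λ shifts the decision toward cheaper, lower-rate options.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Candidate:
    """One coding option with its distortion D and rate R (hypothetical values)."""
    name: str
    distortion: float  # D, e.g. sum of squared errors
    rate: float        # R, e.g. bits


def lagrangian_cost(c: Candidate, lam: float) -> float:
    """Lagrangian cost J = D + lambda * R."""
    return c.distortion + lam * c.rate


def best_candidate(cands: Sequence[Candidate], lam: float) -> Candidate:
    """Return the candidate with minimum J; ties go to the earliest entry."""
    return min(cands, key=lambda c: lagrangian_cost(c, lam))


# Made-up (D, R) numbers for illustration only.
modes = [
    Candidate("bi-direction", distortion=120.0, rate=40.0),
    Candidate("uni-left", distortion=150.0, rate=25.0),
    Candidate("intra", distortion=90.0, rate=200.0),
]

for lam in (0.1, 0.5, 3.0):
    print(lam, best_candidate(modes, lam).name)
# 0.1 intra
# 0.5 bi-direction
# 3.0 uni-left
```

A small λ weights distortion heavily and favours the low-distortion option. A large λ weights rate heavily and favours the cheapest option. This sensitivity is why the choice of λ matters for the overall rate-distortion trade-off.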

2.3. Problem Formulation
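A common way to pose rate-constrained coding, assumed here and not necessarily the formulation used in this section, is to minimize total distortion subject to a rate budget. The constrained problem is then replaced by its unconstrained Lagrangian: for a fixed λ, each coding unit independently picks the operating point that minimizes D + λR, and λ is searched, here by bisection, until the total rate fits the budget. The unit structure, the (rate, distortion) points and the function names below are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (rate R, distortion D) of one operating point


def allocate(units: Sequence[Sequence[Point]], lam: float) -> Tuple[List[Point], float, float]:
    """For a fixed lambda, each unit independently minimizes D + lambda * R."""
    choice = [min(pts, key=lambda p: p[1] + lam * p[0]) for pts in units]
    total_rate = sum(p[0] for p in choice)
    total_dist = sum(p[1] for p in choice)
    return choice, total_rate, total_dist


def solve_for_budget(units, budget, lam_hi=1e6, iters=60):
    """Bisect on lambda; lam_hi always stays feasible (total rate <= budget)."""
    lam_lo = 0.0
    if allocate(units, lam_hi)[1] > budget:
        raise ValueError("budget is below the minimum achievable rate")
    for _ in range(iters):
        mid = 0.5 * (lam_lo + lam_hi)
        if allocate(units, mid)[1] <= budget:
            lam_hi = mid  # feasible: try a smaller lambda (more rate, less distortion)
        else:
            lam_lo = mid  # over budget: penalize rate more
    return lam_hi, allocate(units, lam_hi)


# Two hypothetical coding units, each with four (rate, distortion) points.
units = [
    [(0, 100), (10, 40), (20, 15), (40, 5)],
    [(0, 80), (10, 30), (20, 20), (40, 2)],
]
lam, (choice, rate, dist) = solve_for_budget(units, budget=30)
print(round(lam, 3), choice, rate, dist)  # 1.0 [(20, 15), (10, 30)] 30 45
```

A fixed λ can only reach points on the lower convex hull of the operational R-D curves. The returned allocation is therefore optimal for the rate it actually uses, which may be slightly below the budget.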

3. Proposed Lagrange Multiplier Selection Algorithm

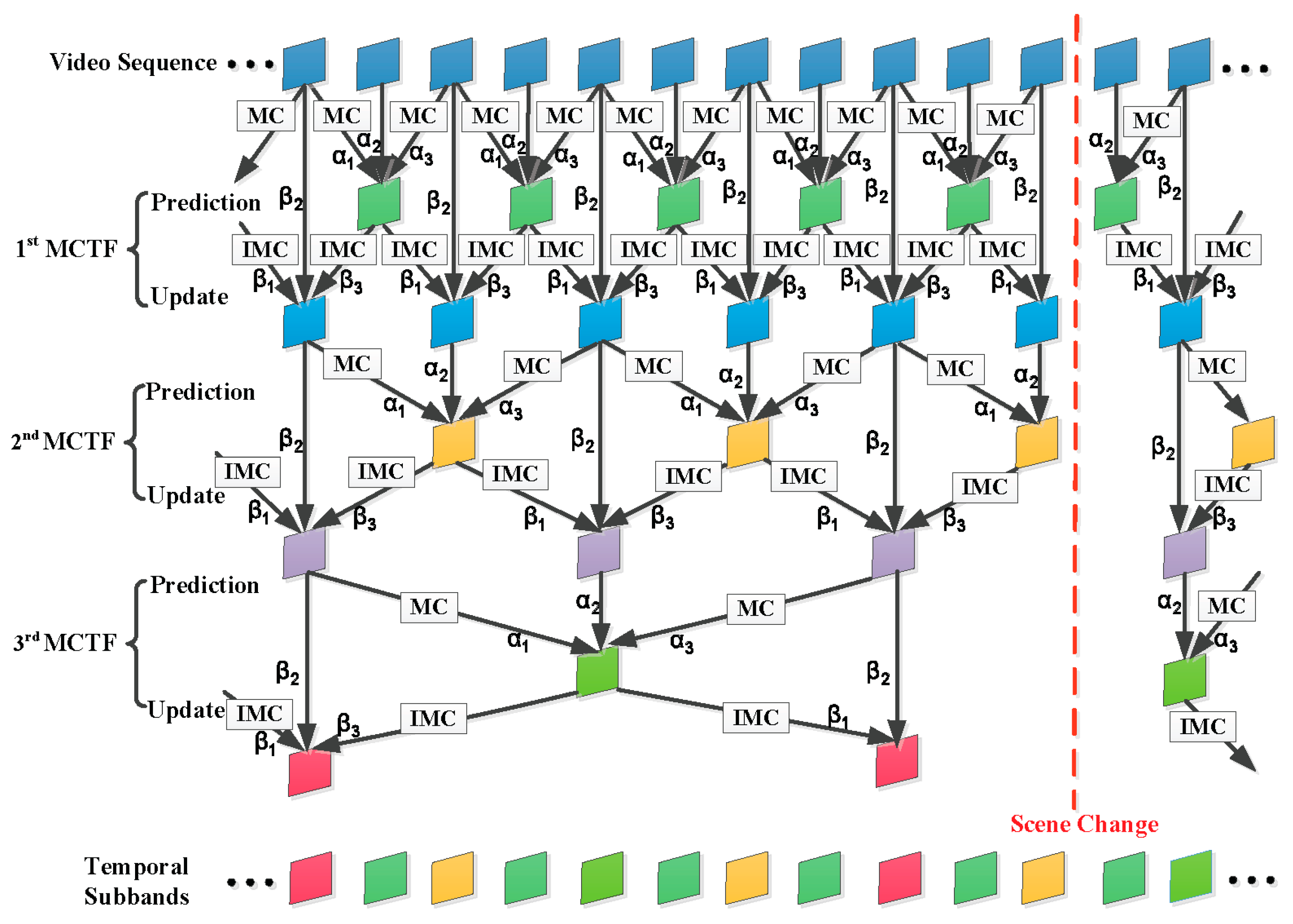

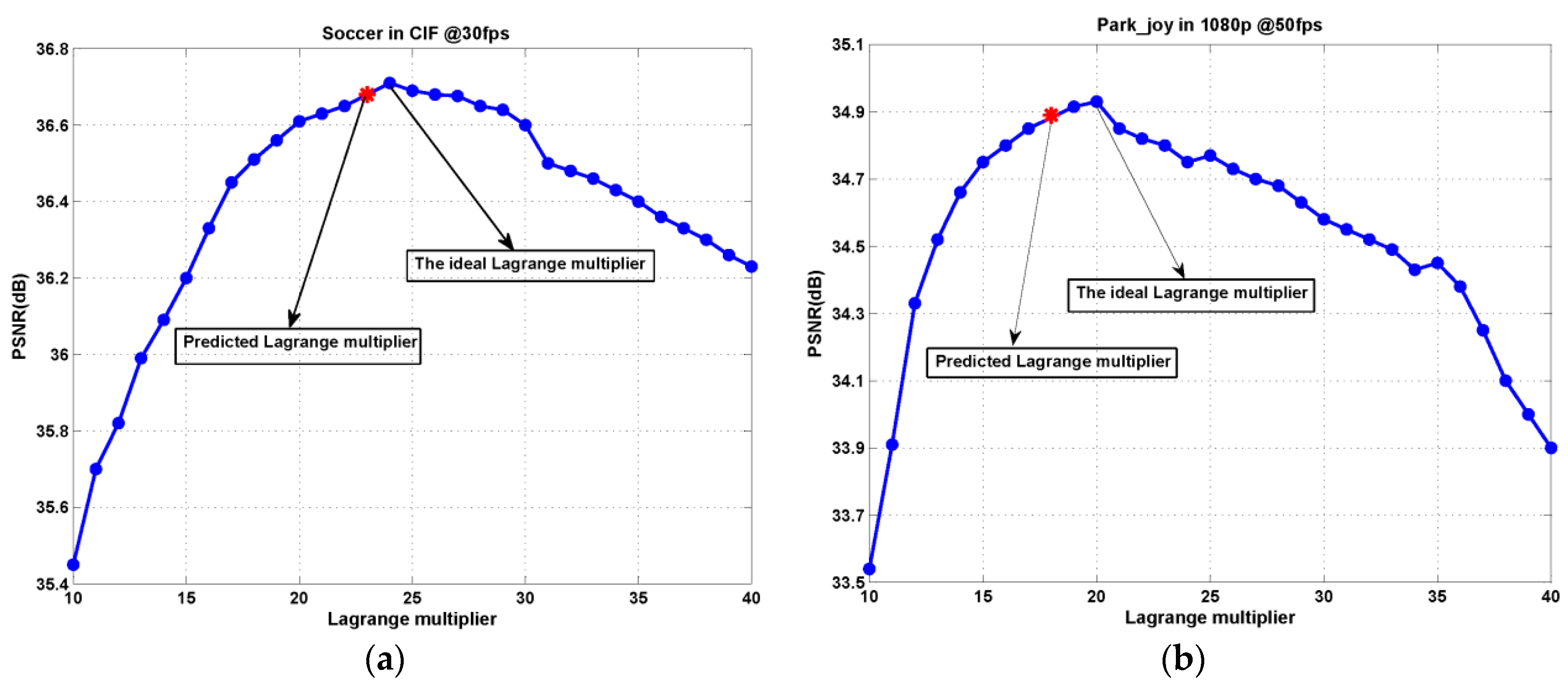

3.1. Lagrange Multiplier Selection Bottlenecks in 3-D Wavelet-Based SVC

3.2. Analyzing the Distortion Relationship between Temporal Subbands and Reconstructed Frames

3.3. Adaptive Lagrange Multiplier Selection

3.4. Summary of the Proposed Algorithm

Algorithm 1. An efficient algorithm for content adaptive Lagrange multiplier selection

4. Experimental Results

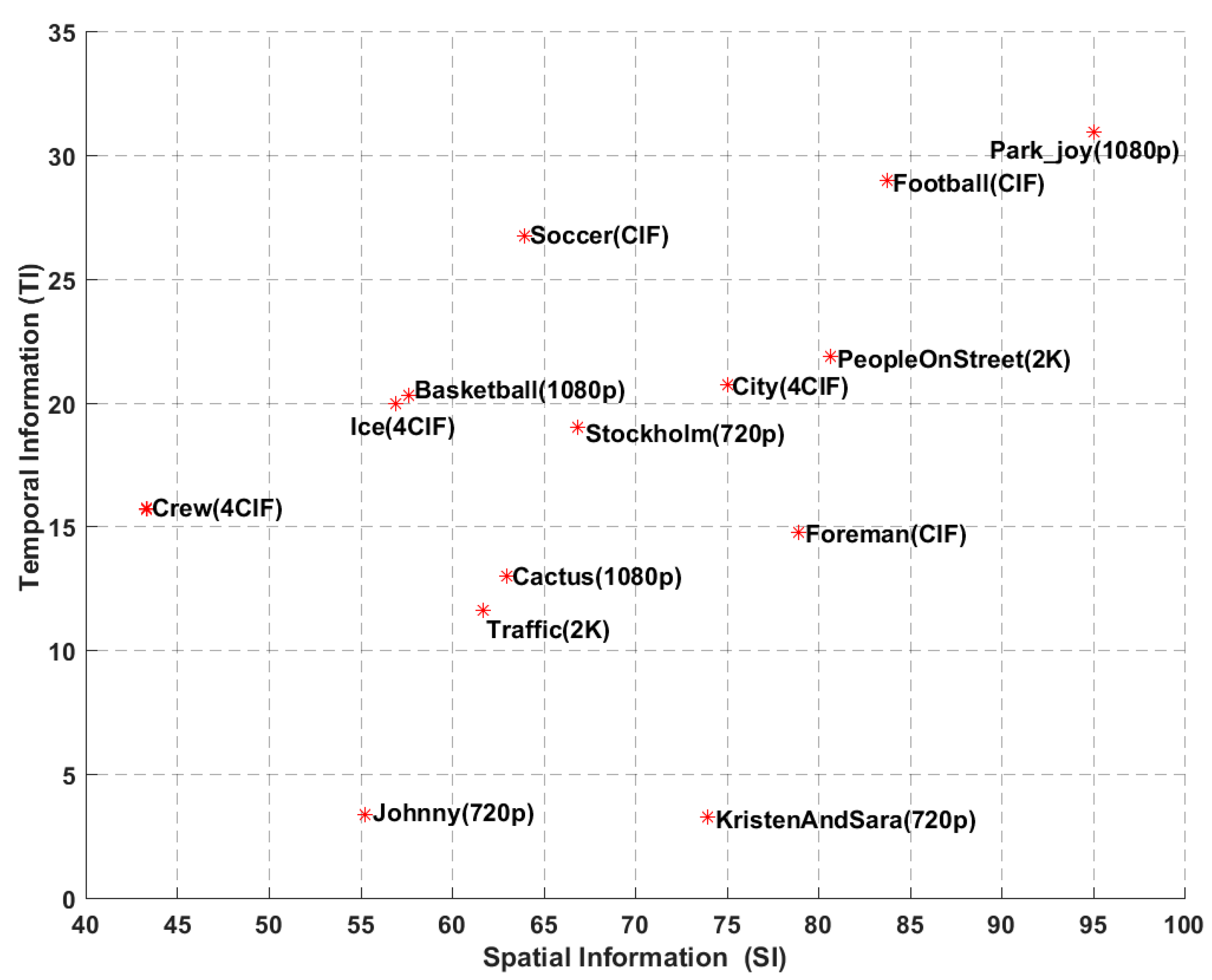

4.1. Video Test Sequences

4.2. Experimental Setup

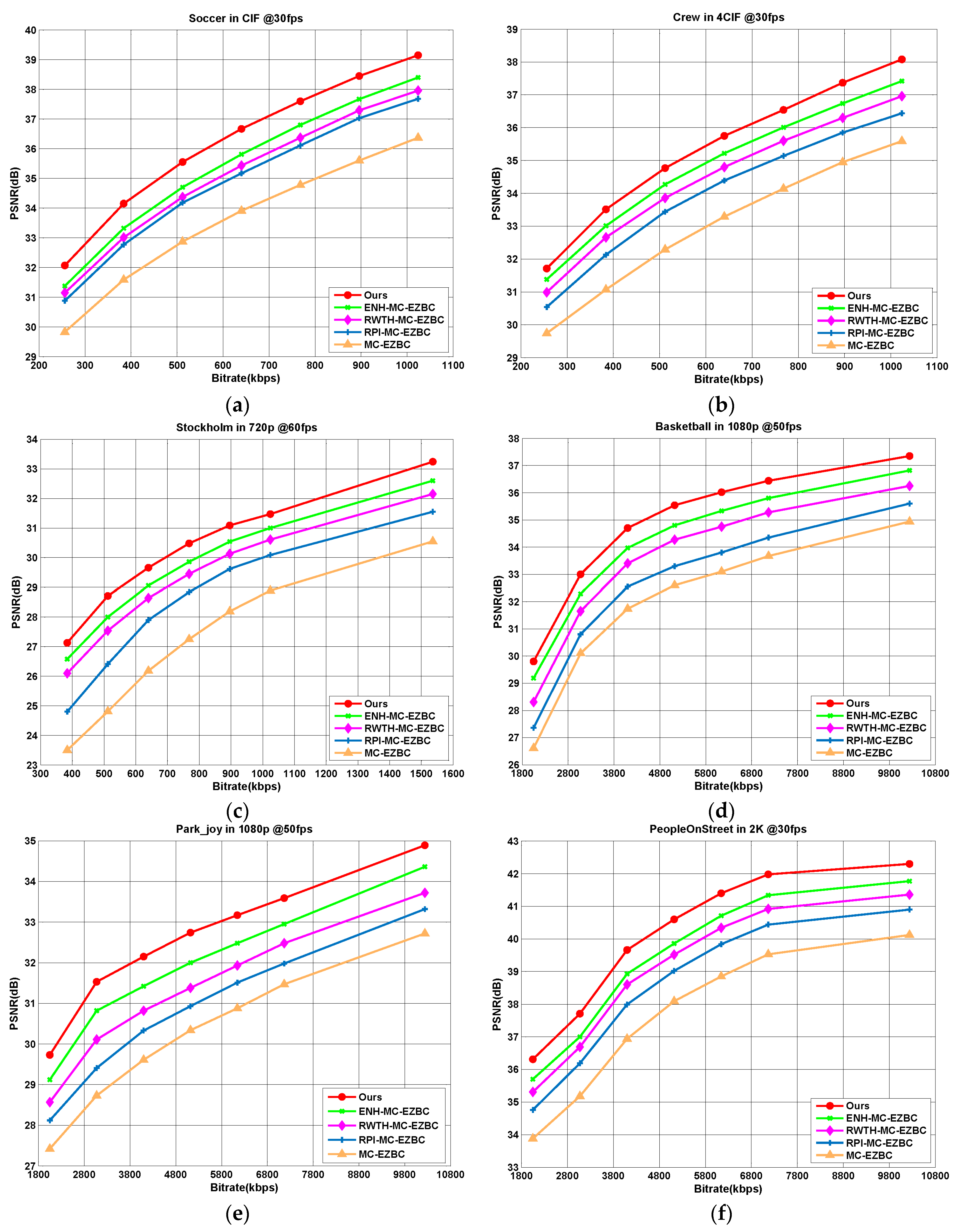

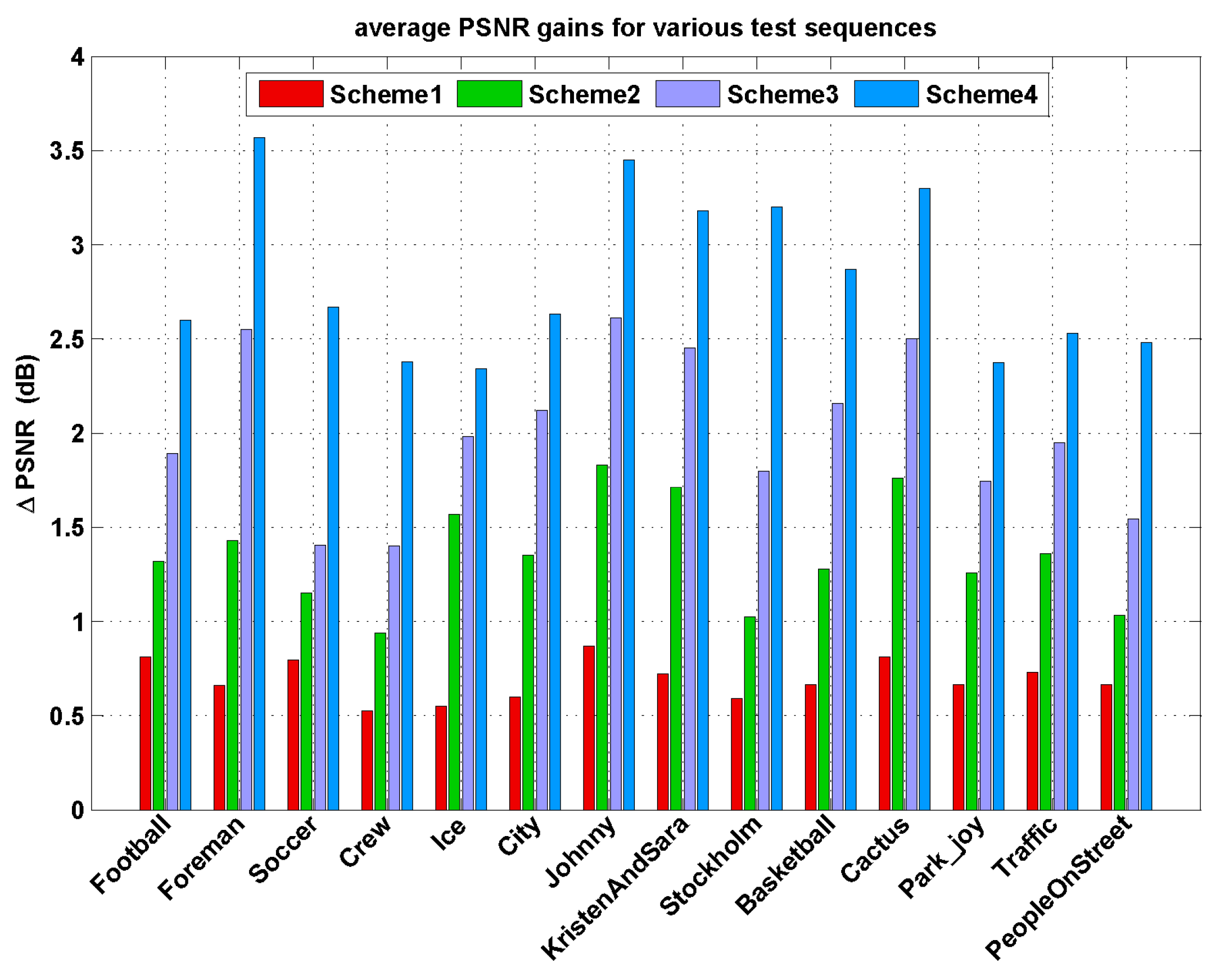

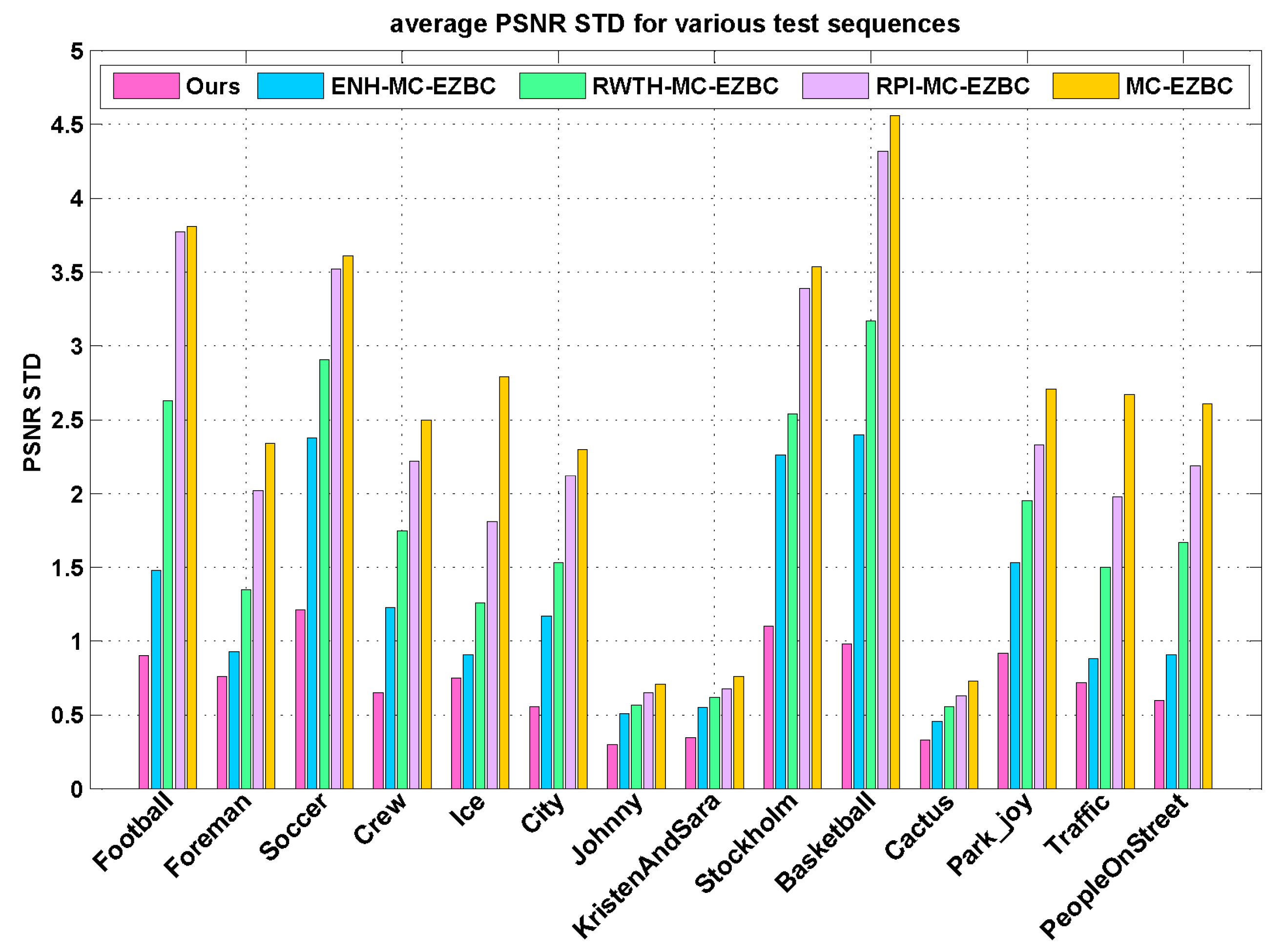

4.3. Performance Evaluation

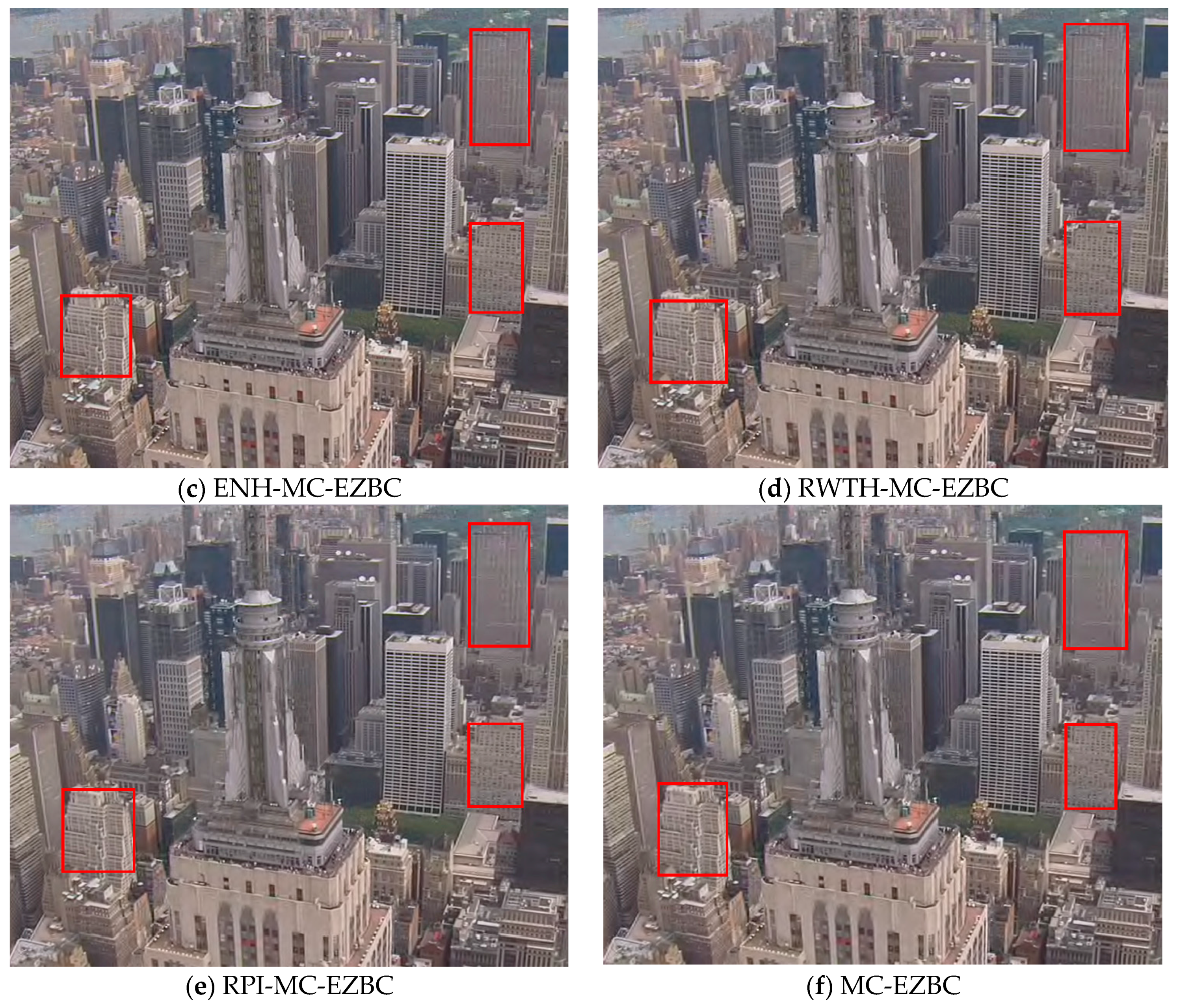

- MC-EZBC: the original MC-EZBC without a proper RDO scheme.

- RPI-MC-EZBC: the bidirectional MC-EZBC from Rensselaer Polytechnic Institute, which uses Haar filters in the conventional MCTF framework with the same default Lagrange multiplier value for all temporal decomposition levels.

- RWTH-MC-EZBC: the improved version of MC-EZBC from RWTH Aachen University, which uses longer filters instead of Haar filters in the conventional MCTF framework, with a fixed, level-specific Lagrange multiplier for each temporal decomposition level.

- ENH-MC-EZBC: the enhanced MC-EZBC, which uses an adaptive MCTF framework with a fixed, level-specific Lagrange multiplier for each temporal decomposition level.

- Proposed method: our codec with the proposed content adaptive Lagrange multiplier selection method.

4.3.1. Comparison of Rate-Distortion Performance

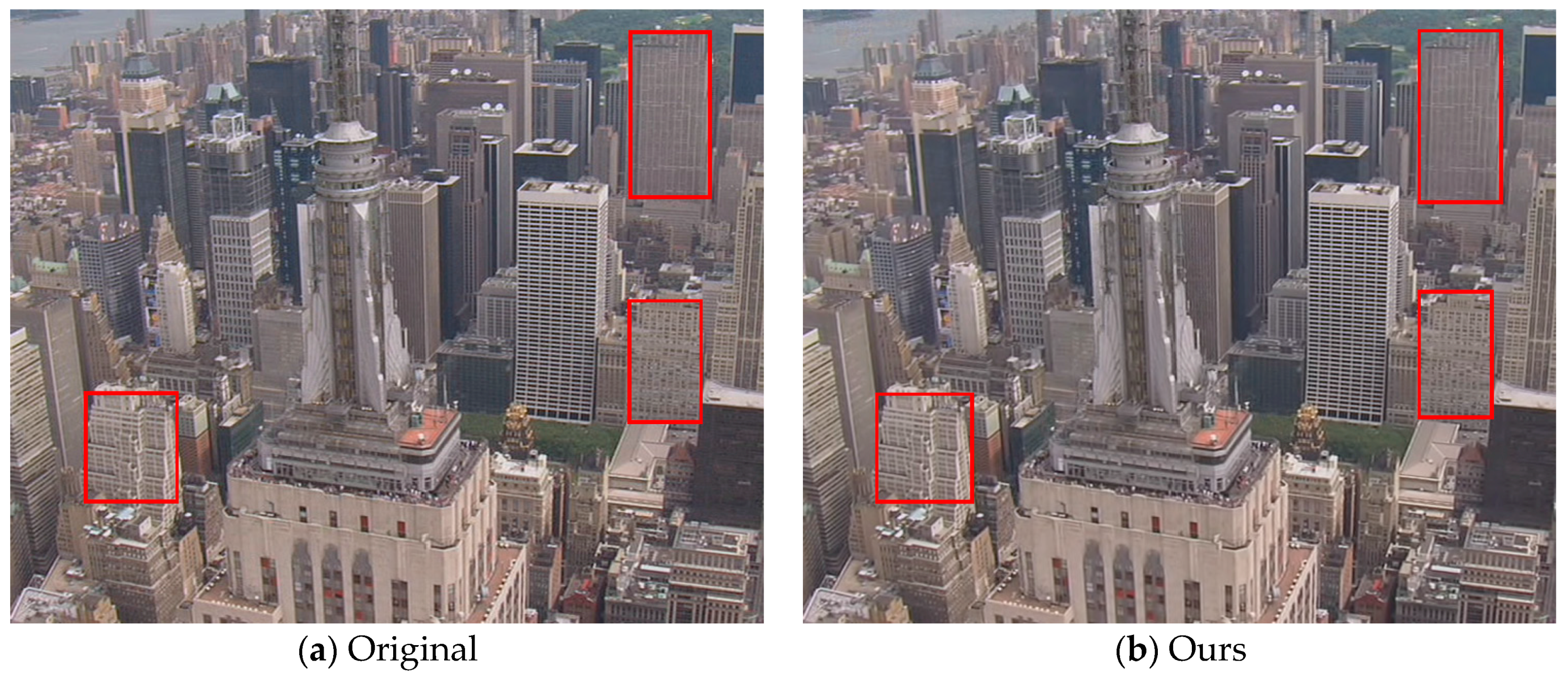

4.3.2. Comparison of Subjective Performance

4.3.3. Comparison of Computational Complexity

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Frame Mode | | | | | | |
|---|---|---|---|---|---|---|
| bi-direction | −1/2 | 1 | −1/2 | 1/4 | 1 | 1/4 |
| uni-left | −1 | 1 | 0 | 1/2 | 1 | 0 |
| uni-right | 0 | 1 | −1 | 0 | 1 | 1/2 |
| intra | N/A | N/A | N/A | N/A | 1 | N/A |
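The mode table does not label its coefficient columns. The sketch below assumes that the bi-direction row describes the familiar 5/3 lifting steps of bidirectional MCTF, with prediction weights (−1/2, 1, −1/2) and update weights (1/4, 1, 1/4). Under that assumption, it applies one temporal decomposition level to a short sequence and then inverts it exactly. Several simplifications are illustrative choices, not the codec's behaviour:

- motion compensation is omitted (zero motion is assumed);
- frames are plain lists of pixel values;
- sequence ends are handled by mirroring;
- the function names are hypothetical;
- the uni-directional and intra modes are not modelled.

```python
from typing import List, Tuple

Frame = List[float]  # a frame as a flat list of pixel values (assumption)


def _add_scaled(a: Frame, b: Frame, w: float) -> Frame:
    """Element-wise a + w * b."""
    return [x + w * y for x, y in zip(a, b)]


def mctf_analyze(frames: List[Frame]) -> Tuple[List[Frame], List[Frame]]:
    """One level of bidirectional lifting without motion compensation."""
    even, odd = frames[0::2], frames[1::2]
    n = len(odd)
    assert len(even) == n, "this sketch expects an even number of frames"
    # Prediction: H_k = O_k - 1/2 * E_k - 1/2 * E_{k+1} (mirror E_{n-1} at the end)
    highs = []
    for k in range(n):
        right = even[k + 1] if k + 1 < n else even[k]
        highs.append(_add_scaled(_add_scaled(odd[k], even[k], -0.5), right, -0.5))
    # Update: L_k = E_k + 1/4 * H_{k-1} + 1/4 * H_k (mirror H_0 at the start)
    lows = []
    for k in range(n):
        left = highs[k - 1] if k > 0 else highs[0]
        lows.append(_add_scaled(_add_scaled(even[k], left, 0.25), highs[k], 0.25))
    return lows, highs


def mctf_synthesize(lows: List[Frame], highs: List[Frame]) -> List[Frame]:
    """Undo the update step, then the prediction step."""
    n = len(highs)
    even = []
    for k in range(n):
        left = highs[k - 1] if k > 0 else highs[0]
        even.append(_add_scaled(_add_scaled(lows[k], left, -0.25), highs[k], -0.25))
    frames = []
    for k in range(n):
        right = even[k + 1] if k + 1 < n else even[k]
        odd_k = _add_scaled(_add_scaled(highs[k], even[k], 0.5), right, 0.5)
        frames.extend([even[k], odd_k])
    return frames


frames = [[10, 20], [12, 22], [14, 24], [13, 23]]
lows, highs = mctf_analyze(frames)
print(highs)  # [[0.0, 0.0], [-1.0, -1.0]]
print(lows)   # [[10.0, 20.0], [13.75, 23.75]]
assert mctf_synthesize(lows, highs) == frames
```

The second frame is the exact average of its neighbours, so its high-pass frame is zero. Because each lifting step is undone by subtracting exactly what was added, reconstruction is perfect whatever the prediction quality.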

| Sequences | 1st MCTF Level | 2nd MCTF Level | 3rd MCTF Level | 4th MCTF Level |
|---|---|---|---|---|
| Football | 0.9921 | 0.9894 | 0.9951 | 0.9975 |
| Foreman | 0.9860 | 0.9954 | 0.9967 | 0.9982 |
| Soccer | 0.9984 | 0.9962 | 0.9981 | 0.9993 |
| Crew | 0.9976 | 0.9983 | 0.9989 | 0.9991 |
| Ice | 0.9787 | 0.9899 | 0.9935 | 0.9980 |
| City | 0.9843 | 0.9894 | 0.9932 | 0.9982 |
| Johnny | 0.9885 | 0.9891 | 0.9964 | 0.9989 |
| KristenAndSara | 0.9932 | 0.9967 | 0.9970 | 0.9985 |
| Stockholm | 0.9924 | 0.9962 | 0.9984 | 0.9990 |
| Basketball | 0.9787 | 0.9812 | 0.9899 | 0.9988 |
| Cactus | 0.9949 | 0.9950 | 0.9966 | 0.9990 |
| Park_joy | 0.9815 | 0.9843 | 0.9957 | 0.9981 |
| Traffic | 0.9870 | 0.9932 | 0.9960 | 0.9991 |
| PeopleOnStreet | 0.9893 | 0.9919 | 0.9949 | 0.9993 |
| Average | 0.9888 | 0.9919 | 0.9957 | 0.9986 |
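The table does not restate which quantity it reports. Its Average row can still be checked as the arithmetic mean of the fourteen per-sequence values in each column. The short check below copies the values from the table. It also confirms that the averages rise monotonically from the first to the fourth MCTF level.

```python
# Values copied from the per-level table: sequence -> (level 1, level 2, level 3, level 4)
table = {
    "Football": (0.9921, 0.9894, 0.9951, 0.9975),
    "Foreman": (0.9860, 0.9954, 0.9967, 0.9982),
    "Soccer": (0.9984, 0.9962, 0.9981, 0.9993),
    "Crew": (0.9976, 0.9983, 0.9989, 0.9991),
    "Ice": (0.9787, 0.9899, 0.9935, 0.9980),
    "City": (0.9843, 0.9894, 0.9932, 0.9982),
    "Johnny": (0.9885, 0.9891, 0.9964, 0.9989),
    "KristenAndSara": (0.9932, 0.9967, 0.9970, 0.9985),
    "Stockholm": (0.9924, 0.9962, 0.9984, 0.9990),
    "Basketball": (0.9787, 0.9812, 0.9899, 0.9988),
    "Cactus": (0.9949, 0.9950, 0.9966, 0.9990),
    "Park_joy": (0.9815, 0.9843, 0.9957, 0.9981),
    "Traffic": (0.9870, 0.9932, 0.9960, 0.9991),
    "PeopleOnStreet": (0.9893, 0.9919, 0.9949, 0.9993),
}
reported_average = [0.9888, 0.9919, 0.9957, 0.9986]

# Column-wise arithmetic mean over all sequences, rounded like the table.
means = [sum(row[i] for row in table.values()) / len(table) for i in range(4)]
rounded = [round(m, 4) for m in means]
print(rounded)  # [0.9888, 0.9919, 0.9957, 0.9986]

assert rounded == reported_average
assert all(a < b for a, b in zip(means, means[1:]))  # averages rise with the MCTF level
```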

| Sequences | Resolution | Frames | Characteristics |
|---|---|---|---|
| Football | 352 × 288 | 260 | Fast camera and human subject motion, high spatial detail |
| Foreman | 352 × 288 | 300 | Fast camera and content motion with a pan at the end |
| Soccer | 352 × 288 | 300 | Fast changes in motion, rapid camera panning |
| Crew | 704 × 576 | 300 | Moderate motion of multiple objects |
| Ice | 704 × 576 | 240 | Still background and moderate human subject motion |
| City | 704 × 576 | 300 | Fast camera motion, highly detailed buildings |
| Johnny | 1280 × 720 | 100 | Still background and low local motion |
| KristenAndSara | 1280 × 720 | 100 | Still background and moderate local motion |
| Stockholm | 1280 × 720 | 100 | Moderate camera panning, highly detailed buildings |
| Basketball | 1920 × 1080 | 100 | Fast camera and human subject motion, high spatial detail |
| Cactus | 1920 × 1080 | 100 | Circular motion and high spatial detail |
| Park_joy | 1920 × 1080 | 100 | Camera and content motion, highly detailed trees |
| Traffic | 2560 × 1600 | 100 | Moderate translational motion and high spatial detail |
| PeopleOnStreet | 2560 × 1600 | 100 | Still background and motion of many people |

Encoding speed:

| Resolution | Ours | ENH-MC-EZBC | RWTH-MC-EZBC | RPI-MC-EZBC | MC-EZBC |
|---|---|---|---|---|---|
| CIF | 5.31 | 5.82 | 4.87 | 1.52 | 1.21 |
| 4CIF | 5.16 | 5.55 | 4.52 | 1.29 | 1.05 |
| 720p | 3.05 | 3.57 | 2.71 | 0.87 | 0.64 |
| 1080p | 1.09 | 1.34 | 0.83 | 0.35 | 0.29 |
| 2K | 0.72 | 0.79 | 0.55 | 0.16 | 0.12 |
| Average | 3.07 | 3.41 | 2.70 | 0.84 | 0.66 |
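The table does not give units for encoding speed. Assuming larger values mean faster encoding, the check below does two things. It reproduces the Average row from the five per-resolution values, and it expresses each baseline's speed relative to the proposed codec ("Ours"). At every resolution the proposed codec is somewhat slower than ENH-MC-EZBC but faster than RWTH-MC-EZBC, RPI-MC-EZBC and MC-EZBC.

```python
codecs = ["Ours", "ENH-MC-EZBC", "RWTH-MC-EZBC", "RPI-MC-EZBC", "MC-EZBC"]
# Values copied from the encoding-speed table, one tuple per resolution (same order as codecs).
rows = {
    "CIF": (5.31, 5.82, 4.87, 1.52, 1.21),
    "4CIF": (5.16, 5.55, 4.52, 1.29, 1.05),
    "720p": (3.05, 3.57, 2.71, 0.87, 0.64),
    "1080p": (1.09, 1.34, 0.83, 0.35, 0.29),
    "2K": (0.72, 0.79, 0.55, 0.16, 0.12),
}
speeds = {res: dict(zip(codecs, vals)) for res, vals in rows.items()}

# Average over resolutions, rounded to two decimals as in the table.
averages = {c: round(sum(r[c] for r in speeds.values()) / len(speeds), 2) for c in codecs}
print(averages)

# Speed of each baseline relative to ours (> 1 means faster than ours).
for res, r in speeds.items():
    print(res, {c: round(r[c] / r["Ours"], 2) for c in codecs[1:]})
```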

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Liu, G. Content Adaptive Lagrange Multiplier Selection for Rate-Distortion Optimization in 3-D Wavelet-Based Scalable Video Coding. Entropy 2018, 20, 181. https://doi.org/10.3390/e20030181
