2.1. PLL Theory

To aid understanding of the proposed method, we first give an overview of PLL theory. A PLL is a system that synchronizes an output signal with a reference (input) signal in both frequency and phase. In particular, our proposal is based on the binary-valued continuous-time PLL, in which the reference and output signals are square waves.

In Figure 2, which illustrates a block diagram of a typical PLL, the product f(t)g(t) of the reference signal f(t) and the output signal g(t) is computed by the phase detector. As shown in Figure 3, both f(t) and g(t) are square waves that alternately take the two values 1 and −1 with a 50% duty ratio. The product f(t)g(t) is filtered by a low-pass filter to yield an averaged value q(ϕ) of the product. The averaged product q(ϕ), which is also known as the time correlation of the signals f(t) and g(t), depends on the phase difference ϕ between f(t) and g(t). The relation between q(ϕ) and ϕ is shown in Figure 4.
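The dependence of the time correlation on the phase difference can be checked numerically. The following is a minimal sketch, not the authors' implementation: the function names and the sampling scheme are assumptions made for illustration, and the phase difference is taken as a shift of g(t) relative to f(t).

```python
import math

def square_wave(theta):
    """Square wave taking +1/-1 with 50% duty ratio; theta is the phase in radians."""
    return 1.0 if theta % (2 * math.pi) < math.pi else -1.0

def correlation(phi, samples=10_000):
    """Average of f*g over one period, with g shifted by phi relative to f."""
    step = 2 * math.pi / samples
    total = 0.0
    for k in range(samples):
        theta = (k + 0.5) * step  # midpoint sampling avoids the switching edges
        total += square_wave(theta) * square_wave(theta - phi)
    return total / samples

for phi in (0.0, math.pi / 2, math.pi, 3 * math.pi / 2):
    print(f"phi = {phi:.3f} rad, q = {correlation(phi):+.3f}")
```

For these four phase values the correlation is approximately 1, 0, −1 and 0, that is, it crosses zero at both ϕ = π/2 and ϕ = 3π/2.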

The time correlation q(ϕ) is fed to a voltage-controlled oscillator (VCO), whose output frequency varies depending on its input q(ϕ). When q(ϕ) equals zero, the VCO oscillates at a predetermined central frequency. The larger q(ϕ) is, the lower the output frequency; the smaller q(ϕ) is, the higher the output frequency. A typical design makes the output frequency depend linearly on the input voltage q(ϕ), saturating at certain lowest and highest input values.
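A linear VCO characteristic with saturation could be sketched as follows. All names and numerical values here (`f_center`, `gain`, `q_min`, `q_max`) are hypothetical and chosen only to illustrate the shape of the characteristic.

```python
def vco_frequency(q, f_center=1000.0, gain=100.0, q_min=-0.5, q_max=0.5):
    """Output frequency of an idealized VCO for input q.

    All parameters are illustrative assumptions: f_center is the central
    frequency, gain the slope of the linear region, and [q_min, q_max]
    the input range outside which the characteristic saturates.
    """
    q_clamped = min(max(q, q_min), q_max)  # saturation at the input limits
    return f_center - gain * q_clamped     # larger q gives a lower frequency

print(vco_frequency(0.0))   # central frequency
print(vco_frequency(0.2))   # below the central frequency
print(vco_frequency(5.0))   # saturated at the lowest frequency
```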

As can be seen from Figure 4, when q(ϕ) is positive, the output signal g(t) is varied in the direction such that its phase lags relative to that of the reference f(t). When q(ϕ) is negative, g(t) is varied so that its phase is advanced. As long as the feedback characteristic of this loop is properly designed, the system converges to the stable equilibrium point ϕ = π/2, where q(ϕ) = 0. This is called the locked state. Note that the point ϕ = 3π/2, where q(ϕ) is also zero, is an unstable equilibrium point, and thus the system will not stay there in a real operating environment where various disturbances exist.
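The difference between the two equilibrium points can be illustrated with a simplified phase-domain model. This is only a sketch under stated assumptions: the low-pass filter is treated as ideal, so that the phase difference changes at a rate proportional to q(ϕ), and the loop gain, step size and function names are hypothetical.

```python
import math

def q_of_phi(phi):
    """Triangular correlation of two +1/-1 square waves with 50% duty ratio."""
    x = phi % (2 * math.pi)
    if x <= math.pi:
        return 1.0 - 2.0 * x / math.pi   # falls from 1 to -1 over [0, pi]
    return -3.0 + 2.0 * x / math.pi      # rises from -1 back to 1 over [pi, 2*pi]

def simulate_phase(phi0, gain=1.0, dt=0.01, steps=5000):
    """Euler integration of d(phi)/dt = gain * q(phi); returns the final phase."""
    phi = phi0
    for _ in range(steps):
        # Positive q lowers the output frequency, so the lag phi grows.
        phi += gain * q_of_phi(phi) * dt
    return phi % (2 * math.pi)

print(simulate_phase(0.1))                     # settles near pi/2
print(simulate_phase(3 * math.pi / 2 + 0.01))  # leaves 3*pi/2, settles near pi/2
```

A small perturbation around 3π/2 grows until the phase difference reaches the neighborhood of π/2, which is the behavior described above for a disturbed system.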

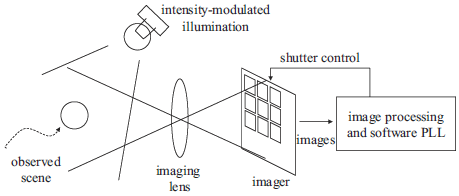

2.2. Imager-based PLL – A Simplified Case

In the proposed synchronization system, we take as the reference signal f(t) the sum of the incident light power received by all the pixels of the imager of the vision system. For simplicity, we assume for the moment that there is no background light, that is, all of the light that the imager receives originates from the modulated illumination. We also assume that the average brightness of the scene does not change too rapidly, so that the amplitude of f(t) can be regarded as constant even when some of the pixels are saturated. Then, without loss of generality, f(t) can be defined as a square wave with low value 0 and high value 1. The final assumption we make for the moment is that the photo integration time of the vision sensor is equal to its frame time. Relaxing or validating these assumptions is discussed later.

We also define, as the output signal of the PLL, a time function g(t) such that g(t) = 1 when the frame number index of the vision sensor is odd and g(t) = −1 when it is even. The signals f(t) and g(t) are illustrated in Figure 5. Note that the vision frame rate will be exactly twice the illumination modulation frequency when the PLL system is locked.

We formulate the time correlation of f(t) and g(t) as

$$q(t) = \frac{1}{T}\int_{t-T}^{t} f(\tau)\,g(\tau)\,d\tau,$$

where T denotes the length of the correlation time window, which is sufficiently longer than the vision frame time.

The main difference between this formulation and the standard theory described in Section 2.1 is that f(t) takes the values 1 and 0 instead of 1 and −1. This difference comes from the fact that light brightness cannot be negative. Nevertheless, the PLL system will behave in the same way as the standard one as long as g(t) has a 50% duty ratio, because

$$\frac{1}{T}\int_{t-T}^{t} f(\tau)\,g(\tau)\,d\tau = \frac{1}{T}\int_{t-T}^{t} \frac{f'(\tau)+1}{2}\,g(\tau)\,d\tau = \frac{1}{2T}\int_{t-T}^{t} f'(\tau)\,g(\tau)\,d\tau,$$

where f′(t) is the square wave with the values 1 and −1, and the term containing only g(τ) averages to zero. This is a common technique used when the correlation of optical signals is computed [12, 13].
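The equivalence between the 0/1 reference and the ±1 reference can be verified numerically. The sketch below is illustrative only: the function names are hypothetical, and the average is taken over exactly one period so that g(t) averages to zero.

```python
import math

def first_half(theta):
    """True during the first half of each period of a 50%-duty square wave."""
    return theta % (2 * math.pi) < math.pi

def correlations(phi, samples=10_000):
    """Return (q with f in {0, 1}, q with f' in {-1, 1}) over one period."""
    step = 2 * math.pi / samples
    q01 = qpm = 0.0
    for k in range(samples):
        theta = (k + 0.5) * step
        f01 = 1.0 if first_half(theta) else 0.0      # light cannot be negative
        fpm = 2.0 * f01 - 1.0                        # the +1/-1 counterpart f'
        g = 1.0 if first_half(theta - phi) else -1.0
        q01 += f01 * g
        qpm += fpm * g
    return q01 / samples, qpm / samples

for phi in (0.3, 1.0, math.pi / 2):
    q01, qpm = correlations(phi)
    print(f"phi = {phi:.3f}: q01 = {q01:+.4f}, qpm / 2 = {qpm / 2:+.4f}")
```

The two printed values agree for each phase, so the zero crossings, and hence the equilibrium points, are unchanged by using the non-negative reference.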

Unfortunately, we still cannot implement this time correlation computation as it is formulated. Most vision sensors output an image, which is the result of time integration of incident light over the frame time, only frame by frame, and therefore the reference value f(t) or the product f(t)g(t) at an arbitrary time instant is unavailable. However, by noting that g(t) is constant during one frame period, we can obtain the time correlation as

$$q(t) = \frac{1}{T}\sum_{i} (-1)^{i-1} F(i),$$

where the sum runs over the frames within the correlation window, i is the frame number index and F(i) is the sum of the pixel values obtained within the frame i.

This notation assumes that the correlation window length T is a multiple of the frame time, but we do not restrict ourselves to this case. In fact, we do not even execute integration over a fixed time window, but replace it with discrete-time summation and low-pass filtering. In this case, there is no explicit correlation time window; instead, the time constant of the employed low-pass filter plays the corresponding role.
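The replacement of the fixed window by summation and low-pass filtering might look like the following sketch. The text does not specify the filter, so a first-order recursive filter (exponential moving average) is assumed here; `update_correlation` and `alpha` are hypothetical names, with `alpha` setting the filter time constant.

```python
def update_correlation(q_prev, frame_sum, frame_index, alpha=0.05):
    """One filter update per frame.

    frame_sum stands in for F(i), the sum of pixel values of frame i.
    The sign plays the role of g(t): +1 for odd frames, -1 for even frames.
    The first-order filter and alpha are assumptions, not the paper's design.
    """
    sign = 1.0 if frame_index % 2 == 1 else -1.0
    return (1.0 - alpha) * q_prev + alpha * sign * frame_sum

# Hypothetical input: odd frames fully lit, even frames dark.
q = 0.0
for i in range(1, 401):
    frame_sum = 1.0 if i % 2 == 1 else 0.0
    q = update_correlation(q, frame_sum, i)
print(q)  # stays close to the mean signed frame sum of 0.5
```

A smaller `alpha` corresponds to a longer effective correlation window and less ripple, at the cost of a slower response.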

The whole procedure of the proposed time-correlation computation is depicted in Figure 6. The three horizontal axes, from top to bottom, stand for f(t), g(t) and a conceptual illustration of the imager output, respectively. The blue and red rectangles on the third axis show the integrated photocurrent amount in the pixels in odd and even frames, respectively, and the green vertical arrows show the output from the imager, namely the sum of the pixel values over all the pixels, obtained at the ends of frames, which is proportional to the heights of the blue/red triangles at the corresponding frames. The outputs at odd and even frames are multiplied by 1 and −1, respectively, and fed to the summation and low-pass filtering process.

The resulting correlation is then used to adjust the frame time length of the vision sensor; this process plays the role of the voltage-controlled oscillator in a PLL. It makes the frame time length equal to half of the illumination modulation period when the correlation is zero, longer when the correlation is positive, and shorter when it is negative.
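The closed loop formed by the frame-wise correlation and the frame time adjustment can be illustrated with an idealized simulation. This is a sketch under the simplifying assumptions of this section (no background light, integration over the whole frame); the first-order filter, the values of `gain` and `alpha`, and all function names are hypothetical.

```python
def lit_time(a, b, period):
    """Time within [a, b] during which a 0/1 square-wave illumination is on."""
    def cumulative(t):
        full, rest = divmod(t, period)
        return full * period / 2 + min(rest, period / 2)
    return cumulative(b) - cumulative(a)

def simulate_lock(period=1.0, gain=0.05, alpha=0.05, frames=2000):
    """Return a list of (frame_time, lit_time) pairs, one per frame."""
    t, q = 0.0, 0.0
    history = []
    for i in range(1, frames + 1):
        # Frame time is half the modulation period when q is zero,
        # longer when q is positive and shorter when q is negative.
        frame_time = 0.5 * period * (1.0 + gain * q)
        f_sum = lit_time(t, t + frame_time, period)  # stands in for F(i)
        sign = 1.0 if i % 2 == 1 else -1.0           # g(t) for odd/even frames
        q = (1.0 - alpha) * q + alpha * sign * f_sum / (0.5 * period)
        history.append((frame_time, f_sum))
        t += frame_time
    return history

history = simulate_lock()
first_time, first_lit = history[0]
last_time, last_lit = history[-1]
print(first_lit / first_time)  # first frame is fully lit
print(last_lit / last_time)    # about half of the frame is lit once locked
```

Starting with the frames aligned to the illumination, the loop lengthens the frames until each frame straddles an on/off transition, which corresponds to the π/2 phase shift of the locked state.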

Image sensor technologies that compute the time correlation between incident light brightness and given signals by introducing multiplication hardware within each pixel have been presented in the literature [12, 13]. Unlike these prior proposals, our method needs no dedicated pixel structure, and thus off-the-shelf image sensors can be used. This is possible because our application does not require per-pixel correlation results but only their sum over all the pixels, which allows us to execute the multiplication outside the pixel array.

To implement our algorithm, we need a means to precisely adjust the frame time length of the vision sensor in real time. Many industrial cameras offer functions to control the shutter by external trigger signals, in which case implementing circuitry to adjust the frame time is easy. Some cameras may even provide built-in functions to control the frame time by software, and such functions can be utilized unless they come with severe processing delays. If there is no means to adjust the frame time at all, our method admittedly cannot be applied, and the virtual synchronization will be the only option.

2.3. Effect of Background Light

So far, we have assumed that there is no background light, which does not hold in most realistic situations. In the presence of background light, the incident light received by the imager is the sum of the component originating from the modulated illumination and the component originating from other light sources. Note that the former is proportional to the brightness of the modulated illumination, whereas the latter is independent of it.

Recalling that our algorithm always takes the difference between the imager outputs of two successive frames, we can expect the background light component to be canceled unless the scene changes too rapidly. This discussion is validated in the experiments section below. When most of the pixels are saturated by background light, the proposed method is, of course, unable to carry out synchronization, because the modulated illumination cannot provide any information. Note that this situation, in which most of the pixels are saturated, prohibits almost any kind of visual information processing.
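The cancellation can be written out explicitly. In the following sketch, S(i) and B are symbols introduced here for illustration only: S(i) denotes the part of the imager output F(i) due to the modulated illumination, and B denotes the background contribution, assumed to be the same in two successive frames of equal length.

```latex
\begin{aligned}
F(i) &= S(i) + B, \\
F(2k-1) - F(2k) &= \bigl(S(2k-1) + B\bigr) - \bigl(S(2k) + B\bigr) = S(2k-1) - S(2k).
\end{aligned}
```

Under this assumption the signed sum fed to the low-pass filter is unaffected by B; a residual term appears only to the extent that the background changes between the two frames.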

2.4. Effect of Photo Integration Time Shorter than Frame Time

We have also assumed so far that the imager accepts incident light over the whole frame time. In some cases, this is not true and mechanical or electronic shutters are introduced to limit the photo integration time to be shorter.

The proposed algorithm works well even in this shorter integration time case. To illustrate this, the reference and output signals in this case are shown in Figure 7, and their correlation is shown in Figure 8. The output signal g(t) is redefined so that it takes positive or negative values only within the integration time and zero otherwise. The phase difference ϕ between f(t) and g(t) is redefined so that ϕ is zero if the midpoint of a period in which the modulated illumination is on coincides with the midpoint of the integration time of the corresponding frame, as shown in Figure 7.

As can be seen from Figure 8, the point ϕ = π/2, where q(ϕ) = 0, is the only stable equilibrium point even in this case. Therefore, the proposed algorithm will work without any modification. This discussion is also validated in the simulation and experiment sections later.
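The zero crossing at ϕ = π/2 for a shortened integration time can be checked numerically. The sketch below makes several assumptions for illustration: the integration window is centered within each frame, `ratio` is the hypothetical fraction of the frame time during which light is integrated, and ϕ shifts the integration windows later relative to the illumination.

```python
import math

TWO_PI = 2 * math.pi

def wrap(x):
    """Wrap an angle to the interval [-pi, pi)."""
    return (x + math.pi) % TWO_PI - math.pi

def correlation_short(phi, ratio=0.5, samples=8_000):
    """Average of f*g over one period when g is nonzero only while integrating."""
    half_width = ratio * math.pi / 2  # half of the integration time, in phase
    step = TWO_PI / samples
    total = 0.0
    for k in range(samples):
        theta = (k + 0.5) * step
        f = 1.0 if theta < math.pi else 0.0  # illumination on in the first half
        if abs(wrap(theta - (math.pi / 2 + phi))) < half_width:
            g = 1.0    # inside the integration window of the odd frame
        elif abs(wrap(theta - (3 * math.pi / 2 + phi))) < half_width:
            g = -1.0   # inside the integration window of the even frame
        else:
            g = 0.0    # shutter closed
        total += f * g
    return total / samples

for phi in (0.0, math.pi / 4, math.pi / 2, math.pi):
    print(f"phi = {phi:.3f} rad, q = {correlation_short(phi):+.3f}")
```

With `ratio = 0.5` the correlation stays flat while the integration window lies entirely inside the lit interval, then decreases through zero at ϕ = π/2, so the sign of the feedback around the lock point is the same as in the full-integration case.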

It should be emphasized that the modulated, that is, blinking, illumination does not disturb the visual observability of scenes. Because the vision sensor locks with a π/2 phase shift, half of every frame time is always illuminated, and the accumulated incident light within one frame time is constant as long as the system is locked. Tracking of the locked PLL state can therefore proceed simultaneously with visual measurement for applications.