1. Introduction

One of the most common operations in ship maintenance is blasting, which consists in projecting a high-pressure jet of abrasive matter onto a surface to remove adherences or traces of rust. The objective of this task is to maintain hull integrity, guarantee navigational safety conditions and assure that the surface offers little resistance to the water in order to reduce fuel consumption. This can be achieved by grit blasting [

1] or ultra high pressure water jetting [

2]. In most cases these techniques are applied using manual or semi-automated procedures with the help of robotized devices [

3]. In either case defects are detected by means of human operators; this is therefore a subjective task and hence vulnerable to cumulative operator fatigue and highly dependent on the experience of the personnel performing the task.

Various authors have recently addressed the development of sensor systems supported by the infrastructure supplied by computer vision systems. The possible fields of application are quite diverse, including automatic robot welding [

4], 2-D position measuring [

5], vehicle applications [

6], optical measuring of objects [

7], distance estimation [

8] and others. Vision systems have likewise been used to detect defects on large metal surfaces. There are therefore numerous references in the literature [

9] that meet sets of requirements which differ depending on the application (type of defects, volume of defects to be detected per time unit, precision, robustness,

etc.). In systems of this kind controlled lighting systems are commonly used to highlight the defects and thus simplify the subsequent phases of image pre-processing and segmentation. However, such solutions are not acceptable in the case of inspection of large surfaces under variable and non-uniform lighting conditions as in automatic detection of surface defects in ship hulls in the open air. There is therefore a need to define a model that will make it possible to detect defects of this kind in real time with a high rate of accuracy. Such a method must be implemented in a system that is robust enough to be used in an aggressive environment such as a shipyard.

This paper proposes a sensor system for detecting defects in ship hulls which is simple enough to be implemented in such a way as to meet the real-time requirements for the application. This sensor considers a local thresholding method, which is based on the automatic calculation of a global reference value that has been denominated Histogram Range for Background Determination (HRBD). This value is subsequently used to calculate the local threshold of each area of the image, making it possible to determine whether or not a pixel belongs to a defect. The method has been tested against other classic thresholding methods and has proved highly stable in variable lighting conditions. At the same time, the proposed sensor system has been implemented and validated in real conditions at a shipyard in Spain.

Section 2 details the constituent elements of the sensor system and the sequence followed in the processing of the images captured. Section 3 details the defect detection method that has been developed. Section 4 presents the implementation of the sensor system developed for the shipyard case study, the measurements considered to assess the performance of the method, a comparison of the results with those of other common thresholding methods, and the results of the sensor system tests at the shipyard. Finally, Section 5 presents our conclusions.

2. Sensor System

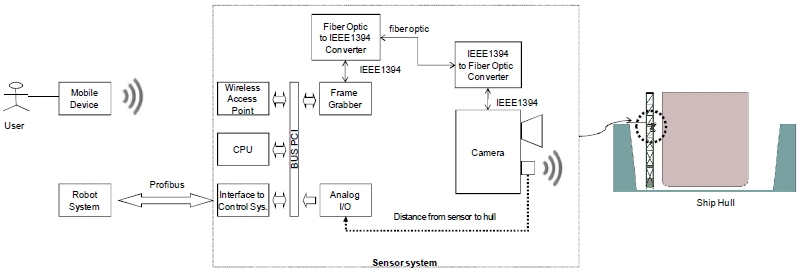

The sensor system proposed in this article (

Figure 1) operates on the basis of image acquisition via a digital camera equipped with a wide-angle lens. The camera is placed so that its optical axis is perpendicular to the plane of the surface to be inspected. The distance between that plane and the camera is measured with the help of an ultrasound sensor with a working range of 40 to 300 cm. The images obtained with this lens are slightly distorted (

Figure 2(a)), and therefore they have to be corrected with a camera model that includes the intrinsic (focal distance, image centre, radial and tangential distortion of the lens) and extrinsic (rotation matrix and translation vector) parameters of the camera. These parameters are derived by a one-off calibration in the workshop before the sensor system is put into operation at the shipyard. The software used for this purpose was the Toolbox for Matlab Caltech [

10] which is coming to be one of the commonly used calibration software. This toolbox implements, inter alia, the method developed by Zhang [

11]. That method requires the camera to observe a flat pattern from several (at least two) viewpoints, so that both the camera and the flat pattern can move freely without the need to identify that movement.

Once the sensor system has been calibrated, it is ready to operate in real conditions at the shipyard. From that point on, the sensor system corrects the distorted images captured by the camera in real time (

Figure 2(b)). That correction factors in the distance detected by the ultrasound sensor so that the camera model derived from the calibration procedure is loaded for each distance. The defect detection method proposed in this article is applied to the corrected image. This method makes it possible to obtain another image in which all the detected defects are marked (

Figure 2(c)). The position of the defects in the image and the parameters derived from the calibration are used to find the 3D coordinates of the points on the hull that the robot has to access for cleaning.

All cleaning methods (Grit Blasting, UHP Water Jetting) are based on the projection of a jet of grit or water of a given width, and so the image is divided into cells (

Figure 2(d)). The size of these cells indicates the area that the jet of grit or water is capable of cleaning when projected on to the vessel’s surface. In this way the sensor system sends back a matrix of MxN cells (

Figure 2(e)) where there may be defects and grit blasting may be necessary. The cell size is a user-defined input parameter. This is calculated from the distance between the blasting nozzle and the ship hull, the speed of the grit jet and the rate of cleaning head movement.

Finally, it is important to note that the images obtained in this way at the shipyard are typically captured in the open air and under highly variable atmospheric and lighting conditions. This is an aspect that will very much influence the method that is designed for defect detection as described in the following section.

3. Method for Defect Detection: UBE

The proposed method for the detection of defects has been denominated UBE (thresholding based on Unsupervised Background Estimation) and has been divided into two stages. In the first stage a global calculation is carried out on the images to estimate a parameter that has been called a Histogram Range for Background Determination (HRBD). This will serve as a reference during the local calculation. In the second stage, using this parameter as a starting point, the image is binarized following the steps detailed below.

3.1. First Stage. Determination of HRBD and Sensitivity

The proposed method is inspired by an algorithm described by Davies [

12] and used in systems of document exploration for optical character recognition. Davies’ method executes the segmentation of images by determining a threshold once the percentage of existing characters with regard to the background is known. This algorithm is not suitable for application to images where it is not possible to determine the foreground/background ratio beforehand. The method proposed in this article, thresholding based on Unsupervised Background Estimation (UBE), is an improvement on the method described by Davies in that it makes it possible to automatically estimate the foreground/background ratio by analysing the histogram of the image.

Figure 3 shows two typical situations that can arise with images of ship hulls taken in different conditions. The first of these (T1) was taken at the shipyard with a solar radiation level of 385 W/m

2 and the second (T16) with a level of 252 W/m

2. We can observe the following:

After performing these observations, the greater part of the background was judged to be situated between points Vi and Vd. The difference between these two values has been called the HRBD-Histogram Range for Background Determination.

Once the HRBD has been calculated, a sensitivity value (S) is calculated so that the calculation of the local threshold in the second stage of the method can be fine-tuned; this value is a ratio determined by the number of histogram entries different from zero (Nxs) divided by the HRBD (see

Figure 3(h)). This value computes the ratio between the total size of the histogram with values different from zero and the estimated size of the background, HRBD, and is calculated according to the following Equation:

Figure 4(b,d,f,h) shows graphically the results of calculating the HRBD = Vd − Vi on the histograms of four images from the case study (Images T1, T6, T16 and T36). In the figures, note how the points that allow the calculation of the HRBD are located on both sides of the most significant distribution. An intensive search for the significant minima both to the left (Vi) and to the right (Vd) of the main distribution was performed to determine the HRBD automatically, in which a significant minimum was taken to be the nearest minimum to the left or right, respectively, of the maximum of the histogram. Also shown are the numeric values derived from calculation of the HRBD and the sensitivity.

3.2. Second Stage. Segmentation of the Image Pixels

Once the HRBD has been calculated, the image is scanned pixel by pixel to determine which pixel belongs to the background and which does not. To do this, the method analyses the neighborhood of each pixel; this neighborhood is formed by a window of size k × k centred on the pixel in question, k being a natural odd number greater than one and smaller than the dimensions of the image. The neighborhood analysis determines the value of the local threshold (t) for the k pixel binarization.

To determine the local threshold of each pixel, first the range of the neighborhood of the pixel is determined as the difference between the maximum and the minimum of the Local Grey Level (LGL):

If the range is equal to or lower than the HRBD, the threshold is fixed by:

If the range is greater than the HRBD, the threshold is calculated by:

If the current value of the pixel is equal to or greater than this threshold it is considered to be a background pixel (grey level 255); otherwise it is considered as a pixel associated with the defect and is allotted a grey level value of zero.

Figure 5 details the relationship between r and HRBD that implies when a defect is present or not.

Finally, the binarized image is processed using an erosion filter followed by a dilation filter to reduce the noise, which improves the performance for foreground (defects) segmentation. The pseudocode for the algorithm followed by the sensor system in operation is depicted in

Figure 6.

4. Sensor System Validation

4.1. Sensor System Implementation

The sensor system has been implemented on a Pentium-IV at 2 GHz with a Matrox Meteor II/1394 card. This card is connected to the microprocessor via a PCI bus and is used as a frame-grabber. For that purpose the card has a processing node based on the TMS320C80 DSP from Texas Instruments and the Matrox NOA ASIC. In addition, the card has a firewire input/output bus (IEEE 1394) which enables it to control a half-inch digital colour camera (Sony DFW-SX910) equipped with a wide-angle lens (Cosmiscar H416 4, 2 mm). The software development environment used to implement the system software modules was the Visual C++ programming language powered by the Matrox Imaging Library v8.0. The system also has a Siemens CP5611 card which acts as a Profibus-DP interface for connection with the corresponding robotized blasting system. A Honeywell sensor is used to measure the distance to the ship by ultrasound, with a range of 200–2,000 mm and an output of 4–20 mA. User access to the sensor system is by means of an industrial PDS (Mobic T8 from Siemens) and a wireless access point. Among other functions, the software that has been developed allows the operator to: (1) enter the system configuration parameters, (2) visualize the possible cleaning points for validation by the operator before blasting commences, and (3) calibrate the sensor system.

4.2. Validation Environment

The proposed sensor system was assessed at the Navantia shipyard in Ferrol (Spain) on a robotized system used to perform automatic spot-blasting. This kind of blasting consists in cleaning only areas of the ship hull that are in poor condition rather than the entire hull. This operation accounts for 70% of all cleaning work carried out at that shipyard. The robotized system (

Figure 7) consists of a mechanical structure divided into two parts: primary and secondary. The primary structure holds the secondary structure (XYZ table), which supports the cleaning head and the sensor system. More information regarding this system can be found in [

13].

With the help of this platform, 200 images of the ship hull were taken, similar to T1 and T16 in

Figure 3. In this way a catalogue was compiled of typical surface defects as they appear before grit blasting. Images were acquired at different points in time and were classified into four time intervals: A (8 a.m. to 10 a.m.), B (10 a.m. to 1 p.m.), C (1 p.m. to 4 p.m.) and D (4 p.m. to 7 p.m.). In this way it was possible to achieve a complete analysis of the sensor system’s performance, which included its behaviour in variable lighting conditions.

The images illustrate three main features of the lighting conditions that the algorithms must deal with: (1) lighting conditions vary as the sun’s position and weather conditions change in the course of the day, (2) lighting is not uniform because of the position of the hull relative to the sun, and (3) there is a difference in brightness between the upper and the lower levels of the dry-dock.

4.4. System Sensor Appraisal

In order to check the quality of the proposed segmentation algorithm (UBE) for the sensor system, two alternative solutions were also implemented, based on two well-known classic thresholding algorithms. The first of these (Alg1) is based on the method of Otsu [

17] and the second (Alg2) on the method of Niblack [

18].

Otsu’s method considers “as optimal” the grey level that maximizes the variance between classes. For this purpose it considers the use of a set of theresholds. In this case study we consider two classes present in the image: the background and defects, so being only necessary the calculation of one global threshold. Niblack’s method locally adapts the threshold according to the local mean and standard deviation (calculated in windows of k × k pixels size).

The three solutions were applied to the catalogue of 200 images that had been taken at the shipyard (one of these is shown in

Figure 8(a)). The result was 3 × 200 images in which the defects had been segmented using each of the three methods considered (one of them is shown in

Figure 8(b), corresponding to the UBE method). To apply the metrics described above, human inspectors were needed to segment each of the catalogue images manually (one of these is shown in

Figure 8(c)).

Table 1 shows the results after applying each of the proposed algorithms (Alg1, Alg2 and UBE) to the catalogue of 200 images. The average values and the yield were calculated for each of the three metrics considered (ME, RAE and EMM). The average value of the three metrics and the average yield were also calculated. As the table shows, the best yields were achieved with the proposed UBE method (see shaded figures).

Figure 9 shows the mean yields for each time interval (A, B, C and D). Note how the three algorithms show maximum efficiency when lighting conditions are best (time intervals A and B). As lighting conditions get worse, the performance of both Alg1 and Alg2 deteriorates rapidly compared with UBE, which maintains its performance in the worst conditions (time interval D). We may conclude that the UBE algorithm presents the best stability when faced with changes in lighting conditions.

4.5. Results for the Sensor System Incorporated in the Cleaning Robot

To validate the sensor system in real working conditions, it was used in the blasting of an oil tanker having a length of 120 m and a height of 12 m, using pyrite slag as grit. The target surface, with defects evenly distributed over the entire length of the hull and covering approximately 30% of the total surface, was divided into 360 panels 2 m wide by 2 m high.

Before the tests began, an inspector examined the areas that had to be blasted in each panel, with the aid of a camera (

Figure 10(a,b) shows an example of a panel). An operator then blasted half of the panels and the other half was blasted by the robotized system equipped with the proposed sensor system.

Figure 10(c) shows the segmented image calculated by the sensor systems and used in the process that determined the XYZ points on the hull which contained defects.

Figure 10(d) shows the discrepancies between the calculations made by the sensor system and those of the inspector. It identifies the cells marked by the system as defective when they were not (Type I error) and the cells marked free of defects when in the inspector’s opinion they required treatment (Type II error).

The panels that had been blasted by the operators were also inspected to identify discrepancies with the inspector’s analysis.

Table 2 shows the average number of cells cleaned by the operator and by the sensor system with Type I and Type II errors for the 360 panels indicated above.

As we can see, the sensor system produced better results as regards false positives—i.e., cells marked as defective when they are not (Type I error). This is essentially because the operator tends to blast larger areas than are necessary, and moreover he is less able to control the cut-off of the grit jet. On the other hand, the sensor system identified more false negatives (Type II error) than the operator. This difference was not very significant and is quite acceptable in view of the clear advantages offered by the sensor system as regards Type I errors.

5. Conclusions

This paper has presented a sensor system based on an original thresholding method (UBE), especially suited for image segmentation under variable and non-uniform lighting conditions. A comparison of the method proposed for detection of defects with other classic thresholding methods shows that it achieves a higher performance. The sensor system incorporates a robotized system for cleaning ship hulls, making it possible to fully automate grit blasting. The results as regards to reliability were very similar to those achieved with human operators, while faster (15–25%) inspection was achieved and the consequences of operator fatigue minimized. The proposed sensor system can readily be used in other robotized cleaning systems using either grit or pressurized water.

Acknowledgments

The work submitted here has been conducted within the framework of the “EFTCoR: Environmentally Friendly and Cost-Effective Technology for Coating Removal (EFTCoR)” project. 5th Framework Programme of the European Community (ref GRD2-2001-50004). It has also received funding from the Spanish government (national plan of I+D+I, PET 2008-0131) and from the Government of the Region of Murcia (Séneca Foundation).

References and Notes

- Momber, A. Blast Cleaning Technologies; Springer Verlag: Berlin Heidelberg, Germany, 2008; pp. 109–164. [Google Scholar]

- Le Calve, P. Qualification of Paint Systems after UHP Water Jetting and Understanding the Phenomenon of “Blocking” of Flash Rusting. J. Protec. Coat. Lin 2007, 8, 13–26. [Google Scholar]

- Ortiz, F; Alonso, D; Pastor, P; Alvarez, B; Iborra, A. Bioinspiration in Robotics. Walking and Climbing Robots; Habib, MK, Ed.; I-Tech Education and Publishing: Rijeka, Croatia, 2007; Chapter 12,; p. 187. [Google Scholar]

- Park, J; Lee, S; Lee, IJ. Precise 3D Lug Pose Detection Sensor for Automatic Robot Welding Using a Structured-Light Vision System. Sensors 2009, 9, 7550–7565. [Google Scholar]

- Luna, C; Lázaro, J; Mazo, M; Cano, A. Sensor for High Speed, High Precision Measurement of 2-D Positions. Sensors 2009, 9, 8810–8823. [Google Scholar]

- Hannan, M; Hussain, A; Samad, S. System Interface for an Integrated Intelligent Safety System (ISS) for Vehicle Applications. Sensors 2010, 10, 1141–1153. [Google Scholar]

- Chiabrando, F; Chiabrando, R; Piatti, D; Rinaudo, F. Sensors for 3D Imaging: Metric Evaluation and Calibration of a CCD/CMOS Time-of-Flight Camera. Sensors 2009, 9, 10080–10096. [Google Scholar]

- Lázaro, J; Cano, A; Fernández, P; Luna, C. Sensor for Distance Estimation Using FFT of Images. Sensors 2009, 9, 10434–10446. [Google Scholar]

- Zheng, H; Kong, LX; Nahavandi, S. Automatic Inspection of Metallic Durface Fefects Using Genetic Algorithms. J. Mater. Process. Tech 2002, 125, 427–433. [Google Scholar]

- Bouguet, JY. Camera Calibration Toolbox for Matlab. Available online: http://www.vision.caltech.edu/bouguetj/calib_doc/ (accessed on 10 March 2010).

- Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern. Anal 2000, 22, 1330–1334. [Google Scholar]

- Davies, ER. Machine Vision: Theory Algorithms Practicalities, 3rd ed; Elsevier: Riverside, CA, USA, 2005; pp. 103–129. [Google Scholar]

- Iborra, A; Pastor, J; Alonso, D; Alvarez, B; Ortiz, FJ; Navarro, PJ; Fernández, C; Suardiaz, J. A Cost-Effective Robotic Solution for the Cleaning of Ships’ Hulls. Robotica 2010, 28, 453–464. [Google Scholar]

- Zhang, YJ. A Review of Recent Evaluation Methods for Image Segmentation. Proceedings of 6th International Symposium on Signal Processing and its Applications, Kuala Lumpur, Malaysia, 13–16 August 2001; pp. 148–151.

- Abak, AT; Baris, U; Sankur, B. The Performance Evaluation of Thresholding Algorithms for Optical Character Recognition. Proceedings of the 4th International Conference on Document Analysis and Recognition, Ulm, Germany, August 1997; pp. 697–700.

- Sezgin, M; Sankur, B. Survey over Image Thresholding Techniques and Quantitative Evaluation. J. Electron. Imaging 2004, 1, 146–168. [Google Scholar]

- Otsu, NA. Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man. Cybren 1978, 8, 62–66. [Google Scholar]

- Niblack, W. An Introduction to Digital Image Processing; Strandberg Publishing Company: Birkeroed, Denmark, 1986; pp. 115–116. [Google Scholar]

© 2010 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).