1. Introduction

Visual servoing is a well-known approach for guiding a robot using visual information. The two main types of visual servoing techniques are position-based and image-based [1]. The first uses 3-D visually derived information when making motion control decisions. The second performs the task using information obtained directly from the image. However, the interaction matrix employed in these visual servoing systems requires knowledge of several camera parameters and of the depth of the image features.

A typical approach to determine the depth of a target is the use of multiple cameras. The most commonly applied configuration using more than one camera is stereo vision (SV). In this case, in order to be able to calculate the depth of a feature point by triangulation, the correspondence of this point in both cameras must be assured.

In this paper, the use of 3D time-of-flight (ToF) cameras is proposed to obtain the 3D information required in visual servoing approaches. These cameras provide range images which give depth measurements of the visual features. In recent years, 3D vision systems based on the ToF principle have gained importance compared to SV. With a ToF camera, the illumination and observation directions can be collinear; therefore, this technique does not produce incomplete range data due to shadow effects. Furthermore, SV systems have difficulty estimating the 3D information of planes such as walls or roadways, because they cannot find the corresponding physical point of the observed 3D space in both cameras. Hence, the 3D information of that point cannot be calculated by triangulation. Another standard technique for obtaining 3D information is the use of laser scanners. The advantages of ToF cameras over laser scanners are their high frame rates and the compactness of the sensor. These aspects have motivated the use of a ToF camera to obtain the 3D information required to guide the robot.

Some previous works have addressed the guidance of a robot by visual servoing using ToF cameras. Among these works, a visual servoing system using PSD (Position Sensitive Device) triangulation for PCB manufacturing is presented in [2]. In [3], a position-based visual servoing scheme is described to track a moving sphere using a pan-tilt unit; in that paper, a ToF camera manufactured by CSEM is used. A similar approach is described in [4] to determine object positions by means of an eye-to-hand camera system. Unlike these previous approaches, in this paper the range images are not used directly to estimate the 3D pose of the objects in the workspace. Instead, a new image-based visual servoing system which integrates range information in the interaction matrix is presented to perform the robot guidance. Another advantage of the proposed system over the previous ones is the possibility of performing the camera calibration during the task. To do so, the visual servoing system uses the range images not only to determine the depths of the features but also to adjust the ToF camera parameters during the task.

When a ToF camera is used, some aspects must be taken into consideration, such as large fluctuations in precision caused by external interfering factors (e.g., sunlight) and scene configurations (i.e., distances, orientations and reflectivity). These influences produce systematic errors which must be handled. Specifically, the distance computed from the range images varies strongly with the integration time parameter. This paper presents a method for the online adaptation of the integration time of ToF cameras. This online adaptation is necessary to capture the images under the best conditions regardless of the changes in distance (between camera and objects) caused by the movements of the camera when it is mounted on a robotic arm. Previous works have addressed ToF camera calibration [5–7]. These works estimate the camera parameters and distance errors when static scenes are observed, and they consider a fixed distance between the camera and the objects. Therefore, they cannot be applied in visual servoing tasks where the camera tracks a given trajectory. In this case, camera parameters such as the integration time must be modified in order to observe the scene optimally. To do this, several previous works adapt the camera parameters, such as the integration time, during the task. In [8], a CSEM-Swissrange camera is employed for the navigation of a mobile robot in an environment with different objects. That work automatically estimates the value of the integration time according to the intensity pattern obtained by the camera. However, this parameter depends on illumination and reflectance conditions. To solve this problem, a PMD camera is also used in [9] for mobile robot navigation, with an algorithm based on the amplitude parameter. In contrast with [4], the range of working distances analyzed is between 0.25 m and 1 m for the application of visual servoing.

This paper is organized as follows. In Section 2, a visual servoing approach for guiding a robot using an eye-in-hand ToF camera is presented. Section 3 describes the operating principle of ToF cameras and the PMD camera employed. In Section 4, an offline camera calibration approach for computing the required integration time from an amplitude analysis is shown. In Section 5, an algorithm for updating the integration time during the visual servoing task is described. In Section 6, experimental results confirm the validity of the visual servoing system and the calibration method. The final section presents the main conclusions.

2. Visual Servoing Using Range Images

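A visual servoing task can be described by an image function, $\mathbf{e}_t$, which must be regulated to 0:

$$\mathbf{e}_t = \mathbf{s} - \mathbf{s}^{*} \qquad (1)$$

where $\mathbf{s} = (\mathbf{f}_1, \mathbf{f}_2, \ldots, \mathbf{f}_M)$ is an M × 1 vector containing the M visual features observed at the current state ($\mathbf{f}_i = (f_{ix}, f_{iy})$), while $\mathbf{s}^{*}$ denotes the values of the visual features at the desired state,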

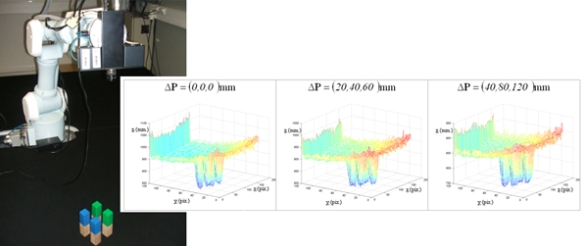

i.e., the image features observed at the desired robot location. In Figure 1(a) the eye-in-hand camera system is shown. A PMD19K camera, which acquires grayscale images of 160 × 120 pixels, is located at the end-effector of a 7 d.o.f. Mitsubishi PA-10 robot. In Figure 1(b), an example of a visual servoing task is represented. This figure shows the initial and desired image features from the camera point of view.

$\mathbf{L}_s$ represents the interaction matrix, which relates the variations in the image to the variations in the camera pose [1]:

$$\dot{\mathbf{s}} = \mathbf{L}_s \, \dot{\mathbf{r}} \qquad (2)$$

where $\dot{\mathbf{r}}$ represents the camera velocity.

By imposing an exponential decrease of $\mathbf{e}_t$ ($\dot{\mathbf{e}}_t = -\lambda_1 \mathbf{e}_t$), it is possible to obtain the following control action for a classical image-based visual servoing:

$$\mathbf{v}_c = -\lambda_1 \hat{\mathbf{L}}_s^{+} \mathbf{e}_t \qquad (3)$$

where $\lambda_1 > 0$ is the control gain, $\hat{\mathbf{L}}_s^{+}$ is the pseudoinverse of an approximation of the interaction matrix and $\mathbf{v}_c$ is the eye-in-hand camera velocity obtained from the control law in order to continuously reduce the error $\mathbf{e}_t$. $\hat{\mathbf{L}}_s^{+}$ is chosen as the Moore-Penrose pseudoinverse of $\hat{\mathbf{L}}_s$ [1]. In order to completely define the control action, the interaction matrix for the visual features extracted from the range images is derived in the following paragraphs.
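The step from the imposed exponential decrease to the control action can be made explicit. The following is a short derivation sketch, assuming that the desired features are constant, that the camera velocity applied to the robot is $\mathbf{v}_c = \dot{\mathbf{r}}$, and that only an estimate $\hat{\mathbf{L}}_s$ of the interaction matrix is available:

```latex
\begin{aligned}
\dot{\mathbf{e}}_t &= \dot{\mathbf{s}} = \mathbf{L}_s \mathbf{v}_c
  && \text{(constant desired features, } \dot{\mathbf{r}} = \mathbf{v}_c\text{)} \\
\mathbf{L}_s \mathbf{v}_c &= -\lambda_1 \mathbf{e}_t
  && \text{(imposed exponential decrease)} \\
\mathbf{v}_c &= -\lambda_1 \hat{\mathbf{L}}_s^{+} \mathbf{e}_t
  && \text{(least-squares solution using the estimate } \hat{\mathbf{L}}_s\text{)} \\
\dot{\mathbf{e}}_t &= -\lambda_1 \mathbf{L}_s \hat{\mathbf{L}}_s^{+} \mathbf{e}_t
  && \text{(resulting closed loop)}
\end{aligned}
```

The last line shows that the closed-loop behaviour depends on the product $\mathbf{L}_s \hat{\mathbf{L}}_s^{+}$, which is why the quality of the approximation of the interaction matrix matters for stability.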

First, the interaction matrix will be calculated when only one image feature $(f_x, f_y)$ is extracted. The transformation between the range image $I(i,j)$ and 3D coordinates (relative to the camera position) is given by [10]:

where $f$ is the camera focal length, $s_x$ and $s_y$ are the pixel sizes in the x and y directions, and the normalized pixel coordinates are expressed relative to the position $(u_0, v_0)$ of the optical center on the sensor array.

To obtain the interaction matrix, the intrinsic parameters $\xi = (f_u, f_v, u_0, v_0)$ are considered, where $f_u = f \cdot s_x$ and $f_v = f \cdot s_y$. Therefore, considering these intrinsic parameters, Equation (4) is equal to:

From (5) the coordinates of the image feature can be obtained as:

The time derivative of the previous equation is:

Considering the camera velocity divided into translational and rotational components, the latter being $(\dot{\alpha}_C, \dot{\beta}_C, \dot{\gamma}_C)$, the following expression can be obtained from Equation (7):

Developing the previous equation, an expression which relates the time derivative of the image features with the camera translational and rotational velocity can be obtained:

where:

The matrix obtained in Equation (9) is the interaction matrix, $\mathbf{L}_s$; therefore, $\dot{\mathbf{s}} = \mathbf{L}_s \cdot \dot{\mathbf{r}}$. The pseudoinverse of the interaction matrix derived in (9) is used in the control action (3). In this last equation, an approximation of the interaction matrix is considered due to the need to estimate the camera intrinsic parameters, $\xi$. If M visual features are extracted from the image, the interaction matrix is obtained as $\mathbf{L}_s = [\mathbf{L}_{s1}\ \mathbf{L}_{s2}\ \ldots\ \mathbf{L}_{sM}]^T$, where $\mathbf{L}_{si}$ is the interaction matrix determined in (9) for a single feature.

Several previous works have studied the stability of image-based visual servoing. In applications with commercial robots, the complete dynamic model of the robot is not provided. In these cases, the system stability is deduced from kinematic properties [11–14]. Reference [1] states that local asymptotic stability can be ensured when the number of rows of the interaction matrix is greater than 6. However, global asymptotic stability cannot be ensured. As indicated in [1], to ensure local stability, the desired visual features must be close to the current ones. Furthermore, the approximation $\hat{\mathbf{L}}_s$ and the true interaction matrix $\mathbf{L}_s$ must be equal or very similar. To do so, the camera depth and intrinsic parameters must be correctly computed. The algorithm in [15] has been used to estimate the camera intrinsic parameters. In addition, the accurate determination of the depth is one of the main problems; it is addressed in the following sections.

3. Analysis of the Distance Measurement Computed with the ToF Camera

In this section, an analysis of the behaviour of ToF cameras is provided. This analysis helps to define the methods for improving the depth measurement used in the visual servoing system. A PMD19K camera has been used in this analysis. The PMD19K camera contains a Photonic Mixer Device (PMD) array with a size of 160 × 120 pixels. It is based on CMOS technology and the time-of-flight (ToF) principle.

There are other similar cameras based on the same principle and on CMOS technology, such as the CamCube 2 or 3 from PMD-Technologies and the SR2, SR3000 or SR4000 from CSEM-Technologies. The specifications and a comparison of the behaviour of these cameras are available in [8] and [16], respectively. The PMD19K works with near-infrared (NIR) light with a wavelength of 870 nm and can capture up to 15 fps with a depth resolution of 6 mm. Furthermore, in the experiments presented here, the camera is connected by Ethernet and programmed through an SDK for Windows, although it can also be connected through a FireWire interface and programmed under Linux. The ToF camera technology is based on the principle of modulation interferometry [6,16]. The scene is illuminated with NIR light (the PMD19K module has a default modulation frequency of ω = 20 MHz) and this light is reflected by the objects in the scene. The difference between the emitted and reflected signals causes a phase delay which is detected for each pixel and used to estimate the distance value. Thus, the ToF camera provides depth information of dynamic or static scenes irrespective of the object's features: intensity, depth and amplitude data are provided simultaneously for each pixel of each captured image. The intensity represents the grayscale information, the depth is the distance value calculated within the camera and the amplitude is the signal strength of the reflected signal (a measure of the quality of the depth values). Then, given the speed of light, $c$, the modulation frequency, $\omega$, and the correlation between signals for four internal phase delays, $r_0$ (0°), $r_1$ (90°), $r_2$ (180°), $r_3$ (270°), the camera computes the phase delay, $\varphi$, the amplitude, $a$, and the distance between the sensor and the target, $z$, as follows:
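A minimal sketch of this kind of computation, assuming the widely used four-phase demodulation formulas, may help to fix ideas. The sample ordering and sign convention, the function name `tof_demodulate` and the treatment of the modulation frequency as a value in Hz are assumptions made for illustration, not details taken from the camera documentation.

```python
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s


def tof_demodulate(r0, r1, r2, r3, mod_freq_hz=20e6):
    """Return (phase, amplitude, distance) from four correlation samples.

    Assumed convention (hypothetical, for illustration only):
    r_k = B + A * cos(phi + k * pi / 2), i.e. samples taken at internal
    phase delays of 0, 90, 180 and 270 degrees.
    """
    # Phase delay, wrapped to [0, 2*pi)
    phase = math.atan2(r3 - r1, r0 - r2) % (2.0 * math.pi)
    # Amplitude of the modulated component (signal strength)
    amplitude = 0.5 * math.hypot(r3 - r1, r0 - r2)
    # The light travels to the target and back, hence the factor 4*pi
    distance = C_LIGHT * phase / (4.0 * math.pi * mod_freq_hz)
    return phase, amplitude, distance


if __name__ == "__main__":
    # Synthetic samples generated from a known phase, amplitude and offset
    phi, A, B = math.pi / 3, 50.0, 100.0
    samples = [B + A * math.cos(phi + k * math.pi / 2) for k in range(4)]
    print(tof_demodulate(*samples))
```

Under this convention the constant offset B cancels in both differences, so background light affects the result only through saturation and noise, which is consistent with the role the amplitude plays as a quality measure.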

This type of camera has some disadvantages [17]: it is sensitive to background light and interference, and it produces oversaturated and underexposed pixels. The PMD camera has two adjustable parameters to attenuate these errors in the pixels: the modulation frequency and the integration time. In order not to change the original calibration determined by the manufacturer, only the integration time has been studied and adjusted. The integration time is defined as the exposure time, i.e., the effective length of time the camera's shutter is open. This time is needed so that enough light reaches the image sensor.

In a visual servoing system with eye-in-hand configuration (Figure 1), the camera is mounted at the end-effector of a robotic arm. Therefore, when the robot moves, the distance between sensor and target changes and the integration time, τ, has to be adjusted online to minimize the error in the computed depth. When this parameter is suitably computed, the range image can be acquired under better conditions, and the feature extraction process in the image is improved without modifying the lighting environment or the object surfaces in the scene.

Figure 2 shows the stability of the distance measurements obtained from the range images with respect to the integration time. May et al. [9] show this dependency for a Swissrange SR-2 camera used for the navigation of a mobile robot. The same is studied by Wiedemann et al. [8] to build maps with a mobile robot and by Gil et al. [17] to guide a robotic arm using an eye-in-hand configuration for visual servoing (Figure 1). In this last work, a PMD19K camera was used.

In previous works, some experiments were carried out to observe the evolution of the distance measured by the camera as the integration time changed. In those experiments, based on 750 images (with an integration time offset of 100 ms between consecutive images), a relationship between the mean distance value and the integration time, τ, in microseconds is shown when the robot (Figure 1) is moved and the distance between sensor and target changes. As Figure 2 shows, when the integration time is small, the computed distance is unstable and untrustworthy. Likewise, when the integration time is high, an oversaturation phenomenon sometimes appears in the signal which determines the distance curves. Normally, this phenomenon only appears when the distance between scene and camera is below a fixed nominal distance or distance threshold, as explained in [17]. In Figure 2(a), oversaturation appears when the integration time is greater than 45 ms. However, in Figure 2(b), oversaturation only occurs when the integration time is greater than 70 ms. Therefore, the nearer the target is, the smaller the integration time threshold must be. Thus, the farther the target is, the more precise the computed distance is. In addition, something similar happens with the intensity, as explained in [9], although it is more sensitive to background light and interference [8,12]. Consequently, in the calibration process, the flat zone of the curve (Figure 2) has to be computed in order to use a ToF camera such as the PMD19K for visual servoing. This zone determines the minimum and maximum integration times allowed to avoid the oversaturation and instability problems. In this paper, these values have been fixed using the calibration method presented in [17], where the histograms representing the frequency distributions of the amplitude measurements of the PMD19K are fitted with probability density functions (PDF) using the Kolmogorov-Smirnov and Anderson-Darling methods.

4. Camera Calibration: Computing Integration Time from an Amplitude Analysis

As regards the amplitude measurements, the curve showing the evolution of the mean amplitude can be computed from a set of images acquired at a fixed nominal distance (the same as the mean distance computed in Figure 2). The analysis of the mean amplitude curve determines the integration time thresholds, $[\tau_{min}, \tau_{max}]$, which are needed to guarantee the precise computation of the distance measurements (Figure 3). The amplitude parameter, $a$, of a ToF camera defines the quality of the range images computed using a specific integration time. The minimum threshold, $\tau_{min}$, is computed as the minimum integration time needed to compute the image depth in the desired camera location. It is determined as the time value where a least-squares line fitting the mean amplitude curve crosses the zero axis (Figure 3). The maximum threshold, $\tau_{max}$, is computed as the maximum integration time needed to compute the image depth in the initial camera location. These limits (Figure 3) are computed depending on the distance between target and camera by means of an offline process, as follows:

Place the robot in the initial pose and capture an image, $I_\tau$, for some integration time, $\tau \in [0, 85\ \text{ms}]$. At each iteration:

The amplitude analysis of Figure 3 shows a group of curves (one curve for each camera location). The curves show how the linearisation level (the part with flat slope) determines the degree of oversaturation. Thus, the amplitude curves grow quickly until they reach an absolute maximum value when the camera is near the target, and the curves are more linear when the camera is moved away from the target.
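The computation of the minimum threshold described above (the zero crossing of a least-squares line fitted to the mean amplitude curve) can be sketched as follows. The function names and the sample values are hypothetical, and an ordinary least-squares fit over the chosen samples is assumed; which portion of the curve is fitted is left to the caller.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def min_integration_time(taus_ms, mean_amplitudes):
    """Integration time at which the fitted amplitude line crosses zero."""
    slope, intercept = fit_line(taus_ms, mean_amplitudes)
    if slope == 0:
        raise ValueError("flat amplitude curve: no zero crossing")
    return -intercept / slope


if __name__ == "__main__":
    # Made-up mean amplitudes measured at four integration times (ms)
    taus = [15.0, 20.0, 25.0, 30.0]
    amplitudes = [10.0, 20.0, 30.0, 40.0]
    print(min_integration_time(taus, amplitudes))
```

With these illustrative samples, which lie exactly on the line a = 2τ − 20, the zero crossing is at τ = 10 ms.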

Once the integration time values for the final and initial camera positions have been computed, some intermediate integration times, $\tau_k$ (Figure 3(a)), are computed for the robot trajectory. To do this, empirical tests have been carried out with the following algorithm:

Fix the integration time as $\tau_0 = \tau_{max}$ for image $I_0$.

Compute the deviation error $e_a = a_d - (a_m)_0$, where $a_d = \max\{a_m\}$ according to a desired minimum distance.

Update the integration time following the control law $\tau_k = \tau_{k-1}(1 + K \cdot e_a)$, where $K$ is a proportional constant adjusted depending on the robot velocity.
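The three steps above can be sketched in a few lines of code. This is only an illustration: the function name, the clamping of the result to the calibrated interval and all numeric values are assumptions, not part of the original algorithm description.

```python
def update_integration_time(tau_prev, a_desired, a_mean, gain, tau_min, tau_max):
    """One step of tau_k = tau_{k-1} * (1 + K * e_a).

    The result is clamped to [tau_min, tau_max]; the clamping is an
    assumption added here to keep the value inside the calibrated range.
    """
    e_a = a_desired - a_mean  # deviation error of the mean amplitude
    tau = tau_prev * (1.0 + gain * e_a)
    return min(max(tau, tau_min), tau_max)


if __name__ == "__main__":
    tau_min, tau_max = 10.0, 46.4  # thresholds in ms
    a_desired = 100.0              # hypothetical desired mean amplitude
    gain = 0.005                   # hypothetical proportional constant K
    tau = tau_max                  # step 1: start from tau_max
    # Made-up mean amplitudes observed as the camera approaches the target
    for a_mean in [120.0, 115.0, 108.0, 102.0]:
        tau = update_integration_time(tau, a_desired, a_mean, gain, tau_min, tau_max)
        print(round(tau, 2))
```

Because the update is multiplicative, a mean amplitude above the desired value shortens the integration time by a fraction proportional to the deviation, and a value below it lengthens the integration time.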

In this way, some intermediate integration time values, $\tau_k \in [\tau_{min}, \tau_{max}]$, have been estimated for different distances between the final and the initial positions. Therefore, the proper computation of the integration time is done using a polynomial interpolation which fits these intermediate positions (Figure 4). In general, polynomial interpolation may not fit precisely at the end points, but this is not a problem because the end points are fixed to the integration times needed for the desired and the initial camera positions. Considering $\tau_{min}$ and $\tau_{max}$ as 10 ms and 46.4 ms (upper quartile of the maximum value shown in Figure 3(b), 57.4 ms), respectively, and some intermediate times, $\tau_k$, all computed according to the previous calibration method, the integration time is given by:

where:

5. Algorithm for Updating the Camera Integration Time During the Task

Based on the previous analysis, this section presents a method for automatically updating the integration time during visual servoing tasks.

Considering ${}^{c}\mathbf{M}_o$ the extrinsic parameters (pose of the object frame with respect to the camera frame), an object point can be expressed in the camera coordinate frame as:

Considering a pin-hole camera projection model, the point with 3D coordinates relative to the camera reference frame is projected onto the image plane at the point $\mathbf{p}$ of 2D coordinates. This point is computed from the focal length (distance between the retinal plane and the optical center of the camera) as:

Finally, the coordinates of (17), specified in metric units (e.g., mm), are scaled and transformed into pixel coordinates relative to the image reference frame, as:

where $\xi = (f_u, f_v, u_0, v_0)$ are the camera intrinsic parameters.

The intrinsic parameters describe properties of the camera used, such as the position of the optical center $(u_0, v_0)$, the size of the pixel and the focal length defined by $(f_u, f_v)$. They are computed from a calibration process based on [15].
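The chain described above (object frame to camera frame, pin-hole projection, conversion to pixels) can be illustrated with a small sketch. It assumes the common form u = u0 + f_u·x/z, v = v0 + f_v·y/z with the extrinsic parameters stored as a 4 × 4 homogeneous matrix; the function names and all numeric values are hypothetical.

```python
def to_camera_frame(c_M_o, point_obj):
    """Apply a 4x4 homogeneous transform (list of rows) to a 3D object point."""
    x, y, z = point_obj
    h = (x, y, z, 1.0)
    return tuple(sum(c_M_o[r][c] * h[c] for c in range(4)) for r in range(3))


def project_to_pixels(point_cam, f_u, f_v, u0, v0):
    """Pin-hole projection of a camera-frame point to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return u0 + f_u * x / z, v0 + f_v * y / z


if __name__ == "__main__":
    # Hypothetical pose: object frame 1 m in front of the camera, no rotation
    c_M_o = [
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 1.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
    point_cam = to_camera_frame(c_M_o, (0.1, -0.05, 0.0))
    # Hypothetical intrinsics for a 160 x 120 image
    print(project_to_pixels(point_cam, f_u=200.0, f_v=200.0, u0=80.0, v0=60.0))
```

The depth used in the division is the z coordinate of the point in the camera frame, which is the quantity that the extrinsic parameters provide independently of the range image.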

During a visual servoing task, the camera extrinsic parameters are not known, and ${}^{c}\mathbf{M}_o$ is considered as an estimation of the real camera pose. In order to determine this pose, the error between the observed data, $\mathbf{s}_o$, and the position of the same features computed by back-projection employing the current extrinsic parameters, $\mathbf{s}$ (16)–(18), must be progressively minimized. Therefore, an error function which must be progressively reduced is defined as:

The time derivative of $\mathbf{e}$ will be:

To make $\mathbf{e}$ decrease exponentially to 0, $\dot{\mathbf{e}} = -\lambda_2 \mathbf{e}$, we obtain the following control action:

where $\lambda_2$ is a positive control gain and $\mathbf{L}_s^{+}$ is the pseudoinverse of the interaction matrix (9). Once the error is annulled, the extrinsic parameters are obtained. This approach is used by virtual visual servoing systems to compute the camera location. More details about convergence, robustness and system stability can be found in [11,12].

Consequently, two estimations are obtained for the depth of a given image feature: one depth ($z_1$) from the previously estimated extrinsic parameters and another depth ($z_2$) from (10). This last depth is calculated from the range image and, therefore, can be updated by modifying the camera integration time. The adequate integration time is obtained when $z_1$ and $z_2$ are equal. Therefore, a new control law is applied in order to update the integration time, τ, by minimizing the error between $z_1$ and $z_2$:

where $\lambda_3 > 0$.

The algorithm for updating the camera integration time is summarized in the following lines.

First, perform the offline camera calibration to determine the initial integration time and the interpolated integration time (see Section 4).

At each iteration of the visual servoing task:

Apply the control action (3) to the robot.

Estimate the extrinsic parameters using virtual visual servoing.

Determine the depth $z_1$ from the previous extrinsic parameters and $z_2$ from the range image (10).

Update the integration time by applying the integration time control law.

In order to describe more clearly the interactions among the subsystems that compose the proposed visual servoing system, a block diagram is shown in Figure 5. In this block diagram, it can be observed that, in the feedback of the visual servoing system, a complete convergence of virtual visual servoing is performed in order to determine the extrinsic parameters. Moreover, the convergence and stability aspects when virtual visual servoing techniques are used as feedback of a visual servoing system are discussed in [18].

7. Conclusions

This paper presents a new image-based visual servoing system which integrates range information in the interaction matrix. Another property of the proposed system is the possibility of performing the camera calibration during the task. To do this, the visual servoing system uses the range images not only to determine the depths of the object features but also to adjust the camera integration time during the task.

When a ToF camera is employed to guide a robot, the distance between the camera and the objects of the workspace changes. Therefore, the camera integration time must be updated in order to correctly observe the objects of the workspace. As demonstrated in the experiments, the integration time must be updated depending on the distance between the camera and the objects. The use of the proposed approach guarantees that the information obtained from the ToF camera is accurate because an adequate integration time is employed at each moment. This last aspect permits a better estimation of the depth of the objects. Therefore, the behaviour of the visual servoing is enhanced with respect to previous approaches where this parameter is not accurately estimated. Currently, we are working on determining an accurate dynamic model of the robot to improve the visual servoing control law in order to assure the given specifications during the task.