The Coverage Problem in Video-Based Wireless Sensor Networks: A Survey

Abstract

1. Introduction

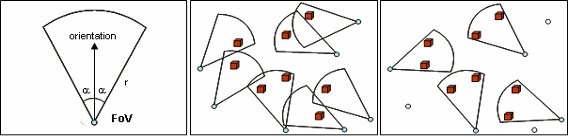

2. Video-based Wireless Sensor Networks

3. Fundamentals of Directional Coverage

4. Coverage in Deterministic Deployment

4.1. Optimal Camera Placement

4.2. Optimal Sensor Placement

5. Coverage in Random Deployment

5.1. Node Localization after Deployment

5.2. Coverage Algorithms

5.3. Coverage Metrics

6. Connected Coverage and Energy Preservation

6.1. Saving Energy by Redundant Nodes

6.2. Algorithms for Coverage, Connectivity and Energy Preservation

7. Other Relevant Issues

8. Open Research Areas

9. Conclusions

References

1. Kuorilehto, M; Hännikäinen, M; Hämäläinen, T. A Survey of Application Distribution in Wireless Sensor Networks. EURASIP J. Wire. Commun. Netw 2005, 5, 774–788.

2. Baronti, P; Pillai, P; Chook, V; Chessa, S; Gotta, A; Hu, Y. Wireless Sensor Networks: A Survey on the State of the Art and the 802.15.4 and ZigBee Standards. Comp. Commun 2006, 30, 1655–1695.

3. Yick, J; Mukherjee, B; Ghosal, D. Wireless Sensor Network Survey. Comp. Netw 2008, 52, 2292–2330.

4. Pescaru, D; Istin, C; Curiac, D; Doboli, A. Energy Saving Strategy for Video-Based Wireless Sensor Networks under Field Coverage Preservation. Proceedings of IEEE International Conference on Automation, Quality and Testing, Robotics, Cluj-Napoca, Romania; 22–25 May 2008; pp. 289–294.

5. Charfi, Y; Canada, B; Wakamiya, N; Murata, M. Challenging Issues in Visual Sensor Networks. IEEE Wire. Commun 2009, 16, 44–49.

6. Soro, S; Heinzelman, W. A Survey of Visual Sensor Networks. Advan. Multimedia 2009, 21, 21.

7. Huang, C; Tseng, Y. The Coverage Problem in a Wireless Sensor Network. Proceedings of 2nd ACM International Workshop on Wireless Sensor Networks and Applications, San Diego, CA, USA; 19 September 2003; pp. 115–121.

8. Meguerdichian, S; Koushanfar, F; Potkonjak, M; Srivastava, M. Coverage Problems in Wireless ad hoc Sensor Networks. Proceedings of 20th IEEE Infocom, Anchorage, AK, USA; 22–26 April 2001; pp. 1380–1387.

9. Cardei, M; Wu, J. Energy-Efficient Coverage Problems in Wireless ad hoc Sensor Networks. Comp. Commun 2006, 29, 413–420.

10. Willig, A. Recent and Emerging Topics in Wireless Industrial Communications: A Selection. IEEE Trans. Ind. Inform 2008, 4, 102–124.

11. Huang, C; Tseng, Y; Lo, L. The Coverage Problem in Three-Dimensional Wireless Sensor Networks. Proceedings of IEEE Global Telecommunications Conference, Dallas, TX, USA; 29 November–3 December 2004; pp. 3182–3186.

12. Perillo, M; Heinzelman, W. DAPR: A Protocol for Wireless Sensor Networks Utilizing an Application-Based Routing Cost. Proceedings of IEEE Wireless Communications and Networking Conference, Atlanta, GA, USA; 21–25 March 2004; pp. 1540–1545.

13. Soro, S; Heinzelman, W. On the Coverage Problem in Video-Based Wireless Sensor Networks. Proceedings of 2nd International Conference on Broadband Networks, Boston, MA, USA; 3–7 October 2005; pp. 932–939.

14. Akyildiz, I; Su, W; Sankarasubramaniam, Y; Cayirci, E. A Survey on Sensor Networks. Comp. Netw 2002, 38, 393–422.

15. Akyildiz, I; Melodia, T; Chowdhury, K. A Survey on Wireless Multimedia Sensor Networks. Comp. Netw 2007, 51, 921–960.

16. Huang, C; Tseng, Y. A Survey of Solutions to the Coverage Problems in Wireless Sensor Networks. J. Int. Tec 2005, 6, 1–8.

17. Tao, D; Mal, H; Liu, Y. Energy-Efficient Cooperative Image Processing in Video Sensor Networks. Proceedings of Pacific Rim Conference on Multimedia, Jeju Island, Korea; 13–16 November 2005; pp. 572–583.

18. Chen, M; Leung, V; Mao, S; Yuan, Y. Directional Geographical Routing for Real-Time Video Communications in Wireless Sensor Networks. Comp. Commun 2007, 30, 3368–3383.

19. Erdem, U; Sclaroff, S. Optimal Placement of Cameras in Floorplans to Satisfy Task Requirements and Cost Constraints. Proceedings of the 5th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, Prague, Czech Republic; 16 May 2004.

20. Pescaru, D; Istin, C; Naghiu, F; Gavrilescu, M; Curiac, D. Scalable Metric for Coverage Evaluation in Video-Based Wireless Sensor Networks. Proceedings of 5th Symposium on Applied Computational Intelligence and Informatics, Timisoara, Romania; 28–29 May 2009; pp. 323–327.

21. Osais, YE; St-Hilaire, M; Riu, FR. Directional Sensor Placement with Optimal Sensing Range, Field of View and Orientation. Mob. Netw. Appl 2010, 15, 216–225.

22. Younis, M; Akkaya, K. Strategies and Techniques for Node Placement in Wireless Sensor Networks: A Survey. Ad Hoc Netw 2008, 6, 621–655.

23. Tarabanis, K; Allen, P; Tsai, R. A Survey of Sensor Planning in Computer Vision. IEEE Trans. Robot. Autom 1995, 11, 86–104.

24. Marengoni, M; Draper, B; Handson, A; Sitaraman, R. A System to Place Observers on a Polyhedral Terrain in a Polynomial Time. Image Vis. Comput 1996, 18, 773–780.

25. Bose, P; Guibas, L; Lubiw, A; Overmars, M; Souvaine, D; Urrutia, J. The Floodlight Problem. Int. J. Comput. Geom. Appl 1997, 7, 153–163.

26. Khan, S; Javed, O; Rasheed, Z; Shah, M. Human Tracking in Multiple Cameras. Proceedings of 8th IEEE International Conference on Computer Vision, Vancouver, BC, Canada; 7–14 July 2001; pp. 331–336.

27. Collins, R; Lipton, A; Fujiyoshi, H; Kanade, T. Algorithms for Cooperative Multisensor Surveillance. Proc. IEEE 2001, 89, 1456–1477.

28. Cai, Q; Aggarwal, JK. Tracking Human Motion in Structured Environments Using a Distributed-Camera System. IEEE Trans. Patt. Anal. Mach. Int 1999, 21, 1241–1247.

29. Pito, R. A Solution to the Next Best View Problem for Automated Surface Acquisition. IEEE Trans. Patt. Anal. Mach. Int 1999, 21, 1016–1030.

30. Mayer, J; Bajcsy, R. Occlusions as a Guide for Planning the Next View. IEEE Trans. Patt. Anal. Mach. Int 1993, 15, 417–433.

31. Mittal, A; Davis, L. Visibility Analysis and Sensor Planning in Dynamic Environments. Proceedings of 8th European Conference on Computer Vision, Prague, Czech Republic; 11–14 May 2004; pp. 175–189.

32. Hörster, E; Lienhart, R. Approximating Optimal Visual Sensor Placement. Proceedings of IEEE International Conference on Multimedia and Expo, Toronto, ON, Canada; 9–12 July 2006; pp. 1257–1260.

33. Hörster, E; Lienhart, R. On the Optimal Placement of Multiple Visual Sensors. Proceedings of the 4th ACM International Workshop on Video Surveillance and Sensor Networks, Santa Barbara, CA, USA; 27 October 2006; pp. 111–120.

34. Ram, S; Ramakrishnan, K; Atrey, P; Singh, V; Kankanhalli, M. A Design Methodology for Selection and Placement of Sensors in Multimedia Surveillance Systems. Proceedings of the 4th ACM International Workshop on Video Surveillance and Sensor Networks, Santa Barbara, CA, USA; 27 October 2006; pp. 121–130.

35. Zhao, J; Cheung, S; Nguyen, T. Optimal Camera Network Configurations for Visual Tagging. IEEE J. Sel. Topics Signal Proc 2008, 2, 464–479.

36. Zhao, J; Cheung, S. Multi-Camera Surveillance with Visual Tagging and Generic Camera Placement. Proceedings of IEEE/ACM International Conference on Distributed Smart Cameras, Vienna, Austria; 25–28 September 2007; pp. 259–266.

37. Adriaens, J; Megerian, S; Potkonjak, M. Optimal Worst-Case Coverage of Directional Field-of-View Sensor Networks. Proceedings of 3rd Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks, Reston, VA, USA; 25–28 September 2006; pp. 336–345.

38. Couto, M; Souza, C; Rezende, P. Strategies for Optimal Placement of Surveillance Cameras in Art Galleries. Proceedings of 18th International Conference on Computer Graphics and Vision, Moscow, Russia; 23–27 June 2008; pp. 1–4.

39. Sambhoos, P; Hansan, AB; Han, R; Lookabaugh, T; Mulligan, J. Weeblevideo: Wide Angle Field of View Video Sensor Networks. Proceedings of ACM SenSys Workshop on Distributed Smart Cameras, Boulder, CO, USA; 31 October 2006.

40. Gonzalez-Barbosa, JJ; García-Ramirez, T; Salas, J; Hurtado-Ramos, J; Rico-Jiménez, JJ. Optimal Camera Placement for Total Coverage. Proceedings of IEEE International Conference on Robotics and Automation, Kobe, Japan; 12–17 May 2009; pp. 844–848.

41. Zhou, Z; Das, S; Gupta, H. Variable Radii Connected Sensor Cover in Sensor Networks. ACM Trans. Sensor Netw 2009, 5, 1–36.

42. Hörster, E; Lienhart, R. Calibrating and Optimizing Poses of Visual Sensors in Distributed Platforms. Multi. Syst 2006, 12, 195–210.

43. Yu, C; Sharma, G. Plane-Based Calibration of Cameras with Zoom Variation. Proceedings of SPIE Visual Communication and Image Processing, San Jose, CA, USA; 17 January 2006.

44. Han, X; Cao, X; Lloyd, EL; Cheng, CC. Deploying Directional Sensor Networks with Guaranteed Connectivity and Coverage. Proceedings of 5th Annual IEEE Communications Society Conference on Sensor, Mesh and Ad Hoc Communications and Networks, San Francisco, CA, USA; 16–20 June 2008; pp. 153–160.

45. Ai, J; Abouzeid, AA. Coverage by Directional Sensors in Randomly Deployed Wireless Sensor Networks. J. Comb. Optim 2006, 11, 21–41.

46. Bender, P; Pei, Y. Development of Energy Efficient Image/Video Sensor Networks. Wirel. Pers. Commun 2009, 51, 283–301.

47. Rahimi, M; Ahmadian, S; Zats, D; Laufer, R; Estrin, D. Magic Numbers in Networks of Wireless Image Sensors. Proceedings of Workshop on Distributed Smart Cameras, Boulder, CO, USA; 31 October 2006; pp. 71–81.

48. Shih, E; Cho, S; Ickes, N; Min, R; Sinha, A; Wang, A; Chandrakasan, A. Physical Layer Driven Protocol and Algorithm Design for Energy-Efficient Wireless Sensor Networks. Proceedings of ACM Conference on Mobile Computing and Networking, Rome, Italy; 16–21 July 2001; pp. 272–287.

49. Xingyu, P; Hongyi, Y. Redeployment Problem for Wireless Sensor Networks. Proceedings of International Conference on Communication Technology, Guilin, China; 27–30 November 2006.

50. Pescaru, D; Gui, V; Toma, C; Fuiorea, D. Analysis of Post-Deployment Sensing Coverage for Video Wireless Sensor Networks. Proceedings of 6th International Conference RoEduNet, Craiova, Romania; 23–24 November 2007.

51. Chow, KY; Lui, KS; Lam, EY. Achieving 360° Angle Coverage with Minimum Transmission Cost in Visual Sensor Networks. Proceedings of IEEE Wireless Communications and Networking Conference, Kowloon, Hong Kong; 11–15 March 2007; pp. 4112–4116.

52. Wu, CH; Chung, YC. A Polygon Model for Wireless Sensor Network Deployment with Directional Sensing Areas. Sensors 2009, 9, 9998–10022.

53. Mao, G; Fidan, B; Anderson, B. Wireless Sensor Network Localization Techniques. Comp. Netw 2007, 51, 2529–2553.

54. Hu, L; Evans, D. Localization for Mobile Sensor Networks. Proceedings of ACM Conference on Mobile Computing and Networking, Philadelphia, PA, USA; September 2004; pp. 45–57.

55. Sayed, AH; Tarighat, A; Khajehnouri, N. Network-Based Wireless Location: Challenges Faced in Developing Techniques for Accurate Wireless Location Information. IEEE Signal Proc. Mag 2005, 22, 24–40.

56. Fuiorea, D; Gui, V; Pescaru, D; Toma, C. Using Registration Algorithms for Wireless Sensor Network Node Localization. Proceedings of 4th IEEE International Symposium on Applied Computational Intelligence and Informatics, Timisoara, Romania; 17–18 May 2007; pp. 209–214.

57. Fuiorea, D; Gui, V; Pescaru, D; Paraschiv, P; Codruta, I; Curiac, D; Volosencu, C. Video-Based Wireless Sensor Networks Localization Technique Based on Image Registration and SIFT Algorithm. WSEAS Trans. Comp 2008, 7, 990–999.

58. Lee, H; Aghajan, H. Vision-Enabled Node Localization in Wireless Sensor Networks. Proceedings of Cognitive Systems with Interactive Sensors, Paris, France; March 2006; pp. 15–17.

59. Funiak, S; Paskin, M; Guestrin, C; Sukthankar, R. Distributed Localization of Networked Cameras. Proceedings of the 5th International Conference on Information Processing in Sensor Networks, Nashville, TN, USA; 19–21 April 2006; pp. 34–42.

60. Shafique, K; Hakeem, A; Javed, O; Haering, N. Self Calibrating Visual Sensor Networks. Proceedings of IEEE Workshop on Applications of Computer Vision, Copper Mountain, CO, USA; 7–9 January 2008; pp. 1–6.

61. Devarajan, D; Radke, R. Distributed Metric Calibration of Large Camera Networks. Proceedings of the 1st Workshop on Broadband Advanced Sensor Networks, San Jose, CA, USA; 25 October 2004.

62. Devarajan, D; Radke, R; Chung, H. Distributed Metric Calibration of Ad Hoc Camera Networks. ACM Trans. Sensor Netw 2006, 2, 380–403.

63. Mantzel, WE. Linear Distributed Localization of Omni-Directional Camera Networks. Proceedings of the 6th Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, Beijing, China; 21 October 2005.

64. Barton-Sweeney, A; Lymberopoulos, D; Savvides, A. Sensor Localization and Camera Calibration in Distributed Camera Sensor Networks. Proceedings of the 3rd International Conference on Broadband Communications, Networks and Systems, San Jose, CA, USA; 1–5 October 2006; pp. 1–10.

65. Cai, Y; Lou, W; Li, M; Li, XY. Target-Oriented Scheduling in Directional Sensor Networks. Proceedings of IEEE Infocom, Anchorage, AK, USA; 6–12 May 2007; pp. 1550–1558.

66. Tezcan, N; Wang, W. Self-Orienting Wireless Sensor Networks for Occlusion-Free Viewpoints. Comp. Netw 2008, 52, 2558–2567.

67. Yu, C; Soro, S; Sharma, G; Heinzelman, W. Lifetime-Distortion Trade-Off in Image Sensor Networks. Proceedings of the IEEE International Conference on Image Processing, San Antonio, TX, USA; September 2007; pp. 129–132.

68. Kandoth, C; Chellappan, S. Angular Mobility Assisted Coverage in Directional Sensor Networks. Proceedings of International Conference on Network-Based Information Systems, Indianapolis, IN, USA; 19–21 August 2009; pp. 376–379.

69. Liu, X. Coverage with Connectivity in Wireless Sensor Networks. Proceedings of 3rd IEEE International Conference on Broadband Communications, Networks and Systems, San Jose, CA, USA; 1–5 October 2006; pp. 1–8.

70. Istin, C; Pescaru, D. Deployments Metrics for Video-Based Wireless Sensor Networks. Trans. Autom. Contr. Comp. Sci 2007, 52, 163–168.

71. Wan, P; Yi, C. Coverage by Randomly Deployed Wireless Sensor Networks. IEEE Trans. Inform. Theory 2006, 52, 2658–2669.

72. Wang, X; Xing, G; Zhang, Y; Lu, C; Pless, R; Gill, C. Integrated Coverage and Connectivity Configuration in Wireless Sensor Networks. Proceedings of 1st ACM Conference on Embedded Networked Sensor Systems, Los Angeles, CA, USA; November 2003; pp. 28–39.

73. Liu, L; Ma, H; Zhang, X. On Directional K-Coverage Analysis of Randomly Deployed Camera Sensor Networks. Proceedings of IEEE International Conference on Communications, Beijing, China; 19–23 May 2008; pp. 2707–2711.

74. Chang, C; Aghajan, H. Collaborative Face Orientation Detection in Wireless Image Sensor Networks. Proceedings of ACM SenSys Workshop on Distributed Smart Cameras, Boulder, CO, USA; 31 October 2006.

75. Ye, F; Zhong, G; Cheng, J; Lu, S; Zhang, L. PEAS: A Robust Energy Conserving Protocol for Long-Lived Sensor Networks. Proceedings of 23rd International Conference on Distributed Computing Systems, Providence, RI, USA; 19–22 May 2003; pp. 28–37.

76. Margi, CB; Petkov, V; Obraczka, K; Manduchi, R. Characterizing Energy Consumption in a Visual Sensor Network Testbed. Proceedings of 2nd IEEE Conference on Testbeds and Research Infrastructures for the Development of Networks and Communities, Barcelona, Spain; July 2006; pp. 339–346.

77. Margi, CB; Manduchi, R; Obraczka, K. Energy Consumption Tradeoffs in Visual Sensor Networks. Proceedings of 24th Brazilian Symposium on Computer Networks, Curitiba, Brazil; May 2006.

78. Chen, B; Jamieson, K; Balakrishnan, H; Morris, R. SPAN: An Energy Efficient Coordination Algorithm for Topology Maintenance in Ad Hoc Wireless Networks. Wirel. Netw 2002, 8, 481–494.

79. Al-Karaki, JN; Kamal, AE. Routing Techniques in Wireless Sensor Networks: A Survey. IEEE Wirel. Commun 2004, 11, 6–28.

80. Halawani, S; Kahn, AW. Sensors Lifetime Enhancement Techniques in Wireless Sensor Networks: A Survey. J. Comp 2010, 2, 34–47.

81. Bai, Y; Qi, H. Redundancy Removal through Semantic Neighbor Selection in Visual Sensor Networks. Proceedings of 3rd ACM/IEEE International Conference on Distributed Smart Cameras, Como, Italy; August 2009; pp. 1–8.

82. Cerpa, A; Estrin, D. Ascent: Adaptive Self-Configuring Sensor Networks Topologies. Proceedings of 21st IEEE Infocom, New York, NY, USA; 23–27 June 2002; pp. 1278–1287.

83. Istin, C; Pescaru, D; Ciocarlie, H; Curiac, D; Doboli, A. Reliable Field of View Coverage in Video-Camera Based Wireless Networks for Traffic Management Applications. Proceedings of IEEE International Symposium on Signal Processing and Information Technology, Sarajevo, Bosnia and Herzegovina; 16–19 December 2008; pp. 63–68.

84. Istin, C; Pescaru, D; Doboli, A; Ciocarlie, H. Impact of Coverage Preservation Techniques on Prolonging the Network Lifetime in Traffic Surveillance Applications. Proceedings of 4th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania; 28–30 August 2008; pp. 201–206.

85. Zamora, NH; Kao, JC; Marculescu, R. Distributed Power-Management Techniques for Wireless Network Video Systems. Proceedings of the Conference on Design, Automation and Test in Europe, Nice, France; 16–20 April 2007; pp. 1–6.

86. Rahimi, M; Estrin, D; Baer, R; Uyeno, H; Warrior, J. Cyclops: Image Sensing and Interpretation in Wireless Networks. Proceedings of the 2nd International Conference on Embedded Networked Sensor Systems, Baltimore, MD, USA; 3–5 November 2004; p. 311.

87. Soro, S; Heinzelman, W. Camera Selection in Visual Sensor Networks. Proceedings of IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK; 5–7 September 2007; pp. 81–86.

88. Dagher, J; Marcellin, M; Neifeld, M. A Method for Coordinating the Distributed Transmission of Imagery. IEEE Trans. Image Proc 2006, 15, 1705–1717.

89. Park, J; Bhat, PC; Kak, AC. A Look-Up Table Based Approach for Solving the Camera Selection Problem in Large Camera Networks. Proceedings of ACM SenSys Workshop on Distributed Smart Cameras, Boulder, CO, USA; 31 October 2006.

90. Ercan, A; Yang, D; Gamal, A; Guibas, L. Optimal Placement and Selection of Camera Network Nodes for Target Localization. Proceedings of the International Conference on Distributed Computing and Sensor Systems, San Francisco, CA, USA; 18–20 June 2006; pp. 389–404.

91. Ercan, A; Gamal, A; Guibas, L. Camera Network Node Selection for Target Localization in the Presence of Occlusions. Proceedings of ACM SenSys Workshop on Distributed Smart Cameras, Boulder, CO, USA; 31 October 2006.

92. McCurdy, N; Griswold, W. A System Architecture for Ubiquitous Video. Proceedings of the 3rd Annual International Conference on Mobile Systems, Seattle, WA, USA; 6–8 June 2005; pp. 1–14.

93. Lee, I; Shaw, W; Park, J. On Prolonging the Lifetime for Wireless Video Sensor Networks. Mob. Netw. Appl 2010, 15, 575–588.

94. Willians, J; Lee, W. Interactive Virtual Simulation for Multiple Camera Placement. Proceedings of IEEE International Workshop on Haptic Audio Visual Environments and Their Applications, Ottawa, ON, Canada; 4–5 November 2006; pp. 124–129.

95. Feng, WC; Kaiser, E; Feng, WC; Baillif, ML. Panoptes: Scalable Low-Power Video Sensor Networking Technologies. ACM Trans. Multimed. Comp. Commun. Appl 2005, 1, 151–167.

96. Kulkarni, P; Ganesan, D; Shenoy, P; Lu, Q. SensEye: A Multi-Tier Camera Sensor Network. Proceedings of the 13th ACM International Conference on Multimedia, Hilton, Singapore; 6–11 November 2005; pp. 229–238.

97. Kulkarni, P; Ganesan, D; Shenoy, P. The Case for Multi-Tier Camera Sensor Networks. Proceedings of International Workshop on Network and Operating Systems Support for Digital Audio and Video, Stevenson, WA, USA; 13–14 June 2005; pp. 141–146.

98. Ma, H; Liu, Y. Some Problems of Directional Sensor Networks. Int. J. Sensor Netw 2007, 2, 44–52.

| Optimal Placement Solution | Algorithm Approach | Short Description |
|---|---|---|
| Mittal and Davis [31] | Probabilistic visibility analysis | Optimal placement of cameras considering occlusions created by dynamic obstacles. |
| Erdem and Sclaroff [19] | Binary optimization | Suitable for planar regions, following task-specific requirements. |
| Hörster and Lienhart [32] | ILP | The monitored field is modeled as a 2D grid; optimal placement considers cost restrictions. |
| Hörster and Lienhart [33] | BIP / Heuristics | Proposes both an exact and an approximate solution for optimal placement. |
| Ram et al. [34] | BIP / Based on performance metrics | Optimal placement of multimedia cameras/sensors, considering heterogeneous nodes. |
| Zhao et al. [35] | BIP | The monitored field is modeled in 3D, accounting for self and mutual occlusion. |
| Zhao and Cheung [36] | BIP | Grid-based optimal camera placement; also discusses visual tagging. |
| Couto et al. [38] | IP | Models the art gallery problem using omnidirectional cameras. |
| Gonzalez-Barbosa et al. [40] | ILP | Employs directional and omnidirectional cameras in a hybrid way for coverage optimization. |
| Hörster and Lienhart [42] | BIP | Automatically calibrates camera directions for coverage maximization with minimum overlapping. |
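Several solutions in this table (e.g. [32], [36]) discretize the monitored field into a grid of control points and choose camera poses so that every point falls inside at least one camera's field of view. The sketch below illustrates that discretization with the common sector-shaped directional model (position, orientation, sensing radius, half-angle). It is a minimal sketch under stated assumptions: instead of the exact ILP/BIP formulations used in the cited works, it applies a greedy set-cover heuristic; candidate camera positions are assumed to be the grid points themselves, orientations are sampled uniformly, occlusions are ignored, and the names `in_fov`, `greedy_placement`, and `n_orient` are hypothetical.

```python
import math
from itertools import product


def in_fov(cam, point, radius, half_angle):
    """Return True if point lies inside the sector-shaped FoV of cam.

    cam is a hypothetical (x, y, theta) pose; theta is the viewing
    direction in radians. The FoV is a circular sector of the given
    radius, spanning +/- half_angle around theta.
    """
    cx, cy, theta = cam
    dx, dy = point[0] - cx, point[1] - cy
    dist = math.hypot(dx, dy)
    if dist == 0:
        return True  # assumption: a camera covers its own grid cell
    if dist > radius:
        return False
    # Signed angle between the viewing direction and the point, in [-pi, pi).
    diff = math.atan2(dy, dx) - theta
    diff = (diff + math.pi) % (2 * math.pi) - math.pi
    return abs(diff) <= half_angle


def greedy_placement(width, height, radius, half_angle, n_orient=8):
    """Greedy set cover of a width x height grid of control points.

    Returns (chosen poses, control points left uncovered).
    """
    points = set(product(range(width), range(height)))
    # Candidate poses: every grid point combined with n_orient directions.
    candidates = [
        (x, y, 2 * math.pi * k / n_orient)
        for x, y in points
        for k in range(n_orient)
    ]
    # Coverage matrix: which control points each candidate pose sees.
    covers = {
        c: {p for p in points if in_fov(c, p, radius, half_angle)}
        for c in candidates
    }
    uncovered, chosen = set(points), []
    while uncovered:
        # Pick the pose that covers the most still-uncovered points.
        best = max(candidates, key=lambda c: len(covers[c] & uncovered))
        gain = covers[best] & uncovered
        if not gain:
            break  # no pose can cover the remaining points
        chosen.append(best)
        uncovered -= gain
    return chosen, uncovered
```

For example, `greedy_placement(10, 10, radius=4.0, half_angle=math.pi / 6)` returns a list of `(x, y, theta)` poses together with any control points left uncovered. Greedy set cover is guaranteed to stay within a factor of H(n) ≤ ln n + 1 of the optimum, where n is the number of control points, which makes it a reasonable baseline; an exact BIP formulation would instead pass the same coverage matrix to an integer-programming solver with one binary variable per candidate pose.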

| Algorithm | Short Description |
|---|---|
| Ai and Abouzeid [45] | The SNCS protocol uses the residual energy of each node as a priority for putting nodes into sleep mode. Sleeping nodes can become active when their energy resources exceed the residual energy of the currently active nodes. |
| Pescaru et al. [4] | The proposed algorithms turn off less significant redundant nodes. Each active node evaluates its energy and, if it is lower than a threshold, turns on a redundant neighbor node that is in sleep mode. |
| Cai et al. [65] | Defines subsets of sensors that cover a target, with individual sensors participating in one or more cover sets. Only one subset is active at any time, saving energy by deactivating the remaining sets. |
| Istin et al. [83] | A node that detects FoV loss informs its neighboring nodes. Based on their answers, the node identifies the optimal cameras that should be turned on. After the obstacle passes by and the original FoV is restored, the original node informs the neighboring cameras that attended the previous request that they should turn themselves off. |
| Zamora et al. [85] | Nodes that do not view the monitored target go to sleep mode, self-activating after a fixed sleep time. Nodes can also exchange messages indicating the current and past views of the cameras. Such information is used to send nodes into sleep mode, potentially prolonging network lifetime. |
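Several algorithms in this table share one idea: redundant nodes sleep while a subset that still covers every target stays active, and the active subset changes over time so that energy drains evenly. The sketch below illustrates that idea with a residual-energy priority, loosely in the spirit of the SNCS description [45] and the one-cover-set-at-a-time rule of Cai et al. [65]; it is not either published algorithm. Assumptions: a centralized scheduler, known target-visibility sets per node, a constant energy cost per active round, and zero cost for sleeping nodes. The function `schedule_rounds` and its parameters are hypothetical.

```python
def schedule_rounds(covers, energy, cost=1.0):
    """Round-based sleep scheduling with a residual-energy priority.

    covers: dict mapping node id -> set of target ids the node can see.
    energy: dict mapping node id -> initial energy budget (arbitrary units).
    cost:   energy an active node spends per round (assumed constant;
            sleeping nodes are assumed to spend nothing).
    Returns one list of active nodes per round, stopping when the
    surviving nodes can no longer cover every target.
    """
    energy = dict(energy)  # work on a copy; leave the caller's dict intact
    targets = set().union(*covers.values())
    if not targets:
        return []  # nothing to monitor, so no schedule is needed
    rounds = []
    while True:
        # Nodes with enough energy for one more round, richest first
        # (sorted() is stable, so ties keep the dict's insertion order).
        alive = sorted((n for n in covers if energy[n] >= cost),
                       key=lambda n: energy[n], reverse=True)
        active, covered = [], set()
        for n in alive:
            if covers[n] - covered:  # wake n only if it adds new targets
                active.append(n)
                covered |= covers[n]
            if covered == targets:
                break  # remaining candidates stay asleep (redundant)
        if covered != targets:
            return rounds  # full coverage can no longer be preserved
        for n in active:
            energy[n] -= cost
        rounds.append(active)
```

As a small example, take four nodes where `a` and `b` both see targets 1 and 2, `c` and `d` both see target 3, and every node starts with 2 energy units. The schedule alternates between `["a", "c"]` and `["b", "d"]` and preserves full coverage for 4 rounds, whereas keeping all four nodes active would exhaust every battery after 2 rounds.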

© 2010 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Costa, D.G.; Guedes, L.A. The Coverage Problem in Video-Based Wireless Sensor Networks: A Survey. Sensors 2010, 10, 8215-8247. https://doi.org/10.3390/s100908215
