A Novel Method to Increase LinLog CMOS Sensors’ Performance in High Dynamic Range Scenarios

Abstract

Images from high dynamic range (HDR) scenes must be obtained with minimum loss of information. For this purpose it is necessary to take full advantage of the quantization levels provided by the CCD/CMOS image sensor. LinLog CMOS sensors satisfy this demand by offering an adjustable response curve that combines linear and logarithmic responses. This paper presents a novel method to quickly adjust the parameters that control the response curve of a LinLog CMOS image sensor. We propose to use an Adaptive Proportional-Integral-Derivative controller to adjust the exposure time of the sensor, together with control algorithms based on the saturation level and the entropy of the images. With this method the sensor’s maximum dynamic range (120 dB) can be used to acquire good quality images from HDR scenes with fast, automatic adaptation to scene conditions. Adaptation to a new scene is rapid, with a sensor response adjustment of less than eight frames when working in real time video mode. At least 67% of the scene entropy can be retained with this method.

1. Introduction

The dynamic range (DR) of an image sensor defines the relation between the minimum and maximum light that it can detect [1]. Broadly speaking, the dynamic range for a CCD/CMOS sensor represents its capacity to retain scene information from both highly lighted and shaded scenes. Common CCD/CMOS sensors present a linear response to scene irradiance. More advanced sensors try to increase the dynamic range by converting the linear response to a logarithmic-like response, as shown in Figure 1, providing image enhancement [2] as shown in Figure 2.

A high dynamic range is of major importance for computer vision systems that work with images taken from outdoor scenes—traffic control [3], security surveillance systems [4], outdoor visual inspection, etc. [5]. Researchers and manufacturers have recently developed a new generation of image sensors and new techniques that make it possible to increase the typical 60 dB range for a CCD sensor to 120 dB. Reference [6] reports several techniques to expand the dynamic range by pre-estimation of the sensor response curve. Reference [7] proposes to combine several RGB images of different exposures into one image with greater dynamic range. A US patent [8] claimed a CCD which reduces smear, thus providing greater dynamic range. Reference [9] reported a technique to convert a linear response of a CMOS sensor to a logarithmic response, and [10] proposed attaching a filter to the sensor to attenuate the light received by the pixels following a fixed pattern; after that, the image is processed to produce a new image with a greater dynamic range.
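The decibel figures quoted above can be related to intensity ratios with a short calculation. The sketch below assumes the 20·log10 convention commonly used for image sensors (the text does not state the formula), and the function names are illustrative only.

```python
import math


def dynamic_range_db(max_signal: float, min_signal: float) -> float:
    """Dynamic range in dB for the ratio of largest to smallest detectable signal.

    Assumes the 20*log10 convention; this is not taken from the paper.
    """
    if max_signal <= 0 or min_signal <= 0:
        raise ValueError("signal levels must be positive")
    return 20.0 * math.log10(max_signal / min_signal)


def intensity_ratio(dr_db: float) -> float:
    """Inverse relation: the max/min signal ratio that corresponds to dr_db."""
    return 10.0 ** (dr_db / 20.0)
```

Under this convention a 60 dB sensor spans a ratio of about 1000:1 between the brightest and darkest detectable signal, while 120 dB corresponds to about 1,000,000:1.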

Although great progress has been made in the last decade concerning CMOS imaging, logarithmic response shows a large fixed pattern noise (FPN) and slower response time for low light levels, yielding limited sensitivity [11]. The main disadvantage of using a fixed logarithmic curve is that it reduces the contrast of the image as compared to a linear response, so that a part of the scene information is lost, as seen in Figure 2(b).

2. The Lin-Log CMOS Sensor

The work described in this paper concerns the development of a novel method which combines different algorithms to adjust the parameters which control the response curve of a Lin-Log CMOS sensor in order to increase its yield in HDR scenes.

LinLog™ CMOS image technology was developed at the Swiss Federal Institute of Electronics and Microtechnology (Zurich, Switzerland). A LinLog CMOS sensor presents a linear response for low light levels and a logarithmic compression as light intensity increases. Linear response for low light levels assures high sensitivity, while compression for high light levels avoids saturation.
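To make the idea of a combined response concrete, the following is a minimal sketch of a generic linear-then-logarithmic curve. It is an illustration only, not the sensor's actual transfer function: the single `knee` parameter and the functional form are assumptions chosen so that the value and the slope are continuous at the transition.

```python
import math


def linlog_response(irradiance: float, knee: float) -> float:
    """Illustrative lin-log curve (hypothetical, not the manufacturer's model).

    Below `knee` the output follows the input linearly; above it the output
    grows logarithmically. Value and slope both match at the knee.
    """
    if irradiance < 0 or knee <= 0:
        raise ValueError("irradiance must be >= 0 and knee must be > 0")
    if irradiance <= knee:
        return irradiance
    # knee * (1 + ln(x / knee)) equals knee at x = knee, with slope 1 there.
    return knee * (1.0 + math.log(irradiance / knee))
```

With `knee = 1.0`, an input 1000 times larger than the knee maps to an output below 8, which shows how the logarithmic branch keeps bright regions away from saturation while leaving low light levels untouched.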

The transition between the two responses can be adjusted, and special attention is required to guarantee that it is smooth. Various cameras use LinLog technology (e.g., the MV1-D1312-40-GB-12 from Photonfocus AG, equipped with the Photonfocus A1312-40 active pixel LinLog CMOS image sensor, which we have used for test purposes). The sensor response can be controlled by adjusting four parameters, hereafter designated T1, T2, V1 and V2. V1 and V2 represent the compression voltages applied to the sensor. T1 and T2 are normalized parameters, expressed as a fraction of the exposure time, and can be adjusted from 0 to a maximum value of 1; their values determine the fraction of the exposure time during which V1 and V2 are applied [Figure 3(a)]. The values of these four parameters determine the LinLog response of the sensor [Figure 3(b)]. Note that the final LinLog response is a combination of: (1) the linear response, (2) the logarithmic response with strong compression (V1) and (3) the logarithmic response with weak compression (V2). These responses are combined by adjusting the T1 and T2 values.

We have taken advantage of the control characteristics of the LinLog CMOS sensor to develop a real-time image improvement method for high dynamic range scenes. The method is made up of three different algorithms: (1) an algorithm to control the exposure time, (2) an algorithm to avoid image saturation, and (3) an algorithm to maximize the image entropy.

3. Exposure Time Control Module

We have developed a module that controls the exposure time so that the average intensity level of the image tends to a set value (usually near the mean of the available intensity levels), thus offering automatic correction of the deviations caused by variable lighting conditions in the scene. For this purpose we have implemented an Adaptive Proportional-Integral-Derivative (APID) controller, which compensates for non-linear effects at the time of image acquisition by adjusting the exposure time as scene lighting conditions vary. An adaptive control system [12] measures the process response, compares it with the response given by a reference process, and adjusts the process parameters to assure the desired response, as shown in Figure 4.

In our case, the process that is controlled is acquisition of an image by a LinLog CMOS sensor. The output is the intensity level of that image. To quantify this level we use Equations (1) and (2):

- The integral action must be set to a reference value.

- The time taken to calculate integration error must be limited.

- The integral term must not continue to increase once the maximum or the minimum output values have been reached.
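The constraints on the integral action listed above can be sketched in a small discrete PID loop. This is a minimal sketch, not the authors' implementation. The toy process model (intensity = gain × exposure, both normalized to [0, 1]), the class name and the helper `simulate` are assumptions made for illustration. The constants Kp = 0.01, Ki = 0.4 and Kd = 0 are the ones reported later for the controller comparison.

```python
class ExposurePID:
    """Discrete PID with clamped output and conditional integration (anti-windup)."""

    def __init__(self, kp, ki, kd, out_min, out_max, integral_init=0.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = integral_init  # integral action starts from a reference value
        self.prev_error = 0.0

    def update(self, setpoint, measured):
        error = setpoint - measured
        candidate_integral = self.integral + error
        derivative = error - self.prev_error
        raw = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        output = min(self.out_max, max(self.out_min, raw))
        # Commit the integral only while the output is not saturated, so the
        # integral term stops growing once an output limit has been reached.
        if output == raw:
            self.integral = candidate_integral
        self.prev_error = error
        return output


def simulate(gain=1.0, setpoint=0.5, frames=50):
    """Run the loop against a toy static process: level = gain * exposure."""
    pid = ExposurePID(kp=0.01, ki=0.4, kd=0.0, out_min=0.0, out_max=1.0)
    exposure, level = 0.0, 0.0
    for _ in range(frames):
        level = gain * exposure          # image acquired with the last exposure
        exposure = pid.update(setpoint, level)
    return level, pid
```

With a reachable set value the toy loop settles on it. With an unreachable one (for example `gain=0.1`), the exposure stays at its upper limit and the stored integral remains bounded instead of winding up.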

The next step is to model the process. If we disregard the LinLog effect, the total number of electrons for every pixel in the image can be defined as in Equation (5):

The gain of the process can thus be defined as CPs(t), where C represents the constants of Equation (5). PID parameters Kp, Ki, and Kd are functions of this gain [10], so their temporal variation is related to the gain variation. We can use Equation (7) to estimate the gain variation every time the system feeds back, τ being the feedback period. From now on, to simplify Equations (7), (8) and (9), we will use G(t) for CPs(t):

At time t we use G(t-τ) to calculate the PID parameters, since G(t) cannot be calculated until Te(t) is known. To calculate G(t) we use Nc(t-τ), Nc(t-2τ), Te(t-τ) and Te(t-2τ), as justified by Equation (8):

Only parameters Kp and Ki are updated, as shown in Equation (9), where α, β, M and l are parameters to be fixed by the designer:
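The adaptation idea can be illustrated with a generic gain-scheduling sketch. It does not reproduce Equations (7) to (9): it assumes the simple linear model Nc = G·Te, estimates the gain as a ratio of past samples, and rescales Kp and Ki inversely to the estimated gain. The function names, the reference gain and the clamping limits are hypothetical.

```python
def estimate_gain(nc: float, te: float) -> float:
    """Process gain under the assumed linear model Nc = G * Te."""
    if te <= 0:
        raise ValueError("exposure time must be positive")
    return nc / te


def gain_variation(nc_prev, te_prev, nc_prev2, te_prev2) -> float:
    """Change in estimated gain between the samples at t - tau and t - 2*tau."""
    return estimate_gain(nc_prev, te_prev) - estimate_gain(nc_prev2, te_prev2)


def rescale_pi(kp0, ki0, gain_ref, gain_now, scale_min=0.25, scale_max=4.0):
    """Scale Kp and Ki inversely to the process gain, within hypothetical limits.

    A higher process gain lowers the controller gains (less overshoot);
    a lower process gain raises them (faster response).
    """
    if gain_now <= 0:
        raise ValueError("estimated gain must be positive")
    scale = min(scale_max, max(scale_min, gain_ref / gain_now))
    return kp0 * scale, ki0 * scale
```

For example, if the estimated process gain doubles with respect to the reference, `rescale_pi(0.01, 0.4, 1.0, 2.0)` halves both controller gains. This matches the qualitative behaviour reported for the APID controller when the process gain increases.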

Figure 6(a) shows the response of the proposed APID controller (“*”, blue) versus other controllers mentioned in the references: a Proportional-Integral (PI) controller [17,18] (“o”, red) and a controller designated Incremental, based on increments that are proportional to the error (“-”, green) [19]. In order to compare the responses, the PI and APID controllers were configured with the same constants (Kp = 0.01, Ki = 0.4 and Kd = 0) and the parameters of the Incremental controller were adjusted to achieve the best combination of speed and stability. Even so, unwanted oscillations may appear with the Incremental controller, which proved to be the slowest of the three. During the first frames the PI and APID controllers showed the same response because they had the same initial configuration.

Figure 6(b) shows the performance details of the controllers versus a decrease of the process gain ΔG = −1. The APID controller increases its internal gain, producing faster performance (l = 0.1, M = 0.6, α = 0.01, β = 0.1).

Figure 6(c) shows the performance details of the controllers versus an increase of the process gain ΔG = 3. The APID controller reduces its internal gain to prevent overshoot and oscillations while maintaining its speed. In contrast, the PI controller is unable to prevent overshoot. Table 1 shows measurements for time response, overshoot and oscillation of the controller responses shown in Figure 6.

4. Saturation Control

We can detect image saturation when saturation width (see Equation (10)) reaches a given value. To reduce saturation we increase the voltage values that control the LinLog compression effect:

Figure 7 shows the algorithm for saturation control. This measures the saturation width given by Equation (10). V1 and V2 are increased or reduced depending on whether the measured value is greater or smaller than the set value.
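The increase/decrease rule described above can be sketched as follows. Since Equation (10) is not reproduced here, the sketch uses a stand-in measure (the fraction of pixels at or near the maximum grey level) in place of the saturation width. The step size and the limits `v_min` and `v_max` are hypothetical values, not sensor specifications.

```python
def saturated_fraction(pixels, max_level=255, margin=0):
    """Stand-in for the saturation width: share of pixels at or near max_level."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    threshold = max_level - margin
    return sum(1 for p in pixels if p >= threshold) / len(pixels)


def adjust_compression(v1, v2, measured, target, step=1, v_min=0, v_max=31):
    """Raise V1 and V2 when saturation exceeds the set value, lower them otherwise."""
    if measured > target:
        delta = step       # more compression to reduce saturation
    elif measured < target:
        delta = -step      # less compression when there is headroom
    else:
        delta = 0

    def clamp(value):
        return min(v_max, max(v_min, value))

    return clamp(v1 + delta), clamp(v2 + delta)
```

Called once per frame, for example as `v1, v2 = adjust_compression(v1, v2, saturated_fraction(frame), target=0.01)`, the rule moves both voltages one step at a time toward the point where the measured saturation matches the set value.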

Figure 8 shows the effect of varying V1 and V2 values.

5. Entropy Maximization

The concept of information entropy describes how much randomness (or uncertainty) there is in a signal or an image; in other words, how much information is provided by the signal or image. In terms of physics, the greater the information entropy of the image, the higher its quality will be [20].

Shannon’s entropy (information entropy) [21] is used to evaluate the quality of acquired images. Assuming that images have a negligible noise content, the more detail (or uncertainty) there is, the better the image will be (the entropy value for a completely homogeneous image is 0). That is, without analyzing the image content, we assume (for two images obtained from an invariant scene) that the richer the information, the greater the entropy of the image will be.
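As an illustration, Shannon's entropy of an image can be computed from its grey-level histogram. The sketch below assumes base-2 logarithms (entropy in bits) and takes the image as a flat sequence of grey levels; both choices are assumptions made for the example.

```python
import math
from collections import Counter


def image_entropy(pixels) -> float:
    """Shannon entropy (bits) of the grey-level distribution of an image."""
    total = len(pixels)
    if total == 0:
        raise ValueError("pixels must not be empty")
    entropy = 0.0
    for count in Counter(pixels).values():
        p = count / total              # relative frequency of this grey level
        entropy -= p * math.log2(p)
    return entropy
```

A completely homogeneous image yields 0, an image split evenly between two grey levels yields 1 bit, and an 8-bit image that uses all 256 levels equally often reaches the maximum of 8 bits.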

The response curves, as shown in Figure 9, cause a loss of resolution in the bright areas of the image. Moreover, although the algorithm presented in Section 4 prevents saturation it can reduce the contrast in dark areas of the image. To deal with this problem, we have developed an algorithm (see Figure 10) that maximizes the entropy (Equation (11)) of the image.

For this purpose we adjust T1 (Figure 11) to produce light linearization for the high irradiance response curve. To reduce the complexity of the algorithm, T2 is set to a maximum and remains constant:

The main difficulty in developing an algorithm for entropy maximization [22] lies in the fact that it is not possible to fix a target entropy a priori, since this value depends on the scene. As shown in Figure 10, the algorithm behaves like a local maximizer [23] and has desirable properties for our purpose. The most desirable property in this case is robustness: the control method based on the conjugate gradient ensures an asymptotic tendency toward the nearest local maximum with δ accuracy, and it is also easy to implement. For this reason it has already been used to control parameters of a camera sensor [24]. In other cases, non-adaptive PI controllers have been used [17,18], but they are not robust in non-linear systems. Methods based on second-order Taylor expansions (Newton, Levenberg-Marquardt, etc.) [25] present a higher convergence speed but are more prone to instabilities [26]. When the scene changes, the gradient direction may also change and, in a first step, the algorithm will get the maximization direction wrong, but this will be corrected in the next step. Therefore, the algorithm’s performance is robust if we assume that scene variation is slower than the period between algorithm steps.

The execution of the algorithm will be stopped when a minimum variation in entropy, δ, is reached. To avoid undesired oscillations of image contrast γ needs to be small. Even so, the algorithm developed here shows a quick response when working in continuous grabbing mode. We can see how V1, V2 and T1 are adjusted (Figure 12) and the improvement provided by the algorithm developed (Figure 13).
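The behaviour described above (step in one direction, reverse when entropy drops, stop when the change falls below δ) can be sketched with a simple sign-based hill climb on T1. This is a simplified stand-in, not the algorithm of Figure 10. Here γ is interpreted as the step size, and `measure_entropy` is a hypothetical callback that returns the entropy of an image acquired with a given T1.

```python
def maximize_entropy_t1(measure_entropy, t1=0.5, gamma=0.02, delta=5e-4,
                        max_steps=100):
    """Sign-based hill climbing on T1, kept inside its normalized range [0, 1]."""
    direction = 1.0
    previous = measure_entropy(t1)
    for _ in range(max_steps):
        candidate = min(1.0, max(0.0, t1 + direction * gamma))
        entropy = measure_entropy(candidate)
        change = entropy - previous
        if change < 0:
            # The last step went the wrong way; turn around for the next one.
            direction = -direction
        t1, previous = candidate, entropy
        if abs(change) < delta:
            break  # entropy variation below delta: stop adjusting
    return t1
```

A small γ limits the contrast oscillation around the maximum at the cost of more steps. A wrong first step after a scene change is corrected on the following iteration, as the text describes.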

6. Results and Discussion

The proposed method comprises three algorithms to control the sensor response. The algorithm that controls exposure time is executed simultaneously with the other two: the algorithm that controls image saturation (adjusts V1 and V2) and the algorithm that maximizes the image entropy (adjusts T1). These last two algorithms are executed consecutively. Hence, the total time for the adjustment process will be the maximum of: (1) the exposure adjustment time and (2) the sum of the times of the two algorithms for controlling the LinLog parameters. The exposure time controller takes less than 10 frames to respond to the step inputs (Figure 6) with the sensor running at 27 fps, which makes it suitable for use in real-time outdoor vision (Figure 15).

To gauge the performance of the image saturation control and the entropy maximization algorithms, an experiment has been designed to determine both the response speed and the resulting image quality. For this purpose:

(a) The sensor response has been modelled versus the irradiance, by approximating it to the curves provided by the manufacturer (Figures 9 and 11), as seen in Equations (12) and (13):

(b) Three synthetic scenes have been generated from patterns Ip(x, y), numbered i ∈ {1, 2, 3}, as illustrated in Figure 14. Each value of a pattern represents the irradiance at the point (x, y). The patterns and the dynamic range of each scene are listed in Table 2.

The characteristic entropy value has been calculated for each one of the scenes (Table 3). The entropy value (defined as pattern entropy) has been calculated using Equation (11). Pattern data are expressed in double precision floating point format.

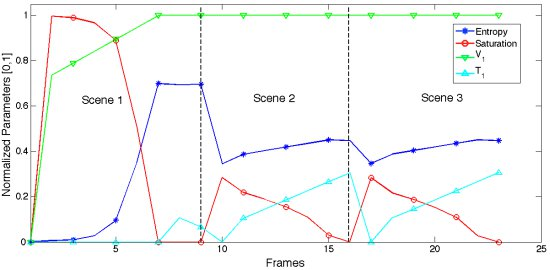

(c) The synthetic scenes have been consecutively processed with the camera model to evaluate the temporal performance of the system both in the start-up and when responding to the scene changes. Figure 15 shows the time evolution of the LinLog parameters produced by the saturation control and entropy maximization algorithms.

Using a sensor model together with synthetic scenes made it possible to define a metric that quantifies the retained entropy, which yields a reliable performance evaluation that is useful for further comparisons.

Figure 14 shows the synthetic scenes used to test the proposed method, together with images obtained when the scenes have been processed by the LinLog CMOS sensor’s model [Equations (12,13)]. Figure 15 shows how the control method adjusts LinLog parameters as different scenes are presented to the sensor.

Table 3 shows the numeric results of the experiment. Besides the response time, it shows the recovered entropy once the synthetic scenes are processed by: (1) a model of a typical linear CMOS sensor (DR of 60 dB), adjusted so that I0 corresponds to grey level 0, and (2) the proposed LinLog model with its parameters adjusted using the proposed control method.

The pattern entropy values will be reduced during the digital image generation process. Hence, the pattern entropy percentage retained in the acquired image of the scene provides an objective measurement of the goodness of the sensor’s parameters control process. The higher the entropy of the acquired image, the better the control process is, as there is more scene information. As we can see in Table 3, with the proposed method at least 67% of pattern entropy can be retained in the images.
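The retained-entropy percentage used as the quality metric is a simple ratio, sketched below. The function name is illustrative. The example values are those listed for scene 1 in Table 3.

```python
def retained_entropy_percent(image_entropy_value: float,
                             pattern_entropy_value: float) -> float:
    """Percentage of the pattern entropy that survives in the acquired image."""
    if pattern_entropy_value <= 0:
        raise ValueError("pattern entropy must be positive")
    return 100.0 * image_entropy_value / pattern_entropy_value


# Scene 1, fast control mode: retained entropy 5.32 out of a pattern entropy of 7.90.
scene1_fast = retained_entropy_percent(5.32, 7.90)
```

For scene 1 this gives roughly 67.3%, which agrees with the tabulated 67.35% up to the rounding of the table entries.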

Images (a) to (f) in Figure 16 show how the proposed method performs over a very high dynamic range scene (the ceiling of our lab, with a powerful lighting source).

Figure 17 shows how exposure time was controlled by the APID controller for a period of almost two hours between 4:30 and 6:10 pm on a windy day with clouds crossing the camera field of view (producing illumination changes), to acquire images from the scene shown in Figure 13. Sunset lasts from 5:40 until 6:10 pm.

7. Conclusions

This paper presents a reliable method for optimizing LinLog CMOS sensor response and hence improving images acquired from high dynamic range scenes. Adaptation to environment conditions is automatic and very fast.

The implementation has been divided into three algorithms. The first controls the exposure time by using an Adaptive PID (APID) controller; the second controls image saturation through appropriate compression of the response curve for bright scenes; and the third provides entropy maximization by slightly linearizing the response curve for high scene irradiance.

The simplicity of the control algorithms used in this method makes the computational cost of the processing needed to calculate the image parameters (histogram-based descriptors) negligible; therefore the computational cost of implementing the presented method practically coincides with the cost of calculating the histogram. As Table 3 shows, the control takes up less than eight frames with high quality images.

The method proposed in this paper has been implemented using NI LabVIEW [27], resulting in: (1) high-level hardware-independent development; (2) rapid prototyping due to the use of libraries (Real-Time, PID and FPGA libraries); and (3) rapid testing of the control application.

The hardware used to implement the system consisted of a Real-Time PowerPC Embedded Controller (cRIO-9022) and a reconfigurable chassis based on a Virtex-5 FPGA (cRIO-9114) from National Instruments. The chosen system permits deterministic control and real-time execution of applications. The control system and the camera can be easily connected thanks to the embedded PowerPC controller, which provides Gigabit Ethernet and RS232 ports.

Acknowledgments

The work submitted here was carried out as part of the projects EXPLORE (TIN2009-08572) and SiLASVer (TRACE PET 2008-0131) funded by the Spanish National R&D&I Plan. It has also received funding from the Government of the Region of Murcia (Séneca Foundation).

References and Notes

1. Reinhard, E; Ward, G; Pattanaik, S; Debevec, P. High Dynamic Range Imaging: Acquisition, Display, and Image-Based Lighting; Elsevier/Morgan Kaufmann: Amsterdam, The Netherlands, 2006; pp. 7–18.

2. Bandoh, Y; Qiu, G; Okuda, M; Daly, S; Aach, T; Au, OC. Recent Advances in High Dynamic Range Imaging Technology. Proceedings of the 17th IEEE International Conference on Image Processing, ICIP’10, Hong Kong, China, 26–29 September 2010; pp. 3125–3128.

3. Llorca, DF; Sánchez, S; Ocaña, M; Sotelo, MA. Vision-based traffic data collection sensor for automotive applications. Sensors 2010, 10, 860–875.

4. Foresti, GL; Micheloni, C; Piciarelli, C; Snidaro, L. Review: Visual sensor technology for advanced surveillance systems: Historical view, technological aspects and research activities in Italy. Sensors 2009, 9, 2252–2270.

5. Navarro, PJ; Iborra, A; Fernández, C; Sánchez, P; Suardíaz, J. A sensor system for detection of hull surface defects. Sensors 2010, 10, 7067–7081.

6. Battiato, S; Castorina, A; Mancuso, M. High dynamic range imaging for digital still camera: An overview. J. Electron. Imag 2003, 12, 459–469.

7. Brauers, J; Schulte, N; Bell, A; Aach, T. Multispectral High Dynamic Range Imaging; IS&T/SPIE Electronic Imaging: San Jose, CA, USA, 2008; pp. 680704:1–680704:12.

8. Hazelwood, M; Hutton, S; Weatherup, C. Smear Reduction in CCD Images. US Patent 7,808,534, 2010.

9. Burghartz, JN; Graf, H; Harendt, G; Klinger, W; Richter, H; Strobel, M. HDR CMOS Imagers and Their Applications. Proceedings of the 8th International Conference on Solid-State and Integrated Circuit Technology, ICSICT’06, Shanghai, China, 22–26 October 2006; pp. 528–531.

10. Nayar, SK; Mitsunaga, T. High Dynamic Range Imaging: Spatially Varying Pixel Exposures. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 13–15 June 2000; 1, pp. 472–479.

11. Bigas, M; Cabruja, E; Forest, J; Salvi, J. Review of CMOS image sensors. Microelectron. J 2006, 37, 433–451.

12. Dumont, GA; Huzmezan, M. Concepts, methods and techniques in adaptive control. Proceedings of the 2002 American Control Conference; 2002; pp. 1137–1150.

13. Bela, L. Instrument Engineers’ Handbook: Process Control; Chilton Book Company: Radnor, PA, USA, 1995; pp. 20–29.

14. Mohan, M; Sinha, A. Mathematical Model of the Simplest Fuzzy PID Controller with Asymmetric Fuzzy Sets. Proceedings of the 17th World Congress the International Federation of Automatic Control, Seoul, Korea, 6–11 July 2008; 7, pp. 15399–15404.

15. Chaínho, J; Pereira, P; Rafael, S; Pires, AJ. A Simple PID Controller with Adaptive Parameter in a dsPIC: A Case of Study. Proceedings of the 9th Spanish-Portuguese Congress on Electrical Engineering, Marbella, Spain, 30 June–2 July 2005.

16. Hang, CC; Astrom, JK; Wo, WK. Refinements of the Ziegler-Nichols tuning formula. IEEE Proc. Control Theory Appl 1991, 138, 111–118.

17. Navid, N; Roberts, J. Automatic Camera Exposure Control. Proceedings of the 2007 Australasian Conference on Robotics & Automation, Brisbane, Australia, 10–12 December 2007.

18. Neves, JA; Cunha, B; Pinho, A; Pinheiro, I. Autonomous Configuration of Parameters in Robotic Digital Cameras. Proceedings of the 4th Iberian Conference on Pattern Recognition and Image Analysis, IbPRIA’09, Póvoa de Varzim, Portugal, 10–12 June 2009.

19. Nilsson, M; Weerasinghe, C; Lichman, S; Shi, Y; Kharitonenko, I. Design and Implementation of a CMOS Sensor Based Video Camera Incorporating a Combined AWB/AEC Module. Proceedings of 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, (ICASSP '03), Hong Kong, China, 6–10 April 2003; 2, pp. 477–480.

20. Tsai, DY; Lee, Y; Matsuyama, E. Information entropy measure for evaluation of image quality. J Digital Imaging 2008, 21, 338–347.

21. Shannon, CE; Weaver, W. The Mathematical Theory of Communication; University of Illinois Press: Urbana, IL, USA, 1949.

22. Gray, RM. Entropy and Information Theory, 2nd ed; Springer-Verlag Inc: New York, NY, USA, 2010; pp. 17–44.

23. Hendrix, EMT; Toth, BG. Introduction to Nonlinear and Global Optimization; Springer: Cambridge, UK, 2010.

24. Moneta, CA; de Natale, FGB; Vernazza, G. Adaptive Control in Visual Sensing. Proceedings of the IMACS International Symposium on Signal Processing, Robotics, and Neural Networks, Lille, France, 25–27 April 1994.

25. Malis, E. Improving Vision-Based Control Using Efficient Second-Order Minimization Techniques. Proceedings of the IEEE International Conference on Robotics and Automation (ICRA’04), New Orleans, LA, USA, 26 April–1 May 2004.

26. Kabus, S; Netsch, T; Fischer, B; Modersitzki, J. B-spline registration of 3D images with Levenberg-Marquardt optimization. Proc. SPIE 2004, 5370, 304–313.

27. LabVIEW (Laboratory Virtual Instrumentation Engineering Workbench). National Instruments Corporation: Austin, TX, USA. Available online: http://www.ni.com/labview/ (accessed on 10 August 2011).

Table 1. Time response, overshoot and oscillation of the controller responses shown in Figure 6.

| Controller | Incremental | Incremental | Incremental | PI | PI | PI | APID | APID | APID |
|---|---|---|---|---|---|---|---|---|---|
| ΔG(t) | 0⇨2 | 2⇨1 | 1⇨4 | 0⇨2 | 2⇨1 | 1⇨4 | 0⇨2 | 2⇨1 | 1⇨4 |
| Settling time | -- | 13 | -- | 5 | 8 | 12 | 5 | 6 | 4 |
| Overshoot | 4 | 0 | 4 | 0 | 0 | 24 | 0 | 0 | 0 |
| Stationary oscillation | 4 | 0 | 16 | 0 | 0 | 0 | 0 | 0 | 0 |

Table 2. Synthetic scene patterns and their dynamic range.

| Scene | Pattern, Ip(x,y) | DR (dB) |
|---|---|---|
| 1 | 10⁵ POS(cos(x²)+cos(y²)) | 104 |
| 2 | 5·10⁵ POS(cos(x³+y³)) | 116 |
| 3 | 5·10⁵ POS(sin(x²+y²)) | 116 |

Table 3. Response time and retained entropy for the LinLog model with the proposed control method, in fast (F) and precise (P) control modes, and for the linear sensor model.

| Scene | Pattern entropy | LinLog time, F (frames) | LinLog time, P (frames) | LinLog retained entropy, F | LinLog retained entropy, P | LinLog retained entropy (%), F | LinLog retained entropy (%), P | Linear retained entropy | Linear retained entropy (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 7.90 | 8 | 8 | 5.32 | 5.33 | 67.35 | 67.46 | 0.08 | 1.00 |
| 2 | 4.86 | 6 | 27 | 3.42 | 4.38 | 70.42 | 71.57 | 1.01 | 20.79 |
| 3 | 4.58 | 6 | 27 | 3.42 | 3.48 | 70.50 | 71.62 | 1.01 | 20.77 |

© 2011 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Martínez-Sánchez, A.; Fernández, C.; Navarro, P.J.; Iborra, A. A Novel Method to Increase LinLog CMOS Sensors’ Performance in High Dynamic Range Scenarios. Sensors 2011, 11, 8412-8429. https://doi.org/10.3390/s110908412