High Dynamics and Precision Optical Measurement Using a Position Sensitive Detector (PSD) in Reflection-Mode: Application to 2D Object Tracking over a Smart Surface

Abstract

: When related to a single and good contrast object or a laser spot, position sensing, or sensitive, detectors (PSDs) have a series of advantages over the classical camera sensors, including a good positioning accuracy for a fast response time and very simple signal conditioning circuits. To test the performance of this kind of sensor for microrobotics, we have made a comparative analysis between a precise but slow video camera and a custom-made fast PSD system applied to the tracking of a diffuse-reflectivity object transported by a pneumatic microconveyor called Smart-Surface. Until now, the fast system dynamics prevented the full control of the smart surface by visual servoing, unless using a very expensive high frame rate camera. We have built and tested a custom and low cost PSD-based embedded circuit, optically connected with a camera to a single objective by means of a beam splitter. A stroboscopic light source enhanced the resolution. The obtained results showed a good linearity and a fast (over 500 frames per second) response time which will enable future closed-loop control by using PSD.1. Introduction

The majority of large and micro scale robotic applications involve high dynamics trajectory control. Rapid sensors for objects positioning are thus required, also combining high accuracy and repeatability. These features are required to attain a precise loop control. The continuous improvement of robotic and micro-robotic applications has been related to the design of optical sensors featuring ever increasing performance.

In the last twenty years the video camera and its related software for image processing became a classical sensorial system for robotics. The visual feedback for robot control is commonly termed as Visual Servoing (VS). The early applications of VS were simple movements and pick-and-place tasks, whereas today VS refers to advanced real-time object manipulation, substantially increasing the flexibility of automation processes [1]. VS represents the result of confluence between important technical areas such as image processing, kinematics and dynamics, theory of control, and real-time computational operations [2].

In VS, the processing delay time needed to extract visual information is the major problem especially for fast dynamic systems, a common case in micro-robotics. Moreover, if several video cameras are required, the computation time required to solve the specific algorithms, increases accordingly. This problem imposes the dynamic limits and the high processing unit costs of most VS applications. According to [1], the VS systems are divided into:

position-based, these systems retrieve the three dimensional information about the scene where a known camera model is used to estimate the position and the orientation of the target with respect to the camera coordinate system;

image-based, 2D image measurements are used directly to estimate the desired movement of the robot;

“2½D” represents a combination of the previous two categories.

In [3] a typical vision-guided robot application, using a video camera integrated into a robot control system, and a simulation platform developed under the Matlab™ environment is presented. Two configurations were described. In the first case the video camera for image capture was mounted on the robot end-effector, in a so-called “eye-in-hand” configuration. In the second case, the camera is fixed at a certain place, called “eye-to-hand” configuration. The system was implemented as a simulator before programming real time vision-guided robot applications, being typical for the deployment of specific algorithms.

A micromanipulation robotic system which operates with micro size objects, presented in [4], integrates a VS-based motion control. The robotic system is composed of two arms equipped with grippers. The VS is of image-based type, and is composed of two orthogonal video cameras, one of them with vertical axis orientation and the second one being horizontal. The system can identify, recognize, track and control moving targets such as micro size objects manipulated on the stage.

The VS systems require complex and top performance hardware and software elements, whose costs are accordingly high. For reasons of simplicity and cost-effectiveness, we have studied and proposed to implement a single object motion tracking system for robotic and micro-robotic applications, industrial or scientific, using a fast and very low cost sensor. This sensor is called a position sensitive (or sensing) detector (PSD) which consists of a special monolithic PIN photodiode with several electrodes in order to achieve detection in 1D or 2D. Compared to discrete element detectors, PSDs have many advantages including high position resolution, fast response time and very simple signal conditioning circuits [5].

Regarding the existing PSD applications, they are quite varied, for instance aeronautics, civil engineering, surgery, robotics, etc., all using light sources (e.g., laser, led) projected by lenses or mirrors over the 1D or 2D surface of the PSD. For instance, an example of an application is related to NASA spacecraft missions (e.g., spacecraft docking) [6]. An important vision-based navigation sensor system (VISNAV) was implemented with a PSD photo-detector in the focal plane of an optical system pointing to a set of light sources or beacons, the line of sight vector between spacecrafts being determined under high-precision relative position conditions.

The high rate of PSD sensors inspired [7] to implement an optical complex system for the x-y detection of elementary particles within an original nuclear microscope (Ion Electron Emission Microscope, IEEM) developed by the SIRAD group at the Legnaro National Laboratory, Padova, Italy. The conclusions of [7] demonstrated the practical limits of PSD versus CCD sensors, as both useful and spurious signals were detected by the sensitive area of PSD and the calculation of the tracked particles versus the spurious ones was an impossible task.

So far, the robotic applications have usually been designed for running repetitive and tedious tasks in structured environments. However, there exist some complex industrial operations (e.g., grinding, deburring, polishing, manufacturing inspection) that involve the execution of robotic tasks in unstructured frameworks where it is essential to execute unknown trajectories [8]. The specific sensorial systems in these cases are typically cameras or tactile sensors. The PSD is an alternate sensor which offers the possibility to obtain the contours of unknown objects [8]. The authors implemented a control loop in a SCARA experimental robot using a PSD sensor for following a particular shape. A recent paper [9] reported a kinematic Kalman filter sensor fusion of a PSD with an inertial measurement unit. The technique was applied to get a very accurate velocity and position sensing of an industrial robotic arm end-effector.

Another application that integrated PSD was in civil structures engineering for optical recording of the relative-story displacement during earthquakes followed by the remnant deformations measurement of the building structure [10]. The sensor unit was composed of three PSDs and lenses capable of measuring the relative-story displacement successfully.

A major accuracy improvement in microsurgery refers to performing the active tremor compensation within existing robotic hands systems. The authors of [11] developed and tested such a 3D system under experimental conditions. The choice of sensorial elements again balanced the CCD and PSD sensors. After some detailed comparative analysis, the authors of [11] considered the PSD as a suitable sensorial element, offering high accuracy and high frequency response with low cost and complexity.

We conclude that the potential exists for PSDs as cost-effective sensors generating continuous and lag-free data which could be fed into PID controllers with a minimal processing in many fields of activity. The simple conditioning circuits and algorithms can be embedded into microcontroller-based systems, a remarkable technical feature.

Obviously, PSD is not intended to replace all CCD cameras in VS or other technical fields, but may find interesting alternate applications such as the one described in this paper. To test the performance of this kind of sensor for microrobotics, we’ve made a comparative analysis between a precise but slow video camera and a custom-made fast PSD system applied to the tracking of a diffuse-reflectivity object transported by a microconveyor called Smart-Surface. The Smart-Surface micro-robotic application which has been designed by the team of Yahiaoui et al.[12,13] at the MN2S department of the FEMTO-ST Institute, France, is a micro-manipulation unit for transporting various small planar objects using air levitation. The principle of objects conveyance supposes cardinally oriented inclined air jets. By combining these orthogonal motion vectors, different planar trajectories may be obtained. Specific MEMS technology was used to fabricate the array of nozzles used for motion vector generation.

The sensorial system used in [13] was a video camera capable of up to 60 frames per second (fps). Provided the fast Smart-Surface dynamics like travel times from border to border was under 100 ms as well as the Firewire 1394 camera bus delay of 15 ms, it was considered as appropriate to upgrade the existing system with a fast PSD by means of an optical beam splitter. The designed PSD system and the first calibration results are presented in the paper. The tracking of a trajectory is depicted, showing positive results.

The paper is structured into five sections. After this first introductory part, Section 2 describes the PSD device and the specific signals conditioning. Section 3 presents the PSD calibration and tracking algorithm based on the four specific PSD signals in referential conditions, and the tracking mathematical calculus. Section 4, called Experiments with a Smart-Surface, describes the Smart-Surface structure, the experimental setup and the data processing results along with a comparative discussion. The conclusions part finalizes the paper by pointing out some future work directions like the closed-loop control.

2. PSD Device and Signal Conditioning

The PSD is a silicon optical device which has the capacity to convert an incident light spot position into a series of analogue signals. The property to generate continuous data represents the basic feature and advantage of a PSD. According to surface structure, the PSD detectors may be divided into two classes: segmented or isotropic. The segmented detectors are made of independent elements and require broader light spots. For instance four-quadrant photodiodes are commonly used in atomic force microscopy position control feedback. The isotropic PSDs consist of a single active element and are based on the lateral photovoltaic effect. Depending on the patterned electrodes, the isotropic PSDs are divided into two sub-classes: linear and two-dimensional. The latter category is manufactured either with four pins (two anodes and two cathodes) or with five pins (one cathode and four anodes).The isotropic PSDs show a very good continuity (no dead spaces) and are robust with respect to the spot size and the image focusing. All types of PSDs show very fast response times, in the microsecond range. Other important features are their good resolution and linearity over a wide range of light intensities.

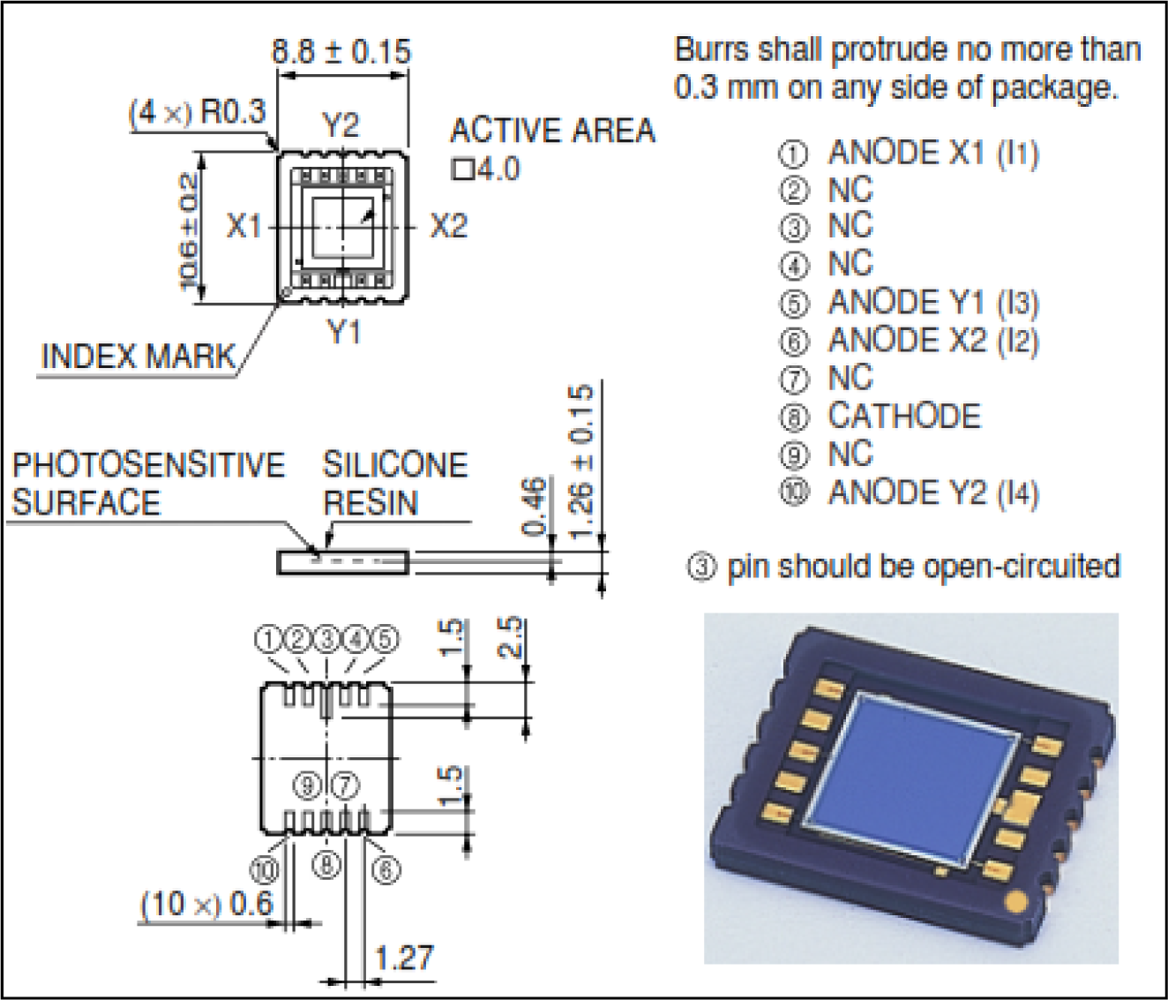

In Figure 1 the constructive details regarding distinct sensing electrodes are presented. The output analogue signals depend on the distance between the corners and the light spot position (more exactly the position refers to the centre of the incident spot light). The PSD primary signal processing involves signal amplification, analog-to-digital conversion and calculation using standard formulae like the ones described in Equations (1–3).

We used a commercial Hamamatsu PSD unit model no. S5990-01 featuring a two-dimensional square 4 × 4 mm2 sensing area, as depicted in Figure 1. The manufacturer formula for the x-y coordinates of a laser or LED spot focused on the surface is:

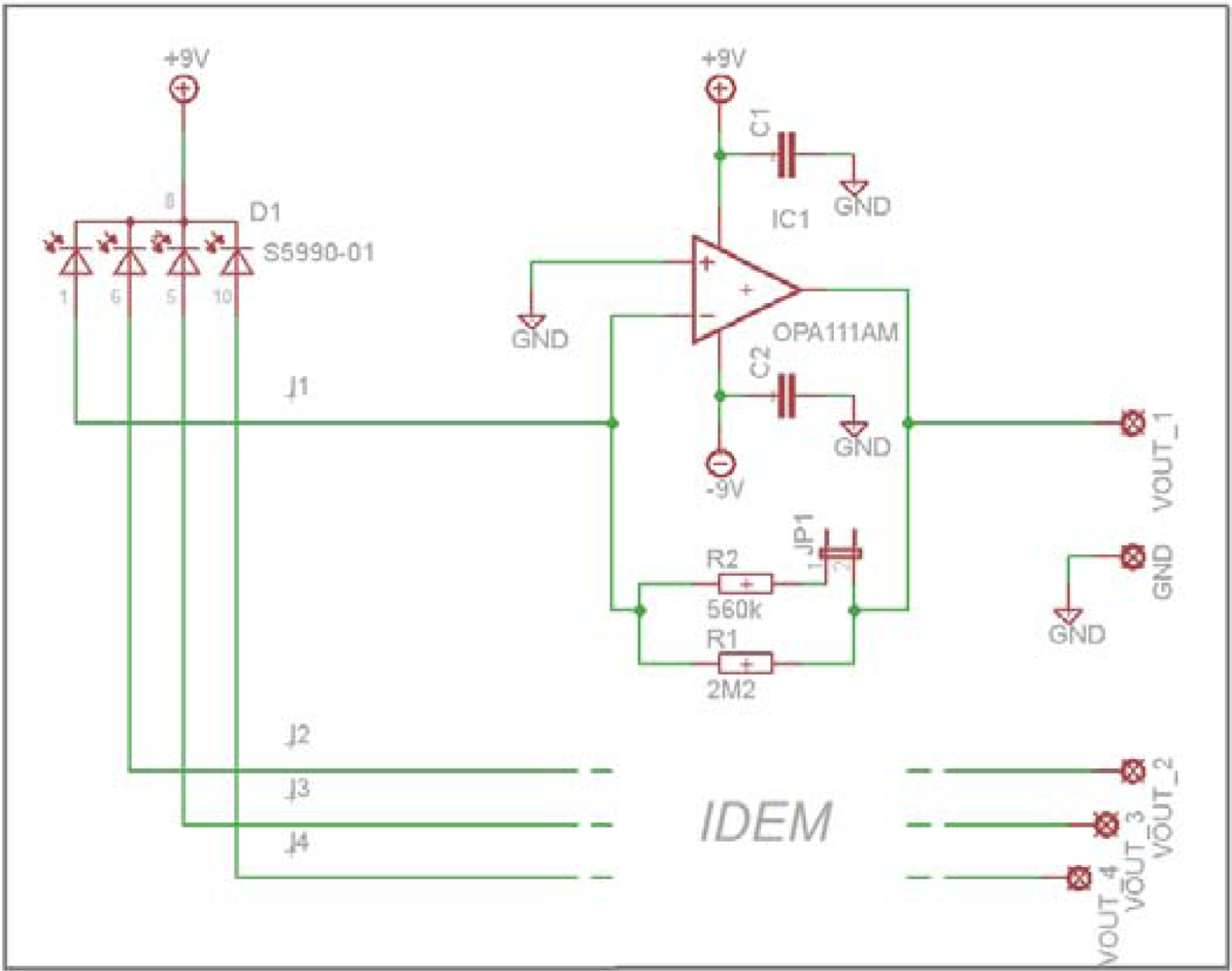

An electronic circuit with four transimpedance amplifiers has been designed (Figure 2) to convert and amplify the I1 to I4 signals. It is based on a single-stage schematic featured with low noise and low bias currents op-amps (type OPA111). The output signals are:

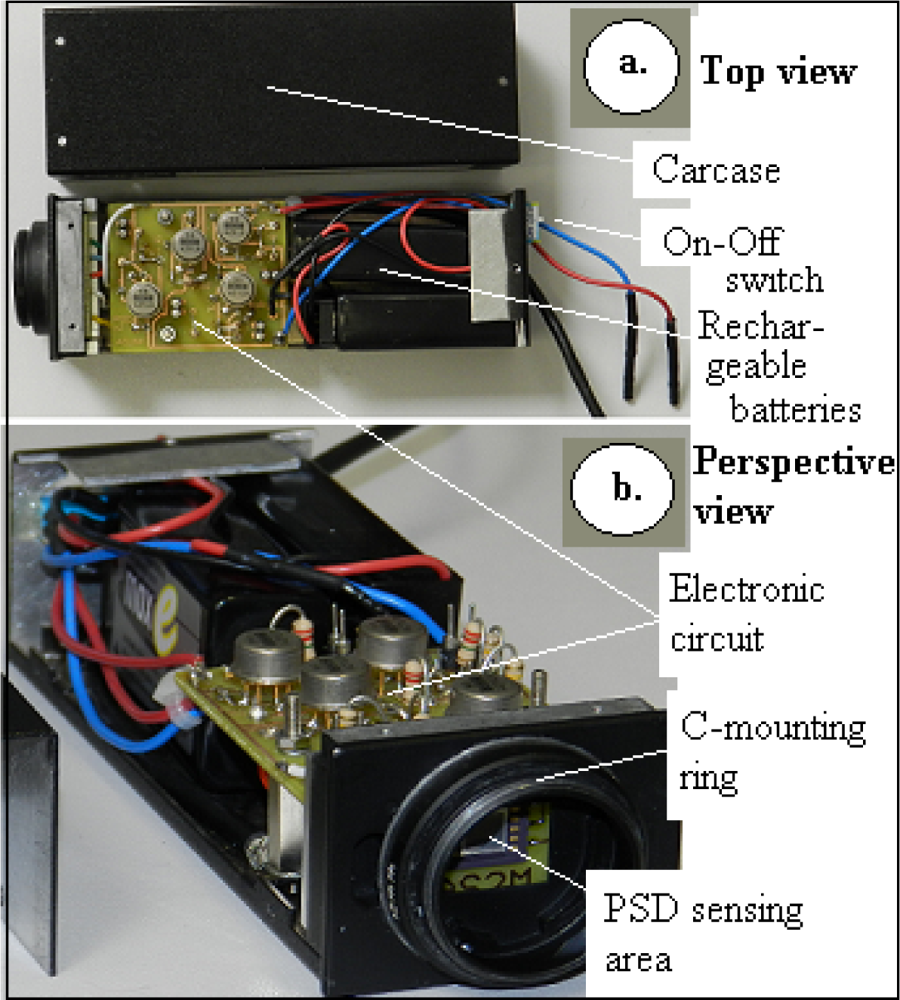

The complete and custom-manufactured PSD circuit can be seen in Figure 3. The system includes the PSD sensor (front view), the amplifier circuit, four jumpers for the High/Low gain selection, the batteries and an On/Off switch. The C mounting ring insures the device adaptation to the optical axis. The device electrical source was composed of two embedded 9V DC rechargeable batteries providing over 10 hours autonomy. They have been embedded in order to get the best achievable EMF noise protection—a critical aspect for such low amplitude signals.

3. PSD Calibration Algorithm

Unlike the typical applications where a light spot is focused on the PSD surface, the actual system was designed to work in the diffuse reflectivity mode, where the object is projected over the PSD surface like in the schematic shown in Figure 4. Under epi-illumination, the white matt object will appear very bright against the highly reflective silicon Smart-Surface which shall appear dark. Despite that contrast, the actual amount of incident light returned to the PSD is very small, with the resulting currents ranging around 0.1 μA, depending on the object reflectivity and on the lens f-ratio. Therefore, we chose a stroboscopic lighting based on a monostable circuit, which, as will be shown, successfully improves the signal-to noise ratio. The monostable circuit has been designed with the classic NE555, an integrated circuit suitable for a panel of timer applications and basically operating by charging and discharging an external RC network.

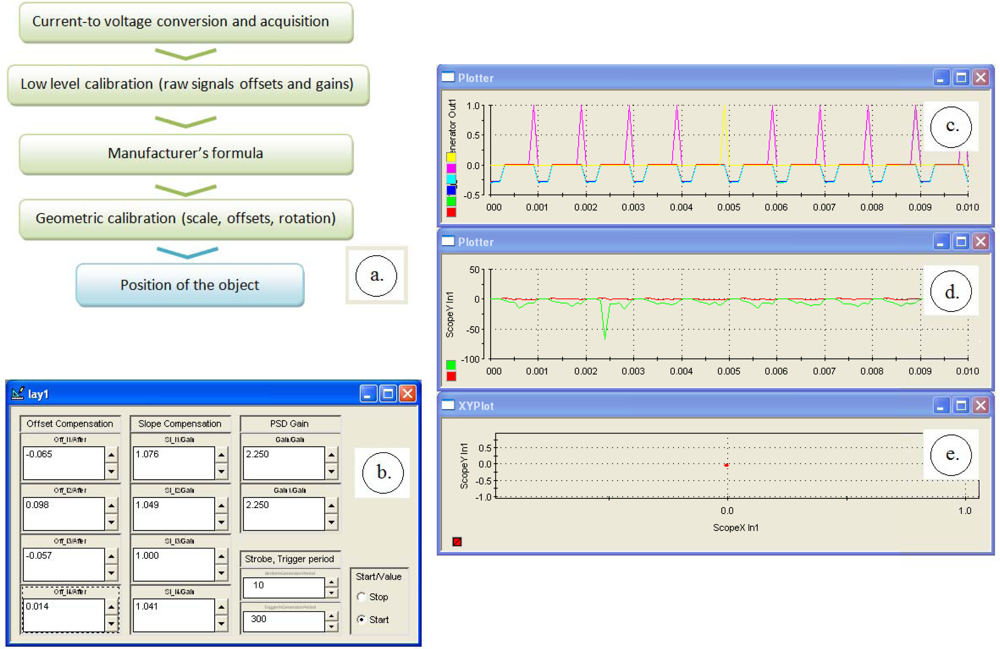

There are four steps to get the calibrated x-y position (Figure 5(a)): the initial current-to-voltage and analog-to-digital conversion, a low-level calibration, the conversion formula and the secondary calibration. The low level PSD calibration supposes equalizing the VOUT_1 to VOUT_4 signals. That is to be performed over a flat gray surface with the stroboscopic lighting on and by adjusting the offset and slope parameters. Figure 5(b) shows the dedicated interface which allowed the manually equalizing of the four raw signals.

After the raw signals calibration, the device is ready to acquire the x-y data by applying the formulas (1–2). This x-y data are however not reliable yet because they require further calibration by means of a reference camera or by positioning the object to a set of precisely known places. We chose the first solution, the reference position being provided by a camera connected to the same optical lens by means of a beamsplitter. The x-y signals calibration involve adjusting a gain parameter and some offset and rotation parameters between the two coordinate axis systems of the PSD and of the camera. The formula which providing the final, corrected xC-yC coordinates, is:

The formulas (1–2) and (4) have been implemented in a Simulink model as shown in Figure 6 and deployed on a dSPACE data acquisition and control board. The PSD instantaneous coordinates xC-yC are thus computed in real time, allowing fast object tracking. As said, the system uses stroboscopic lighting which is generated by the same dSPACE system. Finally, the external camera is also synchronized with the algorithm, by connecting a digital port of the dSPACE machine to the external trigger input of the camera. The results are presented in the next section.

4. Experiments with a Smart-Surface

The Smart-Surface is a pneumatic device conceived for small objects manipulation by using the air levitation. The device array is a 9 mm × 9 mm square and is composed by 8 × 8 pneumatic micro-conveyors. A micro-conveyor has four nozzles able to generate inclined air-jets in the four cardinal directions. An object of small weight and small dimensions, can be conveyed to a desired position with intermittent air-flow pulses of a pre-adjustable pressure up to 20 kPa [13]. The used experimental object is a disk of 3.5 millimetres diameter and 12 milligrams weight. These physical features were chosen from dynamic considerations.

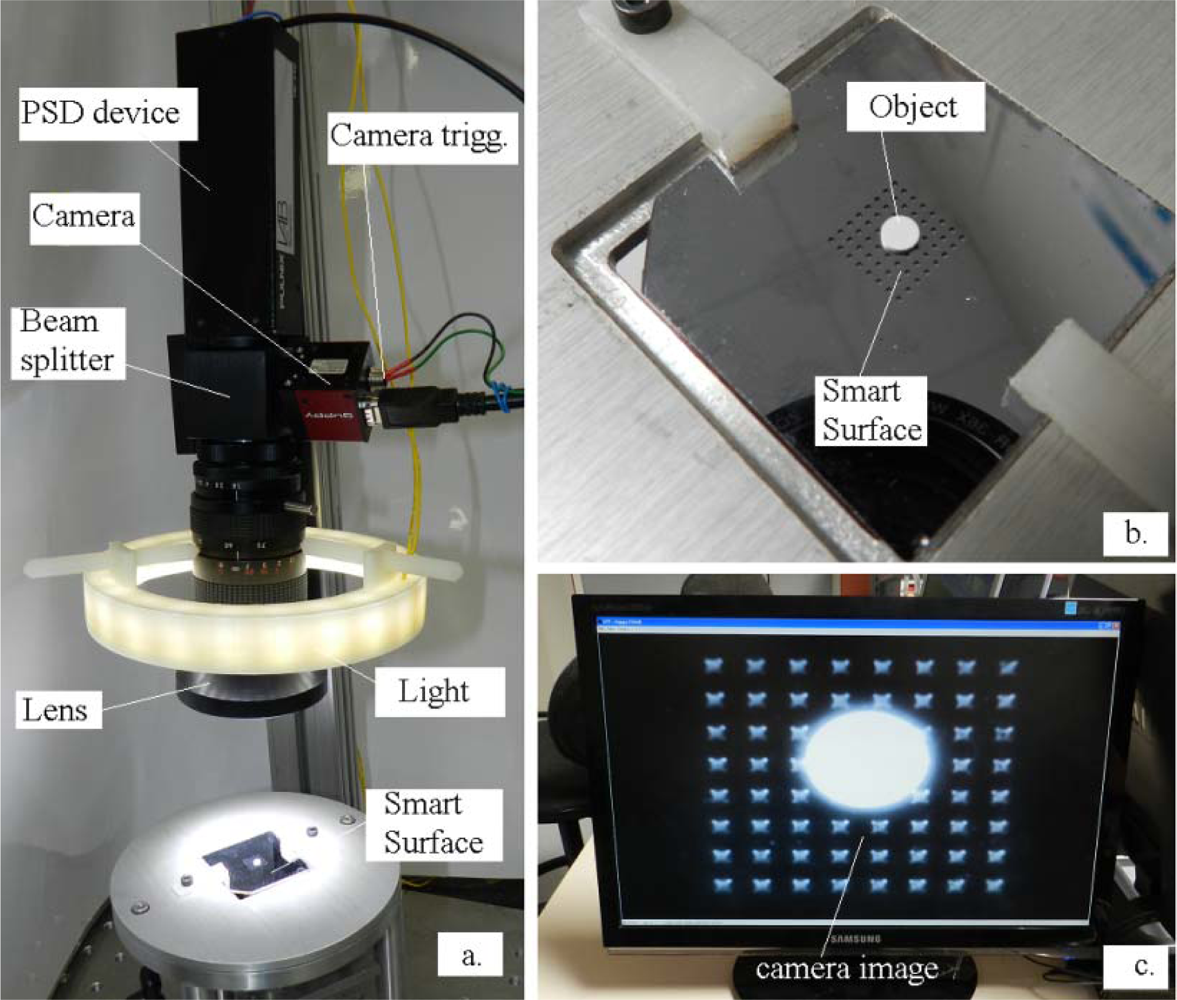

Figure 7(a) shows the main elements of the application. The camera model is an IEEE 1394 bus AVT Guppy model equipped with a 1/2” (6.4 × 4.8 mm2) monochrome sensor. The focusable TV lens specifications are: 12.5–75 mm, f/1.8, C-mount. The equivalent system focal length is however altered because of the added beamsplitter cube of 38 mm. The Smart-Surface and its corresponding object are presented in Figure 7(b). The scene is epi-illuminated with a number of 24 high efficiency 5 mm white LEDs positioned in three parallel rows. The camera source image through the optical system is shown in Figure 7(c). This is the acquired image which is processed to get reference coordinates.

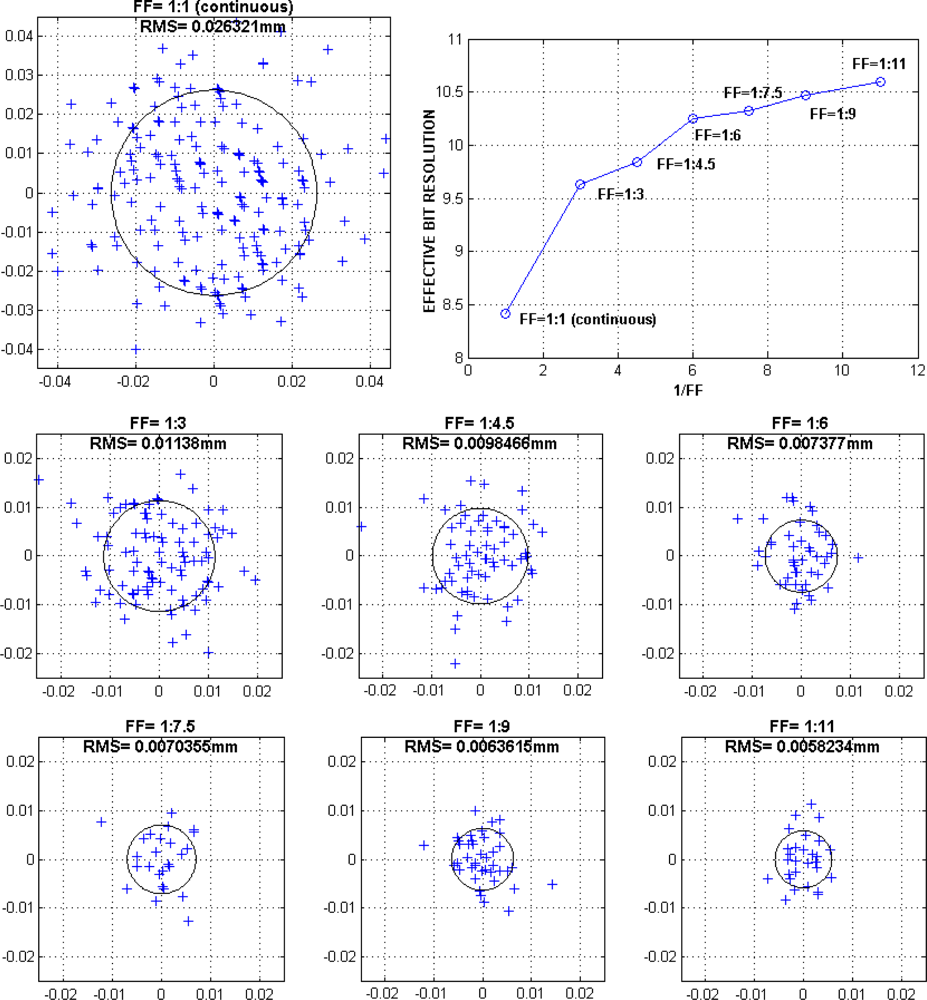

The Smart-Surface is lighted by strobed pulses; their higher intensity leading to better signal-to-noise ratios. To determine the light pulses optimum fill factor (FF), or duty cycle, which is the ratio of the pulse duration to the total period, it is necessary to evaluate the dispersion of the PSD measurement points with the variation of this parameter. Figure 8 shows the numerical dispersion results in terms of root mean square (RMS) values corresponding to DC (direct current) and to a series of fill factors ranging from 1:3 to 1:11. During experiments the average (light and dark) supply current was kept to the nominal value of 0.27 A. The net improvement in terms of effective bits resolution is straightforward. We may observe that fill factor FF = 1:11 provide the best results with a corresponding RMS value of 5.8 μm. However, we may notice a saturation above FF = 1:11. Better effective resolutions could be probably achieved only by upgrading the lighting ring intensity.

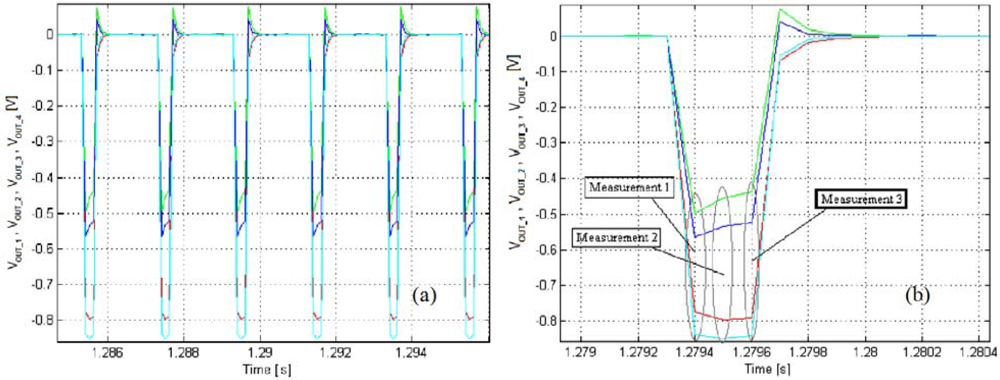

For the following experiments we selected a lighting strobe frequency of 500 Hz and a fill factor FF = 1:6. The slower camera trigger has been configured for a frame rate of 33.3 fps, which corresponds to an image acquisition every 15 light shots. The dSPACE Simulink has been configured with a sample period of 10−4 s, which means a number of three data samples for every 0.33 ms light impulse. Figure 9 shows the typical Vout_1...4 input waveforms. As may be observed from the zoomed Figure 9(b), the curves are slightly transient; therefore, only the 3rd measurement point (in bold case in the figure) is selected for the x-y conversion, the other points being ignored by the tracking algorithm.

As stated, the camera acquisition period is of 30 ms, precisely triggered each 15th light pulse. The frames are recorded individually and processed off-line for evaluation under Matlab™. The image processing consists of two steps. First, the acquired image is thresholded to get a binary image. The value of the threshold is the median value between the intensity of the background and the intensity of the object. Then, the object is tracked using a binary-large object (BLOB) extraction approach. The position of the center of the object is computed as the centroid of the BLOB to get accurate sub-pixel position. The obtained frames are displayed like in Figure 10.

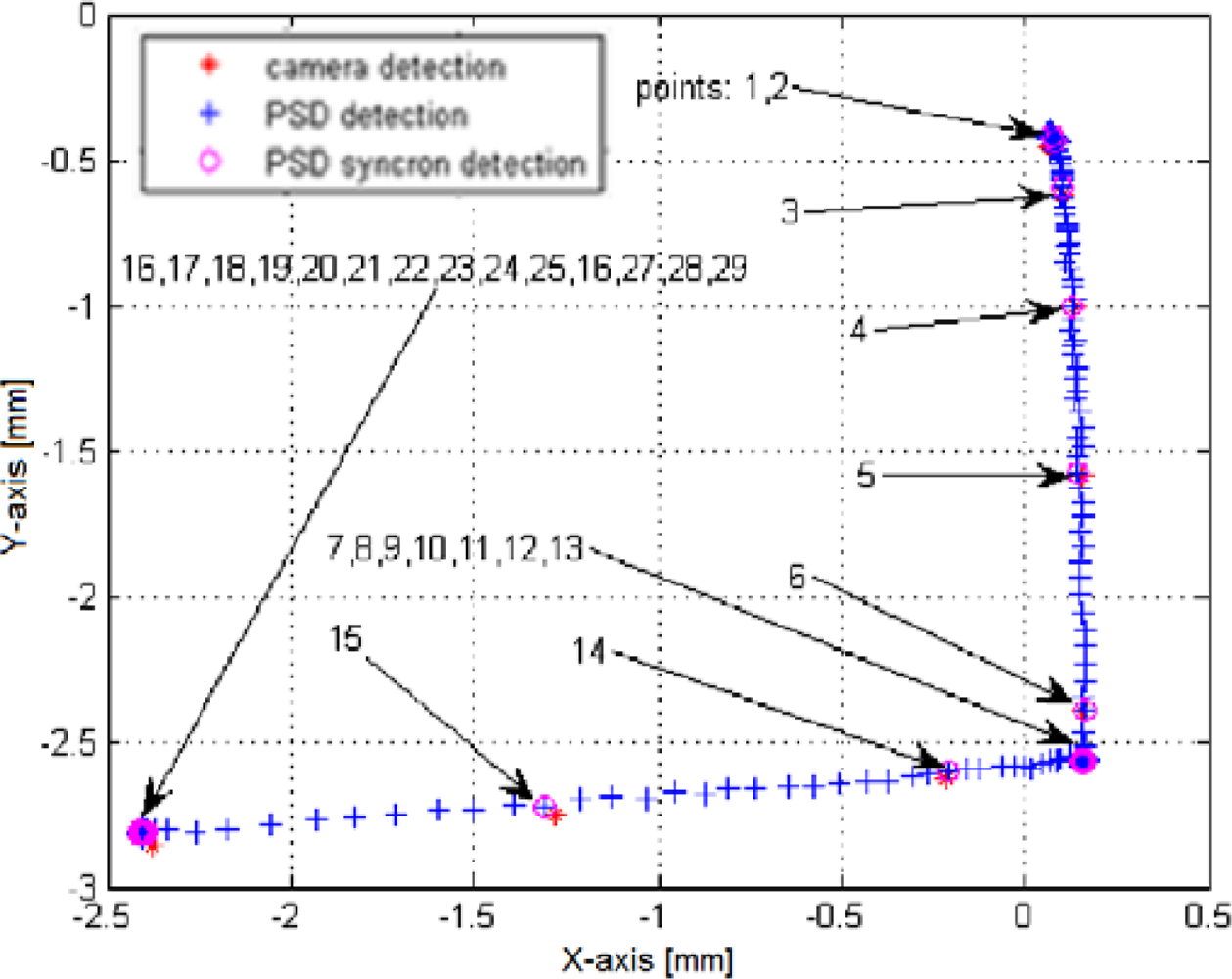

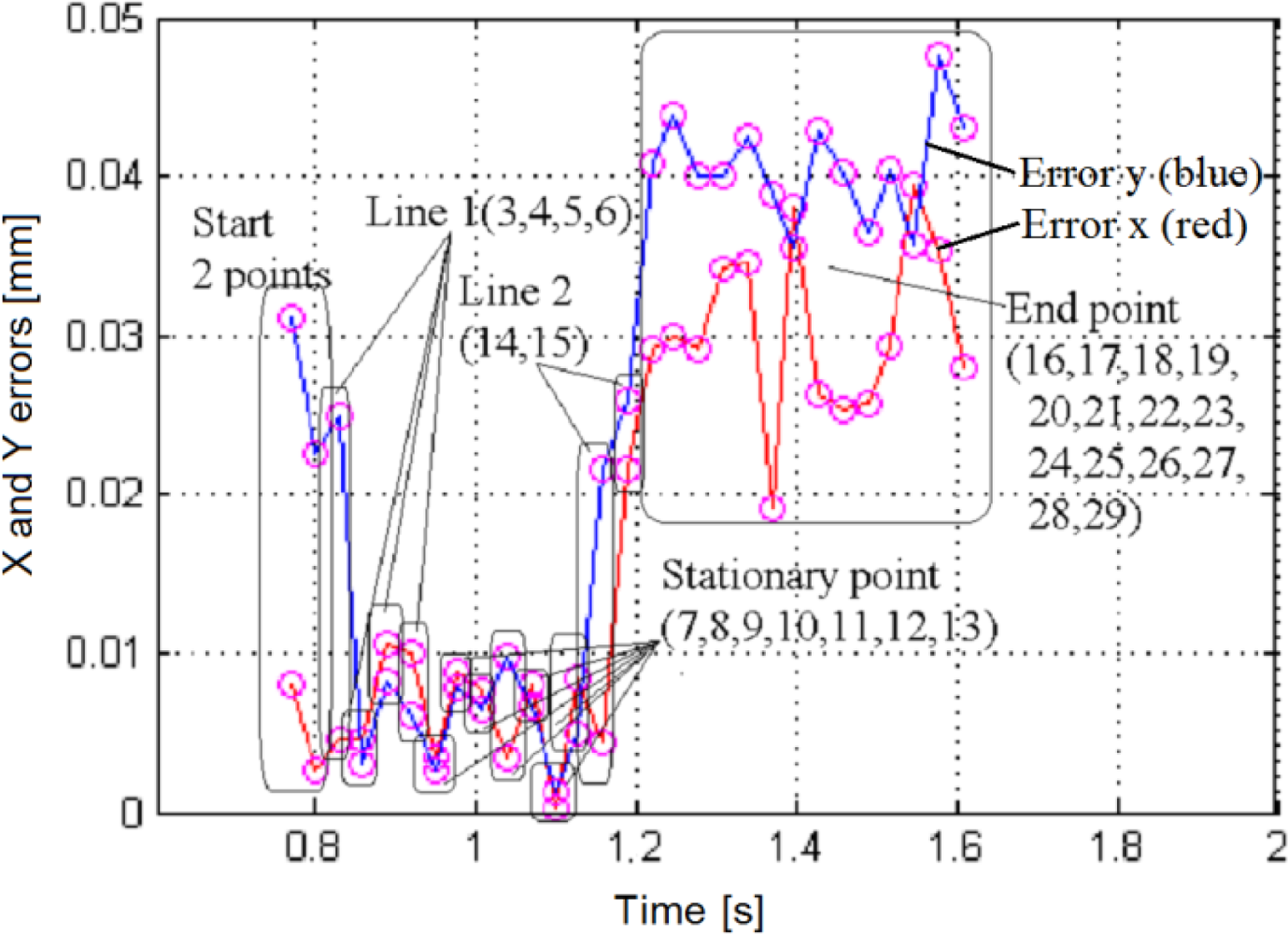

Figure 11 displays the superposed datasets issued from both the PSD and the camera. The imposed trajectory is of an inverted ”L” shape, brief air bursts being applied in –Y then –X direction. The object acceleration is slower than its deceleration. A linearity deviation of ∼5° can be noticed, which is somehow fair for this type of pneumatic actuation [13]. The superposition of the two trajectories shows a very good agreement. The x and y errors are inferior to 50 μm, as shown in Figure 12. This corresponds to a relative error of less than 1.5% over the entire measurement range, making the method highly suitable for further closed-loop applications.

5. Conclusions and Perspectives

Position sensitive detectors (PSDs) are analogue optical sensors featuring fast response times and simple electronic circuitry. These devices have been used in a various applications ranging from space navigation to surgery, all involving a laser or other direct light spots projected over the PSD surface. The proposed approach consisted of a rather different and indirect mode, by focusing the reflected light issued from a diffuse-reflectivity object on a PSD, whose object coordinates could be thus evaluated.

The designed system is considered as a replacement for the expensive cameras tracking single objects of good contrast and fast dynamics. Some typical applications suitable for the designed system can be found in microrobotics. To test the performance of this kind of sensor for microrobotics, we have made a comparative analysis between a precise but slow video camera and a custom-made fast PSD system applied to the tracking of a diffuse-reflectivity object transported by a pneumatic microconveyor called Smart-Surface. The fast system dynamics prevented until now the full control of the smart surface by visual servoing, unless using a very expensive high frame rate camera.

The system consisted of a PSD plus its battery supply and low noise signal conditioning circuitry, all embedded in a single case provided with a universal C-mount adapter. An optical beamsplitter was mounted between the PSD and the focusable objective provided an additional optical port for a reference camera. Because of the small amount of effectively returned light, a stroboscopic lighting system has been designed in order to enhance the system resolution. The system was controlled and synchronized by a dSPACE real time acquisition and processing unit.

The tests were performed at a framerate of 500 fps for the PSD and confronted with a slower reference camera of 33.3 fps. The calibration procedure was linear, involving the adjustment of gain, slope and rotation angle. There has been found a good agreement between the PSD and the camera trajectories, the maximum relative error being less than 1.5% over the measurement range. Using strobed light, the detection resolution dropped to 6μm RMS.

The results demonstrate the suitability of the PSD system for the closed-loop control. Several perspectives arise. Provided sensing algorithm simplicity, the associated control could be deployed on the same basic microcontroller unit, thus resulting a completely-embedded system. The Hamamatsu S5990-01 response time is of 1 μs, faster frame rates are thus readily achievable. A better concentrated, higher intensity lighting source of 980 nm wavelength would further improve the signal-to-noise ratio. Several other enhancements could be performed such as an automatic gain control (AGC) for the input signals and a semi-automated, assisted x-y calibration procedure. By using a quadratic (or a higher order) polynomial calibration or a look-up table interpolation [9], the net accuracy is expected to increase by a factor of 10. Over that value several other limitations arise such as the lens distortion, the finite reference camera resolution (subpixel algorithms required), the electronic noise (better lighting and reflectivity, additional filters), the surface property (avoiding dust particle contamination of PSD and scene) etc.

Acknowledgments

These works were supported under the Romanian CNCS-UEFISCDI Project “Advanced devices for micro and nanoscale manipulation and characterization—ADMAN” (PN-II-RU-TE-2011-3-0299). These works were also partially supported by the NRA (French National Research Agency) project “Smart Blocks” (ANR-2011-BS03-005). We are thankful to Reda Yahiaoui, Jean-François Manceau and Rabah Zeggari for the use of their pneumatic conveyor. We also thank the anonymous reviewers for helpful comments.

References

- Kragic, D.; Christensen, H.I. Survey on Visual Servoing for Manipulation; Technical Report of the Centre for Autonomous Systems, Numerical Analysis and Computer Science: Stockholm, Sweden, 2002. [Google Scholar]

- Hutchinson, S.; Hager, G.D.; Corke, P.I. A tutorial on visual servo control. IEEE Trans. Robot. Autom 1996, 12, 651–670. [Google Scholar]

- Soares, L.R.; Victor, H.; Alcalde, C. A Laboratory Platform for Teaching Computer Vision Control of Robotic Systems. Proceedings of the 38th ASEE/IEEE Frontiers in Education Conference, Saratoga Springs, NY, USA, 22–25 October 2008; pp. S1B3–S1B8.

- Huang, X.; Zeng, X.; Wang, M. The Uncalibrated Microscope Visual Servoing for Micromanipulation Robotic System. In Visual Servoing; Fung, R.-F., Ed.; InTech: Shanghai, China; April; 2010; pp. 53–76. [Google Scholar]

- Hamamatsu PSD Manufacturer. Available online: http://jp.hamamatsu.com/products/sensor-ssd/pd123/index_en.html (accessed on 28 September 2012).

- Gunnam, K.K.; Hughes, D.C.; Junkins, J.L.; Kehtarnavaz, N. A vision-based DSP embedded navigation sensor. IEEE Sens. J 2002, 2, 428–442. [Google Scholar]

- Giubilato, M.P.; Mattiazzo, S. Novel Imaging Sensor for High Rate and High Resolution Applications. Proceedings of the SNIC Symposium, Stanford, CA, USA, 3–6 April 2006; pp. 1–6.

- Hernndez, J.S.; Tur, J.M.M. A 2D Unknown Contour Recovery Method Immune to System Non-linear Effects. Proceedings of the 2006 IEEE International Conference on Robotics and Biomimetics, Kunming, China, 17–20 December 2006; pp. 583–588.

- Wang, C.; Chen, W.; Tomizuka, M. Robot End-effector Sensing with Position Sensitive Detector and Inertial Sensors. Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), Saint Paul, MN, USA, 14–18 May 2012; pp. 5252–5257.

- Matsuya, I.; Katamura, R.; Sato, M.; Iba, M.; Kondo, H.; Kanekawa, K.; Takahashi, M.; Hatada, T.; Nitta, Y.; Tanii, T.; Shoji, S.; Nishitani, A. Measuring relative-story displacement and local inclination angle using multiple position-sensitive detectors. Sensors 2010, 10, 9687–9697. [Google Scholar]

- Ortiz, R.M.; Riviere, C. Tracking Rotation and Translation of Laser Microsurgical Instruments. Proceedings of the 25th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Cancun, Mexico, 17–21 September 2003; pp. 3411–3414.

- Zeggari, R.; Yahiaoui, R.; Malapert, J.; Manceau, J.-F. Design and fabrication of a new two-dimensional pneumatic micro-conveyor. Sens. Actuators A: Phys 2010, 164, 125–130. [Google Scholar]

- Yahiaoui, R.; Zeggari, R.; Malapert, J.; Manceau, J.-F. A MEMS-based pneumatic micro-convey or for planar micromanipulation. Mechatronics 2012, 22, 515–521. [Google Scholar]

© 2012 by the authors; licensee MDPI, Basel, Switzerland This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Ivan, I.A.; Ardeleanu, M.; Laurent, G.J. High Dynamics and Precision Optical Measurement Using a Position Sensitive Detector (PSD) in Reflection-Mode: Application to 2D Object Tracking over a Smart Surface. Sensors 2012, 12, 16771-16784. https://doi.org/10.3390/s121216771

Ivan IA, Ardeleanu M, Laurent GJ. High Dynamics and Precision Optical Measurement Using a Position Sensitive Detector (PSD) in Reflection-Mode: Application to 2D Object Tracking over a Smart Surface. Sensors. 2012; 12(12):16771-16784. https://doi.org/10.3390/s121216771

Chicago/Turabian StyleIvan, Ioan Alexandru, Mihai Ardeleanu, and Guillaume J. Laurent. 2012. "High Dynamics and Precision Optical Measurement Using a Position Sensitive Detector (PSD) in Reflection-Mode: Application to 2D Object Tracking over a Smart Surface" Sensors 12, no. 12: 16771-16784. https://doi.org/10.3390/s121216771