Calibration between Color Camera and 3D LIDAR Instruments with a Polygonal Planar Board

Abstract

: Calibration between color camera and 3D Light Detection And Ranging (LIDAR) equipment is an essential process for data fusion. The goal of this paper is to improve the calibration accuracy between a camera and a 3D LIDAR. In particular, we are interested in calibrating a low resolution 3D LIDAR with a relatively small number of vertical sensors. Our goal is achieved by employing a new methodology for the calibration board, which exploits 2D-3D correspondences. The 3D corresponding points are estimated from the scanned laser points on the polygonal planar board with adjacent sides. Since the lengths of adjacent sides are known, we can estimate the vertices of the board as a meeting point of two projected sides of the polygonal board. The estimated vertices from the range data and those detected from the color image serve as the corresponding points for the calibration. Experiments using a low-resolution LIDAR with 32 sensors show robust results.1. Introduction

Recently, multi-sensors have been frequently used in the field of robot vision. For instance, a ranging sensor such as high-speed 3D LIDAR is used in conjunction with a color camera for various robot navigation tasks. The 3D LIDAR sensor is capable of providing 3D position and depth information about objects, whereas the color camera captures their 2D color features. Therefore, by providing the 2D image data with the 3D positional information, one can visualize the objects with a more realistic view. However, as a prerequisite, we need to know their relative positions and orientations by calibrating both sensors of the LIDAR and the color camera.

A checkerboard plane has been used to calibrate between a camera and a LIDAR. The calibration method using a checkerboard usually involves a two-step process [1], namely intrinsic and extrinsic calibrations. Therefore, two measurements from the checkerboard are required for the two-step calibration, which may cause two sources of error [2,3]. Also, we often observe a systematic range-reflectivity-bias in the LIDAR on the checkerboard as seen in Figure 1. The measurement variations on the checkerboard will cause measurements errors and affect the final calibration. Thus, the calibration targets with different patterns and colors may produce slightly different calibration results. To reduce the impact of the reflectivity bias, we use a calibration board with a monochromatic color (e.g., a white planar board). In addition, we adopt a board with a polygonal shape such as triangle or diamond to improve the calibration accuracy. That is, the polygonal board enables us to estimate the vertices (i.e., corners) from the scanned range data. Then, the estimated vertices serve as reference points between the color image and the 3D scanned data for the calibration. The vertices of the polygonal planar board in the 2D image are detected by a corner detection method and their corresponding points in the 3D LIDAR are estimated from the scanned 3D data.

In this paper we are interested in finding a projection matrix between the camera and the LIDAR directly without needing to perform a separate two-step (i.e., intrinsic and extrinsic) parameter estimation. The direct estimation needs to identify corresponding points between the 2D image and 3D LIDAR to solve the equations for the projection matrix. However, it is hard to expect the LIDAR to scan a specific point such as a vertex of a calibration board, while its corresponding pixel point in the 2D image can be readily detected. For example, a less expensive 3D LIDAR such as Velodyne HDL-32E has a lower vertical resolution compared with a more expensive scanner with 64 sensors such as the Velodyne HDL-64E, making it almost impossible for the 3D LIDAR to scan specific points (e.g., vertices) on the board. With scanners of low vertical resolution, our approach for the direct calibration is to estimate specific unscanned points on the board using the scanned data. That is, given scanned data on the board, we estimate specific 3D positions on the board such as the corners (vertices). To this end, we use a polygonal board, where the vertices of the board are the meeting points between the two adjacent sides. Therefore, to localize the vertices on the planar board we first need to estimate the equations for the projected side lines of the board. As shown in Figure 2, the slope of the projected side can be estimated from the scanned points near the border. Then, with the information of the calculated slopes and the (known) real lengths of the adjacent sides of the planar board, it is possible to calculate their meeting points (i.e., the vertices of the polygonal board).

In this paper, we propose a new calibration method between a camera and a 3D LIDAR using a polygonal board such as a triangle or diamond plane. By estimating the 3D locations of vertices from the scanned laser data and their corresponding corners in the 2D image, our approach for the calibration is to find point-to-point correspondences between the 2D image and the 3D point clouds. The corresponding pairs are used to solve the equations to obtain the calibration matrix.

This paper is composed of the following sections: in Section 2, we survey previous works related to camera and range sensor calibration. The mathematical formulation of the calibration between 2D and 3D sensors is presented in Section 3. In Section 4, we address the proposed calibration method. Experiments conducted on real data are explained in Section 5 and the conclusions follow in Section 6.

2. Related Works

Calibration between sensors can be done by finding geometric relationships from co-observable features in the data captured by both sensors. For a color camera and a range sensor, feature points in 2D images can be readily detectable, but it is hard to identify the corresponding 3D points from the range data. Therefore, instead of pinpointing individual 3D feature points, the projected 3D points on the planar board (or on the line) were used to formulate constraints to solve the equations for a transformation matrix. For example, Zhang and Pless [1] proposed the use of a calibration board with a checkerboard pattern on it. Here, the corners of the checker pattern are detected in the images with various board positions for the intrinsic parameter estimation. Then, the estimated intrinsic parameters are used to set a constraint for the extrinsic parameters. Note that if we need the intrinsic parameters, then we take this two-step parameter estimation with the checkerboard. However, if the final goal is to get the projection matrix between the camera and the LIDAR, then it is not necessary to estimate the intrinsic and extrinsic parameters separately. Rather, the two measurements in the separate parameter estimation can cause an additional source of error [2,3].

A planar board plays an important role in the calibration. Wasielewski and Strauss [4] used a rig with a black and white planar board to calibrate a 2D laser scanner with respect to a camera. Willis et al. [5] also designed a rig which has many corners that can be used to find the corresponding data in the LIDAR. Naroditsky et al. [6] used a white planar board with a black line. In [7], a triangle plane board was used and its side lines were used to minimize the distance between the projected line of the plane and the intersected laser point on the line. Kwak et al. [8] also tried to minimize the projected errors of the laser points on the line created by v-shaped planes.

As 3D laser range sensors become popular, the calibration problem turned to a calibration between a 3D LIDAR and a camera [2,3,9–15]. Here, the calibration methods using a planar checkerboard were extended from 2D to 3D LIDAR. Andreasson et al. [9] used a calibration board which was framed with a reflective tape, enabling the use of the reflective (remission) data from the laser scanner to automatically estimate the 3D positions of the chess board corners. In [10–12], methods exploiting the detected edges or trihedrons from natural scenes were proposed instead of an extra calibration rig. Lines and corners from indoor or outdoor structured environment were used as reference features for the calibration. Aliakbarpour et al. [13] used an Inertial Measurement Unit (IMU) to provide extra information for robust calibration. Also, a simple laser pointer was used as a bright spot to find corresponding points. Pandey et al. [14] used three checkerboards which have different normal vectors, because three views are required to completely constrain the six degree of freedom (DOF) pose of one sensor with respect to the other. Geiger et al. [15] used multiple sheets of checkerboards. So, the camera and scanner were calibrated using a single image. All the previous methods mentioned above are for the rigidly mounted sensors with off-line calibration. Recently, an on-line calibration for the sensors of occasional movements was proposed [16], where the point-based feature correspondences were used for the calibration.

Many types of special rig for 3D range sensor besides the LIDAR were used to estimate extrinsic parameters between a camera and a range sensor [17–19]. For example, a time-of-flight (ToF) device or Microsoft Kinect™ has a limited field of view compared to the omnidirectional 3D LIDAR, but it can acquire high density 3D point clouds. Jung et al. [17] designed a planar board with round holes on it and Shahbazi et al. [18] used a multi-resolution white squares pattern on a black plane to calibrate between a camera and the ToF device with a low resolution.

In the previous studies, various types of calibration rigs or environmental structures were used to improve the calibration accuracy. However, the performance of those methods relies on the density and location of actual scanned points on the calibration board (or the environmental structure). This implies that the accuracy of the calibration may drop quickly for a low resolution 3D LIDAR with a relatively small number of sensors. In this work, we solve this problem by adopting the following novel approaches:

- (i)

We propose a polygonal planar board with adjacent sides as a calibration rig. Then, our calibration matrix can be obtained by simply solving linear equations given by a set of corresponding points between the 2D-3D vertices of the polygonal board.

- (ii)

The 3D vertices of the polygonal board are estimated, but not measured, from the scanned 3D points on the board. That is, once the geometric structure of the calibration board is known, we can calculate specific 3D points such as the vertices of the board without actually scanning those points. This property enables us to estimate the projection matrix directly using the corresponding pairs between 2D image and 3D points, which is especially useful for a low resolution 3D LIDAR with a relatively small number of sensors.

- (iii)

Using our approach, the combined projection matrix of the extrinsic and intrinsic matrices can be estimated without estimating them separately. Of course, our method can be used only for the extrinsic transformation matrix as usual.

3. Calibration Model for Camera and 3D LIDAR

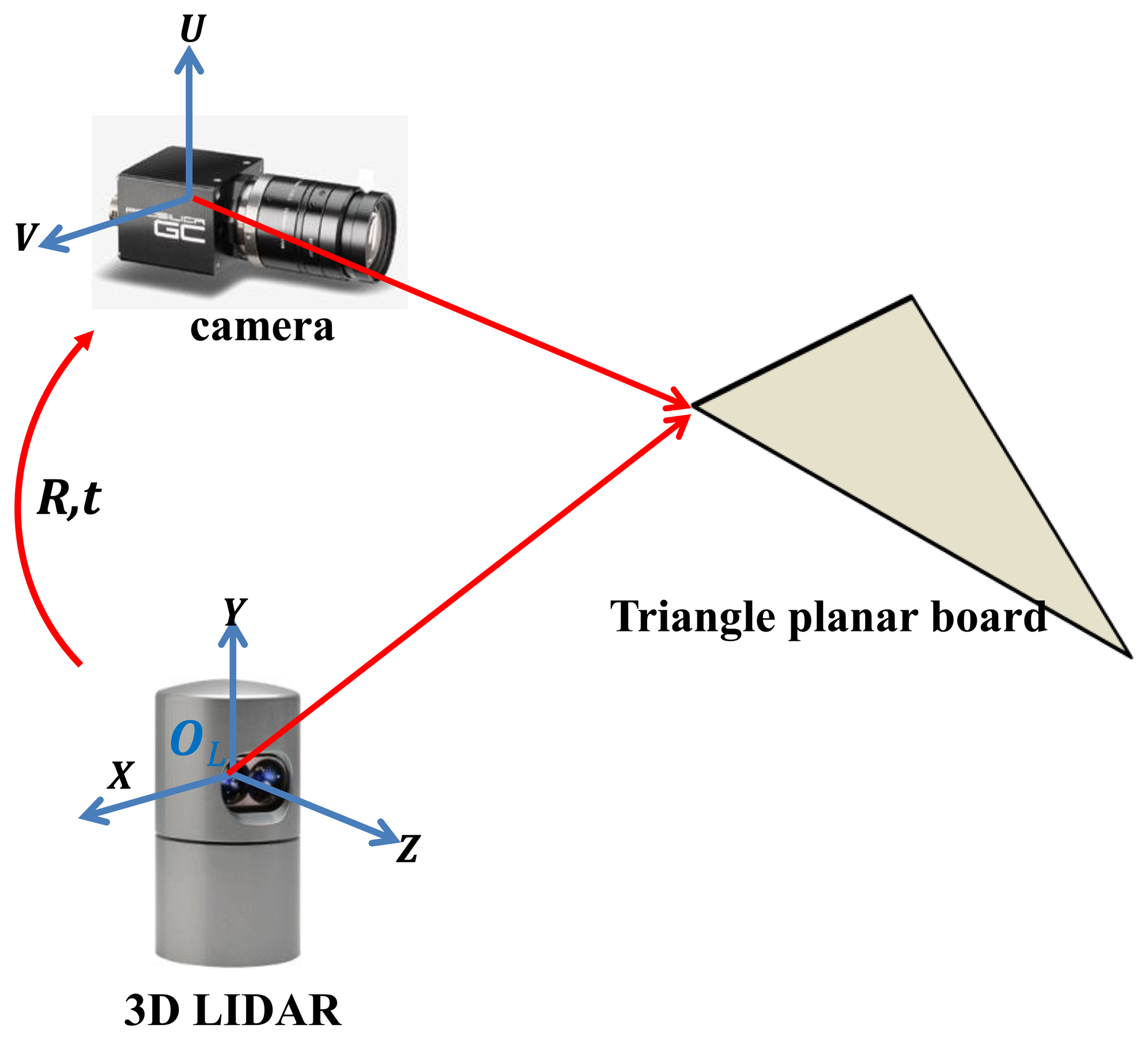

We set a triangle board in front of the rigidly mounted camera and 3D LIDAR (see Figure 3). A Velodyne HDL-32E LIDAR with 32 vertically mounted laser sensors is used as the 3D LIDAR. The image data captured by the camera are formed by two-dimensional coordinate system (U, V) and the range data of the 3D point clouds are represented by three-dimensional coordinate system (X,Y,Z). Our goal is to estimate the projective transformation matrix M of intrinsic and extrinsic parameters between the color coordinate (U, V) and the LIDAR coordinate (X,Y,Z). Then the transformation from a 3D point (x,y,z) to a 2D point (u,v) can be represented by:

For each corresponding pair we have two equations as in Equation (4). To determine the unknown coefficients mpq we need a sufficient number of corresponding pairs.

4. Vertex Correspondences in Polygonal Board

Our calibration method uses a polygonal planar board with adjacent sides (e.g., triangle and diamond boards) (see Figure 4). Li et al. [7] also used a triangular board for the calibration, where the reference for the calibration errors in [7] is the boundary line (edge) of the board and the calibration criterion is to minimize the distances from the scanned laser points on the boarder of the plane to the boundary line. In this paper, we use key points (e.g., the vertices) on the board instead of the line to make point-to-point correspondences between 2D image and 3D points, leading direct solution of the linear equations for the estimation of the projection matrix.

Noting that our vertex-based calibration method can be applied for any polygonal board with adjacent sides, we explain our method with a simple triangle planar board and the extension to other polygonal board such as a diamond plane should be straightforward. The overall steps of our method can be summarized as follows.

- (i)

Data acquisition: Place one or more triangle planar boards in front of the camera and 3D LIDAR. Take camera images and measure the 3D point clouds of the 3D LIDAR for various locations of the board. To reduce the measured errors in the 3D LIDAR and to easily detect vertices of the triangle planar board in the image, it is recommended to use a bright monochromatic color for the board. Also, the board color should be distinctive from the background and the size of the board has to be large enough to include multiple laser scanning lines of the 3D LIDAR on the board surface.

- (ii)

Matching 2D-3D point correspondences: Detect vertices of the triangle plane in images and identify their corresponding 3D points from the laser scans by estimating the meeting points of two adjacent sides of the board.

- (iii)

Estimate the calibration parameters between 3D LIDAR and camera. With the corresponding pairs solve the linear equations for the initial estimate and refine the solutions for the final estimates.

Of the above three steps we elaborate steps (ii) and (iii) in the following subsections.

4.1. Matching 2D-3D Point Correspondences

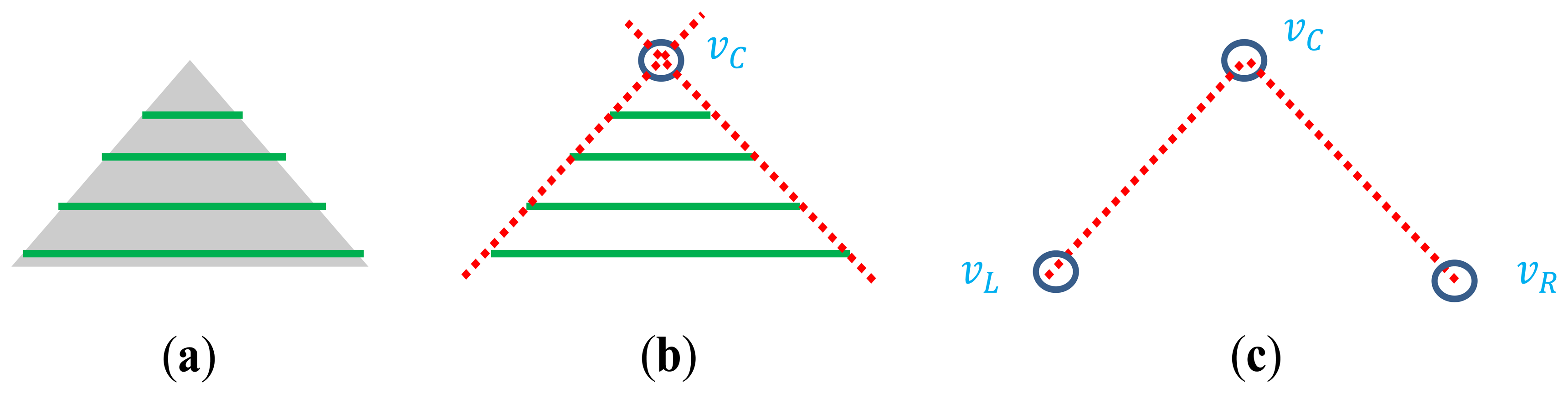

In order to solve the linear equations for the transformation matrix, we need to find point-to-point correspondences between the image and the 3D laser point at the vertices of the triangle planar board. For a 2D image, the vertices can be easily detected by a corner detection method such as Features from Accelerated Segment Test (FAST) [20]. Among all the detected corners, only three corners which represent vertices of the triangle plane are selected. The three vertices on the triangle board are located at the top center, viC(uC, vC), at the lower left, viL(uL, vL), and at the lower right, viR(uR, vR). The corresponding vertices in the laser 3D coordinate are vC(xpC, ypC, zpC), vL(xpL, ypL, zpL)and vR(xpR, ypR, zpR). The vertices in the image can be readily detected by the corner detection algorithm, whereas the corresponding vertices in the 3D LIDAR coordinate are hard to locate and the chance to lie the scan line exactly on the three vertices of the triangle board is quite low especially for a low resolution LIDAR such as the Velodyne HDL-32E. In this situation, our strategy to identify the 3D correspondences of the vertices is to estimate them by calculating the meeting points of the side lines on the planar board.

4.2. Estimation of 3D Points on the Board

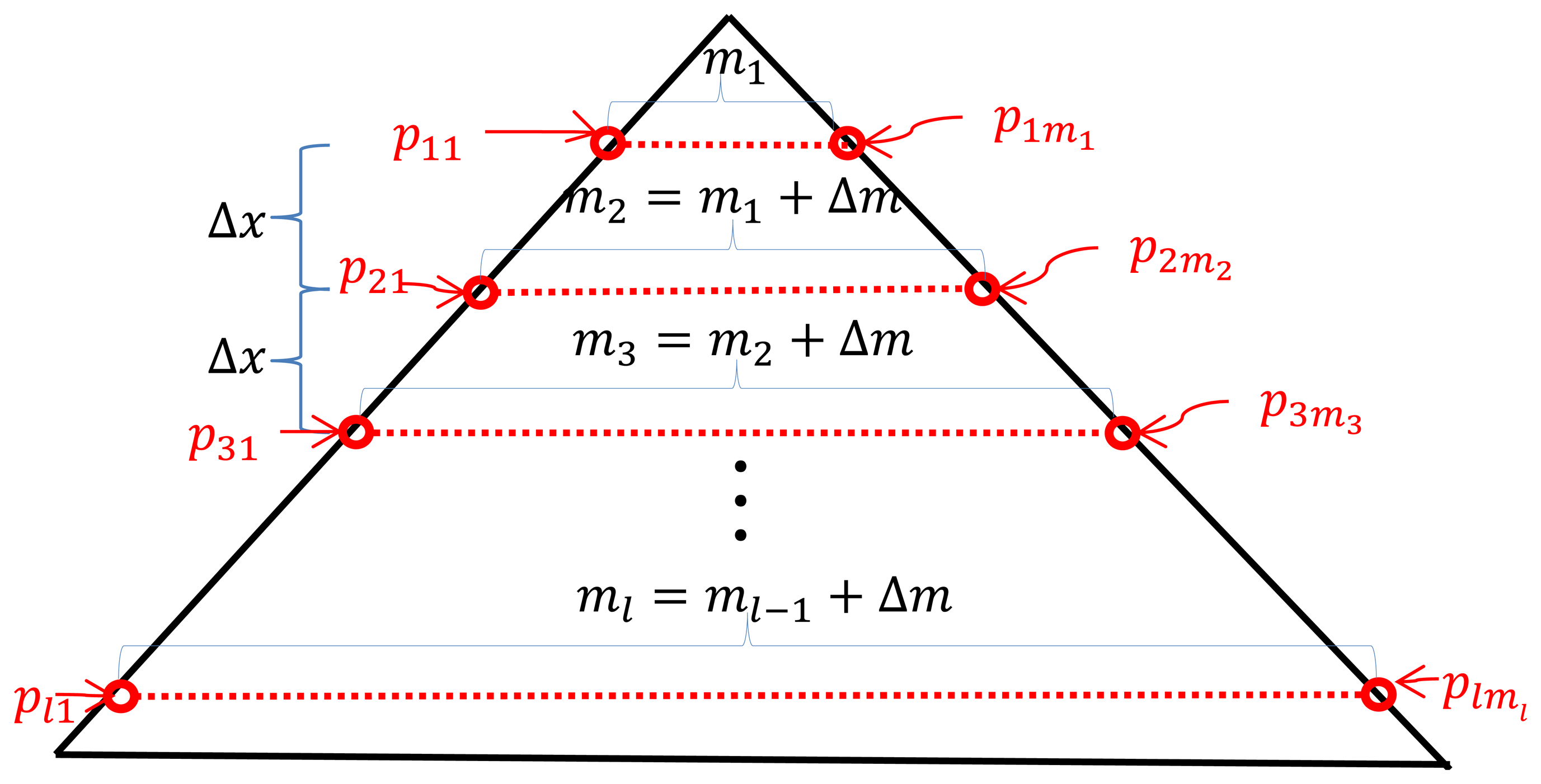

To locate the vertices on the triangle board in the 3D LIDAR coordinate, we first need to measure the 3D point clouds on the board plane. Suppose that there are l scan lines P = {P1, P2 , … , Pl} on the triangle board and each line Pn at the line n consists of mn points such that Pn = {Pn1, Pn2 , … , Pnmn}, where pnm represents the mth point in the nth scan line on the board scan (see Figure 5). Although the calibration board is a flat panel, the 3D points P generated from the 3D LIDAR usually have uneven measurements on the board, so we need to sort out the 3D points which are close to the board surface with smaller errors. To this end, we employ a 3D plane fitting method based on the Random Sample Consensus (RANSAC) [21] algorithm with the following three steps: (i) among all 3D points in P we take three sample points at random and calculate the plane equation formed by the points; (ii) according to the calculated plane equation, each 3D point in P is classified into either an inlier point or an outlier point by a distance threshold; (iii) repeat the steps (i) and (ii) by selecting another three points randomly in P until we find the best fitted plane A according to the largest inlier line density. Here, the inlier line density is the density of the 3D points included in the inlier for each line scan on the triangle board. Note that the inlier points selected by the RANSAC algorithm are used to estimate the adjacent sides of the triangle board, which requires the inlier 3D points to be spread all over the scan line. Therefore, we define the inlier line density as the criterion of the RANSAC algorithm instead of the total number of inliers, so we first define the inlier ratio, which is the ratio between the number of detected inliers and the total number of data as:

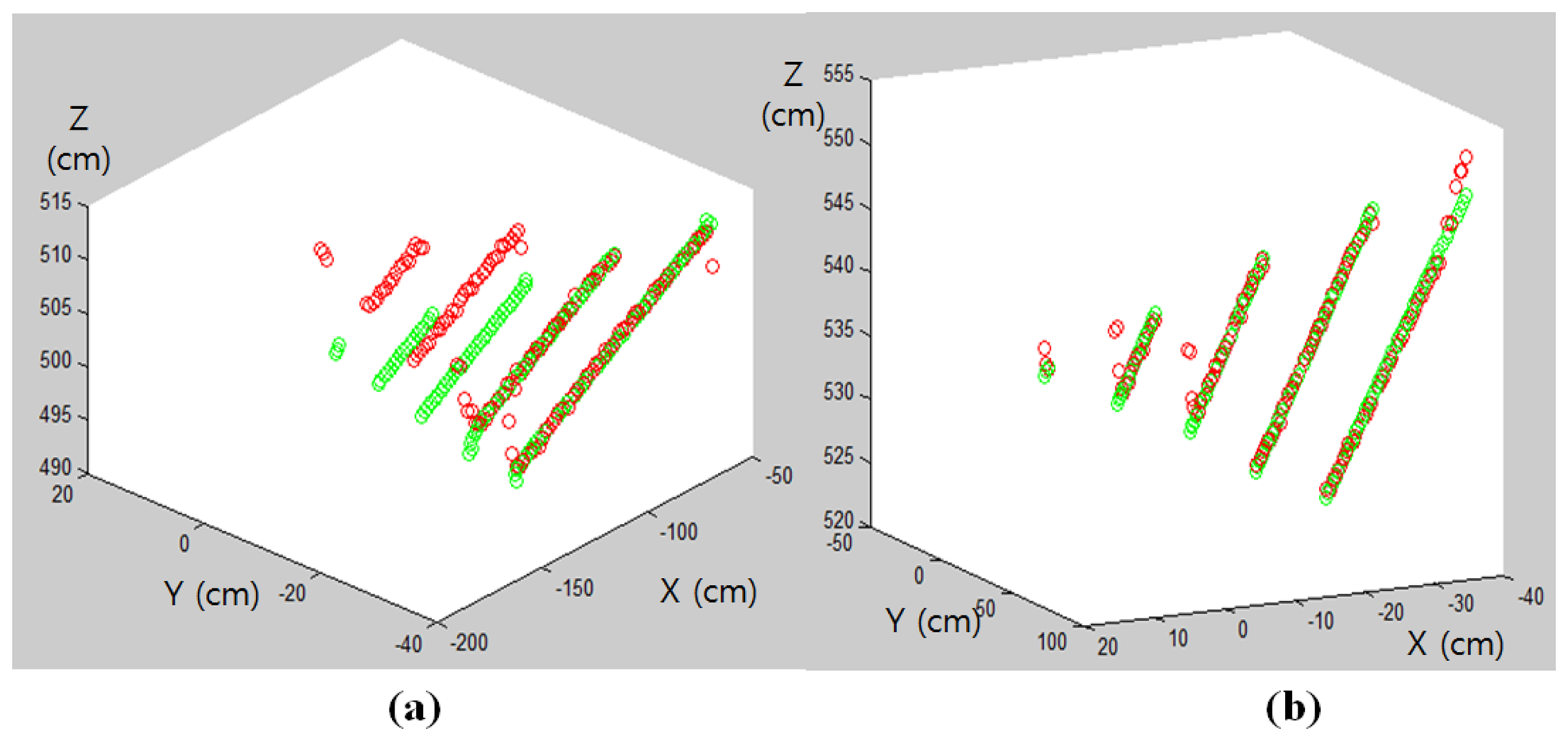

By using the inlier line ratio in Equation (7), all scanning lines contribute equally regardless of their lengths and we can avoid the bias problem of the inlier ratio. For example, using the inlier line ratio we can obtain more accurate plane estimation as shown in Figure 6b.

Once we estimate the board plane using the inlier 3D points of the RANSAC algorithm, we can project all the scanned 3D points P onto the estimated plane to have the projected 3D points , where (see Figure 7).

4.3. Estimation of Vertices in Triangle Board

To estimate the three vertices of the triangle planar board in the LIDAR coordinate we use the projected 3D points P′ on the estimated board plane A. To this end, we estimate the slopes of the side lines in the triangle planar board. Then, we can determine the 3D positions of the vertices by calculating the meeting points of two side lines.

Let us denote the three sides of the triangle board as SL for the left side, SR for the right side, and SB for the bottom side (see Figure 8). Also, we denote the segments of each side as , , and in the 3D LIDAR coordinate. The straight lines which include , , and are expressed as , , and and the vectors representing the slopes of , , and are , , and , respectively.

To calculate and , a 3D line fitting method based on the RANSAC algorithm is used. That is, the estimation of the side line is based on the projected 3D points near the border of the triangle plane, namely { , , …, } for the left line and { , , …, } for the right line (see Figure 8). Here, to improve the accuracy of the line estimation, we can use the virtual points between the two consecutive points, where one is off the board and the other is on the board, e.g., pn0 and pn1 in Figure 9. Specifically, the virtual point is between pn0 and pn1. Also, is between pnmn and pnm1+1. The locations of the virtual points are determined by the average distance between the scanned points for each scan line. So, by calculating the average Euclidean distance for the scan line n, we can locate the virtual 3D points on the left and the right sides as follows:

Now, locating the boundary points and , we can estimate and , respectively, as follows:

The 3D coordinate of the center vertex on the triangle vC can be detected by finding the intersection of and . Since and are generated from the projected 3D points P′ on the plane A, there always exists an intersection of the two lines. The intersection of and is the top vertex vC on the triangle plane. Once we identify the 3D coordinate of the top vertex vC, we can calculate the 3D coordinates of the other two vertices vL and vR by using the known lengths of the side lines and as follows (see Figure 10):

4.4. Suitability Test for the Detected Vertices

The suitability of the detected vertices can be tested by comparing the known real length of the bottom line of the triangle and its estimated length from the detected vertices vL and vR. That is, the following normalized error εB between and is used to test the suitability of the vertex estimation:

If εB in Equation (11) is less than a threshold TB, then we declare that the estimated vertices vC, vL, and vR are accurate enough and accept them as the coordinates of the vertices. Otherwise, we go back to the first step of the plane estimation.

4.5. Estimation of Calibration Matrix

The vertices of the triangle board captured by the camera as a 2D image can be readily detected by a corner detection method such as FAST [20]. Then, we have n pairs of vertices of the polygonal boards between the 3D points ℙ3 = {(x1, y1, z1),( x2, y2, z2), … , ( xn, yn, zn)} and their corresponding 2D points ℙ2 = {(u1,v1), (u2,v2), … , (un, vn)}, where (xi, yi, zi) and (ui, vi) are the 3D and 2D coordinates, respectively, for a vertex on the polygonal planar board. Given these n pairs of correspondences, we have 2n linear equations by substituting each correspondence to Equation (4). Then, by using the singular value decomposition (SVD) method, we can solve the linear equations. However, due to some measurement errors of the correspondence pairs, the solution of the projection matrix does not yield an exact transformation matrix. Therefore, we need a refinement process such that, starting from the solution of Equation (4), we iteratively update the solution by using a nonlinear least squares method. Specifically, Levenberg-Marquardt algorithm [22] can be applied to update the solution of Equation (4) for the final solution.

4.6. Extension to a Diamond-Shape Planar Board

Note that, as we have more scan lines on the board, we can estimate the plane more accurately. Also, a polygonal structure with more intersections between edges definitely improves the accuracy of the solution for the camera calibration. For example, a diamond board with four vertices as in Figure 11 should be better than a triangle board. The vertex detection method for the triangle board can be directly applied to the diamond board. That is, in the diamond board we can estimate each vertex by computing the intersection of the adjacent side lines. Then, the suitability test for the detected vertices can be done by accumulating the distance errors between the known real length of the side line and its estimated length from the detected vertices. Specifically, the normalized error in Equation (11) is accumulated for each line in the diamond and tested by a threshold TB.

5. Experimental Results

Experiments with the diamond planar board are conducted to evaluate the performance of our method. The lengths of four sides of the diamond board used in our experiment are known and equal to 72 cm. For the sensors we used a color camera with resolution of 659 × 493 and a Velodyne HDL-32E LIDAR (see Figures 3). The Velodyne HDL-32E LIDAR has 360° horizontal and 41.3° (+10.67 to −30.67) vertical field of view with 32 scan lines, so its vertical angular resolution is 1.33 degree. With these sensors we took 2D images and 3D LIDAR data with 12 different positions of the diamond board as shown in Figure 12.

Our correspondence-based estimation of M in Equation (1) can be applied to 2D images with or without compensating lens distortion. In our experiments, we used the 2D image data without compensating the lens distortion. To find the correspondences of the vertices between the 2D image and the 3D laser data, we first detect 4 corners on the diamond board in the image. As shown in Figure 13, these corners are selected from the detected key points of the FAST algorithm. Once all key points including the four vertices are detected by the FAST algorithm, the exact locations of the vertices are determined by clicking the mouse around the detected vertices manually. Therefore, the role of the FAST algorithm is to locate the exact 2D coordinates of the vertices which can be easily selected by mouse clicking near the point. The corresponding corners in the 3D coordinate of the LIDAR are estimated from the side lines of the estimated plane with a threshold TB = 0.01 in Equation (11) (see Figure 14 for the estimated corners on the diamond board).

Now, we have four corresponding corners between the 2D image and 3D data and are ready to solve the equations for the projection matrix. Note that we need more than 12 correspondence pairs for estimating 12 calibration parameters and we have to take more than three different positions of the diamond board. Then, the calibration parameters are determined by solving the linear equations and the refinement process.

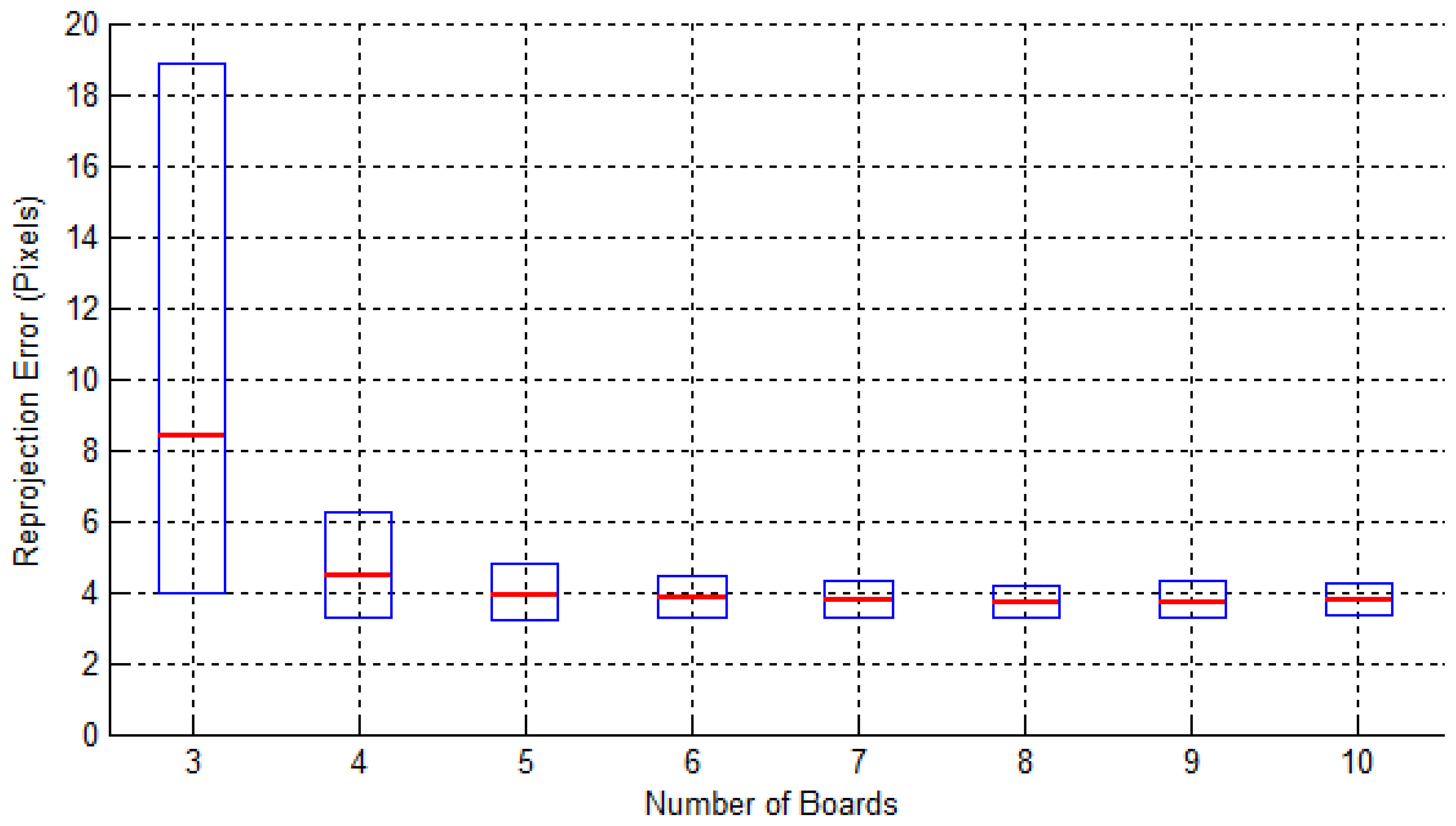

To evaluate the accuracy of the proposed method for different positions of the diamond board, we executed our calibration method for various positions of the diamond board and calculated the calibration pixel errors. Among all 12 positions in Figure 12, we select three positions for the calibration. Then, we have a total of 12C3 = 220 possible combinations for the experiments. For each experiment we have 3 × 4 = 12 corresponding vertex pairs for the solution of the matrix equation. Once we have the final estimation of the calibration matrix, we can compute the reprojection errors for all 48 vertices in all 12 positions. The reprojection errors are calculated based on the distances in pixels between the vertex in 2D and its projected 3D vertex by the estimated matrix. Then, we calculate the average root mean squares for all 48 reprojection errors. The results are shown as box-plots in Figure 15. As one can see from the results, the reprojection errors decrease as the number of boards used increases and they sharply drop after three to four boards. The mean values of the reprojection errors converged to about 4 pixels after five board sets.

After the calibration of the camera and the LIDAR (see Figure 16a), we superimpose the 3D laser data on the 2D image according to the estimated projection matrix. The result is shown in Figure 16, where on the 2D image of Figure 16b the 3D laser data of Figure 16c in the red-dot box of the camera's field of view are superimposed. As one can see in Figure 16d, the superimposed 3D data match the actual depths of the 2D image quite well.

We conducted comparative experiments with the checkerboard method in [15]. The estimated parameters using [15] are used to reproject the 3D scan data on the 2D checker image as in Figure 17a. Also, we applied our method to estimate the projection matrix. Then, as in Figure 17b, the projection matrix is used to reproject the 3D scan data of the checkerboard onto the 2D checker image to facilitate the visual comparisons (i.e., the 2D images and 3D scan data from diamond calibration boards are used only for the parameter estimation not for the reprojection). Overall, from Figure 17, we can notice that our method represents the depths on the boundaries of the objects more accurately (e.g., see the results at the third row (bottom)).

6. Conclusions

In this paper, we have proposed a new approach for the calibration of a camera and a 3D LIDAR based on 2D-3D key point correspondences. The corresponding 3D points are the vertices of a planar board with adjacent sides and they are estimated from the projected 3D laser points on the planar board. Since our approach is based on 2D-3D point correspondences, the projection matrix can be estimated without separating the intrinsic and extrinsic parameters. Also, our monochromatic calibration board provides more reliable measurements of the 3D points on the board than the checkerboard. Experimental results confirm that our 2D-3D correspondence based calibration method yields accurate calibration, even for a low resolution 3D LIDAR.

Acknowledgments

This work was supported by the Agency for Defense Development, Korea, and by the MSIP (Ministry of Science, ICT and Future Planning), Korea, under the ITRC (Information Technology Research Center)) support program (NIPA-2014-H0301-14-4007) supervised by the NIPA (National IT Industry Promotion Agency).

Author Contributions

C.S. Won led the work. Y. Park and S. Yun contributed to the algorithm and the experiments. K. Cho, K. Um, and S. Sim contributed to the problem formulation and algorithm verification.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, Q.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2004), Sendai, Japan, 28 September–2 October 2004; pp. 2301–2306.

- Yang, H.; Liu, X.; Patras, I. A simple and effective extrinsic calibration method of a camera and a single line scanning lidar. Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Tsukuba, Japan, 11–15 November 2012; pp. 1439–1442.

- Zhou, L.; Deng, Z. A new algorithm for computing the projection matrix between a LIDAR and a camera based on line correspondences. Proceedings of the IEEE International Conference on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), St, Petersburg, Russia, 3–5 October 2012; pp. 436–441.

- Wasielewski, S.; Strauss, O. Calibration of a multi-sensor system laser rangefinder/camera. Proceedings of the IEEE Intelligent Vehicles' 95 Symposium, Detroit, USA, 25–26 September 1995; pp. 472–477.

- Willis, A.R.; Zapata, M.J.; Conrad, J.M. A linear method for calibrating LIDAR-and-camera systems. Proceedings of the IEEE International Symposium on Modeling, Analysis & Simulation of Computer and Telecommunication Systems, 2009, London, UK, 21–23 September 2009; pp. 1–3.

- Naroditsky, O.; Patterson, A.; Danilidis, K. Automatic alignment of a camera with a line scan LIDAR system. Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3429–3434.

- Li, G.; Liu, Y.; Dong, L.; Cai, X. An algorithm for extrinsic parameters calibration of a camera and a laser range finder using line features. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 3854–3859.

- Kwak, K.; Huber, D.F.; Badino, H.; Kanade, T. Extrinsic calibration of a single line scanning lidar and a camera. In Intelligent Robots and Systems (IROS). Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3283–3289.

- Andreasson, H.; Lilienthal, A. 6D scan registration using depth-interpolated local image features. Robot. Autonom. Syst. 2010, 58, 157–165. [Google Scholar]

- Kern, F. Supplementing lasers canner geometric data with photogrammetric images for modeling. ISPRS 2002, 34, 454–461. [Google Scholar]

- Scarmuzza, D.; Harati, A.; Siegwart, R. Extrinsic self calibration of a camera and a 3d laser range finder from natural scenes. Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 4164–4169.

- Gong, X.; Lin, Y.; Liu, J. 3D LIDAR-camera extrinsic calibration using an arbitrary trihedron. Sensors 2013, 13, 1902–1918. [Google Scholar]

- Aliakbarpour, H.; Núñez, P.; Prado, J.; Khoshhal, K. An efficient algorithm for extrinsic calibration between a 3d laser range finder and a stereo camera for surveillance. Proceedings of the International Conference on Advanced Robotics, Munich, Germany, 22–26 June 2009; pp. 1–6.

- Pandey, G.; McBride, J.; Savarese, S.; Eustice, R. Extrinsic calibration of a 3d laser scanner and an omnidirectional camera. Proceedings of the 7th IFAC Symposium on Intelligent Autonomous Vehicles, Munich, Germany, 11–14 July 2010.

- Geiger, A.; Moosmann, F.; car, O.; Schuster, B. Automatic camera and range sensor calibration using a single shot. Proceedings of the 2012 IEEE International Conference on Robotics and Automation (ICRA), St. Paul, MN, USA, 14–18 May 2012; pp. 3936–3943.

- Levinson, J.; Thrun, S. Automatic online calibration of cameras and lasers. Proceedings of the Robotics: Science and Systems, Berlin, Germany, 24–28 June 2013.

- Jung, J.; Jeong, Y.; Park, J.; Ha, H. A novel 2.5 D pattern for extrinsic calibration of tof and camera fusion system. Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 3290–3296.

- Shahbazi, M.; Homayouni, S.; Saadatseresht, M.; Sattari, M. Range camera self-calibration based on integrated bundle adjustment via joint setup with a 2D digital camera. Sensors 2011, 11, 8721–8740. [Google Scholar]

- Herrera, D.; Kannala, J.; Heikkilä, J. Accurate and Practical Calibration of a Depth and Color Camera Pair. In Computer Analysis of Images and Patterns; Springer Berlin: Heidelberg, Germany, 2011; pp. 437–445. [Google Scholar]

- Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. Computer Vision–ECCV; Springer Berlin: Heidelberg, Germany, 2006; pp. 430–443. [Google Scholar]

- Fischler, A.; Bolles, C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 22, 381–395. [Google Scholar]

- Moré, J. The Levenberg-Marquardt Algorithm: Implementation and Theory. In Numerical Analysis; Springer: Berlin/Heidelberg, Germany, 1978; pp. 105–116. [Google Scholar]

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license ( http://creativecommons.org/licenses/by/3.0/).

Share and Cite

Park, Y.; Yun, S.; Won, C.S.; Cho, K.; Um, K.; Sim, S. Calibration between Color Camera and 3D LIDAR Instruments with a Polygonal Planar Board. Sensors 2014, 14, 5333-5353. https://doi.org/10.3390/s140305333

Park Y, Yun S, Won CS, Cho K, Um K, Sim S. Calibration between Color Camera and 3D LIDAR Instruments with a Polygonal Planar Board. Sensors. 2014; 14(3):5333-5353. https://doi.org/10.3390/s140305333

Chicago/Turabian StylePark, Yoonsu, Seokmin Yun, Chee Sun Won, Kyungeun Cho, Kyhyun Um, and Sungdae Sim. 2014. "Calibration between Color Camera and 3D LIDAR Instruments with a Polygonal Planar Board" Sensors 14, no. 3: 5333-5353. https://doi.org/10.3390/s140305333