1. Introduction

From an early age, manipulating objects has shown a number of advantages for the cognitive development of children. One of the first formalizations of this idea can be found in Piaget [1]. He proposed four stages of cognitive development, in which the stage of “concrete operations” is a precondition for reaching the stage of “formal operations”. In the “concrete operations” stage, logical reasoning is done through objects that are real in the sense that you can see them or manipulate them. This is the concept of constructivism. Papert [2] elaborates this concept through the use of programming environments such as Logo, in order to allow children to build their own knowledge through physical representations of abstract concepts.

Fitzmaurice [3] proposed one of the first approaches to tangible interfaces, which he called graspable user interfaces. These allowed direct control of electronic or virtual objects through physical handles. Some years later Ishii and Ullmer [4] defined tangible user interfaces (TUIs) as systems that mix physical with digital information, employing physical artefacts both as representations and as control elements for computational media. Tangible user interfaces are physical objects or artefacts that are electronically augmented in order to trigger digital events [5]. Shaer and Hornecker [6] indicate that tangible interfaces allow users to employ physical objects to represent and manipulate digital data.

There are some examples in the literature of interfaces based on tangible kits that help students in the learning process. Most of these tangible kits are made up of: tangible items or artefacts (wooden blocks, LEGO constructions, robots, animated toys, etc.); specific software (that simulates some situation of educational interest); and some hardware elements that connect the tangible elements to the software (camera, sensors, actuators, etc.). Ullmer et al. [7] propose a classification of tangible interfaces into three types: interactive tables where the tangible objects are manipulated; construction kits based on modules that can be assembled with each other; and token and constraint systems, which combine some characteristics of the first two.

Zuckerman [8] proposed a classification of tangible (or manipulative) interfaces into two types: Froebel-inspired manipulatives (FiMs) and Montessori-inspired manipulatives (MiMs). The FiM tangible interfaces use materials that simulate real-world structures, such as wooden blocks that can be used to build a castle, or pieces of plastic that can be used to build an airplane. We can find many educational projects based on FiMs: ActiveCube [9], Topobo [10], Block Jam [11] and roBlocks [12].

On the other hand, Montessori-inspired manipulatives (MiMs) model more abstract structures where each block represents, for example, a mathematical operation or any other abstract concept. SystemBlocks and FlowBlocks [8] are two projects based on MiMs that aim to teach mathematical concepts such as probability, dynamical systems and programming concepts (loops, variables and branching).

Tangible interfaces in education have been applied to various areas, such as literature or storytelling (KidPad [13]), music (Reactable [14] and Block Jam [11]), science and, especially, mathematics and programming (see below). At the same time, most experiments with tangible interfaces involve transversal skills such as reading comprehension, as well as oral and written expression.

Many projects have been developed in the field of programming using tangible interfaces. The reason for this is the difficulty that children have in learning the abstract syntax of programming languages. For example, Quetzal [15] is a tangible programming language for controlling Lego Mindstorms robots. Along the same lines, Tern [15] is another tangible programming language in which you can connect wooden blocks (like puzzle pieces) to form structures that control virtual robots represented on the computer. Similar to Tern, T-Maze [16] allows children to build their own program and control a virtual character in order to get it out of a maze. Finally, Sheets [17] allows you to create programs using paper cards that support step-by-step execution of the program, with the aim of understanding how it works.

Tangible interfaces are also used for construction and interaction in 3D environments in a simpler and more intuitive way. For example, projects like ActiveCube [9] or the project proposed by Anderson et al. [18] make use of physical building blocks in order to build virtual structures.

There are some studies in the literature assessing the performance of learning methodologies that use tangible objects. Okun [19] presented a comparative study on the use of Tangram to teach Mathematics, using both wooden blocks and virtual blocks on a computer. It was observed that the use of tangible objects showed more benefits, especially at early ages. Klahr et al. [20] performed an experiment in which children had to build a car using a kit of pieces, trying to optimize its speed. For this experiment there were two student groups: one built a physical car and the other built a virtual car. They concluded that children performed better when using the real, tangible car. Finally, Manches [21] performed an experiment in which children learned to break down numbers using different partitioning strategies. For this experiment there were three student groups: one using a virtual environment, another using tangible objects and the last one using paper and pencil. Children got more correct solutions when using the physical option than with the other two options.

In the projects discussed above, the tangible interface interacted with ad-hoc software developed specifically for each experiment. This software was not directly reusable in other projects with other tangible interfaces. Our proposal is to use a virtual world platform instead. There are many advantages to this approach: adding new tangible interfaces is easier; the final applications have multiuser capabilities without any extra effort; the application may use additional tools such as chats, asynchronous messaging, etc.; 3D models made with standard tools such as SketchUp or Blender are easily included; and non-technical users may edit or even build the whole virtual world. The objective is to allow teachers with no knowledge of programming to build their own mixed reality educational applications.

In references [22,23], virtual worlds are defined as computer-generated environments that use two- or three-dimensional computer-generated images to represent elements of the physical world or imaginary scenarios. In these worlds, users are able to perform a wide range of interactions with the synthetic elements of the virtual world and with other users [24].

The development of virtual world technologies has opened new opportunities in the educational field. According to the U.S. National Science Foundation, virtual worlds have the potential to play a significant role in education [25]. Virtual worlds allow different types of educational activities such as role playing, problem-based learning, collaborative simulations (learn by simulation), collaborative construction (building activities), and language learning, among others. Collaborative simulations and collaborative construction are the most common activities in virtual worlds [26].

Mixed reality combines physical world elements (tangible interfaces) with virtual worlds [

27]. According to Milgram’s taxonomy [

28] there are two possible scenarios for mixed reality: real experiments with virtual overlays, which are called augmented reality (AR); and virtual experiments that are complemented with real world components, which are called augmented virtuality (AV). The research described in this paper is in the scope of AV environments, thus the physical component for interaction is a key element: we will call it the tangible interface [

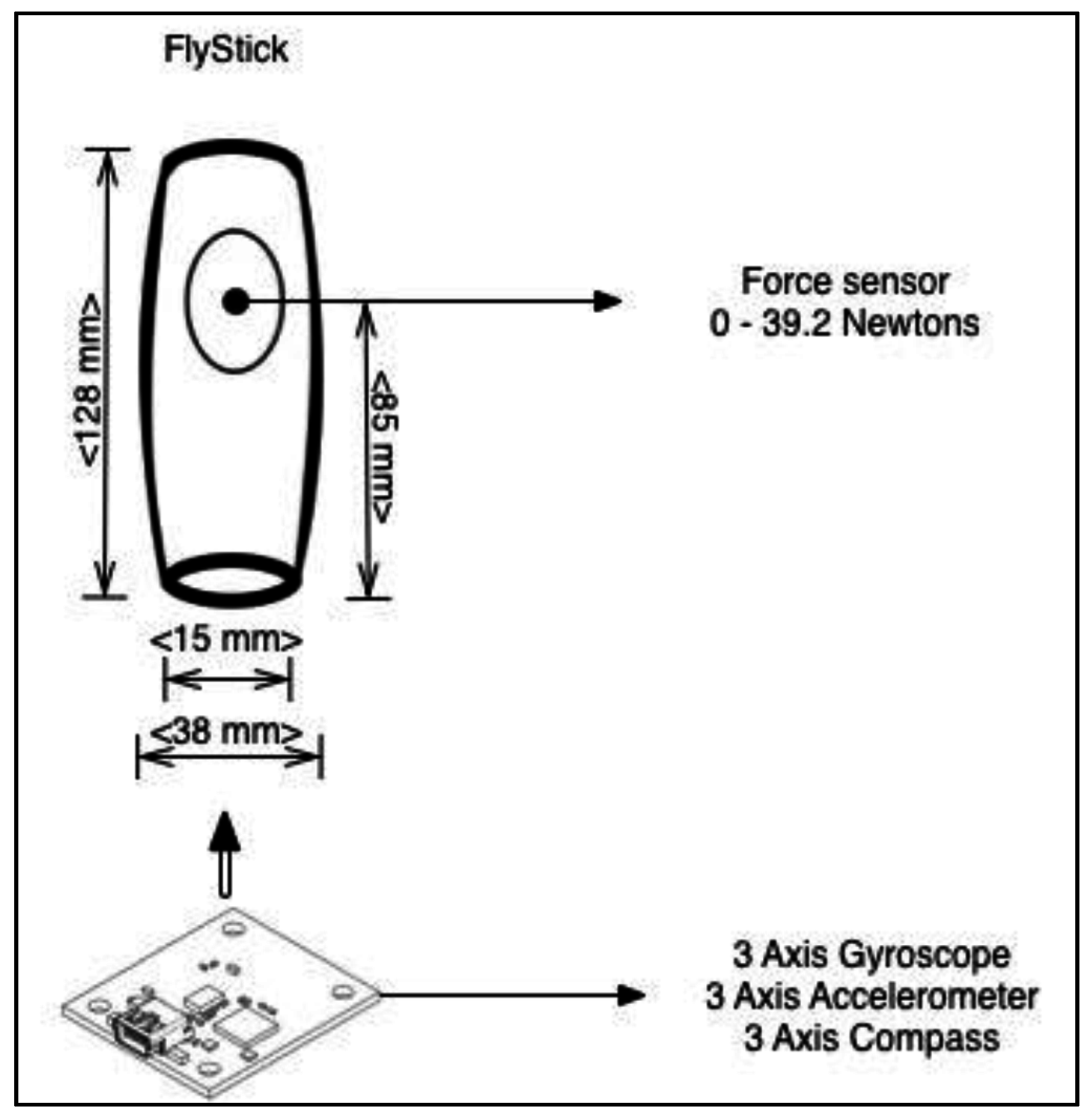

29]. In this paper we describe two prototypes of tangible interfaces that have been developed and evaluated in the area of mixed reality in education, using a middleware developed by Mateu [

30].

Research done by Starcic et al. [31] describes a system for teaching geometry using tangible interfaces, aimed at inclusive education. This study concludes that tangible user interfaces are an effective approach for teaching geometry, particularly for students with motor skill problems.

The research done by Ishii [4] explores the combination of tangible elements with virtual reality, which allows users to understand and manipulate digital information using everyday objects as user interfaces. The aim of tangible interfaces in virtual reality is to close the gap between virtuality and the physical environment. According to Takuya et al. [32], several research groups have studied tangible user interfaces for modelling 3D objects. This review confirmed that this approach allows users to interact using their natural construction skills, overcoming the limitations of traditional user interfaces.

Studies done by Thriona and Klahr [33] indicate that the use of physical objects in education helps the exploration and development of skills in learners. When these tools are combined with objects in virtual reality, an improvement in the students’ knowledge acquisition process is noticed.

Kaufmann et al. [34] proposed a virtual model builder that used augmented reality, called Construct3D, which was focused on teaching mathematics and geometry by means of geometric shapes (lines, planes, cubes, spheres, cylinders and cones). This tool provides basic editing with the support of virtual reality glasses and a pencil to perform actions such as selecting figures and operations. After some experiments that involved a group of students, the authors conclude that the students presented visual coordination problems with the system: an accuracy issue was noticed when performing the activities involving interactions in a virtual 3D environment. However, they were highly interested and motivated by the activity.

Wang and Dou [35] designed a tangible interactive tool called StoryCube, which helped children to interact with virtual reality objects through the use of a tangible user interface, replacing the mouse and keyboard. The tangible interface allowed children to operate and modify virtual objects while they narrated a story in a virtual world, allowing them to pick up elements of the story and its characters to decorate their own story. The tangible interface prototype was designed using RFID (Radio Frequency IDentification) tags and readers, accelerometers, buttons and a joystick. After the evaluation of the tangible interface it was observed that children preferred to play with buttons and to manipulate objects, compared to more traditional user interface alternatives. There are other examples of tangible interfaces applied to virtual reality, such as KidPad [36], Telling tales [37], the Tangible Interface for Storytelling TOK [38], StoryRooms [39], ToonTastic [40], PuzzleTale [41] and TellTable [42].

It is important to mention that tangible interfaces in virtual reality contribute to the development of computer-supported cooperative work (CSCW) [43]. An experiment by Gu et al. [44] shows that mixed reality interaction supports group collaboration when students are challenged to design a common task. A wide range of input devices for augmented reality has been developed for collaborative work, such as Towards Natural [45], Built-it [46] and DiamondTouch [47].

Another project using mixed reality in education is the environment proposed by Nikolakis et al. [48]. In this project, students learn geometry by using a haptic glove that allows the creation of geometrical objects. This project was assessed with high school students, concluding that the mixed reality environment provides a more efficient problem-solving approach for learning geometry.

In order to be able to manipulate 3D objects naturally, at least six degrees of freedom (6-DOF) are needed: three for translation along the X, Y and Z axes, and three for rotation around them. Zhai [49] shows the difficulties in making a standard 6-DOF device. In the first place, the manufacturing costs are higher. In the second place, there is only very limited knowledge about what properties a good 6-DOF device should have in order to make it both ergonomic and functional.
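As an illustration of what the six degrees of freedom amount to, the following minimal sketch represents a pose as three translations and three rotations and applies it to a point. The class name `Pose6DOF`, the field names and the rotation order (about X, then Y, then Z) are assumptions made for this example; they are not taken from any of the devices cited here.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DOF:
    """Hypothetical 6-DOF pose: three translations plus three rotations."""
    x: float = 0.0      # translation along X
    y: float = 0.0      # translation along Y
    z: float = 0.0      # translation along Z
    roll: float = 0.0   # rotation about X, in radians
    pitch: float = 0.0  # rotation about Y, in radians
    yaw: float = 0.0    # rotation about Z, in radians

    def apply(self, point):
        """Rotate the point about X, then Y, then Z, and finally translate it."""
        px, py, pz = point
        # Rotation about the X axis
        c, s = math.cos(self.roll), math.sin(self.roll)
        py, pz = py * c - pz * s, py * s + pz * c
        # Rotation about the Y axis
        c, s = math.cos(self.pitch), math.sin(self.pitch)
        px, pz = px * c + pz * s, -px * s + pz * c
        # Rotation about the Z axis
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        px, py = px * c - py * s, px * s + py * c
        # Translation
        return (px + self.x, py + self.y, pz + self.z)


# Example: turn a point a quarter turn about Z, then shift it one unit along X.
pose = Pose6DOF(x=1.0, yaw=math.pi / 2)
moved = pose.apply((1.0, 0.0, 0.0))
```

A device with fewer than six such parameters cannot express every position and orientation of a rigid object, which is why 6-DOF is treated as the minimum for natural manipulation.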

Mine [50] designed an immersion device aimed at allowing the user to perform tasks within a virtual reality environment through various interaction techniques, including a remote control (a handheld widget), which allows immersion in a virtual space, creating a perception of being in direct interaction with virtual objects. This device was designed for two-handed interaction: each hand interacted with a 6-DOF sensor and the user wore a stereoscopic viewer in order to perceive movements in 3D. However, the author reports problems regarding the use of the device, since the movement was not intuitive for users, possibly due to magnetic interference affecting the sensors. Additionally, the author noted the high hardware cost of the system.

Another similar study is the 3-Draw system developed by Sachs et al. [51], which was designed for using both hands: each hand controls a 6-DOF sensor device in order to interact with images on a conventional screen. Users were able to draw three-dimensional contours by combining both devices. Sachs reported that the user interface was natural and fast, and the simultaneous use of the two hands provided a kinaesthetic feedback that allowed users to feel as if they were holding the objects displayed on the screen.

JDCAD is an interactive 3D modelling system designed and built by Liang [52]. It uses a 6-DOF sensor combined with a helmet with an embedded screen. Liang reported promising results when compared to conventional systems, since JDCAD was easier to use, and time savings were observed when performing design tasks. A similar project was developed by Shaw and Green [53]: a design system with two 6-DOF tangible interfaces, which were used to draw free-form polygonal surfaces in a virtual reality environment. We may cite other works with similar characteristics, such as 3D animations [54], 3DM (three Dimensional Modeller) [55], the WIM (world in miniature) system [56] and TwoHanded Interface [57].

The tangible user interface Novint Falcon [58] is a haptic device with USB connection that was designed to replace the mouse. Users are able to control it by making movements in three dimensions: (i) up and down; (ii) forward and backward; and (iii) from right to left. As the user moves the bubble grip, sensors communicate with the computer. This bubble allows users to manipulate objects in a virtual 3D application, providing tactile feedback.

However, to our knowledge, all the existing projects in this area use ad-hoc 3D applications for the virtual reality part of the system. Therefore, changing the learning application (for example, adding a new activity) cannot be done by end users (teachers), but has to be programmed by the developers. In our proposal, mixed reality is implemented using a full-fledged virtual world, based on the OpenSim platform (an open-source alternative to Second Life). This platform provides editing capabilities to any user in a fairly easy way. Therefore, the use of a virtual world platform instead of an ad-hoc 3D application will allow teachers to extend the educational applications, or even to create new ones by themselves. The main objective of this study is to assess whether the use of virtual worlds and tangible interfaces is beneficial for student learning when compared to more traditional forms of learning. This study provides evidence that students using the aforementioned technologies exhibit increased concentration and interest in the activities. They also retain knowledge significantly better, as they outperformed control group students in a delayed post-test conducted two weeks after the study.

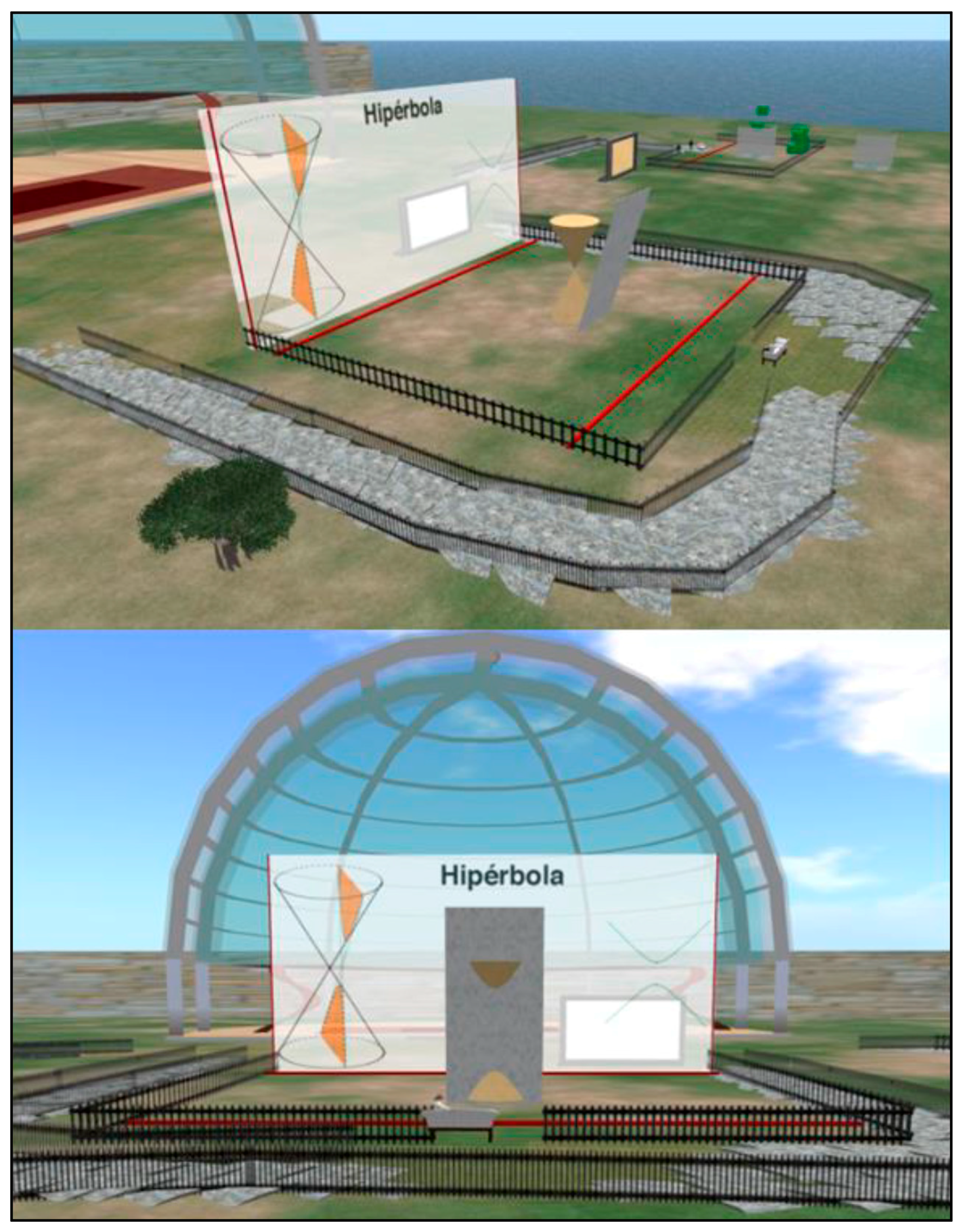

3. Implementing Educational Applications with the Tangible Interfaces: A Pilot Study

3.1. Research Design

The two tangible interfaces described above have been evaluated in “Florida Secundaria”, a high school in Valencia, Spain. The study was organized in four sessions of three hours. The first session was devoted to an introduction to virtual worlds. In this first session, students explored the virtual world, and learned how to create, move and rotate objects, how to interact with other avatars and how to customize the look of their avatar.

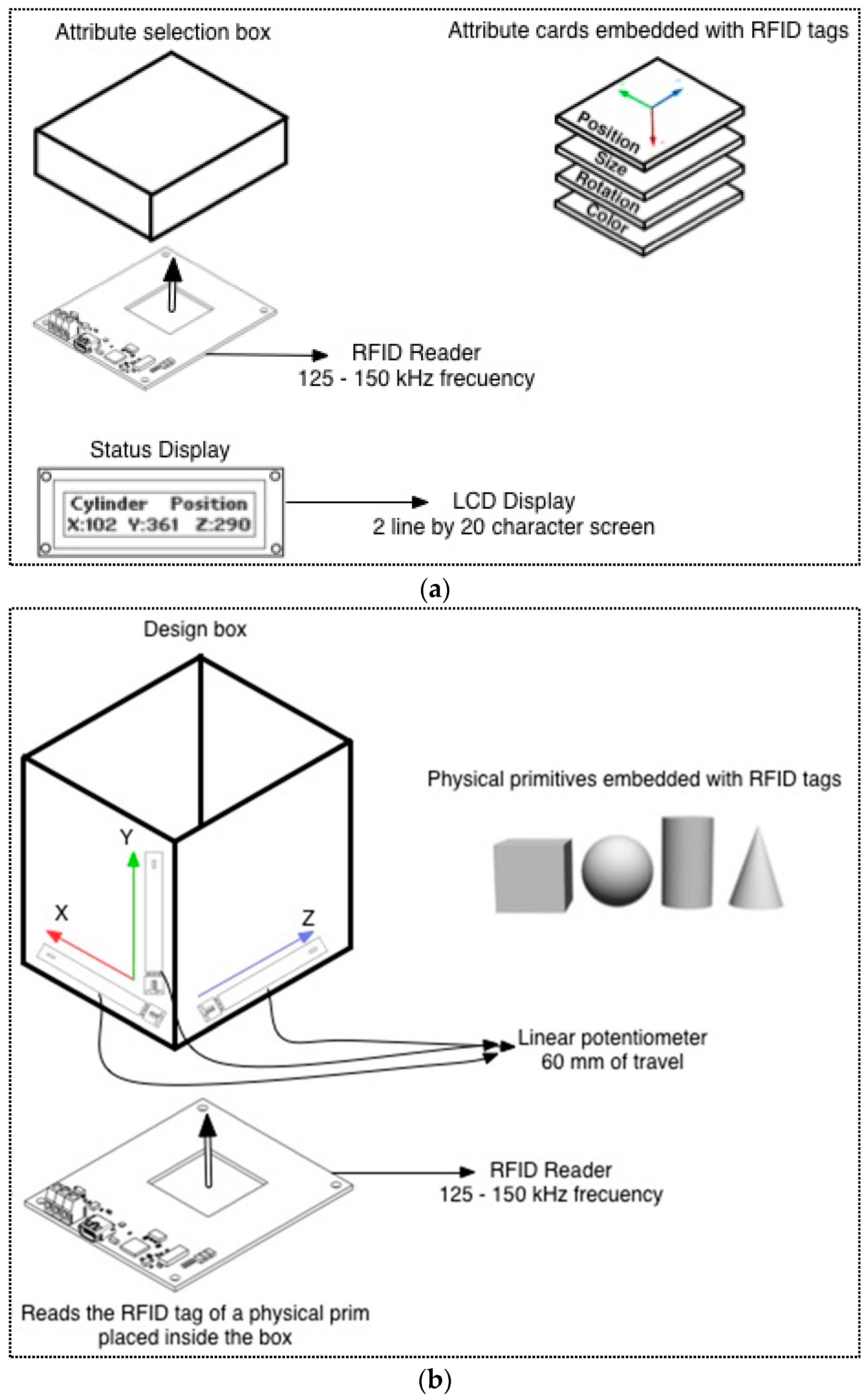

In the other three sessions the students performed several educational activities within the virtual world, where they used the tangible interfaces PrimBox and FlyStick.

The activities were aimed at learning geometry, with the main objective of developing spatial vision, taking advantage of the three-dimensional virtual world. In this study we set up an experimental group that carried out the activities using the mixed reality applications, and a control group that worked on the same material with a traditional methodology.

The traditional methodology consisted of explaining the geometrical concepts using the blackboard. Later, the students had to take some paper-and-pencil tests on the subject. In contrast to the traditional methodology, students using the tangible interfaces practised the geometrical concepts by themselves. The control groups worked in traditional classrooms, equipped with blackboards, where the students attended the explanations given by the teacher on the blackboard. The experimental group used a computer classroom, where the students interacted with tangible interfaces while exploring the virtual world.

In total 60 students participated in the study (30 students using mixed reality and 30 students using the traditional methodology).

For each group, the students were chosen randomly. After choosing the students we found that in the group using virtual worlds, 23% of the pupils had special educational needs, compared with 16% of pupils in the group using traditional methodologies. The special educational needs were mainly dyslexia, attention deficit hyperactivity disorder (ADHD) and learning difficulties requiring some curricular adaptations.

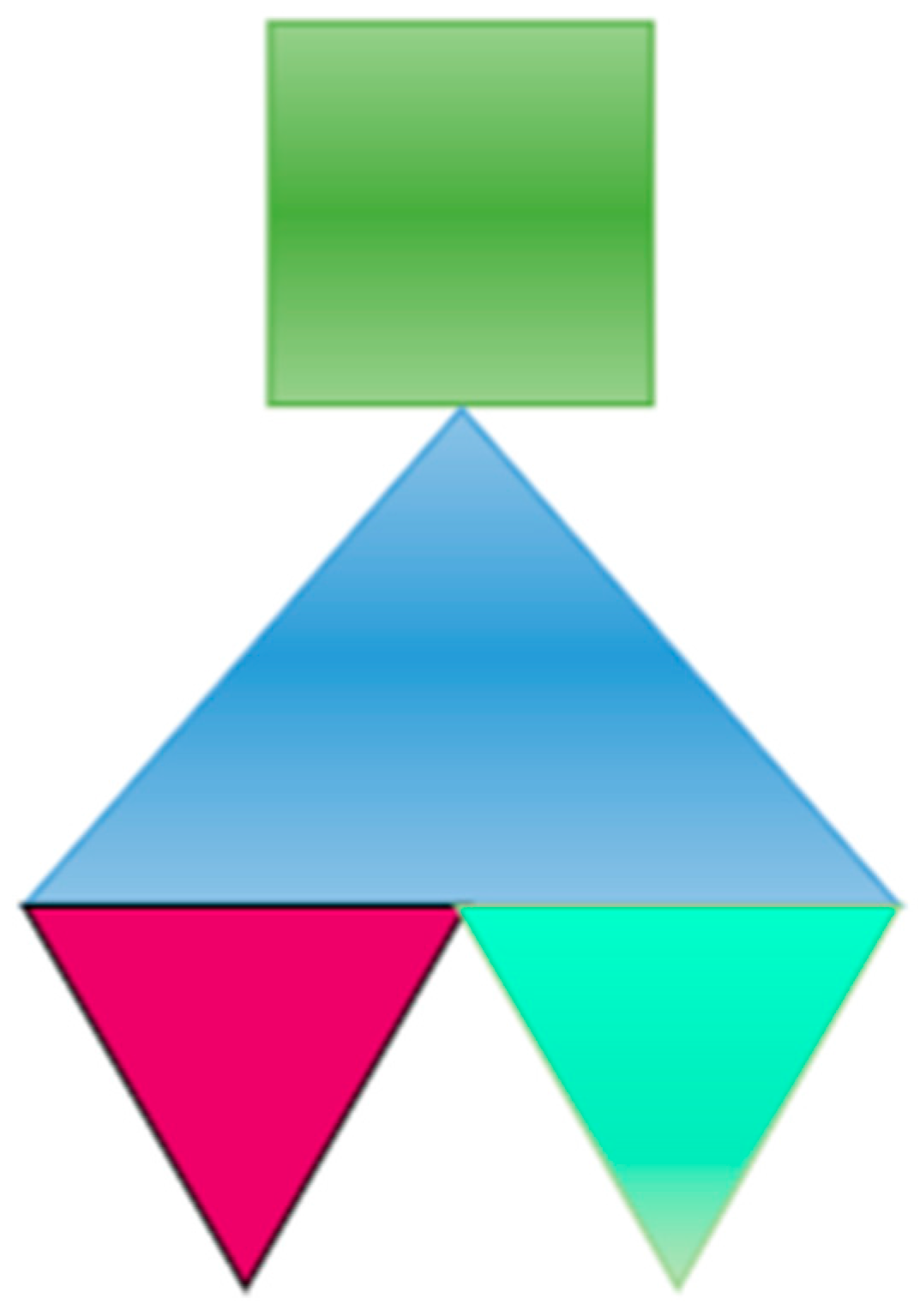

The sessions were conducted with students in the second and third years of secondary education (ESO). We prepared two activities (see Table 1). Activity 1 was performed in pairs. One student was given a sheet of paper showing a geometrical construction made with several (basic) geometrical figures. This student had to give appropriate instructions to her partner, who had to replicate the geometrical construction in the virtual world. The second student, who had not seen the sheet of paper, received all the necessary information from the first student using geometrical language (vertex, angles, points, half a point, position, rotation, etc.). The learning objective was to practice such technical language and the associated concepts.

Activity 2 consisted of learning how the different conic sections are generated from the intersection of a plane with a cone, by experimenting in the virtual world with a tangible interface.
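The geometric rule behind this activity can be sketched in a few lines. The example below classifies the curve obtained when a plane that does not pass through the apex cuts a double cone, by comparing the angle between the plane and the cone axis with the half-angle of the cone. The function name `classify_conic` and its parameters are illustrative assumptions and are not part of the FlyStick software.

```python
def classify_conic(cone_half_angle_deg, plane_angle_deg, tol=1e-9):
    """Classify the section cut from a double cone by a plane missing the apex.

    cone_half_angle_deg: angle between the cone axis and its surface (0 < a < 90).
    plane_angle_deg: angle between the cutting plane and the cone axis (0 <= b <= 90).
    """
    a, b = cone_half_angle_deg, plane_angle_deg
    if not 0 < a < 90:
        raise ValueError("cone half-angle must be strictly between 0 and 90 degrees")
    if not 0 <= b <= 90:
        raise ValueError("plane angle must be between 0 and 90 degrees")
    if abs(b - 90) <= tol:
        return "circle"      # plane perpendicular to the axis
    if abs(b - a) <= tol:
        return "parabola"    # plane parallel to one generating line of the cone
    if b > a:
        return "ellipse"     # plane cuts one nappe in a closed curve
    return "hyperbola"       # plane is steeper than the surface and cuts both nappes


# Tilting the plane step by step, as a student might do with a tangible controller:
for angle in (90, 60, 30, 10):
    print(angle, classify_conic(30, angle))
```

Sweeping the plane angle from 90 degrees down to 0 passes through all four cases in order, which is the progression the activity lets students observe interactively.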

These two activities were also performed by the control group, using a traditional methodology. In the case of the first activity, the second student had to draw the figure on paper based on the instructions received from the first student. In the case of the second activity the students attended a traditional (blackboard-based) class where the teacher explained and drew the different conic sections on the blackboard.

Regarding the second activity, students in the control group had a passive role, listening to the teacher’s explanations, while students in the experimental group, besides listening to the teacher’s explanations, could interact with the tangible interface FlyStick and check how the conic sections are generated in real time. In order to evaluate the study, we used:

An introductory questionnaire about virtual worlds.

A usability test about PrimBox and FlyStick.

A semi-structured interview with the Mathematics teacher.

Exercises and tests on the subject.

The questionnaire about previous knowledge of virtual worlds showed that most of the students were aware of virtual worlds and had even played with them previously (see Table 2). This suggests that virtual worlds are attractive to students and that their motivation is high: virtual worlds capture their attention and interest. Regarding ease of use, we found that virtual worlds were perceived as simple to use and to interact with, so they required little learning effort.

With respect to the usability questionnaire (see Table 3), students found the interaction with the tangible interfaces (PrimBox and FlyStick) simple and easy, as well as the completion of the proposed activities in the virtual world. The only problem found was the Internet connection, which sometimes was too slow.

After the study, we also conducted a semi-structured interview with the mathematics teacher. A summary of the interview is presented in Table 4.

3.2. Activity 1: PrimBox

Activity 1, which used the tangible interface “PrimBox”, involved two student groups: the control group, which used a traditional teaching methodology, and the experimental group, which used the tangible interface and the virtual world. The activity was designed to develop the spatial perception and to practice the technical language of Mathematics and Geometry.

In both groups, the activity was performed in pairs, where one student received a geometrical figure (see example in Figure 6) and had to explain, using mathematical language, the position and orientation of each part of the figure, so that the partner could copy it.

The control group was composed of 29 students in the third year of secondary education and the experimental group was composed of 30 students in the same year. In the case of the control group (see Figure 7), the activities were performed in a conventional classroom where each pair of students interacted with each other in order to draw the required geometrical shape, one of them having seen the figure, and the other one having to draw it.

In the experimental group (see Figure 8), the activity was carried out in a computer classroom where the students used the virtual world to create objects, customized their avatars, explored the different activities that were present in the virtual island, and used the tangible interface PrimBox to perform Activity 1.

In order to evaluate the performance (on a 0–100 scale) three factors were taken into account: the appropriate use of the geometrical language, the correct orientation of the figure, and the correct positioning of the basic geometrical parts. We used the same three metrics in the control group. From these three metrics, the teacher assigned a score to each pair of students on a 0–100 scale. The average score was higher in the experimental group using the tangible PrimBox (all the students scored 100), while the control group got an average score of 74.5.

If we also take into account the time used to complete the task (less time means better performance) we can discriminate between students that got the same score. We may define an “efficiency” measure as the ratio between score and time in order to measure student performance. The higher the score and the shorter the time used, the higher the efficiency. This measurement allows comparing the performance among students of the same group. However, for students in different groups the “time” quantity is not directly comparable. Students using the virtual world scenario may spend some time on “auxiliary” activities (accuracy errors of the tangible interface, Internet connection failures, etc.) not directly related to the core of the activity. To allow a comparison of efficiency between the control and the experimental group, the time variable was normalized by dividing it by the average time in each group.
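The efficiency measure described above can be sketched as follows. This is one reading of the text, in which each time is first divided by the average time of its own group and the score is then divided by that normalized time; the function name `efficiencies` and the sample values are hypothetical and are not data from the study.

```python
from statistics import mean


def efficiencies(scores, times):
    """Return score / normalized time for each pair in one group.

    Each time is normalized by the average time of the group, so a pair
    that takes exactly the average time gets an efficiency equal to its score.
    """
    if len(scores) != len(times) or not scores:
        raise ValueError("scores and times must be non-empty and of equal length")
    if any(t <= 0 for t in times):
        raise ValueError("times must be positive")
    avg_time = mean(times)
    return [score / (time / avg_time) for score, time in zip(scores, times)]


# Hypothetical group: three pairs with the same score but different times (minutes).
group_scores = [100, 100, 100]
group_times = [10, 20, 30]
group_eff = efficiencies(group_scores, group_times)
print(group_eff, mean(group_eff))
```

Because the normalization is done per group, time lost to causes specific to one setting (for example a slow connection) shifts that group's average and does not penalize its members when the two groups are compared.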

As can be seen in Table 5, the average efficiency in the experimental group using PrimBox (113.28 points) is slightly higher than the average efficiency of the control group (106.77 points), and the standard deviation of the data is also lower in the experimental group than in the control group. The students who used PrimBox therefore showed a higher efficiency than the students in the control group, but this difference did not reach statistical significance. In addition, we observed a higher motivation in students performing the activity with the tangible interface than in students doing the activity with a traditional approach.

3.3. Activity 2: FlyStick

The second study aimed to improve the understanding of the conic curves. Two groups of students were involved in the study: one group using the tangible interface FlyStick and the other group using a traditional approach. In the traditional approach group, students received an explanation of the conic curves on the blackboard. In the experimental group, the students used virtual worlds to practice the concepts using the tangible FlyStick. Later, both groups were evaluated using a test where they were asked questions about the different conic sections (see an example of a question in Figure 9).

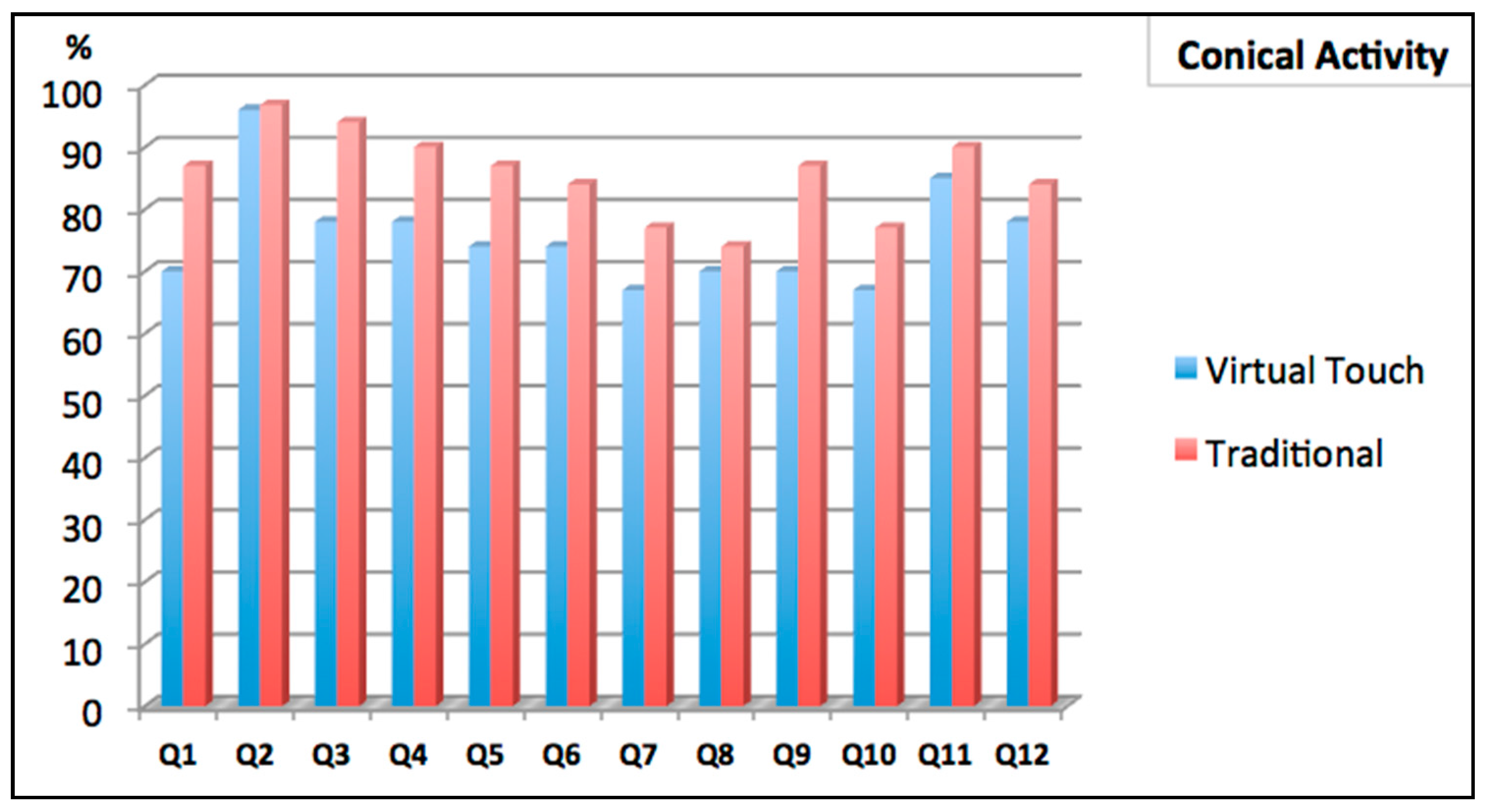

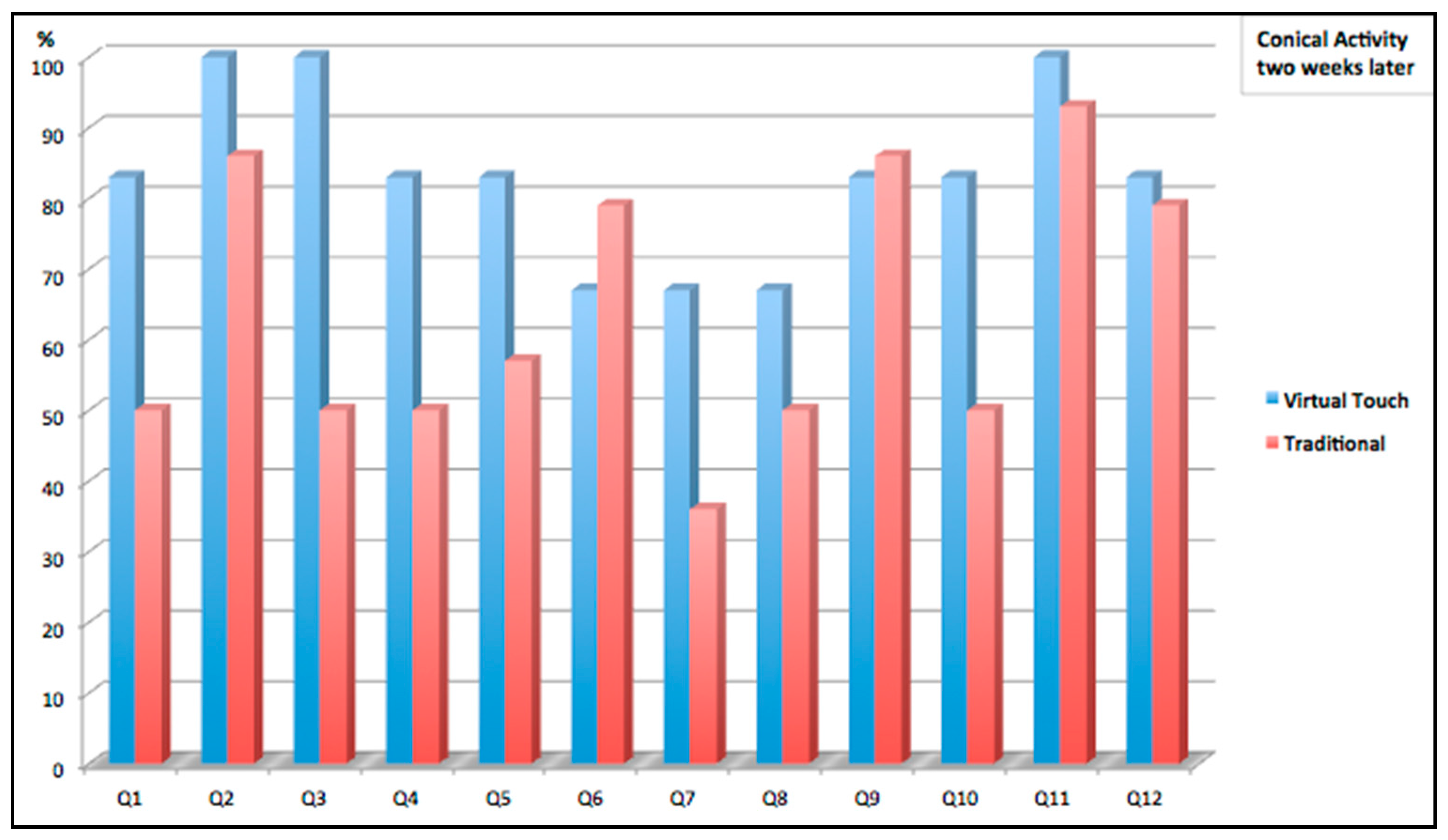

Two competence tests were used to assess learning (see Figure 10 and Figure 11). The first test took place just after the activity was performed (one group using FlyStick and the control group in a normal class). The second test took place two weeks later. Although the results of the first test were better for the students using the traditional methodology (see Figure 12), the students that had used FlyStick got better results in the second test: the Virtual Touch methodology allowed better retention of the knowledge. This suggests that learning with Virtual Touch is more meaningful, probably because motivation makes students remember the concepts more accurately in the long term.

These activities took into account that students with special educational needs may have more difficulty understanding an activity. However, students with special educational needs improved their results in the study by 86% with respect to their previous grades in Mathematics. Doing activities with virtual worlds and tangible interfaces enabled them to improve their mathematical competence, showing better performance.

As explained before, the students took two tests: one just after the activity was done, and a second one two weeks later. We observed that the students in the control group had significantly worse scores in the second test, while the students that used the mixed reality system got scores similar to their first scores. This suggests that the learning process was more meaningful when using the mixed reality system.

To test for statistical significance we applied a paired t-test, as it is suitable for comparing “repeated measures” (before-and-after tests) for the same group of students. The control group was assessed at the time of the study and then control students repeated the test two weeks later. Similarly, for the experimental group, students took the test at the time of the study and then again two weeks later. In this way, we tested whether the difference between the initial test performance (during the study) and the performance two weeks later was statistically significant.

We tested the following two null hypotheses:

H1: The scores for the students in the control group show no significant differences between the first and second tests.

H2: The scores for the students in the mixed-reality group show no significant differences between the first and second tests.

The paired t-test rejected null hypothesis H1 for the control group and, therefore, there are significant differences between the scores in the two tests. In fact, 84.6% of these students got worse results in the test that they took two weeks later. On the other hand, the paired t-test failed to reject null hypothesis H2 and, therefore, there were no significant differences between the scores that students in the mixed reality group obtained in these two tests. The data thus support the conclusion that new knowledge is better retained when using the tangible interface. Using the mixed reality system resulted in more meaningful learning.
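For readers unfamiliar with the procedure, the paired t-test on repeated measures can be sketched as follows. The function name `paired_t_statistic` and the scores below are hypothetical and do not reproduce the study data. The sketch only computes the test statistic and the degrees of freedom; obtaining a p-value additionally requires the Student t distribution, for example through a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(first, second):
    """Return (t, degrees_of_freedom) for paired samples.

    The test works on the per-student differences second - first and
    checks whether their mean differs from zero.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two samples of equal length with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, second)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Hypothetical scores of four students in the first test and two weeks later.
first_test = [8, 7, 9, 6]
second_test = [6, 6, 7, 5]
t_value, dof = paired_t_statistic(first_test, second_test)
print(t_value, dof)
```

A large absolute value of t relative to the critical value for the given degrees of freedom leads to rejecting the null hypothesis of no difference, as happened for H1; a small value leads to failing to reject it, as happened for H2.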