1. Introduction

Earthquakes and similar disasters in remote areas pose a challenge for relief efforts when the transportation infrastructure is damaged. Victims in such disasters have a greater chance of survival the sooner they are discovered; conducting an extensive search even just a few hours faster will save many more lives. Survival rates of earthquake victims continually drop significantly the longer the delay before rescue, with a sharp drop off at around 48 h [

1,

2]. In the 2013 earthquake that hit Lushan, China, Chinese researchers provided an autonomous rotary-wing UAV to assist search and rescue efforts [

2]. Interest in using drones for search and rescue and assessing dangerous situations has also been increasing in local fire fighting departments [

3].

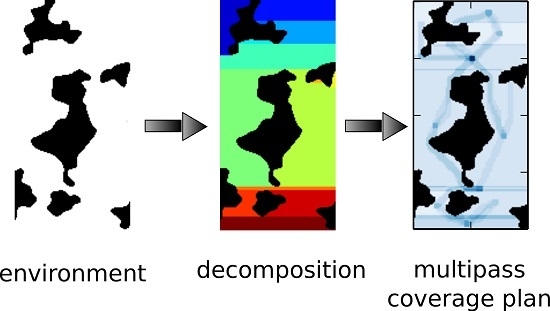

Consider a scenario where an earthquake or flood causes a building to collapse, trapping survivors who are difficult to access by ground. It would be advantageous to have aerial vehicles autonomously fly at low altitudes navigating over rubble searching for survivors. However, such environments may be subject to change, requiring quick decision making while acting on a previously generated plan. There are several reasons why generating a multipass coverage plan is advantageous for target search. Scenarios involving noisy sensors or targets that appear or disappear over time require multiple passes to correctly determine whether or not a target is present. Further, given constraints in mission duration, the agent must be able to properly decide where to search, and for how long, before returning to base.

Mutual information [

4] is an ideal metric to maximize for improving search. Mutual information measures the dependence of two random variables, such as a noisy sensor measurement in the future and the current best estimate of a target’s location. Picking a future sensor measurement that maximizes mutual information will effectively reduce the expected uncertainty of the target’s location [

5]. Most importantly, assessing the mutual information of an action allows one to formally assess the trade-offs between searching a region multiple times or searching another region not searched, making it an ideal metric for multipass multi-target search [

6]. The mutual information of conditionally independent events has been shown to be submodular [

6], which implies that multiple correlated sensor measurements offer diminishing returns in how much information is gathered when summing the total information of each measurement individually. When trying to maximize the submodular reward function of mutual information, the reward is

path dependent requiring one to plan in the space of trajectories (

Section 3), making planning more computationally burdensome.

This presents several challenges to which we will outline our proposed solution. The algorithm must be able to react quickly to new information that can affect the search plan, suggesting an anytime formulation. An anytime algorithm is an algorithm that quickly finds a feasible solution, and continually improves the solution as available planning time permits. Greedy approaches to informative path planning that only consider all immediate actions are quick to react to changes in the environment, but may get trapped [

7,

8]. However, previous work has shown that for submodular optimization problems, greedy approaches can get to within a constant factor of the optimal solution [

6,

9]. Similarly, model predictive control based techniques can look multiple time steps into the future [

10] further improving performance, but can be trapped similarly. While both discrete [

11] and sample based motion planners [

12] have been used in information gathering problems and are capable of generating plans of long duration, they have only been applied to relatively simple environments and have not been used to generate multipass coverage plans, with the exception of the authors’ previous work in the area [

13].

We present what we believe to be the first multipass coverage planner that maximizes mutual information for long duration trajectories that span the entire mission duration. Since such path planning problems are at least as hard as other NP-hard planning problems, use of admissible heuristics to guide the search is essential to improve algorithm performance. While there are many admissible heuristics for path planning, these do not extend well to multipass coverage due to the path dependence of the reward. We discuss the heuristics we developed for multipass coverage planning and demonstrate how they improve solution quality in the given computational time budget. We also extend three other state-of-the-art coverage planners to handle the multipass coverage planning problem and benchmark how the four algorithms perform in simulated natural environments based on total information gathered, expected time for human ground crews to search a cell after the autonomous search, and the time to compute the solution.

This paper significantly extends the preliminary work by the authors [

13] by benchmarking the proposed

-admissible branch and bound algorithm in realistic simulated environments, and extending the analysis of the algorithm’s performance. The new realistic environment models account for sensors viewing multiple cells, the physics of the vehicle, and realistic variations in region shapes using state of the art environment decomposition techniques on simulated obstacle occupancy grids generated by gradient noise. Experimental results compare the performance of the proposed

-admissible branch and bound algorithm to other state of the art algorithms and also assesses how well in expectation the various algorithms guide human relief crews using a simplified effort allocation model. Findings show that

-admissible branch and bound is able to gather the most information and reduces the expected time for ground crews to search a cell the most. Such quality plans come at the cost of requiring the most time to compute, however. We conclude our analysis with a discussion on the low cost of supercomputing capabilities contrasted with the large value of a statistical life.

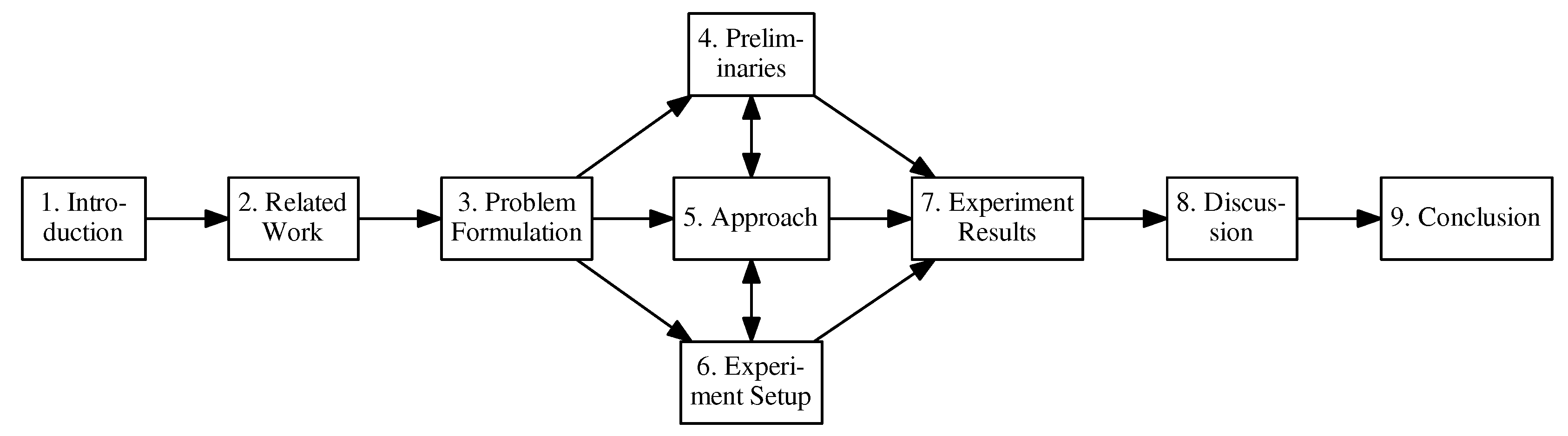

The paper is organized as follows, following the road-map in

Figure 1.

Section 1 offers an introduction and motivation to the topics presented.

Section 2 covers related work in the literature.

Section 3 offers a formal problem formulation for multipass coverage planning for target search. The detailed description of the algorithm presented in this paper is divided into three related sections. Cross references are provided to establish ties between related concepts; readers may skip the cross references on an initial read.

Section 4 introduces important preliminary concepts and introduces the benchmark algorithms.

Section 5 outlines the proposed algorithm in the paper, and outlines how the analysis will be conducted.

Section 6 discusses implementation details of the algorithm outlining the simulated natural environment developed for benchmarking the algorithms.

Section 7 covers the experiments and their results,

Section 8 discusses the experiment results and

Section 9 concludes the work and discusses future work.

2. Related Work

Research interest in probabilistic target search appeared as early as 1956 [

14] studying the probability of detection of a target given various sensor conditions. Since then, a large body of work has been developed on the task of target search, including many surveys of the subject [

15,

16,

17].

First and foremost, our literature survey will focus on problems requiring active search where agents keep moving to locate targets [

18,

19] instead of static surveillance [

20] (consider placement of static sentries in the art gallery problem to detect intruders [

21]). Several important factors that delineate different approaches in the literature include the number of targets to be tracked, the assumptions about the target motion model, and who is searching for the targets. Some approaches are only for finding a single target, while others are for finding multiple targets. When working under a Bayesian framework, it is common to track a probability density function of a single target’s location [

22]. This can be extended to a predefined number of targets in parallel [

23,

24], or using random finite sets to track the likelihood of the existence of a random number of targets [

7,

18,

25].

Further, whether or not targets are stationary or dynamic affects the search. If targets are known to be stationary (or static), one could divide the search space into cells on a grid and track hypotheses in each cell [

7,

22,

26]. Or, one can track the number of targets contained within each cell [

27,

28]. Since our work assumes stationary targets, there is a grid of cells, but the approach collects multiple cells into regions for faster evaluation of coverage plans by planning at the coarser granularity of searching regions instead of cells, requiring fewer actions for a full duration coverage plan at the cost of generating suboptimal plans. Targets that react and move according to the agent’s position can be assumed to have an adversarial relationship characterized by pursuit-evasion games [

16]. Alternatively, target motion can be modeled as a Markov process which is unaffected by the agent’s actions [

19,

29,

30]. Certain approaches also factor coordination between agents to find the target(s), either implicitly or explicitly [

30]. Regarding multiagent algorithms, in particular explicit coordination methods are exponentially complex with the number of agents [

30]. Multiagent coordination where the agents share and fuse observed data is common [

19,

23,

24,

31,

32,

33]. Multiagent search can also be adversarial where two competing teams are searching for the same target [

34].

The use of information theory to improve the gathering of information in target search is widespread. Mutual information is a natural choice for modeling uncertainty reduction, and it works for continuous, discrete, and hybrid representations and is compatible with Bayesian search techniques. However, computing plans of arbitrary length that maximize mutual information is exponentially complex [

6]. Many approaches either greedily maximize mutual information [

7,

8] or look only several actions into the future, similar to model predictive control [

10,

19,

22,

23].

In contrast to finding trajectories that maximize mutual information, one could plan trajectories that minimize the time to detect the target [

35,

36,

37]. Minimizing expected time to detection vs. guaranteeing capture or reducing target location uncertainty will be appropriate in different scenarios [

37]. However, the mentioned works are formulated for either a single target [

35,

36] or for a fixed number of targets [

37], which is unsuitable for widespread disasters on land where the number of survivors is unknown

a priori.

Hierarchical approaches can be used to improve the representation of the target distribution or of the environment to speed up the search. Quad-tree like structures representing the target distribution have been used [

7,

38]. Alternate approaches take advantage of the structure of the environment (e.g., rooms in an indoor map) [

30,

39] but assume that regions are convex and a line of sight sensor is used. They do not generate coverage plans for each region, which is something we explicitly do. Other work considers uncertain or changing environments [

8,

40] and focuses on potentially hostile environments that can destroy robots.

Branch and bound is a discrete optimization technique that uses admissible heuristics to guide and significantly speed up the search for the optimal solution compared to e.g., brute force enumeration of all candidate solutions. Branch and bound was initially introduced for target search when search effort of a particular region was indivisible [

29].

It is important to note that the term heuristic in the robotics community has multiple meanings that vary widely given context, often causing confusion. In the most general sense, heuristics use incomplete information about a problem to find a solution quicker. Certain heuristic algorithms tend to discover suboptimal solutions very quickly (which we denote as suboptimal heuristic algorithms), while admissible heuristics, when combined with a search algorithm such as Branch and Bound or A*, have formal properties that guarantee the discovery of optimal solutions or discover optimal solutions with a minimal number of iterations. Further, inadmissible heuristics do not formally qualify as admissible heuristics but tend to offer performance tradeoffs for the algorithm designer when substituted for admissible heuristics. An -admissible heuristic offers computational speedups at bounded loss in solution optimality.

Of particular interest is related work that considers a moving target but seeks to maximize the probability of target detection subject to fuel and risk constraints [

41]. For information gathering, branch and bound has been used for feature subset selection [

42], and has been used in the similar traveling salesman problem (TSP) [

43,

44]. Branch and bound has been combined with Gaussian Processes for informative path planning [

11] and is arguably most closely related to our research. However, the bounding heuristic used cannot account for multiple sensor measurements in the same location, for which we must explicitly account.

An example recursive greedy algorithm heuristic solver solves the submodular orienteering problem [

45]. This algorithm, however, assumes that each state is only visited once, making it unsuitable for our application.

In the scenario with uninformative prior information, there is a close resemblance between trajectories that maximize mutual information and coverage plans. A recent survey outlines the various techniques used in coverage planning [

46]. Two approximate solutions are of interest which focus on the 2D case. Zelinsky’s algorithm uses dynamic programming to generate a value function, then traverses level sets to cover the entire region [

47]. The proposed algorithm can get stuck in the presence of obstacles and must back out to continue its coverage plan. Boustrophedon coverage outlines an approach to partition polygonal environments and suggests using a modified version of depth first search (DFS) to dictate the search order of the various regions [

48]. For optimal coverage on graphs, this is equivalent to the traveling salesman problem, which is NP-hard. Interestingly, the solution to the Chinese Postman Problem [

49] where the goal is to find the optimal tour covering all edges of a graph can be solved in polynomial time.

With regards to information gathering algorithms not directed towards target search, a time-varying information objective was explored in [

12], when using sampling based motion planning for environmental information gathering.

3. Problem Formulation

We define a common benchmark problem to solve multipass coverage planning problems by defining an environment (sensor model plus motion model) with an objective function to maximize. We are most interested in (1) the quality of the solution the algorithm generates (how much information the algorithm gathers which in turn changes how long it takes for relief crews to search a cell for survivors) and (2) how long it takes to compute the solution.

Define to be workspace of the robot where . Let be the configuration space consisting of time domain T, workspace W and all higher order tangent spaces required to define the robot’s trajectory. We assume that the robot starts at point . For our application, we are interested in planning in the space of feasible trajectories. A feasible trajectory is a time indexed curve in C that starts at and that the robot’s dynamics is capable of reproducing. We denote the space of all feasible trajectories in time domain with end time t as . Let be the space of all valid trajectories whose end times vary between 0 and t.

Sensor measurements used for target search are stored in a discrete evidence grid covering workspace W. Instead of counting the sensor measurements the agent observes, we count the sensor measurements observed in each cell separately with . Define grid cell for and for user defined resolution r (typically, ) where such that the collection exactly covers the workspace.

For each cell

define

X to be the hidden state of whether or not the cell contains a target.

X may be discrete or continuous.

is the

kth sensor measurement in

g and

is the collection of the

k sensor measurements

. A continuous example is the Bernoulli process where

and

. This can model targets that disappear and reappear from view randomly. Let

be an observed sequence of measurements (a realization of

). When it is necessary to denote the particular grid cell in which sensor measurements are taken we shall use superscripts, e.g.,

is the observed sequence of measurements in

. Each cell in the evidence grid is assumed to be independent from all other cells, that is

for

and

. For all

we assume that

is independent of time and thus

and

are not affected by the time(s) at which the measurements are taken. The rationale behind this is that targets with restricted mobility (can only move within one grid cell) can be accurately modeled with a stationary process model, plus removing the dependence on time improves the ability to precompute the mutual information of future sensor measurements. Given the forward sensor model

, we compute the inverse sensor model using a recursive application of Bayes’ Rule, (Equation (

1)):

in which all sensor measurements are conditionally independent. From this model it is possible to compute the mutual information

. In practice, this can be computed

a priori for any process and sensor model, and tabulated on the number of positive and negative sensor measurements observed (see

Section 5 and

Table 1 in

Section 6.3 ). This model can account for either static (consider the occupancy grid model) or stationary targets (target’s presence at any moment of time is the outcome of a Bernoulli Process, but the target’s distribution is independent of time). The outlined approach in this paper would need to be modified to be used with nonstationary targets (whose target distribution is time dependent such as a Markov chain) or dynamic targets (whose belief distribution may be in more than one cell). We will assume the use of an occupancy grid model [

50]. This model has closed form solutions which are obtained by judiciously applying basic properties of probability and information theory; use of other process/sensor models lies beyond the scope of this paper. Define submodular function

to be the total mutual information gathered by a total of

q future sensor observations. We note that in this approach, mutual information is used to predict the total information gain of future trajectories conditioned on any previous sensor measurements observed.

Given a particular configuration

, the sensor model also defines a sensor configuration footprint, encoding which cells in

are observed given the perspective (field-of-view), and characteristics of the sensor (consider the projection of the image plane onto the evidence grid for a downward facing camera). This extends to trajectories, defining the trajectory footprint of the sensor following the path of the trajectory (Equation (

2)).

It holds that different sensors have different footprints (e.g., downward facing camera vs. scanning LIDAR).

is equivalent to a 2D array the same size as the evidence grid by defining

to be the number of observations in the indexed cell. The cumulative mutual information collected by time

t assuming the robot has followed

is given by the sum of the mutual information of the future sensor measurements and the hidden state conditioned on previously observed data within each cell.

The problem of multipass coverage planning for information gathering with mission duration constraints is defined as follows: Find

, the trajectory of time duration

t that maximizes the cumulative mutual information in

,

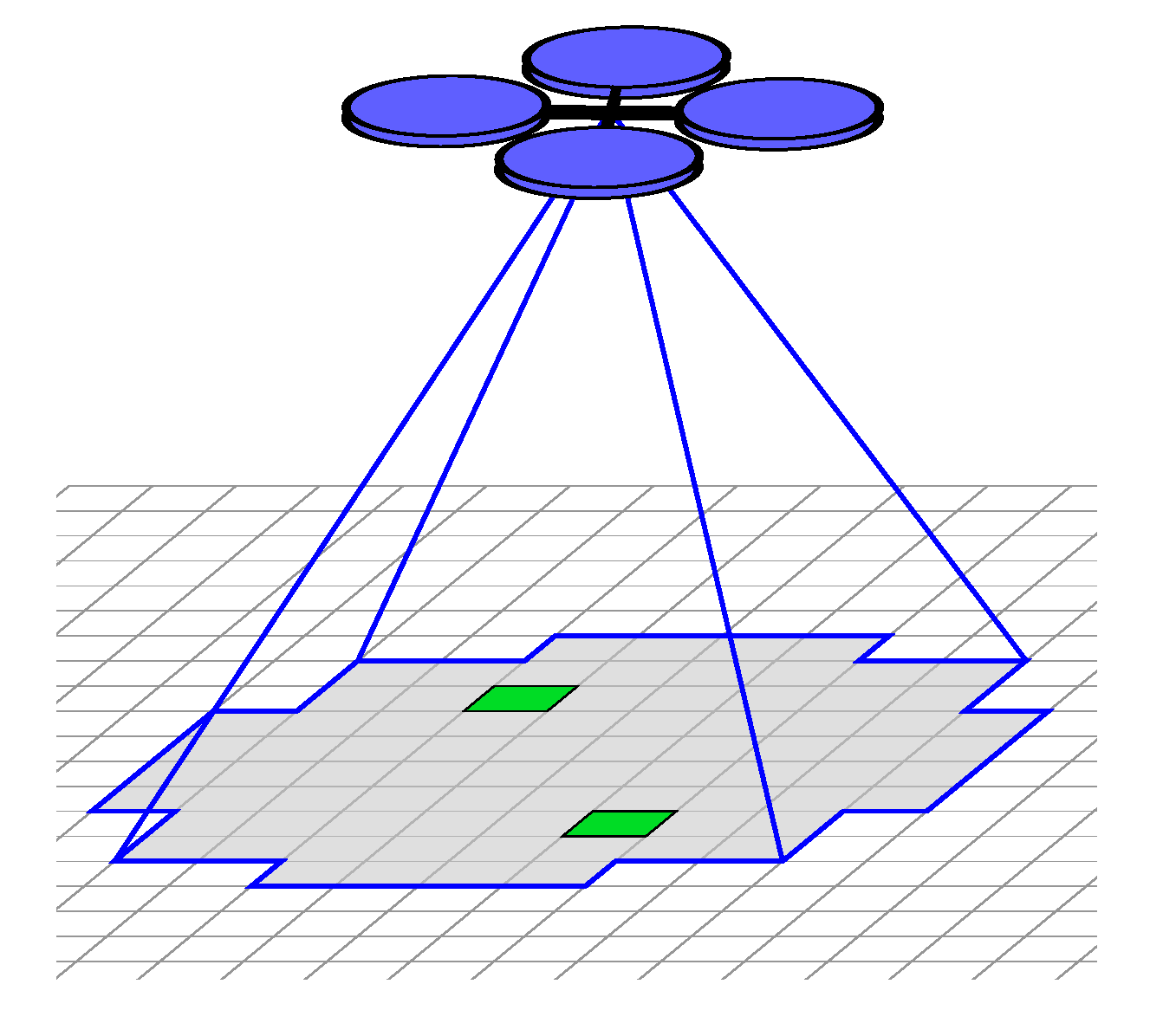

3.1. Motion and Sensor Model

We assume a motion model

where the current trajectory and next action determine the subsequent trajectory (along with next state

c and trajectory footprint). In order to minimize the number of actions required to generate a coverage plan to minimize the depth at which one must search to find the solution, we cluster the cells of the evidence grid into contiguous, connected regions. The agent then has the choice to either traverse between connected regions, or search a given region. See

Figure 2 for an example motion model. At a lower level hidden from the planner trying to solve (Equation (

3)), the agent stitches motion primitives together to generate a trajectory to complete a given action (

Section 5.3). Previously, we tested the proposed approach in rectangular environments [

13]. In this work we extend such findings to more natural simulated Boustrophedon environments as will be covered in

Section 6.1 and

Section 6.2.

4. Preliminaries

Now that we are given a proper problem formulation, we now introduce relevant background material before proceeding to the approach.

4.1. Benchmark Algorithms

In addition to our proposed algorithm, there are three additional algorithms we would like to benchmark for the described scenario, that we can classify as being either heuristic solvers or planning algorithms. It is reasonable to suppose that heuristic solvers will get solutions faster by solving an easier problem (often in polynomial time), but will get lower quality solutions. Planning algorithms, on the other hand, solve the problem at hand by reasoning over many candidate solutions. Planning algorithm performance can be improved by using heuristics to guide the search. In practice, the use of properly designed heuristics to guide the search reduces the computational effort by a significant constant factor making exponentially complex algorithms tractable, while still obtaining (near-)optimal solutions.

We evaluate two heuristic solvers including (1) a single step greedy heuristic solver; and (2) the depth first search coverage based planner which is used in covering Boustrophedon decomposed environments [

48] and has been adapted to generate multipass coverage plans. For planning algorithms, we test both depth first branch and bound and

-admissible branch and bound (

-admissible B&B: Algorithm 1 [

13]).

-admissible B&B uses improved heuristics to dramatically increase the quality of the solutions. Similar to ARA* [

51] and

e-admissible search [

52],

-admissible B&B uses an inadmissible heuristic to speed up the search time for branch and bound to find a good solution with bounds on sub-optimality.

The greedy heuristic algorithm greedily maximizes total information gathered per unit of time for the next immediate action. When the greedy heuristic is selecting the next best action, it is possible (but unlikely in natural environments) that two or more actions result in the same (maximal) amount of information gathered per unit of time. Such ties are resolved by selecting the action that acquires more total information. This is done iteratively until the planned trajectory spans the entire mission duration.

For the depth first coverage solver, one uses a modified version of depth first search (DFS) to generate a tree that spans all regions in the environment. Further, the iterative greedy heuristic g computes how many times region i should be searched to maximize mutual information. The agent then traverses the tree based on the DFS order, keeping track of how many times it has searched each region . To ensure a more uniform coverage of the environment, the agent searches the current region at most once per visit. Traversing the DFS tree will have the agent visit most nodes multiple times, and the tree can be traversed multiple times until the time expires (provided there is enough time to search). Each region is searched at most .

To illustrate the necessity of using priority heuristics and -admissible heuristics, we contrast -admissible B&B to depth first branch and bound (DF-B&B), as this is the more typical implementation of branch and bound (especially when implemented using recursion instead of a queue). Common practices with branch and bound tends to favor a depth first ordering when enumerating partial solutions because it tends to find initial (complete) solutions quicker enabling one to start bounding the space earlier in the search. Best first search strategies, such as A* with good heuristics, will evaluate fewer nodes when looking for the optimal solution however. Note that DF-B&B differs from DFS in that DF-B&B computes for every node, where DFS finds a spanning tree of all regions which in turn defines the search order, and only computes once to determine how many times each region should be searched. DFS therefore is much faster to compute than DF-B&B.

Note that by convention our motion model returns actions that traverse regions (sorted based on edge information) before actions that exhaustively search regions. Without modification, DF-B&B will only focus on actions that traverse between many regions and find low quality solutions within the allotted time. To generate high quality solutions quickly, the action set ordering should be randomized (i.e., permuted) such that DF-B&B will deliberate over more diverse action sequences. Such permutation schemes should be complicated enough to prevent cycles in the action space. This modification remains within the scope of the depth first search algorithms since the motion models do not require a preference over the ordering of different actions.

In previous work [

13] we used Zelinsky’s algorithm [

47] as a benchmark, however Zelinsky’s algorithm does not readily extend to sensor models whose footprints extend beyond a single cell in the grid and hence the sensor models used in this study.

4.2. Heuristics

Several challenges exist in designing heuristics for information gathering for multipass trajectories that do not exist in path planning for waypoint following:

All regions must be explored (possibly multiple times), requiring the heuristic to be more computationally demanding and explore a larger portion of, if not the entire workspace.

The reward function is path dependent since mutual information is submodular, requiring planning in the space of trajectories instead of the configuration space.

There is an increased number of paths of equal or similar reward, further compounding the problem.

To counter these challenges we define the notion of an

-admissible heuristic for branch and bound in previous work, and observe that at significant performance speedup we can more greedily prune the solution space with a bounded loss in optimality [

13]. For our application we effectively transform the admissible iterative greedy heuristic

g into an

-admissible

(see

Section 6).

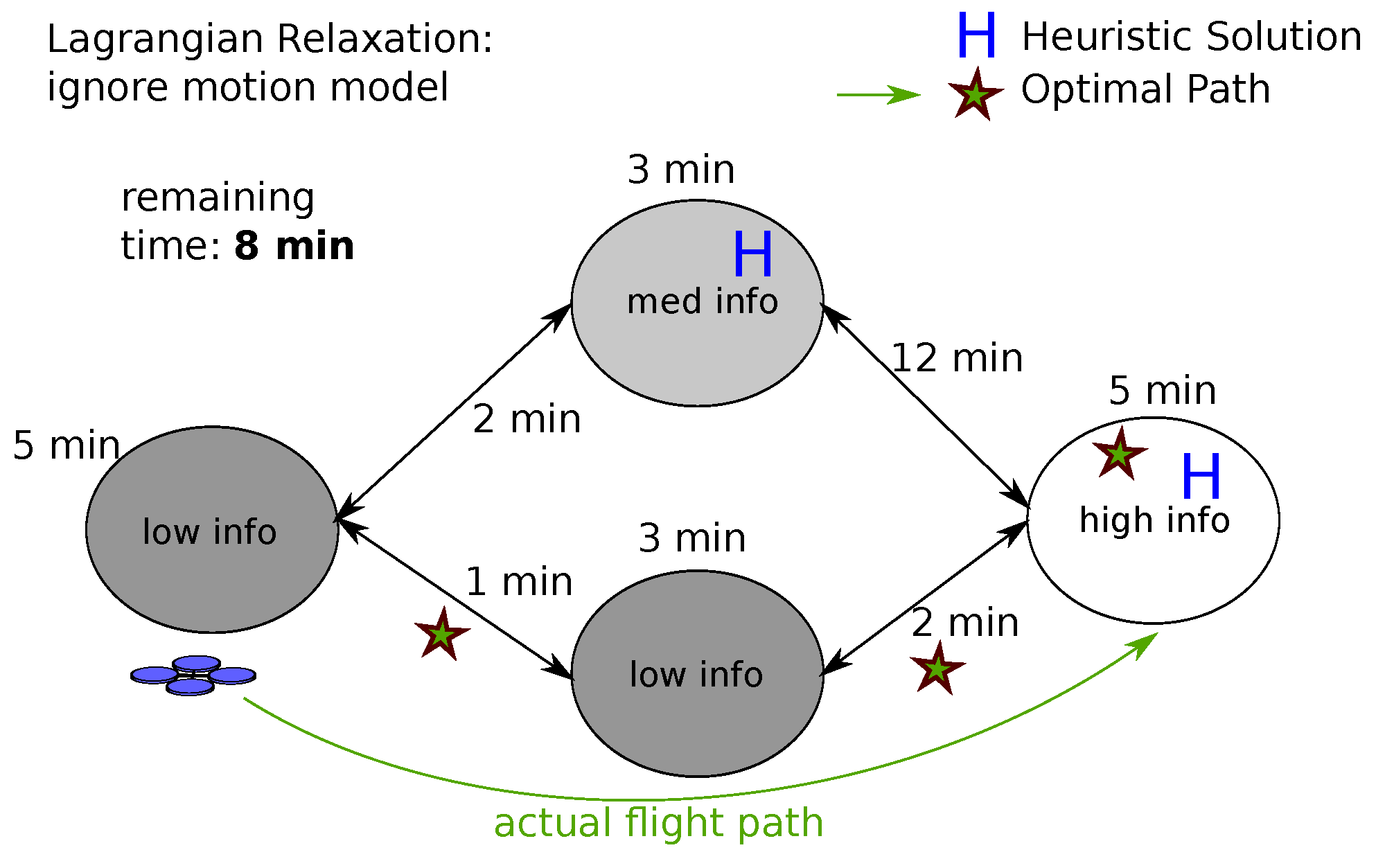

Iterative Greedy Heuristic

For completeness we now describe the iterative greedy heuristic developed for multipass search in previous work [

13]. Relax the problem formulation in (Equation (

3)) by permitting sequences of actions that are not feasible. In other words, regardless of the agent’s current state, the agent must select

L discrete actions to take, from all

actions that are available from any state (Note that to guarantee admissibility

is the sum of all actions that transition between regions and all actions that search a given region,

Section 3.1).

Define decision variable

with

the number of times the

ith action is taken, with decision vector

. For the multipass coverage planning problem, actions can be selected multiple times. Selecting action

i the

th time yields the incremental reward of

.

is monotone increasing and concave (

but

). The agent’s goal is to select

to maximize the function:

The strategy to find the optimal solution is to sort all actions based on the incremental reward. While picking one action may affect the reward of other actions, those actions affected are a small subset of all

actions and can be recomputed, since information content is localized to a given cell, and the information in cells may be accessed by different actions. During each round the action with the most reward is selected; by the concavity of the reward function the reward for taking an action

a the

ith time is collected before taking action

a the

th time [

13]. An illustration of how the iterative greedy heuristic works in practice can be found in

Figure 3.

This formulation is similar to previous work that uses multi-armed bandit (MAB) relaxations for gathering information [

53]. However, the MAB heuristic computes dynamic allocation indices [

54] in scenarios where reward is uncertain. The iterative greedy heuristic gathers expected information gain, which automatically factors measurement uncertainty, offering a simpler approach for the problem at hand.

One of the main limitations to the iterative greedy heuristic is that it does not account for the flight time associated with the agent transitioning between regions. This means that many different distributions of information will have the same heuristic reward even though it will be easier to gather the reward in some scenarios and not in others. Algorithms like A* or best first branch and bound expand all nodes with optimistic reward greater than or equal to the optimal reward, dramatically increasing search time if there are many paths of similar cost. One might consider getting a tighter bound on total information a node can gather with the remaining time available before the mission ends by estimating the total remaining traversal time. One such way to obtain a lower bound is to generate a minimum spanning tree [

55] of all regions the heuristic visits. While this approach works for traveling salesman problems [

43], such an approach cannot properly bound the information gathered when traversing between regions, making it unsuitable for the problem at hand.

Further, in large maze-like environments, it is often difficult for the planner to explore the search frontier when a candidate solution backs itself into a corner and requires multiple actions to return to the frontier, which is likely to happen when a multipass algorithm is used in large environments.

The iterative greedy heuristic requires the evaluations of many footprints for their information content. In order to speed this up, we first note that changes in cost can be computed locally for the region being evaluated immediately. Second, caching the rewards for footprints is essential to improving the speed of the heuristic. Whenever a region is selected greedily, at the next iteration the rewards only have to be recomputed for the previously selected region (and any other regions it may overlap), resulting in a constant factor performance improvement with . Caching is therefore a constant factor improvement in the run time of the heuristic.

5. Approach

In order to solve (Equation (

3)), we benchmarked four different algorithms using the same reward and motion model to determine their ability to generate quality solutions and determine how long it takes them to compute the solution. As we did in previous work [

13], we observe that

can be computed from

as a 3D look-up table dependent on the number of positive and negative observations for a given cell by applying the chain rule for mutual information [

4] and the exchangeability property of Binomial random variables, offering

mutual information look-up speeds in RAM with as little as 32 kilobytes of memory for a 20 × 20 × 10 array of double precision floats.

Our proposed method is

-admissible branch and bound [

13], is based off of branch and bound [

56] and is summarized in Algorithm 1 with subroutines Branch (Algorithm 2) and Bound (Algorithm 3). In short, branch and bound works by iteratively partitioning the solution space (branch), and determining if a subspace contains a solution that can potentially beat the current best known solution using an admissible heuristic (bound). Subspaces that can be determined to not contain the best solution are pruned or

fathomed, speeding the search. When expanding the search tree denoting subsets of the solution space, define a search node

N to be a partial solution with a family of candidate solutions, combined with a reference to its parent node, its priority, and other quantities required for improving the search. Search node

N is different from a node in the graph of the motion model. DF B&B can be created by modifying Algorithm 1 such that

is a stack (LIFO queue), priority heuristic

is no longer required, line 14 in Algorithm 1 is eliminated, and admissible heuristic

g is used in place of

-admissible heuristic

.

5.1. Priority Heuristic

For the priority heuristic in

-admissible branch and bound (Algorithm 1 line 14), we use the weighted heuristic that discounts future reward to offer a compromise between best first and depth first ordering. For a node

N in the search tree, define priority

to be (Equation (

5)) [

13]:

given current reward

, admissible bounding heuristic

, and future reward discount factor

. Setting

towards zero biases the search towards depth-first like behavior.

| Algorithm 1 -Admissible Branch and Bound |

- Require:

Priority Queue , Queue , evaluation function , -admissible heuristic , priority heuristic , Start node , end time , maximum number of iterations , heuristic reward - 1:

- 2:

- 3:

- 4:

while is not empty or do - 5:

- 6:

- 7:

- 8:

if N is a complete candidate solution and then - 9:

//new best solution found. Store it. - 10:

end if - 11:

- 12:

for all do - 13:

if and not in and N not in ) then - 14:

- 15:

- 16:

- 17:

- 18:

end if - 19:

end for - 20:

end while

|

| Algorithm 2 Branch |

- Require:

motion model - 1:

for all available actions i from do - 2:

//roll out trajectory using motion model - 3:

//append to new search node - 4:

end for - 5:

return

|

| Algorithm 3 Bound |

- Require:

Search node/partial solution N, current best reward B, heuristic - Ensure:

determination if N should be expanded. - 1:

if then - 2:

return True //current partial solution can potentially beat best known solution - 3:

else - 4:

return False //current partial solution cannot beat best known solution - 5:

end if

|

5.2. Agent Model

While this work is targeted at quadrotors, the outlined approach generalizes to other physical systems. Although quadrotors are a differentially flat system [

57] requiring 4th order dynamics, we will focus on using a second order integrator subject to velocity and acceleration constraints for this work. We first observe that physical systems have bounds on their acceleration capabilities due to the force output of their actuators, or the maneuvers their controllers can reliably execute, imposing a maximum nominal acceleration on the platform

. Lower accelerations for quadrotors reduces the maximum attitude angles observed (similarly with their derivatives), which can affect sensor measurements. Second, we observe that it is often the stopping distance vs. the range of onboard collision detection sensors that dictates how fast a vehicle can safely fly in partially known environments, requiring a hard bound on maximum nominal velocity

. When traveling at maximum speed

, decelerating at

, the stopping distance before the system comes to rest is

. The vehicle can safely stop if its stopping distance is shorter than its sensing range. Alternatively, if the vehicle wishes to steer around an obstacle

distance ahead maintaining forward speed, the distance the robot can steer,

. The robot steers clear if

exceeds the distance it must move to avoid collision. In short, a vehicle that wishes to double its safe forward speed must quadruple its acceleration capabilities or sensing range. Further, analysis of single order integrator systems flying through Poisson forests suggest that above a certain speed, collision will occur with probability one regardless of the planner or controller used [

58].

Define

as the L2 norm while

. Other flat states such as altitude or yaw could be included but are ignored for our work.

5.3. Motion Primitives

In order to execute a high level behavior, sequences of waypoints

are chained together to create multiple point to point maneuvers. For now we assume that a decomposition algorithm divides the environment into contiguous regions that are easy to search (how we do this is covered in

Section 6.2). When traversing between regions (central point to central point), a simple line connecting the regions may not suffice due to the presence of obstacles. To solve this, we create an alternate motion model that is an 8 connected graph on the underlying evidence grid. A* can generate a sequence of cells minimizing path cost (path length). However, this sequence of waypoints is very dense; many of the waypoints are unnecessary to define the shape of the path and can be eliminated by the following method. One only keeps a waypoint

in the sequence if the angle between vectors

and

is above some threshold, e.g.,

. Otherwise, remove waypoint

i and continue.

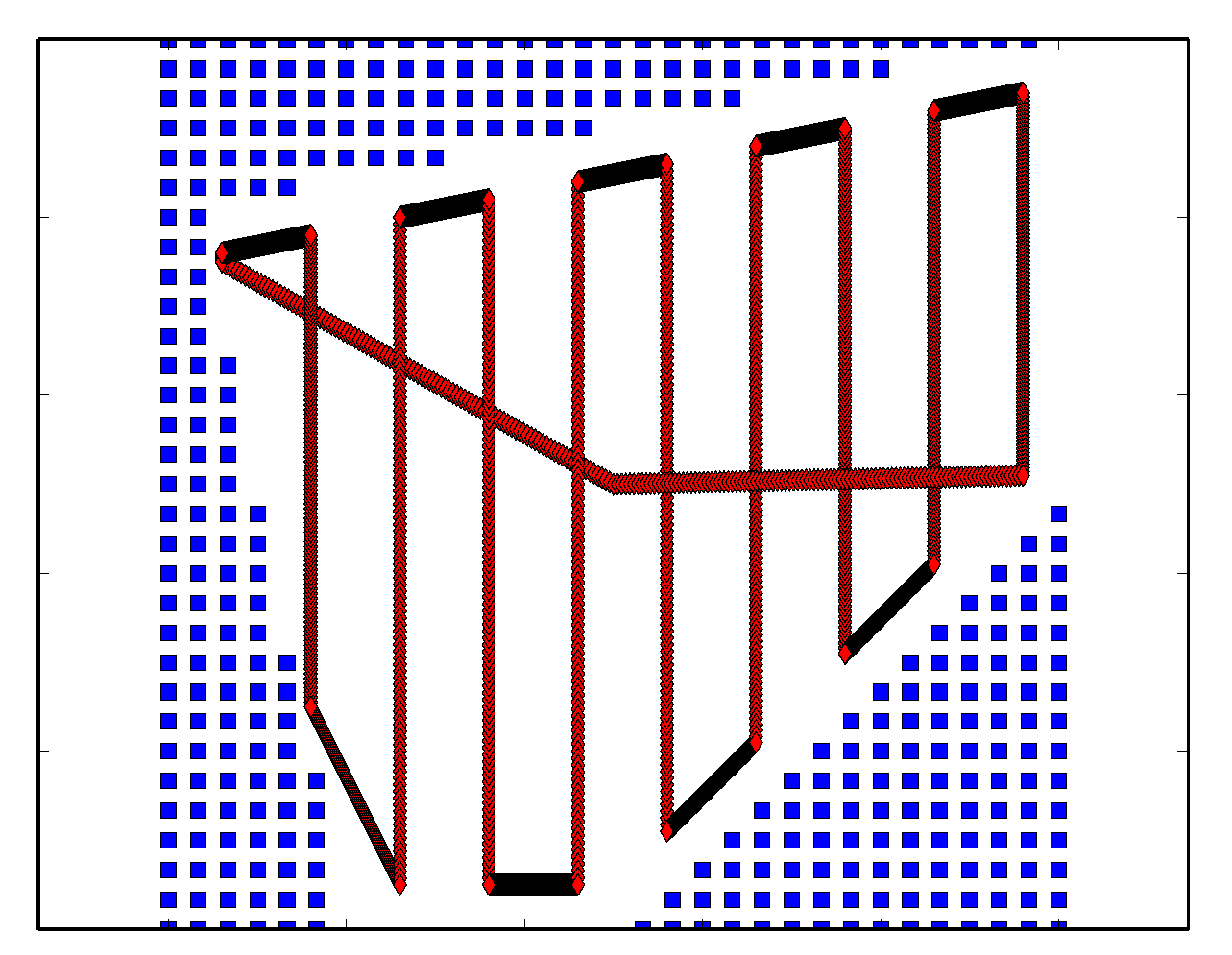

In order to search a region, a dynamically feasible coverage plan is needed. The proposed method creates rectilinear uniform coverage plans (

Figure 4) with “plow lines” that are parallel to the slice direction defined by the Boustrophedon Decomposition algorithm (

Section 6.2). The agent transitions between adjacent “plow lines” rectilinearly. In order to compute the start and stop positions of the plow line, the geometry of the (boustrophedon) region is known, and line search is conducted to find the max and min values along a given plow line. Spacing between plow lines is dictated by the maximum sensing radius.

The use of a uniform coverage plan makes the tacit assumption that information is uniformly distributed within the region. This may or may not be the case in practice, reducing the effectiveness of the information gathering algorithm. Having a coverage planner that can directly reason over contiguous nonuniform regions is compatible with the proposed approach, but is to be considered in future work. An alternate approach is to use the distribution of information to guide the selection of regions. The mere act of intelligently decomposing the environment into separate regions can dramatically simplify the problem by solving information gathering problems in smaller contiguous regions. We note that for illustrative purposes, all algorithms benchmarked in this paper have access to the same motion model, suffering similarly.

Apart from rectilinear coverage plans, one might consider using spirals [

37] due to their ability to efficiently cover 2D areas. However, given the problem specification of having to account for obstacles while generating dynamically feasible coverage plans, The task of covering non-circular/non-square regions found from a decomposition algorithm makes spiral based coverage plans impractical due to inefficiencies in covering the edges.

5.4. Point to Point Maneuver

For vehicle trajectory

connecting points

and

for times

, solve the following optimization problem:

For the system in (6), the problem in (Equation (

7)) is a simple bang-bang control problem [

59] minimizing the trajectory time interval

by selecting optimal control

. The corresponding trajectory can be written in closed form with continuous position and velocity, and piece-wise constant acceleration at

. The system either has one or two switching times depending on whether the system has enough time to accelerate to its maximum velocity.

Without loss of generality we will consider a scalar model, letting with dynamics . Let , and .

Theorem 1. Necessary condition: the time optimal control resides within the domain .

Proof. There are two cases, when there exists 1 switching time (when

and t is not the switching time or when

) or when there are 2 switching times (

) for

. When

, one can show by using the Pontryagin’s Maximum Principle (PMP) [

59] that control for the time optimal solution

.

When , the velocity constraint will become active during the trajectory. As a simple illustration, suppose that the system accelerates to the maximum velocity . To continue accelerating would violate the velocity constraint, so the system must either stop accelerating or slow down. For any system slowing down before the prescribed deceleration at the end of the trajectory, the traversal time would increase, yielding a suboptimal solution to the time optimal problem. Therefore, the system will cruise at with until it is time to slow down and . ☐

For a more rigorous proof, consider a formulation of Pontryagin’s Maximum Principle with state inequality constraints to get the case when the velocity constraint becomes active. Typical formulations of PMP without inequality constraints will not elicit costate trajectories that have multiple switching times.

With the domain of u and known problem constraints, the switching times can be determined along with the optimal control by inspection (speed up, cruise, slow down). With known control u, and can be solved for closed-form by quadrature. To increase the average speed of the vehicle, it is advantageous to remove the zero velocity and acceleration constraints at the end of each maneuver. Boundary conditions with are easy to solve for closed form using the mentioned approach when the velocity is in the direction of the vector . Two extensions are to use splines (where it is difficult to guarantee hard bounds on velocity and acceleration) or to use Dubbins like trajectories where the use of circles connect adjacent plow lines.

5.5. Search Effort Allocation Model

For comparison of results between algorithms, we define a simplified search effort allocation model to see how effective the various multipass coverage planning algorithms are at directing rescue teams. This simplified model can study the practical consequences of having informative trajectories for search and rescue but ignores effects due to finite search resources/time, survivor survival rates, and the time spent transitioning between cells. The search effort allocation model is

distinct from the sensor model used by the planner based on the different attributes or roles the autonomous agent and human ground crews have during the search and rescue operation. We assume that after executing the autonomous search using one of the benchmark algorithms outlined in

Section 4.1, the agents generate an occupancy map which become prior probabilities

for the human rescue teams on the ground. Let

D be the event that the target is detected within a given cell, while

is the absence of detection.

X is the presence or absence of a target in the cell as in the previous model (Equation (

1)).

is the detection time constant effectively rescaling the given search time to search effort. Define the probability of detection of a target in a given cell (Equation (

8)) [

5,

60]:

Using Bayes’s rule and (Equation (

8)), we compute the probability the target is still present despite there not being a detection after searching for

t seconds (Equation (

9)):

The human rescue teams search the cell for

T units of time for a survivor until the survivor is found or

. If

, the cell is skipped. Using this policy, the maximum search time

for the cell is (Equation (

10)):

Note that the probability density function

is due to (Equation (

8)). We wish to compute the expected time for the search in a given cell to end by letting

for a given trajectory generated by the algorithm. We start off by computing the expected time to search the cell given prior probability

by using the law of total expectation over

X (Equation (

11)):

This leaves the computation of the distribution of priors

. For each cell in the evidence grid, the distribution of outcomes for

given the sensor data (planned

K sensor measurements) can be computed for all realizations.

can be computed directly/deterministically from the known number of sensor measurements and known sensor data

. Since we assume that all cells have the same characteristics, we marginalize over the distribution of sensor measurements (computed from the trajectory footprint) and marginalize over all realizations. We also use the exchangability property of the sensor measurements to use a Binomial random variable

(Equation (

12)):

Without loss of generality we assume , meaning t implies the number of time constants spent searching a given cell.

6. Experimental Setup

We now discuss the formulation for modeling quadrotors with downward facing cameras in natural environments, and discuss experimental setup. When selecting our

-admissible heuristic for

-admissible branch and bound we only want the algorithm to expend effort when there may exist a solution that is at least a factor 1 +

better than the current best known solution. We let

where

B is the reward of the current best known solution.

at start up if no heuristic solution is known or computed. Unless otherwise stated we let

. Simulations were run on a workstation laptop running 64 bit Ubuntu 14.04 LTS with a Core i7-6920HQ which has a 2.9 GHz clock with 64 GB RAM. The proposed branch and bound algorithm, the greedy algorithm and the depth first search (DFS) coverage planner were developed in Python, using NumPy [

61] for array operations and networkx [

62] for graph operations.

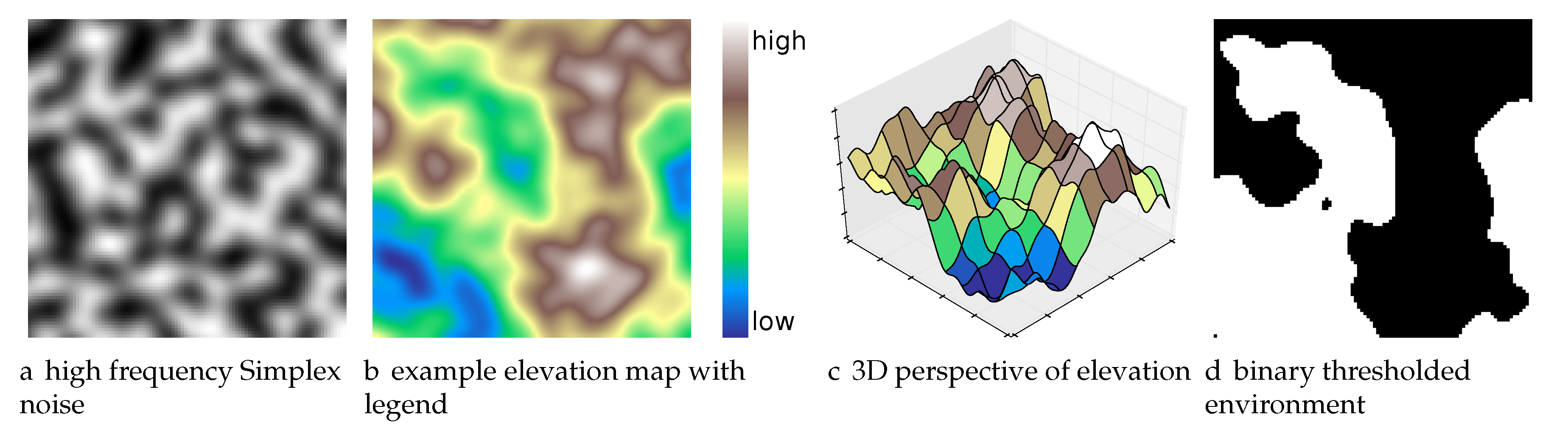

6.1. Procedurally Generated Natural Environments

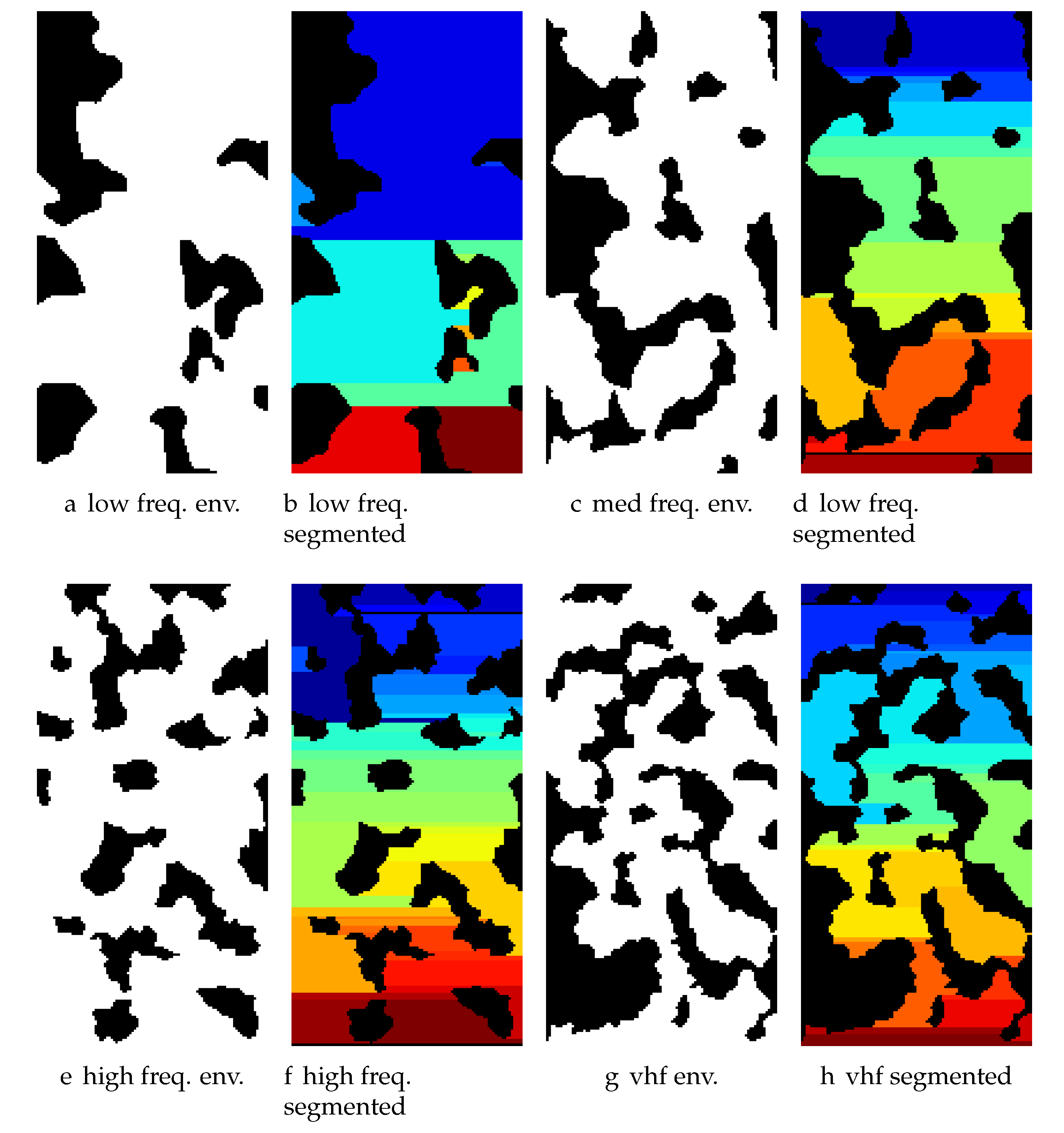

In order to procedurally generate natural environments, we use multiple frequency bands of a gradient noise function (for a single band:

Figure 5a) to generate elevation maps (

Figure 5b is a colored elevation map, while

Figure 5c is a 3D perspective of the colored elevation map in

Figure 5b), and then threshold that map to create an obstacle grid (

Figure 5d). In contrast to Perlin noise, Simplex noise (

Figure 5a) has minimal directional artifacts making it most suitable for natural terrain [

63,

64] (we use the OpenSimplex algorithm developed by Kurt Spencer [

65] and ported to Python by A. Svensson [

66]).

The frequency content and hence the complexity of the environment can be controlled by summing multiple frequency components (e.g.,

Figure 5a). OpenSimplex defines the 2D gradient noise function

where

but we restrict to integer grid coordinates given a seed value for the random number generator.

defines the elevation map.

is the number of frequency components in the terrain generator, and

is the frequency of the

ith band and

is the weight of the

ith band.

For our experiments, we set

,

and

. Define 5 different environment frequency contents: very low frequency (freq.) (vlf), low freq. (low), medium freq. (med), high freq. (high) and very high freq. (vhf). For (vlf,low,med,high,vhf), the frequencies

correspond to

and the frequencies

correspond to

. For each environment complexity, our database of benchmark environments consisted of 20 Simplex environments and 4 random starting locations per environment. Algorithm 4 outlines the approach used to generate Simplex environments. This is contrasted to defining random obstacles over, e.g., randomly generated quadtree maps [

67].

| Algorithm 4 Generate_Environments(T) |

- Require:

Noise function T - Ensure:

set of environments - 1:

- 2:

for all RNG seed i from 1 to N do - 3:

for all coordinates in the environment do - 4:

- 5:

end for - 6:

Threshold such that 66% of the workspace is collision free. - 7:

Select the largest connected component and set it as free space. Make all other regions obstacles. - 8:

if amount of free space is within tolerance then - 9:

Remove obstacles below the minimum size threshold in pixels in . - 10:

add to Q - 11:

end if - 12:

end for - 13:

return Q

|

6.2. Boustrophedon Decomposition

Given an environment, the free space must be decomposed into individual components that can be searched or “plowed” using the Boustrophedon (i.e., “the way of the ox”) decomposition algorithm [

48]. We use a discrete implementation with the following modifications:

We ignore small obstacles under a given pixel count. We argue that small obstacles smaller than the sensing radius do not adversely affect the coverage plan yet including a small convex obstacle introduces 3 additional regions to the decomposition.

We impose a greedy merge rule to combine adjacent regions if their boundaries overlap sufficiently due to critical points far away causing otherwise continuous regions to be broken into smaller regions.

We also remove regions that are too small to sensibly cover and repair region connections afterwards.

Since we will be generating trajectories that cover the region, the Boustrophedon algorithm identifies start and end points for each plow line for the trajectory planner.

For the first

computed environments, for the frequency content environments (low,med,high,vhf), the average/standard deviation of number of regions was

. Simplex environments are more complex than previous environments [

13] due to increased number of loops, and the fact that region traversals gather a significant amount of reward and create significant overlaps between actions. Observe in

Figure 6 that the regions vary widely in size given the complexity of the environment. Having fewer nodes in the environment is advantageous in speeding up the search. Larger contiguous regions can be searched more efficiently by acceleration limited agents (

Section 5.2). One disadvantage to having such large regions is that the information content of the region may not be uniformly distributed, and the coverage planner may not be able to account for this.

6.3. Camera and Sensor Model

For our experiments, we will restrict the sensor model to a binary Bayes static occupancy grid model [

50] where

is fully characterized by probability of detection

and false positive rate

. We let

. Unless otherwise mentioned the target evidence grid

is a 200 × 100 grid.

When computing the recursive Bayes filter (

1) for the binary Bayes static occupancy grid model, define

with

defined using prior knowledge (we typically assume least informative prior

) for new sensor observation

, resulting in (

13):

This results in parameter update equation (Equation (

14)):

In order to compute the multi-step look-up table

for the binary Bayes static occupancy grid model, we can take a more direct approach using a Binomial random variable to represent the next

q observations instead of using the chain rule of mutual information. Let

be a Binomial RV where:

with

known from the Bayes filter,

using law of total probability. Mutual information for discrete random variables

X,

Y with

being the joint probability mass function of

and

being the marginal probability mass functions of

. Manipulation yields (

15):

Assuming the initial distribution

,

and

, we compute the following table

used in the experiments as shown in

Table 1.

Observe that when nz0 =nz1, the mutual information for is a constant 0.390. This is a limitation of the sensor model itself and is unlikely to occur for large when the static binary Bayes sensor model accurately models the environment and . Since X is Bernoulli there is at most 1 bit of information. The table is also symmetric when swapping nz0 and nz1 because . Note that since mutual information is submodular, .

For this work, we assume that the quadrotor has a downward facing camera with a circular field of view and can make observations in multiple cells (

Figure 7). We suppose that there exists image processing software that will identify the positive positions of detected targets in the image frame at a near continuous rate (overlap of FOVs of adjacent images processed is significant), while empty positions of the frame are considered negative detections. We assume that the probability of detection

and false positive rate

are known. These locations are then mapped from the image frame to the evidence grid. To avoid multiple correlated sensor measurements we only record a single observation for a given cell during a particular action (transition between regions or search a region). This implies that targets in cells within a given distance from the quadrotor can be detected.

For realistic flight scenarios factoring actual hardware capabilities, motion blur on a global shutter camera occurs when camera/environment motion causes the displacements of image features during the image exposure to be greater than one pixel. Both rotational and translational velocities of the quadrotor contribute to motion blur, placing constraints on both the altitude and forward speed of the vehicle to prevent motion blur. To compare, we will consider the IDS UI-3251LE camera with optics that have a 90deg FOV (7 mm focal length lens), 1600(H) × 1200(V) pixels, flying at 5–20 m altitude, and a 1 ms exposure time (suitable for brightly lit indoor environments) and outdoor environments.

Define

to be the maximum speed of the ground in the image plane where

assuming nominal altitude above the ground

, and

is the maximum attitude angle change expected of the camera during maneuver execution or disturbance rejection. We also note that

where

is the lens focal length and

is the size of the image sensor along the direction of motion [

68].

Define the displacement

of the ground plane due to agent motion within the exposure time

in pixels (Equation (

16)):

with

as the number of pixels along the direction of motion and

is the field of view in meters along the direction of motion.

indicates motion blur. We note that an increase in altitude

reduces motion blur by increasing pixel size on the ground but rotational disturbances will dominate for high altitudes. A gimballed camera can reduce rotational disturbances at increased payload costs. This results in the permissible flight envelope by

. For example, setting

results in a

causing motion blur while

results in

not causing motion blur.

We define cell pitch m (not to be confused with camera pixel pitch) mapping cell indices to Euclidean coordinates in meters. We also assume that the camera has a circular 90deg FOV such that the sensing radius is 8.8 m. The trajectory footprint of every action in the motion model is created by sweeping the sensor footprint along the trajectory planned by the Boustrophedon coverage planner. Therefore in practice the shape of the field of view does not matter as the sensor acts as a sweep sensor with the given sweep radius.

7. Experimental Results

For each algorithm we normalize its performance with respect to the iterative greedy heuristic which always overestimates the reward. In other words, one computes the ratio of information each algorithm gathers in an environment by dividing the amount of mutual information (in bits) the algorithm gathers by the heuristic reward (in bits). Since the heuristic is admissible this ratio is ≤100%. This also normalizes for the variations in how much total information exists in a given environment. We ran 3 experiments; the first two were to understand various aspects about -admissible B&B.

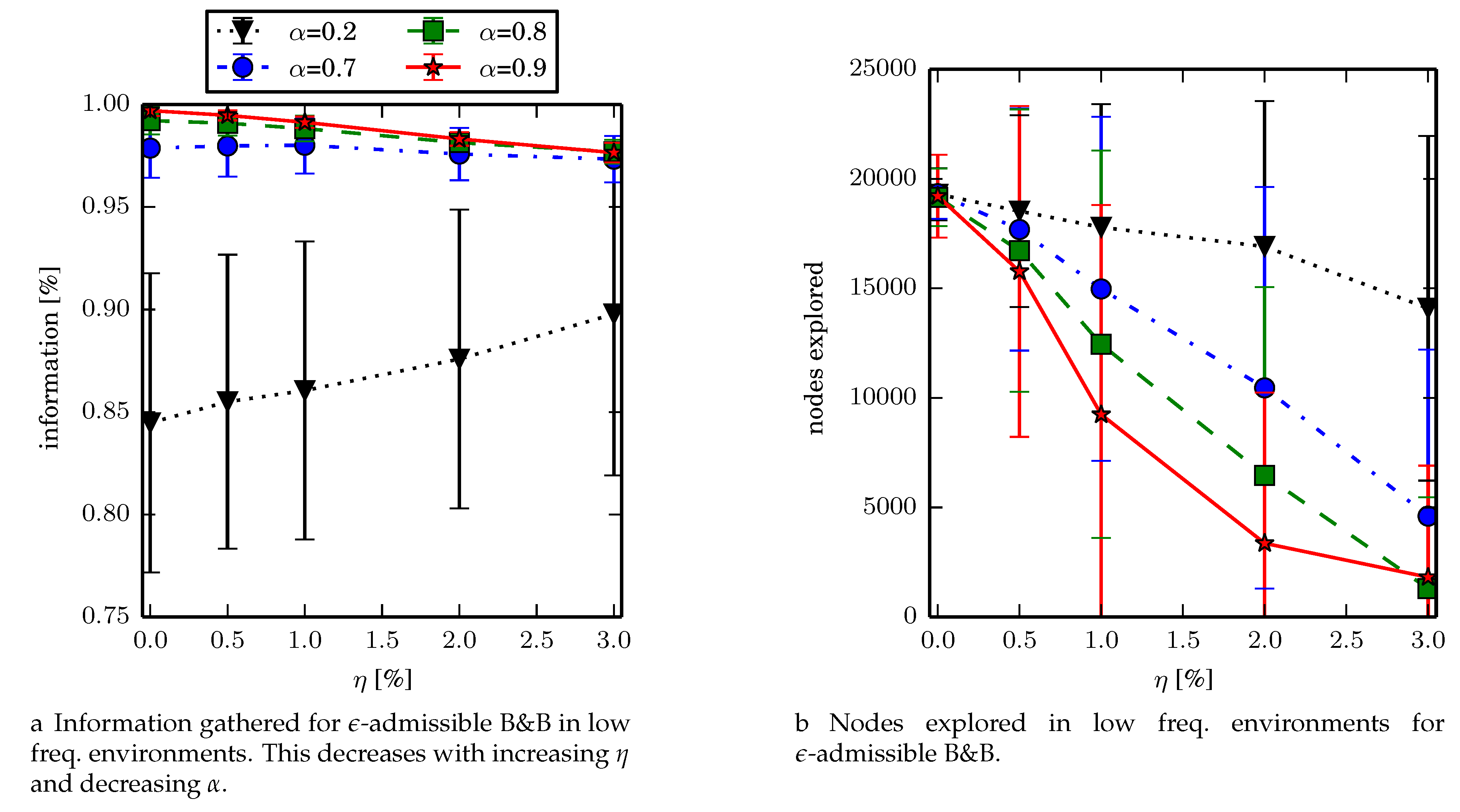

Experiment 1: vary , and in low frequency environments for -admissible B&B to identify algorithm performance for different heuristic parameters.

Experiment 2: setting , vary size of evidence grid in low frequency environments and observe how -admissible B&B scales with the evidence grid size.

Experiment 3: benchmark the four algorithms in Simplex environments, setting for -admissible B&B.

For uniform environments, all cells are initialized with the most uninformative prior of . By inspecting the mutual information look up table, we observe that cells that have two or more prior sensor measurements have low enough information content that a nonuniform covering will gather more information than a uniform covering. When generating nonuniform environments, we want the geometry of low entropy regions to not correspond with the geometry of the obstacles. Therefore the nonuniform envionments generate a distribution of low entropy regions using Simplex noise thresholded at 50% (i.e., 50% of the environment is low entropy, while the remaining environment is set to high entropy).

For the environments (low,med,high,vhf), define the low entropy regions with the following frequency parameters (vlf,vlf,low,med).

7.1. Experiment 1

We tested the branch and bound algorithm in the low environment, terminating if the priority queue is empty or after 6000 iterations have occurred. We set

, and

and observe total information gathered, and number of nodes explored. Fewer nodes explored implies more nodes in the search space are pruned. As both

and

increase, the number of nodes explored decreases due to better prioritization or pruning. Results are summarized in

Figure 8. In terms of the reduction in nodes expanded, for

on average only expands 48% of the nodes that are expanded when

suggesting that

-admissible branch and bound can speed up the search by about a factor of two while finding solutions that are on average within

of the optimal solution.

In determining the parameters for future experiments, was chosen for the following reasons . Setting ensures that -admissible B&B finds solutions within 0.2% of the optimal solution. While expands the fewest nodes in the lowf environments, in larger environments, setting instead of decreases the time to finding the first solution which significantly increases the success rate of the algorithm and reduces memory usage (Experiment 3).

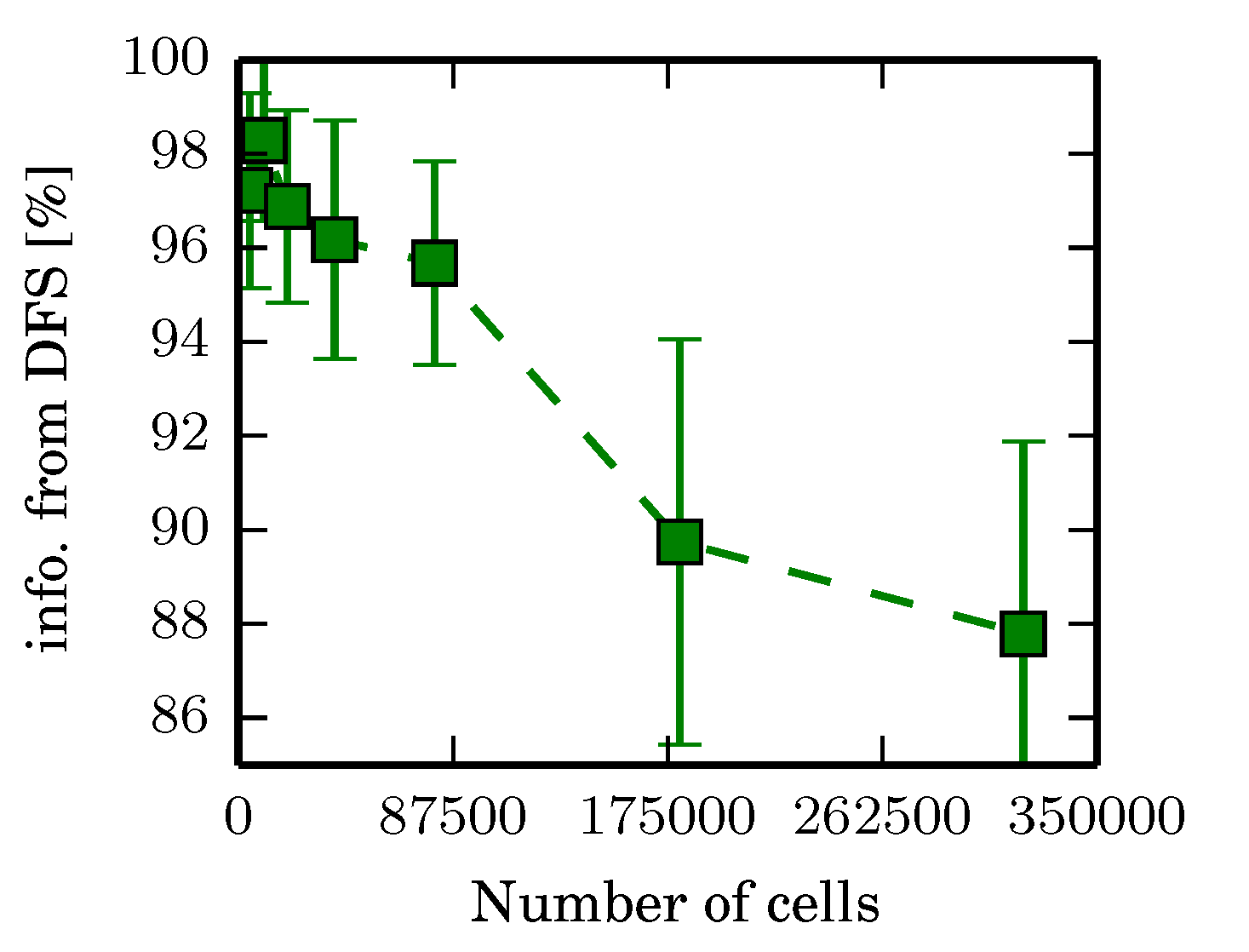

7.2. Experiment 2

For Experiment 2 we varied the size of the evidence grid to consist of {4608, 10,368, 20,000, 39,200, 80,000, 180,000, 320,000 } where each environment has a 2:1 aspect ratio. In addition, the resolution of the cells changes such that the area of the environment in meters is the same in all trials. Also, the sensor footprint is the same in meters in all environments. For the Experiment 2 Simplex environments, the following parameters are: and .

For each environment size we generated 10 environments with 4 starting locations for a total of 40 trials. Environments are generated using Simplex noise for environments with 320,000 cells (or 800 × 400 cells) and are downsampled to smaller environment sizes.

After the environment is re-scaled, Boustrophedon decomposition generates the discrete graph before running the proposed branch and bound algorithm. The algorithm also scales parameters for Boustrophedon decomposition such as the minimum area of an obstacle before it is removed. The environments do not scale perfectly, however, and the number of regions per environment can still vary (

Figure 9a). Note that the size of the environment in cell count varies by a factor of 70, while the number of regions varies by a factor of 1.3, implying that the increase is insensitive to increase in cell count. Also note that

, defined by the total time to cover each region twice, is insensitive to the number of cells and its standard deviation over all cell sizes is about 750 s with a mean of 9980, varying by about 7.5% (

Figure 9b). Branch and bound runs for 6000 iterations or until

is empty. The time to the final solution is approximately linear (

Figure 9e) when the number of nodes expanded remains constant (

Figure 9c).

One thing of interest is that the quality of the solution degrades as the size of the environment increases (

Figure 9f,g). This is not a limitation of the algorithm itself;

Figure 10 shows that the quality of solutions found using DFS on the same environments degrades similarly with evidence grid size. One possibility that explains this is that the environment is not scaling perfectly. When the sensor footprint is discretized in a coarser grid (when

is smaller resulting in larger grid resolutions), the footprint tends to be more square. This can introduce artifacts in the total area swept by a moving sensor by a factor at most

when moving diagonally, dramatically increasing how much information per unit of time is gathered when traversing between regions. Traversals in environments with fewer cells would therefore get a slight performance boost.

Note that in certain environments, many of the resulting statistics are heavily skewed and non-Gaussian so the error bars (

Figure 9d) which represent one standard deviation dip below 0. None of the trials violate constraints such as

.

7.3. Experiment 3

For Experiment 3 we benchmarked the proposed branch and bound algorithm with against DFS and the greedy algorithm. The algorithm terminates after either the priority queue is empty or iterations have completed. All algorithms are benchmarked with both uniform and nonuniform prior distributions.

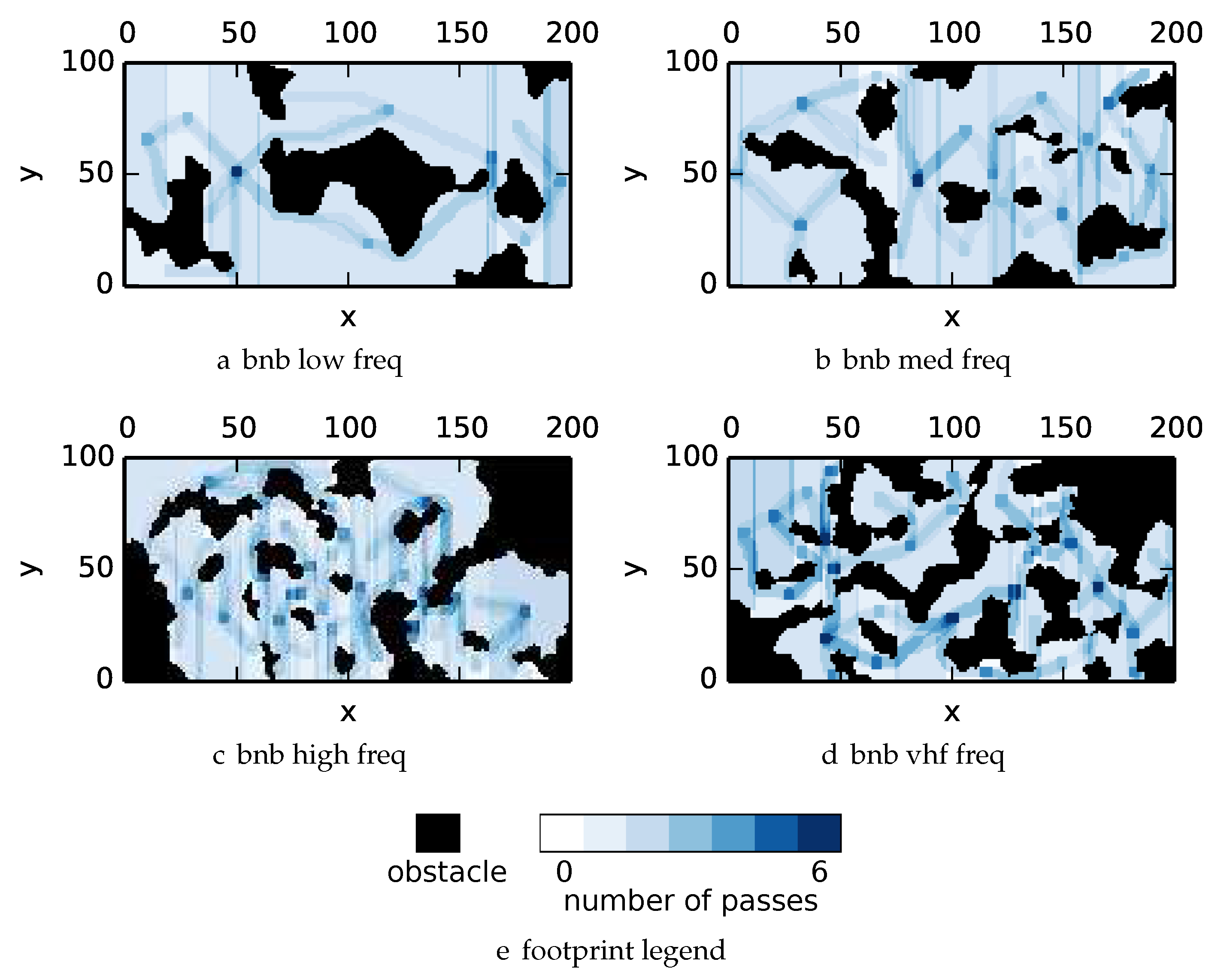

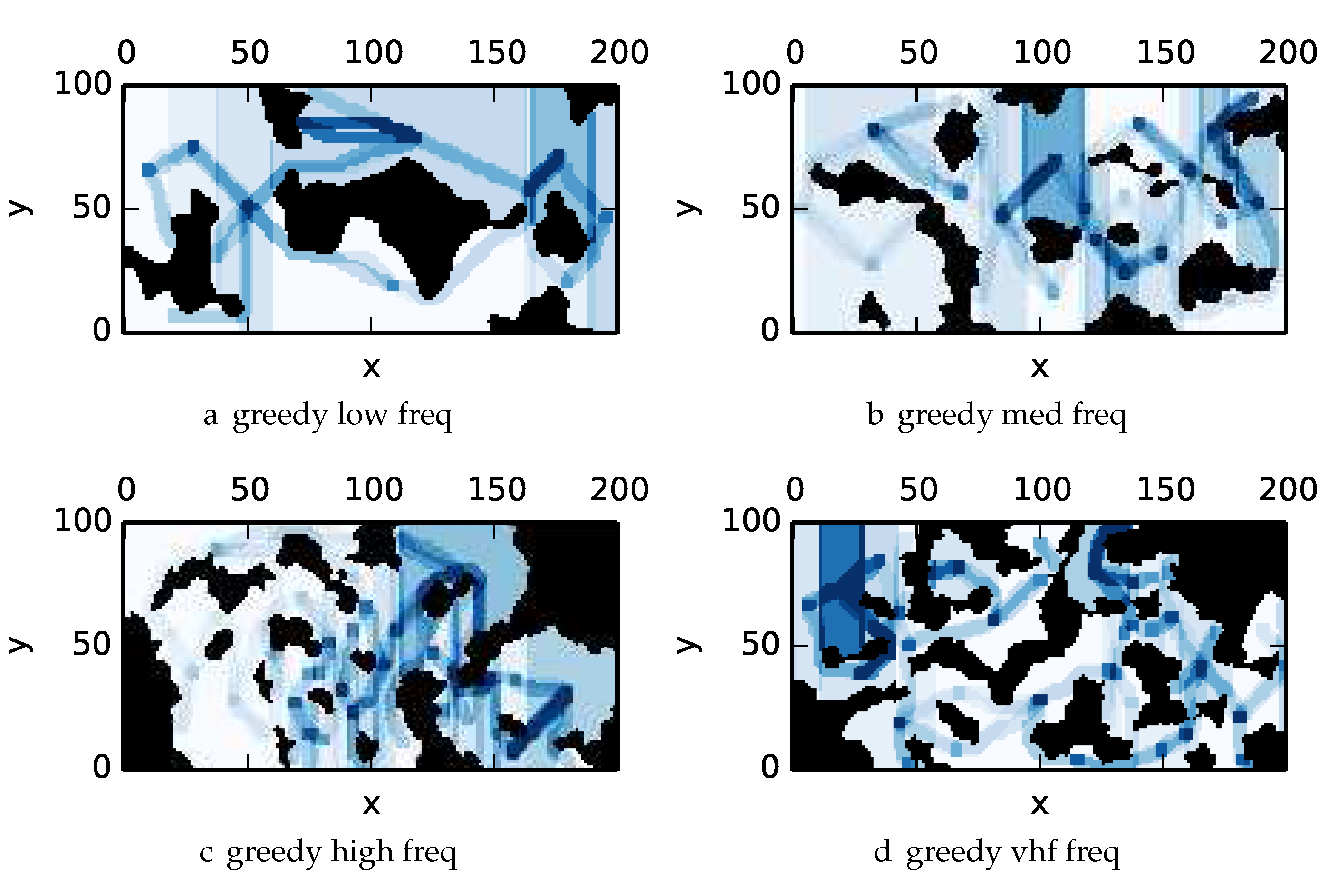

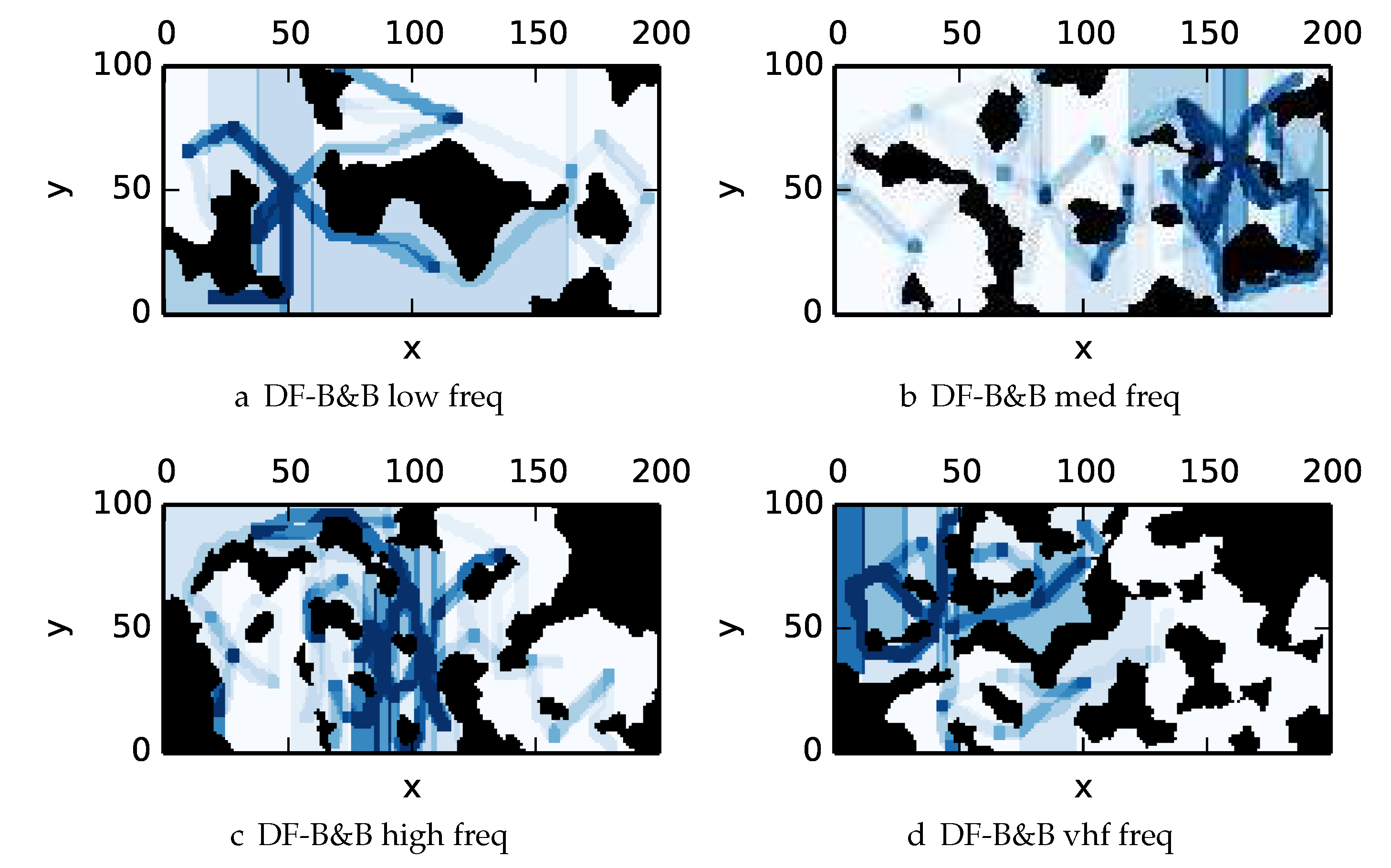

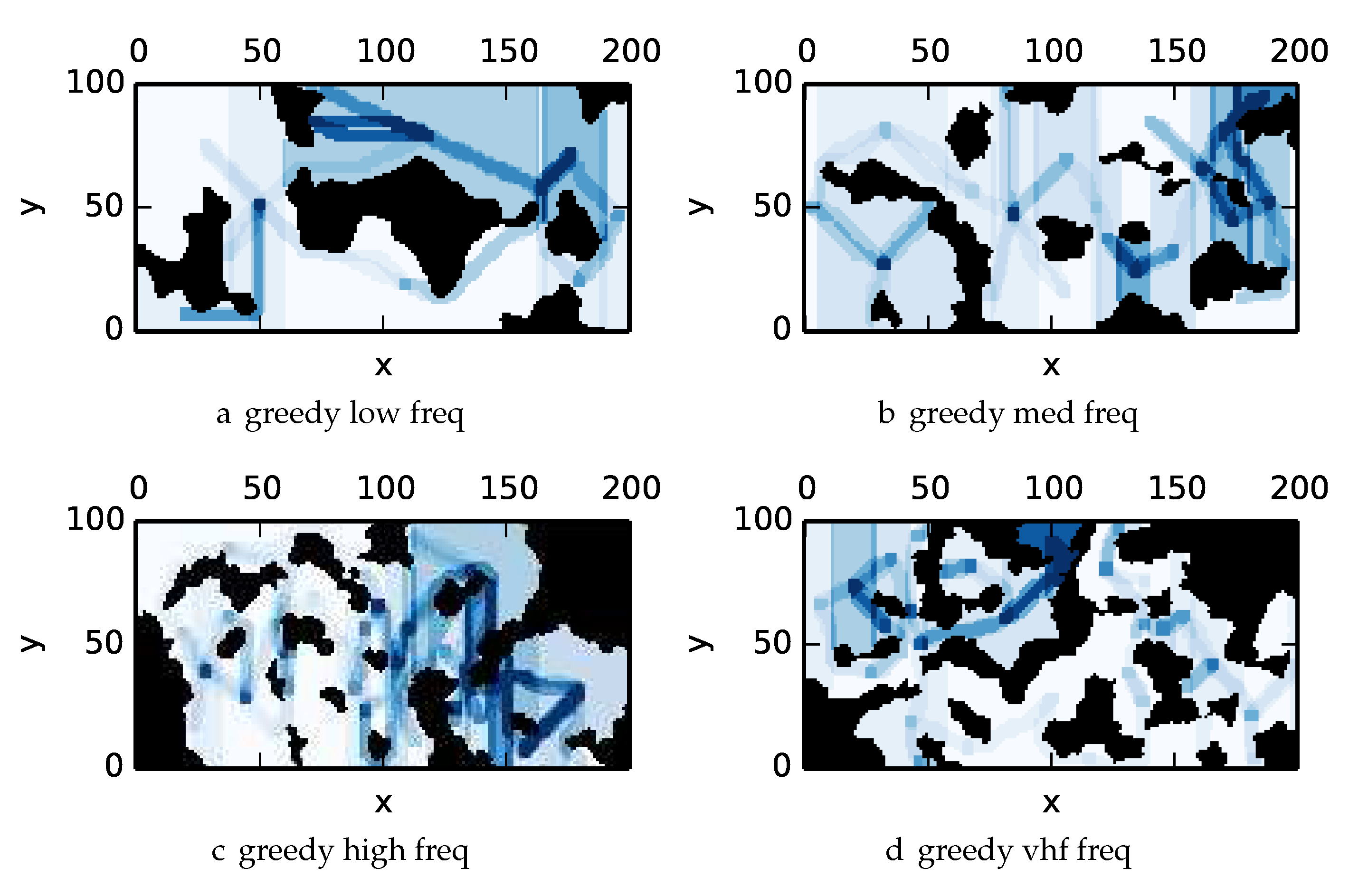

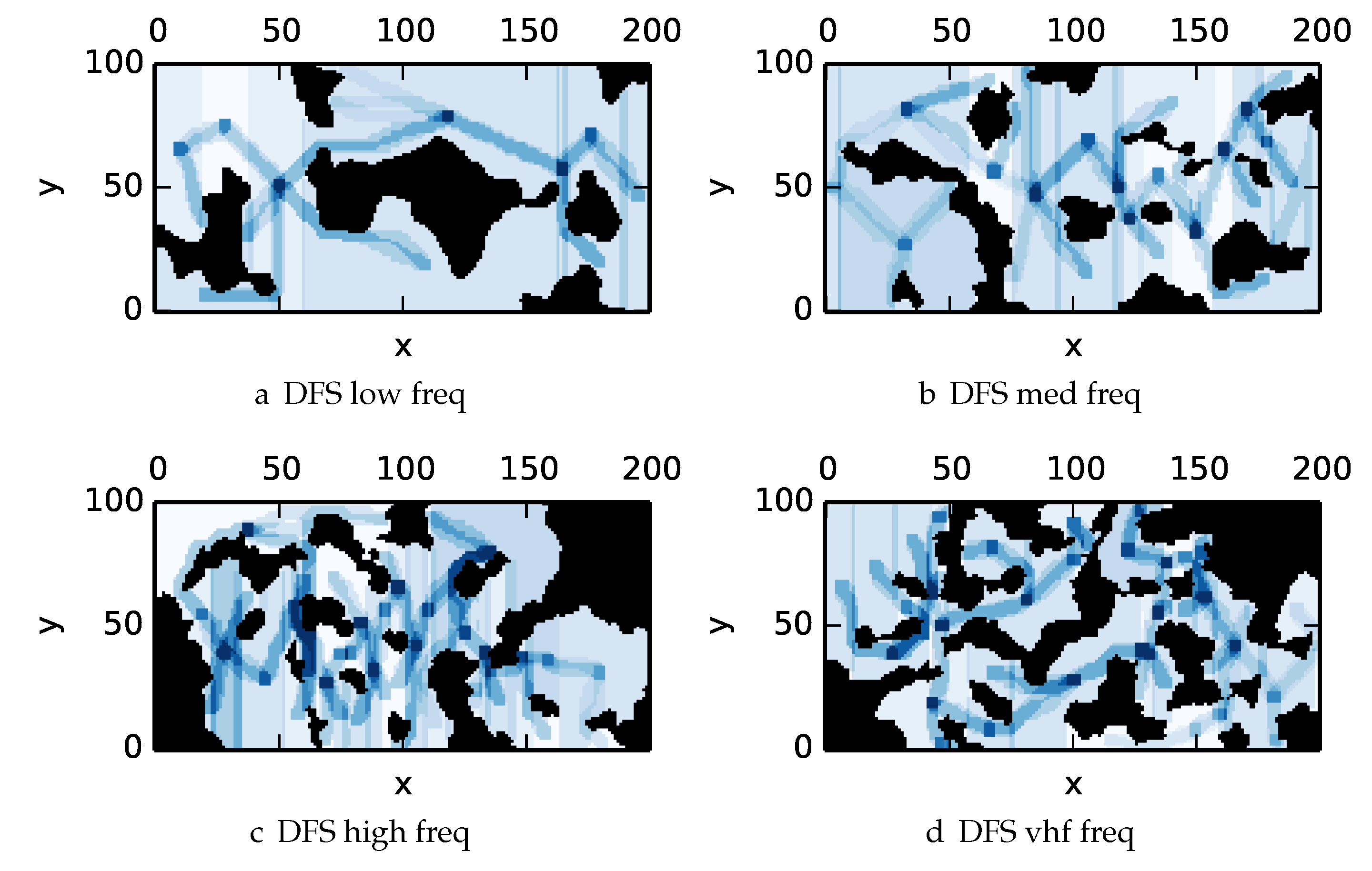

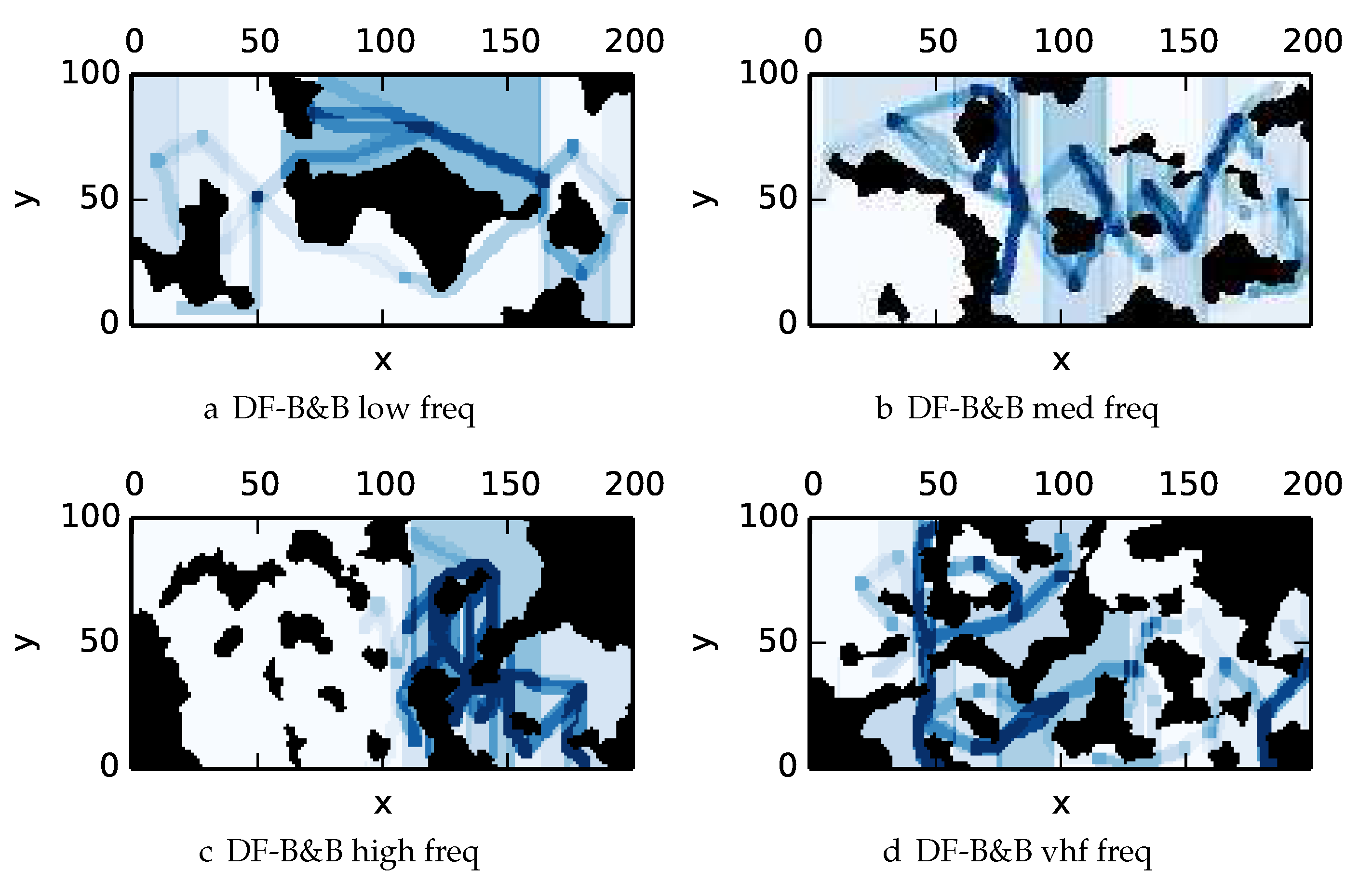

To get an understanding of how the various algorithms perform in different environments, we illustrate trajectory footprints representing median algorithm performance from

-admissible B&B (

Figure 11), greedy (

Figure 12), DFS (

Figure 13) and DF-B&B (

Figure 14) in uniform environments.

Figure 15 shows the entropy in example environments with information non-uniformly distributed in the environment.

Figure 16,

Figure 17,

Figure 18 and

Figure 19 show the median performance of their respective algorithms in nonuniform environments.

The trajectory footprints in

Figure 11,

Figure 12,

Figure 13 and

Figure 14 and

Figure 16,

Figure 17,

Figure 18 and

Figure 19 are an array representation of the best trajectory discovered by the mentioned algorithm,

. Areas not visited are white, obstacles are black, and blue regions indicate how many times the areas have been visited (see the legend in

Figure 11e). Multipass coverage plans that are more uniform should be more uniform in color. Notice that all cells in a given region are not the same color; dots and lines shown within the regions show the information gathered between region traversals as these are visited additional times along commonly traveled paths between regions. Other lines are due to sensor footprints overlapping between adjacent regions.

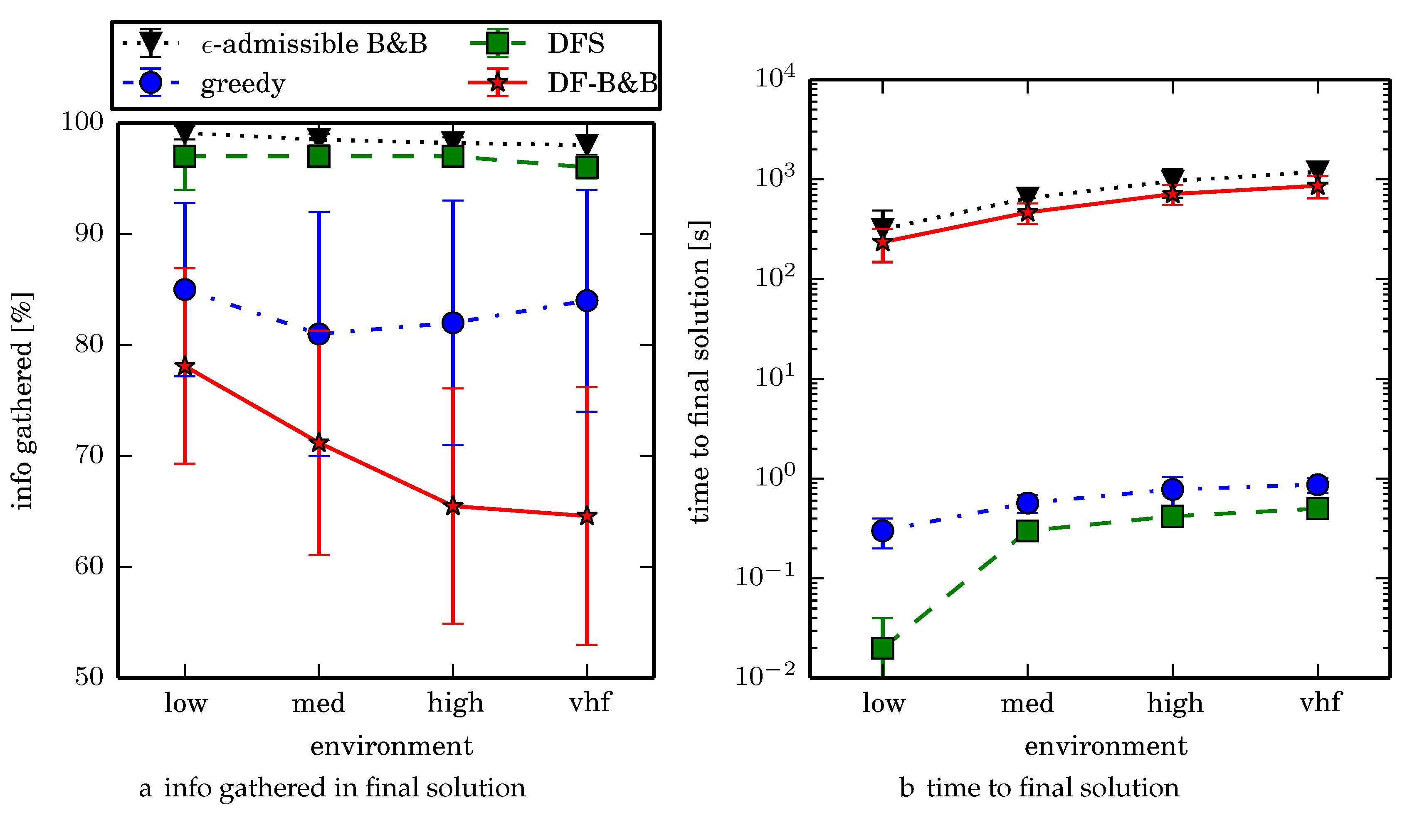

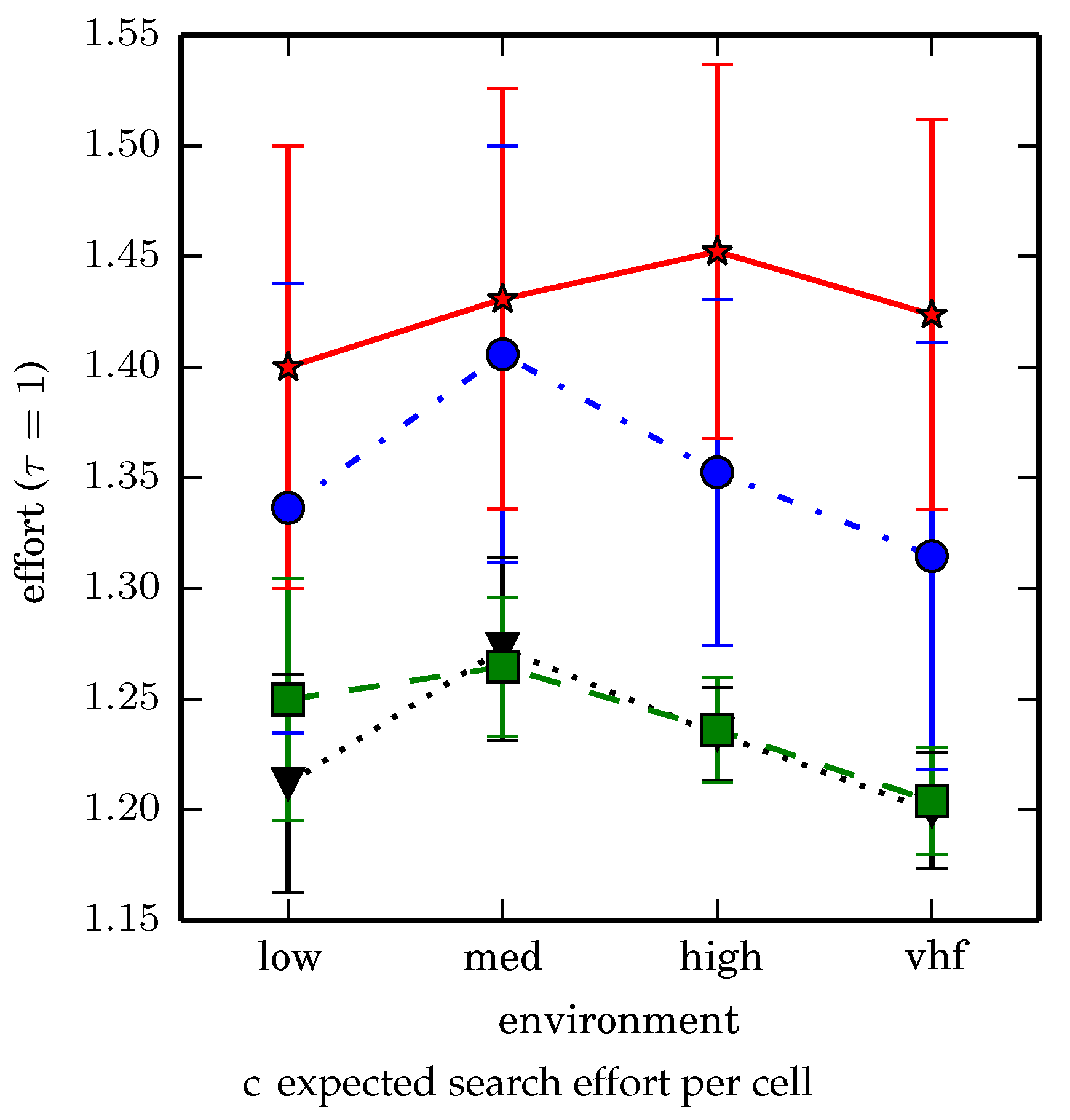

One can observe that branch and bound covers the environment most uniformly (resulting in the highest quality solution) while the greedy solver can get stuck or miss regions. DFS’s performance falls in between

-admissible B&B and the greedy heuristic solver while DF-B&B has the worst performance. Results are summarized on the uniform environments in

Table 2 and on the nonuniform environments in

Table 3.

denotes the number of solution improvements found during the search. The described effort allocation model computes the expected time to search a cell in number of time constants (e.g., with

), denoted as “effort” on

Table 2 for the uniform environment using detection threshold

.

Figure 20 and

Figure 21 summarize key results in

Table 2 and

Table 3 respectively.

8. Discussion

Experiment 1 identifies the parameters that are to be used for -admissible B&B for the other experiments. The results of Experiment 2 demonstrate that -admissible B&B scales reasonably well with increasing environment size due to the hierarchical nature of the algorithm. Experimental results demonstrate that the -admissible heuristic is able to significantly speed up branch and bound with bounded loss in optimality.

We now direct our attention to assessing the four algorithms for their performances in solution quality and time to compute the solution in Experiment 3.

-admissible B&B finds the highest quality solutions but takes the longest time to compute because it reasons over many candidate solutions. One limitation to

-admissible B&B is that the heavy reliance on the iterative greedy heuristic and all the heuristic’s limitations (see

Section 5.1) can cause the planner to continually expand too many candidate solutions at a fixed depth preventing the planner from finding a solution within the allotted time and memory constraints. Since Python is rather memory inefficient our experiments were limited to around 6000 iterations; more memory efficient languages ought to handle more iterations. Memory efficient search techniques such as beam-stack search [

69] could alleviate such bottlenecks, but it is unknown how such techniques would interact with

-admissible heuristics.

The heuristic solver DFS finds the second highest quality solutions in the shortest amount of time offering an effective trade-off in solution quality vs. speed to compute. We note that the quality of the DFS solutions degrades in environments with many more (smaller) regions due to the fact that more time is spent traversing between regions vs. searching the individual regions.

Comparing the two algorithms that gather the most information on average, -admissible B&B gathers 2.3% more information on average than DFS search taking 1–2 orders of magnitude longer to compute. To get a better sense of the real world performance improvement, -admissible B&B searches uniform environment at a rate that is 2.8% faster than the DFS coverage planner (where the search rate is inversely proportional to time to search a given cell), while -admissible B&B searches nonuniform environments at a rate that is 0.8% faster than DFS. We think that this decreased performance improvement in the nonuniform environments is a limitation of the Boustrophedon decomposition algorithm and/or the region coverage planner. Generating region coverage plans that factor in the information content by non-uniformly covering regions, or a polygonal decomposition that factors in information content (consider an extension of the weighted Voronoi Diagram that accounts for obstacles) would alleviate this and allow -admissible B&B to better reason about nonuniform distributions of information. When dealing with computational time budget constraints (fixed time budget for computing resources), we observe that -admissible B&B is an anytime algorithm. In the vhf uniform environments for example, around 90% of the time the first solution is discovered within 4.5 min (in contrast with about 90% of the trials terminating around 24 min).

One must consider the trade-offs between the added cost of computing better paths to the increased rate of rescuing survivors. We observe that the dramatic reduction in costs for supercomputing capabilities in recent years combined with the high value of a statistical life (around

$7 million in 2005 USD [

70]) imply that computer time is extremely cheap relative to human lives. Spending an additional

$70,000 renting a sizeable chunk of supercomputing resources is considered cost effective if it increases the expected number of statistical lives rescued by at least 0.01 lives. Increasing the amount of area one is able to thoroughly search within 48 h by 2.8% is instrumental to increasing survival rates. More accurate figures are required for detailed analysis.

The greedy heuristic algorithm is able to find higher quality solutions than DF-B&B but not DFS or

-admissible B&B. The greedy heuristic algorithm is also slower than DFS. The poor solution quality of DF-B&B despite the long time to compute the solution highlights the importance of generating effective heuristics to guide branch and bound to find high quality solutions. This is a finding that is not always recognized in the literature (usage of DF-B&B in place of heuristic guided B&B has occurred [

11]). Note that DF-B&B is actually guaranteed to find optimal solutions when run to completion but is unable to find high quality solutions in a reasonable amount of time.

A practical way to verify the quality of heuristic solvers is through use of branch and bound which is guaranteed to find solutions near the optimal solution, suggesting use of branch and bound as a means of verifying suboptimal heuristic solvers.

9. Conclusions

In this paper we identify the task of generating multipass coverage plans for target search, as agents searching for targets must assess the trade-offs of searching a given region multiple times. This relatively unexplored area of research requires generating plans that visit most if not all of the workspace multiple times, where even seemingly small problems require long action sequences to solve.

Given the difficulty of the problem, we created the algorithm

-admissible branch and bound (

-admissible B&B) [

13] which uses heuristics to improve the quality of the solution found in the given time. We benchmarked four different algorithms in Simplex environments assessing various performance metrics such as how long it takes to compute the solution and the quality of the solution (information gathered minimizing uncertainty in bits and expected time for a relief crew to search a cell).

The two most promising algorithms are

-admissible B&B and the Depth First Search (DFS) heuristic solver [

48].

-admissible B&B finds the highest quality solutions (gathers 2.2% more information than DFS and generates plans that search uniform environments 2.8% and nonuniform environments 0.8% faster than DFS) but takes the most time to compute. DFS on the other hand finds the second highest quality solutions but takes the shortest time to compute. The greedy algorithm and DF-B&B are both outperformed by DFS in terms of solution quality and time to solution. One of the greatest challenges to the practitioner not fully addressed by these findings is properly assessing the trade-offs in generating higher quality solutions at increased computational cost. Access to cheap supercomputing capabilities with the high value of a statistical life suggests that even small improvements in solution quality can be cost effective. However, for very large problem instances that we did not investigate in this paper (hundreds to thousands of regions, or tens of millions of cells), the findings of this study must be reinvestigated. Using branch and bound techniques may become intractable for very large problems, and heuristic techniques may offer the only feasible solution that can be computed in realistic time, with significant drops in solution quality.

For future work, we would like to improve the environment decomposition and region coverage planners in such a manner that can improve how information is gathered in a nonuniform environment. Extending techniques such as weighted Voronoi Diagrams offers a plausible means of doing so. We also plan to extend this work to a multiagent scenario by having multiple MAVs perform a coordinated search effort in a decentralized manner. During the plan execution, such agents will have to share their information in a robust fashion subject to communication network limitations.