GNSS/Electronic Compass/Road Segment Information Fusion for Vehicle-to-Vehicle Collision Avoidance Application

Abstract

1. Introduction

- (1) A new particle filter (PF)-based fusion model for real-time vehicle state estimation employing GNSS, electronic compass and road segment information.

- (2) A new autoregressive (AR)-based adaptive high-precision vehicle motion model for use with the PF algorithm.

- (3) Specification and execution of scenarios for simulation and field experiments to demonstrate the superiority of the PF fusion algorithm with the AR vehicle motion model over GNSS alone and over PF-based fusion with the traditional constant velocity (CV) and constant acceleration (CA) vehicle motion models. Performance is measured in terms of the accuracy of the vehicle state estimation and the accuracy of potential collision prediction.
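
The contrast between the CV, CA and AR motion models named in the contributions can be illustrated with a minimal one-dimensional sketch. It is not the authors' model: the first-order AR fit on finite-difference velocities, the least-squares coefficient estimate and all function names are assumptions chosen for brevity.

```python
def velocities(xs, dt):
    """Finite-difference velocities from equally spaced positions."""
    return [(b - a) / dt for a, b in zip(xs, xs[1:])]


def predict_cv(xs, dt):
    """Constant velocity: carry the latest velocity forward one step."""
    v = velocities(xs, dt)[-1]
    return xs[-1] + v * dt


def predict_ca(xs, dt):
    """Constant acceleration: carry the latest velocity and acceleration forward."""
    v = velocities(xs, dt)
    a = (v[-1] - v[-2]) / dt
    return xs[-1] + v[-1] * dt + 0.5 * a * dt ** 2


def fit_ar1(series):
    """Least-squares AR(1) coefficient phi for series[t] ~ phi * series[t-1] (assumed form)."""
    num = sum(cur * prev for prev, cur in zip(series, series[1:]))
    den = sum(prev * prev for prev in series[:-1])
    return num / den if den else 1.0


def predict_ar(xs, dt):
    """AR(1) on velocity: refit phi on the current window, then predict one step."""
    v = velocities(xs, dt)
    phi = fit_ar1(v)
    return xs[-1] + phi * v[-1] * dt


if __name__ == "__main__":
    braking = [0.0, 8.0, 12.0, 14.0, 15.0]  # hypothetical positions of a decelerating vehicle
    print(predict_cv(braking, 1.0), predict_ca(braking, 1.0), predict_ar(braking, 1.0))
```

Refitting the coefficient on every new window is what makes the AR predictor adaptive in this sketch; the CV and CA predictors use a fixed kinematic form regardless of recent behaviour.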

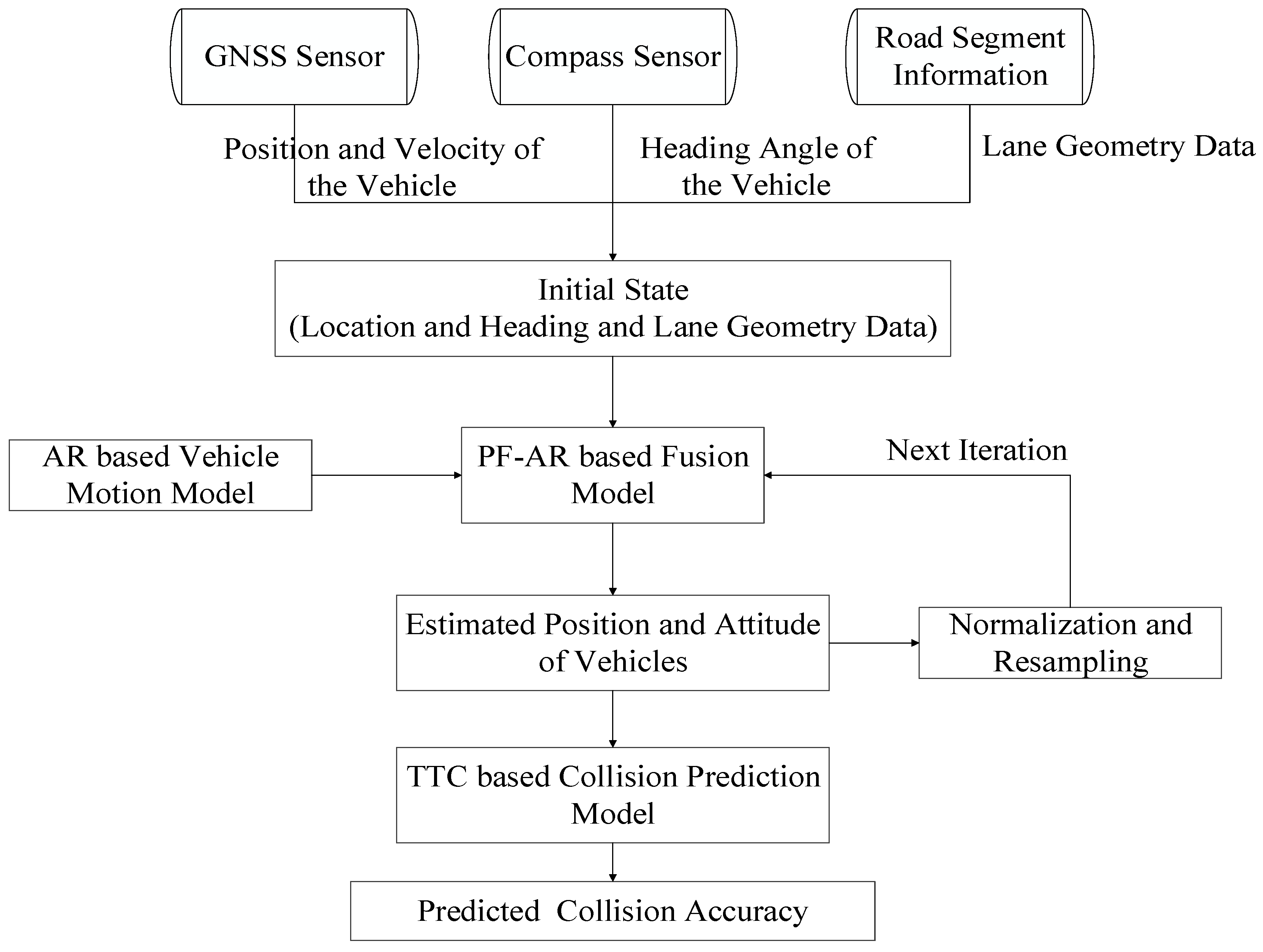

2. Fusion Algorithm-Based V2V Collision Avoidance System

2.1. Required Navigation Performance

2.2. Integrated Vehicle State Estimation Framework

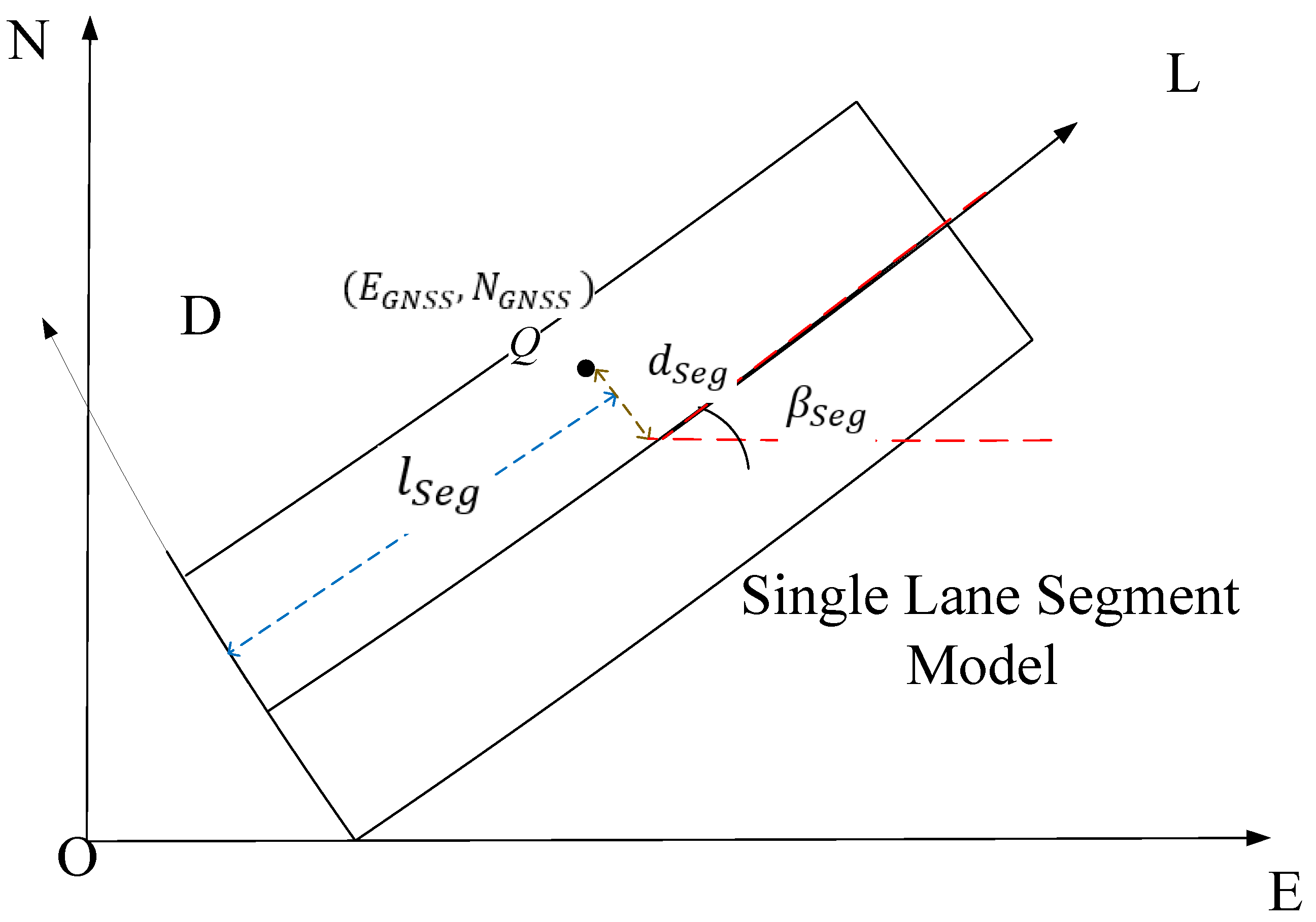

2.3. PF-AR Fusion-Based Vehicle Real-Time State Estimation Model

- the Easting and Northing coordinates (in meters) of the vehicle’s geometric centre in the local coordinate system;

- the heading velocity of the vehicle output from the GNSS sensor;

- the heading of the vehicle output from the compass sensor;

- the longitudinal displacement of the vehicle in lane segment coordinates;

- the lateral displacement of the vehicle in lane segment coordinates;

- the tangent angle between the tangent line of the lane centreline and the Easting axis.
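
The relationship between the local Easting/Northing coordinates and the lane segment coordinates listed above can be sketched as a plane rotation by the tangent angle. The sketch assumes a straight lane segment with a known origin on the lane centreline, an angle measured counter-clockwise from the Easting axis, and lateral displacement positive to the left of the direction of travel; the function and parameter names are hypothetical.

```python
import math


def en_to_lane(e, n, origin_e, origin_n, tangent_angle):
    """Convert local Easting/Northing (m) to (longitudinal, lateral) lane coordinates (m)."""
    de, dn = e - origin_e, n - origin_n
    longitudinal = de * math.cos(tangent_angle) + dn * math.sin(tangent_angle)
    lateral = -de * math.sin(tangent_angle) + dn * math.cos(tangent_angle)
    return longitudinal, lateral


def lane_to_en(longitudinal, lateral, origin_e, origin_n, tangent_angle):
    """Inverse of en_to_lane: lane coordinates back to local Easting/Northing (m)."""
    e = origin_e + longitudinal * math.cos(tangent_angle) - lateral * math.sin(tangent_angle)
    n = origin_n + longitudinal * math.sin(tangent_angle) + lateral * math.cos(tangent_angle)
    return e, n


if __name__ == "__main__":
    # Hypothetical segment pointing due north; vehicle 5 m along it and 1 m to the west.
    print(en_to_lane(-1.0, 5.0, 0.0, 0.0, math.pi / 2))
```

Expressing the position in lane coordinates makes a road segment constraint simple to state: the lateral displacement must stay within the lane half-width.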

2.4. Collision Avoidance

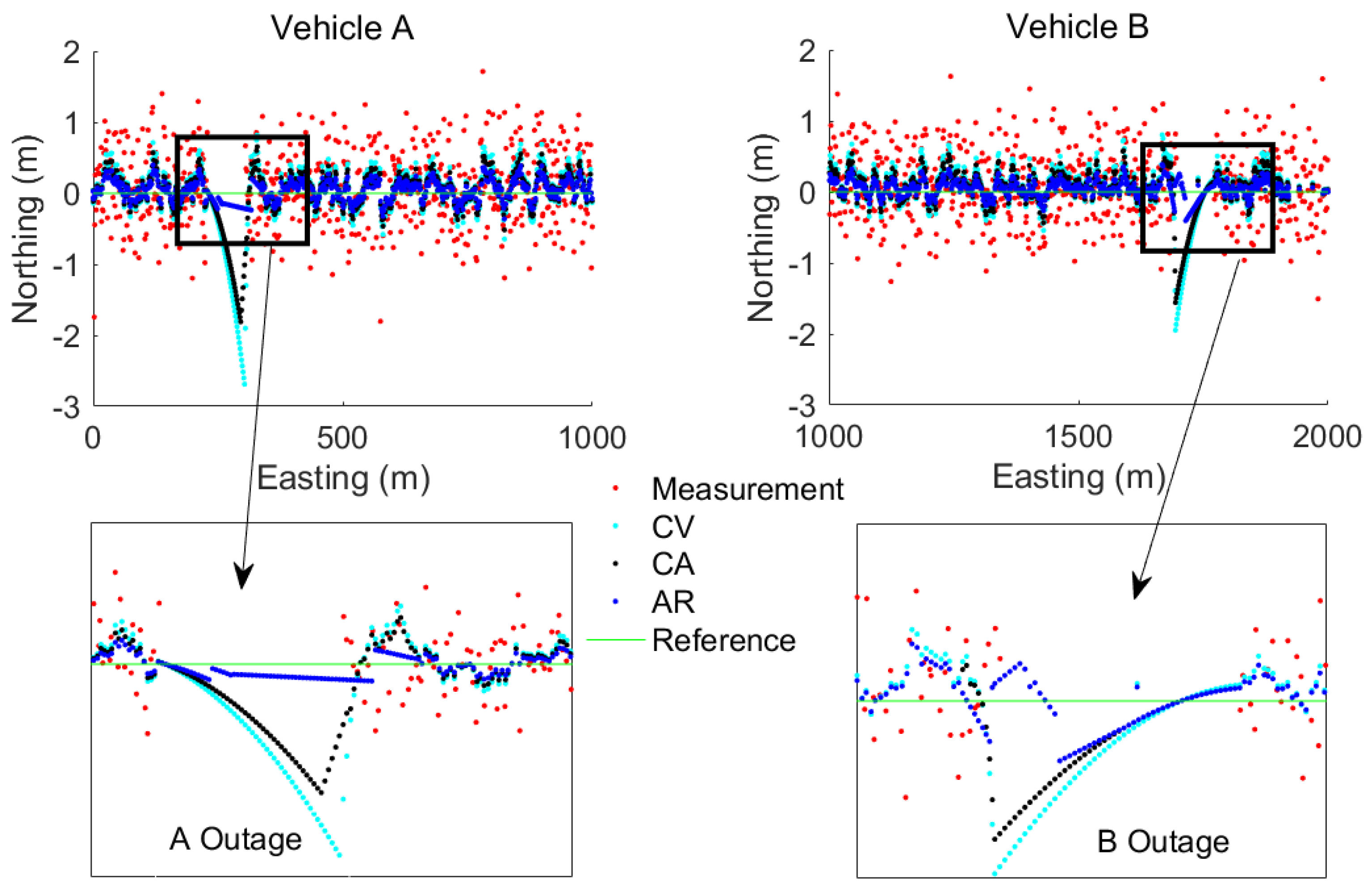

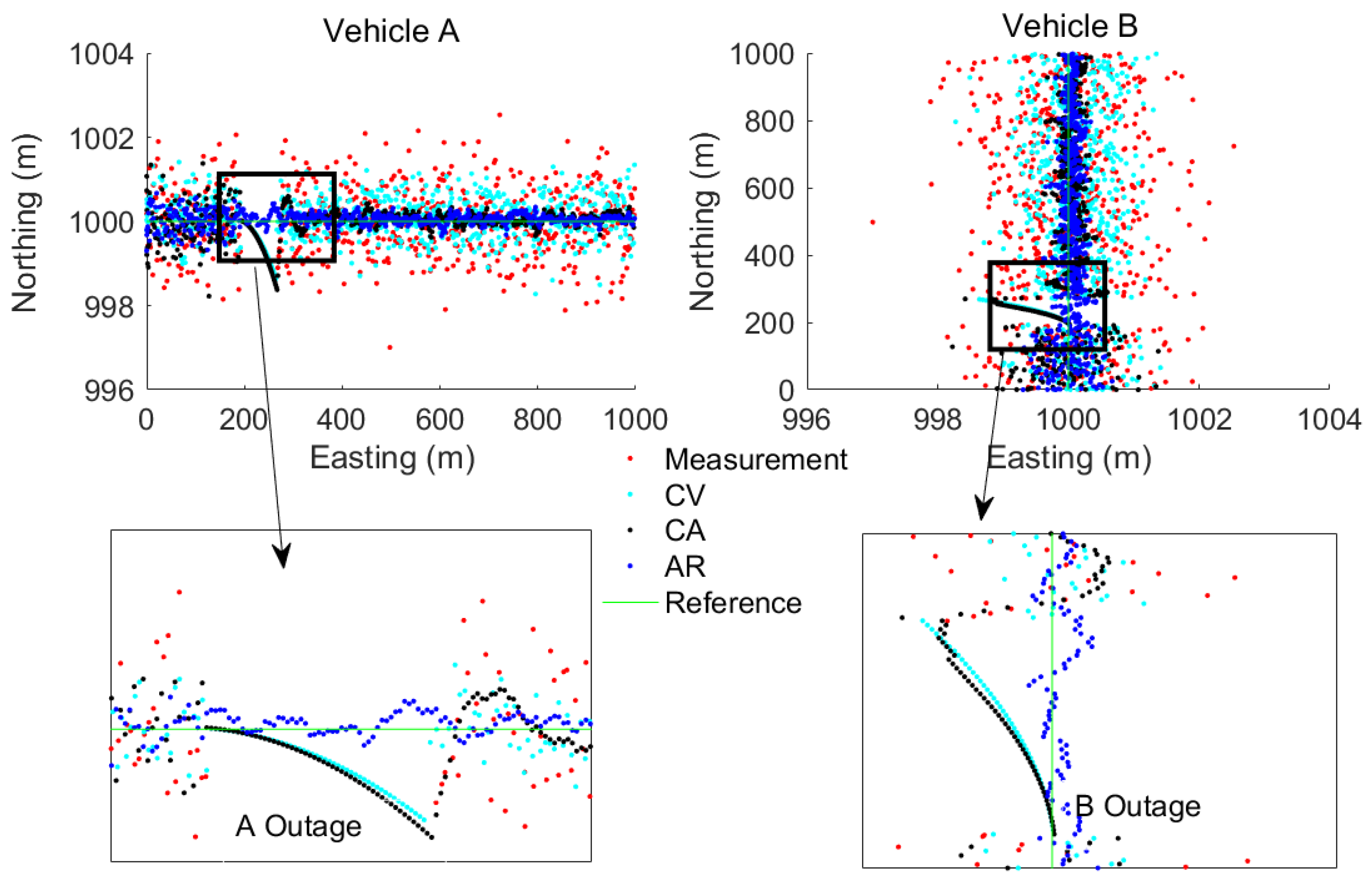

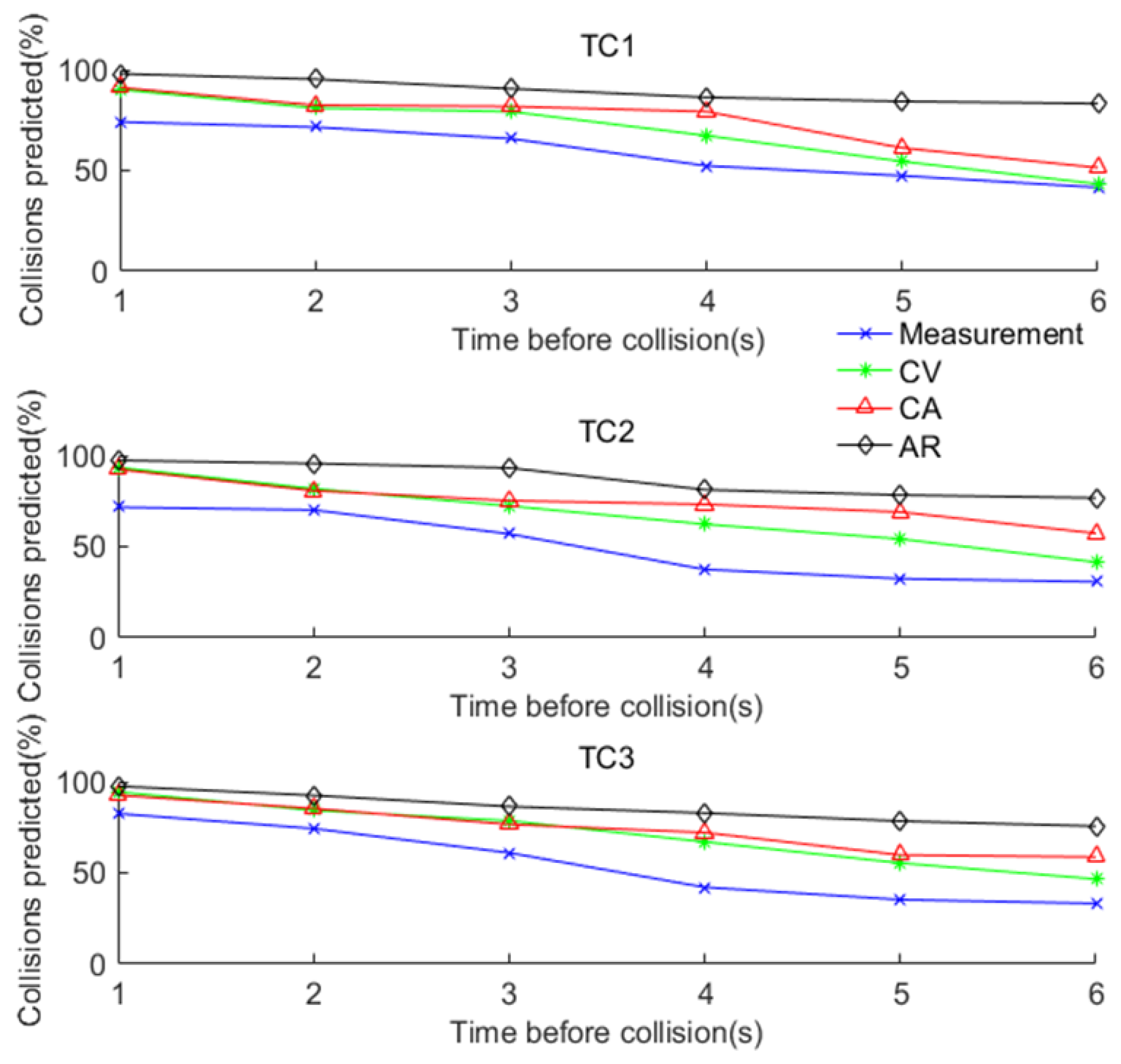

3. Simulation

4. Initial Field Test and Results Analysis

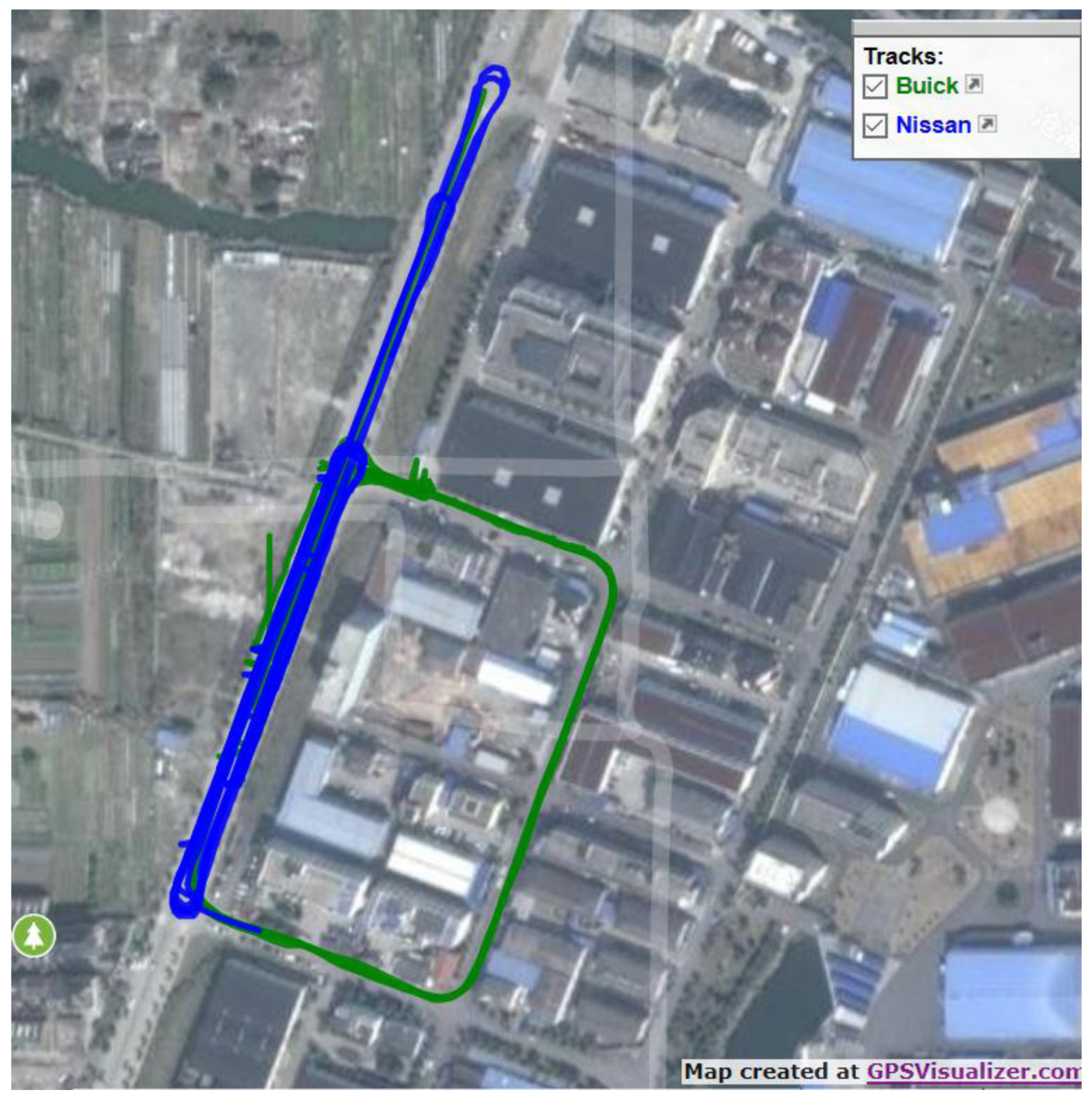

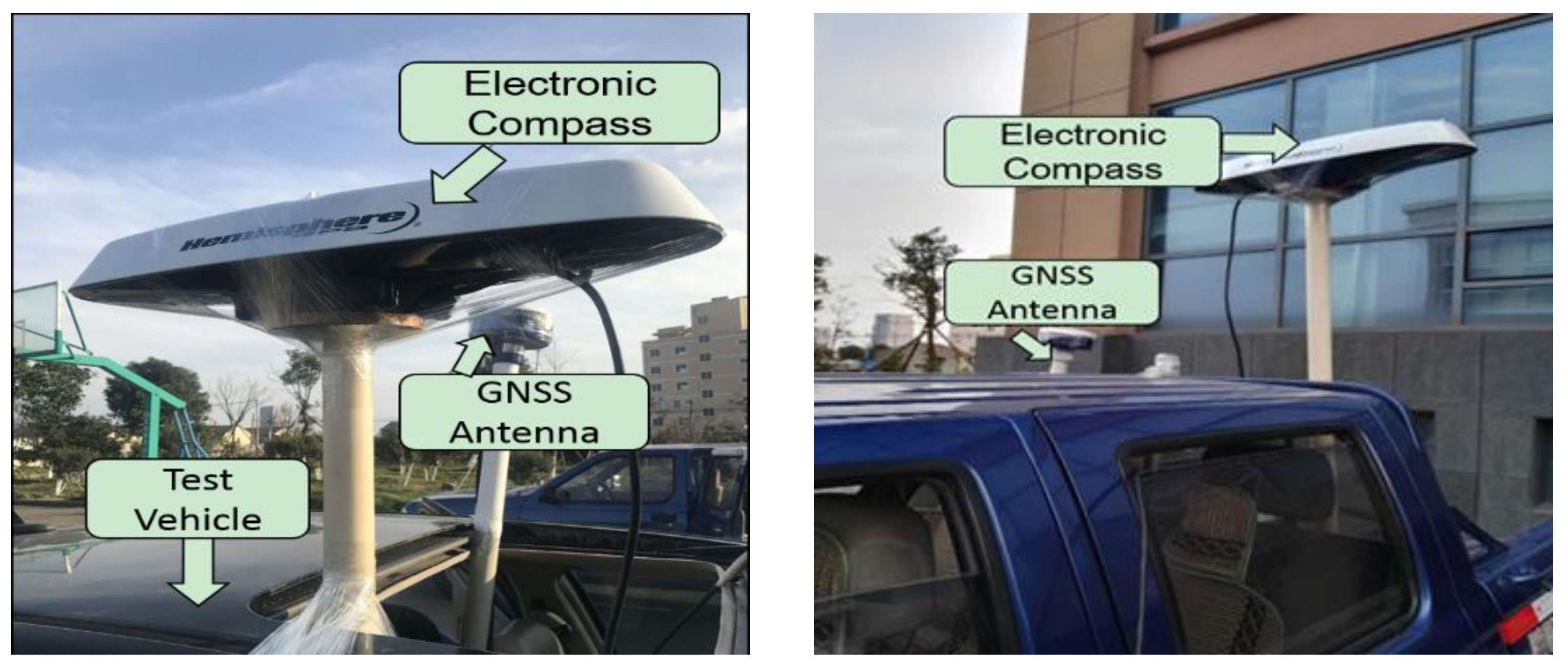

4.1. Equipment Set-up and Data Collection

- (1) The reference state (position, velocity and heading) data for both vehicles, post-processed from the integrated on-board RTK GNSS and high-grade IMU (I-Mar RT-200), together with the driving and collision point information recorded on video;

- (2) The RTK GNSS position and velocity data for both vehicles from the ComNav GNSS RTK network, and the heading of both vehicles from the Hemisphere electronic compass;

- (3) Road centreline data, collected by driving a vehicle equipped with an integrated GNSS RTK/high-grade IMU along the road centreline. The captured data were post-processed to extract the reference centreline. Based on the road centreline, the lane centreline and lane segment information could be defined.

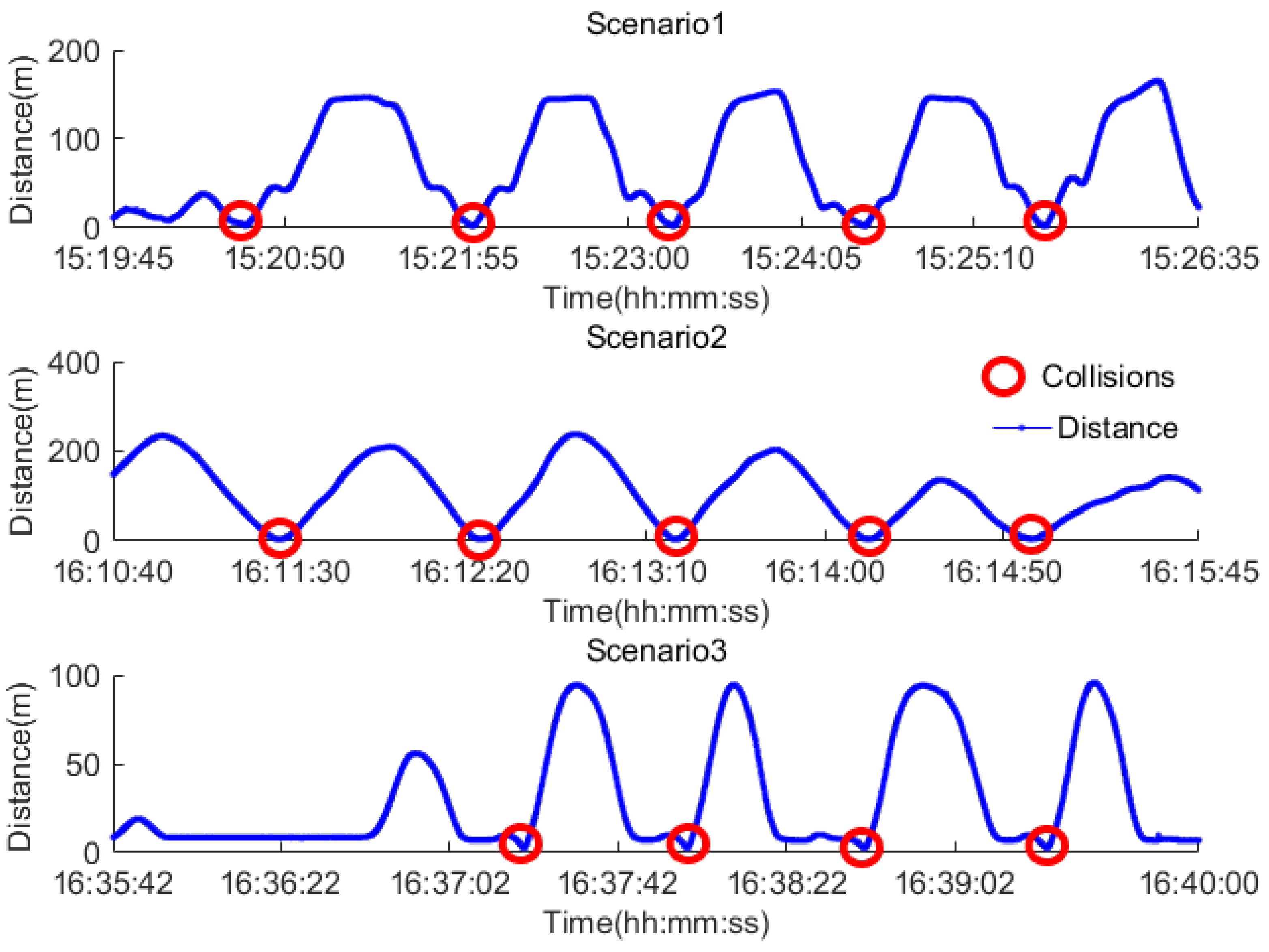

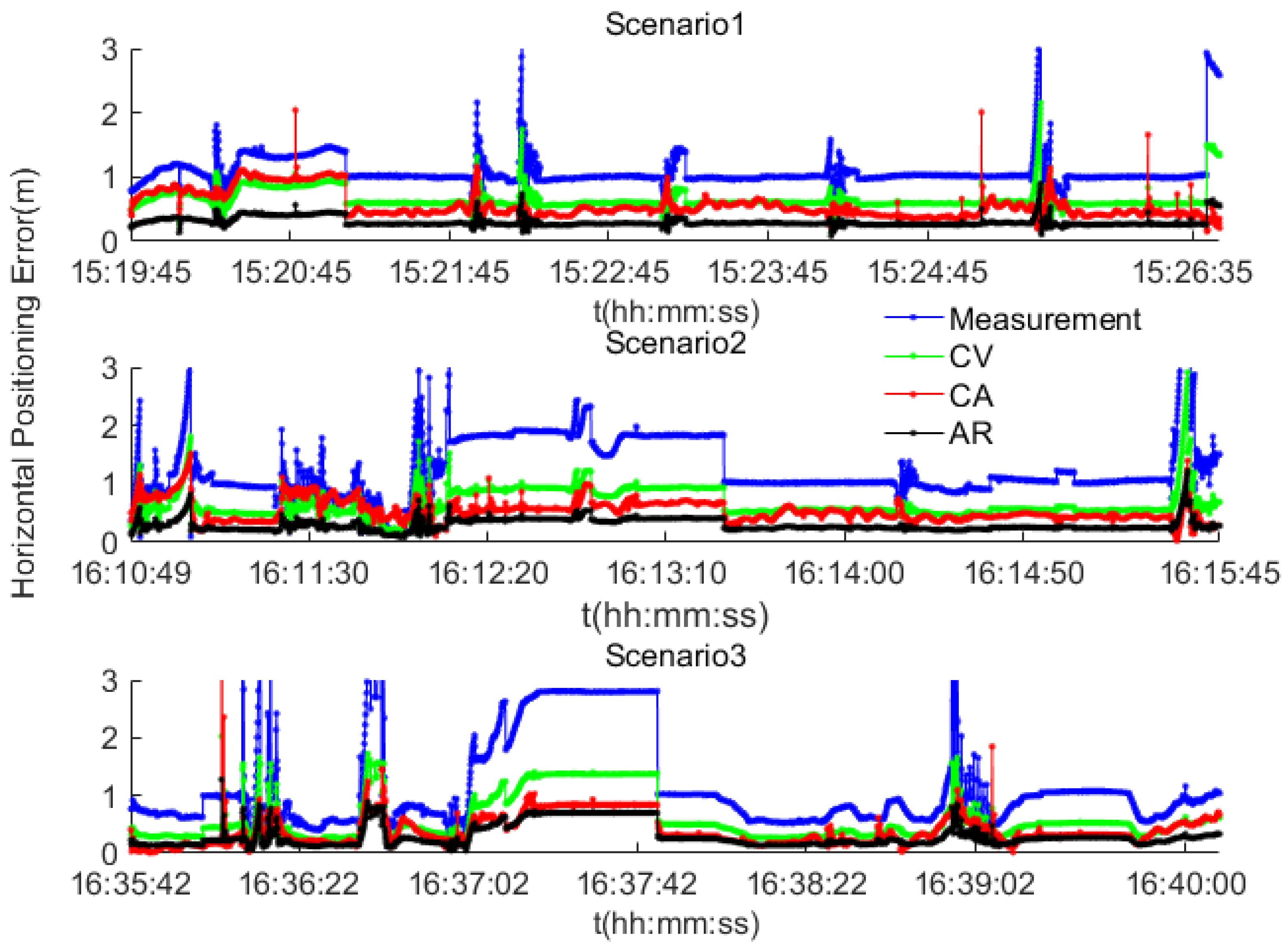

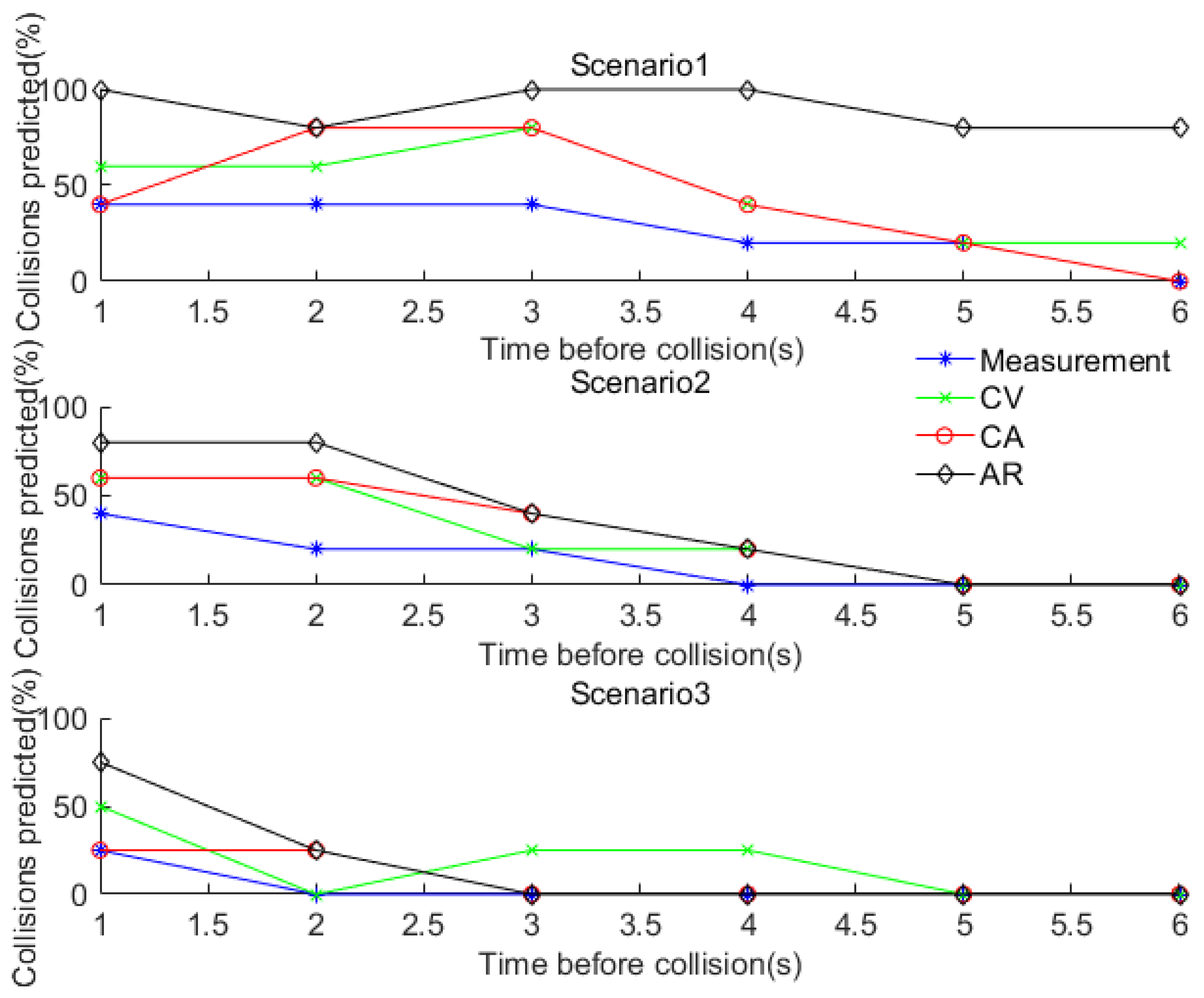

4.2. Results Analysis

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Sources | Positioning Error (Standard Deviation, 2σ) |
|---|---|
| RTK GNSS dynamic mode | 0.3 m–0.7 m |
| Electronic heading error | 0.1 m–0.3 m |
| Road segment error | 0.05 m–0.1 m |
| Total positioning error budget | 0.32 m–0.77 m |
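
The total in the last row is consistent with a root-sum-square (RSS) combination of the three component rows, which treats the error sources as independent. The short check below reproduces both bounds under that assumption; the helper name `rss` is hypothetical.

```python
import math


def rss(*components):
    """Root-sum-square of independent error components (all in meters)."""
    return math.sqrt(sum(c * c for c in components))


if __name__ == "__main__":
    lower = rss(0.3, 0.1, 0.05)  # lower bounds: GNSS, heading, road segment
    upper = rss(0.7, 0.3, 0.1)   # upper bounds: GNSS, heading, road segment
    print(f"{lower:.2f} m to {upper:.2f} m")  # prints "0.32 m to 0.77 m"
```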

| Simulated Data Source | Quantity | Noise Model | Noise Value Range |
|---|---|---|---|
| RTK GNSS output | E, N axis coordinates | White Gaussian noise ~ N(0, 0.5²) | −1.6793 to 1.3728 m |
| | | Uniformly distributed noise ~ U(−0.25, 0.25) | −0.2488 to 0.2500 m |
| | Velocity | White Gaussian noise ~ N(0, 0.2²) | −0.5228 to 0.5546 m/s |
| | | Uniformly distributed noise ~ U(−0.1, 0.1) | −0.0998 to 0.0999 m/s |
| Electronic compass | Heading data | White Gaussian noise ~ N(0, 0.1²) | −0.3154 to 0.2658 rad |
| | | Uniformly distributed noise ~ U(−0.05, 0.05) | −0.0497 to 0.0498 rad |
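
A minimal sketch of how noise of this kind could be applied to a true signal follows. It reads the Gaussian entries as N(0, σ²) with σ = 0.5 m for position, 0.2 m/s for velocity and 0.1 rad for heading, and it assumes the Gaussian and uniform components are simply added to the true value; the table does not state how the two are combined. The function name and the seed handling are hypothetical.

```python
import random


def add_sensor_noise(true_values, sigma, half_width, seed=0):
    """Add zero-mean Gaussian noise (std sigma) plus uniform noise on [-half_width, half_width]."""
    rng = random.Random(seed)  # seeded so that a simulated run is repeatable
    return [
        v + rng.gauss(0.0, sigma) + rng.uniform(-half_width, half_width)
        for v in true_values
    ]


if __name__ == "__main__":
    true_easting = [0.1 * k for k in range(5)]  # hypothetical true Easting track (m)
    print(add_sensor_noise(true_easting, sigma=0.5, half_width=0.25))
```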

| Test Case (TC) | Data Rate | Number of Samples, Vehicle A | Number of Samples, Vehicle B | Collision Type | Gap Duration | Number of Collisions |
|---|---|---|---|---|---|---|
| TC1 | 10 Hz | 667 | 667 | Head-on collision | 7 s | 1500 |
| TC2 | 10 Hz | 666 | 666 | Intersection perpendicular collision | 7 s | 1500 |
| TC3 | 10 Hz | 692 | 692 | Rear-end collision | 7 s | 1500 |

| Test Case | Accuracy (95%), CV Motion Model | Accuracy (95%), CA Motion Model | Accuracy (95%), AR Motion Model |
|---|---|---|---|
| TC1 | 1.18 | 1.09 | 0.31 |
| TC2 | 1.21 | 1.04 | 0.30 |
| TC3 | 1.13 | 0.92 | 0.28 |
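
One common way to obtain a 95% accuracy figure is to take the 95th percentile of the horizontal position error against a reference trajectory. Whether the values in this table were computed that way is an assumption; the sketch below only illustrates that reading, using the nearest-rank percentile definition and hypothetical function names.

```python
import math


def horizontal_errors(estimates, references):
    """Horizontal error (m) between paired (Easting, Northing) estimates and references."""
    return [
        math.hypot(e - ref_e, n - ref_n)
        for (e, n), (ref_e, ref_n) in zip(estimates, references)
    ]


def percentile_95(errors):
    """Nearest-rank 95th percentile: smallest error that at least 95% of samples do not exceed."""
    if not errors:
        raise ValueError("errors must not be empty")
    ordered = sorted(errors)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * N
    return ordered[rank - 1]


if __name__ == "__main__":
    est = [(0.1, 0.0), (1.0, 1.2), (2.3, 2.0)]  # hypothetical filter output
    ref = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]  # hypothetical reference trajectory
    print(percentile_95(horizontal_errors(est, ref)))
```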

| Scenario | Start Time (Beijing Time) | End Time (Beijing Time) | Collision Type | Number of Collisions |
|---|---|---|---|---|
| 1 | 15:19:45 | 15:26:35 | Rear-end collision | 5 |
| 2 | 16:10:40 | 16:15:45 | Intersection perpendicular collision | 5 |
| 3 | 16:35:42 | 16:40:00 | Head-on collision | 4 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, R.; Cheng, Q.; Xue, D.; Wang, G.; Ochieng, W.Y. GNSS/Electronic Compass/Road Segment Information Fusion for Vehicle-to-Vehicle Collision Avoidance Application. Sensors 2017, 17, 2724. https://doi.org/10.3390/s17122724
