Structure-From-Motion in 3D Space Using 2D Lidars

Abstract

1. Introduction

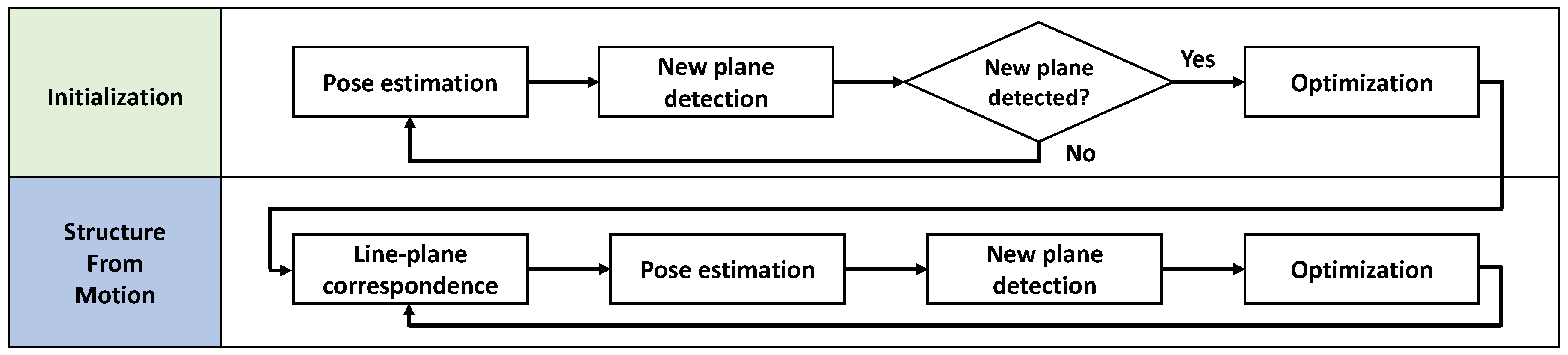

2. Overview of the Proposed Method

3. Sensor Pose Estimation

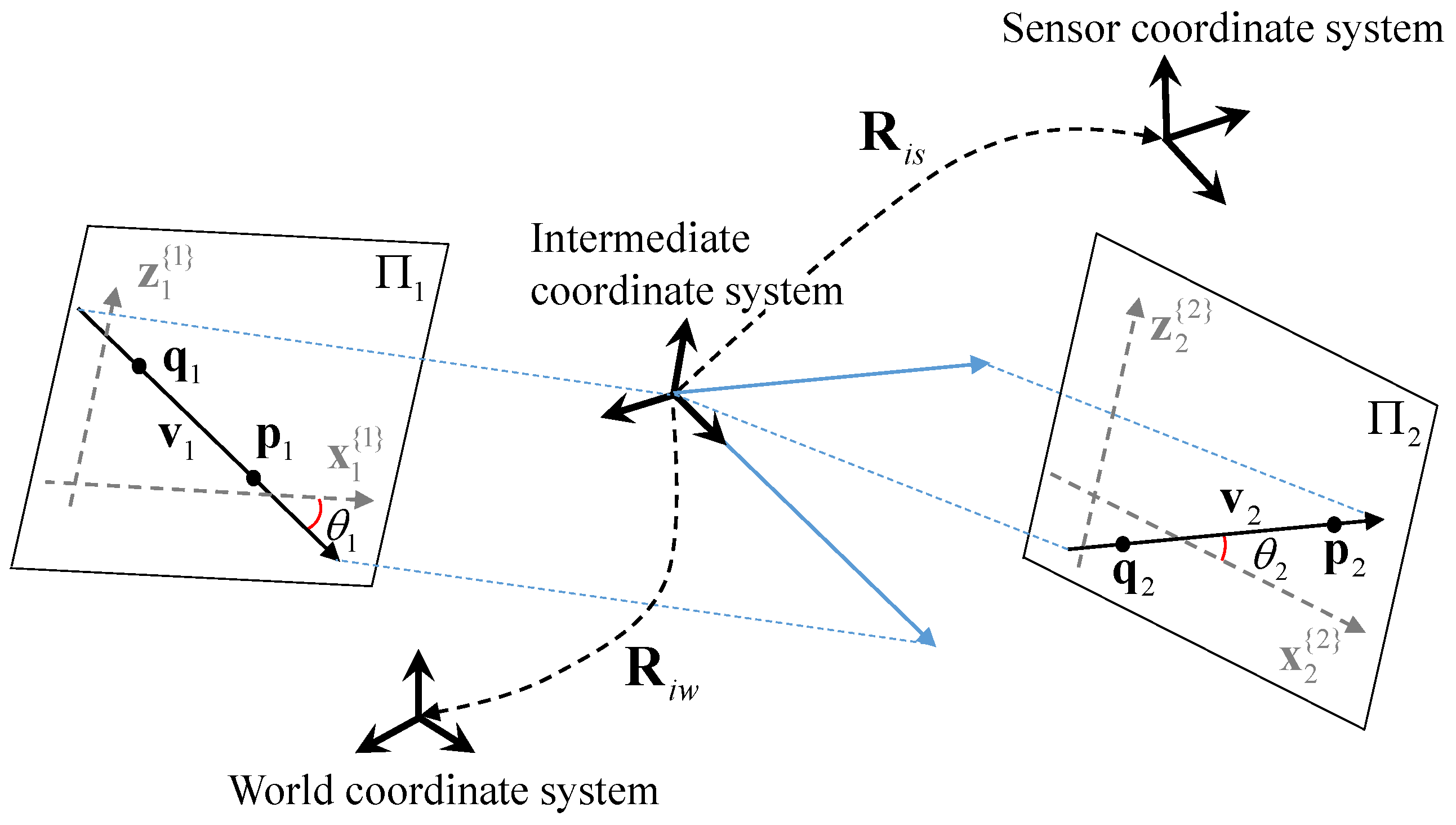

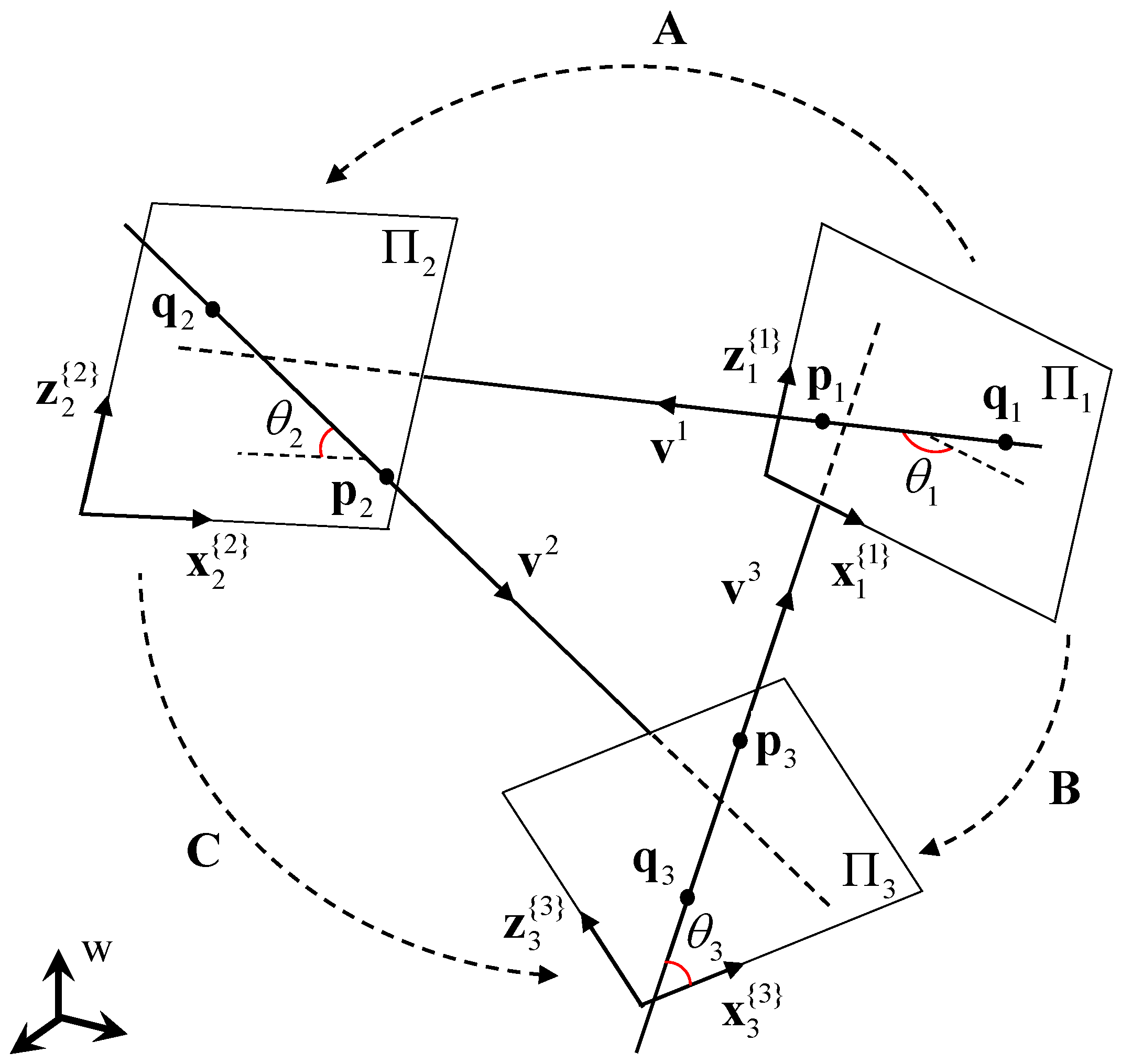

3.1. Pose Parameterization Using Two Lines on Two Planes

3.2. Estimation of the Line Angles on the Planes

3.3. Physical Constraint for Solution Selection

Algorithm 1. 6-DOF Pose Estimation of a Lidar System Using Three Lines

INPUT:
- (a) Six points on the three scan lines (two on each line) in the sensor coordinate system
- (b) Parameters of the three planes in the world coordinate system
- (c) One-to-one correspondences between scan lines and planes

OUTPUT: Sensor-to-world transformation, and

1. Compute using the three line vectors, , in the sensor coordinate system (n = 1–3).
2. Compute three rotation matrices , , and using the plane parameters in the world coordinate system.
3. Compute the coefficients of to .
4. Compute the coefficients of the 16th-order polynomial equation using to .
5. Solve the equation to obtain candidates of
6. Obtain combinations of , and from Equations (21) to (24).
7. Extract the combinations of , and that satisfy Equation (3) and Equation (12).
8. Determine the solutions of the sensor-to-world transformation, and , that meet the conditions described in Section 3.3.
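Steps 4 and 5 reduce the pose problem to finding the roots of a single 16th-order polynomial. Only real roots can correspond to a physical pose, and Steps 6 to 8 prune the candidates further. The sketch below illustrates Step 5 only, assuming the 17 coefficients from Step 4 are already available (their formulas are not reproduced in this extraction). It uses NumPy's companion-matrix root finder; the function name `real_root_candidates` and the imaginary-part tolerance are hypothetical choices, not the authors' code.

```python
import numpy as np


def real_root_candidates(coeffs, imag_tol=1e-8):
    """Real roots of a 16th-order polynomial, returned in ascending order.

    coeffs: 17 coefficients, highest power first (the order numpy.roots uses).
    imag_tol: hypothetical relative tolerance on the imaginary part.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    if coeffs.shape != (17,):
        raise ValueError("expected 17 coefficients for a 16th-order polynomial")
    # numpy.roots computes the eigenvalues of the polynomial's companion matrix.
    roots = np.roots(coeffs)
    # Keep roots whose imaginary part is negligible relative to their size;
    # genuinely complex roots cannot correspond to a physical pose.
    keep = np.abs(roots.imag) <= imag_tol * np.maximum(1.0, np.abs(roots))
    return np.sort(roots[keep].real)
```

A relative tolerance is used because high-order polynomials are poorly conditioned: a real root can come back with a small spurious imaginary part, and discarding it too aggressively would lose a valid pose. Borderline candidates are better left to the consistency checks of Steps 7 and 8.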

4. Structure from Motion

4.1. Line-Plane Correspondence

4.2. New Plane Detection

4.3. Nonlinear Optimization

- the point-to-plane correspondences do not change during the optimization;

- the overlap between a single scan and the point cloud is very narrow (several lines on two scanning planes);

- the nonlinear optimization uses the Jacobian, whereas the registration does not.
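Because the point-to-plane assignments stay fixed and analytic derivatives are available, the refinement can be posed as an ordinary nonlinear least-squares problem. The following is a minimal sketch for a single sensor pose, assuming each plane is stored as a unit normal n and offset d (so that n · X + d = 0 on the plane) and that every scan point already carries the parameters of its assigned plane. It uses Levenberg-Marquardt with a Rodrigues (axis-angle) rotation update; this is an illustrative choice, not necessarily the authors' formulation. It does not refine plane parameters or multiple poses jointly, and all function names are hypothetical.

```python
import numpy as np


def rodrigues(w):
    """Rotation matrix for axis-angle vector w (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = np.asarray(w, dtype=float) / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def residuals(R, t, points, normals, offsets):
    """Signed distances n_i . (R p_i + t) + d_i of sensor points to their planes."""
    world = points @ R.T + t
    return np.sum(normals * world, axis=1) + offsets


def refine_pose_lm(R, t, points, normals, offsets, max_iters=50, lam=1e-3):
    """Levenberg-Marquardt refinement of a sensor-to-world pose (R, t).

    points:  (N, 3) scan points in the sensor frame
    normals: (N, 3) unit normal of the plane assigned to each point (fixed)
    offsets: (N,)   plane offset d, so that n . X + d = 0 on the plane
    """
    r = residuals(R, t, points, normals, offsets)
    cost = r @ r
    for _ in range(max_iters):
        q = points @ R.T
        # Update model: R <- exp([dw]x) R, t <- t + dt.
        # Then d r_i / d dw = (R p_i) x n_i and d r_i / d dt = n_i.
        J = np.hstack([np.cross(q, normals), normals])
        H, g = J.T @ J, J.T @ r
        improved = False
        while lam < 1e10:
            step = -np.linalg.solve(H + lam * np.eye(6), g)
            R_new = rodrigues(step[:3]) @ R
            t_new = t + step[3:]
            r_new = residuals(R_new, t_new, points, normals, offsets)
            if r_new @ r_new < cost:
                # Accept the step and move toward Gauss-Newton.
                R, t, r, cost = R_new, t_new, r_new, r_new @ r_new
                lam *= 0.1
                improved = True
                break
            # Reject the step and move toward gradient descent.
            lam *= 10.0
        if not improved or np.linalg.norm(step) < 1e-12:
            break
    return R, t, cost
```

Keeping the correspondences fixed is what makes the analytic Jacobian valid across iterations; an ICP-style registration would instead re-associate points after every update.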

5. Experimental Results

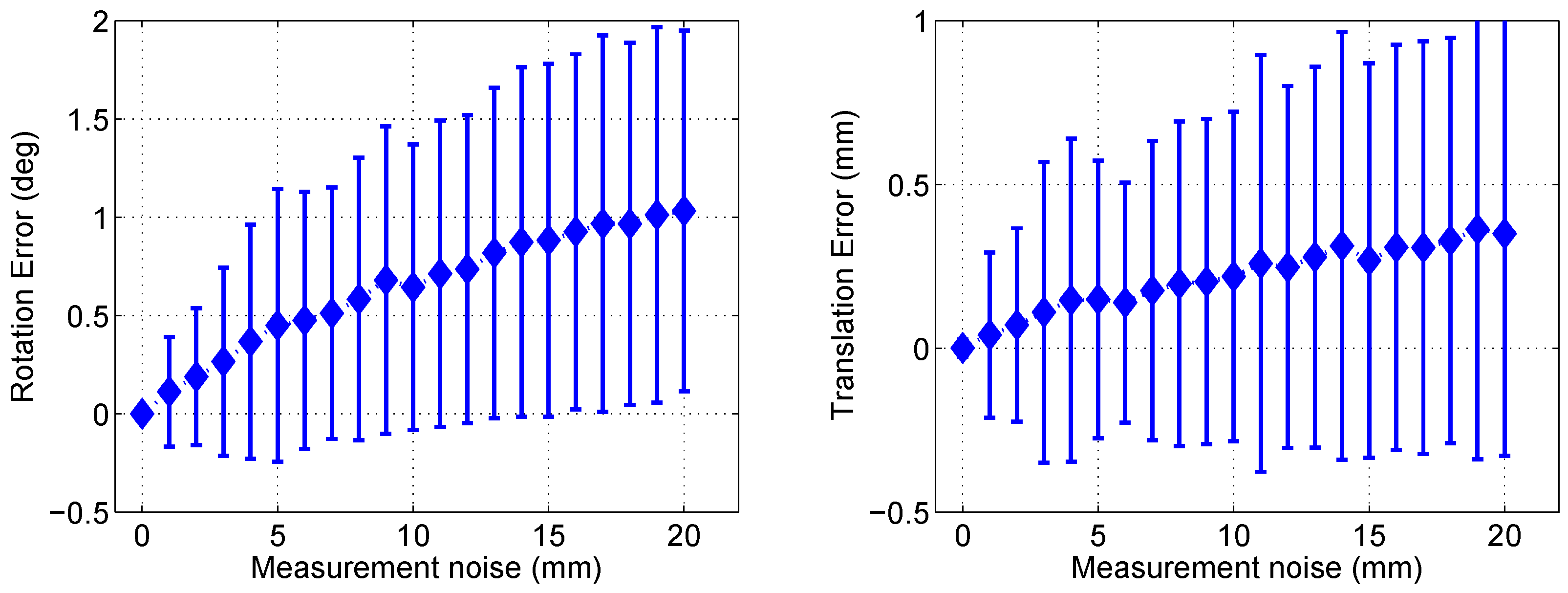

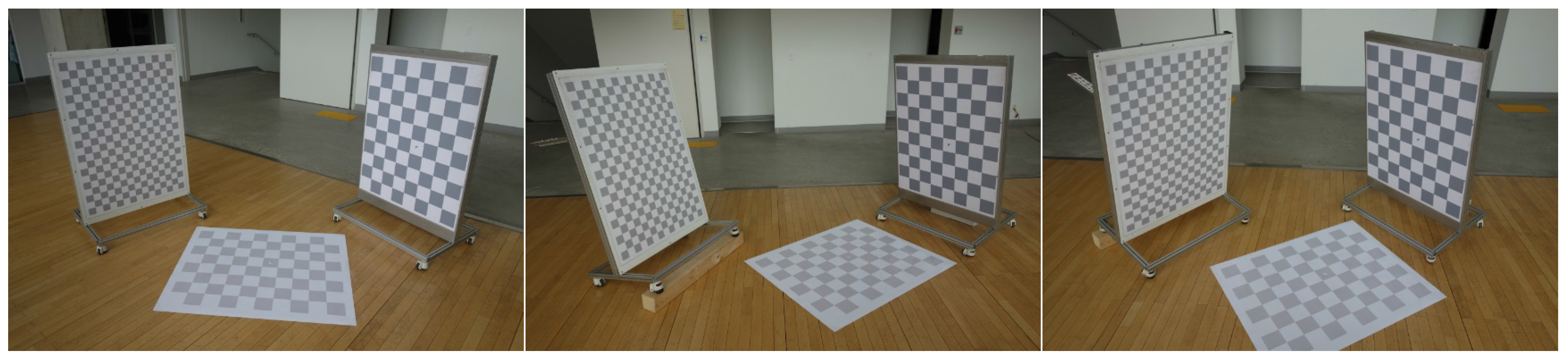

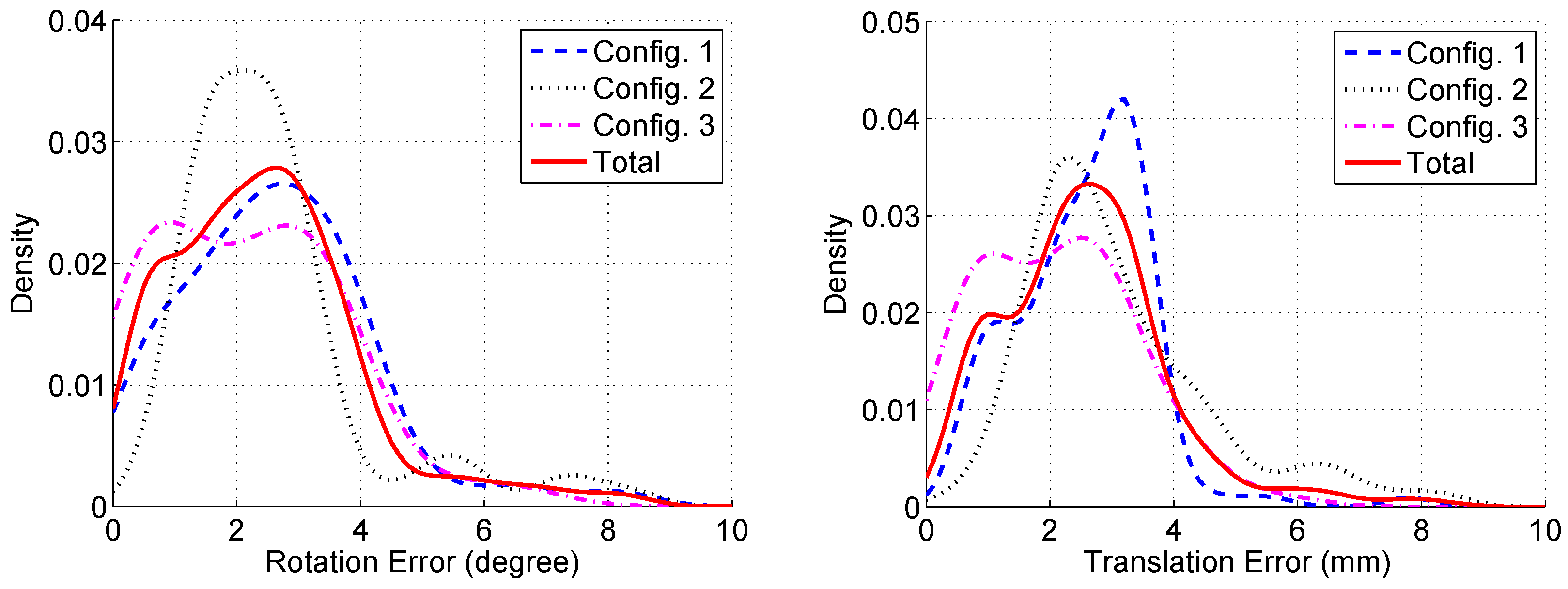

5.1. Evaluation of Pose Estimation Algorithm

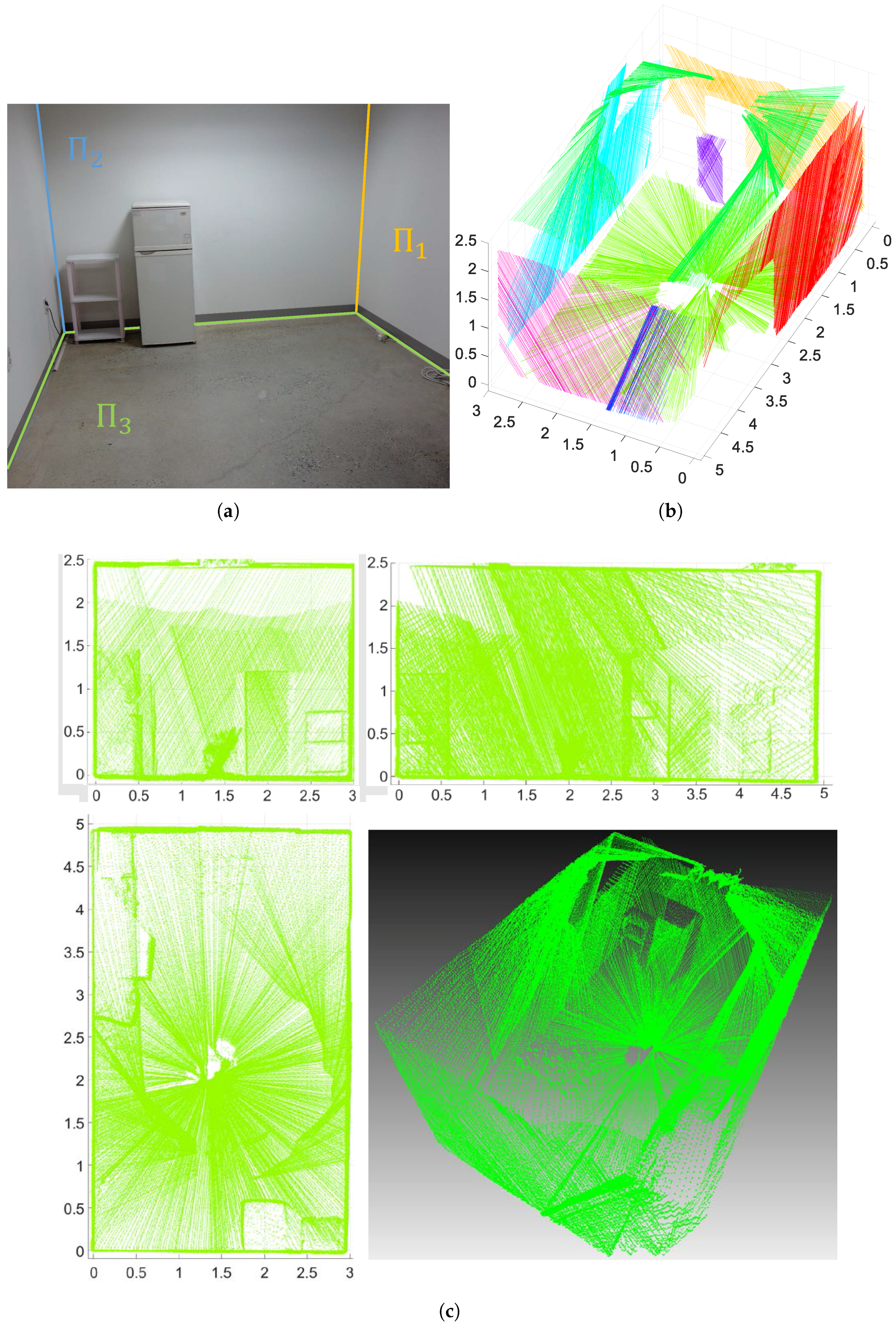

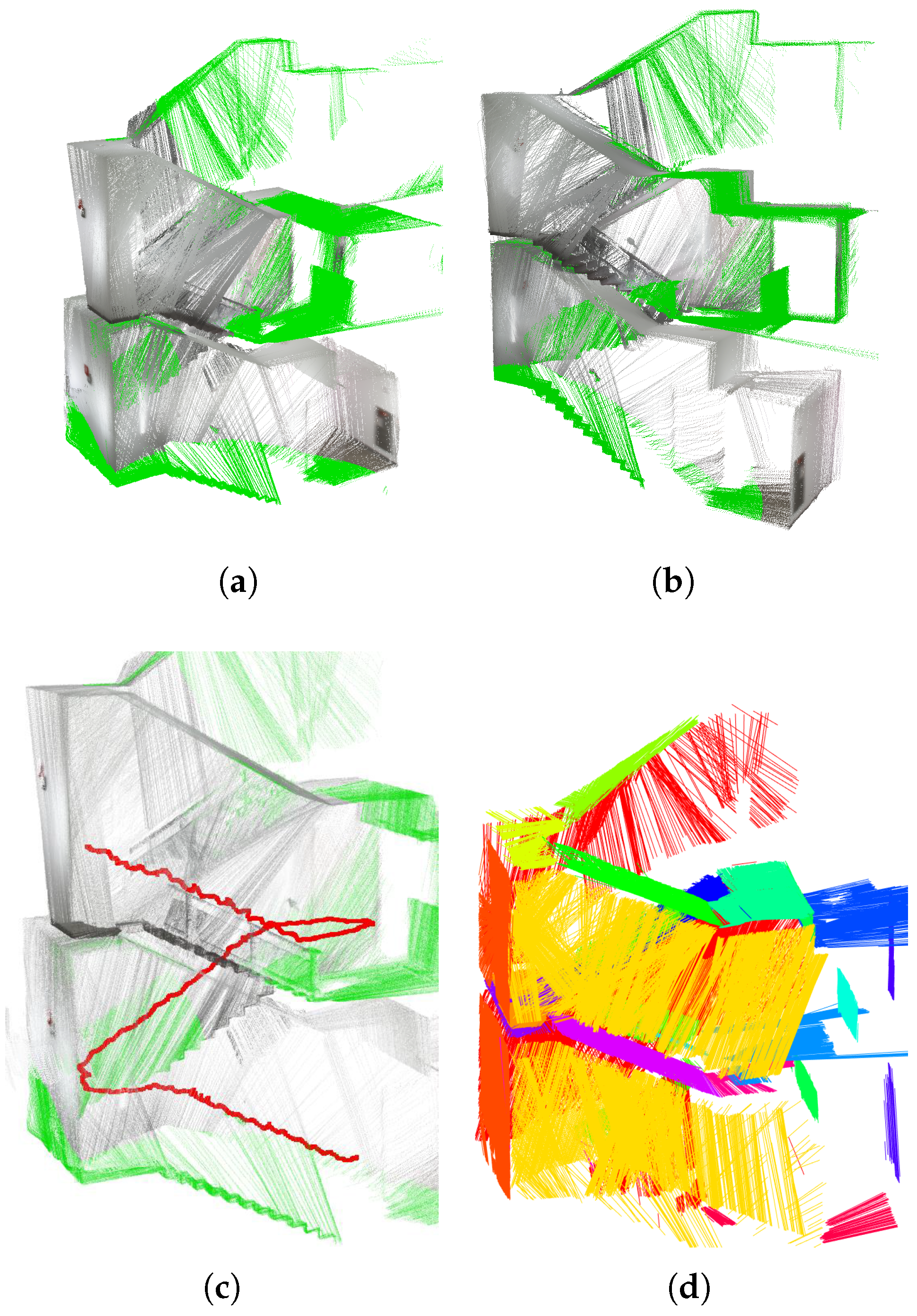

5.2. Evaluation of Structure-from-Motion Algorithm

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Newman, P.; Cole, D.; Ho, K. Outdoor SLAM using visual appearance and laser ranging. In Proceedings of the IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 1180–1187.

- Nüchter, A.; Lingemann, K.; Hertzberg, J.; Surmann, H. 6D SLAM with approximate data association. In Proceedings of the 12th International Conference on Advanced Robotics, Seattle, WA, USA, 17–20 July 2005; pp. 242–249.

- Cole, D.M.; Newman, P.M. Using laser range data for 3D SLAM in outdoor environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 1556–1563.

- Choi, D.G.; Shim, I.; Bok, Y.; Oh, T.H.; Kweon, I.S. Autonomous homing based on laser-camera fusion system. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 2512–2518.

- Han, Y.; Lee, J.Y.; Kweon, I.S. High quality shape from a single RGB-D image under uncalibrated natural illumination. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1617–1624.

- Yu, L.F.; Yeung, S.K.; Tai, Y.W.; Lin, S. Shading-based shape refinement of RGB-D images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1415–1422.

- Lai, K.; Bo, L.; Ren, X.; Fox, D. RGB-D object recognition: Features, algorithms, and a large scale benchmark. In Consumer Depth Cameras for Computer Vision; Springer: London, UK, 2013; pp. 167–192.

- Schwarz, M.; Schulz, H.; Behnke, S. RGB-D object recognition and pose estimation based on pre-trained convolutional neural network features. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 1329–1335.

- Kerl, C.; Sturm, J.; Cremers, D. Dense visual SLAM for RGB-D cameras. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 2100–2106.

- Kerl, C.; Sturm, J.; Cremers, D. Robust odometry estimation for RGB-D cameras. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 3748–3754.

- Whelan, T.; Johannsson, H.; Kaess, M.; Leonard, J.J.; McDonald, J. Robust real-time visual odometry for dense RGB-D mapping. In Proceedings of the IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 5724–5731.

- Wolf, D.F.; Sukhatme, G.S. Mobile robot simultaneous localization and mapping in dynamic environments. Auton. Robots 2005, 19, 53–65.

- Buckley, S.; Vallet, J.; Braathen, A.; Wheeler, W. Oblique helicopter-based laser scanning for digital terrain modelling and visualisation of geological outcrops. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1–6.

- Hesch, J.A.; Mirzaei, F.M.; Mariottini, G.L.; Roumeliotis, S.I. A Laser-aided Inertial Navigation System (L-INS) for human localization in unknown indoor environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Anchorage, AK, USA, 3–8 May 2010; pp. 5376–5382.

- Bosse, M.; Zlot, R.; Flick, P. Zebedee: Design of a spring-mounted 3-D range sensor with application to mobile mapping. IEEE Trans. Robot. 2012, 28, 1104–1119.

- Patz, B.J.; Papelis, Y.; Pillat, R.; Stein, G.; Harper, D. A practical approach to robotic design for the DARPA Urban Challenge. J. Field Robot. 2008, 25, 528–566.

- Bosse, M.; Zlot, R. Continuous 3D scan-matching with a spinning 2D laser. In Proceedings of the IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 4312–4319.

- Sheehan, M.; Harrison, A.; Newman, P. Automatic self-calibration of a full field-of-view 3D n-laser scanner. In Experimental Robotics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 165–178.

- Montemerlo, M.; Becker, J.; Bhat, S.; Dahlkamp, H.; Dolgov, D.; Ettinger, S.; Haehnel, D.; Hilden, T.; Hoffmann, G.; Huhnke, B.; et al. Junior: The Stanford entry in the Urban Challenge. J. Field Robot. 2008, 25, 569–597.

- Leonard, J.; How, J.; Teller, S.; Berger, M.; Campbell, S.; Fiore, G.; Fletcher, L.; Frazzoli, E.; Huang, A.; Karaman, S.; et al. A perception-driven autonomous urban vehicle. J. Field Robot. 2008, 25, 727–774.

- Markoff, J. Google cars drive themselves, in traffic. The New York Times, 9 October 2010.

- Shim, I.; Choi, J.; Shin, S.; Oh, T.H.; Lee, U.; Ahn, B.; Choi, D.G.; Shim, D.H.; Kweon, I.S. An autonomous driving system for unknown environments using a unified map. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1999–2013.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer: Berlin/Heidelberg, Germany, 2009; Volume 56.

- Zhang, J.; Singh, S. LOAM: Lidar odometry and mapping in real-time. In Proceedings of the Robotics: Science and Systems Conference (RSS), Berkeley, CA, USA, 12–16 July 2014; pp. 109–111.

- Zhang, J.; Singh, S. Visual-lidar odometry and mapping: Low-drift, robust, and fast. In Proceedings of the IEEE International Conference on Robotics and Automation, Seattle, WA, USA, 26–30 May 2015; pp. 2174–2181.

- Marquardt, D.W. An algorithm for least-squares estimation of nonlinear parameters. J. Soc. Ind. Appl. Math. 1963, 431–441.

- Besl, P.J.; McKay, N.D. Method for registration of 3-D shapes. Proc. SPIE 1992, 1611, 586–606.

- Chen, Y.; Medioni, G. Object modelling by registration of multiple range images. Image Vis. Comput. 1992, 10, 145–155.

- Belongie, S. Rodrigues’ Rotation Formula. From MathWorld—A Wolfram Web Resource, Created by Eric W. Weisstein. 1999. Available online: http://mathworld.wolfram.com/RodriguesRotationFormula.html (accessed on 15 June 2003).

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Zhang, Q.; Pless, R. Extrinsic calibration of a camera and laser range finder (improves camera calibration). In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004; Volume 3, pp. 2301–2306.

- Bok, Y.; Choi, D.G.; Kweon, I.S. Extrinsic calibration of a camera and a 2D laser without overlap. Robot. Auton. Syst. 2016, 78, 17–28.

- Choi, D.G.; Bok, Y.; Kim, J.S.; Kweon, I.S. Extrinsic calibration of 2-D lidars using two orthogonal planes. IEEE Trans. Robot. 2016, 32, 83–98.

| Configuration | Number of Scans | Rotation Error, Mean (std) [deg] | Translation Error, Mean (std) [mm] |
|---|---|---|---|
| 1 | 113 | 2.6431 (1.5471) | 2.5639 (1.0834) |
| 2 | 108 | 2.6104 (1.5523) | 3.0944 (1.5391) |
| 3 | 130 | 2.1617 (1.4839) | 2.1124 (1.2203) |
| Total | 351 | 2.4548 (1.5379) | 2.5599 (1.3458) |
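If the Total row is computed over all 351 scans pooled together, its means must equal the scan-weighted averages of the three per-configuration means. A small consistency check, assuming exactly that pooling (the standard deviations are not checked, since pooling them also depends on whether sample or population deviations were reported):

```python
# Per-configuration results copied from the table above:
# (number of scans, rotation mean [deg], translation mean [mm])
configs = [
    (113, 2.6431, 2.5639),
    (108, 2.6104, 3.0944),
    (130, 2.1617, 2.1124),
]


def pooled_means(rows):
    """Total scan count and scan-weighted averages of the per-configuration means."""
    total = sum(n for n, _, _ in rows)
    rot = sum(n * r for n, r, _ in rows) / total
    trans = sum(n * t for n, _, t in rows) / total
    return total, rot, trans


total, rot, trans = pooled_means(configs)
print(f"{total} scans, rotation {rot:.4f} deg, translation {trans:.4f} mm")
```

This prints `351 scans, rotation 2.4547 deg, translation 2.5599 mm`, which agrees with the Total row to within the rounding of the per-configuration means.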

| (Unit: mm) | Width | Length | Height |
|---|---|---|---|
| Laser distance meter | 2973.1 | 4918.7 | 2374.7 |
| Proposed | 2957.6 | 4924.1 | 2371.65 |
| Error | 15.5 (0.521%) | 5.4 (0.110%) | 3.05 (0.128%) |
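The Error row is the absolute difference between the proposed method and the laser distance meter, also expressed as a percentage of the laser-meter reading. Treating the laser distance meter as the reference, which the table implies but does not state, the percentages can be reproduced as follows:

```python
# Measurements from the table above, in millimetres.
reference = {"Width": 2973.1, "Length": 4918.7, "Height": 2374.7}  # laser distance meter
proposed = {"Width": 2957.6, "Length": 4924.1, "Height": 2371.65}


def relative_errors(ref, est):
    """Absolute error (mm) and error relative to the reference (%) per dimension."""
    out = {}
    for key, r in ref.items():
        err = abs(est[key] - r)
        out[key] = (err, 100.0 * err / r)
    return out


for key, (err, pct) in relative_errors(reference, proposed).items():
    print(f"{key}: {err:.2f} mm ({pct:.3f}%)")
```

The printed values (15.50 mm, 0.521%; 5.40 mm, 0.110%; 3.05 mm, 0.128%) match the Error row.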

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Choi, D.-G.; Bok, Y.; Kim, J.-S.; Shim, I.; Kweon, I.S. Structure-From-Motion in 3D Space Using 2D Lidars. Sensors 2017, 17, 242. https://doi.org/10.3390/s17020242


Choi, Dong-Geol, Yunsu Bok, Jun-Sik Kim, Inwook Shim, and In So Kweon. 2017. "Structure-From-Motion in 3D Space Using 2D Lidars" Sensors 17, no. 2: 242. https://doi.org/10.3390/s17020242