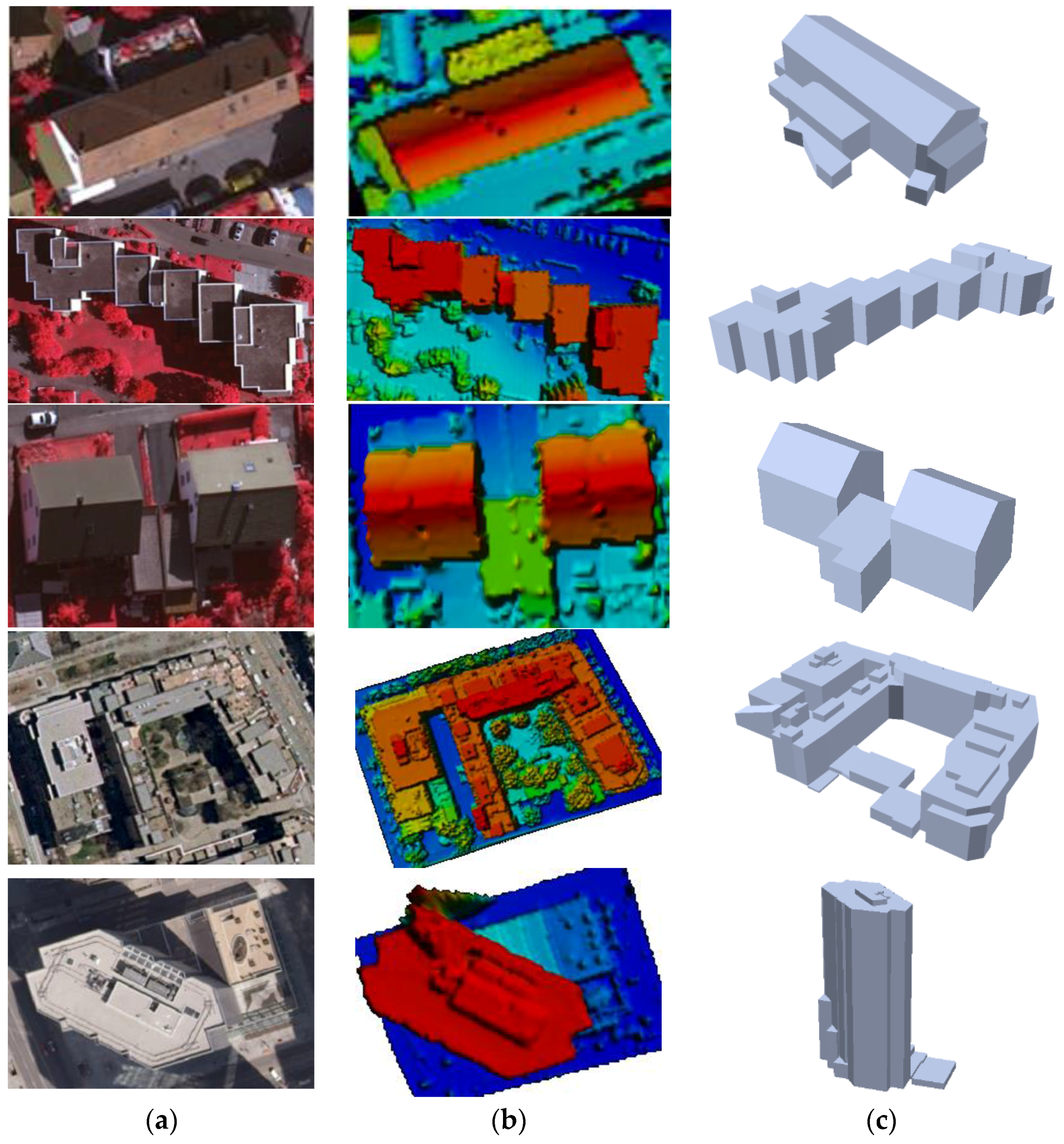

A key problem domain that we address in this paper is to reconstruct a 3D geometric model of building rooftop from remotely sensed data such as airborne laser point clouds. The representation that we follow for 3D rooftop models draws on ideas from geometric modeling used in Photogrammetry and Geographical Information Science (GIS). In the representation scheme, a 3D rooftop is modeled with either primitive geometric elements (i.e., points, lines, planes and objects), or primitive topological elements (i.e., vertices, edges, faces, and edge-groups (rings of edges on faces)). Typically, both primitive geometric and topological elements are used together for representing 3D rooftop models (e.g., CityGML and Esri ArcGIS’s shapefile). CityGML is an open data model and XML-based format for the storage and exchange of virtual 3D city models [

1].

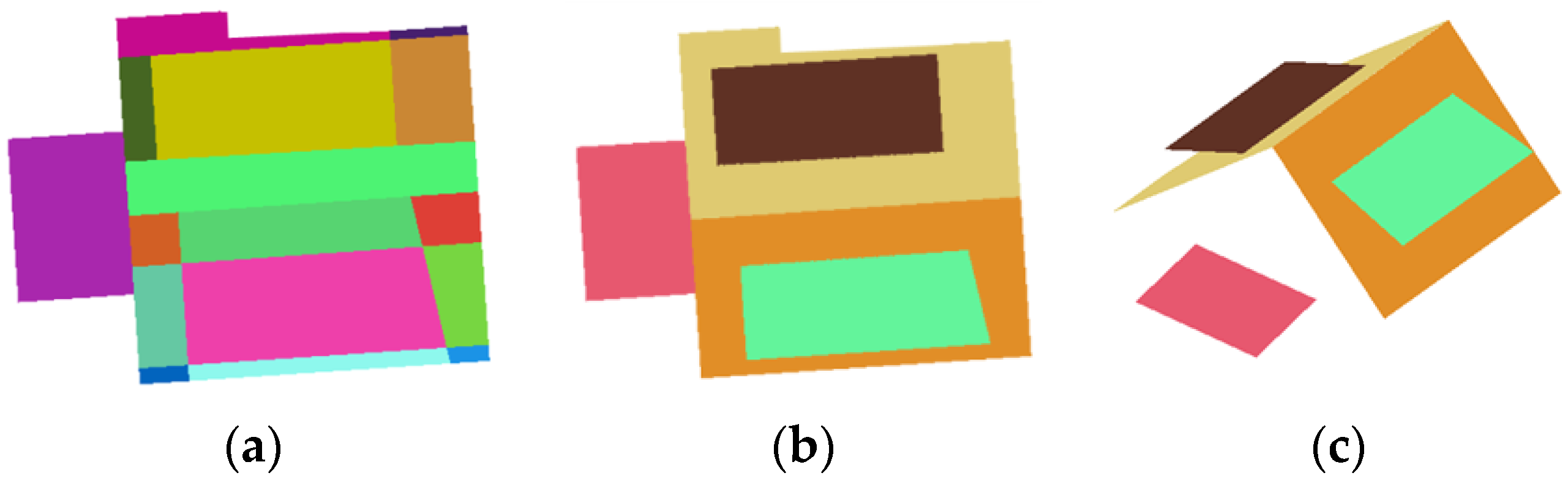

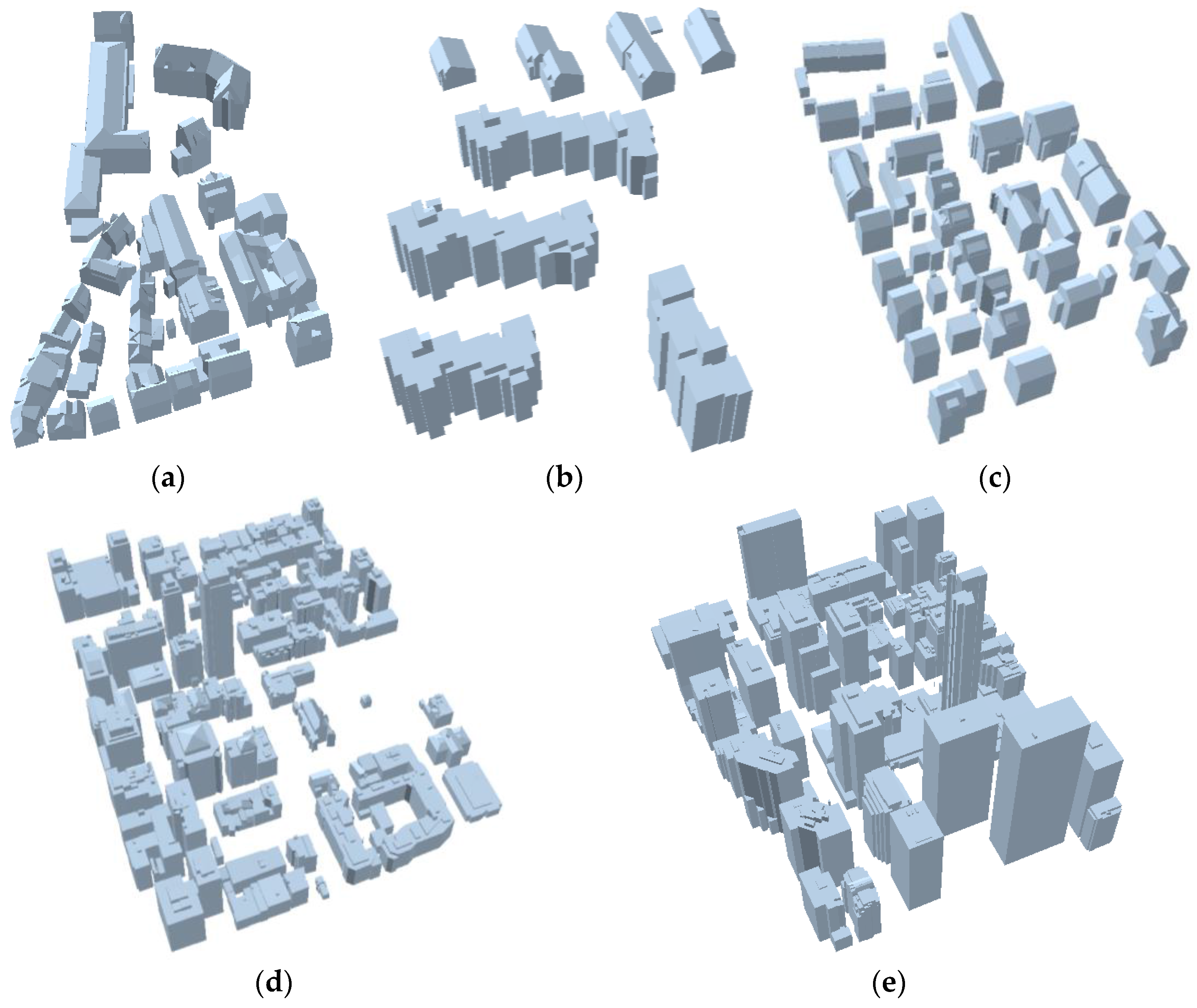

In CityGML, 3D rooftop models can be differently represented according to the level-of-detail (LoD). A prismatic model of rooftop that is a height extrusion of a building footprint is defined as LoD1 in CityGML, while LoD2 requires a detailed representation of the primitive geometric and topological elements in a 3D rooftop model. An important aspect in GIS-driven 3D model representation is that the reconstructed model elements should correspond to semantically meaningful spatial entities used in architecture, civil and urban planning: for instance, the reconstructed geometric elements represent roof lines (ridges and eaves), roof planes (gables and hips), vents, windows, doors, wall columns, chimneys, etc. Thus, a photo-realistic reconstructed rooftop model can be used for assisting human decisions on but not limited to asset management, renovating planning, energy consumption, evacuation planning, etc. As discussed in Rottensteiner et al. [

2], a city-scale building model will provide an important mean to manage urban infrastructure more effectively and safely for addressing critical issues related to rapid urbanization. In this study, we aim to reconstruct LoD2 models of the rooftops from remote sensed data.

Traditionally, 3D rooftop models are derived through interaction with a user using photogrammetric workstations (e.g., multiple-view plotting or mono-plotting technology). This labor-intensive model generation process is tedious and time-consuming, which is not suitable for reconstructing rooftop models at city-scale. As an alternative method, great research efforts have been made for developing a machine-intelligent algorithm to reconstruct photo-realistic rooftop models in a fully-automated manner for the last two decades [

3]. Recently, airborne light detection and ranging (LiDAR) scanners became one of the primary data acquisition tools, which enable rapid capturing of targeted environments in 3D with high density and accuracy. Due to these advantages, state-of-the-art technologies for automatically reconstructing 3D rooftop models using airborne LiDAR data have been proposed by many researchers [

2,

3,

4,

5,

6]. However, only limited success in a controlled environment has been reported, and the success of developing an error-free rooftop modeling algorithm is not achieved yet [

2].

As discussed previously, the aforementioned factors lead to errors in recovering the modeling cues sufficiently well for generating an error-free rooftop model. Typically, knowledge of a rooftop object of interest (e.g., roof type, structure, number of roof planes, etc.) is unknown. Thus, recovering all the primitive topological elements accurately with an error-free geometric model is a very challenging vision task. To address this issue, many researchers have introduced some modeling constraints to compensate the limitations of erroneous modeling cues [

7,

8,

9,

10]. These constraints are used as prior knowledge on targeted rooftop structures: (1) for constructing the modeling cues to conform to Gestalt law (i.e., parallelism, symmetry, and orthogonality), and linking fragmented modeling cues in the frame of perceptual grouping; and (2) by determining optimal parametric rooftop model fit into part of rooftop objects through a trial-and-error of model section from a given primitive model database. We refer these modeling constraints as an “explicit regularity” imposed on rooftop shape as the definition of regularity is fully and clearly described. However, only a few of the explicit regularity terms can be applicable, and the shapes of rooftops in reality appear too complex to be reconstructed with those limited constraints.

In this paper, we focus on the data-driven modeling approach to reconstruct 3D rooftop models from airborne LiDAR data by introducing flexible regularity constraints that can be adjusted to given objects in the recovery of modeling cues and topological elements. The regularity terms that are used in this study represent a regular pattern of the line orientations, and the linkage between adjacent lines. In contrast to the term of “explicit regularity”, we refer to it as an “implicit regularity” because its pattern is not directly expressed, but found with given data and object (rooftop). This implicit regularity is used as a constraint for changing the geometric properties of the modeling cues and topological relations among adjacent modeling cues to conform to a regular pattern found in the given data. This data-adaptive regularity (or regularization process) allows us to reconstruct more complex rooftop models.

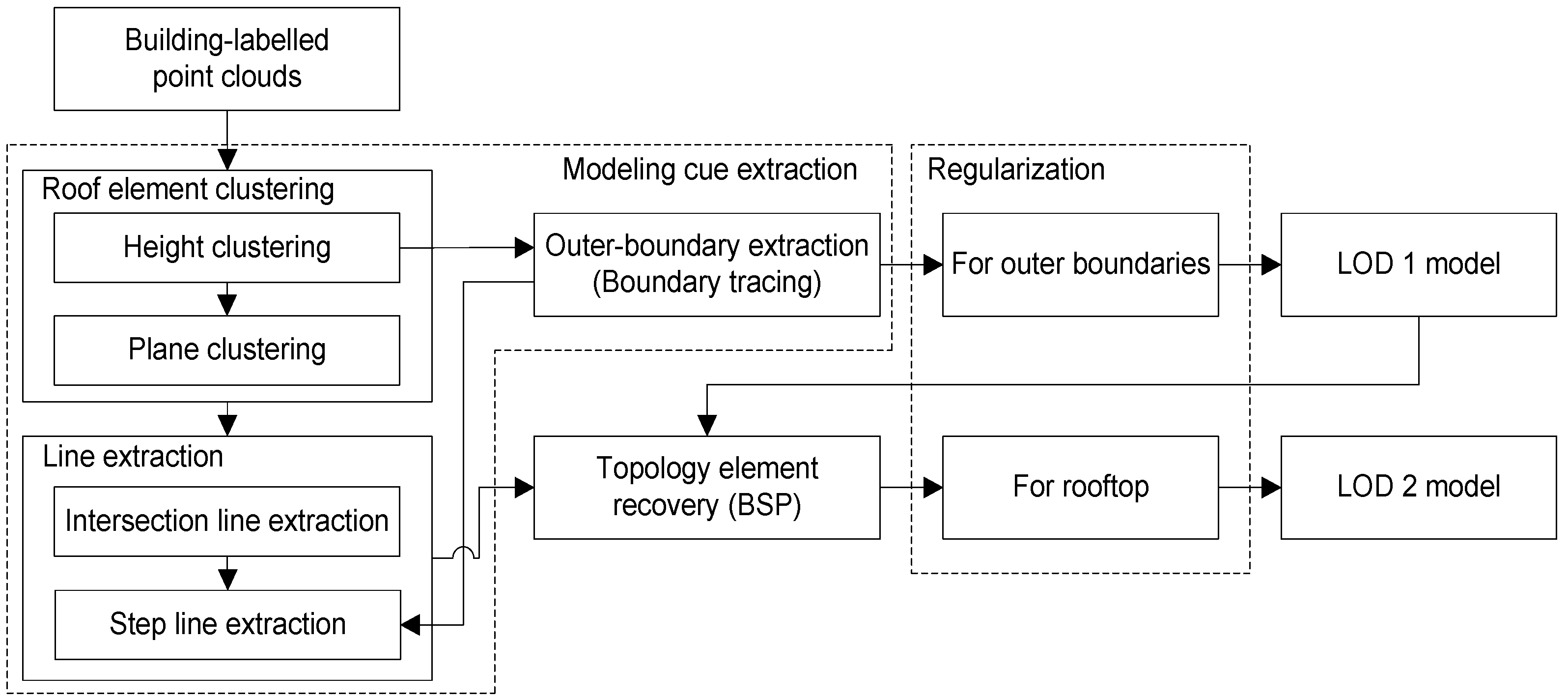

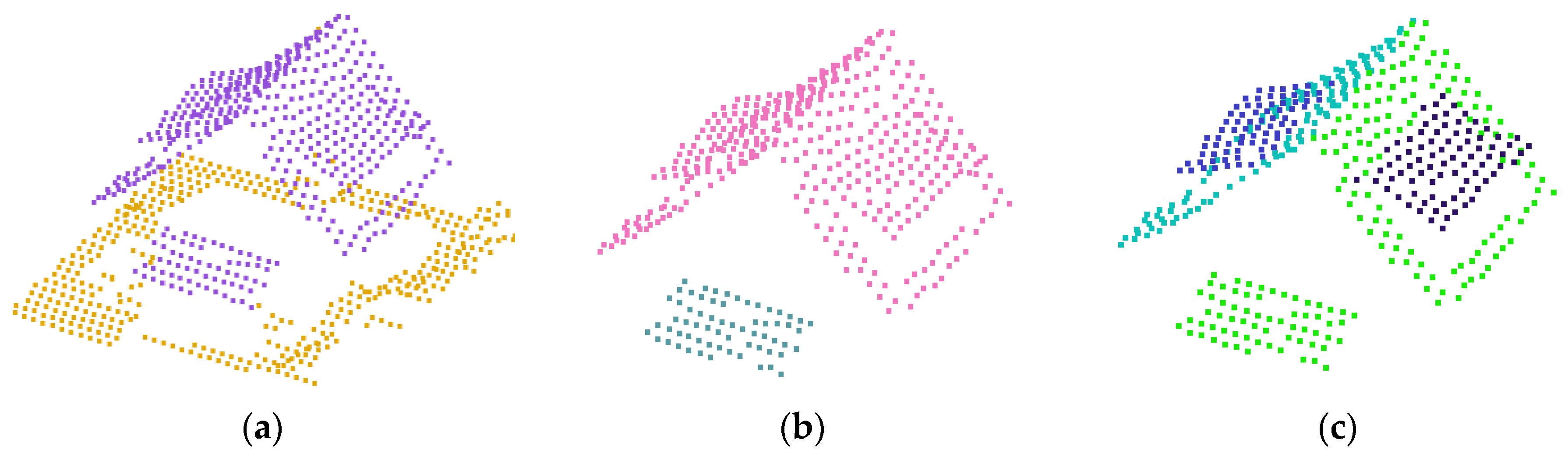

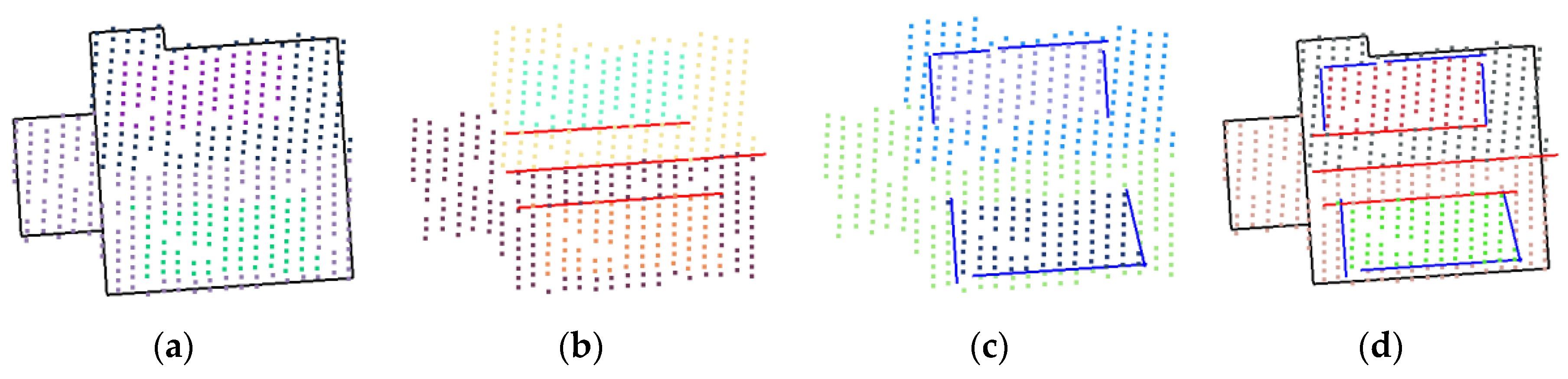

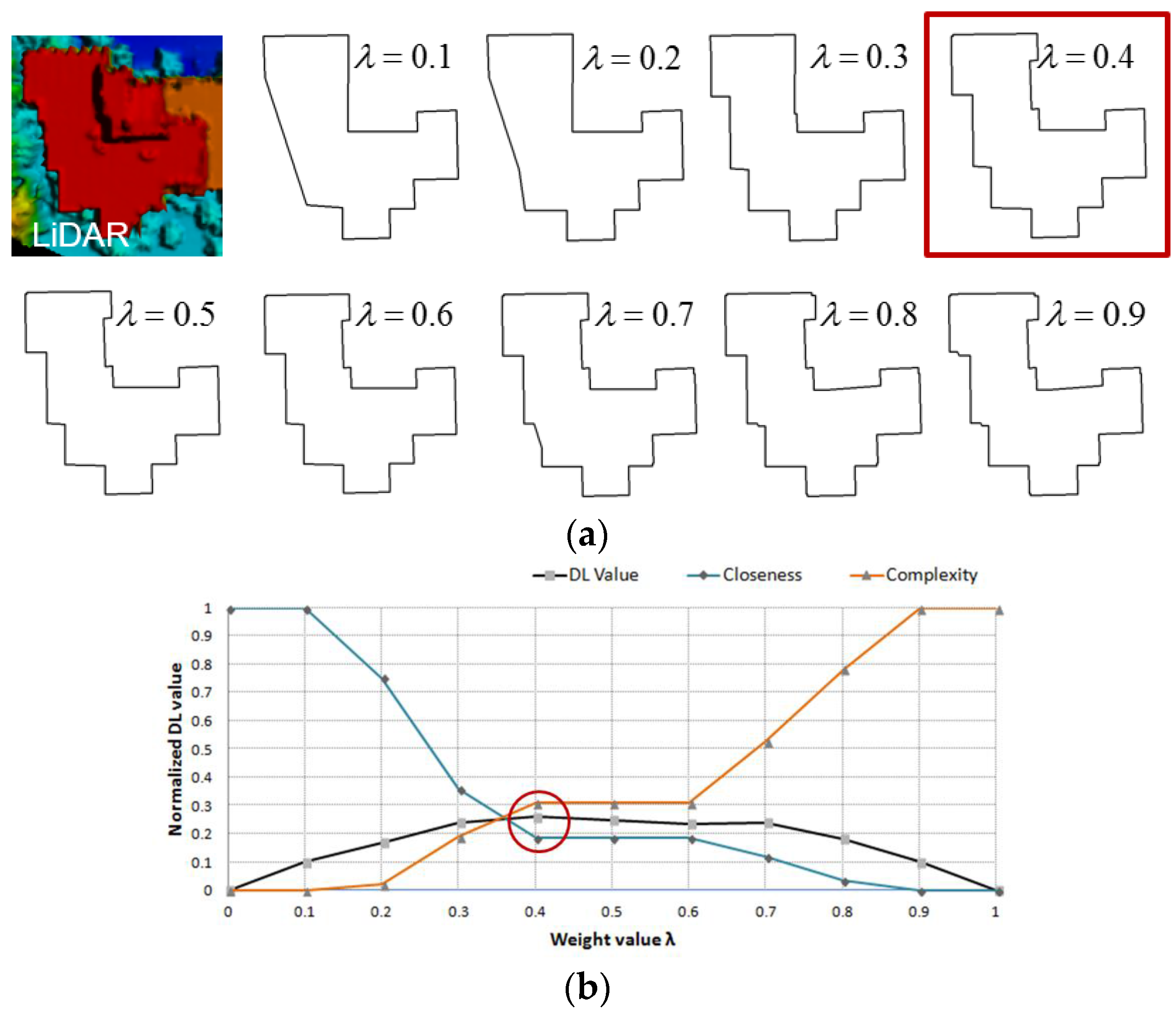

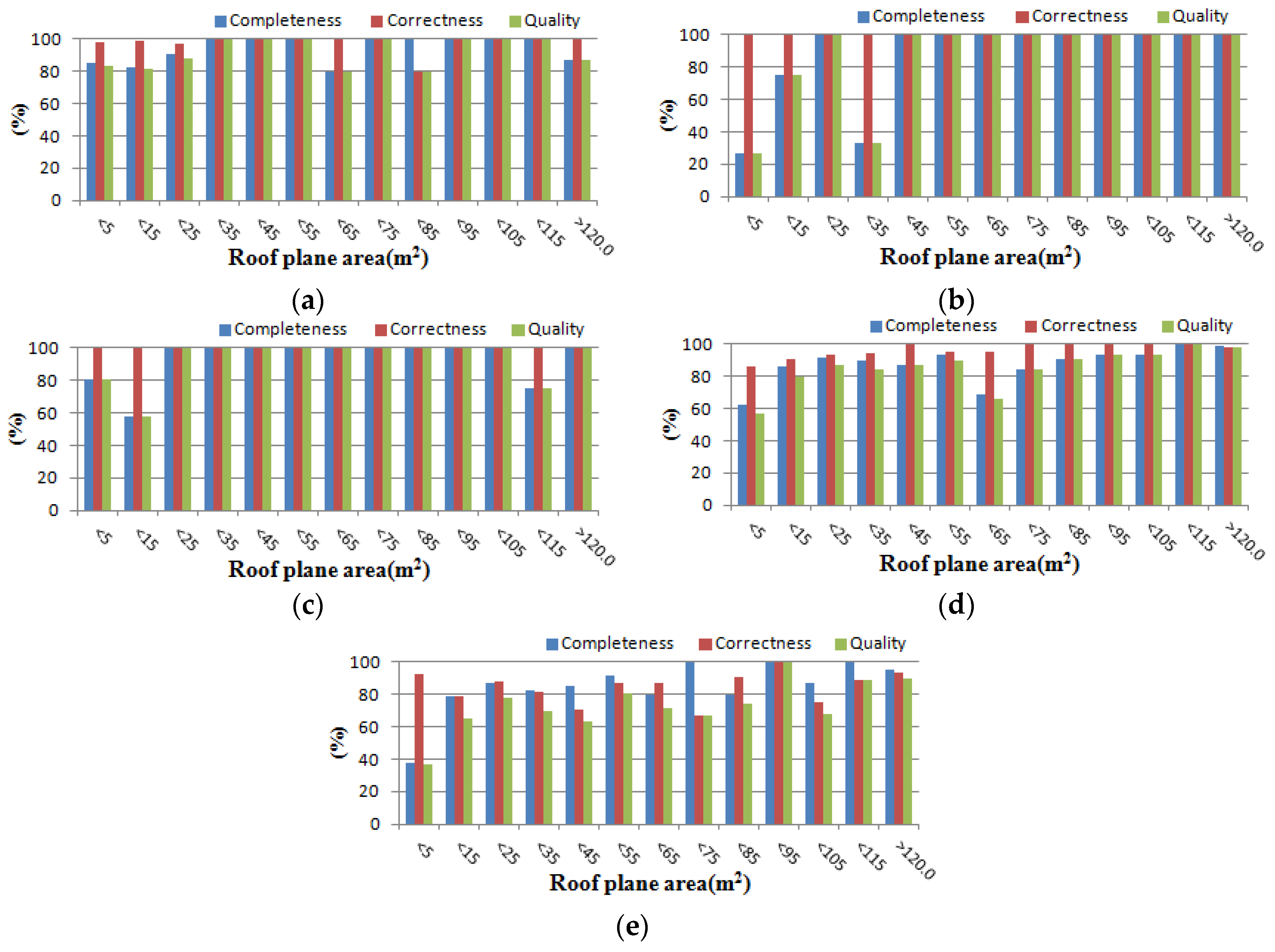

In this paper, we describe a pipeline of 3D rooftop model reconstruction from airborne LiDAR data. First, to gain some computational efficiency, we decompose a rooftop object into a set of homogeneous point clouds based on height similarity and plane similarity, from which the modeling cues of line and plane primitives are extracted. Secondly, the topological elements among the modeling cues are recovered by iteratively partitioning and merging over a given point space with line primitives extracted at a global scale using the Binary Space Partitioning (BSP) technique. Thirdly, errors in the modeling cues and topological elements are implicitly regularized by removing erroneous vertices or rectifying the geometric properties to conform to globally derived regularity. This implicit regularization process is implemented in the framework of Minimum Description Length (MDL) combined with Hypothesize and Test (HAT). The parameters governing the MDL optimization are automatically estimated based on Min-Max optimization and Entropy-based weighting method. The proposed parameter estimators provide optimal weight values that adapt according to building properties such as; size, shape, and the number of boundary points. The proposed pipeline of rooftop model generation was developed based on previous works reported in [

11]. We extended the work by proposing data-adaptive parameter estimation, conducting an extensive performance evaluation and engineering works to implement a computationally efficient modeling pipeline.

Related Works

Numerous building reconstruction algorithms have been published for the past two decades. Although it is difficult to clearly classify these various methods into specific categories, there are several ways to categorize the methods: the used data source (single vs. multi-sources), the data processing strategy (data-driven (or generic), model-driven (or parametric)), and the amount of human interaction (manual, semi-automatic, or fully automated) [

12]. Of those, classifying existing methods into data-driven or model-driven approaches provides a good insight for understanding and developing 3D building model reconstruction algorithms.

In the model-driven approaches, 3D building models are reconstructed by fitting parameterized primitives to data. This is possible because many buildings in rural and suburban area have common shapes in whole building or building roof parts. These common roof shapes such as flat, gable, and hip roof are considered as standard primitives for representing building rooftop structures. Simple buildings can be well represented as regularized building models using pre-defined parameterized primitives even with low density data and presence of missing data. However, complex buildings and arbitrarily shaped buildings are difficult to model using a basic set of primitives. In addition, the selection of the proper primitives among a set of primitives is not an easy task. To address the limitations, Verma et al. [

8] presented a parametric modeling method to reconstruct relatively complex buildings by combining simple parametric roof shapes that are categorized into four types of simple primitives. In this study, the roof-topology graph is constructed to represent the relationships among the various planar patches of approximate roof geometry. The constructed roof-topology graph is decomposed into sub-graphs, which represents simple parametric roof shapes, and then parameters of the primitives are determined by fitting LiDAR data. Although they decomposed complex buildings into simple building parts, many building parts cannot be still explained by their four simple shape primitives. Similarly, Milde et al. [

13] reconstructed 3D building models by matching sub-graphs of the region adjacency graph (RAG) with five basic roof shapes and then by combining them using three connectors. Kada and McKinley [

14] decomposed the building’s footprint into cells, which provided the basic building blocks. Three types of roof shapes including basic, connecting, and manual shapes are defined. Basic shapes consist of flat, shed, gabled, hipped, and Berliner roofs while connecting shapes are used to connect the roofs of the sections with specific junction shapes. The parameterized roof shapes of all cells are determined from the normal direction of LiDAR points. The entire 3D building model is represented by integrating the parameterized roof elements with the neighboring pieces. Although a high level of automation is achieved, the method still requires manual works to adjust cell parameters and to model more complex roof shapes like mansard, cupola, barrel, and even some detail elements. Lafarge et al. [

15] reconstructed building models from a digital surface model (DSM) by combining generic and parametric methods. Buildings are considered as assemblages of 3D parametric blocks from a library. After extracting 2D building supports, 3D parametric blocks are placed on the 2D supports using Gibbs model, which controls both the block assemblage and the fitting to data. The optimal configuration of 3D blocks is determined using the Bayesian framework. They mentioned that the optimization step needs to be improved to achieve both higher precision and shorter computing time as future work. Based on a predefined primitive library, Huang et al. [

10] conducted a generative modeling to reconstruct roof models that fit the data. The library provides three groups including 11 types of roof primitives whose parameters consist of position parameters, contour parameters, and shape parameters. Building roofs are represented as one primitive or an assemblage of primitives allowing primitives overlaps. For combining primitives, they derived combination and merging rules which consider both vertical and horizontal intersections. Reversible Jump Markov Chain Monte Carlo (RJMCMC) with a specified jump mechanism is conducted for the selection of roof primitives, and the sampling of their parameters. Although they have shown potential and flexibility of their method, there are issues to be solved: (1) uncertainty and instability of the reconstructed building model; (2) influence of prior knowledge and scene complexity on completeness of the reconstruction; and (3) heavy computation time.

In contrast with model-driven approaches, data-driven approaches do not make any assumptions regarding to the building shapes, thus they can theoretically handle all kinds of buildings. However, the approach may cause considerable deformations due to the sensitivity to surface fluctuations and outliers in the data. In addition, it requires a regularization step during the reconstruction process. In general, the generic approach starts by extracting building modeling cues such as surface primitives, step lines, intersection lines, and outer boundary lines followed by reconstructing the 3D building model. The segmentation procedure for extracting surface primitives divides a given data set into homogeneous regions. Classical segmentation algorithms such as region growing [

16,

17] and RANSAC [

18] can be used for segmenting building roof planes. In addition, Sampath and Shan [

19] conducted eigenanalysis for each roof point within its Voronoi neighborhood, and then adopted the fuzzy k-means approach to cluster the planar points into roof segments based on their surface normal. Then, they separated the clusters into parallel and coplanar segments based on their distance and connectivity. Lafarge and Mallet [

20] extracted geometric shapes such as planes, cylinders, spheres, or cones for identifying the roof sections by fitting points into various geometric shapes, and then proposed a method for arranging both the geometric shapes and the other urban components by propagating point labels based on MRF. Yan et al. [

21] proposed a global solution for roof segmentation. Initial segmentation is optimized by minimizing a global energy function consisting of the distances of LiDAR points to initial planes, spatial smoothness between data points, and the number of planes. After segmenting points or extracting homogeneous surface primitives, modeling cues such as intersection lines and step lines can be extracted based on geometrical and topological relationships of the segmented roof planes. Intersection lines are easily obtained by intersecting two adjacent planes or segmented points while step lines are extracted at roof plane boundary with abrupt height discontinuity. To extract step lines, Rottensteiner et al. [

16] detected edge candidate points and then extracted step lines from an adjustment considering edge points within user-specified threshold. In addition, Sohn et al. [

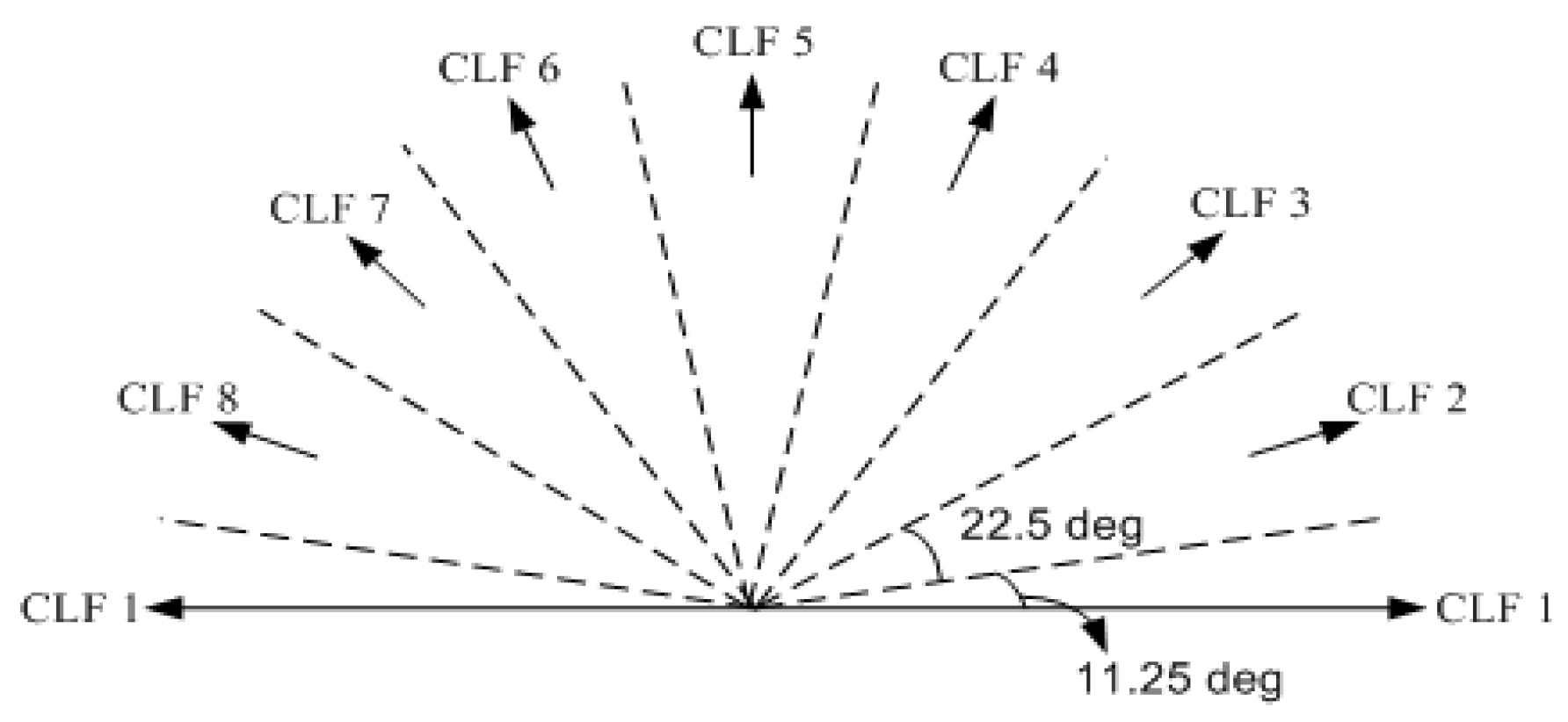

22] proposed a step line extractor, called Compass Line filter (CLF), for extracting straight lines from irregularly distributed LiDAR points. Although outer boundary is one type of step line, it is recognized as a separate process in many data-driven approaches. Some researchers delineated initial boundary lines from building boundary points using alpha shape [

23], ball-pivoting [

8], and contouring algorithm [

24]. Then, the initial boundary was simplified or regularized. The detail reviews for simplification or regularization of boundary will be given in following paragraphs. Once all building modeling cues are collected, 3D building models are reconstructed by aggregating the modeling cues. To reconstruct topologically and geometrically correct 3D building models, Sohn et al. [

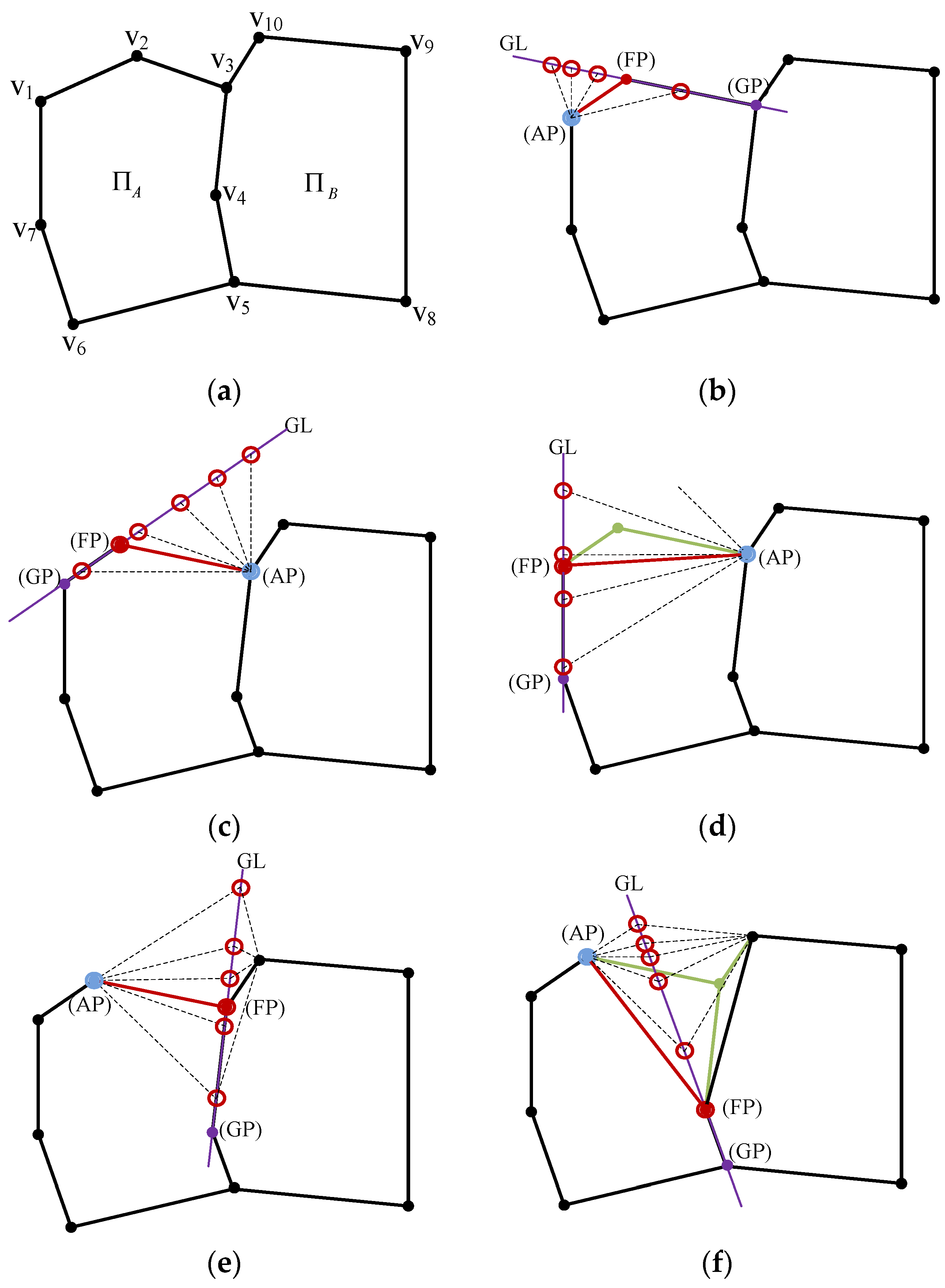

22] proposed the BSP technique, which progressively partitions a building region into homogeneous binary convex polygons. Rau and Lin [

25] proposed a line-based roof model reconstruction algorithm, namely TIN-Merging and Reshaping (TMR), to reconstruct topology with geometric modeling. Oude Elberink and Vosselman [

26], and Perera and Maas [

27] used a roof topology graph to preserve roof topology. In the latter, roof corners are geometrically modeled using the shortest closed cycles and the outermost cycle derived from the roof topology graph.

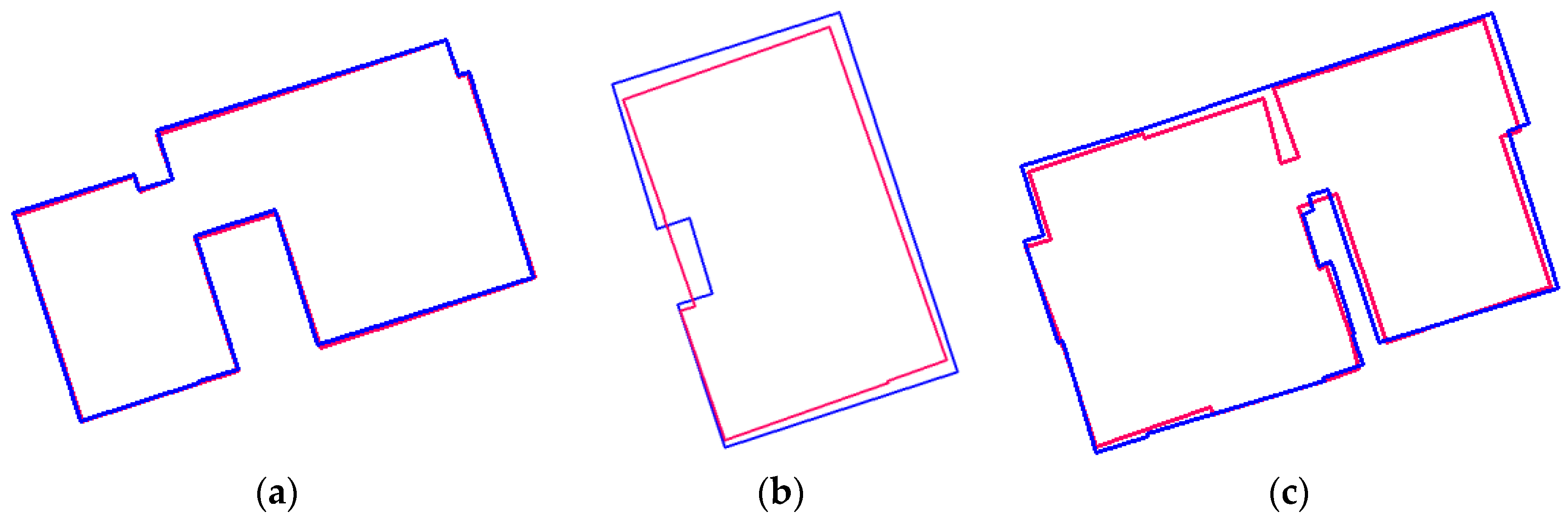

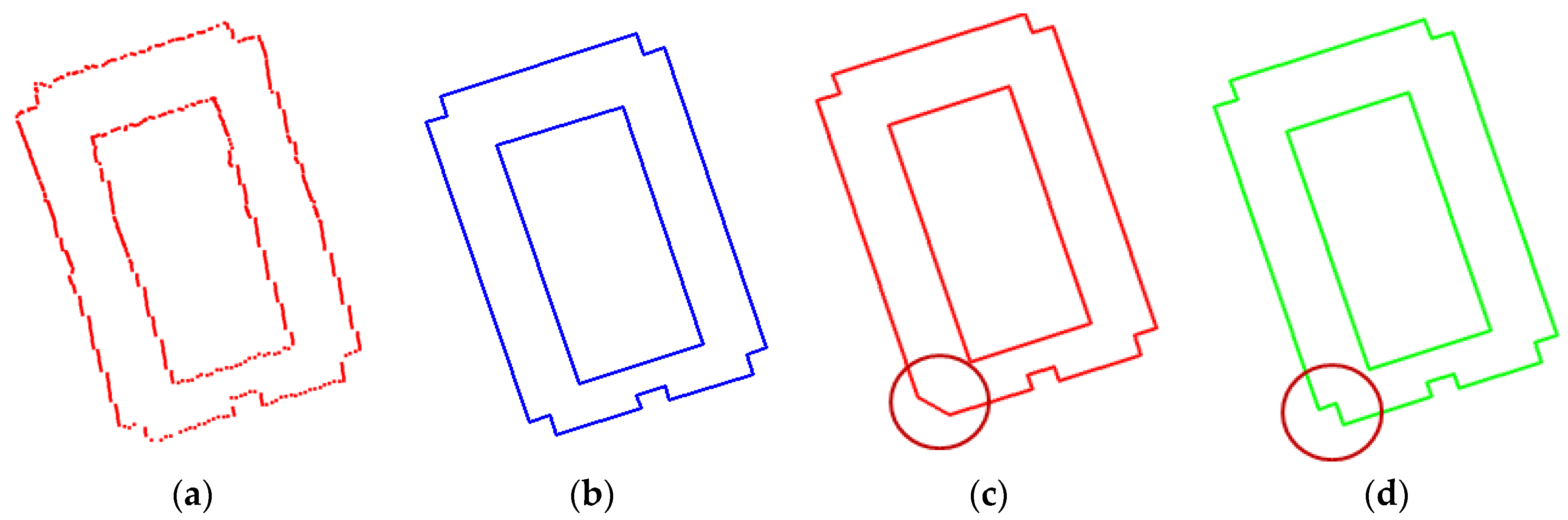

Detection of building boundary is an intermediate step for 3D building reconstruction although it is not required in all building reconstruction algorithms. Generally, the initial boundaries extracted from irregular LiDAR points have jagged shape with large numbers of vertices. Thus, a simplification or regularization process is required to delineate plausible building boundaries with certain regularities such as orthogonality, parallelism, and symmetry. Various techniques related to the regularization of building boundary have been proposed in the literature [

28]. In most methods, the boundary detection process starts by extracting boundary points from segmented points. From extracted boundary points, initial building boundaries are generated by tracing boundary points followed by a simplification or regularization process, which improves the initial boundary. The easiest method to improve initial boundary is to simplify the initial boundary by removing vertices but preserving relevant points. The well-known Douglas–Peucker (DP) algorithm [

29] is widely recognized as the most visually effective line simplification algorithm. The algorithm starts by selecting two points which have the longest distance and recursively adding vertices whose distance from the line is less than a given threshold. However, the performance of the algorithm fully depends on the used threshold and is substantially affected by outliers. Another approach extracts straight lines from boundary points using the Hough Transform [

30] or using RANSAC [

31]. The extracted lines are then connected by intersections of the extracted straight lines to generate closed outer boundary lines. However, Brenner [

28] pointed out that the methods require some additional steps due to missing small building edges.

On the other hand, the regularization process imposes certain regularities when the initial boundary is simplified. Vosselman [

7] assumed that building outlines are along or perpendicular to the main direction of a building. After defining the position of a line by the first two boundary points, the line is updated using the succeeding boundary points until the distance of a point to the line exceeds some bound. The next line starts from this point in a direction perpendicular to the previous line. A similar approach was proposed by Sampath and Shan [

9]. They grouped points on consecutive edges with similar slopes and then applied a hierarchical least squares solution to fit parametric lines representing the building boundary.

Some methods are based on the model hypothesis and verification approach. Ameri [

32] introduced the Feature Based Model Verification (FBMV) for modification and refinement of polyhedral-like building objects. In their approach, they imposed the geometrical and topological model information to the FBMV process as external and internal constraints which consider linearity for straightening consecutive lines, connectivity for establishing topology between adjacent lines, orthogonality, and co-planarity. Then, the weighted least squares minimization was adopted to produce a good regularized description of a building model. Weidner and Förstner [

33] adopted the MDL concept to regularize noisy building boundaries. For four local consecutive points, ten different hypothetical models are generated with respect to regularization criteria. Then, MDL, which depends on the mutual fit of the data and model and on the complexity of the model, is used to find the optimal regularity of the local configuration. Jwa et al. [

34] extended the MDL-based regularization method by proposing new implicit hypothesis generation rules and by re-designing model complexity terms where line directionality, inner angle and number of vertices are considered as geometric parameters. Furthermore, Sohn et al. [

11] used the MDL-based concept to regularize topologies within rooftop model. Zhou and Neumann [

35] introduced global regularities in building modeling to reflect the orientation and placement similarities among 2.5D elements, which consist of planar roof patches and roof boundary segments. In their method, roof-roof regularities, roof-boundary regularities, and boundary-boundary regularities are defined and then the regularities are integrated into a unified framework.