Weighted Kernel Entropy Component Analysis for Fault Diagnosis of Rolling Bearings

Abstract

1. Introduction

2. The Theoretical Background of WKECA for Fault Diagnosis

2.1. Brief Review of KECA

2.2. Introduction of WKECA

2.3. Selecting Optimal Weights for Weighted Kernel Entropy Component Analysis by Genetic Algorithm

- (1) Individual encoding: each individual is defined as a set of weights l1, l2, ..., lc, and each weight is encoded as a binary string.

- (2) Population initialization: an initial population of nr individuals (nr is set to 20) is created at random.

- (3) Fitness calculation: individuals are selected for the next generation according to their fitness. Following Liu and Wang [19], the fitness function is defined as f(X) = CA + k·RBW, where CA is the training accuracy, which reflects the quality of the extracted features; k is a positive constant; and RBW is the Fisher criterion, which indicates class separability. RBW is the ratio of the between-class distance Sb to the within-class distance Sw [33]. Maximizing the fitness function favors both high classification accuracy and large class separability, so with a properly chosen k, WKECA can capture more discriminative information than KECA. WKECA can therefore be expected to generalize well on both training and testing samples.

- (4) Genetic operators: new chromosomes are generated by the genetic operators (selection, crossover and mutation) to update and optimize the population continuously. The crossover and mutation probabilities are set to 0.7 and 0.01, respectively. Each individual is selected with probability $p_m = f(w_m) / \sum_{j=1}^{n_r} f(w_j)$, m = 1, ..., nr, where f(wm) is the fitness value of the m-th individual.

- (5) Terminating conditions: the program terminates when the fitness value no longer changes between iterations or when the number of iterations reaches the maximum (50 in this study).
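The five GA steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the number of bits per weight (`N_BITS = 8`), decoding each gene to a weight in [0, 1], single-point crossover, bit-flip mutation, and the hypothetical `patience` rule used to approximate "the fitness value no longer changes" are all assumptions. The population size (20), crossover probability (0.7), mutation probability (0.01) and iteration cap (50) follow the text. The `fitness` callable stands in for f(X) = CA + k·RBW and must return non-negative values for roulette-wheel selection to be valid.

```python
import random

# Values taken from steps (2), (4) and (5)
POP_SIZE = 20     # nr
P_CROSS = 0.7     # crossover probability
P_MUT = 0.01      # mutation probability
MAX_GEN = 50      # maximum number of iterations
# Assumption: 8 bits per weight (not stated in the text)
N_BITS = 8


def decode(bits, n_weights, n_bits=N_BITS):
    """Map a binary chromosome to n_weights real weights in [0, 1] (assumed range)."""
    scale = 2 ** n_bits - 1
    return [
        int("".join(map(str, bits[i * n_bits:(i + 1) * n_bits])), 2) / scale
        for i in range(n_weights)
    ]


def roulette(pop, fits, rng):
    """Fitness-proportional selection: p_m = f(w_m) / sum_j f(w_j)."""
    r = rng.uniform(0, sum(fits))
    acc = 0.0
    for ind, f in zip(pop, fits):
        acc += f
        if acc >= r:
            return ind
    return pop[-1]  # guard against floating-point round-off


def run_ga(fitness, n_weights, seed=0, patience=10):
    """Return (best weights, best fitness); `patience` is a hypothetical stall limit."""
    rng = random.Random(seed)
    length = n_weights * N_BITS
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(POP_SIZE)]
    best, best_fit, stall = None, float("-inf"), 0
    for _ in range(MAX_GEN):
        fits = [fitness(decode(ind, n_weights)) for ind in pop]
        m = max(range(POP_SIZE), key=fits.__getitem__)
        if fits[m] > best_fit:
            best, best_fit, stall = pop[m][:], fits[m], 0
        else:
            stall += 1
            if stall >= patience:  # best fitness has stopped changing
                break
        children = []
        while len(children) < POP_SIZE:
            a = roulette(pop, fits, rng)[:]
            b = roulette(pop, fits, rng)[:]
            if rng.random() < P_CROSS:  # single-point crossover (assumed)
                cut = rng.randint(1, length - 1)
                a, b = a[:cut] + b[cut:], b[:cut] + a[cut:]
            for child in (a, b):
                for i in range(length):
                    if rng.random() < P_MUT:  # bit-flip mutation
                        child[i] ^= 1
                children.append(child)
        pop = children[:POP_SIZE]
    return decode(best, n_weights), best_fit
```

For example, `run_ga(lambda ws: sum(ws), n_weights=2)` searches for two weights that maximize a toy fitness; in WKECA the callable would instead rebuild the weighted features, train the classifier and return CA + k·RBW.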

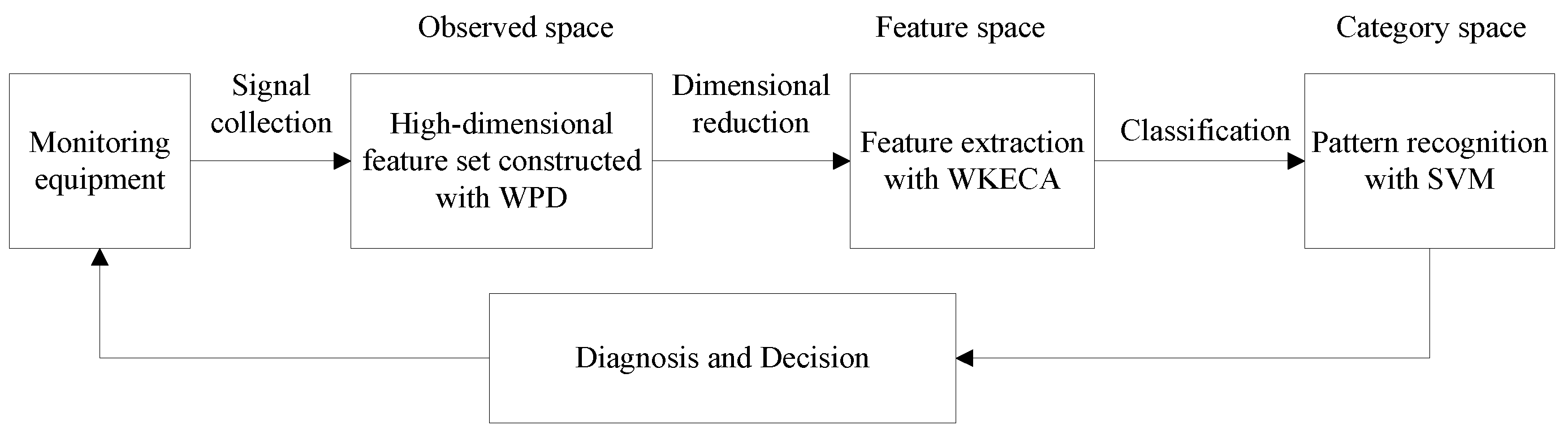
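The fitness function of step (3) can be sketched with NumPy as below. The exact definitions of Sb and Sw are not given in this section, so the sketch assumes a common trace form: Sb is the class-size-weighted squared distance of the class means from the global mean, and Sw is the summed squared distance of samples from their own class mean. `Z` is a hypothetical matrix of extracted (weighted) features with one row per training sample, and `y_pred` would come from whichever classifier produces the training accuracy CA.

```python
import numpy as np


def fisher_ratio(Z, y):
    """RBW = Sb / Sw, using an assumed trace-of-scatter definition."""
    Z = np.asarray(Z, dtype=float)
    y = np.asarray(y)
    mu = Z.mean(axis=0)                      # global mean
    s_b, s_w = 0.0, 0.0
    for c in np.unique(y):
        Zc = Z[y == c]
        mu_c = Zc.mean(axis=0)               # class mean
        s_b += len(Zc) * np.sum((mu_c - mu) ** 2)
        s_w += np.sum((Zc - mu_c) ** 2)
    return s_b / s_w


def fitness(y_true, y_pred, Z, k=0.01):
    """f(X) = CA + k * RBW, with CA the training accuracy."""
    ca = np.mean(np.asarray(y_true) == np.asarray(y_pred))
    return ca + k * fisher_ratio(Z, y_true)
```

Since CA lies in [0, 1] while RBW is unbounded, k controls how strongly class separability is weighted against accuracy during the search.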

2.4. Fault Diagnosis Based on WKECA

- (1)

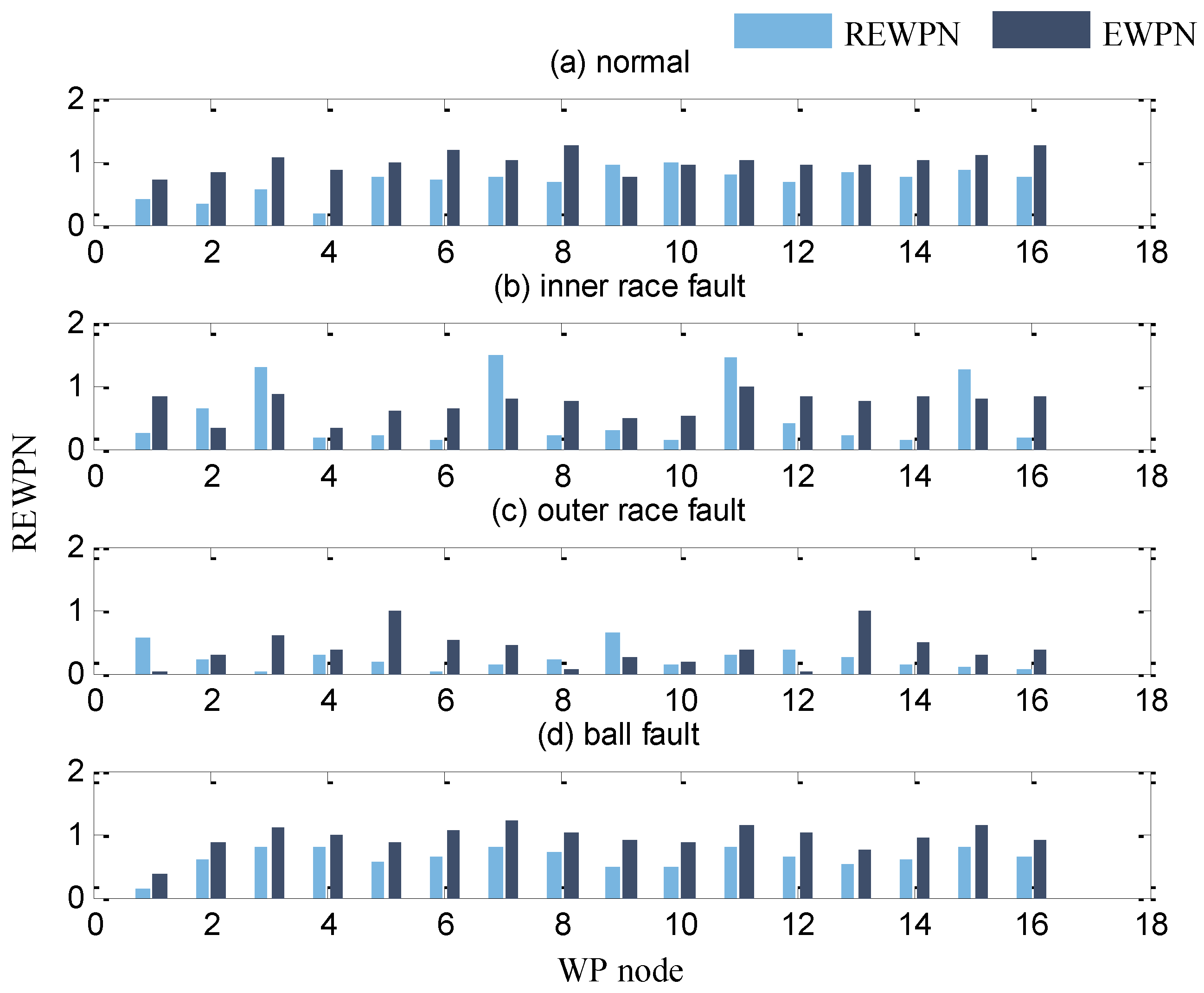

- Decomposing the vibration signals into different frequency bands by using WPD, and then we can acquire the high dimensional feature set X = [x1, ..., xN]T including REWPNs and EWPNs, where N is the number of the signal samples.

- (2) Extract features from the high-dimensional dataset with the WKECA algorithm, which captures its intrinsic manifold structure, and obtain low-dimensional features by projecting the original high-dimensional observation space onto a low-dimensional feature space. The optimal mapping directions are obtained at the same time, so that new testing samples can be mapped into the same low-dimensional feature space.

- (3) Classify the samples in the low-dimensional feature space with a support vector machine (SVM) classifier.

- (4) Determine the fault type from the classification results, and then take the corresponding decisions or control measures.
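Step (2) can be illustrated with a sketch of KECA-style feature extraction and out-of-sample mapping. It follows Jenssen's KECA [21]: an uncentered Gaussian kernel matrix K = EDE^T is eigendecomposed, each eigenpair is ranked by its contribution λ_i(1^T e_i)^2 to the Rényi quadratic entropy estimate (1/N²)1^T K 1, and the top c eigenpairs are kept. A new sample x is mapped by z_i = e_i^T k(x) / √λ_i, which reproduces √λ_i e_i on the training set. How WKECA applies its weights is not shown in this section, so the `weights` argument, which simply rescales each retained component by l_i, is a hypothetical stand-in, as is the kernel width `sigma`. The WPD features of step (1) and the SVM of step (3) are not included.

```python
import numpy as np


def rbf_kernel(A, B, sigma):
    """Gaussian kernel matrix with entries exp(-||a - b||^2 / (2 sigma^2))."""
    d2 = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma**2))


class WKECA:
    """KECA-style projection; the per-component `weights` are a hypothetical stand-in."""

    def __init__(self, n_components, sigma=1.0, weights=None):
        self.c = n_components
        self.sigma = sigma            # assumed kernel width
        self.weights = weights        # hypothetical l_1..l_c, e.g. from the GA

    def fit(self, X):
        self.X = np.asarray(X, dtype=float)
        K = rbf_kernel(self.X, self.X, self.sigma)   # uncentered, as in KECA
        lam, E = np.linalg.eigh(K)
        # Entropy contribution of eigenpair i: lambda_i * (1^T e_i)^2
        contrib = np.clip(lam, 0.0, None) * E.sum(axis=0) ** 2
        idx = np.argsort(contrib)[::-1][: self.c]
        self.lam, self.E = lam[idx], E[:, idx]
        return self

    def transform(self, X_new):
        """z_i = e_i^T k(x) / sqrt(lambda_i), then scaled by the weights."""
        Kx = rbf_kernel(np.asarray(X_new, dtype=float), self.X, self.sigma)
        Z = Kx @ self.E / np.sqrt(self.lam)
        w = np.ones(self.c) if self.weights is None else np.asarray(self.weights, float)
        return Z * w
```

In the diagnosis scheme, `fit` would be run on the training features, and `transform` would map both training and testing samples into the low-dimensional space before SVM classification.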

3. Experimental Results and Analysis

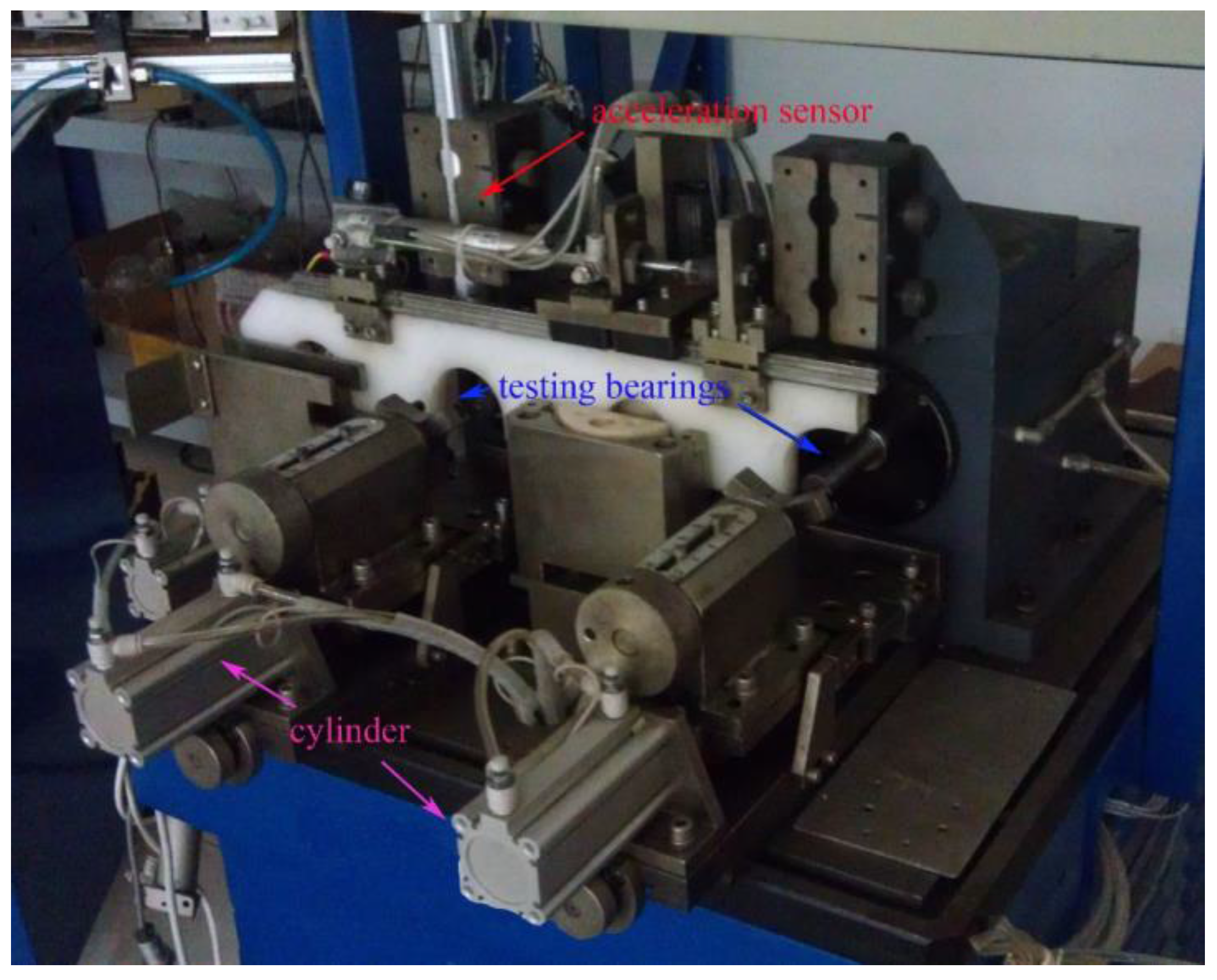

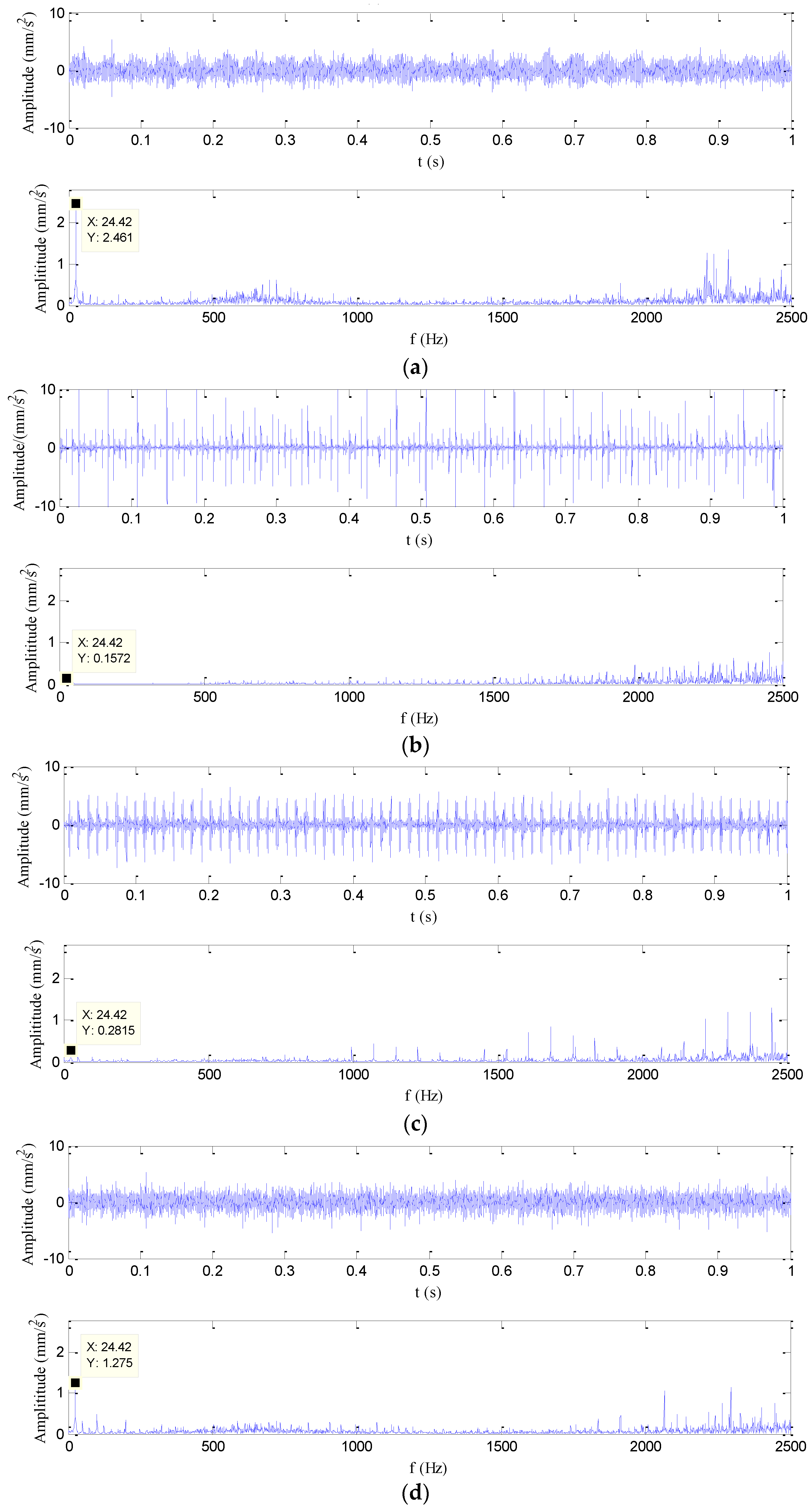

3.1. Experimental Description

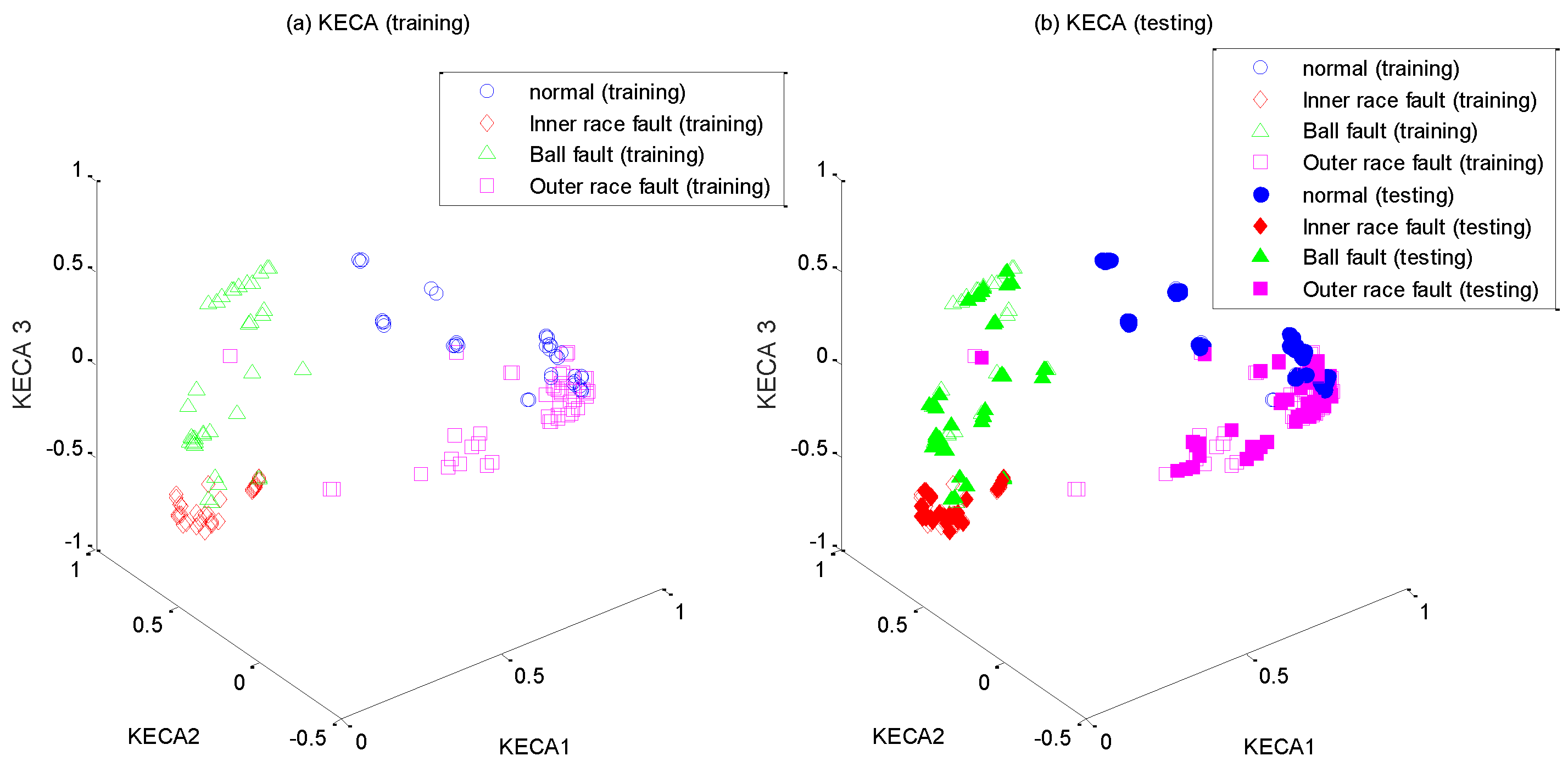

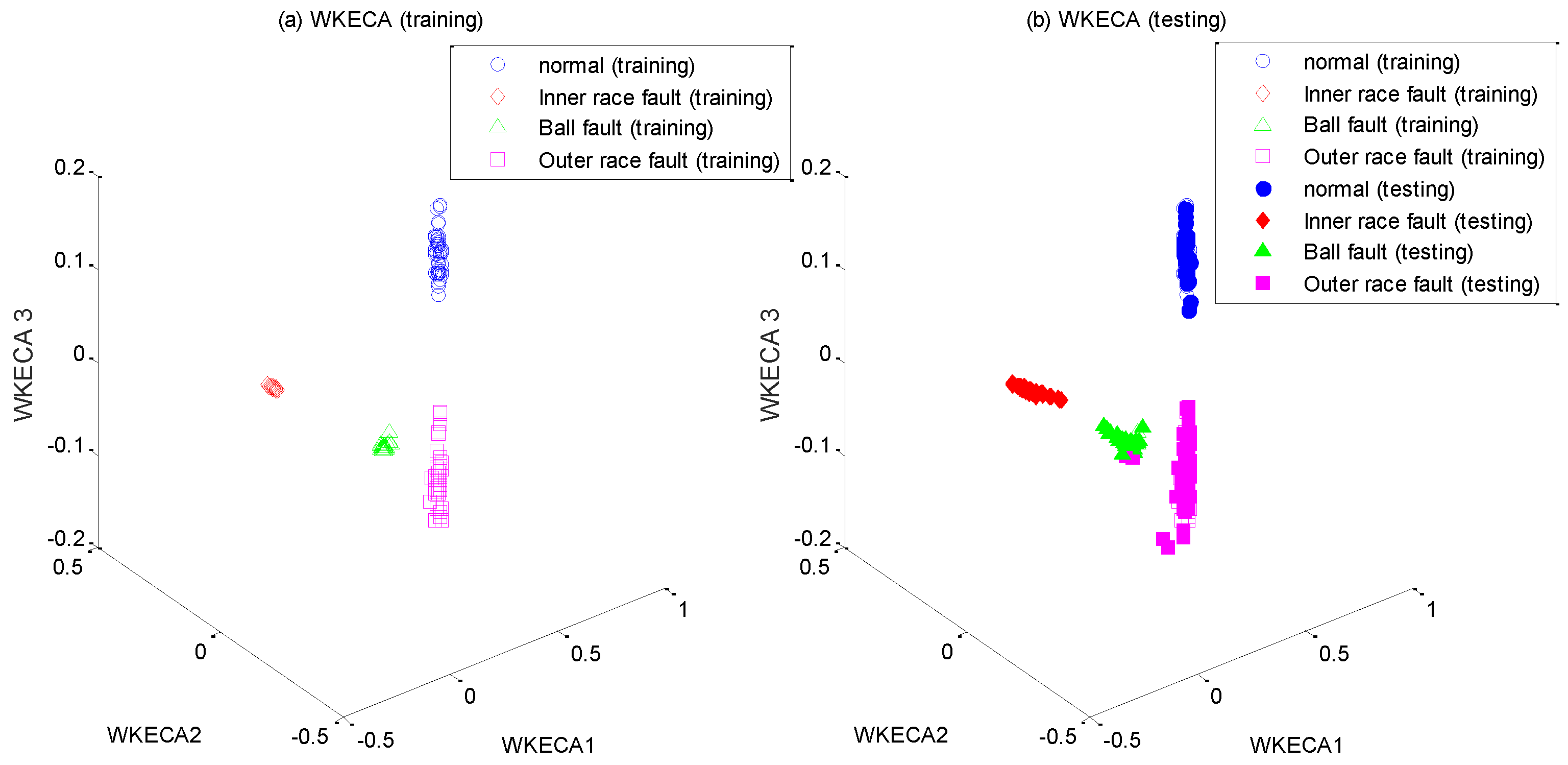

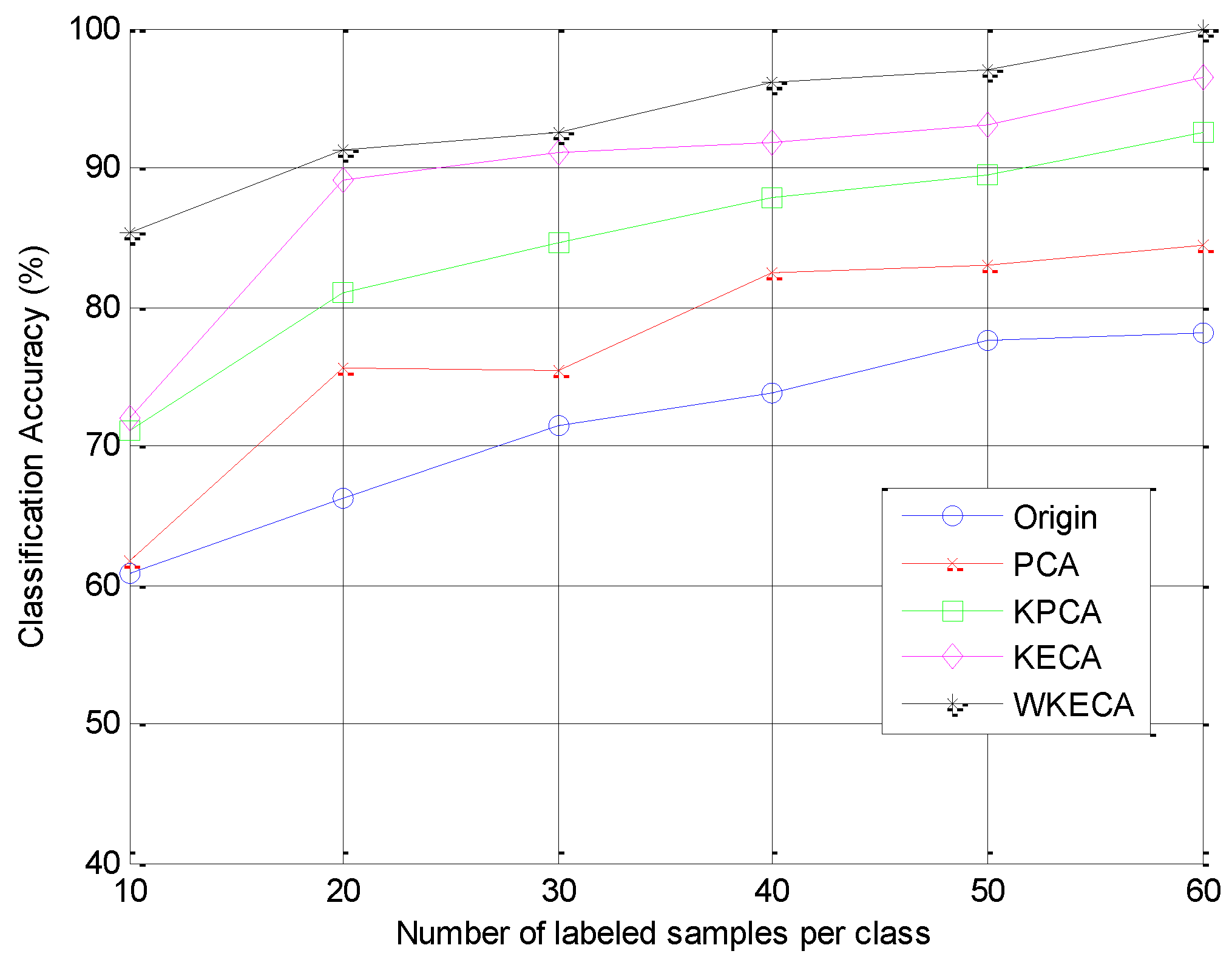

3.2. Dimensionality Reduction and Pattern Classification

3.3. Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Rai, A.; Upadhyay, S.H. A review on signal processing techniques utilized in the fault diagnosis of rolling element bearings. Tribol. Int. 2016, 96, 289–306.

- Smith, W.A.; Randall, R.B. Rolling element bearing diagnostics using the Case Western Reserve University data: A benchmark study. Mech. Syst. Signal Proc. 2015, 64, 100–131.

- Cong, F.; Zhong, W.; Tong, S.; Tang, N.; Chen, J. State Space Formulation of Nonlinear Vibration Responses Collected from a Dynamic Rotor-Bearing System: An Extension of Bearing Diagnostics to Bearing Prognostics. Sensors 2017, 17, 369.

- Henao, H.; Capolino, G.A.; Fernandez-Cabanas, M.; Filippetti, F. Trends in fault diagnosis for electrical machines: a review of diagnostic techniques. IEEE Ind. Electron. Mag. 2014, 8, 31–42.

- Frosini, L.; Harlişca, C.; Szabó, L. Induction machine bearing fault detection by means of statistical processing of the stray flux measurement. IEEE Trans. Ind. Electron. 2015, 62, 1846–1854.

- Li, Y.; Xu, M.; Wang, R.; Huang, W. A fault diagnosis scheme for rolling bearing based on local mean decomposition and improved multiscale fuzzy entropy. J. Sound Vib. 2016, 360, 277–299.

- Yang, Y.; Dong, X.J.; Peng, Z.K.; Zhang, W.M.; Meng, G. Vibration signal analysis using parameterized time–frequency method for features extraction of varying-speed rotary machinery. J. Sound Vib. 2015, 335, 350–366.

- Immovilli, F.; Bellini, A.; Rubini, R.; Tassoni, C. Diagnosis of bearing faults in induction machines by vibration or current signals: a critical comparison. IEEE Trans. Ind. Appl. 2008, 46, 1350–1359.

- Tang, B.; Song, T.; Li, F.; Deng, L. Fault diagnosis for a wind turbine transmission system based on manifold learning and Shannon wavelet support vector machine. Renew. Energy 2014, 62, 1–9.

- Lei, Y.; He, Z.; Zi, Y.; Hu, Q. Fault diagnosis of rotating machinery based on multiple ANFIS combination with GAs. Mech. Syst. Signal Proc. 2007, 21, 2280–2294.

- Yu, J. Machinery fault diagnosis using joint global and local/nonlocal discriminant analysis with selective ensemble learning. J. Sound Vib. 2016, 382, 340–356.

- Li, B.; Zhang, Y. Supervised locally linear embedding projection (SLLEP) for machinery fault diagnosis. Mech. Syst. Signal Proc. 2011, 25, 3125–3134.

- Liao, G.; Liu, S.; Shi, T.; Zhang, G. Gearbox condition monitoring using self-organizing feature maps. Proc. Inst. Mech. Eng. C J. Mech. Eng. Sci. 2004, 218, 119–129.

- Su, L.; Shi, T.; Liu, Z.; Zhou, H.; Du, L.; Liao, G. Nondestructive diagnosis of flip chips based on vibration analysis using PCA-RBF. Mech. Syst. Signal Proc. 2017, 85, 849–856.

- Shao, R.; Hu, W.; Wang, Y.; Qi, X. The fault feature extraction and classification of gear using principal component analysis and kernel principal component analysis based on the wavelet packet transform. Measurement 2014, 54, 118–132.

- Liu, H.; Zhang, J.; Cheng, Y.; Lu, C. Fault diagnosis of gearbox using empirical mode decomposition and multi-fractal detrended cross-correlation analysis. J. Sound Vib. 2016, 385, 350–371.

- Trendafilova, I.; Cartmell, M.P.; Ostachowicz, W. Vibration-based damage detection in an aircraft wing scaled model using principal component analysis and pattern recognition. J. Sound Vib. 2008, 313, 560–566.

- Schölkopf, B.; Smola, A.; Müller, K.R. Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput. 1998, 10, 1299–1319.

- Liu, N.; Wang, H. Weighted principal component extraction with genetic algorithms. Appl. Soft Comput. 2012, 12, 961–974.

- Zhang, Z.H.; Hancock, E.R. Kernel entropy-based unsupervised spectral feature selection. Int. J. Pattern Recognit. Artif. Intell. 2012, 26, 1260002.

- Jenssen, R. Kernel Entropy Component Analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 847–860.

- Shi, J.; Jiang, Q.; Zhang, Q.; Huang, Q.; Li, X. Sparse kernel entropy component analysis for dimensionality reduction of biomedical data. Neurocomputing 2015, 168, 930–940.

- Yang, Y.; Li, X.; Liu, X.; Chen, X. Wavelet kernel entropy component analysis with application to industrial process monitoring. Neurocomputing 2015, 147, 395–402.

- Gómez-Chova, L.; Jenssen, R.; Camps-Valls, G. Kernel entropy component analysis for remote sensing image clustering. IEEE Geosci. Remote Sens. Lett. 2012, 9, 312–316.

- Jenssen, R. Kernel Entropy Component Analysis: New Theory and Semi-Supervised Learning. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing, Beijing, China, 18–21 September 2011; pp. 1–6.

- Fukunaga, K. Introduction to Statistical Pattern Recognition, 2nd ed.; Rheinboldt, W., Ed.; Academic Press: Cambridge, MA, USA, 1990.

- Sierra, A.; Echeverría, A. Evolutionary discriminant analysis. IEEE Trans. Evol. Comput. 2006, 10, 81–92.

- Renyi, A. On measures of entropy and information. Fourth Berkeley Symp. Math. Statist. Prob. 1961, 1, 547–561.

- Jenssen, R. Information Theoretic Learning and Kernel Methods. In Information Theory and Statistical Learning; Emmert-Streib, F., Dehmer, M., Eds.; Springer: New York, NY, USA, 2009; pp. 209–230.

- Gao, L.; Qi, L.; Chen, E.; Guan, L. A fisher discriminant framework based on Kernel Entropy Component Analysis for feature extraction and emotion recognition. In Proceedings of the IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Mitchell, M. An Introduction to Genetic Algorithms; MIT Press: London, UK, 1998.

- Tang, K.S.; Man, K.F.; Kwong, S.; He, Q. Genetic algorithms and their applications. IEEE Signal Process. Mag. 1996, 13, 22–37.

- He, Q.; Kong, F.; Yan, R. Subspace-based gearbox condition monitoring by kernel principal component analysis. Mech. Syst. Signal Proc. 2007, 21, 1755–1772.

- Chen, X.; Liu, D.; Xu, G.; Jiang, K.; Liang, L. Application of wavelet packet entropy flow manifold learning in bearing factory inspection using the ultrasonic technique. Sensors 2015, 15, 341–351.

- Yan, R.; Gao, R.X.; Chen, X. Wavelets for fault diagnosis of rotary machines: A review with applications. Signal Process. 2014, 96, 1–15.

- Lei, Y.; He, Z.; Zi, Y. Application of an intelligent classification method to mechanical fault diagnosis. Expert Syst. Appl. 2009, 36, 9941–9948.

- Hu, Q.; He, Z.; Zhang, Z.; Zi, Y. Fault diagnosis of rotating machinery based on improved wavelet package transform and SVMs ensemble. Mech. Syst. Signal Proc. 2007, 21, 688–705.

- Li, B.; Chen, X. Wavelet-based numerical analysis: A review and classification. Finite Elem. Anal. Des. 2014, 81, 14–31.

- Feng, Y.; Schlindwein, F.S. Normalized wavelet packets quantifiers for condition monitoring. Mech. Syst. Signal Proc. 2009, 23, 712–723.

- Chen, Y.; He, Z.; Yang, S. Research on on-line automatic diagnostic technology for scratch defect of rolling element bearings. Int. J. Precis. Eng. Manuf. 2012, 13, 357–362.

- Zhou, H.; Shi, T.; Liao, G.; Xuan, J.; Su, L.; He, Z.; Lai, W. Using supervised kernel entropy component analysis for fault diagnosis of rolling bearings. J. Vib. Control 2015.

- Tandon, N.; Choudhury, A. A review of vibration and acoustic measurement methods for the detection of defects in rolling element bearings. Tribol. Int. 1999, 32, 469–480.

- Jiang, L.; Shi, T.; Xuan, J. Fault diagnosis of rolling bearings based on marginal fisher analysis. J. Vib. Control 2014, 20, 470–480.

| Method | Normal (%) | Inner Race Fault (%) | Outer Race Fault (%) | Ball Fault (%) | Average Accuracy (%) |
|---|---|---|---|---|---|
| Original | 68 | 86 | 76 | 80 | 77.5 |
| PCA | 72 | 90 | 88 | 82 | 83 |
| KPCA | 92 | 92 | 84 | 90 | 89.5 |
| KECA | 96 | 98 | 82 | 96 | 93 |
| WKECA | 100 | 100 | 92 | 96 | 97 |
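The Average Accuracy column in the table above matches the unweighted mean of the four per-class accuracies, as the short check below shows. The mean equals the overall accuracy only if each operating condition has the same number of testing samples, which is an assumption here.

```python
# Per-class accuracies (%) copied from the table: normal, inner race, outer race, ball
per_class = {
    "Original": [68, 86, 76, 80],
    "PCA": [72, 90, 88, 82],
    "KPCA": [92, 92, 84, 90],
    "KECA": [96, 98, 82, 96],
    "WKECA": [100, 100, 92, 96],
}

# Unweighted mean over the four operating conditions
averages = {method: sum(accs) / len(accs) for method, accs in per_class.items()}

for method, avg in averages.items():
    print(f"{method}: {avg}")
```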

| Performance | k = 0.001 | k = 0.01 | k = 0.1 | k = 1 |
|---|---|---|---|---|
| f(X) | 0.9702 | 0.9939 | 1.0236 | 1.2328 |
| RBW | 1.4506 | 1.4875 | 1.7913 | 2.0828 |
| CA (test) | 0.97 | 0.965 | 0.935 | 0.905 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, H.; Shi, T.; Liao, G.; Xuan, J.; Duan, J.; Su, L.; He, Z.; Lai, W. Weighted Kernel Entropy Component Analysis for Fault Diagnosis of Rolling Bearings. Sensors 2017, 17, 625. https://doi.org/10.3390/s17030625


Chicago/Turabian Style: Zhou, Hongdi, Tielin Shi, Guanglan Liao, Jianping Xuan, Jie Duan, Lei Su, Zhenzhi He, and Wuxing Lai. 2017. "Weighted Kernel Entropy Component Analysis for Fault Diagnosis of Rolling Bearings" Sensors 17, no. 3: 625. https://doi.org/10.3390/s17030625