1. Introduction

Scientific models and theories that explain the behaviour of specular reflections are well established. However, the automatic processing, by means of artificial vision techniques, of scenes containing specular surfaces poses problems that remain unsolved. Vision systems designed to deal with specular objects must cope with the optical difficulties associated with this type of material [1]. These surfaces have a high reflection coefficient, which causes undesired reflections and shine that, in some cases, conceal chromatic, morphological and topographical information about the object.

Most artificial vision techniques ignore specular reflections and focus on the diffuse component of the interaction of light with objects. Thus, these techniques, which are conceived to deal with Lambertian surfaces, produce wrong results when other surfaces are in the visual scene [2,3]. The solutions put forward in the literature approach the problem in two opposite ways: developing methods to detect specularities in images, so that existing artificial vision techniques can still be applied; and designing new vision techniques that explicitly deal with these surfaces.

The methods designed for detecting specularities in the scene differ depending on the vision level at which they are approached. They take advantage of certain phenomena and characteristics of light to separate the contributions of diffuse and specular reflection: spectral distribution of reflections [4,5,6,7], polarization [8,9,10,11], analysis of the behaviour of specularities in several images [12,13,14,15,16], and combinations of them [17,18,19]. They are used either to avoid or to remove specularities in the original image. The solutions in the latter case belong to the low level of vision systems, because reflections and shine are considered as noise to be removed. They offer a filtered image to be processed at higher levels.

Designing new vision techniques allows specular reflections to be treated as a peculiar characteristic of the surface, which improves the performance of the vision process [20,21,22,23,24,25,26]. These techniques use mechanisms of active vision that, in some cases, are modifications or refinements of classic techniques. They are generally focused on extracting the object shape [27,28,29,30,31,32,33,34,35,36,37,38].

The techniques developed to deal with specular surfaces, either for detecting specularities or for extracting the object shape, must fulfill certain requirements, making them only viable in specific applications: for example, requirements related to specific electromagnetic characteristics of objects (i.e., specific methods for metallic or dielectric objects), having previous knowledge of the geometry of the scene or of the surface reflectance, etc.

Specifically, automated visual inspection is the most widely used tool for testing products in industry due to its ease of use and its cost [39,40,41,42]. However, very few vision systems deal with the surface inspection of specular products. They share the aforementioned drawback of generic vision systems for specular objects: they are very specific, as they assume particular constraints. The lighting of the scene and the acquisition equipment are determining factors in the proposed solutions, since they help the detection of defects in images [43,44,45,46,47].

Techniques that use structured lighting appear in few systems cited in the literature. They are considered to be the most reliable and suitable for inspecting the 3D shape of products [36,48,49,50]. Moreover, they have an advantage over other techniques, including laser, time of flight or LIDAR, because the same sensor is used to determine colour information instead of acquiring colour and shape information independently. Techniques differ in three respects: the way they acquire the lighting pattern, either by projecting it onto the surface [51,52,53] or by focusing the system on its reflection [36,48,54,55] (treating the object as part of the optical system onto which lighting patterns are projected); the equipment used to generate patterns [56,57,58] (screens, projectors, etc.); and the method used to codify patterns [59,60,61,62,63].

Proposed solutions try to satisfy specific requirements by adapting to the application domain and to specific constraints of the products. A system designed for the quality control of one product generally cannot be applied to another product. Consequently, the contribution of this paper is an active vision model able to explain the problem of inspecting specular surfaces and to help in designing vision systems for this purpose. Moreover, the paper proposes a method based on the model that is able to minimize the negative effects of specular surfaces in visual inspection and to take advantage of specular reflections as a peculiar characteristic. The method is focused on controlling the acquisition conditions (e.g., lighting angles, viewpoints, chromaticity and other lighting characteristics) to maximize the likelihood of detecting defects. Particular characteristics of the inspection problem make control of the acquisition conditions possible.

In order to validate the model, simulation is proposed as an inspection system design methodology that can be applied systematically, studying the conditions under which the inspection has to be carried out and designing solutions in a flexible way. Virtual inspection makes use of virtual manufacturing technology to model and simulate the inspection process, and the physical and mechanical properties of the inspection equipment, to provide an environment for studying inspection methodologies [64]. Simulation based on virtual imaging enables the rendering of realistic images, providing a very efficient way to perform tests compared with numerous manual experiments [65]. The introduction of simulation provides a flexible and low-cost method (compared with experimentation in the laboratory) of testing original hypotheses and the benefits that can be drawn from this research.

The rest of this paper is organized as follows. Section 2 describes the active vision model. The method based on the model for inspecting specular surfaces is developed in Section 3. Section 5 and Section 6 evaluate the proposed method by controlling image acquisition conditions. Finally, Section 7 concludes the paper.

2. Active Vision Model to Deal with Specular Surfaces

We are interested in modeling the automatic process of artificial vision in order to provide solutions to the problem of inspecting specular surfaces. First of all, a model describing the image formation and the variables that take part in this process is presented.

An image I is defined as a two-dimensional representation provided by F. Let F be a function that models a visual acquisition system, VAS. It includes all the equipment and scene configuration needed to capture an image: lighting, positions, viewpoints, cameras, etc. The input of F is a vector made up of the scene magnitudes that contribute to the formation of I (Equation (1)). Each such vector is an element of a representation space P related to optical magnitudes of the visual perception phenomenon.

The components of the vector of scene magnitudes are measurable physical values involved in the process of image formation. In practice, they could be scale, viewpoint, light intensity, frequency, saturation, etc. Each component can be modeled as a function of three inputs (Equation (2)): the subject of interest, m, in the scene (e.g., the object to be inspected); the environment, e, in which the subject is placed; and the camera, c, that captures images of the scene.

The contribution of each element (m, e and c) can be expressed as three vectors made up of magnitudes: one related to the object (Equation (3)), one related to the environment (Equation (4)) and one related to the camera (Equation (5)). The intensity and wavelength of the light sources, the medium of transmission and the relative position between scene and vision device are examples of environment variables. Regarding the camera contribution, the variables are related to the sensor characteristics and to the optical and electronic elements: zoom, focus, diaphragm, size of the sensor, signal converters, etc. Finally, the reflectance, colour, shape and topography of the object are examples of object variables. The values of each vector establish elements of the set M for the object, the set E for the environment and a corresponding set for the camera. An outline of the magnitudes can be found in Diagram 1.

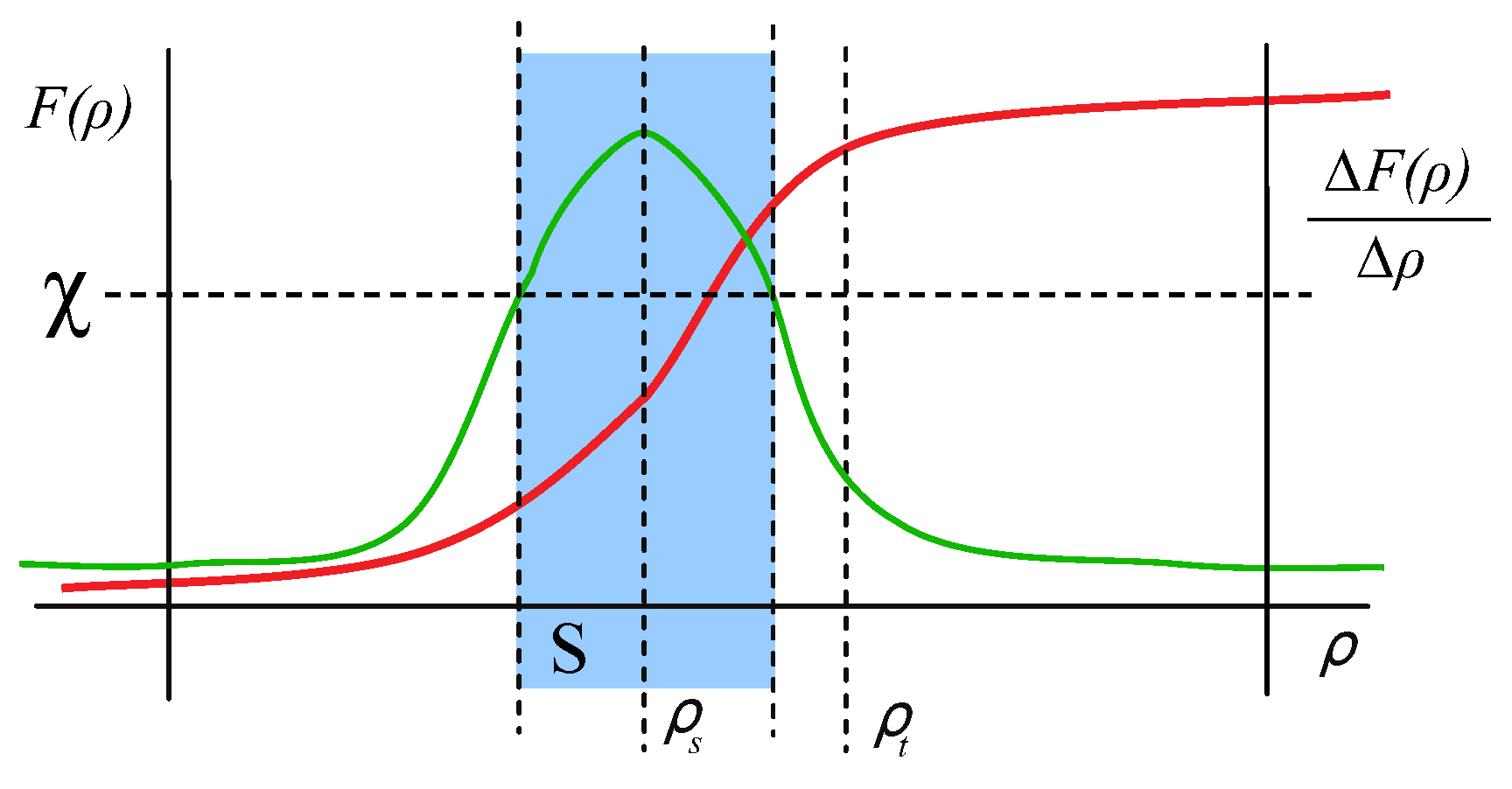

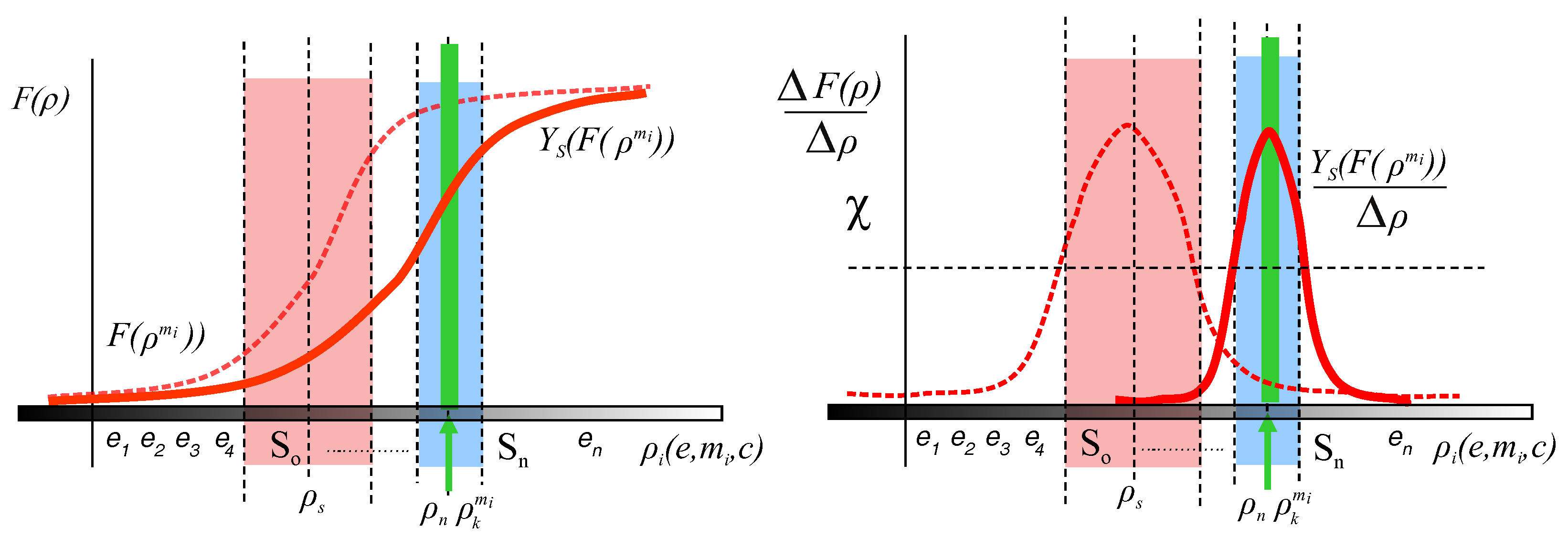

To define our model, it is worth recalling sensitivity as an important static characteristic of a sensor. The sensitivity is the slope of the calibration curve (see Figure 1). First, we define the calibration curve as the function that maps a physical scene magnitude to its representation in the image space. Depending on the camera parameters, the calibration curve will be a different function for different scene magnitudes. For instance, a camera with a large depth of field will have a smoother calibration curve for intensity measurement (the camera will be able to distinguish between values along a larger set of scene magnitudes for intensity) than a camera with a short depth of field, which will have a very abrupt calibration curve for the same scene magnitude (only a small set of intensity values will be distinguishable). With this function, sensitivity indicates the detectable output change for the minimum change of the measured magnitude. As a naive example, the detectable output could be the ability to perceive two colours that are actually different in the real scene: if the colour change is too small, the sensor returns the same intensity value for both. In our case, sensitivity is the detectable output change in F for the minimum change of the scene magnitudes:

Diagram 1. Levels of magnitudes involved in the formation of the image: the image function, as the most abstract level, at the top; then each scene magnitude; the three elements that compose each scene magnitude; and the individual measurements of each element at the bottom of the tree.


Usually, the camera parameters are calibrated to a set of values so that the sensitivity is optimized for all scene magnitudes simultaneously with respect to a single metric (it is not necessarily maximized for each individual variable). For example, a camera could be calibrated using a specific zoom, focus and sensor size that optimize the sensitivity of the system to perceive the colour of a subject over a wide range of object distances and viewpoints, while the system is not optimized separately for distances or viewpoints. However, a given camera c has maximum sensitivity for one value of each scene magnitude. For convenience, we define the tuning point as the corresponding point in the scene magnitudes space P, for each camera of the camera set, at which the sensitivity of the VAS is optimal (see Figure 1). In general, the sensitivity decreases for values that differ from the tuning point.
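The relation between calibration curve, sensitivity and tuning point can be illustrated with a minimal sketch. It assumes a single scalar scene magnitude normalised to [0, 1] and a logistic calibration curve; the curve shape, the function names and the parameters `midpoint` and `steepness` are hypothetical choices made for illustration and do not come from the model itself.

```python
import math


def calibration_curve(x, midpoint=0.5, steepness=10.0):
    """Hypothetical sensor response (assumption): a logistic curve mapping a
    scene magnitude x in [0, 1] to a normalised output in [0, 1]."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))


def sensitivity(x, h=1e-4, **curve_params):
    """Sensitivity as the slope of the calibration curve, estimated with a
    central finite difference."""
    upper = calibration_curve(x + h, **curve_params)
    lower = calibration_curve(x - h, **curve_params)
    return (upper - lower) / (2.0 * h)


def tuning_point(samples, **curve_params):
    """Sampled magnitude value at which the sensitivity is highest."""
    return max(samples, key=lambda x: sensitivity(x, **curve_params))


samples = [i / 100 for i in range(101)]
tp = tuning_point(samples)
print("tuning point:", tp)
print("sensitivity at tuning point:", sensitivity(tp))
print("sensitivity near the range limit:", sensitivity(0.05))
```

Under these assumptions the sensitivity peaks at the midpoint of the curve and falls off towards both ends of the range, which is the behaviour the tuning point is meant to capture.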

In the same way, since the VAS depends on the contribution of the environment e, for each value of the set E the corresponding point in the scene magnitudes will be called the working point. The detected output at this point is related to the sensitivity curve of the VAS for each of the magnitudes, because it restricts the limits within which the system can work. In average conditions, or in simple approaches to vision problems, its effect is usually considered negligible because perception takes place close to the tuning point. This simplification is unacceptable in adverse conditions such as dark environments, objects far from the camera, or the presence of specular objects that limit the capability of the VAS to perceive the scene.

Acquisition of the scene and representation on a plane carried out by

F Equation (

1) cause situations, related to vision in adverse conditions, where it is not possible to distinguish between the different scene magnitude vectors

that contribute to an image. The capacity to discern elements of

P is related to the measurement in the image

I of the magnitudes

m,

e, and/or

c, that contribute to each of the components

. A specific application could be interested in knowing the intensity lighting of a scene from the environment

e, or the focal length from the camera

c, for calibration purposes. However, generally, the contribution of the object

m to the image, and, therefore, its magnitudes

, is the aim of the measurement. For example, as we can see in

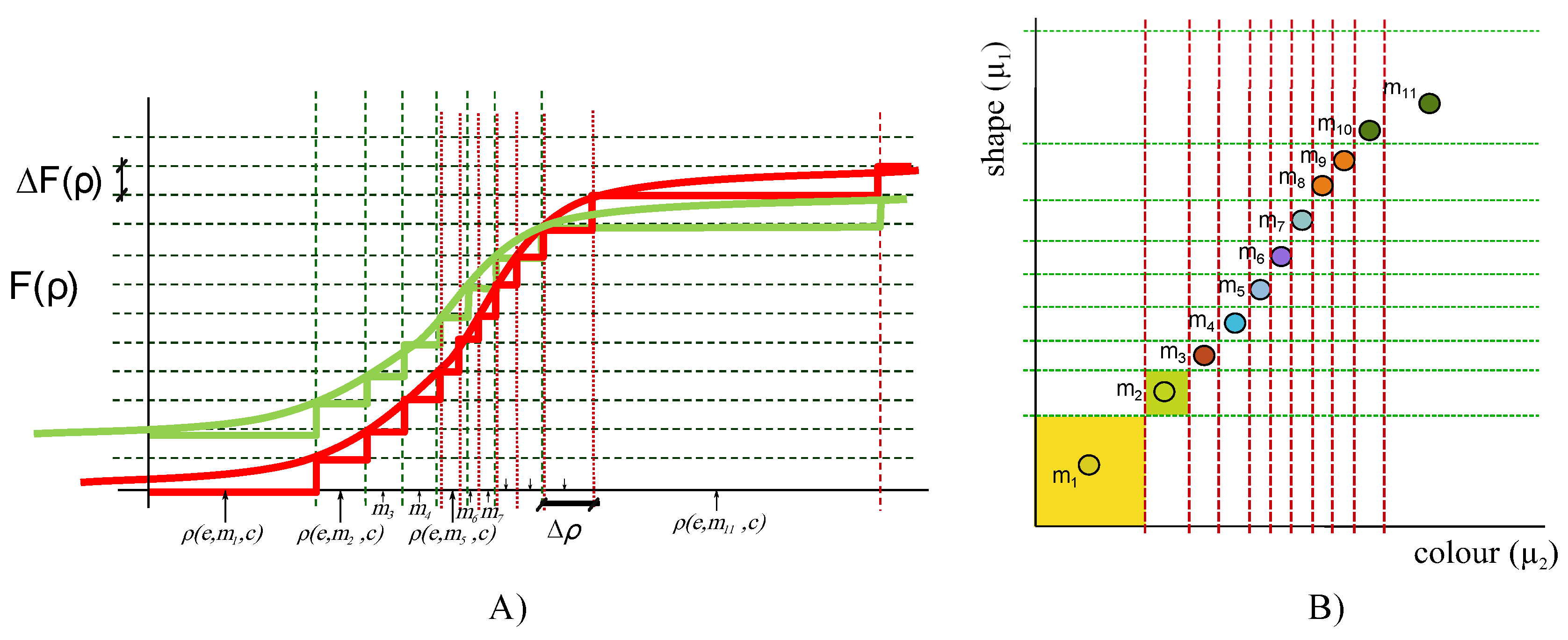

Figure 2, a

VAS used to perceive color (

) of objects is able to distinguish the different objects in the scene. However, if it is used to perceive shape (

) using the same camera (including all variables

), neither objects

and

nor

and

could be distinguished each other (as we mentioned above, a given camera has maximum sensitivity for a specific value of each

). Hence, capacity to discern magnitudes that contribute to the vector

can be delimited to distinguish elements of set

M in the image (e.g., the colour of a region of an object, or the shape of a surface, or both, etc.). It is important to understand that the scene magnitudes are continuous, not discrete. The representation in the

Figure 2 shows a set of magnitudes that are distinguishable in the space of the image

, but it is an example of the certain magnitudes in the continuous space of magnitudes

P.

An object is distinguishable from another one in the VAS when they are distinguishable in the measurement performed by F (i.e., on the vertical axis of Figure 1 and Figure 2A). Given the minimum difference perceptible by the system (sensitivity), the set of objects that can be distinguished from a specific object could be established as:

Figure 2B, shows the measurement performed in

F analysed from the point of view of the object space

M considering just colour and shape. Objects close to

, in the yellow area, are not distinguishable, for that object in this example. However, they are distinguishable for the object

(light green area). Following this example,

but

, if the measurement performed in

F takes into account both colour and shape.
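The idea of a set of distinguishable objects can be sketched as follows. The sketch assumes that the measurement of each object reduces to a (colour, shape) pair of normalised values and that two objects are distinguishable when at least one measured component differs by more than the minimum perceptible difference; the function names, the per-component criterion and the sample values are hypothetical.

```python
def measure(obj):
    """Stand-in for the measurement performed by F (assumption): objects are
    already given as (colour, shape) tuples of normalised values."""
    return obj


def distinguishable(obj_a, obj_b, delta):
    """True when any measured component differs by more than delta, the
    minimum difference perceptible by the system."""
    pairs = zip(measure(obj_a), measure(obj_b))
    return any(abs(a - b) > delta for a, b in pairs)


def distinguishable_set(reference, objects, delta):
    """Names of the objects that can be told apart from the reference."""
    return [name for name, obj in objects.items()
            if distinguishable(reference, obj, delta)]


objects = {
    "m1": (0.20, 0.50),  # reference object
    "m2": (0.25, 0.52),  # close to m1 in both colour and shape
    "m3": (0.60, 0.50),  # same shape as m1, clearly different colour
}
print(distinguishable_set(objects["m1"], objects, delta=0.1))
```

With these sample values only the third object falls outside the neighbourhood of the reference; measuring a single component instead of both would shrink or enlarge that set, which is the effect Figure 2B illustrates.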

A VAS has to deal with different situations that are a consequence of the subsets of P delimited by the problem to be solved (e.g., delimited by the objects or by their characteristics to be analysed, by the environments, or by the cameras). A minimum value of sensitivity can be established at which any object is distinguishable from any other considered in the acquisition (see Figure 1). The values of the vector of magnitudes at which the sensitivity is higher than this threshold make up the subset S of P defined by Equation (8).

There are no perception difficulties for situations of the subset S. They involve values of environment and camera, in addition to characteristics of objects, that make up magnitudes of the set S (Equation (8)). In other words, it is possible to distinguish the images of two different objects (Equation (9)) from the acquisition performed by F if the VAS is working on points of S (each scene magnitude vector in Equation (9) is the one whose component m is the corresponding object).
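A minimal sketch of how the subset S could be obtained from a sensitivity threshold is given below. It assumes a scalar scene magnitude sampled on a regular grid and the analytic slope of a logistic calibration curve; the curve, the threshold value and the function names are illustrative assumptions rather than part of the model.

```python
import math


def sensitivity(x, midpoint=0.5, steepness=10.0):
    """Analytic slope of a hypothetical logistic calibration curve
    (assumption): steepness * y * (1 - y)."""
    y = 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))
    return steepness * y * (1.0 - y)


def subset_s(points, threshold):
    """Sampled scene magnitudes whose sensitivity exceeds the threshold."""
    return [x for x in points if sensitivity(x) > threshold]


def in_subset_s(working_point, threshold):
    """True when the working point lies where objects can be told apart."""
    return sensitivity(working_point) > threshold


points = [i / 20 for i in range(21)]
S = subset_s(points, threshold=1.0)
print("S spans", min(S), "to", max(S))
print("working point 0.95 in S:", in_subset_s(0.95, threshold=1.0))
```

Working points near the ends of the range, such as the one produced by a saturating specular highlight, fall in the complement of S under these assumptions.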

Vision systems working on scene magnitudes of the complement of S present the aforementioned adverse conditions for distinguishing characteristics of objects from images. Hence, solutions have to be provided to obtain distinct images from different objects that the camera perceives as the same one. These solutions should be able to compensate for the low sensitivity in the complement of S (sensitivity below the threshold in Figure 1). Among the three variables that provide values to the scene magnitudes, the object is a constant because it is the subject of interest. However, the environment conditions and the camera characteristics can be modified to set up scene magnitudes of the subset S. Thus, the VAS will be able to obtain different images from different objects in the scene in order to distinguish them (Equation (9)). For this purpose, we propose two complementary alternatives (Diagram 2):

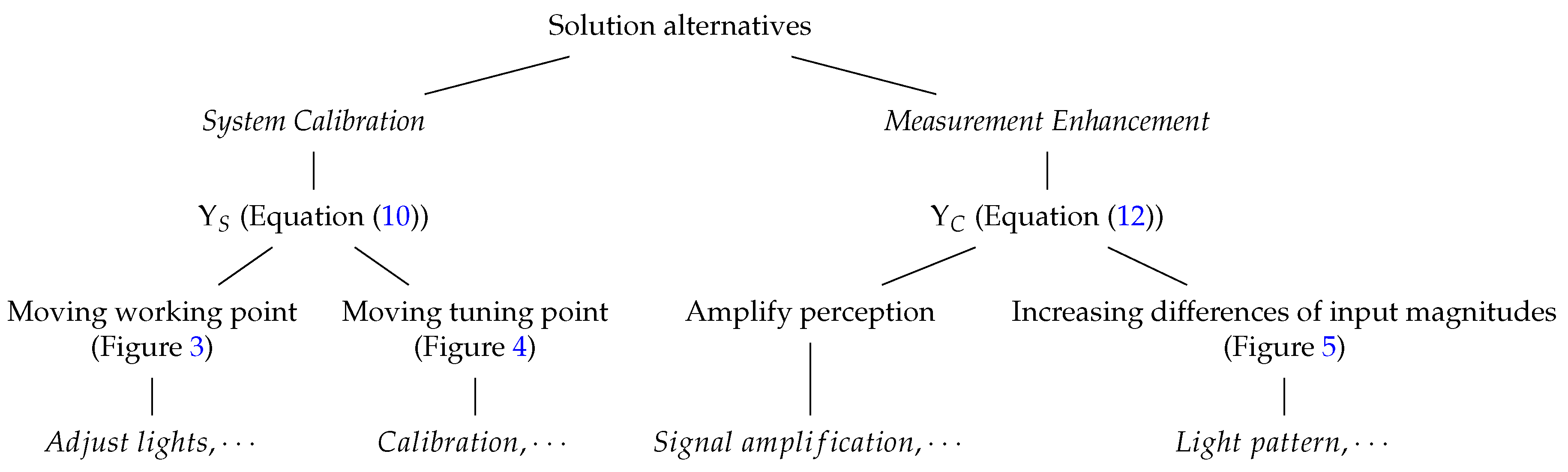

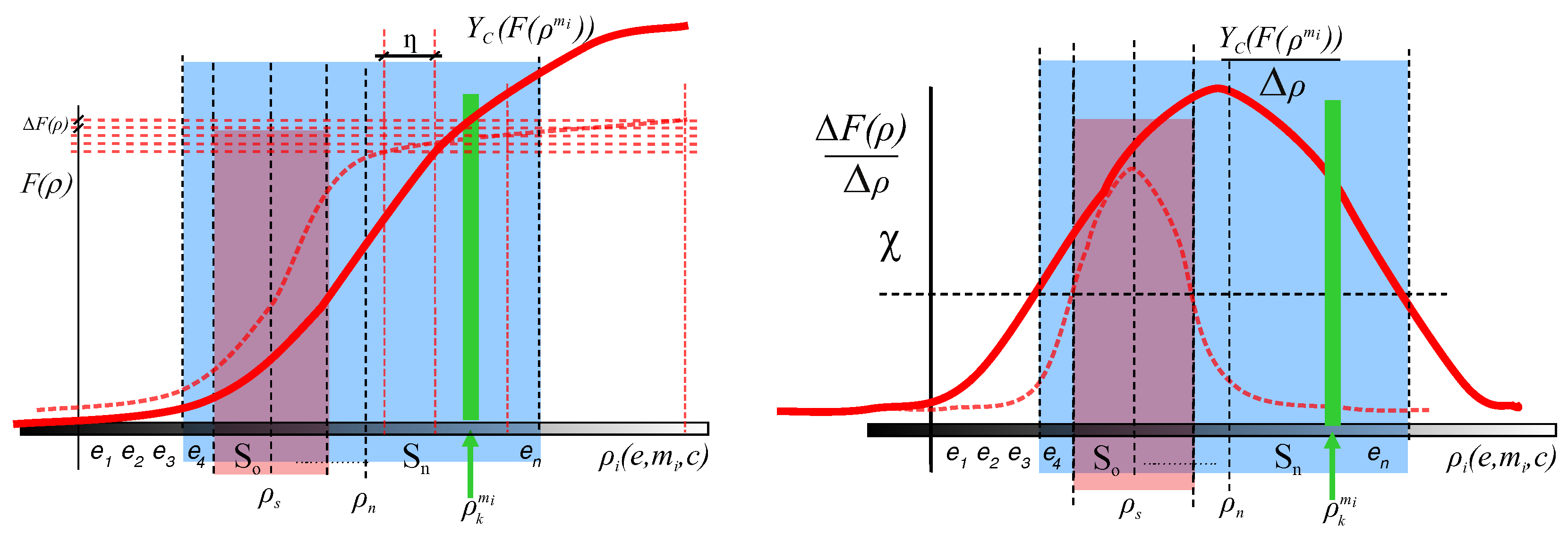

System Calibration (calibrating the system). This alternative tries to minimize the distance between the working point and the tuning point.

Measurement Enhancement (conditioning the measurement). This alternative enhances the target measurements or parameters; it can be considered as conditioning the measurement performed by the VAS.

System Calibration consists of shifting one of the points so that the working point becomes an element of the set S. The goal could be to generate a new image of the object by means of a transformation (Equation (10)) in which the working point is close enough to the tuning point. Calibrating the system should not be confused with calibrating the camera: the alternative presented here could involve calibrating the sensor as well as changing other parameters of the environment or of the object of interest. Hence, to carry out this alternative it is necessary to adjust the environment to shift the working point, for example by moving the object closer to the camera or adjusting the lighting conditions (Figure 3 shows an outline of this process). On the other hand, in order to shift the tuning point (see Figure 4), the camera could be recalibrated or new acquisition equipment could be used (traditionally this is done by replacing the camera with a more suitable one). In this case, the subset S of P changes for the new camera.

Diagram 2.

Diagram with the different alternatives to improve the system perception.


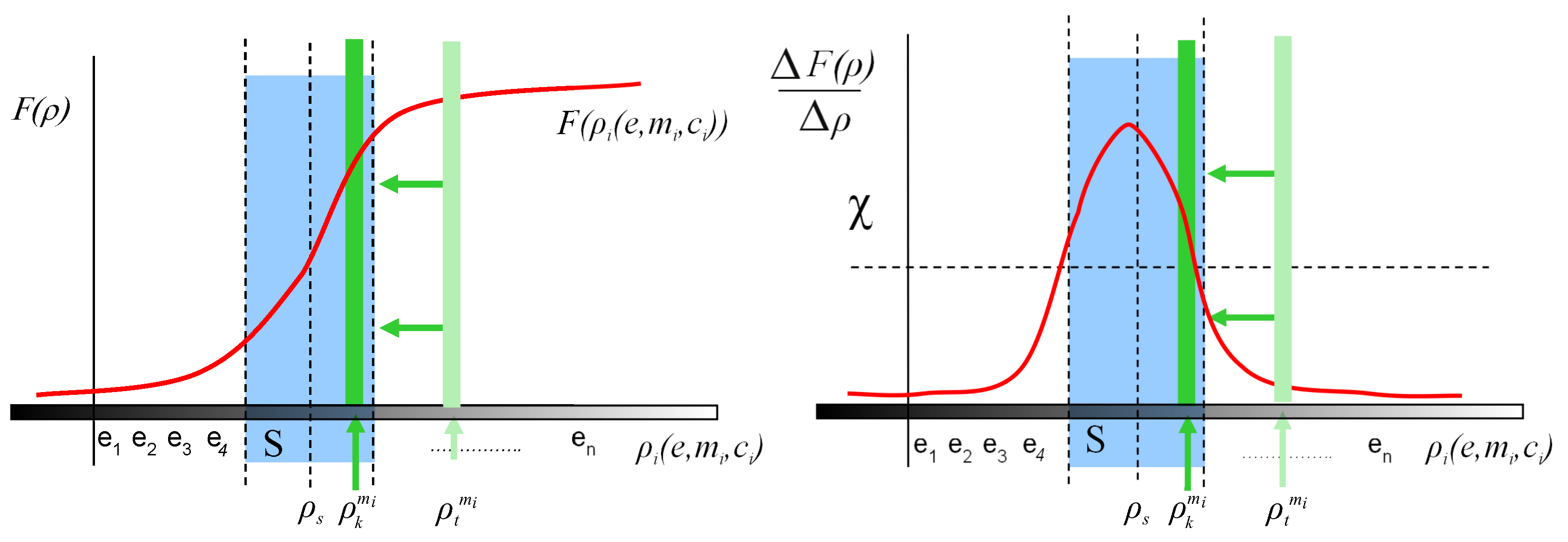

The second alternative, Measurement Enhancement, aims to directly influence the sensitivity curve of the acquisition system. In a nutshell, it tries to highlight or enhance the parameters that are to be perceived. It can be carried out in two ways. First, the output signal of the perception system can be amplified, which is the classic conception of measurement system amplification at the signal conditioning step. The limitations of this technique arise when the amplitude of the signal is increased in ranges of minimal sensitivity (at both the minimum and the maximum of the sensor range, where the contribution of the object to the output signal is insignificant and the signal-to-noise ratio is very low). Consequently, the improvements to the acquisition system are limited, because amplification is only effective in ranges of intermediate sensitivity.

The other way of enhancing the target measurement is to increase the differences between the values of the input magnitudes. That is, the system operates with large differences at the input in order to increase the differences of the output F for different objects until they are perceptible at the output. Figure 5 schematically shows this concept. The elements of the set P used as input magnitudes to obtain large input differences make up the subset A in Equation (11). The goal is to reduce the number of possibilities that the VAS has to deal with, for example by restricting camera positions, viewpoints or lighting characteristics (in this paper, a lighting pattern has been used to inspect specular surfaces).

We model the transformation able to increase the differences of the values of the input magnitudes as

:

The two techniques are not mutually exclusive and can be used together to design vision systems in which images of different objects can be distinguished. An example could be a system that needs to perceive two colliding objects separately through a sensor with a fisheye lens. First, the System Calibration transformation could be applied by calibrating the camera to reduce the distortion. Afterwards, the Measurement Enhancement transformation could consist of colouring the objects to enlarge the perceived difference between them.

3. Method for Inspecting Specular Surfaces

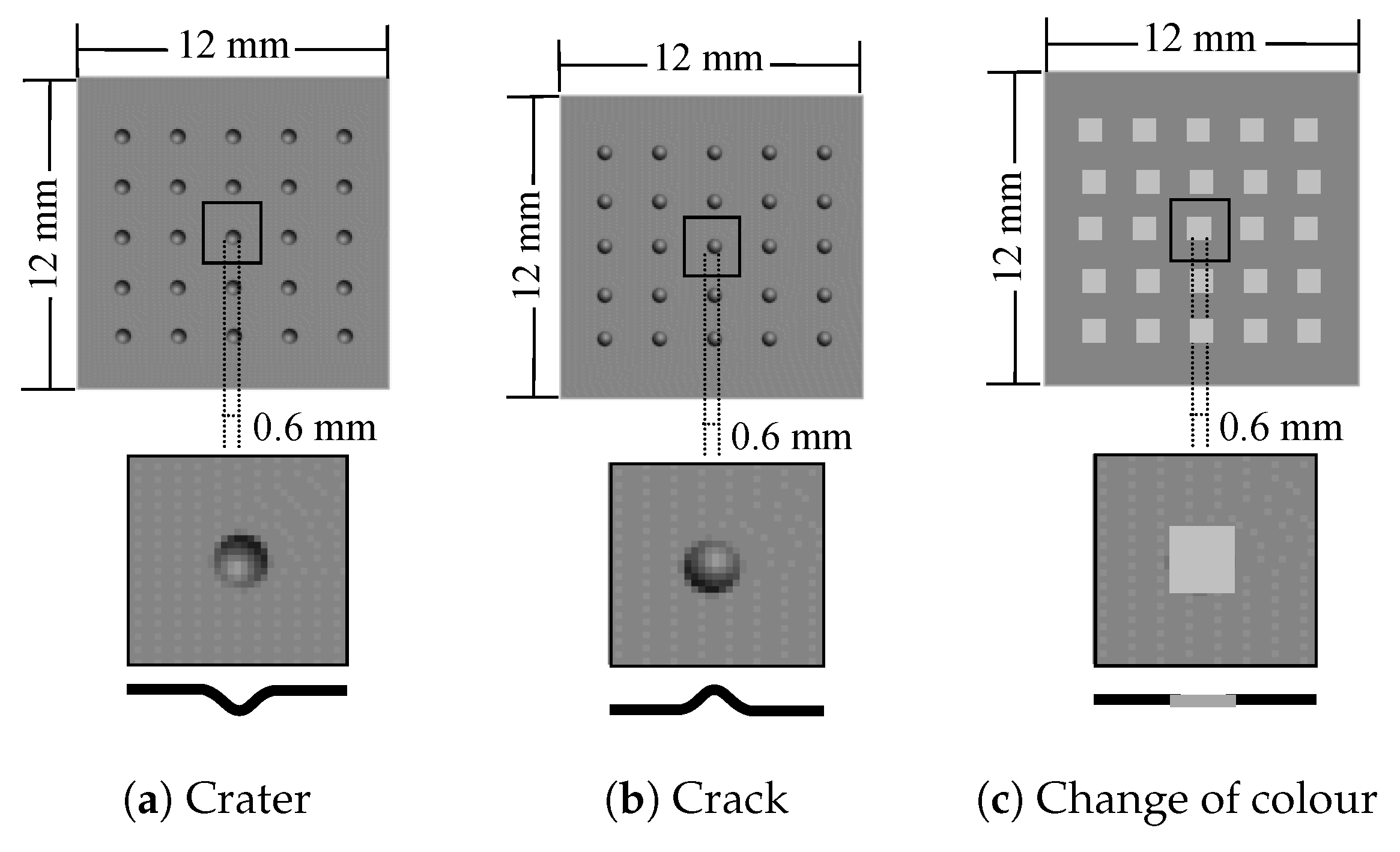

In this section, we use the previous model to specify a method for inspecting specular surfaces. The objective of an automated visual inspection system, AVI, is to determine whether a product differs from the manufacturer's specifications. This implies that the AVI has to measure the object magnitudes in the scene in order to compare them with the values of the magnitudes established in the design step of the product (e.g., reflectance, colour, shape, topography).

Two sets of objects are considered for modelling the AVI. The first one is composed of objects whose magnitudes are those defined in the manufacturing specifications. The second one is composed of the objects to be inspected in search of any deviation from the objects of the first set. These deviations cause, depending on the magnitude, different defects: morphological, chromatic, topographic, etc. The union of both sets is the subset (Equation (3)) of the possible objects that the inspection system must consider.

The inspection goal could be modelled as in Equation (13). The AVI has to decide whether an object of the set of inspected objects can be distinguished (Equation (9)) from an object of the set of specified objects if any of its magnitudes differs by some value from the original. The latter object is considered the object model of the former and contains the manufacturing specifications.

In order for the deviations of the object magnitudes from the specification to be detected in the image (Equation (13)), the contributions of the environment and the camera to the scene magnitudes must provide the AVI with a suitable sensitivity.

Since, as shown previously, the sensitivity of the VAS (the AVI in this specific case) is optimized simultaneously for all variables, the AVI sensitivity is not necessarily maximized for each individual variable. In particular, it is not maximized for each object m and each of its variables. Moreover, an AVI is generally designed to measure a subset of the object magnitudes in the image (e.g., colour, shape). Therefore, the sensitivity of the AVI can be very low for some of the magnitudes to be measured. That is, the perception may be suitable for measuring the surface colour or the shape, but not both together. In other words, the intended measurement determines the perception capacity of the AVI. Calibration parameters or environment conditions must be adjusted to perceive the magnitudes of interest adequately. This process requires great knowledge of the problem and an accurate solution.

For specular surfaces, the difficulty of perceiving the scene magnitudes is a consequence of the surface reflectance. The environment conditions have a greater effect on the perception of the object than for other types of surfaces (e.g., Lambertian surfaces). For example, if the calibration parameters are the same for two different images, the difficulty of perceiving under different environment conditions arises from:

The mirror itself: a given camera can be confused so that it cannot distinguish between the environment and the object. The spatial modulation of the environment contribution creates the illusion of objects in the scene.

The lighting of the environment, which can cause shine on the surface and make two images of different objects indistinguishable. For example, the image formed by a grey surface with a high reflection coefficient illuminated by white lights can be confused with the image of a lighter surface.

The specular reflection, which easily places the working point at the limit of the range of the VAS, at the maximum value that can be measured. Sensitivity is very low for the perception of objects in these conditions because specularity saturates the camera sensor.
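The saturation effect described in the last item can be illustrated with a small sketch. It assumes a linear 8-bit sensor that clips at full scale and models the radiance reaching the sensor as the product of a scalar reflectance and a scalar irradiance; both simplifications, as well as the function names and sample values, are assumptions made only for this illustration.

```python
def radiance(reflectance, irradiance):
    """Simplified radiance (assumption): scalar reflectance times irradiance."""
    return reflectance * irradiance


def sensor_response(value, full_scale=1.0, levels=256):
    """Hypothetical linear sensor that clips at full_scale and quantises the
    result to the given number of grey levels."""
    clipped = min(max(value, 0.0), full_scale)
    return round(clipped / full_scale * (levels - 1))


grey_surface, lighter_surface = 0.6, 0.9  # illustrative reflectances

for irradiance in (0.5, 2.0):
    out_grey = sensor_response(radiance(grey_surface, irradiance))
    out_light = sensor_response(radiance(lighter_surface, irradiance))
    print(irradiance, out_grey, out_light, out_grey != out_light)
```

Under moderate irradiance the two surfaces produce different grey levels, whereas under strong irradiance both responses are clipped to the maximum level and the grey surface can no longer be told apart from the lighter one.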

The viable solution for compensating for the lack of sensitivity in these ranges of the scene magnitudes (in order to obtain an improved image) is related to the Measurement Enhancement performed on the perception system (see Section 2). It is necessary to increase the differences in the values of the input magnitudes to raise the differences of the output magnitude until they can be measured (Equation (12)).

In other words, in terms of resolution (another important sensor characteristic, which indicates the smallest change in the measured magnitude that the sensor can detect, e.g., the smallest feature size of an object or the smallest change in colour that the VAS can distinguish): since the resolution in those ranges is very low, it is necessary to force large differences at the input. Thus, it is necessary to work with a subset of scene magnitudes so that perception is suitable at the points around the tuning point and is facilitated in the ranges of sensor saturation. Differences in F will enable the perception among objects using the set in Equation (14). Its elements do not necessarily correspond to those of the subset S (Equation (8)).

In addition, if the Measurement Enhancement is not sufficient to discern the defects in the inspection, the distance between the working point and the tuning point must be minimized using Equation (10). In this case, minimization is performed on the input magnitudes of the set A. Therefore, the System Calibration transformation operates on the values of the input magnitudes of Equation (14). Consequently, Equation (15) models the proposed solution for inspecting specular surfaces, combining the two proposed transformations according to the objects to be inspected and the possible defects to be detected (see Figure 6).

4. AVI Method Controlling Environmental Parameters

As previously shown, increasing the differences of the input magnitude values can be achieved by controlling the environmental conditions or the camera parameters. These are two of the input parameters of the components (Equation (2)) that contribute to the formation of the image I (Equation (1)), and they are variables that the system can act upon. The third input parameter, the object, is considered a constant because it is the object to be inspected.

It is known that the camera, as a photoelectric transducer, provides a measurement related to the scene radiance (see Figure 7), which is a function of environment and object magnitudes. The contribution of interest is the radiance coming from the inspected object. This radiance, L, is related to the object reflectance [66] and to the irradiance E incident on the surface according to Equation (16).

Reflectance contributes the necessary information about the behaviour of light interacting with the surface of the object. Irradiance, the second factor of Equation (16), is related to the environment variables or, to be precise, to the electromagnetic radiation that reaches the surface of the object. These magnitudes affect the contribution of the object in the camera.

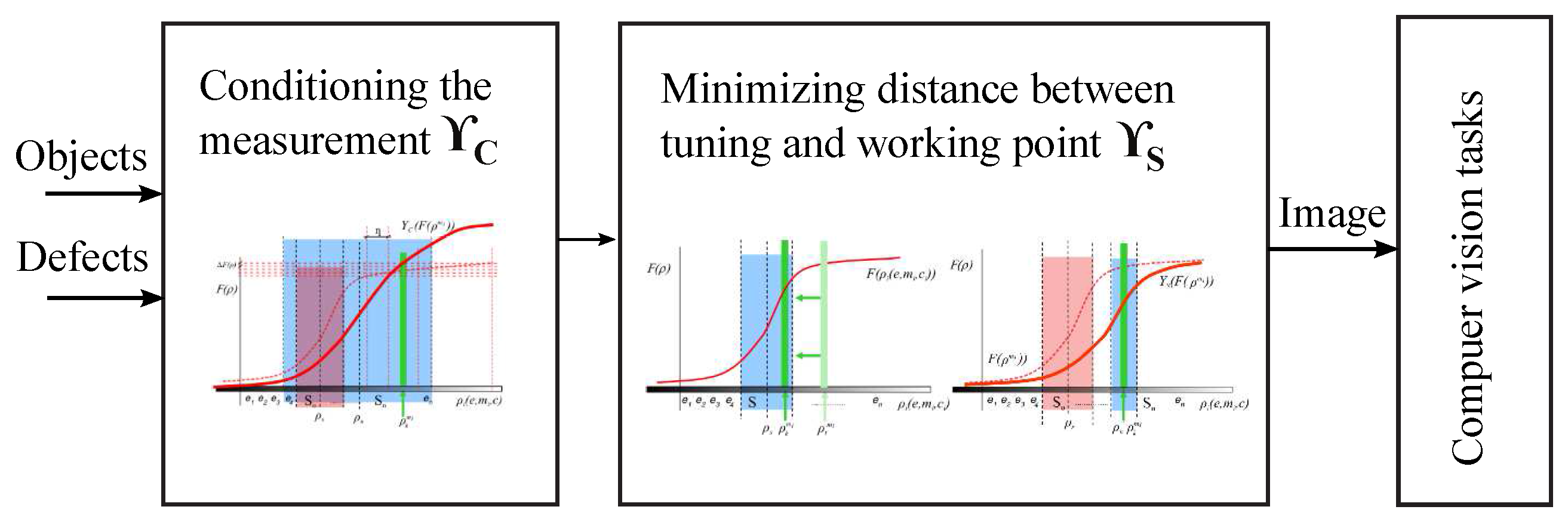

Controlling the luminous energy of the environment, which is a function of the lighting sources and the transmission modulations, is a way of working directly with the input magnitudes of the system. Without considering any other perception characteristics, irradiance is the key. Structuring the energy that reaches the object is a method of affecting the environment or the object. It enables areas of the surface of the object to be isolated, the contrast in the camera to be increased or decreased, etc. Thus, this variable allows us to force large differences at the input in order to perceive changes in the output image.

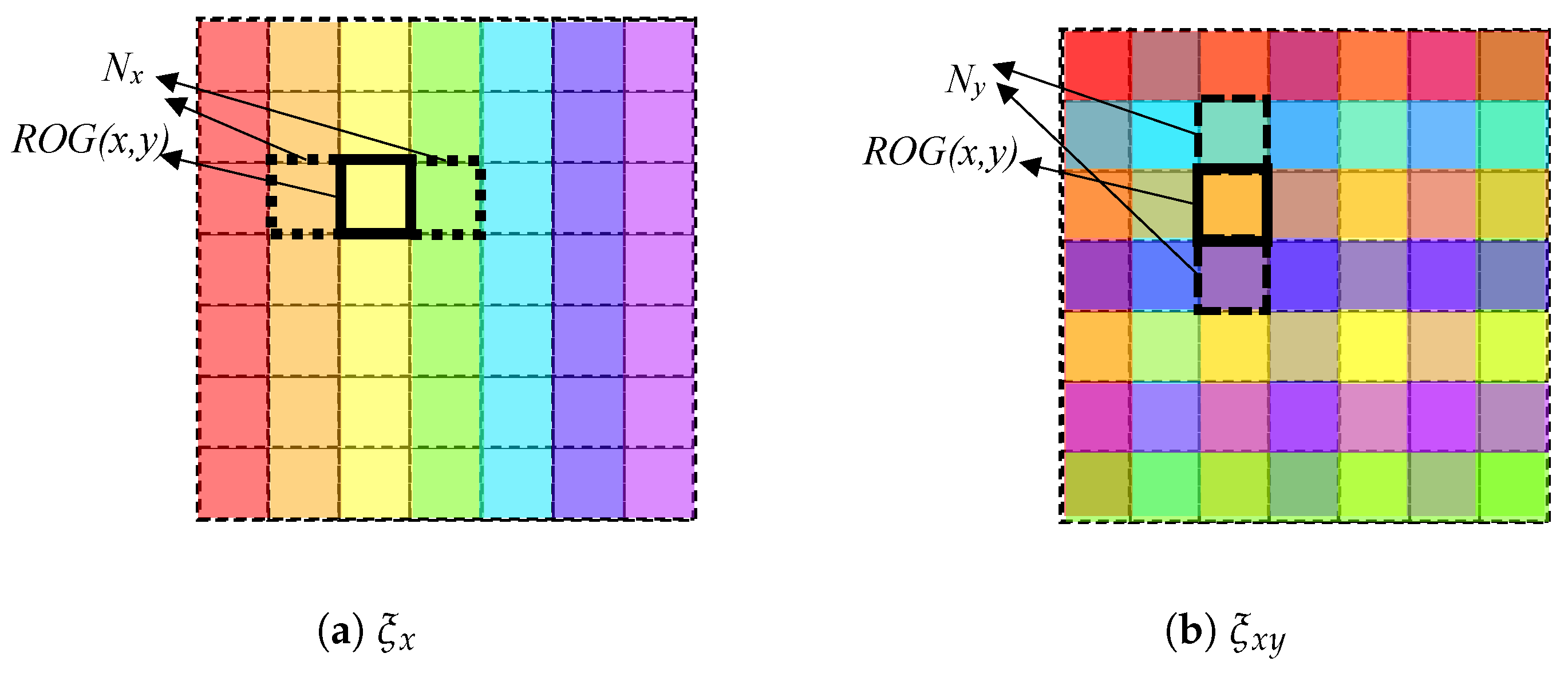

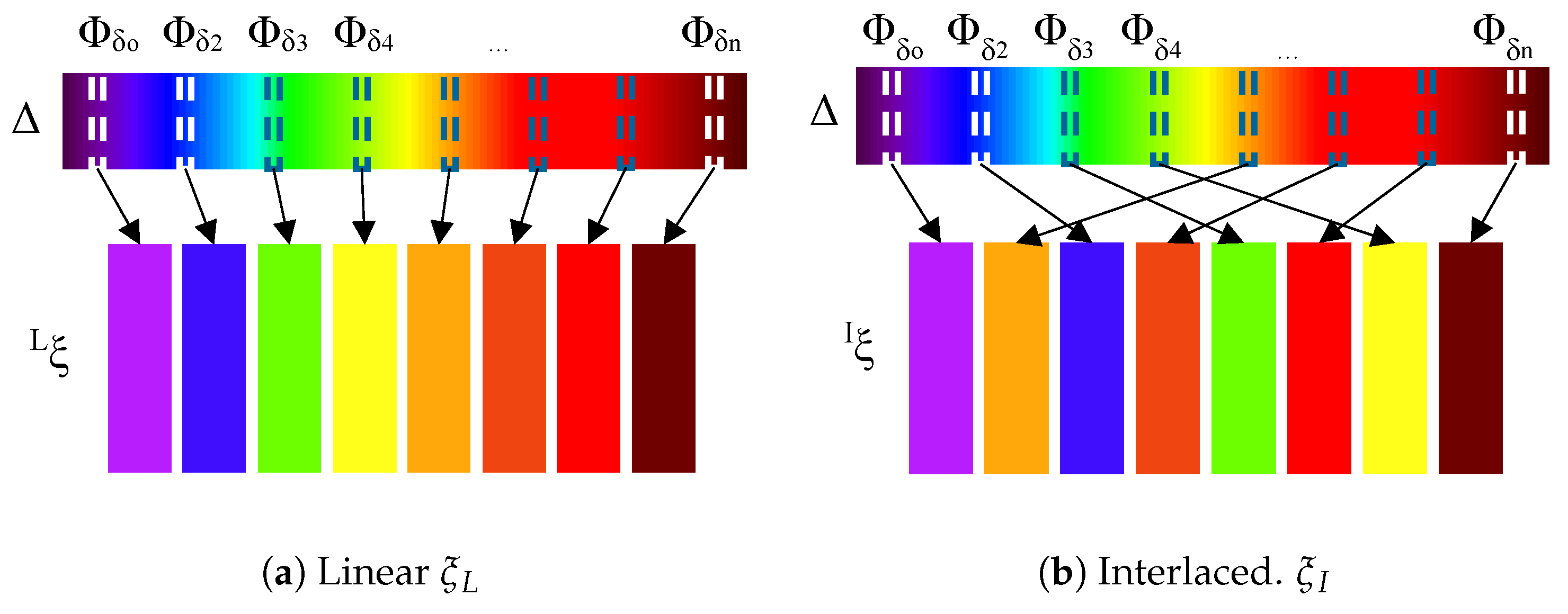

Measurement Enhancement is the task of the transformation in Equation (15). In this paper, we propose an instance of this transformation focused on the lighting conditions of the environment. The transformation structures the lighting in order to establish regions on the object that are irradiated with different spectral powers. Areas of different radiances are formed in this way. The regions can be formed by means of spatial modulation, forming a grid. A sequence of lightings using temporal modulation, or a combination of both, can also be used. The regions contribute different radiances to the input of the camera, establishing independent areas in the image. The increase of the differences at the input of the system is thus produced in the space or time domain (Equation (12)).

In this paper, the transformation is carried out by means of spatial modulation (space domain). The different areas in the image are formed with characteristics proportional to the irradiance E, so the object irradiance actively affects the perception process. The environment parameters are established so that large lighting gradients are formed on the object. The projection of the radiance L, which arrives at the optics, forms an image with regions. The gradient of the image is a function of the one generated on the object: the greater the gradient of the pattern, the greater the gradient formed on the photodetectors, and hence the larger the difference among adjacent photodetectors. Therefore, each photodetector has an associated spectral power at each instant (which is a function of the irradiance and of the object characteristics).
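The link between the gradient of the lighting pattern and the difference among adjacent photodetectors can be sketched in one dimension. The sketch assumes a square-wave irradiance pattern, a uniform scalar reflectance and one photodetector per pattern sample; these choices and all names are hypothetical simplifications.

```python
def stripe_pattern(n, period, low, high):
    """Square-wave irradiance over n samples; period is the number of samples
    per full stripe cycle and must be an even number of at least 2."""
    half = period // 2
    return [high if (i // half) % 2 == 0 else low for i in range(n)]


def image_row(irradiance_row, reflectance):
    """Simplified image formation (assumption): each photodetector receives
    reflectance times the local irradiance."""
    return [reflectance * e for e in irradiance_row]


def max_adjacent_difference(row):
    """Largest difference between neighbouring photodetectors."""
    return max(abs(b - a) for a, b in zip(row, row[1:]))


weak = image_row(stripe_pattern(16, 4, low=0.4, high=0.6), reflectance=0.9)
strong = image_row(stripe_pattern(16, 4, low=0.0, high=1.0), reflectance=0.9)

print("weak pattern:", max_adjacent_difference(weak))
print("strong pattern:", max_adjacent_difference(strong))
```

The stronger pattern yields a proportionally larger step between neighbouring photodetectors for the same surface, which is the sense in which the pattern forces large differences at the input.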

Structuring the lighting enables the projection of a pattern onto the object surface. This pattern is deformed by the object characteristics, so the irradiance E may be considered to be modulated by the object. Any other object then modulates the generated pattern in a different way and, therefore, modulates differently the spectral power received in space or time by each photodetector (in terms of inspection systems, an object with defects will modulate the generated pattern differently from the same object without defects). Controlling the input values of the perception system for each photodetector enables a reduction in the elements of the set of scene magnitudes that the system has to deal with. In addition, the pattern has to be configured so that the differences at the output are perceptible according to the magnitudes of the object (shape, colour, topography, etc.) to be perceived.
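How a defect modulates the pattern differently from a defect-free object can be illustrated with a deliberately crude one-dimensional sketch. It assumes a binary stripe pattern on the lighting source and a mirror-like surface for which each photodetector sees a source position displaced in proportion to the local surface slope; the displacement model, the `gain` parameter and the function names are hypothetical and are not the reflection model used in the paper.

```python
def pattern(u, period=8):
    """Binary stripe pattern on the lighting source (hypothetical)."""
    return 1.0 if (u // (period // 2)) % 2 == 0 else 0.0


def reflected_image(slopes, gain=4):
    """Photodetector i sees source position i + gain * slope (rounded), a
    crude stand-in for specular reflection on a locally tilted surface."""
    return [pattern(i + round(gain * s)) for i, s in enumerate(slopes)]


def defect_pixels(reference, inspected):
    """Indices where the inspected image departs from the reference image."""
    return [i for i, (a, b) in enumerate(zip(reference, inspected)) if a != b]


flat = [0.0] * 32                 # object model: flat specular surface
dented = list(flat)
for i in range(12, 16):           # local slope change simulating a dent
    dented[i] = 1.0

reference_image = reflected_image(flat)
inspected_image = reflected_image(dented)
print(defect_pixels(reference_image, inspected_image))
```

In this toy setting the dent displaces the reflected stripes by half a period, so every photodetector looking at the dent reports a value different from the reference image, while the rest of the surface matches it.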

Control of the energy that reaches the surface of the object is needed in order to design a certain pattern. The task depends on the number of environment lights, the spatial distribution of the lighting, the wavelengths that make up each of the sources, the time, the transmission modulations, etc. Moreover, the pattern of spectral power

could be different in order to inspect a specific magnitude

of the object

m. Then to this purpose, the transformation

will determine the spectral power

as a function of

and

:

For practical considerations, it is interesting that the function of energy establishing the spectral power

is established in terms of the field radiance

by considering four parameters:

,

,

and

(see

Figure 8).

Hence, the transformation

could be defined by:

The regions of lighting

on the surface of the object can be determined from the parameter

s. It is a function that establishes the morphology of the regions of the pattern formed on the surface. Parameter

determines the set of lighting characteristics that radiates each of the established regions

. The function

determines the spatial configuration of energy reached by the object. The task of the function is to distribute the lighting characteristics of the set

over each of the regions determined by

s. Finally, the function depends on time

t. If the structured lighting is temporal, it is necessary to generate a sequence of patterns. Moreover, for practical reasons, we define

as the regions of lighting on the source. In the same way as

, the

is the morphology of the regions of the pattern conformed on the lighting source, in this case (see

Figure 8).
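
The role of the four parameters can be illustrated with a minimal sketch. It is not the definition used in this work: the square grid of regions, the grey-level list and the two orderings (here called linear and interlaced) are assumptions made only for illustration, as are the names `make_pattern`, `cell`, `levels` and `axes`. The region size plays the role of s, the list of levels plays the role of the set of lighting characteristics, the index arithmetic plays the role of the distribution function, and `t` shifts the pattern to produce a temporal sequence.

```python
import numpy as np

def make_pattern(shape, cell, levels, interlaced=False, axes="xy", t=0):
    """Return a 2D array of lighting levels over a grid of square regions.

    shape      -- (rows, cols) of the pattern, in source pixels
    cell       -- side of each square region (hypothetical stand-in for s)
    levels     -- ordered lighting characteristics, e.g. grey levels
    interlaced -- if True, reorder levels so that neighbouring regions differ strongly
    axes       -- "x": regions change along columns only; "xy": along both axes
    t          -- time step; shifts the pattern by t regions
    """
    levels = np.asarray(levels)
    n = len(levels)
    if interlaced:
        # Alternate low and high entries: 0, n-1, 1, n-2, ...
        order = np.empty(n, dtype=int)
        order[0::2] = np.arange((n + 1) // 2)
        order[1::2] = np.arange(n - 1, (n + 1) // 2 - 1, -1)
        levels = levels[order]
    rows, cols = np.indices(shape)
    region = cols // cell + t
    if axes == "xy":
        region = region + rows // cell
    # Distribute the lighting characteristics cyclically over the regions.
    return levels[region % n]

pattern = make_pattern((4, 8), 2, [0, 85, 170, 255], interlaced=True, axes="x")
print(pattern[0, ::2].tolist())
```

Calling the function with increasing values of `t` yields a sequence of shifted patterns, which is one simple way to represent temporally structured lighting.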

6. Case Study: Automobile Logo

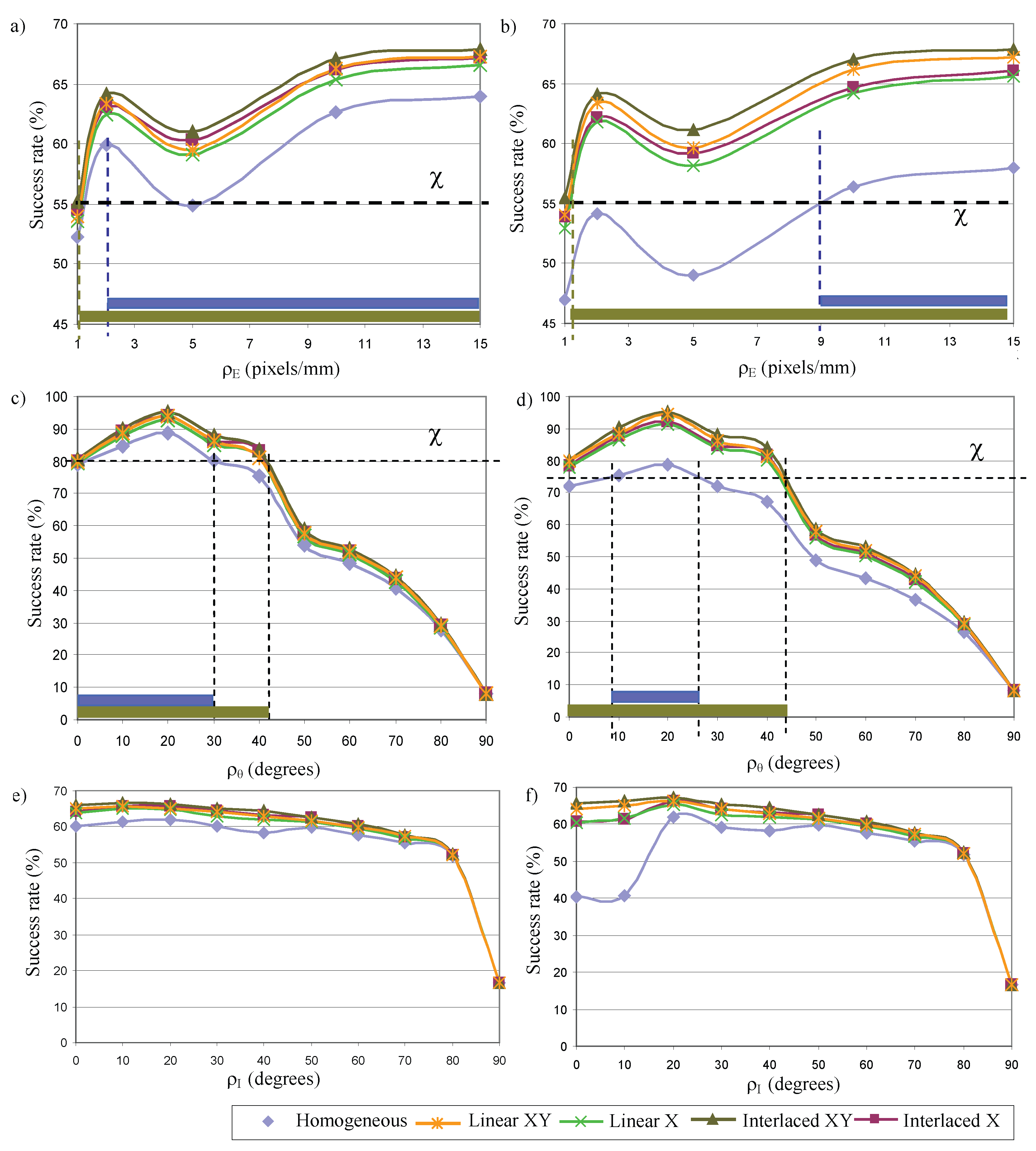

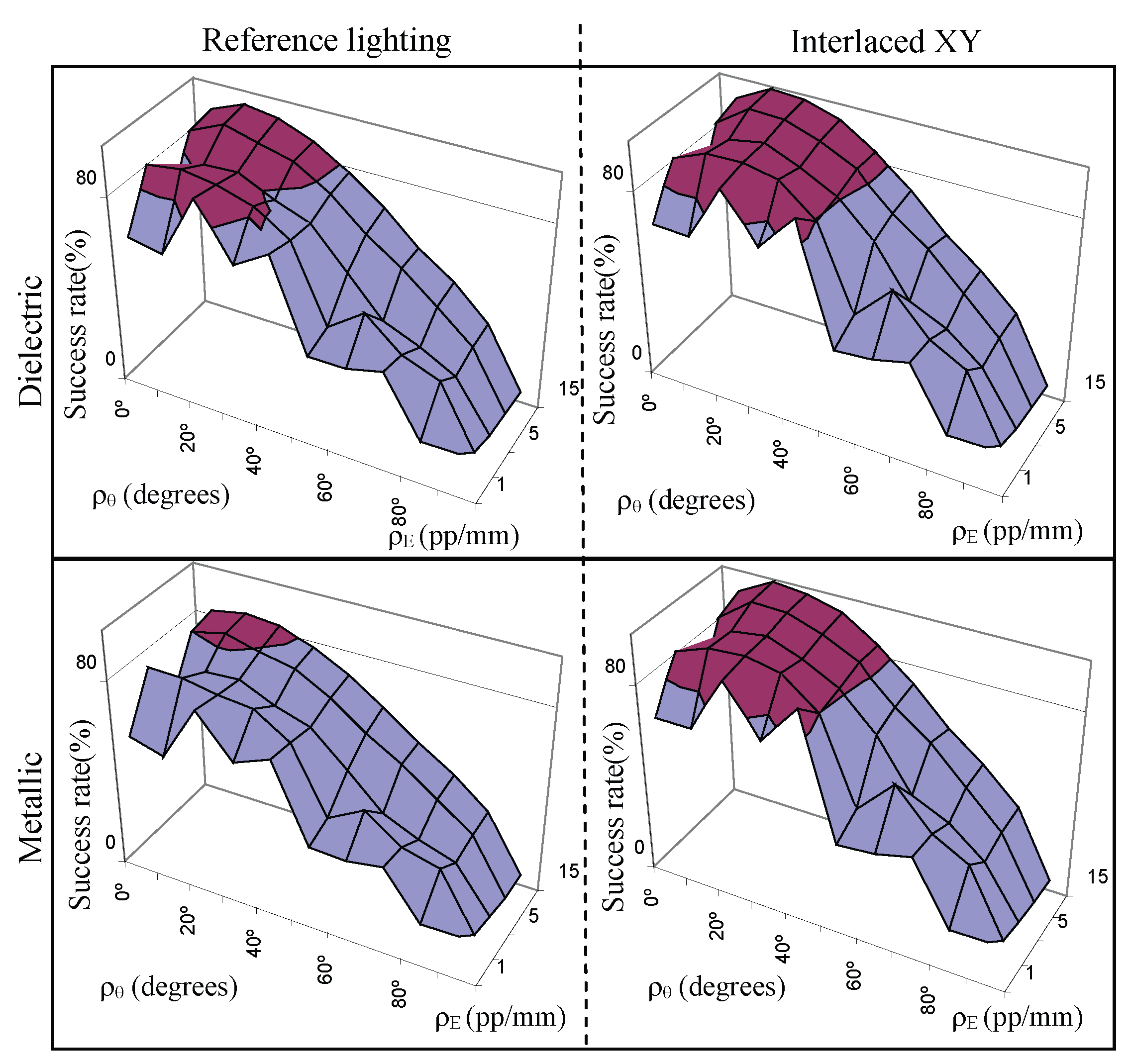

Simulation, as a step prior to experimentation in real manufacturing environments, allows a preliminary study that can be used to determine the best acquisition path planning or the equipment characteristics required to inspect specular surfaces. This section shows an example that applies the experimental results on the scale and angle of perception in specular surface acquisition to the inspection of a 100 mm diameter Mercedes Benz metallic logo. The area to be inspected is 4676.15 mm². Given that the minimum threshold depends on the application, a value of 80% is assumed in this case.

The inspection conditions are restricted to only 5 values of the scene magnitudes for a metallic object under unstructured lighting (the reference lighting). As can be seen in Figure 15, where each value is given as an (angle, scale) pair, the scene magnitudes are the following: (0°, 2 pixels/mm), (10°, 10 pixels/mm) and those with the highest scale considered ([0°, 10°, 20°], 15 pixels/mm). The best lighting configuration, ’Interlaced XY’, increases the tuning of the system to 20 points of the scene magnitudes (see Figure 15): ([20°, 40°], 1 pixel/mm), ([0°, 10°, 20°, 30°], 2 pixels/mm), ([10°, 20°, 30°, 40°], 5 pixels/mm) and ([0°, 10°, 20°, 30°, 40°], [10, 15] pixels/mm).
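
The counts of 5 and 20 tuned points follow from expanding each group into its individual (angle, scale) pairs. The short sketch below only reproduces that arithmetic; the names `count_points`, `reference` and `interlaced_xy` are illustrative, and the angles are assumed to be in degrees.

```python
from itertools import product

def count_points(groups):
    """Count the (angle, scale) pairs in a list of (angles, scales) groups."""
    return sum(len(list(product(angles, scales))) for angles, scales in groups)

# Angles in degrees, scales in pixels/mm, as listed in the text.
reference = [([0], [2]), ([10], [10]), ([0, 10, 20], [15])]
interlaced_xy = [
    ([20, 40], [1]),
    ([0, 10, 20, 30], [2]),
    ([10, 20, 30, 40], [5]),
    ([0, 10, 20, 30, 40], [10, 15]),
]

print(count_points(reference), count_points(interlaced_xy))  # 5 20
```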

The choice of the conditions (scale, angles, etc.) to capture the whole object for inspection is a complex problem, and the particularities of each solution must be taken into account. In this example, the scale is established at 15 pixels/mm. Therefore, according to the previous scene magnitudes, the perception angle can deviate by up to 20° using the reference lighting and by up to 40° using the ’Interlaced XY’ function. In other words, the angle formed by the normal vector of the camera and the normal vector of the surface to be inspected must lie between 0° and 20° using the reference lighting and between 0° and 40° when the ’Interlaced XY’ lighting is used.

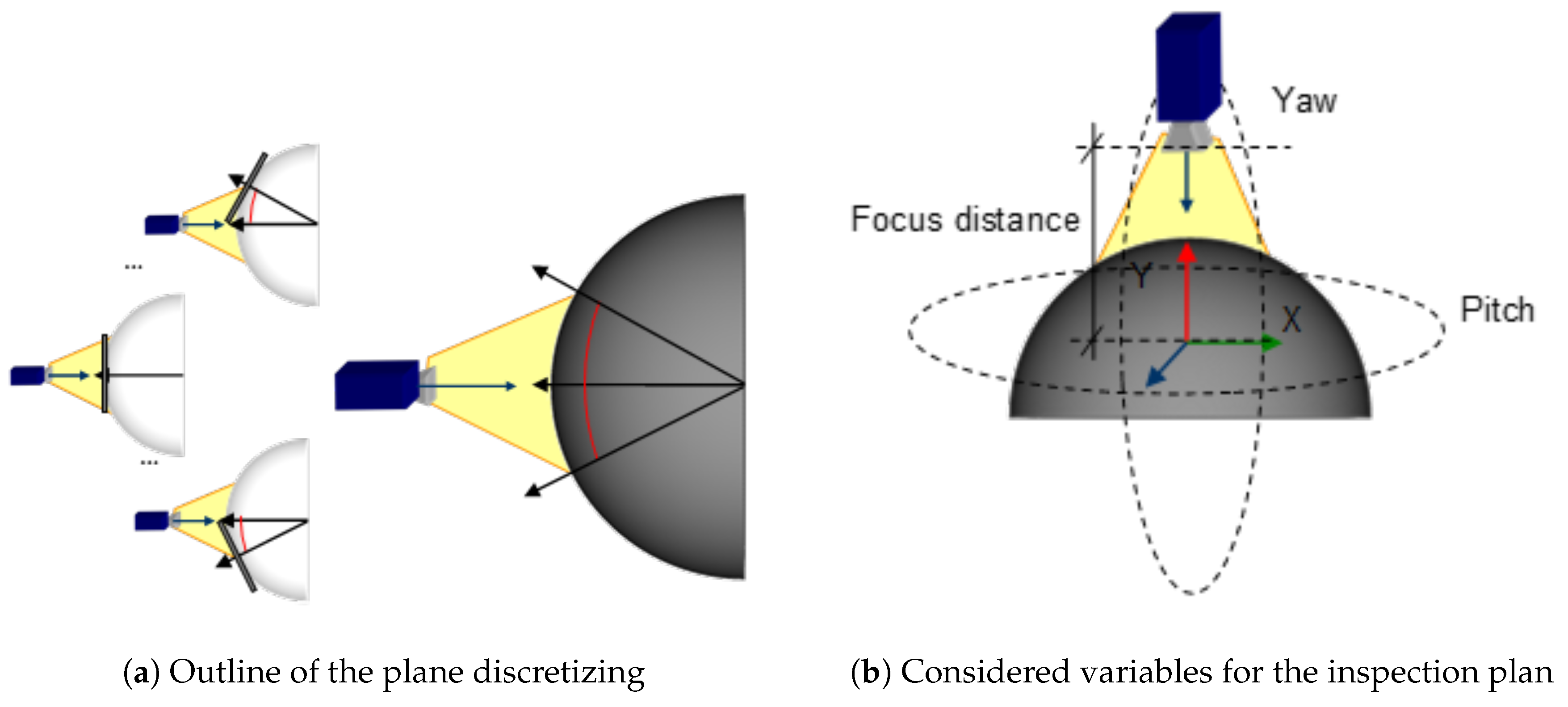

Consequently, in order to apply the results, any point on the surface can be viewed as a point on a plane whose normal vector is the normal of the surface at that point. Figure 16a shows an outline of this assumption. In this way, the surface can be analysed as a set of planes (one plane per point on the surface), and the experimental results (calculated for planes) can be extrapolated to calculate the perception scale and angle at any point on surfaces of different curvature. Using a plane for every point on the surface could be computationally expensive. Hence, the function that establishes the points on the surface in a coordinate system local to the object is defined in practice as a triangle mesh: a collection of triangles that defines the surface shape of a polyhedral object in 3D computer graphics. The triangle mesh allows the system to discretize the surface geometry as a reduced collection of planes. The number of planar faces is determined by the geometry of the surface and the resolution used in the experiments (in this case more than 4000 polygons, although fewer triangles would be enough: high detail is not needed because the angle of perception is discretized every 10°).
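
The plane-per-face reasoning can be sketched as follows. This is a minimal illustration, not the implementation used in the experiments: the function names, the mesh layout (a vertex array plus a face index array) and the use of a single direction towards the camera for all faces are assumptions. Each triangle is treated as a plane, and a face counts as perceivable when the angle between its normal and the direction to the camera does not exceed the limit obtained for the chosen lighting (20° or 40° in this case study).

```python
import numpy as np

def face_normals(vertices, faces):
    """Unit normal of each triangle; vertices is (V, 3), faces is (F, 3) indices."""
    v0, v1, v2 = (vertices[faces[:, i]] for i in range(3))
    n = np.cross(v1 - v0, v2 - v0)
    return n / np.linalg.norm(n, axis=1, keepdims=True)

def perception_angles(normals, to_camera):
    """Angle in degrees between each face normal and the direction to the camera."""
    d = np.asarray(to_camera, dtype=float)
    d = d / np.linalg.norm(d)
    cosines = np.clip(normals @ d, -1.0, 1.0)
    return np.degrees(np.arccos(cosines))

# Two triangles: one facing +Z, one tilted 30 degrees about the X axis.
vertices = np.array([
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, np.sqrt(3) / 2, 0.5],
])
faces = np.array([[0, 1, 2], [0, 1, 3]])

angles = perception_angles(face_normals(vertices, faces), to_camera=[0, 0, 1])
print(angles <= 20.0)  # faces perceivable under the reference lighting limit
print(angles <= 40.0)  # faces perceivable under the 'Interlaced XY' limit
```

With the tilted face at 30°, only the first face passes the 20° limit, while both pass the 40° limit, which mirrors why the structured lighting needs fewer captures.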

Since the scene magnitudes are a function of the characteristics of the object, the environment and the camera, decisions must be made about which of them to adjust. In other words, it is necessary to decide which magnitudes of the environment or the camera have to be modified to establish the adequate values of scale and angle calculated in the experiments. The scale is determined by the number of pixels available to the sensor, with the camera positioned at a focus distance and with a constant focal length. The conditions of the angle of perception are established by the movement about the X and Y axes of the coordinate system whose origin is located at the centre of the object: the yaw and pitch movements of the camera (see Figure 16b). Because selecting the variables is a complex problem, an approximation to the optimum solution is proposed in order to determine the appropriate angles between the camera and the object surface. The aim is to increase the captured area of the logo and to reduce the number of images to be captured. For this purpose, a search tree was designed using a branch and bound algorithm in which the solution space of each node is reduced to a maximum of five children and a maximum processing time is established.
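
A search of this kind can be sketched as a bounded set-cover problem. The code below is an assumption-laden illustration rather than the algorithm actually used: candidate camera poses are represented only by the set of mesh faces each one perceives, coverage is measured in number of faces instead of surface area, and the names `select_captures`, `cover_sets`, `target`, `max_children` and `time_limit` are hypothetical. It keeps the two restrictions mentioned in the text: at most five children per node and a limit on processing time.

```python
import time

def select_captures(cover_sets, target, max_children=5, time_limit=1.0):
    """Return a short list of pose indices whose covered faces reach `target` faces.

    cover_sets -- list of sets; cover_sets[i] holds the faces perceived from pose i
    Returns None if no solution is found within the time limit.
    """
    deadline = time.monotonic() + time_limit
    best = None

    def search(chosen, covered):
        nonlocal best
        if len(covered) >= target:
            if best is None or len(chosen) < len(best):
                best = list(chosen)
            return
        if time.monotonic() > deadline:
            return
        # Bound: at least one more capture is needed, so this branch
        # cannot improve on the best solution found so far.
        if best is not None and len(chosen) + 1 >= len(best):
            return
        gains = [(len(s - covered), i) for i, s in enumerate(cover_sets)
                 if i not in chosen]
        gains = [g for g in gains if g[0] > 0]
        gains.sort(key=lambda g: (-g[0], g[1]))
        # Branch: expand only the poses that add the most new faces.
        for _, i in gains[:max_children]:
            search(chosen + [i], covered | cover_sets[i])

    search([], set())
    return best

poses = [{0, 1, 2}, {2, 3}, {3, 4, 5}, {0, 1, 2, 3}, {4, 5}]
print(select_captures(poses, target=6))
```

Ordering the children by marginal gain means the first descent behaves like a greedy heuristic, so a feasible set of captures is available early and later branches are only explored when they could use fewer captures.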

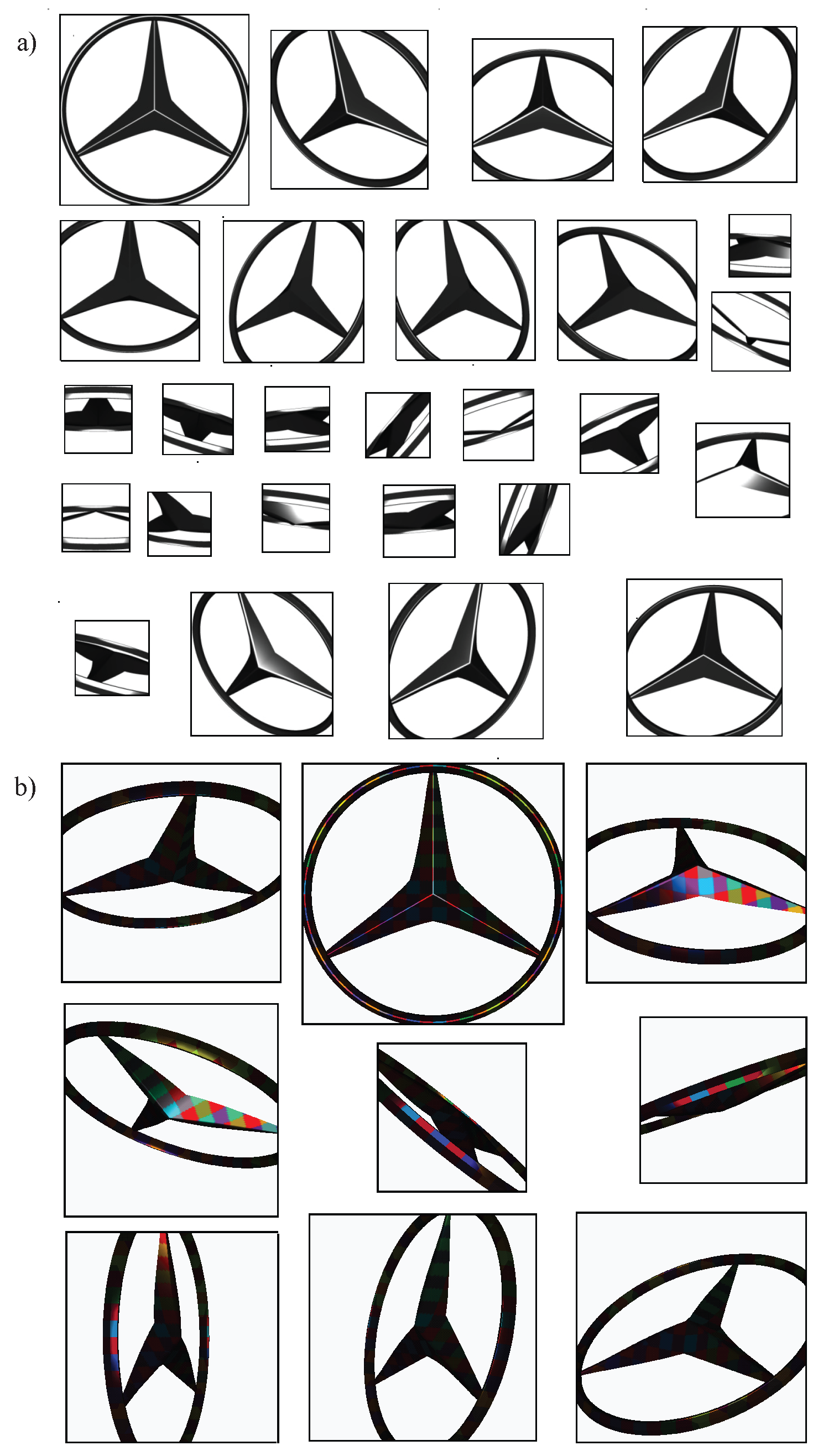

The logo inspection requires 26 captures (see Figure 17a and Table 4) using the reference lighting and a camera of 1452 × 1452 pixels to acquire a surface area of 4619.96 mm² (98.79% of the logo). Some captures can be made using a lower resolution, down to 510 × 510 pixels. With the ’Interlaced XY’ function, only 9 images are necessary (see Figure 17b and Table 5). The camera resolution varies between 826 × 826 and 1455 × 1455 pixels in order to cover a total surface area of 4675.05 mm² (99.9%). The first capture covers 45% of the total inspection of the logo, whereas only 8.17% is obtained using the reference lighting. This shows how the lighting system allows perception over more scene magnitudes. Specifically, more points on the surface satisfy the angle of perception.
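
As a rough check on the resolutions quoted above, the side of the square region imaged in one capture can be estimated by dividing the resolution by the scale. This assumes that every capture is taken at the 15 pixels/mm scale chosen for this example and that the scale is uniform across the image; the function name is illustrative.

```python
def field_of_view_mm(pixels, scale_px_per_mm=15.0):
    """Side length, in mm, imaged by `pixels` pixels at the given scale."""
    return pixels / scale_px_per_mm

for resolution in (510, 826, 1452, 1455):
    print(resolution, round(field_of_view_mm(resolution), 1))
```

Under these assumptions, the largest captures span roughly 97 mm, close to the 100 mm diameter of the logo, while the smallest span 34 mm.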

7. Conclusions

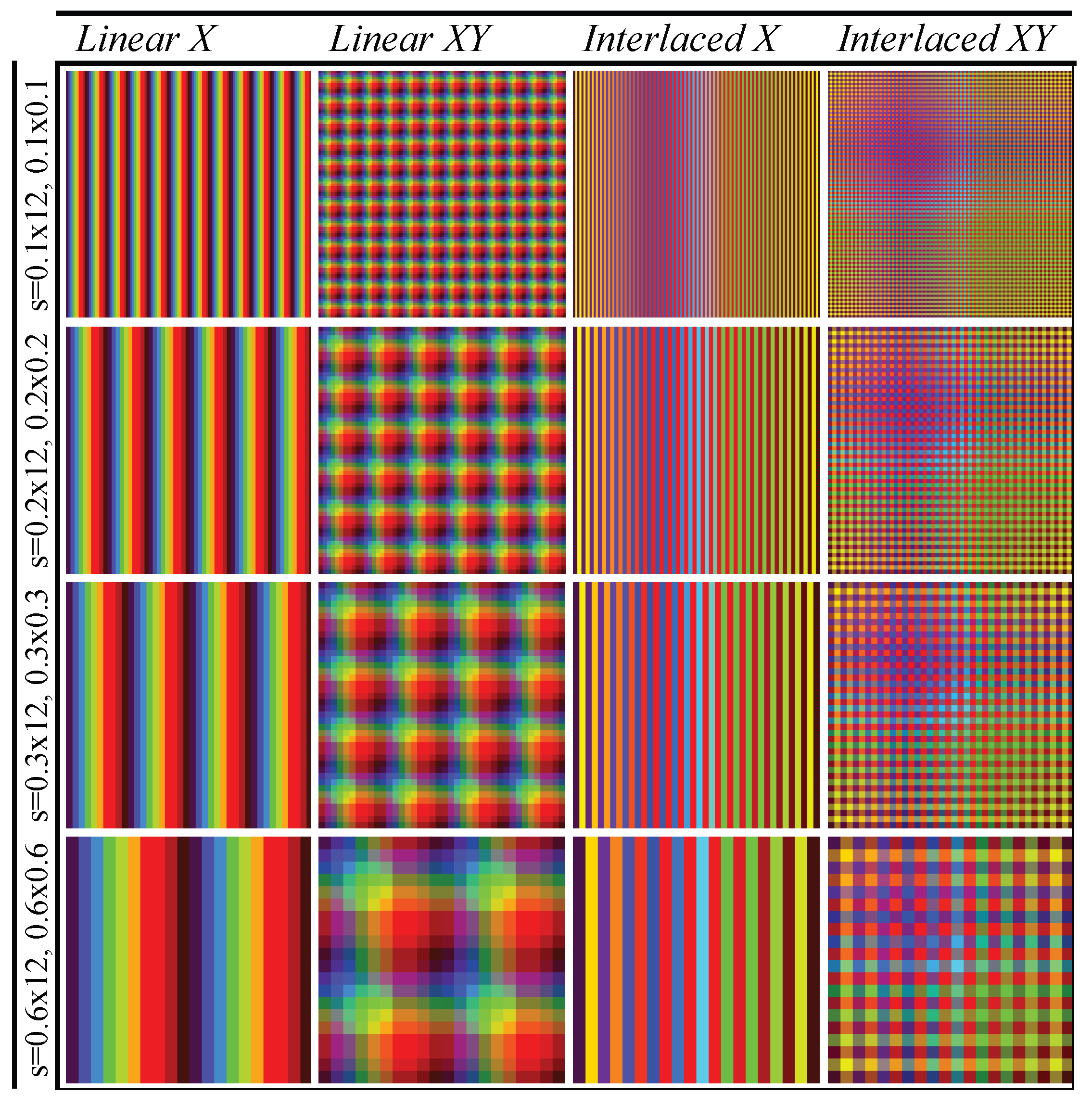

A novel active imaging model able to increase the perception capacity of a visual system has been presented. The model provides solutions to vision problems in which perception is difficult. It describes the parameters involved in a visual sensing system in three main aspects: the target object to perceive, the environmental conditions and the sensor parameters. Each of them is parameterized individually: the object by size, colour, etc.; the environment by light, object-sensor orientations, etc.; and the sensor by focal length, monochrome/RGB/3D, etc. Moreover, the model describes the perception capabilities and their limits, and presents solutions to these limits. In particular, the model is instantiated for specular object inspection, as an example of a challenging situation for visual sensor perception. With specular surfaces, the device must operate in intervals of low perception caused by reflections and shine. Traditionally, automated visual inspection requires a thorough analysis of the problem, and solutions cover everything from the acquisition equipment to the algorithm that recognizes the possible defects. As a consequence, these vision systems are oriented to concrete applications and cannot be generalized. The model presented here addresses this shortcoming by providing a general representation of vision systems and solutions for the perception limitations.

The proposed solution to the problem of specular surfaces provides a normalization of the image in which different objects that would otherwise be perceived as the same can be distinguished. First, Measurement Enhancement is performed, increasing the differences between the input magnitudes. In this paper, the enhancement is carried out using environment variables, specifically by controlling the lighting conditions. This enhancement spatially structures the lighting in order to set up regions on the surface of the object that are radiated with different spectral powers, forming a grid. Finally, the system is tuned so that the magnitudes of the scene (scale, angles, lighting intensity, etc.) are properly perceived.

Following a thorough analysis of the problem characteristics, the use of virtual imaging simulations is proposed as a preliminary step for validating hypotheses about visual inspection systems. The conditions (point of view, scale, lighting, etc.) under which the inspection has to be performed can be validated in a flexible and low-cost manner, which justifies the use of simulations as an early check of system viability. Hence, the model can be pre-validated before the system is developed.

The use of simulations and knowledge bases, together with the generalist approach of the transformation, provides a general solution that can be applied systematically. The method can be applied to different inspection problems by adapting the contents of the knowledge bases, avoiding the design of a new solution for each problem.

A realistic simulator has been designed to carry out the experimentation. This simulator recreates the conditions of image formation and permits the validation of inspection systems based on the model. The tests show that the use of the transformations improves the perception capacity of the system compared with a homogeneous environment (only one wavelength or colour). This holds for both dielectric and metallic materials with regard to the tuning of the scale, angle or lighting. The transformation with maximum amplitude gradient and maximum spatial differences (’Interlaced XY’) obtains the best results in perceiving the defects in all cases. In contrast, the function with minimum amplitude gradient and minimum spatial differences (’Linear X’) offers the worst results among the transformations considered for the Measurement Enhancement. The functions that use only one of the maximum proposed gradients, scale or amplitude (’Linear XY’ and ’Interlaced X’), generally present similar behaviour. The function that lights the object surface homogeneously, which is used as the reference, obtains the minimum success rate in all cases. Hence, the results show an improvement in the capacity of perception for different conditions of scale, angle and lighting intensity. The proposed method enables the detection of surface defects over a greater number of values of scale, angle of perception and lighting conditions than under normal conditions with uniform lighting. The immediate consequence is that the system needs a smaller number of captures of the scene.

Future research should study the Measurement Enhancement by extending the input magnitudes: using other transformations based on structured lighting (with different patterns and using the time domain) and using other parameters, such as variables of the capture system, to provide large gradients in the image.

The simulation confirms the hypotheses. The next step is to advance to physical experiments in the specific industry in which the system is to be developed. At present, the ’Interlaced XY’ function (in this case, the pattern is built using the ordered sequence of grey levels instead of wavelengths) is being tested for increasing the perception capacity of an inspection system aimed at detecting shape defects on the surfaces of ceramic tiles.