1. Introduction

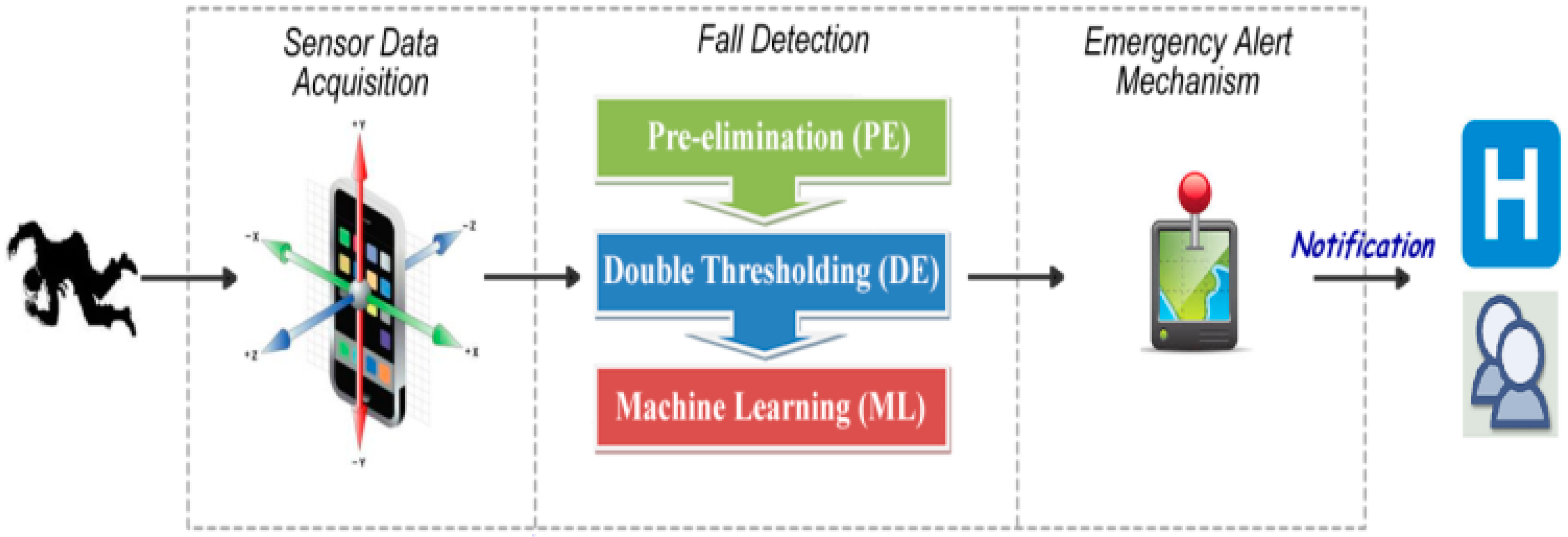

In daily life, people may unintentionally be involved in dangerous events resulting in a fall, which may be fatal due to a head injury and/or bleeding. Rapid first aid can potentially reduce the severity of such injuries. However, calling for help may be impossible, especially when the injury results in unconsciousness and the injured person is alone at the scene. In this study, we present a novel hybrid method for an assistive mobile application that exploits the accelerometer sensor of a smartphone to detect fall events and notifies authorities such as the police, an ambulance service or caregivers in case of an emergency.

In recent years, there has been a growing interest in fall detection systems. The fall detection approaches reported so far have been thoroughly examined in several surveys [1,2,3,4,5]. Researchers have exploited several types of sensors, such as accelerometers, cameras, microphones, infrared sensors, floor sensors and pressure sensors, to detect fall events. In context-aware systems, visual and audio information is gathered from sensor networks deployed in the environment, especially indoors, whereas accelerometers and gyroscopes are worn on the human body and are therefore referred to as wearable sensors. Both approaches started to lose popularity with the widespread use of smartphones [1]. Advanced features of smartphones make them indispensable to people at any moment. Unlike external 3-axis accelerometers/gyroscopes and context-aware systems, smartphone-based fall detection applications are non-intrusive and easy to use, even by elderly people, since no prior deployment of sensors is required.

Although the type of sensor(s) favored in a fall detection application is quite important, choosing the appropriate sensor data processing technique also plays a crucial role in the success of the system as well as in its energy consumption. In the literature, there are two main data processing techniques to discriminate fall events from fall-like events/daily activities [1,2,3,4,5]: thresholding techniques and machine learning algorithms. Thresholding techniques are advantageous due to their low computational complexity. Machine learning methods, on the other hand, detect fall events accurately among various movement types [1,2]. Accordingly, we propose a novel hybrid fall detection method that takes advantage of both approaches. Combining them enabled us to detect fall events in a very energy-efficient way, with high accuracy, low computational cost and low response time. The thresholding phase of the proposed approach not only eliminates several daily activities that do not show fall patterns, but also detects severe fall events. Machine learning methods, in turn, are applied to the remaining ambiguous activities to distinguish fall events from fall-like events.

The main goal of this study is to discriminate fall events from daily activities with high accuracy in an energy-efficient way. Since accurate detection of fall events is crucial, the parameters of this hybrid system have been tuned to increase sensitivity, specificity and accuracy. As high sensitivity may come at the cost of lower specificity, a fall alert handling mechanism has been added to the application to avoid emergency calls caused by misclassified daily activities.

The key contributions of this study can be summarized as follows:

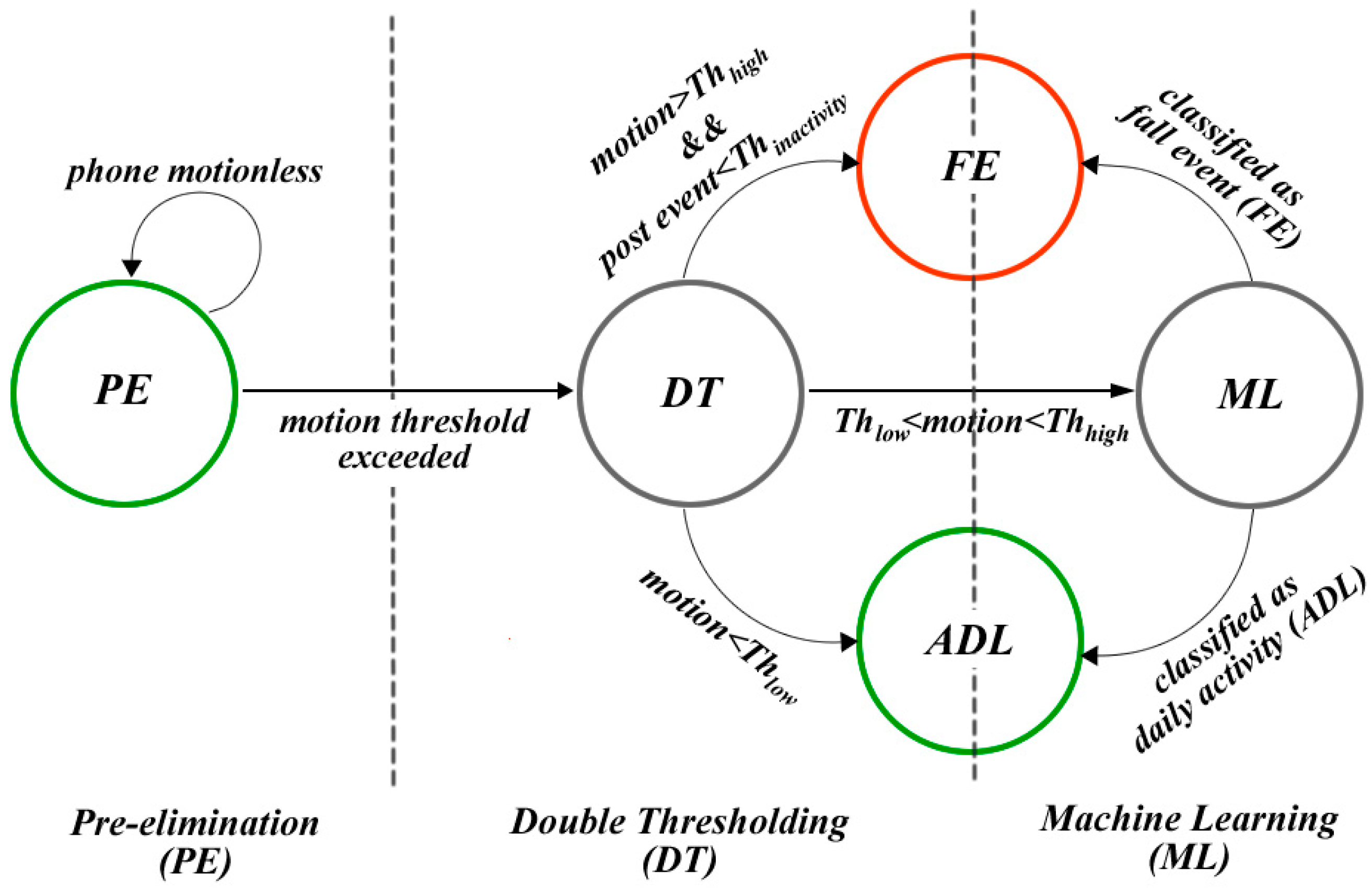

A new hybrid fall detection approach is introduced that takes advantage of both thresholding and machine learning algorithms. The thresholding method reduces computation time, thus saving energy, whereas decision-making based on machine learning algorithms ensures a high true positive rate.

A novel pre-elimination phase is introduced to save energy, especially when the smartphone remains motionless for extended periods of time, such as when it is left lying on a desk.

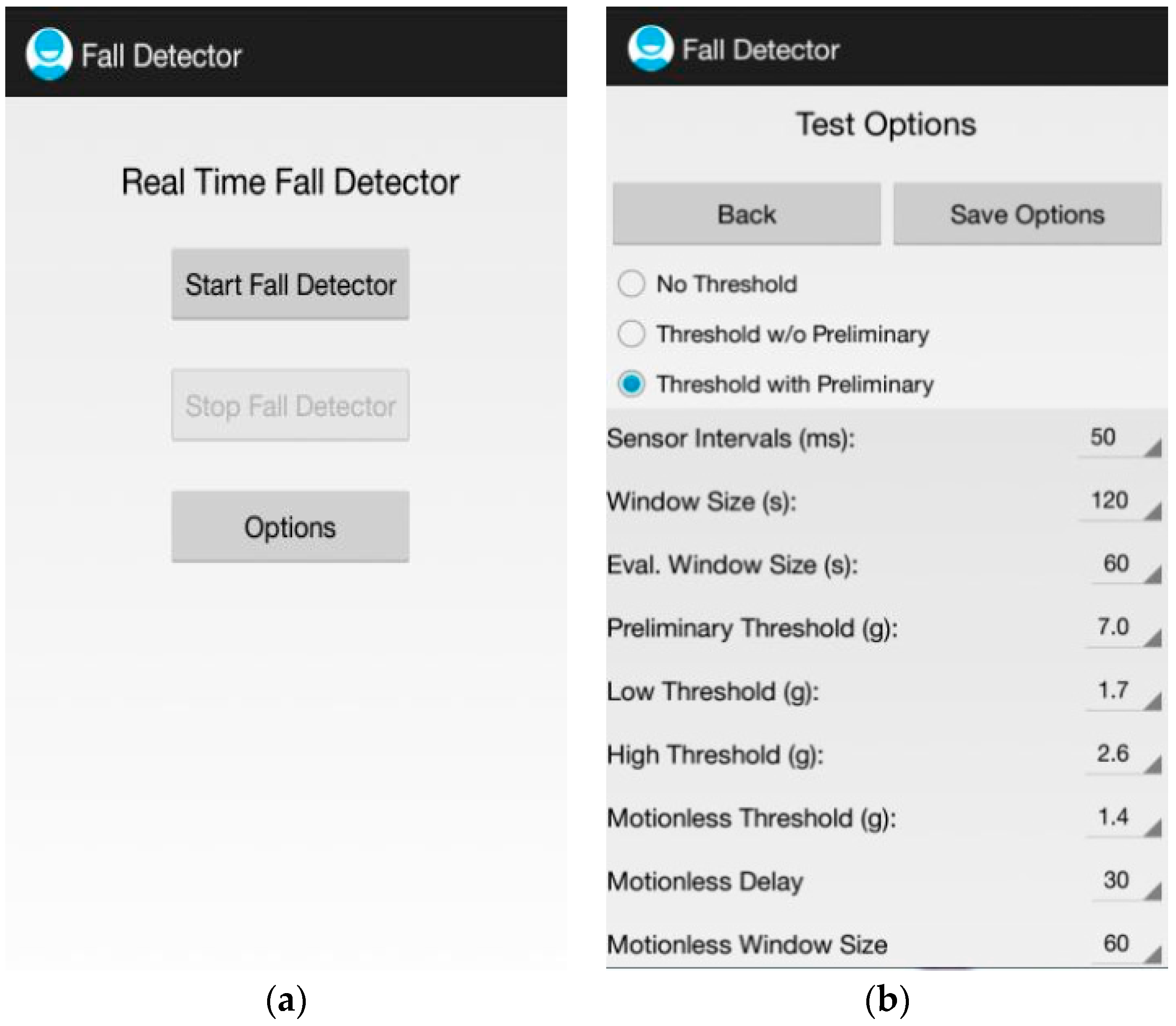

A mobile application, uSurvive, based on our hybrid fall detection approach is introduced. The app runs as a background service and is capable of detecting fall events and informing the authorities and/or caregivers.

The rest of this paper is organized as follows: in Section 2, we briefly discuss the pros and cons of fall detection approaches and review the fall detectors running on smartphones. Section 3 introduces the novel 3-tier architecture of the uSurvive application and describes each tier in detail. We then explain the modules of our mobile application and discuss its advantages in Section 4. In Section 5, we evaluate the proposed architecture and the performance of uSurvive in terms of success rate and energy efficiency. Finally, we discuss the results and conclude the paper in Section 6 and Section 7.

2. Related Work

The fall detection problem has been investigated since the early 1990s. In 1991, Lord and Colvin first proposed exploiting the accelerometer sensor for fall detection [6]. Afterwards, Williams introduced an autonomous belt device that detected the shock of impact with the ground and used a mercury tilt switch to detect the lying position of the human body [5]. Apart from accelerometers, however, there are several other approaches to detecting fall events, such as video processing, audio processing, gyroscope-based sensing and Wi-Fi signal processing [7,8]. Compared to visual and acoustic sensors, accelerometers consume less energy and are much easier to integrate into wearable mobility monitoring devices. Several drawbacks of video and audio processing, including their limited area of usage and their high CPU/RAM requirements [9,10,11,12,13,14,15], led researchers to make more use of wearable sensors [16,17,18]. Moreover, high fall detection accuracy and usability both indoors and outdoors have made accelerometers and gyroscopes more attractive for fall detection systems.

Several surveys on fall detection [1,2,3,4,5] discuss the pros and cons of the proposed approaches from different angles. Each survey has its own categorization and groups the available studies by criteria such as the type of sensors, the applied methods and/or the intrusiveness of the proposed approach. In the following paragraphs, we briefly address these surveys.

A comprehensive literature review was published in 2013 [1]. It discusses available fall detection systems in terms of the dataset, fall types, performance, sensors and features used for fall detection. In this study, Igual et al. divide fall detectors into two main categories: context-aware systems and wearable devices [1]. The first category includes cameras, floor sensors, infrared sensors, microphones and pressure sensors, while the second includes 3-axis accelerometers either attached to different parts of the body or built into a smartphone. Smartphones, being an integral part of many people's lives, have become the focus of contemporary studies, since most people, especially the elderly, find wearable gear uncomfortable and often refuse to wear external sensors. As most smartphones incorporate accelerometers and gyroscopes, the kinematic sensor-based approach [19] can be implemented on them, benefiting from their cost effectiveness, portability, robustness and reliability.

Other surveys also cover existing fall detectors [2,3]. In [2], fall detectors are divided into three groups: wearable device-based, ambiance sensor-based and camera-based. Perry et al. [3] group the existing studies into three categories based on the measurement technique used: methods that process acceleration data, methods that combine acceleration with other measurements, and methods that do not use acceleration. Noury et al., on the other hand, examine available studies based on their detection approach [4]. According to this study, there are two main approaches. In the first, researchers exploit accelerometer values at the time of impact with the ground, whereas studies following the second also take the post-fall phase into account. Recently, the authors of [20] compared thresholding mechanisms against machine learning methods [21,22] in terms of classification accuracy. The results in [20] support our proposed approach and show that machine learning approaches are indispensable for high fall detection rates. Similarly to our multi-tier architecture designed for smartphones, [23] introduced a multiphase architecture, but one based on wearable sensors. However, its authors do not take energy consumption into consideration. Our proposed solution is designed for smartphones and aims to balance the trade-off between accuracy and energy efficiency.

In this study, we introduce an energy-efficient, hybrid fall detection model running as a background service on Android smartphones. We therefore focus on fall detection techniques implemented as smartphone applications using different types of sensors, including microphones [24], accelerometers and gyroscopes, and discuss the pros and cons of these techniques. Most of the available studies [25,26] propose thresholding methods. The first smartphone app, PerFallID [27], running on the Android G1, was developed in 2010. In this study, besides the built-in accelerometer of the smartphone, the authors exploit a magnetic sensor attached to the chest or waist or placed in a trouser pocket. The experimental results show that the detection accuracy using the smartphone accelerometer is 90%, whereas the magnetic accessory improves the success rate by 3%. The same study also examined the energy consumption of PerFallID: according to the experimental results, the app would last about 33.5 h if left running in the background. iFall [28], one of the first apps published on the Android market, introduces an adaptive thresholding mechanism for fall monitoring and response. Like most earlier studies, this app can inform social contacts, such as relatives and emergency units, when a fall is detected, unless deliberately disabled by the user. To reduce the number of false positives, iFall, like several other apps, shows a prompt that lets the user cancel an alert before it is automatically sent. iFall evaluates both the root-sum-of-squares of the readings from the accelerometer's three axes and the position of the human body, since people tend to stay motionless for a while after an accident. Another Android application for fall detection was introduced in [29]. It is based on the principle that accelerometer values drop to zero at the time of a fall. However, the authors did not report any success rate for their threshold-based method.

In [30], the authors model fall activities with a finite state machine and propose a mechanism that utilizes the accelerometer readings of a smartphone. Only 15 fall events and 15 daily activities were analyzed, and the sensitivity and specificity were reported as 97% and 100%, respectively. A recent study [31] labels a daily activity as a fall event if any of the x-, y- or z-axis accelerometer readings deviates by approximately 10 g, within a short period of time, from the average value calculated from the user's daily activities.
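As we read it, the rule described for [31] amounts to a per-axis deviation check against a user-specific baseline. The minimal Python sketch below assumes readings in m/s², a fixed window standing in for "a short period of time", and a baseline computed as the per-axis mean over recorded daily activities; the function names are hypothetical and are not taken from [31].

```python
from statistics import mean
from typing import Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in m/s^2
G = 9.80665  # standard gravity in m/s^2


def per_axis_baseline(adl_samples: Sequence[Sample]) -> Sample:
    """Average x, y and z readings over the user's recorded daily activities."""
    xs, ys, zs = zip(*adl_samples)
    return (mean(xs), mean(ys), mean(zs))


def deviates_like_fall(window: Sequence[Sample], baseline: Sample,
                       deviation_g: float = 10.0) -> bool:
    """Hypothetical rule: flag the window if, at any sample, any axis strays
    from its baseline by at least deviation_g (expressed in units of g)."""
    limit = deviation_g * G
    return any(
        abs(sample[axis] - baseline[axis]) >= limit
        for sample in window
        for axis in range(3)
    )
```

Because the baseline is learned per user, the same fixed deviation threshold adapts to each user's typical readings without retraining a classifier.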

Regarding energy-efficient fall detection, one of the main problems is the need to read sensor data periodically [32]. This significantly shortens the battery life of a phone because of the associated energy overhead. Although the sensor itself uses very little power, the problem lies in keeping the phone's main processor and associated high-power components active to sample the sensor at periodic intervals. Thus, the total power consumed for sampling is orders of magnitude higher than the power actually required for sensing. A recent study [33] clearly shows that the main processors of common smartphones require more than 1 s to transition between sleep and idle states in either direction. Hence, switching a smartphone's main processor between those states to achieve energy-efficient sampling would not yield usable sensor data. Therefore, the authors of [33] propose the use of a dedicated low-power processor for mobile sensing applications. They outline three solutions. The first is to use an existing microcontroller. The second is to add a dedicated low-power microcontroller to the smartphone. The last is to integrate a low-power core into the smartphone's main processor. Although the last option is the least costly, phone manufacturers are unlikely to favor it, since it requires changing the main processor. In [34], researchers present Reflex, a suite of compiler and runtime techniques that significantly lowers the barrier for developers to leverage such low-power processors. Engaging a low-power microcontroller and/or core for energy-efficient sampling would undoubtedly be the ultimate solution. However, until smartphones with such specialized sensing hardware become more common, we believe that there is still room for more energy-efficient operation through hybrid solutions such as those proposed in [23,35] and the hybrid approach with a double-thresholding mechanism proposed in this study. The authors of [23] proposed a multiphase architecture but did not take energy consumption into consideration, whereas [35] exploits machine learning algorithms for classifying activities of daily living (ADLs). Our proposed hybrid approach would also contribute to more energy-efficient operation even on specialized hardware.

5. Performance Evaluation

We evaluated the performance of the proposed method in terms of fall/non-fall event detection accuracy and energy consumption, and present the results in the following subsections.

5.1. Hardware and Software Specifications of the Implementation

uSurvive was run on a Samsung Galaxy S3 Mini smartphone [46] running Android 4.1 Jelly Bean [47]. The phone embeds an accelerometer that acquires 3-axis measurements in the range of ±2 g on each axis. Accelerometer data were sampled at 20 Hz in 3 s intervals. For the verification of the energy consumption results, the corresponding tests were also run on a Samsung Galaxy S3 running Android 4.3.

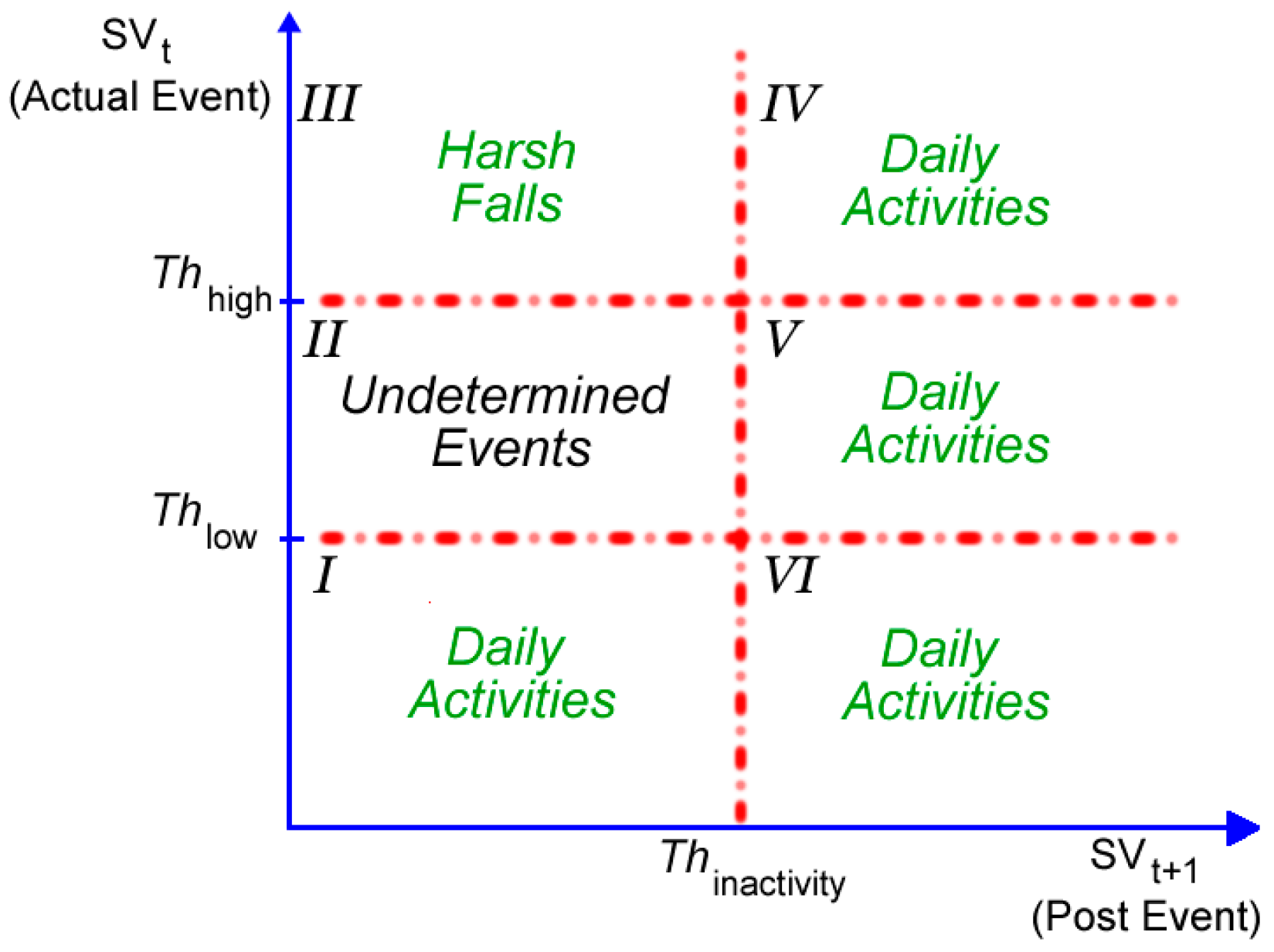

The thresholding parameters of the DT tier are crucial to the success of the proposed 3-tier hybrid architecture. To achieve user independence in the DT tier, the parameters of the thresholding and machine learning algorithms were determined using a validation set consisting of 100 fall events and 150 non-fall events. Fifteen subjects were involved in collecting these events. Subjects performed five types of falls: falling while walking, falling while running, falling while standing, falling on the knees and falling laterally. They also recorded three daily activities: walking, running and standing. During the test scenarios, the subjects were asked to carry the Galaxy S3 Mini in the front pocket of their trousers.

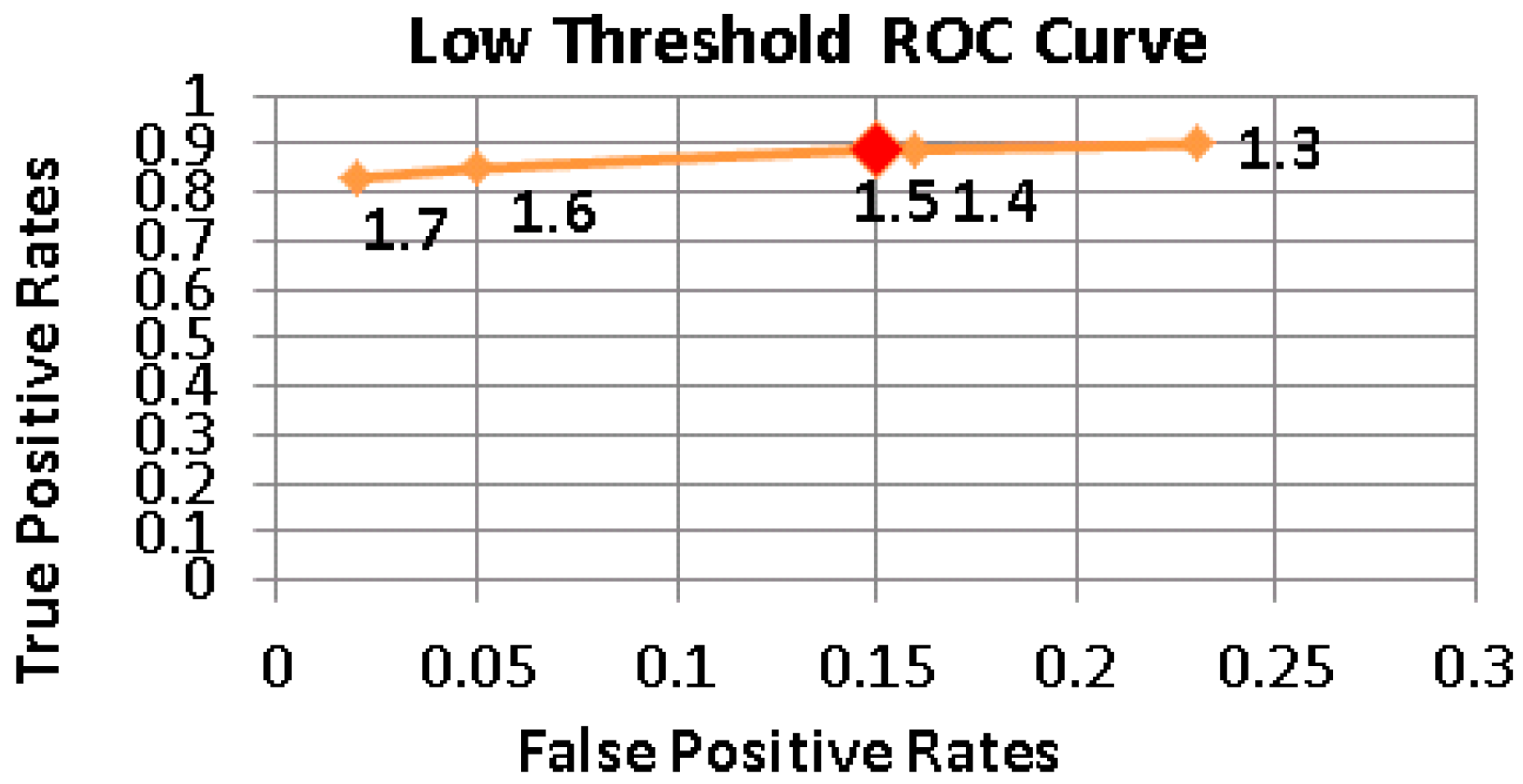

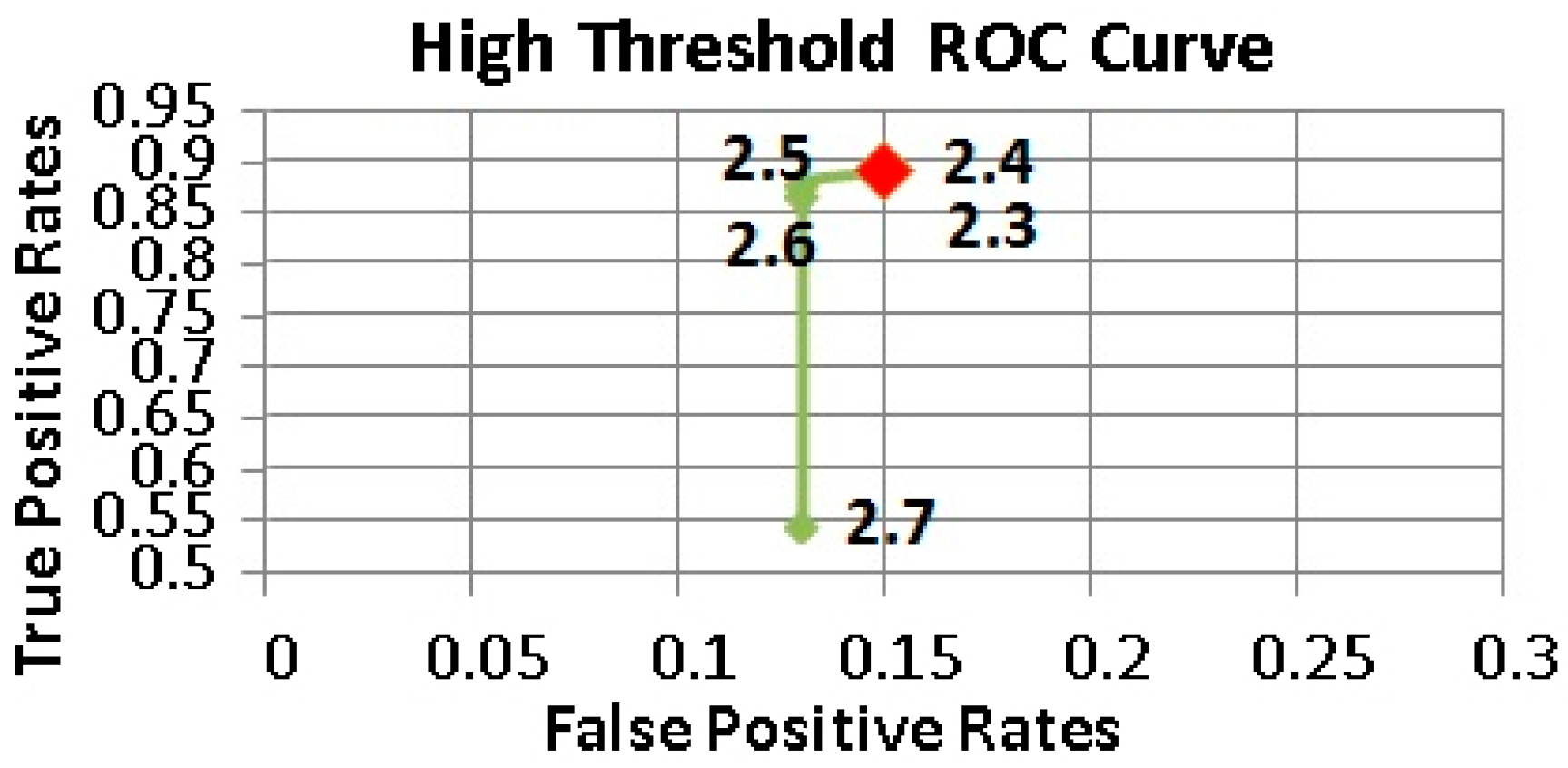

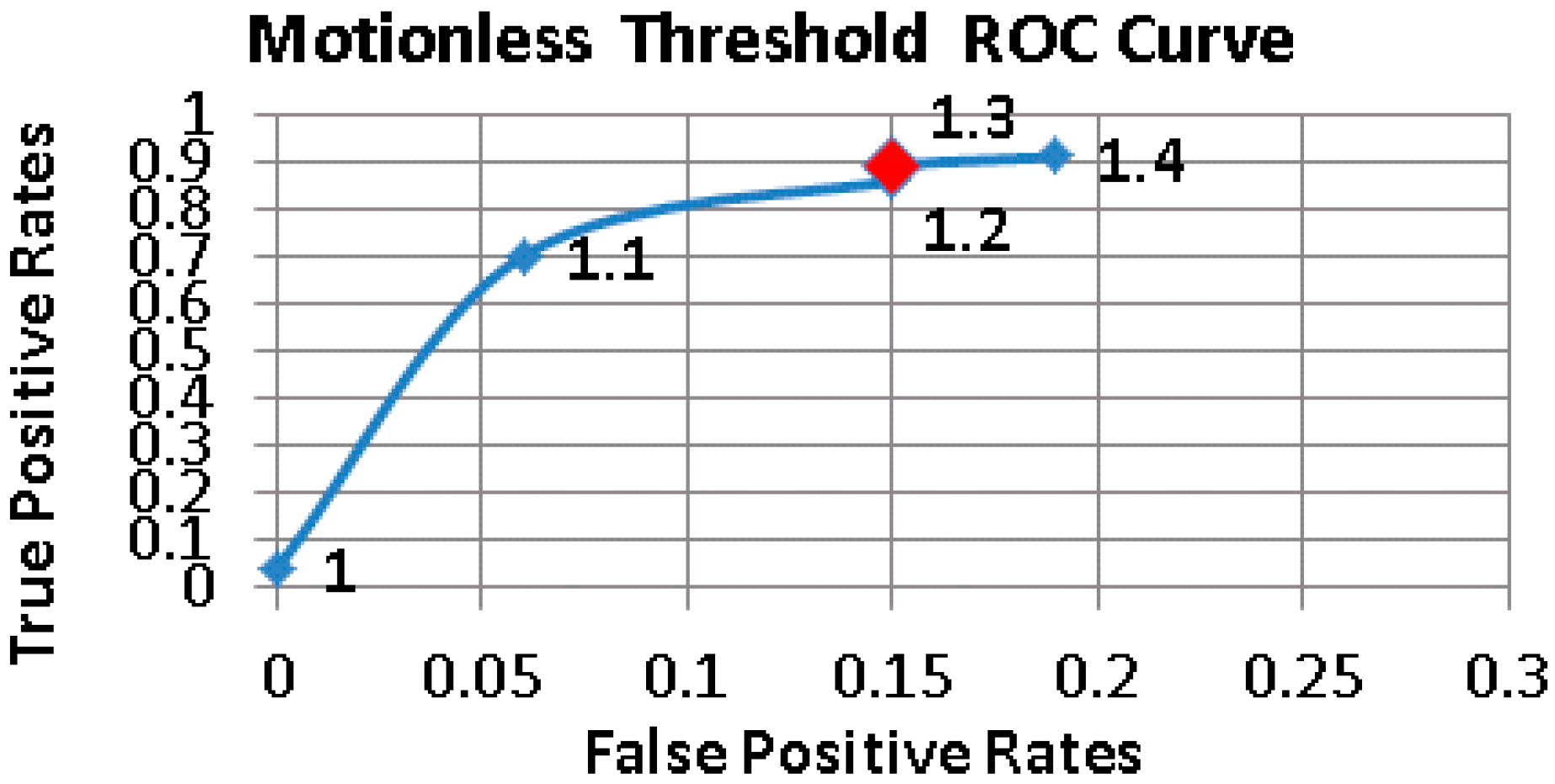

Figure 5, Figure 6 and Figure 7 show the receiver operating characteristic (ROC) curves of …, … and …, respectively. Different combinations of threshold values were examined to find the set producing the minimum number of false positives while maximizing the fall detection rate. The selected threshold values are given in Table 4.

5.2. Fall Detection Performance of uSurvive

Fifteen subjects were involved in the performance tests. Subjects simulated five types of falls: falling while walking, falling while running, falling while standing, falling on the knees and falling laterally. During the test scenarios, the subjects were asked to carry the Galaxy S3 Mini in the front pocket of their trousers. To make a fair comparison on a separate test dataset, a new set of 175 fall events was recorded by the 15 subjects and then analyzed with three approaches: thresholding only, machine learning only and the proposed hybrid method. The results are given in Table 5.

Table 5 clearly shows that the proposed hybrid method outperforms both the thresholding and the machine learning approaches. The hybrid method improved the success rate by up to 4% over the machine learning only approach, which, to the best of our knowledge, is reported as the most successful approach in the current literature. Sensitivity values are slightly lower than expected. This can be attributed to the fact that some subjects did not stay motionless after falling, although they were specifically instructed to do so; consequently, our hybrid approach did not register those cases as fatal falls. After some consideration, we decided to keep them in the dataset.

From the users' standpoint, it is essential for uSurvive to have a low false positive (FP) rate. As we use 3 s windows to determine the event type, approximately 30,000 events are analyzed within a full day, i.e., 24 h. An analysis of 24 h of out-of-lab test results shows that only 0.5% of daily activities were misclassified by the proposed hybrid method. Moreover, the option to cancel generated alerts was adequate to counter these misclassifications. The machine learning only approach, on the other hand, produced 2% misclassifications. The specificity values of the three approaches are given in Table 6.
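The event count quoted above follows from the sampling settings of Section 5.1 (20 Hz, 3 s windows). The minimal Python sketch below assumes non-overlapping windows and discards a trailing partial window; both are assumptions, since the windowing details are not spelled out here, and the helper names are hypothetical.

```python
from typing import Iterator, List, Sequence

SAMPLING_RATE_HZ = 20
WINDOW_SECONDS = 3
SAMPLES_PER_WINDOW = SAMPLING_RATE_HZ * WINDOW_SECONDS  # 60 samples per window
SECONDS_PER_DAY = 24 * 60 * 60                           # 86,400 s


def windows_per_day(window_seconds: int = WINDOW_SECONDS) -> int:
    """Number of non-overlapping windows in 24 h."""
    return SECONDS_PER_DAY // window_seconds


def split_into_windows(samples: Sequence, size: int = SAMPLES_PER_WINDOW) -> Iterator[List]:
    """Yield consecutive non-overlapping windows; a trailing partial window is dropped."""
    for start in range(0, len(samples) - size + 1, size):
        yield list(samples[start:start + size])
```

With these settings, 86,400 s / 3 s = 28,800 windows per day, which matches the figure of approximately 30,000 analyzed events.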

In addition to specificity and sensitivity, the accuracies of the three approaches are compared in Table 7. Accuracy is computed by Equation (5) in order to overcome class imbalance:
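Assuming Equation (5) is the common imbalance-robust measure known as balanced accuracy (an assumption, not confirmed by the text), accuracy is the mean of sensitivity and specificity, so the small fall class and the much larger non-fall class carry equal weight. A minimal Python sketch with hypothetical function names:

```python
def sensitivity(tp: int, fn: int) -> float:
    """True positive rate: detected falls / all falls."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: correctly rejected daily activities / all daily activities."""
    return tn / (tn + fp)


def balanced_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Mean of sensitivity and specificity; unaffected by class proportions."""
    return (sensitivity(tp, fn) + specificity(tn, fp)) / 2


def plain_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Fraction of all events classified correctly; dominated by the majority class."""
    return (tp + tn) / (tp + fn + tn + fp)
```

With hypothetical counts of 42 of 50 falls detected and 298 of 300 daily activities correctly rejected, plain accuracy is 340/350 ≈ 0.971, while balanced accuracy is (0.84 + 0.993) / 2 ≈ 0.917. The balanced form exposes the weaker fall detection that the majority class would otherwise hide.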

We also analyzed the contribution of each tier to the proposed architecture. The daily tests show that the pre-elimination tier eliminated almost 90% of the sampled data, while the double thresholding tier eliminated a further 6% of the sampled data. Hence, further computation on this data was avoided, resulting in energy savings.

In the previous tests, we trained our system with samples acquired while the smartphone was carried in the front pocket of the subject's trousers. To analyze the effects of different smartphone carrying positions and different subject body sizes on the success of the proposed hybrid method, we collected a new dataset consisting of 150 fall events and 900 non-fall events from 10 subjects (four females and six males). The subjects' weights ranged from 50 to 95 kg and their heights from 155 to 185 cm. During the tests, the smartphone was carried in three positions: in the front pocket of the trousers, in the rear pocket of the trousers and in an inner pocket of the jacket. The tests involved five types of falls (backward, sideways, forward, slow-paced and sudden falls while walking, running or standing) and six types of daily activities: walking, running, climbing up, climbing down, sitting down and standing up. Subjects repeated each daily activity five times. The corresponding confusion matrices for each carrying position are given in Table 8. The sensitivity of the system when the smartphone is carried in the front trouser pocket, the rear trouser pocket and the inner jacket pocket is 84%, 80% and 88%, respectively, while the specificity is 99.3% for all three positions. The results indicate that the carrying position does not affect the specificity of the system, whereas carrying the smartphone in the inner jacket pocket improves fall detection, as the higher position of the smartphone on the body produces larger accelerations.

To analyze the effect of the subjects' body size on the performance of the hybrid method, subjects were divided into three weight groups: 50–60, 61–70 and 71–95 kg. The confusion matrices of each group are given in Table 9.

The sensitivity of the system for the three weight groups 50–60, 61–70 and 71–95 kg is 81.6%, 80% and 91.1%, respectively, while the specificity values are 100%, 99.2% and 98.5%. While there is no significant difference between the success rates of the 50–60 kg and 61–70 kg groups, sensitivity increases noticeably for the 71–95 kg group, at the cost of a slight decrease in specificity. This difference can be explained by the greater impact values produced when heavier bodies hit the ground.

The proposed hybrid method was also compared with the thresholding only and the machine learning only approaches using the asymptotic McNemar's test to assess the statistical significance of the results [48]. The confusion matrices of the three approaches are given in Table 10.

The null hypothesis (H0) of the first test states that the machine learning only approach performs better than the proposed hybrid method, whereas the alternative hypothesis (H1) claims the contrary. McNemar's test rejects the null hypothesis at the 5% significance level, with a p-value of 2.3 × 10⁻⁸. Comparing the proposed hybrid method with the thresholding only approach, McNemar's test likewise rejects the null hypothesis that the thresholding only approach performs better than the proposed hybrid method at the 5% significance level, with a p-value of 6.2 × 10⁻³.
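For reference, the asymptotic McNemar statistic depends only on the two discordant counts of a paired comparison: b, the events that one method classifies correctly and the other misclassifies, and c, the reverse. The Python sketch below computes the continuity-corrected statistic (|b − c| − 1)² / (b + c) and its p-value from the chi-square distribution with one degree of freedom, using the identity P(χ²₁ > x) = erfc(√(x/2)). Whether [48] or the experiments above apply the continuity correction is an assumption; the sketch also reports the conventional two-sided p-value, whereas the hypotheses above are stated directionally.

```python
import math
from typing import Tuple


def mcnemar_asymptotic(b: int, c: int, continuity: bool = True) -> Tuple[float, float]:
    """Asymptotic McNemar test on the two discordant counts of a paired comparison.

    b: events method A classifies correctly and method B misclassifies
    c: events method B classifies correctly and method A misclassifies
    Returns (chi-square statistic, two-sided p-value) with 1 degree of freedom.
    """
    if b + c == 0:
        return 0.0, 1.0  # no discordant pairs: no evidence of a difference
    diff = abs(b - c)
    if continuity:
        diff = max(diff - 1, 0)  # Edwards' continuity correction
    stat = diff ** 2 / (b + c)
    # Survival function of chi-square with 1 dof: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value
```

For hypothetical discordant counts b = 10 and c = 2, the statistic is 49/12 ≈ 4.08 and the p-value is about 0.043, just below the 5% level. Concordant pairs (events both methods get right or both get wrong) do not enter the statistic at all.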

5.3. Energy Consumption

Power consumption is one of the most important criteria for evaluating mobile applications. Accordingly, several optimization techniques can be applied to the most energy-consuming components of smartphones [49,50]. Although there is no standard way of monitoring the energy consumption of a mobile application running on a smartphone, there are applications that monitor the energy consumption of both system components and applications. PowerTutor [51] is one such application and, to the best of our knowledge, the most reliable one. Therefore, we used PowerTutor for the energy measurement tests and, to be confident about the obtained results, we conducted all corresponding tests on two different phone models, namely the Galaxy S3 Mini and the Galaxy S3.

Before comparing the energy consumption of the three approaches (thresholding only, machine learning only and the hybrid method), we investigated the impact of feature reduction on the energy consumption of the ML only approach. To this end, uSurvive was configured to run the ML only approach with 43 features and with five features, each for 5, 10 and 30 min. The test results, given in Table 11, show that energy consumption grows linearly with time and that feature reduction provided up to 32% and 50% energy savings on the S3 Mini and S3, respectively.

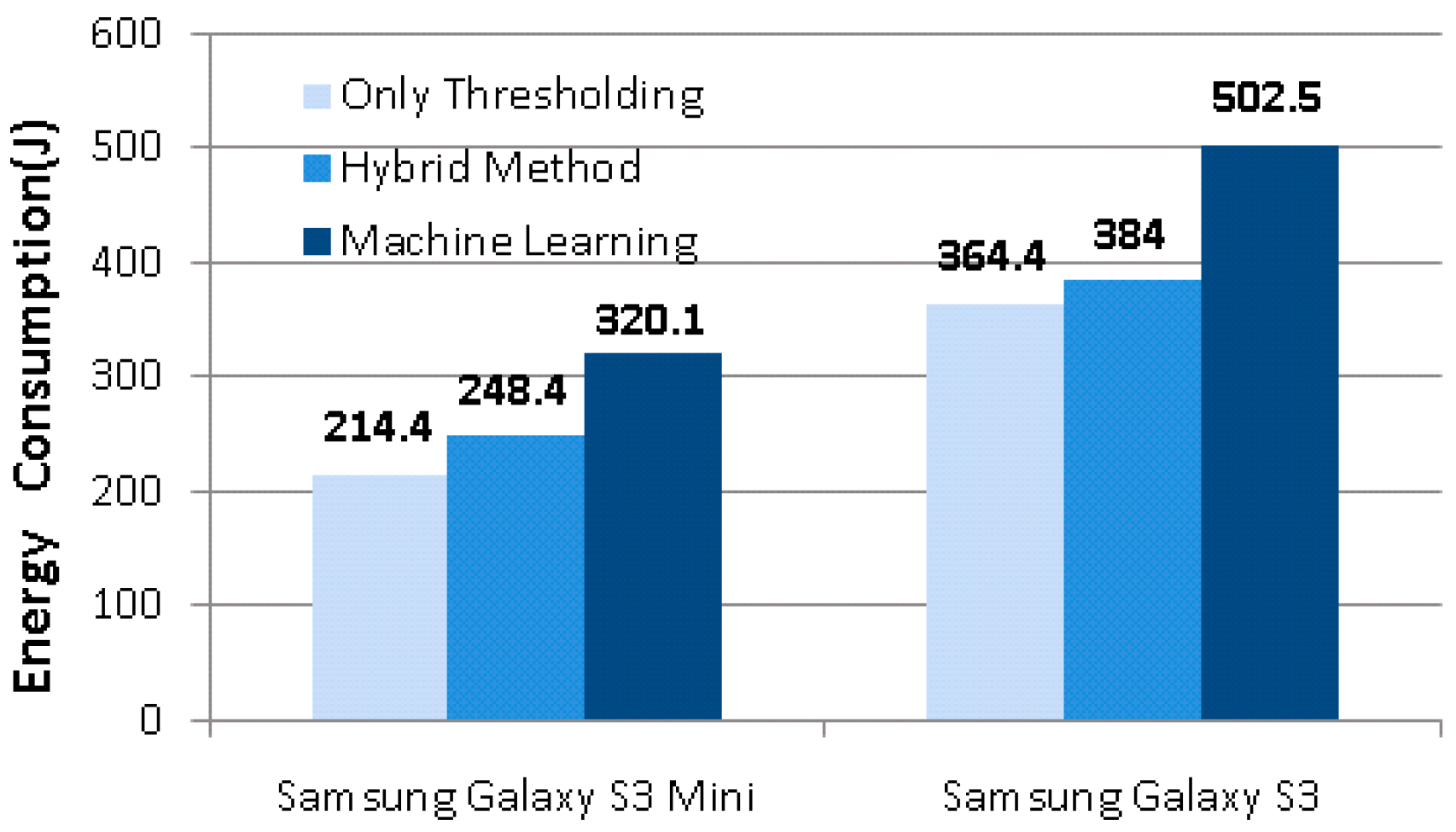

Next, all three modes were tested on both smartphones for 24 h each by a 23-year-old computer engineer affiliated with our lab, who spent a total of six days performing the tests. Each 24 h period began just after midnight, ended at the following midnight, and consisted of 7 h of sleep, 8 h of work and the rest spent on other personal activities. The test results, given in Figure 8, show that almost 25% of energy could be saved by the hybrid method compared with the ML only approach.

We also analyzed the remaining battery power when running the system with and without the hybrid approach. The results show that running uSurvive with the hybrid approach adds only 2% to the battery consumption of daily smartphone usage.

The PE and DT tiers perform thresholding on the difference of accelerometer readings (Equation (1)) and on the signal vector magnitude of all three axes (Equations (2) and (3)), respectively. Since thresholding consists of a single comparison, the computational complexity of both tiers is O(n), where n is the number of inputs; for fall detection n = 3, namely the x-, y- and z-axis acceleration values at a given time t. The test complexity of the C4.5 algorithm, on the other hand, corresponds to the tree depth, which cannot exceed the number of attributes [52]. Since the system is trained with only five features, the depth of the resulting tree gives a reasonable test complexity for a mobile application. However, the cost of the feature extraction steps increases the overall complexity of the ML tier. As shown in Figure 8, the energy consumption of the system directly reflects the complexity of the thresholding only and machine learning only approaches. For the hybrid method, however, the PE tier eliminated almost 90% of the sampled data and the DT tier 6%, leaving the remaining 4% to be classified by the ML tier. This structure makes the energy consumption of the hybrid method very close to that of the thresholding only approach and therefore yields a remarkable energy saving compared with the machine learning only solution.
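To make the tier structure concrete, here is a minimal Python sketch of a PE → DT → ML cascade for one window. It assumes that the PE tier discards a window when no axis changes by more than a threshold between consecutive samples (our reading of Equation (1)), and that the DT tier compares the peak signal vector magnitude √(x² + y² + z²) against a lower and an upper threshold (our reading of Equations (2) and (3)). The threshold values are placeholders, not the tuned values of Table 4, and `ml_classifier` is a hypothetical callable standing in for the C4.5 tier.

```python
import math
from typing import Callable, Sequence, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration in m/s^2

# Placeholder thresholds for illustration only; the tuned values are in Table 4.
PE_THRESHOLD = 0.5  # max per-axis change between consecutive samples for "motionless"
DT_LOWER = 12.0     # peak SVM below this: too weak to be a fall
DT_UPPER = 30.0     # peak SVM above this: severe impact, report a fall directly


def signal_vector_magnitude(sample: Sample) -> float:
    """sqrt(x^2 + y^2 + z^2) for one accelerometer sample."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def is_motionless(window: Sequence[Sample]) -> bool:
    """PE tier: no axis changes by more than PE_THRESHOLD between consecutive samples."""
    return all(
        abs(curr[axis] - prev[axis]) <= PE_THRESHOLD
        for prev, curr in zip(window, window[1:])
        for axis in range(3)
    )


def classify_window(window: Sequence[Sample],
                    ml_classifier: Callable[[Sequence[Sample]], str]) -> str:
    """Run one window through the PE -> DT -> ML cascade and return a label."""
    if is_motionless(window):
        return "non-fall"           # PE tier discards the window
    peak = max(signal_vector_magnitude(s) for s in window)
    if peak < DT_LOWER:
        return "non-fall"           # DT tier: clearly not a fall
    if peak > DT_UPPER:
        return "fall"               # DT tier: severe fall
    return ml_classifier(window)    # ML tier decides ambiguous windows
```

Only windows that survive both thresholding tiers reach `ml_classifier`, so the feature extraction and tree traversal of the ML tier are paid for a small fraction of windows.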

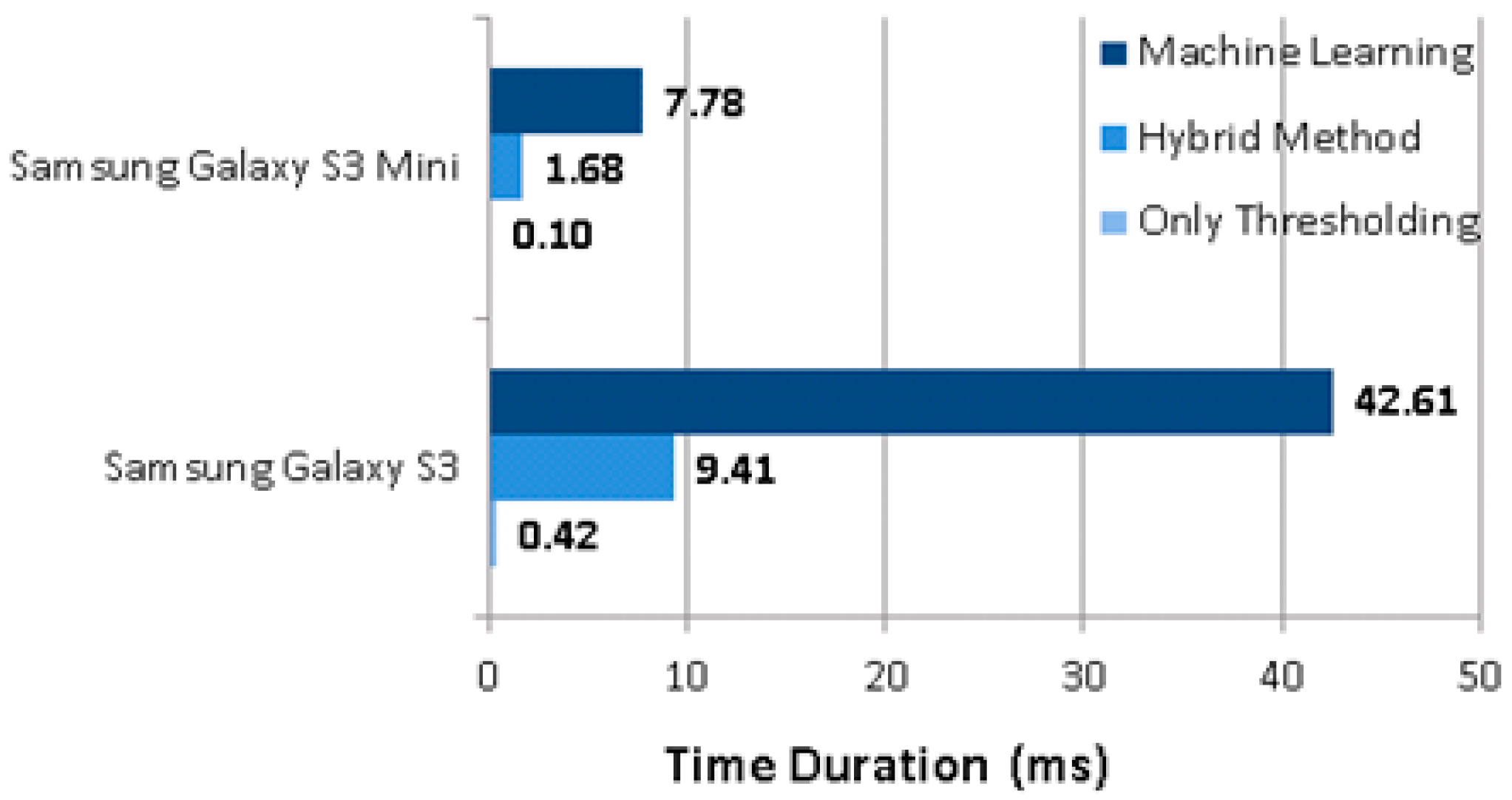

In addition to energy consumption, we also measured the computation time required by each approach. The computation times for the 100 fall events were averaged for each approach and are presented in Figure 9. The results show that the hybrid method runs approximately 4.5 times faster than the ML only approach on both smartphones.

To emphasize the importance of saving energy in fall detection, we also compared the most energy-consuming components during the tests. With the ML only approach, only three other components besides the fall detector stand out: the kernel, the system and PowerTutor, as shown in Figure 10.

It is evident that on both phones, the ML only fall detector consumes nearly as much energy as the Android system itself. Therefore, the 25% savings achieved by our proposed hybrid method are crucial for extending the usage time of the smartphone.

6. Discussion

In this study, a novel hybrid model that reduces the power consumption of the system by combining thresholding with machine learning methods is introduced. Owing to its experimental success and low test complexity, the C4.5 algorithm is used in the ML tier of the proposed method. However, the presented hybrid model offers the flexibility to use various machine learning methods in the ML tier: either a conventional machine learning algorithm with high computational complexity and accuracy, or a lightweight machine learning approach that does not require any feature extraction, batched data or training [53], can be employed. According to the test results, 96% of the data collected on a regular weekday are resolved by the PE and DT tiers in the proposed approach, and the remaining 4% are sent to the ML tier for classification. Since thresholding makes a decision with a single comparison on raw accelerometer data and 96% of the data are evaluated by the PE and DT tiers, the 24 h tests yield very similar, low energy consumption values for the thresholding only approach and the hybrid method, as shown in Figure 8. These results show that the computational cost of the machine learning algorithm selected for the ML tier has a negligible effect on the total power consumption of the system. Thus, a lightweight machine learning algorithm such as the Online Perceptron or a fully featured classification algorithm with high test complexity such as k-Nearest Neighbors (k-NN) would barely change the energy consumption of the proposed hybrid model.
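The weighting behind this argument can be checked with a back-of-the-envelope cost model. The sketch below assumes hypothetical per-window processing costs `c_pe`, `c_dt` and `c_ml` in arbitrary units and uses the tier shares reported above: every window passes through PE, about 10% reach DT and about 4% reach ML. It ignores the fixed cost of sampling the accelerometer, which the 24 h measurements include, so it illustrates only how the ML tier's cost is scaled down, not absolute energy figures.

```python
def expected_cost_per_window(c_pe: float, c_dt: float, c_ml: float,
                             dt_share: float = 0.10, ml_share: float = 0.04) -> float:
    """Average processing cost per window for the PE -> DT -> ML cascade.

    Every window pays for the PE tier; dt_share of windows also reach the
    DT tier, and ml_share of windows also reach the ML tier.
    """
    return c_pe + dt_share * c_dt + ml_share * c_ml
```

With hypothetical costs c_pe = 1, c_dt = 2 and c_ml = 20, the hybrid costs 1 + 0.2 + 0.8 = 2.0 units per window, against 20 units when the ML tier runs on every window. A more expensive classifier with cost k · c_ml adds only 0.04 · k · c_ml per window, which is why the choice of ML algorithm matters far less inside the cascade than on its own.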

Fully featured machine learning methods such as SVM, Multilayer Perceptron and k-NN are proven methods for fall detection [20]. Unfortunately, these methods consume a lot of energy, since all acquired samples are processed and feature extraction is required. For this reason, using a lightweight machine learning approach instead of the proposed hybrid model was considered. One of the lightweight algorithms with the lowest query complexity is the Online Single Layer Perceptron, which classifies with a linear combiner: inputs are multiplied by their respective weights and summed, followed by a bias correction and a comparison. Therefore, the query complexity of the Online Single Layer Perceptron is O(n), where n is the number of features, which is not lower than the computational complexity of thresholding solutions [54]. Moreover, lightweight machine learning algorithms are generally successful only in distinguishing linearly separable classes [55]. For both reasons, we present a system that exploits the proven success of fully featured machine learning approaches in the fall detection task while eliminating their disadvantage in terms of energy consumption by employing the PE and DT tiers.
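The linear combiner described above can be sketched in a few lines of Python. This is a textbook single-layer perceptron with the classic online update rule, not necessarily the variant of [54]; the class name, learning rate and zero initialization are illustrative choices. `predict` performs n multiply-adds, one bias correction and one comparison, which is the O(n) query cost discussed above.

```python
from typing import List, Sequence


class OnlinePerceptron:
    """Single-layer perceptron with a linear combiner and online updates."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights: List[float] = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: Sequence[float]) -> int:
        # Linear combiner: weighted sum, bias correction, then one comparison.
        activation = sum(w * x for w, x in zip(self.weights, features)) + self.bias
        return 1 if activation > 0 else 0

    def update(self, features: Sequence[float], label: int) -> None:
        # Classic perceptron rule: adjust weights only when the prediction is wrong.
        error = label - self.predict(features)
        if error != 0:
            self.weights = [w + self.learning_rate * error * x
                            for w, x in zip(self.weights, features)]
            self.bias += self.learning_rate * error
```

On a linearly separable toy problem such as logical AND, repeated passes of `update` converge to a separating hyperplane; on classes that are not linearly separable, no weight vector exists that classifies every example correctly, which is the limitation noted in [55].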

Studies that propose multiphase approaches [23,51,56] to detect fall events use wearable sensors for data acquisition. We believe that our proposed system can reach a wide audience, since it runs on smartphones, which are easily accessible to anyone. Its energy-efficient architecture could also increase the demand for our mobile application. To the best of our knowledge, only a few studies compare thresholding and machine learning methods [20,23], and these studies do not take energy consumption into consideration.

In the current study, we developed a user-independent hybrid model. The results were obtained using a validation set collected from 15 subjects. Configuring the thresholding and machine learning tiers with subject-specific parameters is expected to increase the success rates. As expected, we observed in our experiments that subjects with larger body sizes produce greater impact values when hitting the ground; thus, falls of subjects with larger body sizes are classified correctly more often than falls of low-to-average weight people. However, across all experiments, our test results show that body size does not significantly change the fall detection rates of the proposed hybrid approach. Besides, subject-specific tuning would be an intrusive approach that leads to a user-dependent system. To overcome this problem and increase accuracy, we believe that deep learning solutions could achieve superior fall detection rates without the need for subject-specific parameters.

In our tests, we also noticed that the manner of falling could affect the fall detection rates. In particular, very slow falls of low-weight people might be missed. However, the risk of injury from this type of fall is quite low. We believe that increasing the number of such falls in the training set would help the recognition of these events.

Another important point is that there are only a few publicly available datasets [51,57]. One of the most comprehensive databases including accelerometer, gyroscope and orientation measurements of fall events and daily activities is the MobiFall dataset [58]. In [59], researchers exploit an autoregressive model for feature extraction and classify two types of fall events and three types of daily activities using a Support Vector Machine and a Neural Network. Of the two classification techniques, the Neural Network provides an overall classification accuracy of 96%, whereas the Support Vector Machine achieves 91.7%. Another study was presented by Vallabh et al. [60], who implemented a filter-rank-based system for feature extraction and compared five classification methods: Naive Bayes, k-NN, Neural Network, Support Vector Machine and the Least Squares Method. The highest accuracy, 87.5%, was achieved by the k-NN algorithm. To compare the classification accuracy of the proposed hybrid approach with studies that use the MobiFall dataset, a total of 200 events from MobiFall (100 fall events and 100 daily activities) were classified by the proposed hybrid approach. The average accuracy rates for fall events and daily activities are 92% and 90%, respectively. Based on these success rates, we claim that our hybrid method outperforms the latest studies using the MobiFall dataset while remaining energy efficient.

Our next goal is to publish the collected datasets, which in total consist of 425 fall events covering five fall types and three ways of carrying the mobile phone, and 1200 daily activities, collected from 25 subjects with a reasonable range of ages and body sizes. We also plan to extend our database with subjects older than 50 to improve sensitivity. Another improvement concerns the position/orientation problem. During real-life tests, mobile phones were carried in the front/rear trouser pocket and in a jacket pocket of the subject. Our test results show that the position of the mobile phone on the user's body slightly affects the detection of fall events. We observed that carrying the smartphone on the upper part of the body has a positive effect on the detection rate, as the fall takes longer than for the lower part of the body. Since changes in the orientation of the smartphone affect the evaluation, we believe that accounting for other ways of carrying the phone, such as in a backpack or handbag, or training the system with orientation-independent features will increase the usability of the application.

We also believe that in the near future smartphones may be replaced by smartwatches, especially for tracking people's activities. Since we occasionally leave our phones on a table, desk, couch or bed, or in a car or bag, smartwatches are perhaps the most appropriate candidates for analyzing people's daily activities. Therefore, as future work, we plan to implement this hybrid approach on smartwatches.