Hierarchical Stereo Matching in Two-Scale Space for Cyber-Physical System

Abstract

1. Introduction

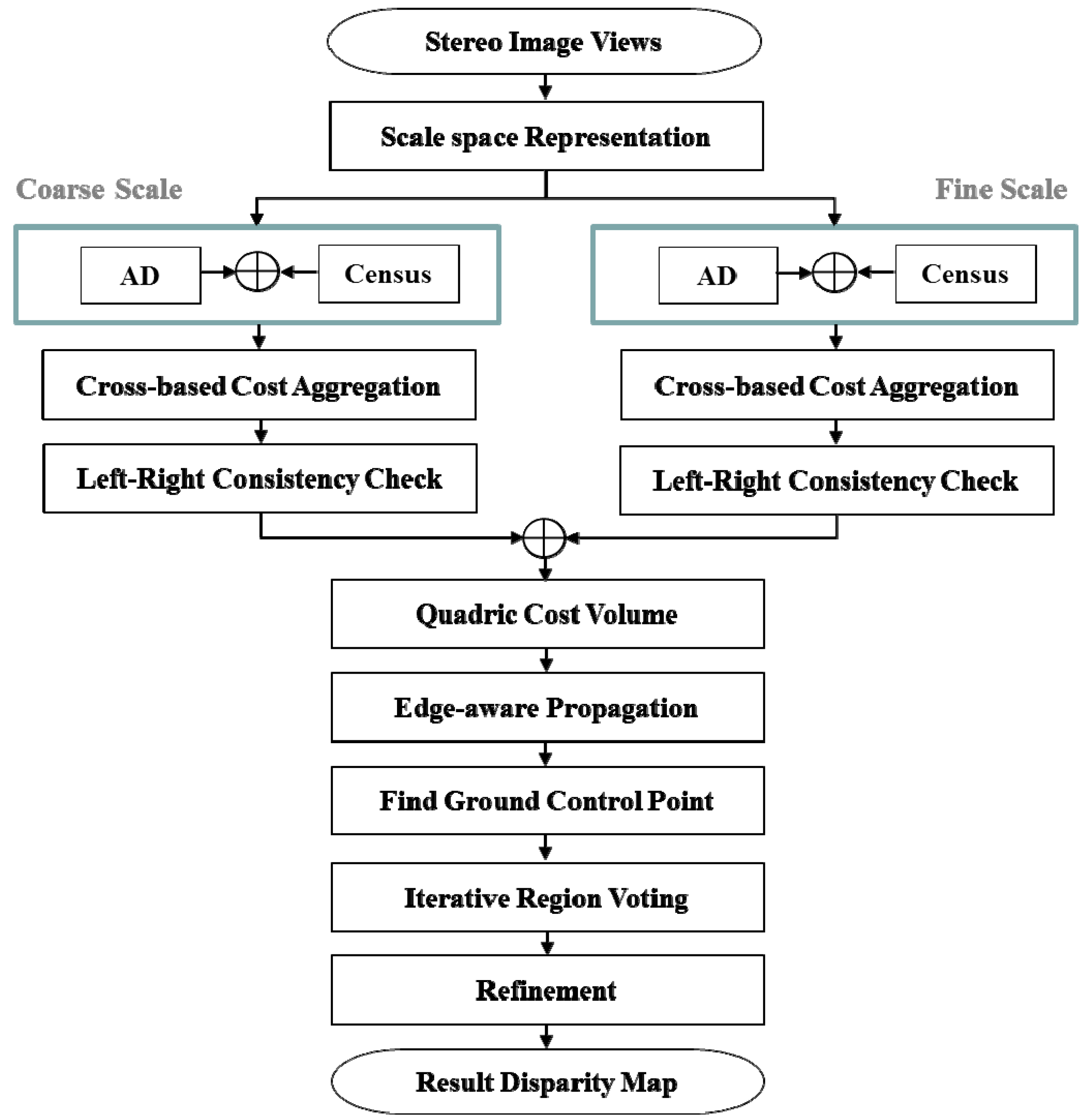

2. Proposed Method

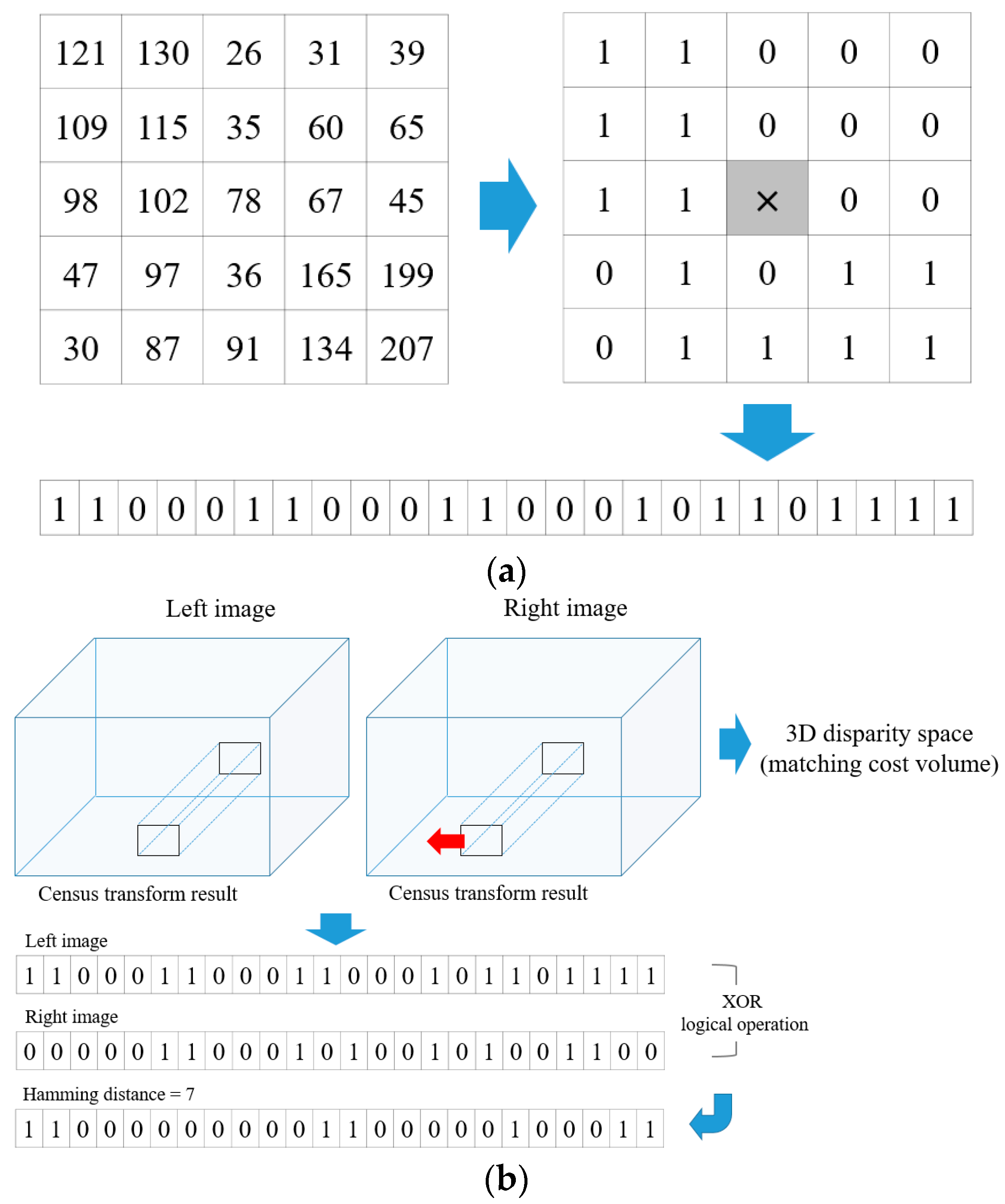

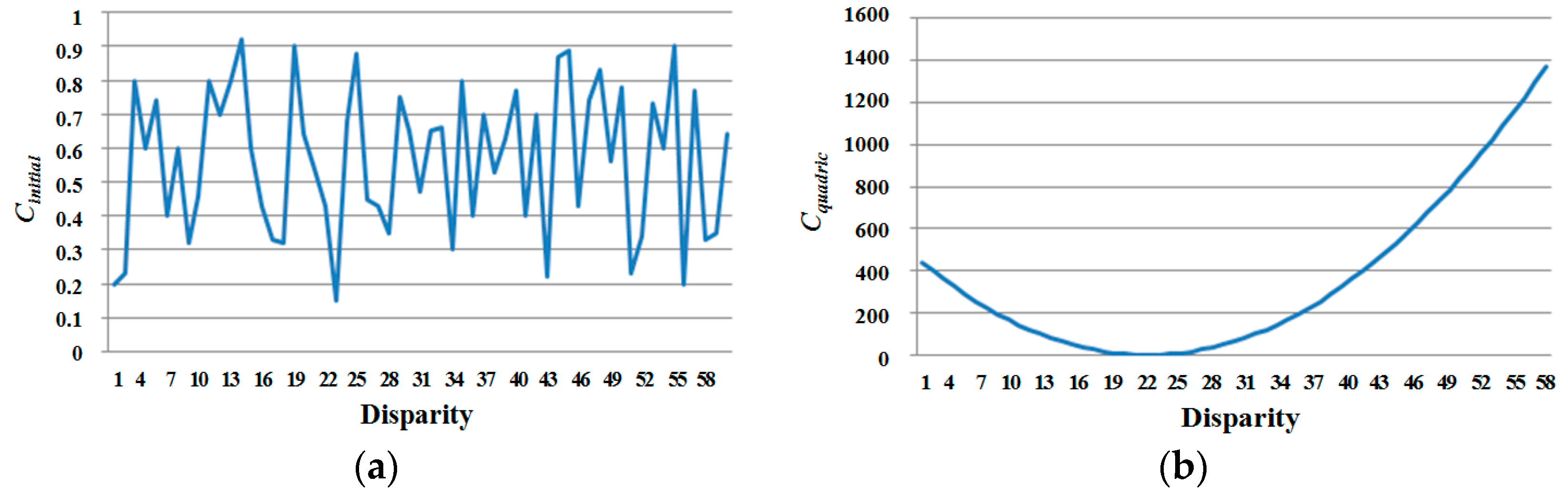

2.1. Initial Matching Cost Computing

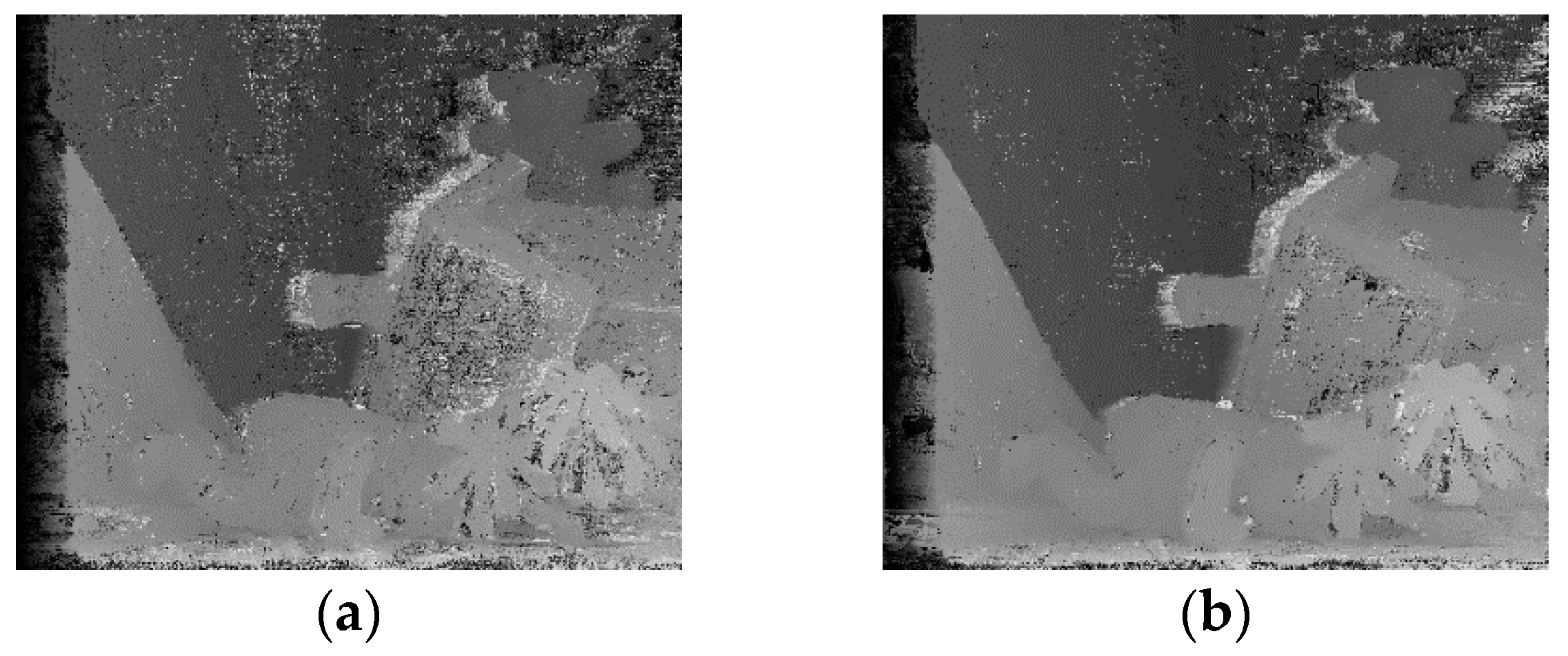

2.2. Matching Cost in Scale Space

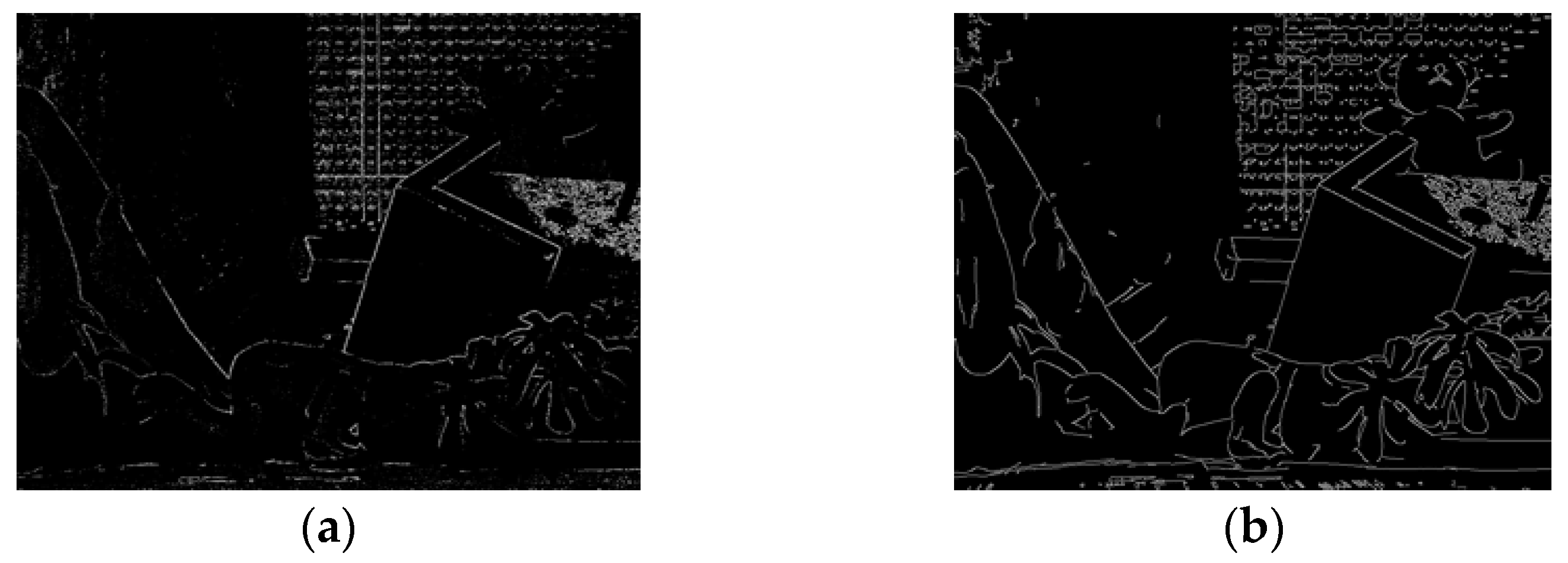

2.3. Disparity Refinement

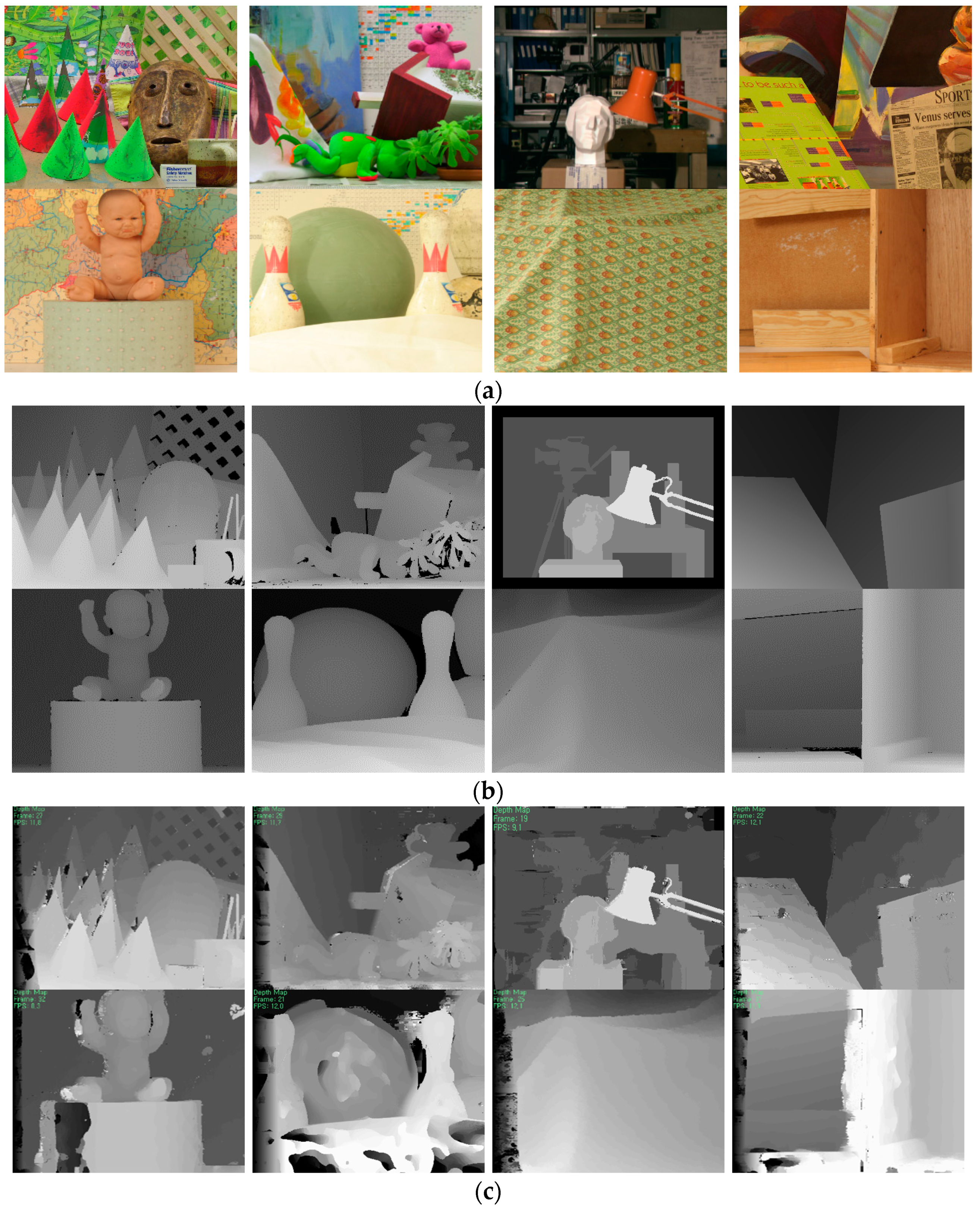

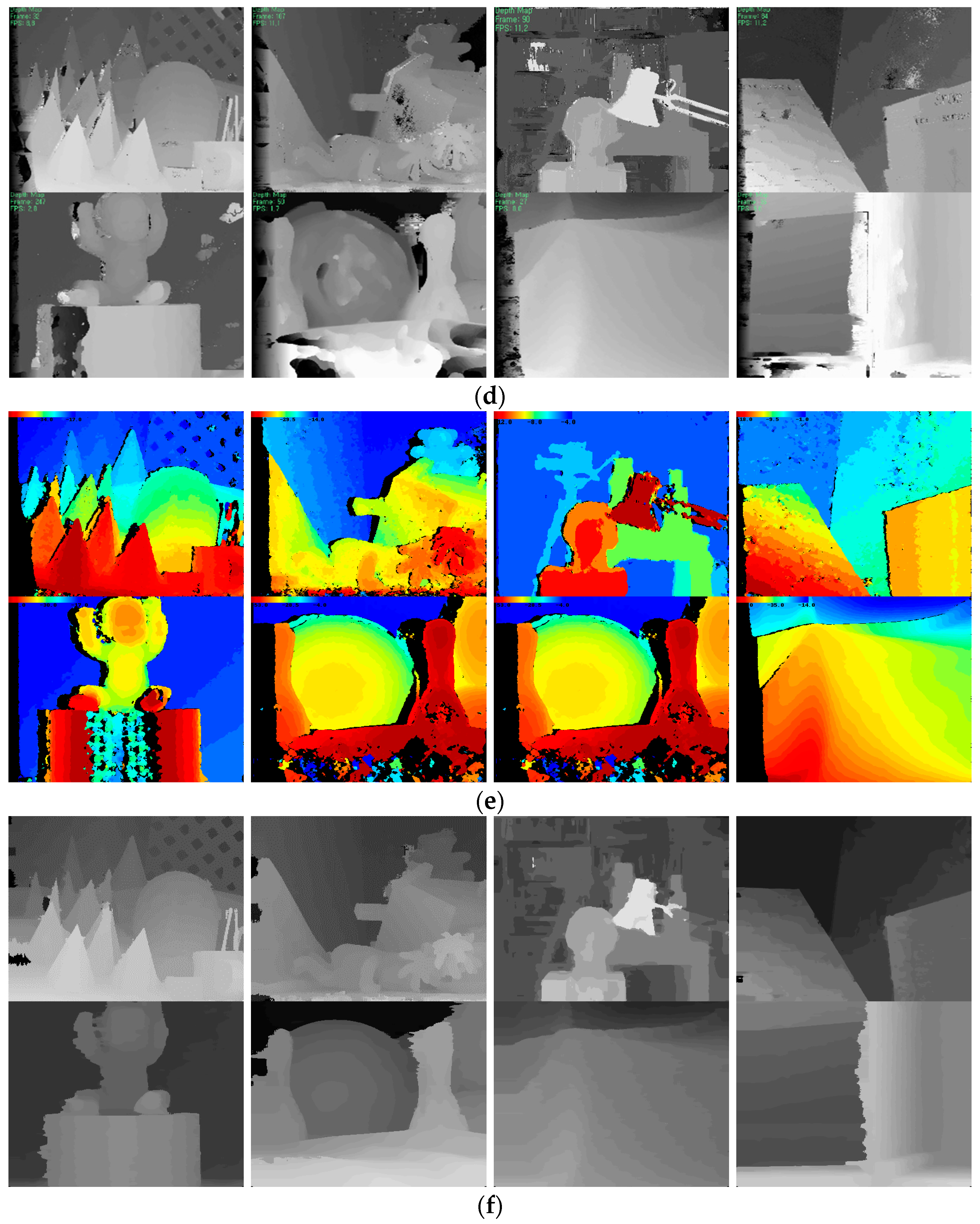

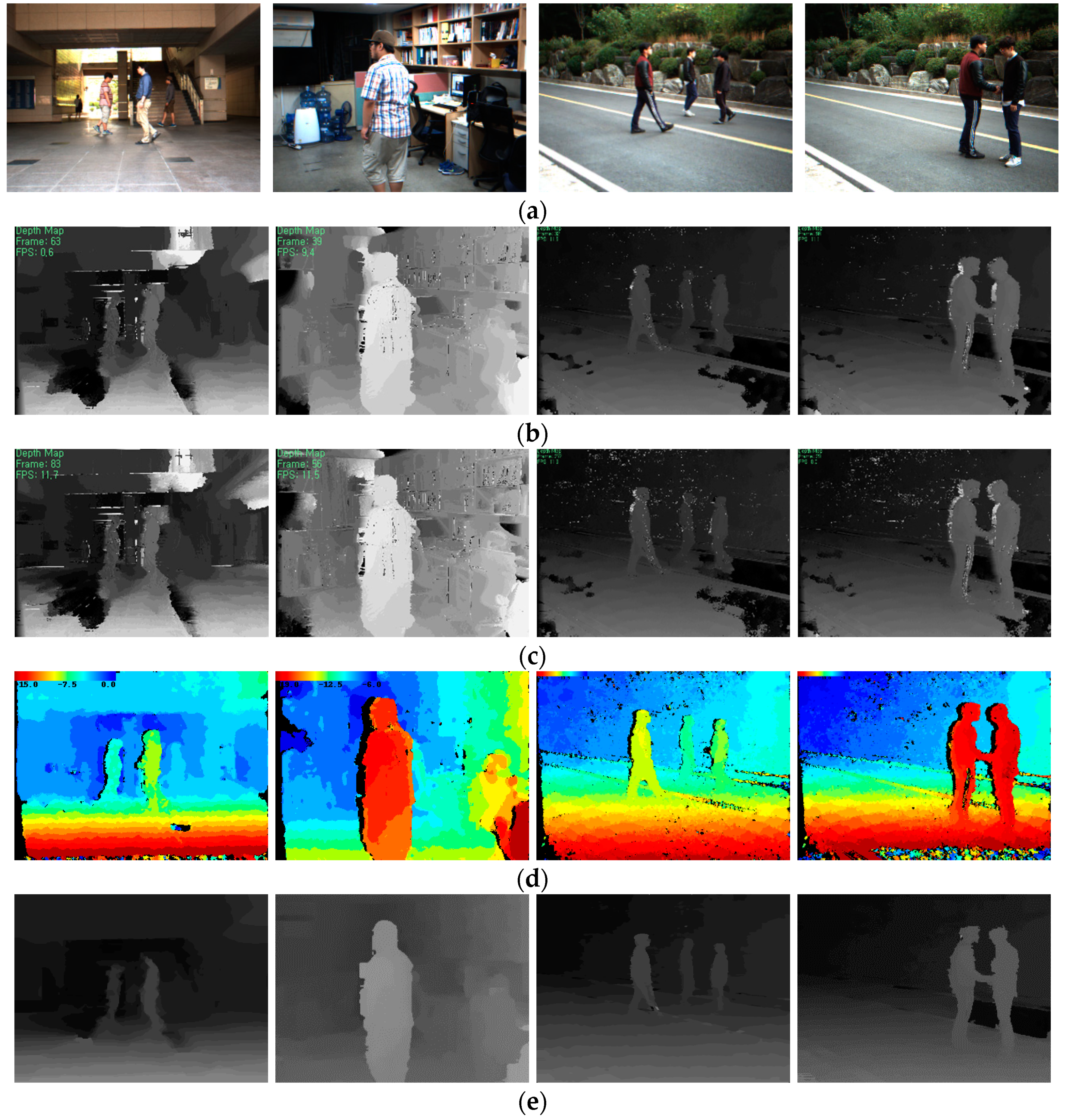

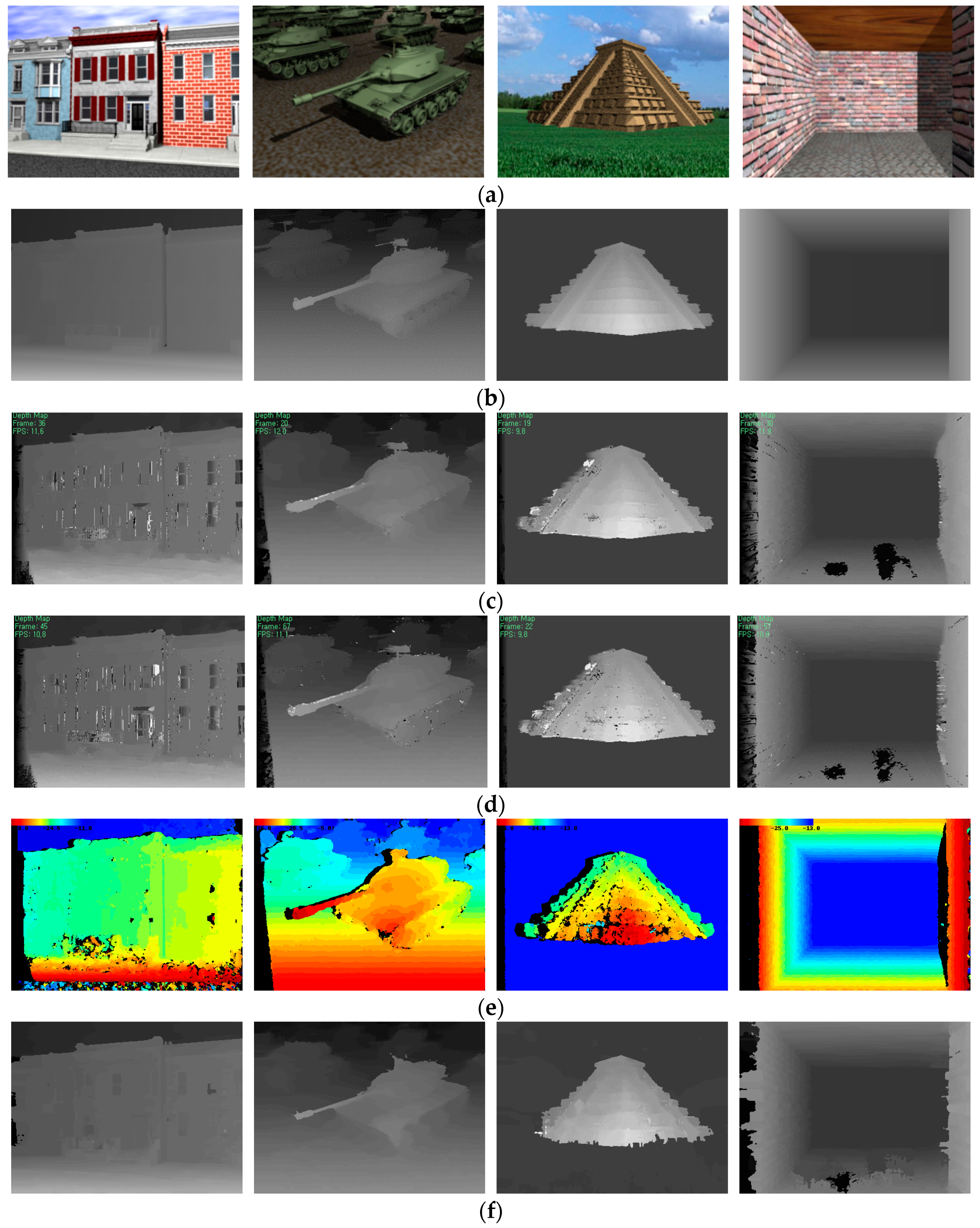

3. Experimental Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Khaitan, S.K.; McCalley, J.D. Design techniques and applications of cyber physical systems: A survey. IEEE Syst. J. 2015, 9, 350–365.

2. Pérez, L.; Rodríguez, Í.; Rodríguez, N.; Usamentiaga, R.; García, D.F. Robot guidance using machine vision techniques in industrial environments: A comparative review. Sensors 2016, 16, 335.

3. Chang, J.; Jeong, J.; Hwang, D. Real-time hybrid stereo vision system for HD resolution disparity map. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014; pp. 643–650.

4. Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

5. Van Meerbergen, G.; Vergauwen, M.; Pollefeys, M.; Van Gool, L. A hierarchical symmetric stereo algorithm using dynamic programming. Int. J. Comput. Vis. 2002, 47, 275–285.

6. Wang, L.; Liao, M.; Gong, M.; Yang, R.; Nistér, D. High quality real-time stereo using adaptive cost aggregation and dynamic programming. In Proceedings of the International Symposium on 3D Data Processing, Visualization and Transmission, Chapel Hill, NC, USA, 14–16 June 2006; pp. 798–805.

7. Yang, Q.; Wan, L.; Yang, R.; Stewénius, H.; Nistér, D. Stereo matching with color-weighted correlation, hierarchical belief propagation and occlusion handling. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 492–504.

8. Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

9. Geiger, A.; Roser, M.; Urtasun, R. Efficient large-scale stereo matching. Lect. Notes Comput. Sci. 2011, 6492, 25–38.

10. Sinha, S.N.; Scharstein, D.; Szeliski, R. Efficient high-resolution stereo matching using local plane sweeps. In Proceedings of the Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1582–1589.

11. Zhao, Y.; Taubin, G. Real-time stereo on GPGPU using progressive multi-resolution adaptive windows. J. Image Vis. Comput. 2011, 29, 420–432.

12. Jen, Y.; Dunn, E.; Georgel, P.; Frahm, J. Adaptive scale selection for hierarchical stereo. In Proceedings of the British Machine Vision Conference, Dundee, UK, 29 August–2 September 2011; pp. 95.1–95.10.

13. Zhang, K.; Fang, Y.; Min, D.; Sun, L.; Yang, S.; Yan, S.; Tian, Q. Cross-scale cost aggregation for stereo matching. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1590–1597.

14. Yang, R.; Pollefeys, M. Multi-resolution real-time stereo on commodity graphics hardware. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; pp. 211–218.

15. Sah, S.; Jotwani, N. Stereo Matching Using Multi-resolution Images on CUDA. Int. J. Comput. Appl. 2012, 56, 47–55.

16. Mei, X.; Sun, X.; Zhou, M.; Jiao, S.; Wan, H.; Zhang, X. On building an accurate stereo matching system on graphics hardware. In Proceedings of the IEEE International Conference on Computer Vision Workshops on GPUs for Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 467–474.

17. Zhang, K.; Jiangbo, L.; Lafruit, G. Cross-based local stereo matching using orthogonal integral images. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 1073–1079.

18. Marr, D.; Hildreth, E. Theory of edge detection. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1980, 207, 215–217.

19. Sun, X.; Mei, X.; Jiao, S.; Zhou, M.; Liu, Z.; Wang, H. Real-time local stereo via edge-aware disparity propagation. Pattern Recognit. Lett. 2014, 49, 201–206.

20. Kanade, T.; Okutomi, M. A stereo matching algorithm with an adaptive window: Theory and experiment. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 920–931.

21. Yoon, K.; Kweon, I. Adaptive support-weight approach for correspondence search. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 650–656.

22. Middlebury Stereo Vision. Available online: http://vision.middlebury.edu/stereo/ (accessed on 16 December 2015).

23. Richardt, C.; Orr, D.; Davies, I.; Criminisi, A.; Dodgson, A. Real-time spatiotemporal stereo matching using the dual-cross-bilateral grid. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 6311–6316.

24. Facciolo, G.; Franchis, C.; Meinhardt, E. MGM: A significantly more global matching for stereovision. In Proceedings of the British Machine Vision Conference, Swansea, UK, 7–10 September 2015; pp. 90.1–90.12.

25. Felzenszwalb, P.; Huttenlocher, D. Efficient belief propagation for early vision. Int. J. Comput. Vis. 2006, 70, 41–54.

26. Kolmogorov, V.; Zabih, R. Computing visual correspondence with occlusions using graph cuts. In Proceedings of the International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; pp. 508–515.

27. Cech, J.; Sara, R. Efficient sampling of disparity space for fast and accurate matching. In Proceedings of the Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

28. Kostková, J.; Sára, R. Stratified dense matching for stereopsis in complex scenes. In Proceedings of the British Machine Vision Conference, Norwich, UK, 8–11 September 2003; pp. 339–348.

| Methods (non-occluded pixels) | Cones, error > 1 | Teddy, error > 1 | Baby3, error > 1 | Art, error > 1 | Lamp2, error > 1 | Cones, error > 2 | Teddy, error > 2 | Baby3, error > 2 | Art, error > 2 | Lamp2, error > 2 |
|---|---|---|---|---|---|---|---|---|---|---|
| Felzenszwalb [25] | 15.2 | 18.7 | 13.0 | 23.3 | 32.0 | 7.8 | 11.4 | 7.0 | 16.5 | 26.0 |
| Kolmogorov [26] | 8.2 | 16.5 | 26.2 | 30.3 | 65.7 | 4.1 | 8.1 | 19.0 | 21.0 | 60.7 |
| Cech [27] | 7.2 | 15.8 | 17.4 | 18.8 | 36.7 | 4.4 | 10.2 | 9.7 | 11.2 | 27.1 |
| Kostková [28] | 7.2 | 13.5 | 14.2 | 17.9 | 31.5 | 5.3 | 10.1 | 8.2 | 13.0 | 26.7 |
| Geiger [9] | 5.0 | 11.5 | 10.8 | 13.3 | 17.5 | 2.7 | 7.3 | 4.5 | 8.7 | 10.4 |
| Proposed method (Canny) | 4.7 | 8.5 | 4.9 | 10.8 | 7.9 | 1.6 | 4.3 | 2.1 | 8.0 | 4.0 |
| Proposed method (DOG) | 4.8 | 8.7 | 5.2 | 10.8 | 8.0 | 1.7 | 4.2 | 2.3 | 8.1 | 4.1 |
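The table's column groups ("Error > 1", "Error > 2" over non-occluded pixels) read like the bad-pixel rate commonly used in Middlebury-style evaluation. The sketch below is only an illustration under that assumption: it treats each entry as the percentage of non-occluded pixels whose absolute disparity error exceeds the threshold. The function name `bad_pixel_rate`, its parameters, and the toy data are hypothetical and do not come from the article.

```python
def bad_pixel_rate(estimated, ground_truth, non_occluded, threshold=1.0):
    """Percentage of non-occluded pixels with |estimate - truth| > threshold.

    Assumed metric definition (not taken from the article). All three
    inputs are 2D lists of the same shape; non_occluded holds booleans.
    """
    evaluated = 0
    bad = 0
    for est_row, gt_row, mask_row in zip(estimated, ground_truth, non_occluded):
        for est, gt, visible in zip(est_row, gt_row, mask_row):
            if not visible:
                continue  # occluded pixels are excluded from the score
            evaluated += 1
            if abs(est - gt) > threshold:
                bad += 1
    if evaluated == 0:
        raise ValueError("the non-occlusion mask selects no pixels")
    return 100.0 * bad / evaluated


# Toy 2x3 disparity maps (made-up values, for illustration only).
estimated = [[10.0, 11.5, 7.0], [3.0, 8.0, 20.0]]
ground_truth = [[10.0, 10.0, 7.5], [6.0, 8.0, 5.0]]
non_occluded = [[True, True, True], [True, True, False]]

rate_gt1 = bad_pixel_rate(estimated, ground_truth, non_occluded, threshold=1.0)
rate_gt2 = bad_pixel_rate(estimated, ground_truth, non_occluded, threshold=2.0)
print(rate_gt1, rate_gt2)
```

In the toy data, the masked-out pixel carries the largest error but does not affect either score, which is the point of restricting the evaluation to non-occluded pixels.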

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Choi, E.; Lee, S.; Hong, H. Hierarchical Stereo Matching in Two-Scale Space for Cyber-Physical System. Sensors 2017, 17, 1680. https://doi.org/10.3390/s17071680
