Dynamic Gesture Recognition with a Terahertz Radar Based on Range Profile Sequences and Doppler Signatures

Abstract

1. Introduction

2. Radar System and Multi-Modal Signals

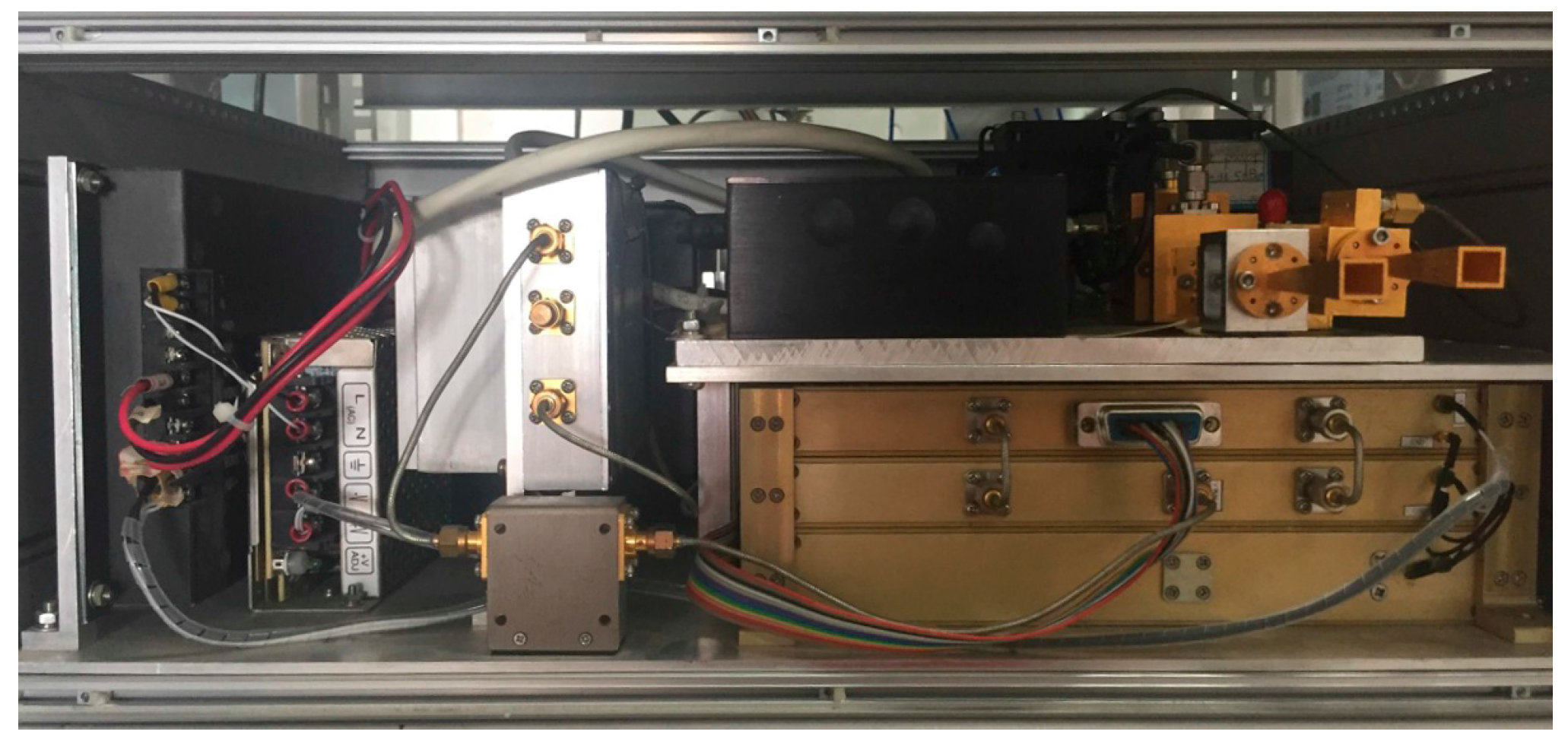

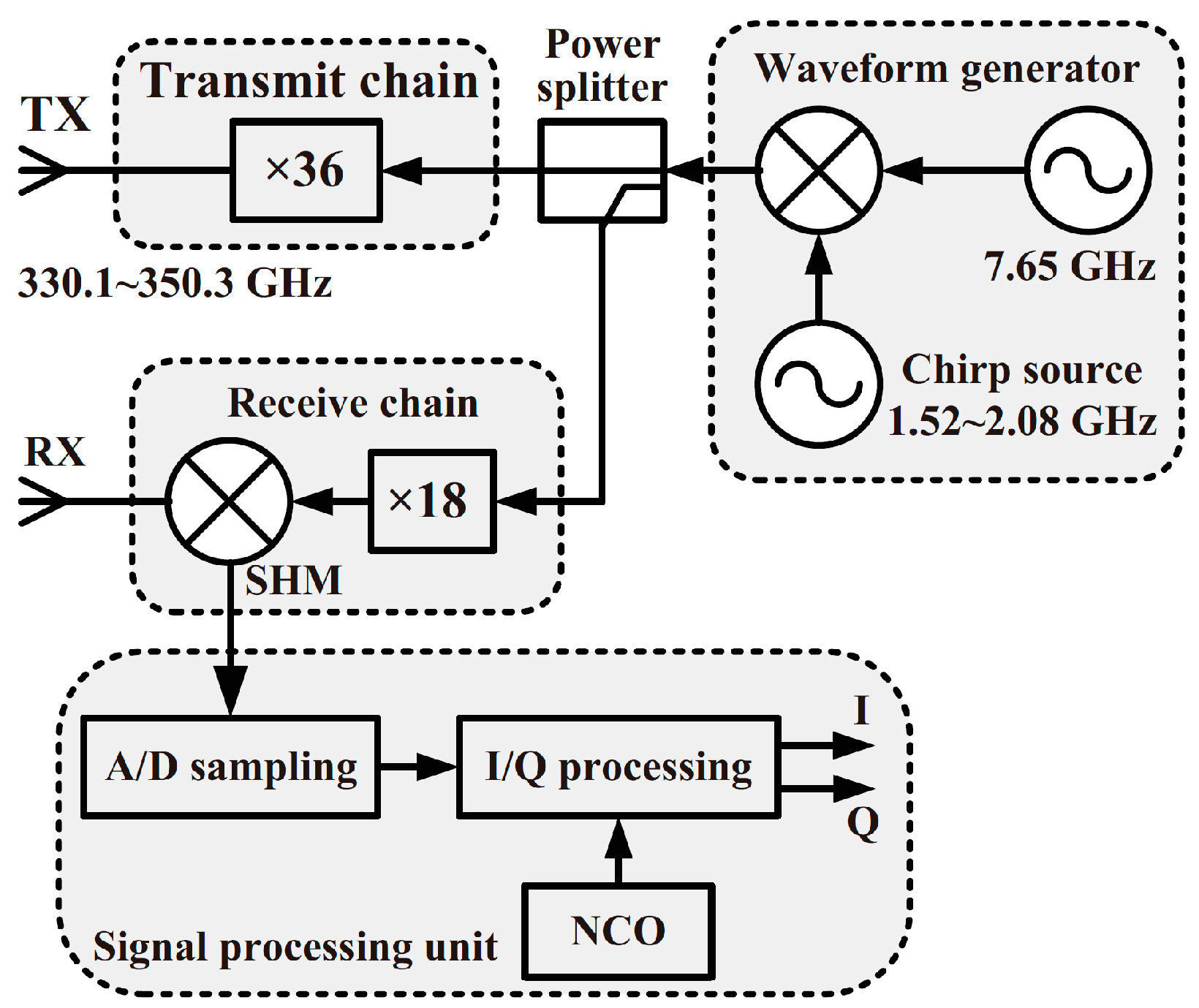

2.1. Radar System

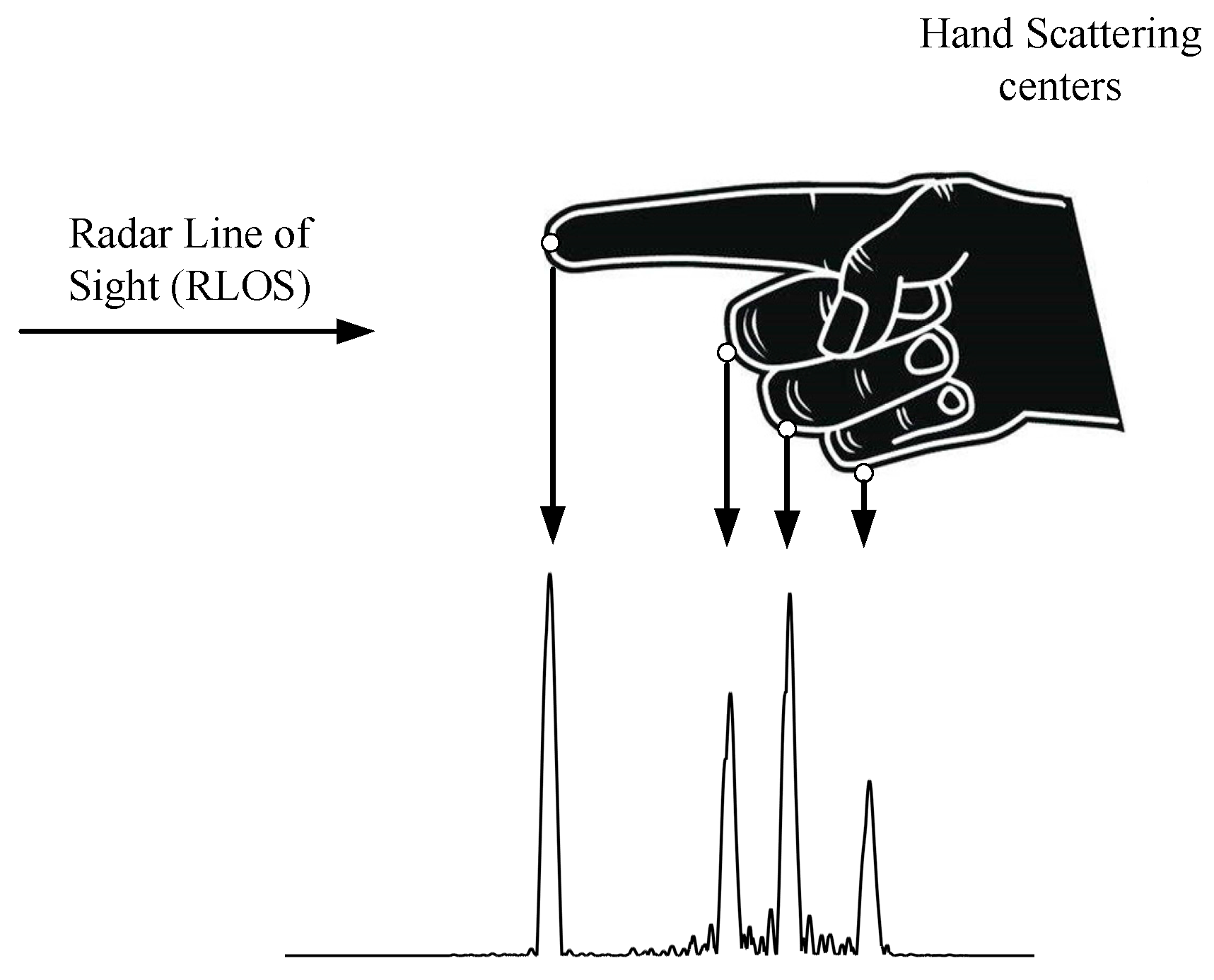

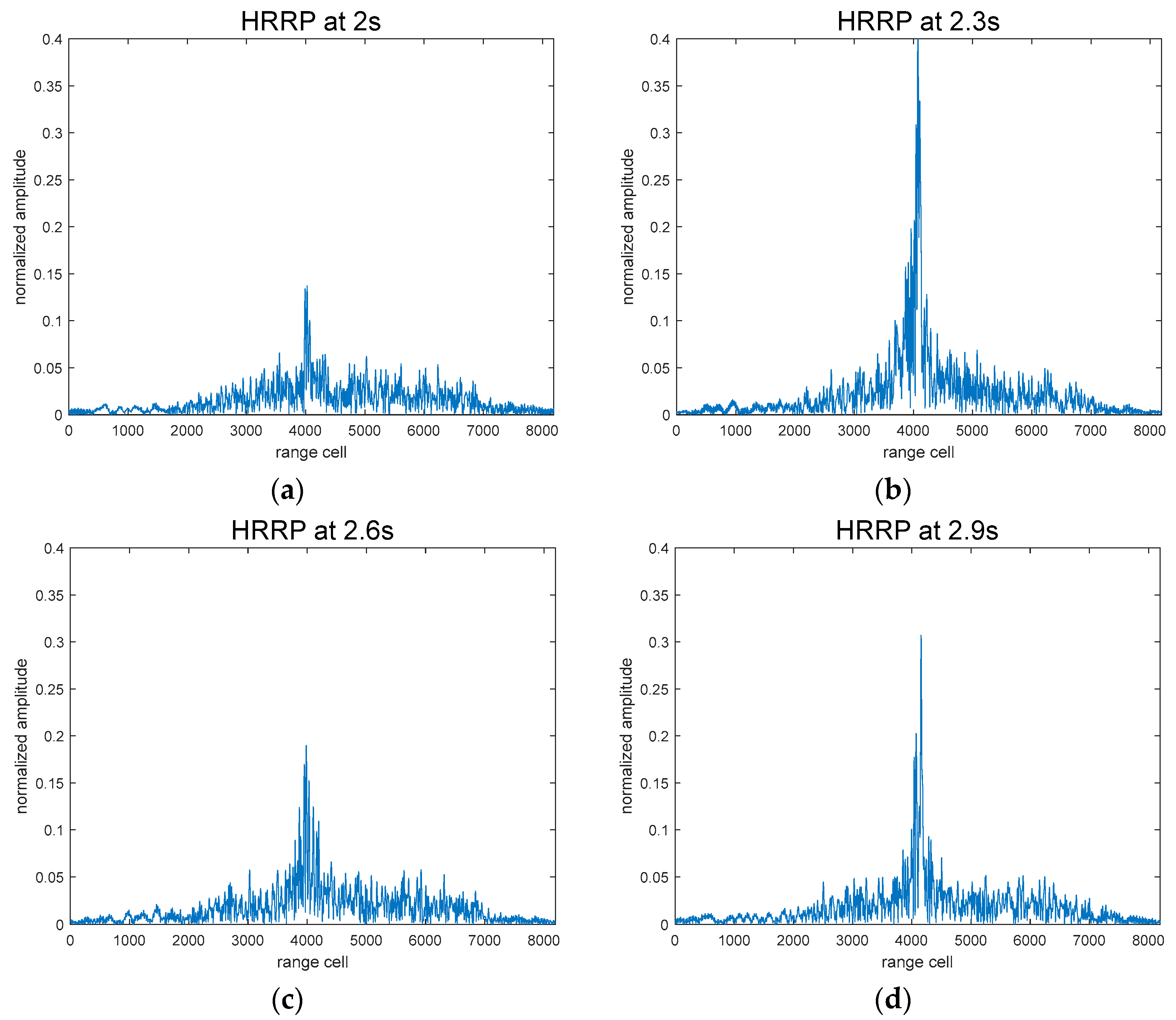

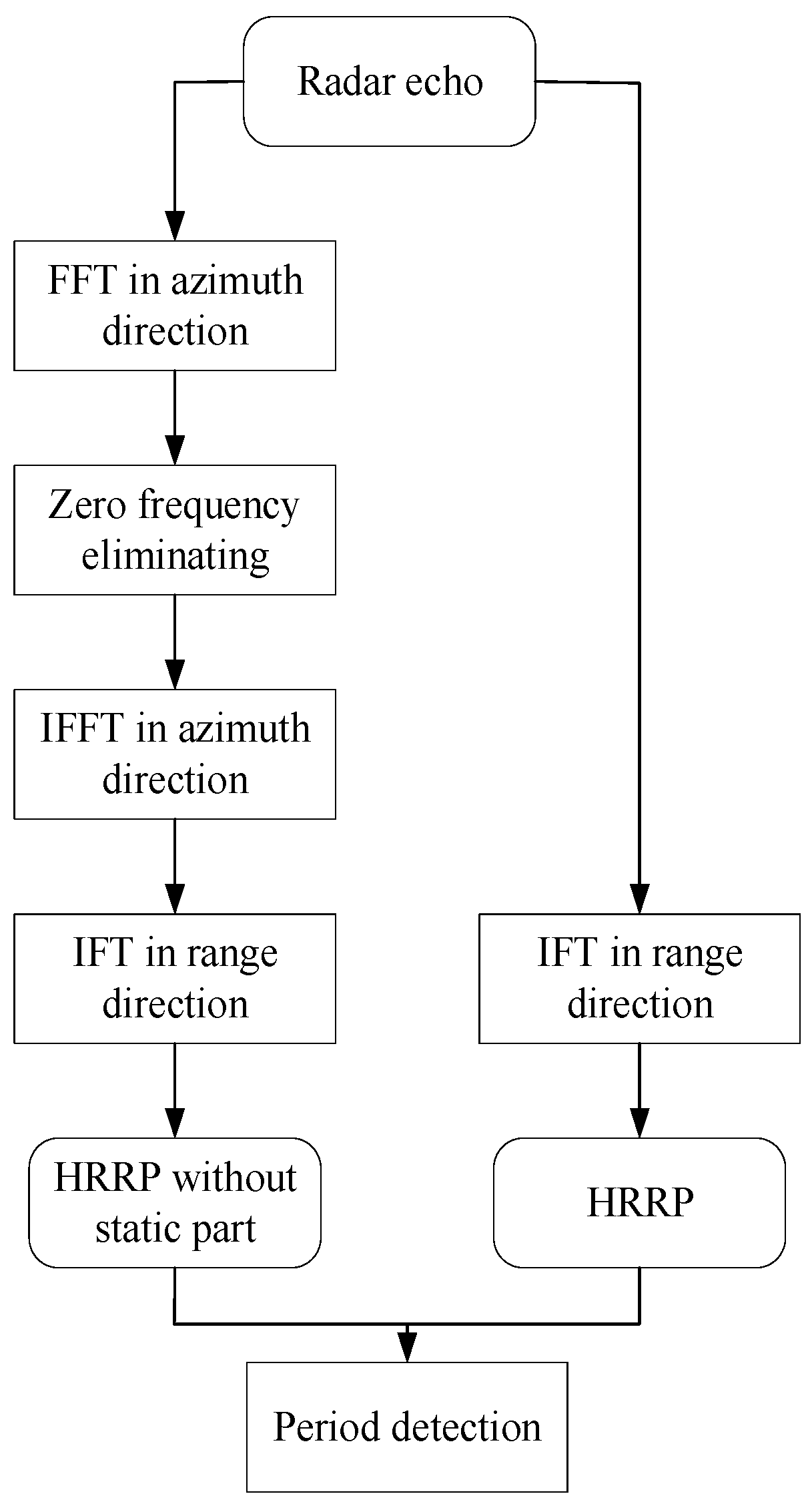

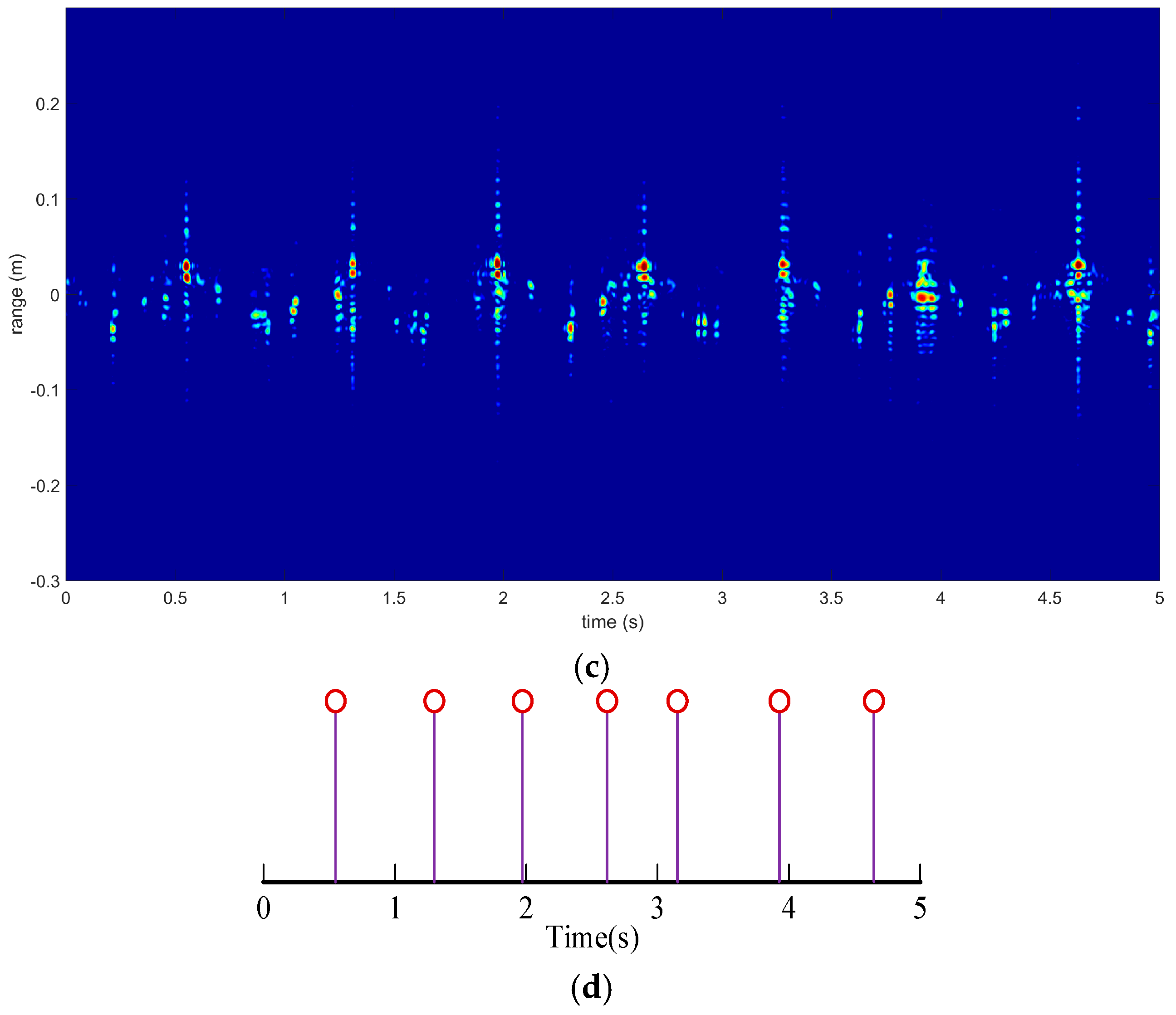

2.2. HRRP Sequences

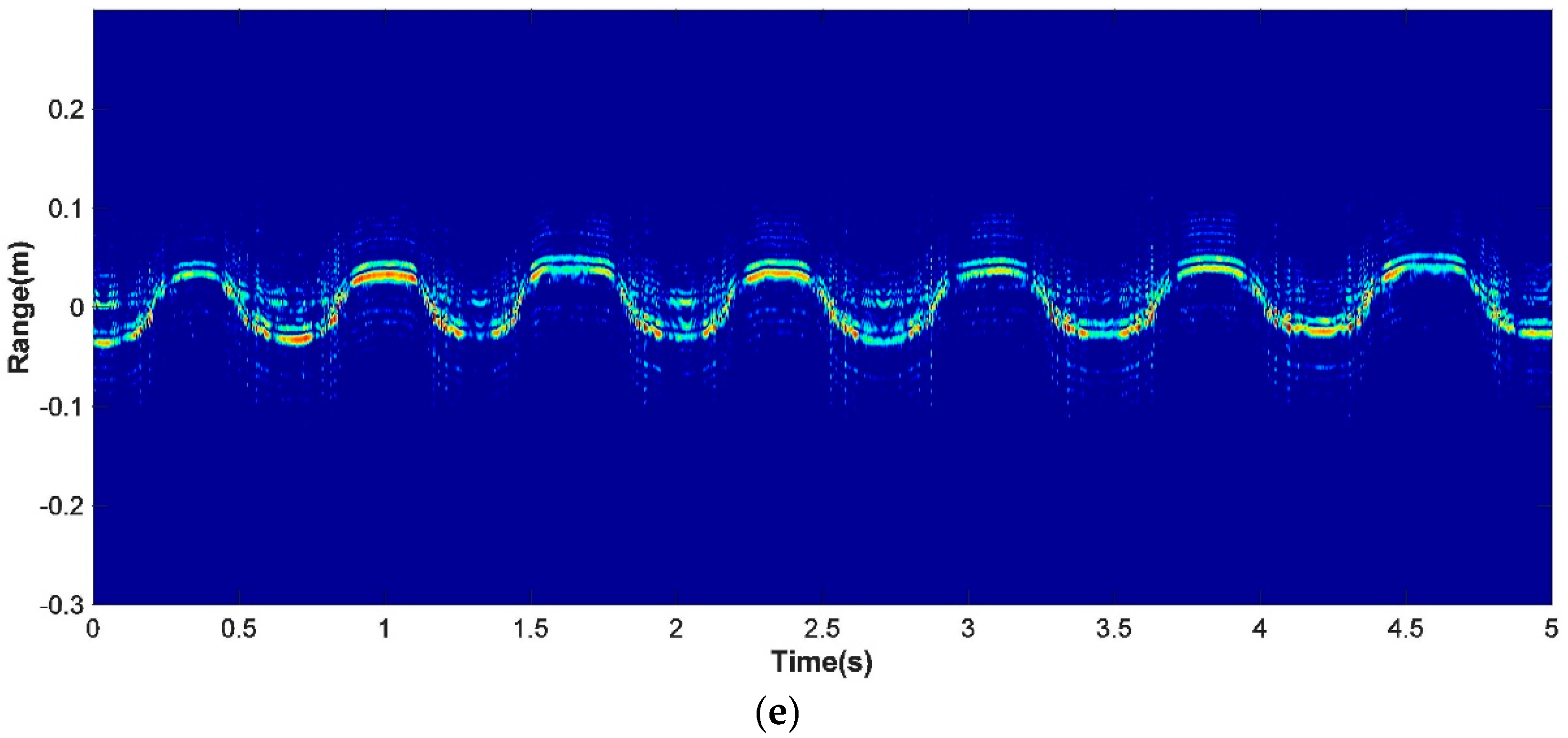

2.3. Doppler Signatures

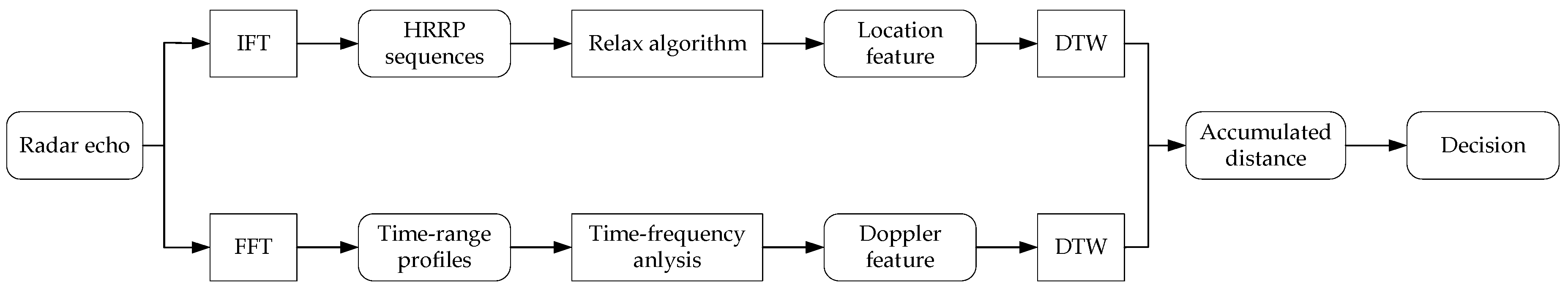

3. Gesture Recognition Scheme

3.1. Feature Extraction of HRRP Sequences

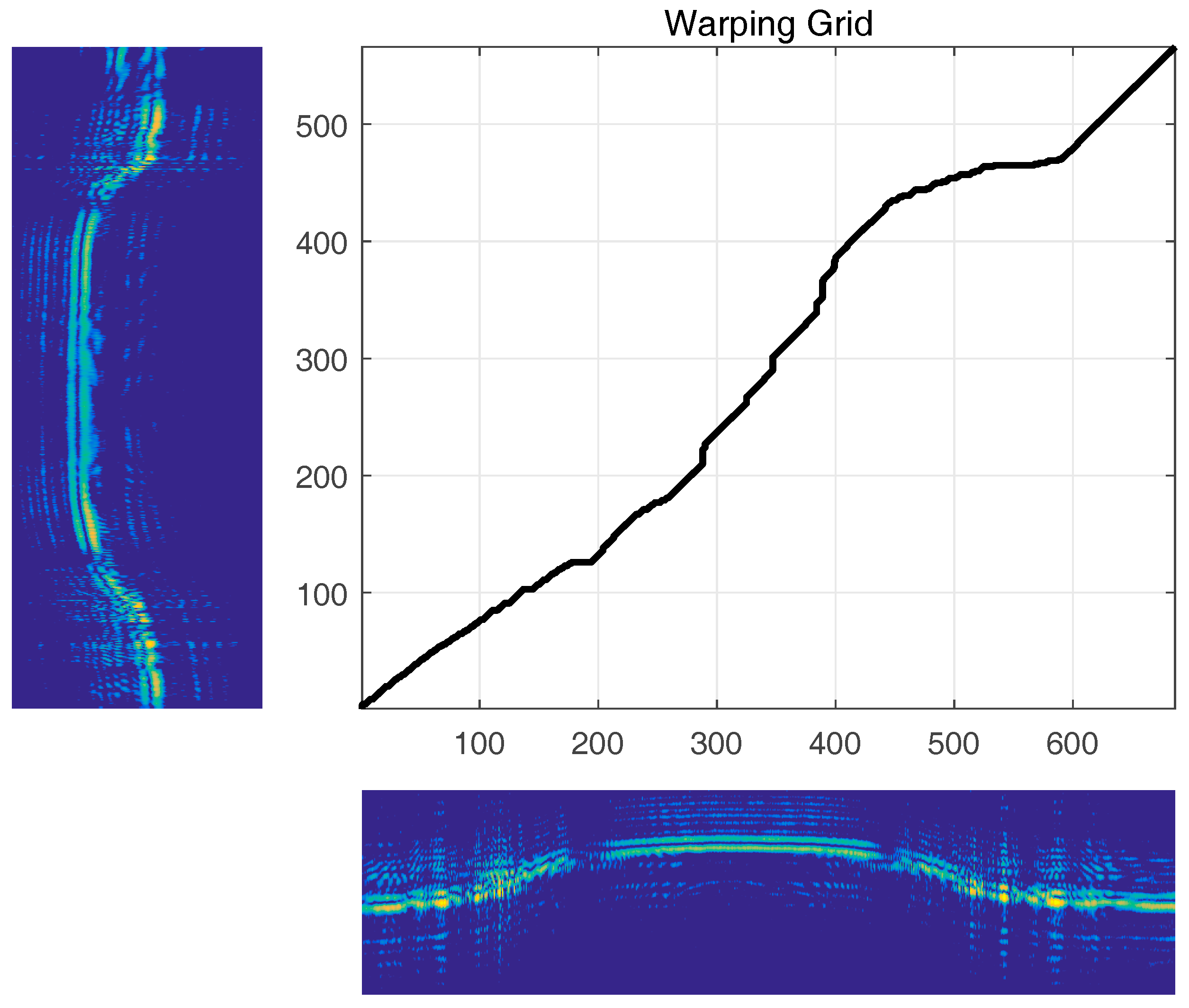

3.2. Classification Method

3.3. Recognition Scheme Overview

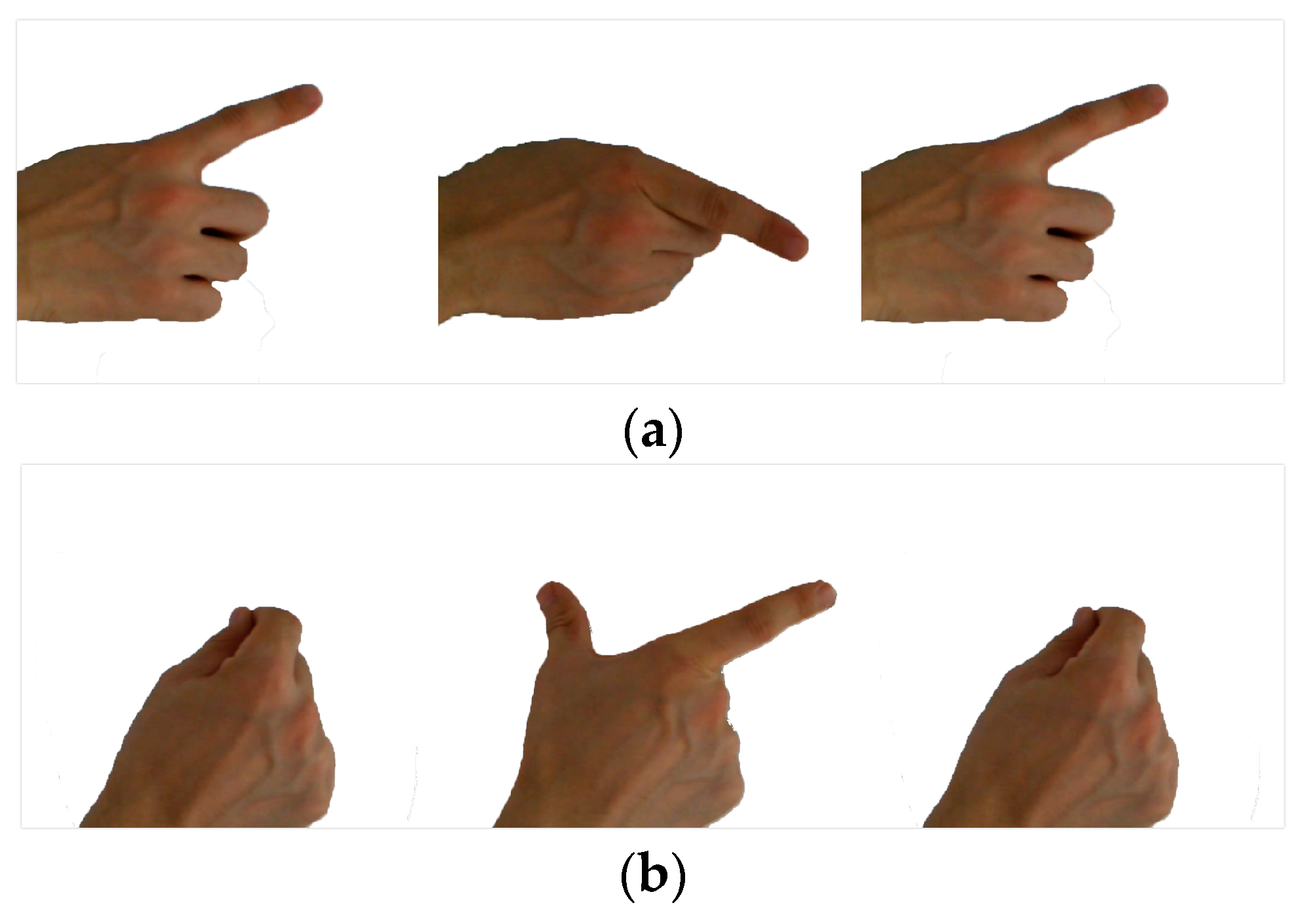

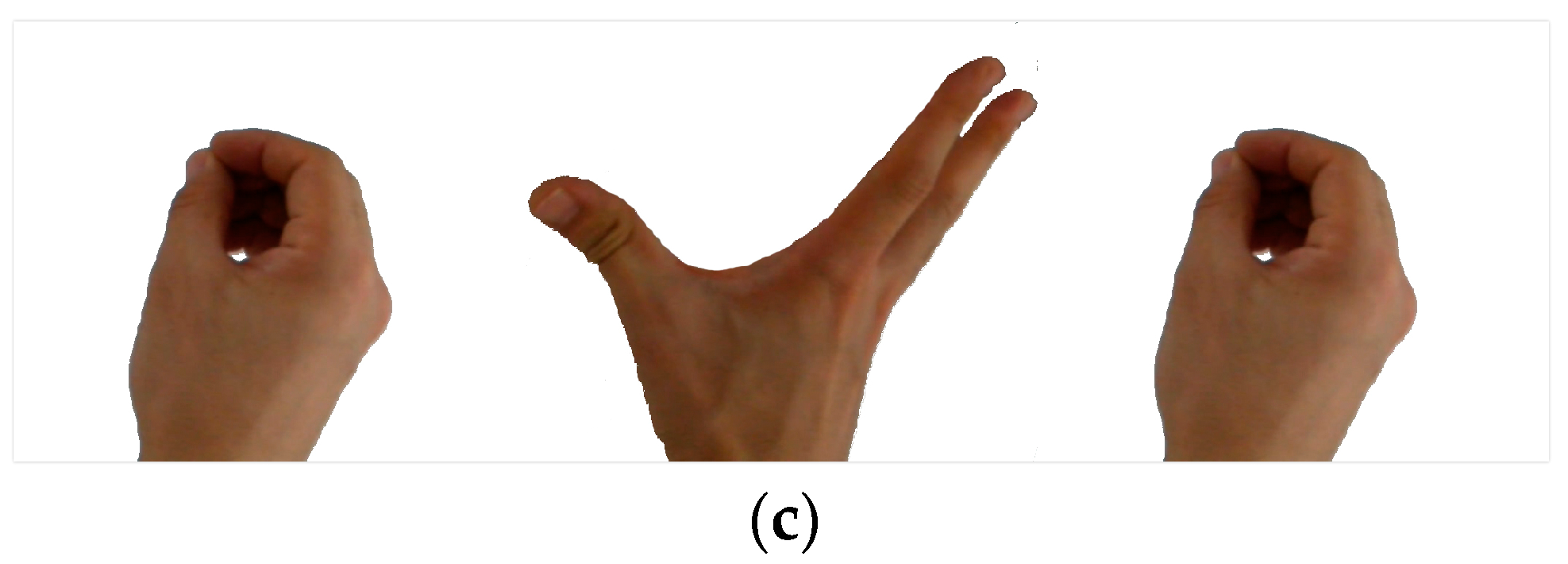

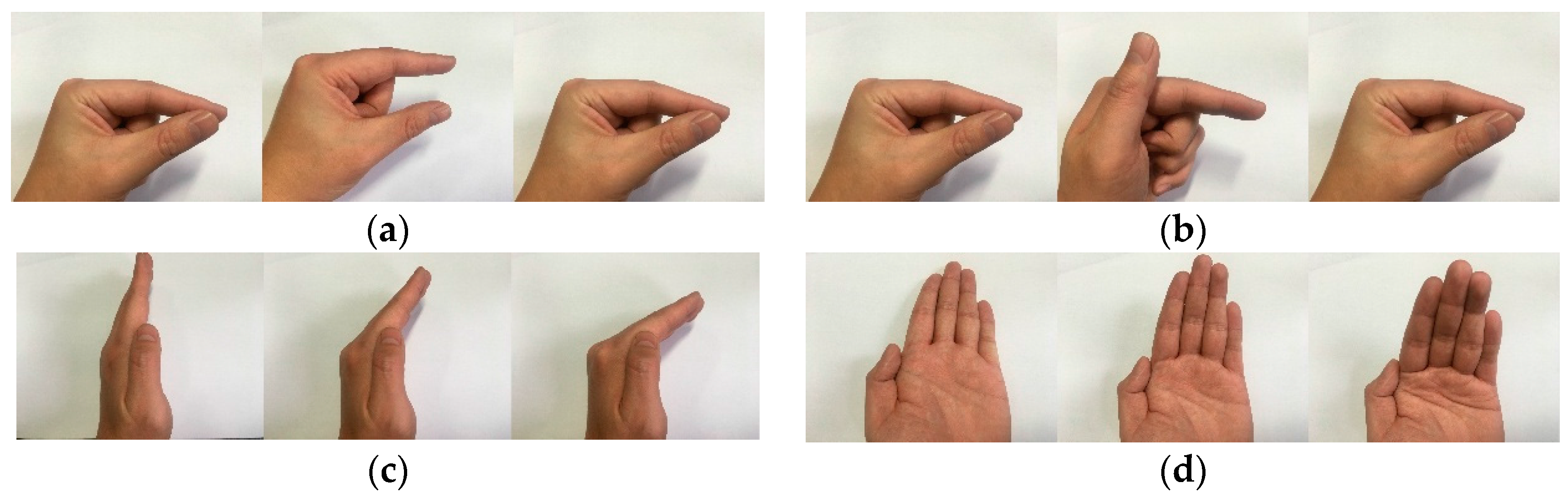

4. Experimental Data

5. Experimental Data Preprocessing

6. Experimental Results

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Ferguson, B.; Zhang, X.C. Materials for terahertz science and technology. Nat. Mater. 2002, 1, 26–33.

- Zyweck, A.; Bogner, R.E. Radar target classification of commercial aircraft. IEEE Trans. Aerosp. Electron. Syst. 1996, 32, 598–606.

- Zhang, B.; Pi, Y.; Li, J. Terahertz imaging radar with inverse aperture synthesis techniques: System structure, signal processing, and experiment results. IEEE Sens. J. 2015, 15, 290–299.

- Palka, N.; Rybak, A.; Czerwińska, E.; Florkowski, M. Terahertz Detection of Wavelength-Size Metal Particles in Pressboard Samples. IEEE Trans. Terahertz Sci. Technol. 2016, 6, 99–107.

- Mitra, S.; Acharya, T. Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2007, 37, 311–324.

- Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Multi-sensor system for driver’s hand-gesture recognition. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; IEEE: Piscataway, NJ, USA, 2015; Volume 1, pp. 1–8.

- Gałka, J.; Mąsior, M.; Zaborski, M.; Barczewska, K. Inertial motion sensing glove for sign language gesture acquisition and recognition. IEEE Sens. J. 2016, 16, 6310–6316.

- Bhattacharjee, S.; Booske, J.H.; Kory, C.L.; Van Der Weide, D.W.; Limbach, S.; Gallagher, S.; Welter, J.D.; Lopez, M.R.; Gilgenbach, R.M.; Ives, R.L.; et al. Folded waveguide traveling-wave tube sources for terahertz radiation. IEEE Trans. Plasma Sci. 2004, 32, 1002–1014.

- Federici, J.F.; Schulkin, B.; Huang, F.; Gary, D.; Barat, R.; Oliveira, F.; Zimdars, D. THz imaging and sensing for security applications—Explosives, weapons and drugs. Semicond. Sci. Technol. 2005, 20, S266.

- Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Short-range FMCW monopulse radar for hand-gesture sensing. In Proceedings of the 2015 IEEE Radar Conference (RadarCon), Arlington, VA, USA, 10–15 May 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1491–1496.

- Bell, M.R.; Grubbs, R.A. JEM modeling and measurement for radar target identification. IEEE Trans. Aerosp. Electron. Syst. 1993, 29, 73–87.

- Yang, J.; Yin, J. On Target Recognition and Classification by a Polarimetric Radar. IEICE Commun. Soc. Glob. Newsl. 2017, 41, 2.

- Shu, C.; Xue, F.; Zhang, S.; Huang, P.; Ji, J. Micro-motion Recognition of Spatial Cone Target Based on ISAR Image Sequences. J. Aerosp. Technol. Manag. 2016, 8, 152–162.

- Mao, C.; Liang, J. HRRP recognition in radar sensor network. Ad Hoc Netw. 2017, 58, 171–178.

- Zhang, Y.; Fang, N. Synthetic Aperture Radar Image Recognition Using Contour Features. In DEStech Transactions on Engineering and Technology Research, 2016 (MCEMIC); DEStech Publications, Inc.: Lancaster, PA, USA, 2016.

- Feng, B.; Chen, B.; Liu, H. Radar HRRP target recognition with deep networks. Pattern Recognit. 2017, 61, 379–393.

- Du, L.; Wang, P.; Liu, H.; Pan, M.; Chen, F.; Bao, Z. Bayesian spatiotemporal multitask learning for radar HRRP target recognition. IEEE Trans. Signal Process. 2011, 59, 3182–3196.

- Li, J.; Phung, S.L.; Tivive, F.H.C.; Bouzerdoum, A. Automatic classification of human motions using Doppler radar. In Proceedings of the 2012 International Joint Conference on Neural Networks (IJCNN), Brisbane, Australia, 10–15 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–6.

- Cheng, H.; Dai, Z.; Liu, Z.; Zhao, Y. An image-to-class dynamic time warping approach for both 3D static and trajectory hand gesture recognition. Pattern Recognit. 2016, 55, 137–147.

- Zhou, Y.; Jiang, G.; Lin, Y. A novel finger and hand pose estimation technique for real-time hand gesture recognition. Pattern Recognit. 2016, 49, 102–114.

- Gu, B.; Sheng, V.S. A robust regularization path algorithm for support vector classification. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 1241–1248.

- Gu, B.; Sun, X.; Sheng, V.S. Structural minimax probability machine. IEEE Trans. Neural Netw. Learn. Syst. 2017, 28, 1646–1656.

- Gu, B.; Sheng, V.S.; Tay, K.Y.; Romano, W.; Li, S. Incremental support vector learning for ordinal regression. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1403–1416.

- Ko, M.H.; West, G.; Venkatesh, S.; Kumar, M. Using dynamic time warping for online temporal fusion in multisensor systems. Inf. Fusion 2008, 9, 370–388.

- Hsu, Y.L.; Chu, C.L.; Tsai, Y.J.; Wang, J.S. An inertial pen with dynamic time warping recognizer for handwriting and gesture recognition. IEEE Sens. J. 2015, 15, 154–163.

- Plouffe, G.; Cretu, A.M. Static and dynamic hand gesture recognition in depth data using dynamic time warping. IEEE Trans. Instrum. Meas. 2016, 65, 305–316.

- Barczewska, K.; Drozd, A. Comparison of methods for hand gesture recognition based on Dynamic Time Warping algorithm. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems (FedCSIS), Kraków, Poland, 8–11 September 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 207–210.

- Pei, B.; Bao, Z. Multi-aspect radar target recognition method based on scattering centers and HMMs classifiers. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 1067–1074.

- Zhao, T.; Dong, C.Z.; Ren, H.M.; Yin, H.C. The Radar Echo Simulation of Moving Targets Based on HRRP. In Proceedings of the 2013 IEEE International Conference on Green Computing and Communications (GreenCom), and IEEE Internet of Things (iThings/CPSCom), and IEEE Cyber, Physical and Social Computing, Beijing, China, 20–23 August 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1580–1583.

- Ai, X.F.; Li, Y.Z.; Zhao, F.; Xiao, S.P. Feature extraction of precession targets using multi-aspect observed HRRP sequences. Dianzi Yu Xinxi Xuebao (J. Electron. Inf. Technol.) 2011, 33, 2846–2851.

- Otero, M. Application of a continuous wave radar for human gait recognition. Proc. SPIE 2005, 5809, 538–548.

- Chen, V.C. Analysis of radar micro-Doppler with time-frequency transform. In Proceedings of the Tenth IEEE Workshop on Statistical Signal and Array Processing, Pocono Manor, PA, USA, 14–16 August 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 463–466.

- Bauschke, H.H.; Borwein, J.M. On the convergence of von Neumann's alternating projection algorithm for two sets. Set-Valued Anal. 1993, 1, 185–212.

- Kailath, T.; Roy, R.H., III. ESPRIT–estimation of signal parameters via rotational invariance techniques. Opt. Eng. 1990, 29, 296–313.

- Dai, D.H.; Wang, X.S.; Chang, Y.L.; Yang, J.H.; Xiao, S.P. Fully-polarized scattering center extraction and parameter estimation: P-SPRIT algorithm. In Proceedings of the International Conference on Radar, 2006 (CIE ’06), Shanghai, China, 16–19 October 2006; IEEE: Piscataway, NJ, USA, 2006; pp. 1–4.

- Kim, K.T.; Kim, H.T. One-dimensional scattering centre extraction for efficient radar target classification. IEE Proc. Radar Sonar Navig. 1999, 146, 147–158.

- Lien, J.; Gillian, N.; Karagozler, M.E.; Amihood, P.; Schwesig, C.; Olson, E.; Raja, H.; Poupyrev, I. Soli: Ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. 2016, 35, 142.

| Parameters | Value |
|---|---|
| Carrier frequency | 340 GHz |
| Bandwidth | 28.8 GHz |
| Sampling frequency | 1.5625 MHz |
| Pulse repetition frequency | 1000 Hz |
| Cover Range | 10 m |
| Range Resolution | 5 mm |
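As a quick consistency check on the parameters listed above, the sketch below applies two textbook radar relations: the range resolution c/(2B) and the maximum unambiguous radial velocity λ·PRF/4. The function names are illustrative and do not come from the paper. The velocity relation assumes one slow-time sample per pulse, which the source does not state.

```python
# Sanity check of the radar parameters in the table above.
# Function names are hypothetical; the formulas are standard radar relations.
C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz: float) -> float:
    """Theoretical range resolution c / (2B), in metres."""
    return C / (2.0 * bandwidth_hz)


def max_unambiguous_velocity(carrier_hz: float, prf_hz: float) -> float:
    """Largest radial speed (m/s) whose Doppler shift stays within +/- PRF/2.

    Assumes one slow-time sample per pulse (an assumption, not from the source).
    """
    wavelength = C / carrier_hz
    return wavelength * prf_hz / 4.0


delta_r = range_resolution(28.8e9)               # bandwidth: 28.8 GHz
v_max = max_unambiguous_velocity(340e9, 1000.0)  # carrier: 340 GHz, PRF: 1000 Hz

print(f"range resolution: {delta_r * 1e3:.2f} mm")
print(f"max unambiguous velocity: {v_max:.3f} m/s")
```

With a 28.8 GHz bandwidth the computed resolution is about 5.2 mm, which agrees with the 5 mm listed in the table after rounding.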

| Type | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| HRRP | 97 | 83 | 81 | 91 | 87 | 82 | 89 | 92 | 90 | 90 | 88.2 |
| Doppler | 95 | 89 | 82 | 88 | 88 | 86 | 92 | 92 | 91 | 89 | 89.2 |
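The Average column above can be reproduced directly from the per-gesture values. The short sketch below does that and also reports the gesture on which the two signal types differ most. The variable names are illustrative, and the numbers are copied from the table rather than recomputed from raw data.

```python
# Per-gesture values copied from the table above (columns G1..G10).
rates = {
    "HRRP":    [97, 83, 81, 91, 87, 82, 89, 92, 90, 90],
    "Doppler": [95, 89, 82, 88, 88, 86, 92, 92, 91, 89],
}

# Mean over the ten gestures for each signal type.
averages = {name: sum(vals) / len(vals) for name, vals in rates.items()}

# Signed difference (Doppler minus HRRP) for every gesture.
gaps = {
    f"G{i + 1}": d - h
    for i, (h, d) in enumerate(zip(rates["HRRP"], rates["Doppler"]))
}
largest_gap = max(gaps, key=lambda g: abs(gaps[g]))

for name, avg in averages.items():
    print(f"{name}: {avg:.1f}")
print(f"largest gap: {largest_gap} ({gaps[largest_gap]:+d})")
```

The computed means match the 88.2 and 89.2 reported in the table, and the widest gap between the two rows is at G2.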

| Type | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 |
|---|---|---|---|---|---|---|---|---|---|---|
| G1 | 97 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 1 |
| G2 | 0 | 84 | 5 | 0 | 0 | 1 | 1 | 2 | 0 | 7 |
| G3 | 0 | 4 | 82 | 0 | 3 | 2 | 1 | 3 | 1 | 4 |
| G4 | 0 | 0 | 1 | 93 | 1 | 1 | 0 | 0 | 1 | 3 |
| G5 | 0 | 5 | 1 | 0 | 86 | 3 | 5 | 0 | 0 | 0 |
| G6 | 0 | 0 | 1 | 7 | 4 | 83 | 0 | 3 | 0 | 2 |
| G7 | 0 | 0 | 0 | 3 | 0 | 0 | 95 | 1 | 1 | 0 |
| G8 | 0 | 2 | 2 | 0 | 0 | 0 | 0 | 93 | 0 | 3 |
| G9 | 0 | 1 | 0 | 5 | 0 | 0 | 0 | 1 | 93 | 0 |
| G10 | 4 | 0 | 0 | 0 | 3 | 0 | 5 | 0 | 1 | 87 |
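A confusion matrix like the one above is usually summarized by its diagonal share and its largest off-diagonal entries. The sketch below computes both in plain Python. The matrix values are copied from the table, while the variable names are illustrative.

```python
# Confusion matrix copied from the table above; row i / column j follow G1..G10.
labels = [f"G{i}" for i in range(1, 11)]
confusion = [
    [97, 0, 1, 0, 0, 0, 1, 0, 0, 1],
    [0, 84, 5, 0, 0, 1, 1, 2, 0, 7],
    [0, 4, 82, 0, 3, 2, 1, 3, 1, 4],
    [0, 0, 1, 93, 1, 1, 0, 0, 1, 3],
    [0, 5, 1, 0, 86, 3, 5, 0, 0, 0],
    [0, 0, 1, 7, 4, 83, 0, 3, 0, 2],
    [0, 0, 0, 3, 0, 0, 95, 1, 1, 0],
    [0, 2, 2, 0, 0, 0, 0, 93, 0, 3],
    [0, 1, 0, 5, 0, 0, 0, 1, 93, 0],
    [4, 0, 0, 0, 3, 0, 5, 0, 1, 87],
]

n = len(labels)
total = sum(sum(row) for row in confusion)
correct = sum(confusion[i][i] for i in range(n))
overall_rate = 100.0 * correct / total  # share of entries on the diagonal, in percent

# Collect every off-diagonal cell, then keep the pairs that share the largest count.
off_diagonal = [
    (confusion[i][j], labels[i], labels[j])
    for i in range(n)
    for j in range(n)
    if i != j
]
worst = max(count for count, _, _ in off_diagonal)
worst_pairs = [(row, col) for count, row, col in off_diagonal if count == worst]

print(f"overall rate: {overall_rate:.1f}%")
print(f"largest confusion ({worst}): {worst_pairs}")
```

Every row of this matrix sums to 100, so each diagonal entry can be read directly as a per-gesture percentage.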

| Type | G1 | G2 | G3 | G4 | G5 | G6 | G7 | G8 | G9 | G10 |
|---|---|---|---|---|---|---|---|---|---|---|
| G1 | 98 | 0 | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| G2 | 0 | 90 | 0 | 1 | 3 | 0 | 0 | 1 | 1 | 4 |
| G3 | 2 | 1 | 87 | 0 | 2 | 6 | 0 | 1 | 1 | 0 |
| G4 | 2 | 0 | 0 | 92 | 1 | 0 | 1 | 0 | 1 | 3 |
| G5 | 0 | 4 | 1 | 0 | 91 | 0 | 2 | 0 | 1 | 1 |
| G6 | 1 | 1 | 1 | 0 | 1 | 87 | 1 | 2 | 1 | 5 |
| G7 | 0 | 1 | 0 | 2 | 0 | 1 | 94 | 0 | 0 | 2 |
| G8 | 0 | 0 | 2 | 2 | 0 | 2 | 1 | 93 | 0 | 0 |
| G9 | 0 | 0 | 0 | 1 | 3 | 0 | 0 | 3 | 92 | 1 |
| G10 | 5 | 0 | 1 | 0 | 0 | 1 | 2 | 0 | 0 | 91 |
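Per-class recall and precision can also be read off a matrix of this kind. The sketch below assumes that rows are the true gestures and columns the predicted ones. The table does not state that orientation, so the two metrics swap if it is the other way round. Names are illustrative and the values are copied from the table above.

```python
# Confusion matrix copied from the table above.
# ASSUMPTION: rows = true gesture, columns = predicted gesture (not stated in the source).
labels = [f"G{i}" for i in range(1, 11)]
confusion = [
    [98, 0, 1, 0, 0, 0, 1, 0, 0, 0],
    [0, 90, 0, 1, 3, 0, 0, 1, 1, 4],
    [2, 1, 87, 0, 2, 6, 0, 1, 1, 0],
    [2, 0, 0, 92, 1, 0, 1, 0, 1, 3],
    [0, 4, 1, 0, 91, 0, 2, 0, 1, 1],
    [1, 1, 1, 0, 1, 87, 1, 2, 1, 5],
    [0, 1, 0, 2, 0, 1, 94, 0, 0, 2],
    [0, 0, 2, 2, 0, 2, 1, 93, 0, 0],
    [0, 0, 0, 1, 3, 0, 0, 3, 92, 1],
    [5, 0, 1, 0, 0, 1, 2, 0, 0, 91],
]

n = len(labels)
row_sums = [sum(confusion[i]) for i in range(n)]
col_sums = [sum(confusion[i][j] for i in range(n)) for j in range(n)]

# Recall: diagonal over row total. Precision: diagonal over column total.
recall = {labels[k]: confusion[k][k] / row_sums[k] for k in range(n)}
precision = {labels[k]: confusion[k][k] / col_sums[k] for k in range(n)}

for name in labels:
    print(f"{name}: recall={recall[name]:.3f} precision={precision[name]:.3f}")
```

Under this assumption, a gesture such as G1 has high recall while its precision is lower, because several other gestures are occasionally assigned to its column.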

| | Soli Project | Our System |
|---|---|---|
| Recognition rate (%) | 92.10 | 96.70 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, Z.; Cao, Z.; Pi, Y. Dynamic Gesture Recognition with a Terahertz Radar Based on Range Profile Sequences and Doppler Signatures. Sensors 2018, 18, 10. https://doi.org/10.3390/s18010010