1. Introduction

Falls in elderly people often result in fractures, trauma, disabilities, and loss of activity. In fact, falls are the second biggest cause of death reported in the world (WHO [

1]). Therefore, fall prevention constitutes a major human and economic issue, nowadays and for years to come. The Timed Up and Go test (TUG test) is widely used by healthcare professionals to assess fall risk and distinguish high vs. low fall risk individuals. It is recommended by both the American Geriatrics Society and the British Geriatric Society [

2]. The TUG is based on the Get Up and Go (GUG) test, originally proposed by Mathias et al. [

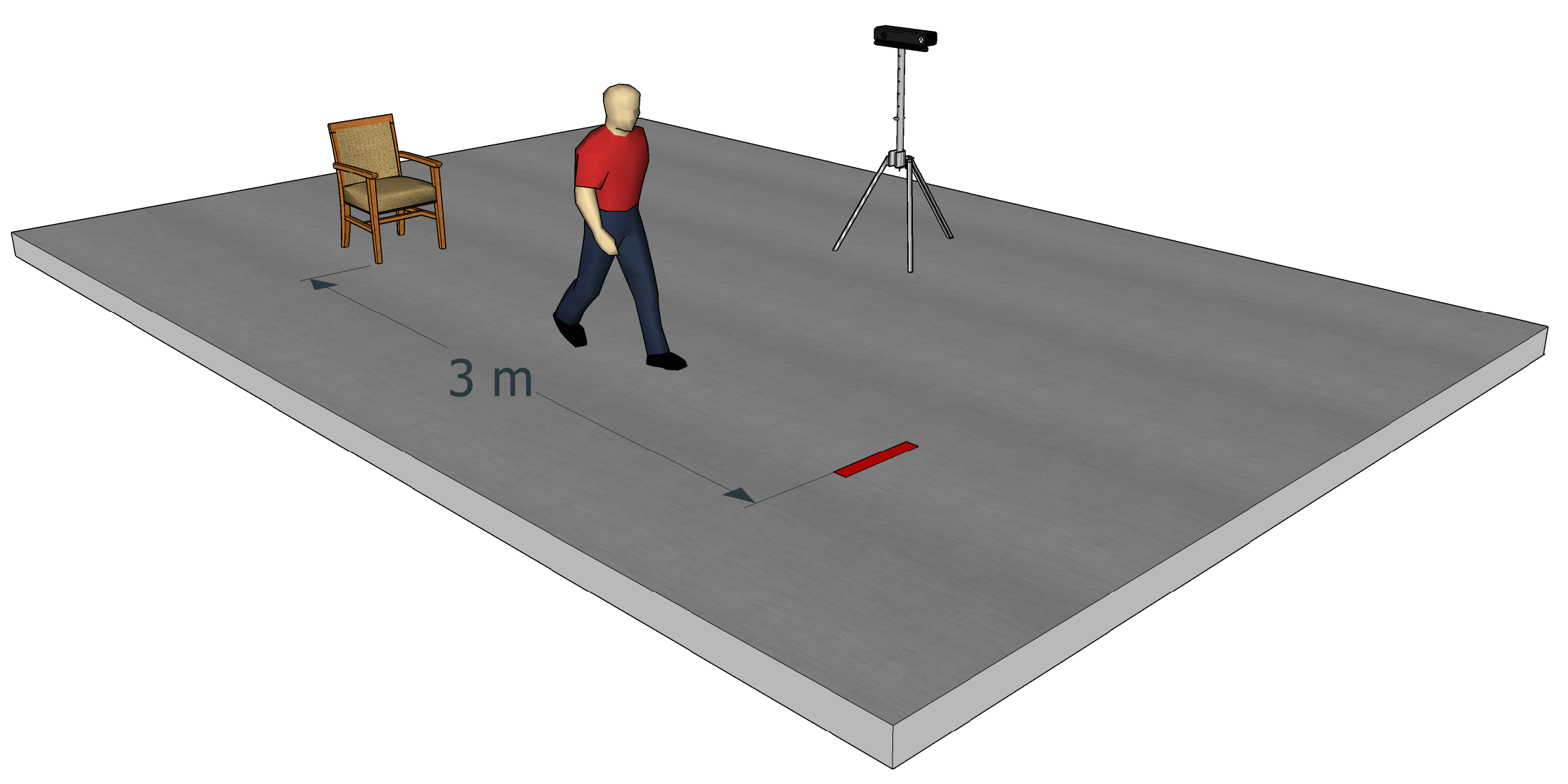

3]. In the GUG test, healthcare professionals observe a person who stands up from a chair, walks 3 m, turns 180°, walks back to the chair and sits down. Then, the healthcare professionals evaluate the person on a five-point ordinal scale : “normal”, “very slightly abnormal”, “mildly abnormal”, “moderately abnormal” and “severely abnormal”. The TUG test is the timed version of the GUG test. It was introduced by Podsiadlo and Richardson [

4] to diminish the subjective nature of scoring. Physicians usually use a stopwatch to time the test, from stand up to sit down. Several studies [

5,

6,

7] highlighted a correlation between the time required to perform the TUG test and fall risk in elderly people, a slower performance on the test being associated with a higher fall risk.

Though the TUG test is widely used by healthcare professionals, notably because of its simplicity and the ease with which it can be performed in clinical environments, some authors have criticized it [8,9]. In particular, the TUG test is considered too dependent on the clinicians conducting it and on the environmental conditions in which it is conducted. In the literature and in clinical practice, the threshold used to discriminate low and high fall risk individuals varies from 10 s to 25 s. For instance, Shumway-Cook et al. [5] proposed a cut-off of 13.5 s to discriminate low and high fall risk individuals. Along similar lines, Dite and Temple [10] found that a cut-off value of 13 s yielded a sensitivity of 89% and a specificity of 93%. On the other hand, Okumiya et al. [11] suggested that a cut-off of 16 s constituted a good predictor of fall risk. The environmental conditions chosen by the clinicians can also increase the variability of the test: for instance, the 3 m walking distance is not always respected, and a chair without arms is sometimes used. Finally, the instructions given and the test evaluation can vary depending on the experience of the medical staff. For instance, asking the person to walk as fast as possible rather than at a comfortable pace can result in different times. To limit such biases, healthcare professionals sometimes use an evaluation grid including several ‘assessment observations’. This grid can then be used to determine a score on the five-point scale proposed by Mathias and colleagues [3]. However, the assessment observations can differ slightly between institutions. In addition, healthcare professionals are not always aware that this grid exists, and it is seldom used in practice.
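Because each of these cut-offs is a threshold on a single number, the test duration, its sensitivity and specificity can be recomputed from any dataset in which faller status is known. The Python sketch below is illustrative only: the durations and faller labels are invented, and it assumes that a duration at or above the cut-off means “high fall risk”, a convention that the cited studies may define slightly differently.

```python
from collections.abc import Sequence


def sensitivity_specificity(
    durations: Sequence[float], fallers: Sequence[bool], cutoff: float
) -> tuple[float, float]:
    """Score a TUG duration cut-off against known faller status.

    Assumed rule: a duration >= cutoff is classified as high fall risk.
    """
    tp = fn = tn = fp = 0
    for duration, is_faller in zip(durations, fallers):
        flagged = duration >= cutoff
        if is_faller and flagged:
            tp += 1  # faller correctly flagged
        elif is_faller:
            fn += 1  # faller missed
        elif flagged:
            fp += 1  # non-faller wrongly flagged
        else:
            tn += 1  # non-faller correctly cleared
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Invented example data: TUG durations in seconds and whether each person fell.
durations = [9.8, 11.2, 12.9, 14.1, 15.6, 18.3, 10.5, 13.2]
fallers = [False, False, False, True, True, True, False, False]

for cutoff in (13.0, 13.5, 16.0):
    sens, spec = sensitivity_specificity(durations, fallers, cutoff)
    print(f"cut-off {cutoff} s: sensitivity {sens:.2f}, specificity {spec:.2f}")
```

With these invented numbers, a 13 s cut-off wrongly flags one non-faller, while a 16 s cut-off misses two of the three fallers, which illustrates why the choice of threshold strongly affects the classification.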

Another limitation of the TUG test is that it is currently run almost exclusively by specialized clinicians. We believe that, if such a test could be performed routinely and automatically by other healthcare professionals, notably by general practitioners, fall prevention could be greatly improved. Specifically, new technological solutions could be adopted to help perform automatic and more objective clinical assessments. In line with this, a growing number of research groups are instrumenting clinical assessments with technology. The aim is to build a system (based on sensors such as video cameras or accelerometers) that automatically provides data on the test performed. In their 2015 review, Sprint et al. [12] listed the different techniques used to automate the TUG test. Weiss et al. [13] automated the TUG test using accelerometers, whereas Salarian et al. [14] combined accelerometers and gyroscopes. The use of sensors allowed these authors to discriminate healthy subjects from Parkinson’s disease patients, which was not possible using the traditional TUG assessment alone. Beyea et al. [15] used inertial measurement units (accelerometers, gyroscopes and magnetometers) with healthy adults aged 21 to 64 years to detect the TUG phases (sit-to-stand, walk, turn, stand-to-sit). They validated the correlation between the TUG phase durations obtained with their sensors and those measured with an optoelectronic motion capture system. Accelerometers have also been used with the TUG test to automatically classify high and low fall risk individuals [16,17,18]. Whereas traditional TUG assessments are mostly based on the time required to perform the test, these studies included additional parameters and criteria to discriminate high fall risk from low fall risk individuals.

The TUG test can also be “automated” using video recording, which has the advantage of not interfering with the tested person. For instance, Skrba et al. [19] used two webcams to extract different parameters such as the total walking time, the number of steps, and the stability into and out of the turn. They concluded that the walk duration and the time between turning and sitting back on the chair were the most relevant parameters to classify low and high fall risk individuals. Other researchers used video to analyze a specific component of the test. For example, Wang et al. [20] focused on the turn phase, whereas Frenken et al. [21] studied the walking phase. Lohmann et al. [22] used a Kinect camera with skeleton tracking to automate the test. They detected events such as “starts moving”, “ends uprising”, “starts walking” and “starts rotating”, and compared the detected event times with manual labeling. Kitsunezaki et al. [23] performed a study similar to that of Lohmann et al., but they used three Microsoft Kinect cameras, placed in front of, to the side of and above the chair, in order to determine which Kinect position minimized timing errors. They concluded that placing the Kinect 4 m in front of the chair gives the most accurate data.

We developed a system that automatically discriminates low vs. high fall risk individuals. This system measures the total time required to complete the test, as healthcare professionals do, but it also measures complementary parameters, such as time to sit down, time to walk, and spatio-temporal gait parameters. These additional parameters cannot be measured with the traditional TUG test, and they provide supplementary qualitative information that can help the clinician identify which components of the test are problematic for the tested person (i.e., walking, turning, sitting down or getting up). In that respect, measuring these parameters constitutes an automatic variant of the evaluation grid. However, the automatic assessment of these parameters with our system presents two main advantages over the “traditional” evaluation grid. First, because it is built into the system, the assessment takes place every time the test is performed, whereas, as mentioned above, the evaluation grid is seldom used by clinicians. Second, these parameters provide a more objective evaluation than the grid, whose results often depend on the clinician performing the evaluation.
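To illustrate what spatio-temporal gait parameters involve, the Python sketch below derives mean step length, mean step duration, cadence and gait speed from a list of detected steps. It is a hypothetical sketch, not the implementation used in our system: the `StepEvent` type, the idea of summarizing each step by a timestamp and a forward position along the walkway, and the example values are all assumptions, and step detection itself is taken as given.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class StepEvent:
    """Hypothetical step record: time (s) and forward position (m) at foot contact."""
    t: float
    x: float


def gait_parameters(steps: list[StepEvent]) -> dict[str, float]:
    """Compute simple spatio-temporal gait parameters from consecutive steps.

    Assumes straight-line walking; steps spanning the turn would need separate handling.
    """
    if len(steps) < 2:
        raise ValueError("at least two step events are needed")
    pairs = list(zip(steps, steps[1:]))
    step_durations = [b.t - a.t for a, b in pairs]
    step_lengths = [abs(b.x - a.x) for a, b in pairs]
    walking_time = steps[-1].t - steps[0].t
    return {
        "mean_step_length_m": mean(step_lengths),
        "mean_step_duration_s": mean(step_durations),
        # Cadence: number of steps (intervals between contacts) per minute.
        "cadence_steps_per_min": 60.0 * len(step_durations) / walking_time,
        # Gait speed: distance covered divided by the time spent covering it.
        "gait_speed_m_per_s": sum(step_lengths) / walking_time,
    }


# Invented example: four foot contacts, 0.6 s and 0.5 m apart.
steps = [StepEvent(0.0, 0.0), StepEvent(0.6, 0.5), StepEvent(1.2, 1.0), StepEvent(1.8, 1.5)]
params = gait_parameters(steps)
print(params)
```

Keeping each parameter as a separate named value makes it easy to compare groups parameter by parameter, which is the kind of per-component information an evaluation grid aims to capture.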

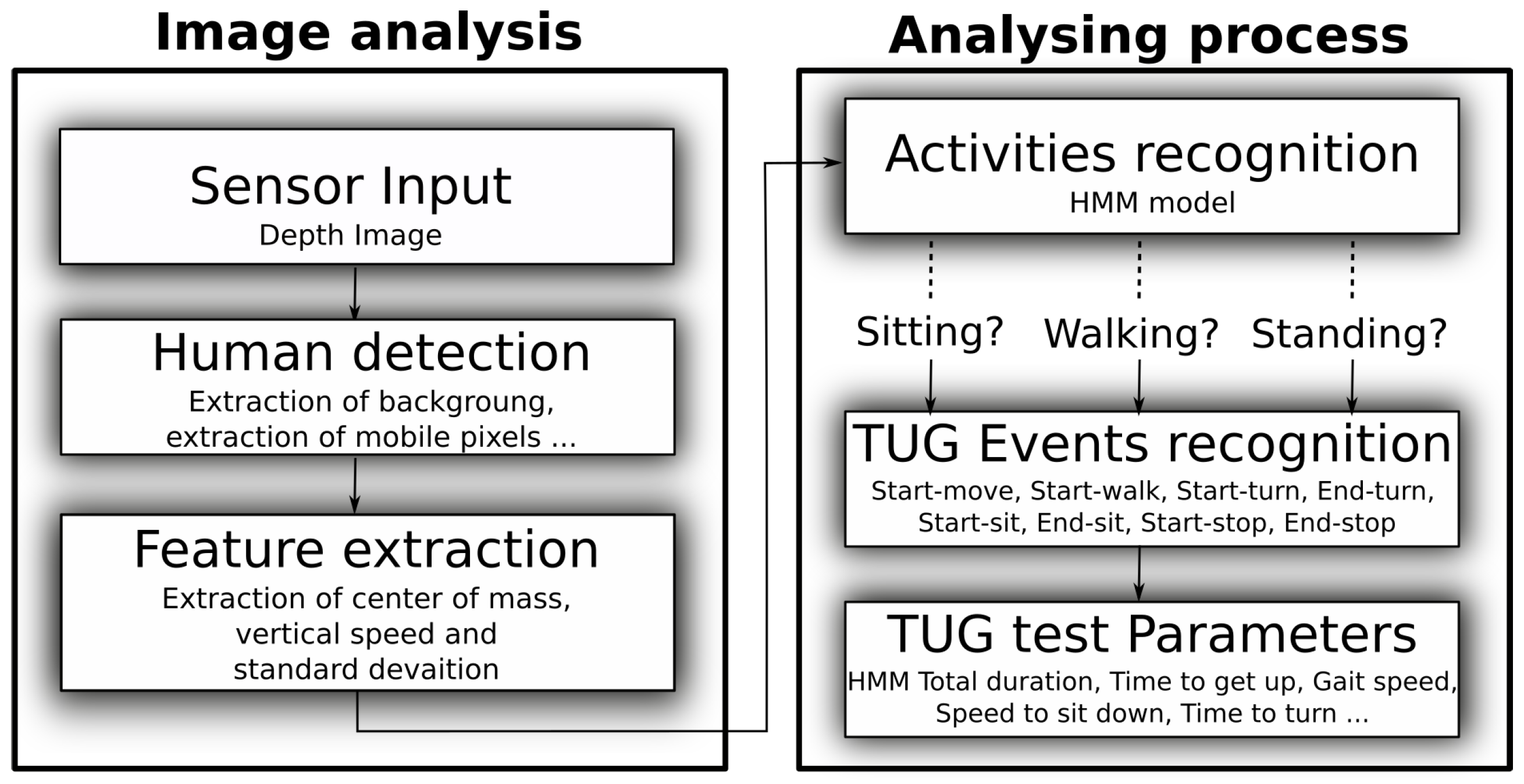

For the hardware, our system relies on the Microsoft Kinect v2, that is low cost, easy to use, and works with the silhouette (i.e., using depth points only), which is of less privacy concern [

24]. Most studies based on the Kinect sensor use the Microsoft Software Development Kit (SDK). Our system does not rely on the Microsoft SDK but instead uses an algorithm developed by Dubois and Charpillet [

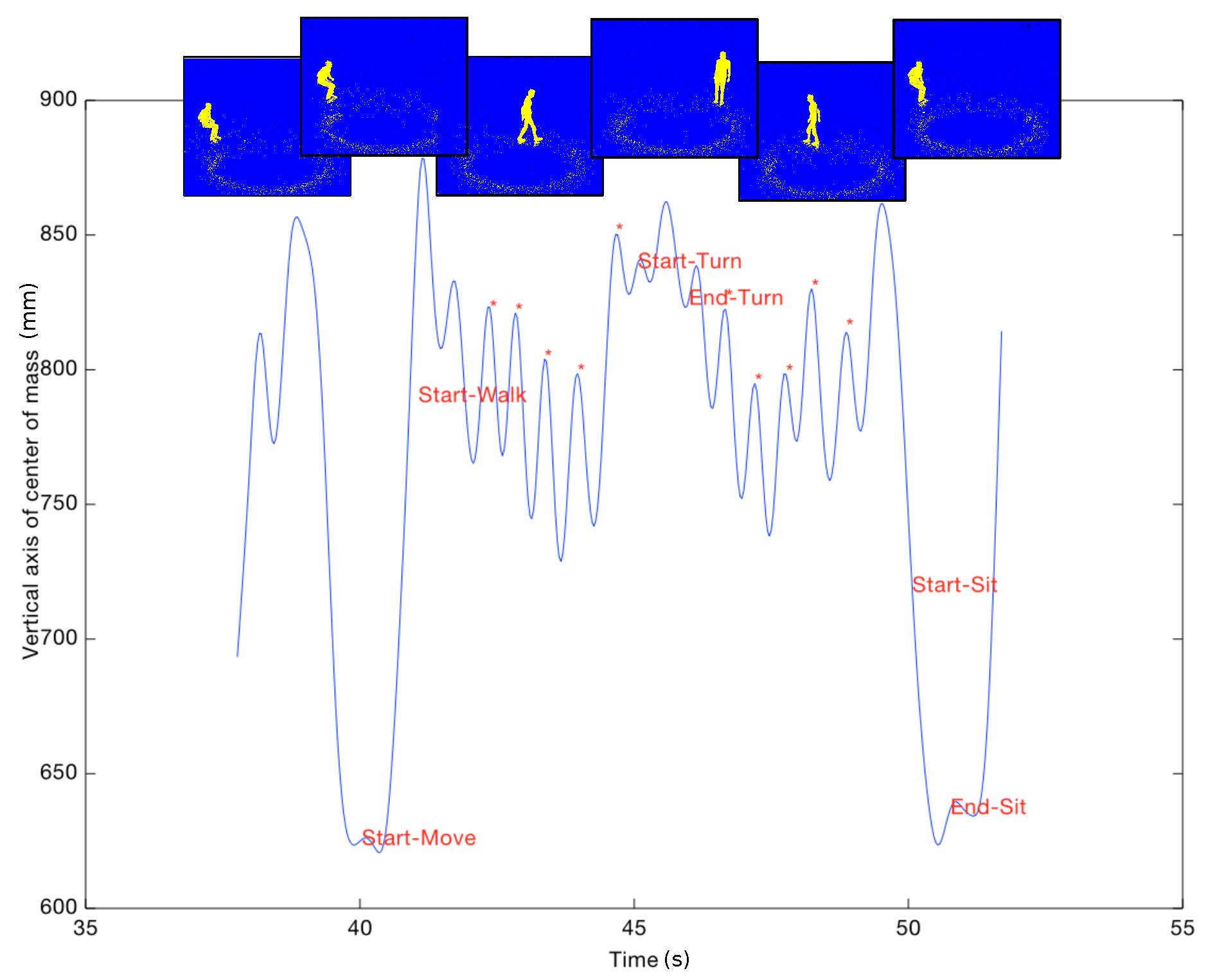

25]. This is because, with our system, the parameters are extracted from the vertical displacement of the geometric center of the body, so that an accurate representation of the skeleton and the body segments is not necessary. Our approach has two main advantages. First, parameters can be extracted even if the feet of the walking person are occluded. Second, the performance of the analysis is relatively unaffected by the angle of view of the sensor, which also constitutes a major advantage for monitoring individuals in any place, and especially in furnished environments (e.g., physician’s office, patient’s room). In this study, the same sequences of the TUG test were evaluated both by our algorithm and by healthcare specialists. First, we tested whether the measurements of test duration made with our system matched those made by healthcare professionals. For that, we used statistical analyses to compare the test durations automatically extracted with the Kinect sensor to those measured by healthcare specialists. In addition, we assessed which test parameters are the most relevant to classify individuals into high or low fall risk population. Finally, we used machine learning methods to determine whether our system can constitute an automatic and objective alternative to the evaluation grid. Our aim was to build a tool that consistently provides the same values regardless of the clinician conducting the test and of the place where it is performed.
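To give an intuition of why the vertical displacement of the geometric center of the body can be enough to time the test, the Python sketch below averages the silhouette points of each depth frame into a single center height and detects when this height crosses a standing threshold. It is a simplified, hypothetical illustration and not the algorithm of Dubois and Charpillet [25]: the axis convention (y pointing up, in meters), the threshold placed halfway between sitting and standing heights, and the synthetic height trace are all assumptions. Because the crossings occur mid-rise and mid-sit, the resulting interval only approximates the clinical stand-up to sit-down time.

```python
from statistics import mean

Point = tuple[float, float, float]  # (x, y, z) in meters; y assumed to point upward


def center_height(points: list[Point]) -> float:
    """Vertical coordinate of the geometric center of one frame's silhouette points."""
    return mean(p[1] for p in points)


def standing_interval(heights: list[float], fps: float, threshold: float) -> tuple[float, float]:
    """Return the times (s) at which the center first rises above the threshold
    and then first drops back below it."""
    up = next((i for i, h in enumerate(heights) if h >= threshold), None)
    if up is None:
        raise ValueError("the center never rises above the threshold")
    down = next((i for i in range(up, len(heights)) if heights[i] < threshold), None)
    if down is None:
        raise ValueError("the center never drops back below the threshold")
    return up / fps, down / fps


# Synthetic 30 fps trace: seated (0.70 m), rising, upright (1.05 m), sitting back down.
heights = [0.70] * 10 + [0.80, 0.90, 1.00] + [1.05] * 300 + [0.95, 0.85, 0.75] + [0.70] * 10
sitting, standing = 0.70, 1.05
t_up, t_down = standing_interval(heights, fps=30.0, threshold=(sitting + standing) / 2)
print(f"standing from {t_up:.2f} s to {t_down:.2f} s, i.e. {t_down - t_up:.2f} s")
```

A real depth-sensor trace would be noisy, so some smoothing or hysteresis around the threshold would likely be needed before detecting crossings.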

4. Discussion and Conclusions

Our aim was to automate the Timed Up and Go test in order to discriminate individuals at low vs. high risk of falling as objectively as possible, using several quantitative parameters. Currently, healthcare professionals time the test and can use an evaluation grid to assess it more objectively. However, this test is often criticized because it depends too heavily on the clinician conducting it and on the environment in which it is performed. To automate the duration measurement and the evaluation grid, 37 individuals performed the TUG test in front of an ambient Kinect sensor. The sequences were simultaneously evaluated by healthcare professionals and by specific algorithms analyzing the depth images provided by the Kinect sensor. The clinicians measured the total duration of the test and filled in the evaluation grid for each participant. With our algorithms, we automatically measured the test duration as well as other parameters inspired by the evaluation grid (such as step length and time to turn).

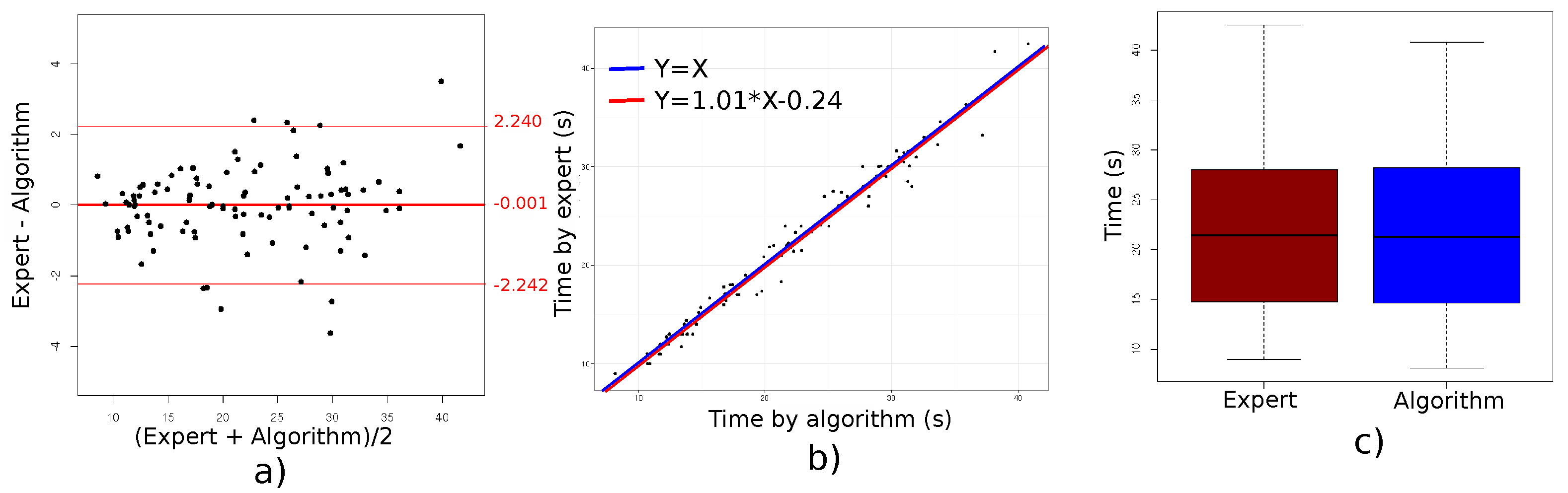

The first important result is that we found excellent agreement between the TUG test durations measured by the clinicians and those provided by our algorithm, with a difference in average duration of only

s. As a comparison, in a study with nine participants (including five elderly people), Lohmann et al. [22] found an average difference of 0.1 s between Kinect-based and clinician-based measurements of test duration. In addition to assessing the agreement for average durations, we also compared the algorithm-measured and clinician-measured test durations for each of the 99 individual tests and computed the limits of agreement (mean difference ± 2 SD). The observed limits of agreement ranged from −2.24 s to 2.24 s. Such a difference of about 2 s is rather small, especially considering that it depends on when the clinician starts the stopwatch. Indeed, measured durations can also differ between clinicians [32]. In our experiment, two clinicians timed the TUG test. We ran an additional analysis in which the durations measured by each clinician were analyzed separately, using in each case the same statistical analysis as in the “global analysis”, i.e., the analysis that included the test durations measured by both clinicians. Test durations measured by clinician 1 were closer to those measured by the Kinect (limits of agreement from −2.313 s to 1.977 s, with a mean difference of −0.168 s) than those measured by clinician 2 (limits of agreement from −2.185 s to 2.584 s, with a mean difference of 0.199 s). Murphy and Lowe [32] compared test durations measured by two different clinicians on the same thirty test sequences (15 participants, two test sequences per participant). These authors did not report limits of agreement (which would have allowed a direct comparison with ours), but they found a correlation coefficient of 0.77 between the test durations measured by the two clinicians, which highlights some measurement differences. Taken together, these results confirm that our algorithm provides reliable measurements of the TUG test duration, in good agreement with those measured by expert clinicians.
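The limits of agreement used above follow the Bland-Altman approach of summarizing paired differences by their mean ± 2 SD. A minimal Python sketch is given below; the sign convention (Kinect minus clinician) and the use of the sample standard deviation are assumptions, and the factor `k` is exposed because many Bland-Altman analyses use 1.96 instead of 2. The paired durations are invented for illustration.

```python
from statistics import mean, stdev


def limits_of_agreement(
    method_a: list[float], method_b: list[float], k: float = 2.0
) -> tuple[float, float, float]:
    """Return (mean difference, lower limit, upper limit) for paired measurements.

    Differences are computed as method_a - method_b; the limits are mean ± k * SD,
    using the sample standard deviation of the differences.
    """
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need at least two paired measurements of equal length")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    d_mean = mean(diffs)
    d_sd = stdev(diffs)
    return d_mean, d_mean - k * d_sd, d_mean + k * d_sd


# Invented paired TUG durations (s) for the same five trials.
kinect = [10.2, 12.5, 9.8, 15.1, 11.0]
clinician = [10.0, 13.1, 9.5, 14.6, 11.4]
bias, lower, upper = limits_of_agreement(kinect, clinician)
print(f"mean difference {bias:+.2f} s, limits of agreement [{lower:.2f}, {upper:.2f}] s")
```

Calling the same function once per clinician, with that clinician's durations only, mirrors the per-clinician analysis described above.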

A second objective of our study was to identify additional parameters (i.e., other than test duration) that are currently neither measured nor measurable by expert clinicians and that could help discriminate high and low fall risk individuals. Based on test duration, we classified our 37 participants into two groups, namely high fall risk and low fall risk individuals. The mean age of the participants was 86.63 years in the high fall risk group and 79.36 years in the low fall risk group. Interestingly, all automatically extracted parameters, such as step length, gait speed, time and speed to sit down and to get up, and time to walk and to turn, differed significantly between the two groups. Previous studies have tried to identify parameters other than test duration that could discriminate high and low fall risk individuals. For instance, Tmaura et al. [18] investigated each phase of the TUG test (i.e., sit-bend, bend-stand, forth walk, turn, back walk, stand-bend and bend-sit) using wireless inertial sensors. They showed that the duration of each phase differed significantly between high fall risk and low fall risk individuals. Our results are similar to theirs. Therefore, these parameters could complement the evaluation of healthcare professionals by providing additional information. As proposed by Tmaura et al., these parameters would be very helpful in determining more precisely which activities place the individual at a higher risk of falling and should therefore be monitored with particular attention. In addition, clinicians could rely on these parameters to propose a more targeted rehabilitation focusing on the specific “weaknesses” of each patient.

The third objective of our study was to assess how well the parameters automatically extracted by our algorithm would allow us to classify participants into the three fall risk classes routinely used by clinicians. The classification performed by expert clinicians on our 34 participants was used as a reference. For a three-class classification, the best matching rate between our algorithm and the reference was 94%, obtained by combining three evaluation parameters. By merging the two classes composed of individuals at risk of falling (i.e., moderate and high risk of falling) into a two-class classification, we obtained a matching rate of 100% using only two evaluation parameters. In other words, two parameters were sufficient to distinguish, without error, participants at risk of falling from participants free of fall risk. Based on our results, the most relevant parameters for such a classification are mean step length, mean step duration and mean walking cadence. In particular, we showed that the most robust classification is obtained when these parameters are coupled with parameters related to sitting down and getting up (speed to sit down or to get up).
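The matching rates reported above compare two sequences of class labels, and the two-class result is obtained by merging the moderate and high risk classes before comparing. The Python sketch below shows only that bookkeeping and does not reproduce our classifier; the class names and example labels are hypothetical.

```python
# Hypothetical label names for the three clinical fall risk classes.
TO_TWO_CLASSES = {"no_risk": "no_risk", "moderate": "at_risk", "high": "at_risk"}


def matching_rate(predicted: list[str], reference: list[str]) -> float:
    """Fraction of participants whose predicted class equals the reference class."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


def merge_risk_classes(labels: list[str]) -> list[str]:
    """Regroup moderate and high risk into a single 'at_risk' class."""
    return [TO_TWO_CLASSES[label] for label in labels]


# Invented labels: one moderate-risk participant is predicted as high risk.
reference = ["no_risk", "moderate", "high", "no_risk", "moderate"]
predicted = ["no_risk", "high", "high", "no_risk", "moderate"]

print(matching_rate(predicted, reference))  # 0.8
print(matching_rate(merge_risk_classes(predicted), merge_risk_classes(reference)))  # 1.0
```

Because merging can only turn a moderate/high confusion into a match and never the reverse, the two-class rate is always at least as high as the three-class rate, which is consistent with the increase from 94% to 100% reported above.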

Because of population ageing, fall prevention is a very important issue, now and for years to come. People at risk of falling need to be detected as early as possible so that they can adapt their home environment and follow rehabilitation programs that allow them to stay active longer, at home if possible. Fall risk therefore represents a human, economic and societal issue. Currently, fall prevention mostly relies on clinical tests, notably the TUG test, performed in healthcare institutions by expert clinicians. Here, we proposed a system that automatically evaluates TUG test performance in a more objective, robust and detailed manner. This system is inexpensive, easy to set up, and does not require the level of expertise needed for the traditional TUG test to be used reliably. Therefore, our system could be used routinely and more flexibly by physicians and clinicians with less expertise (for instance, by general practitioners in their practice) to assess fall risk, which would greatly contribute to improving fall risk detection.