An EEG-Based Person Authentication System with Open-Set Capability Combining Eye Blinking Signals

Abstract

1. Introduction

2. Materials and Methods

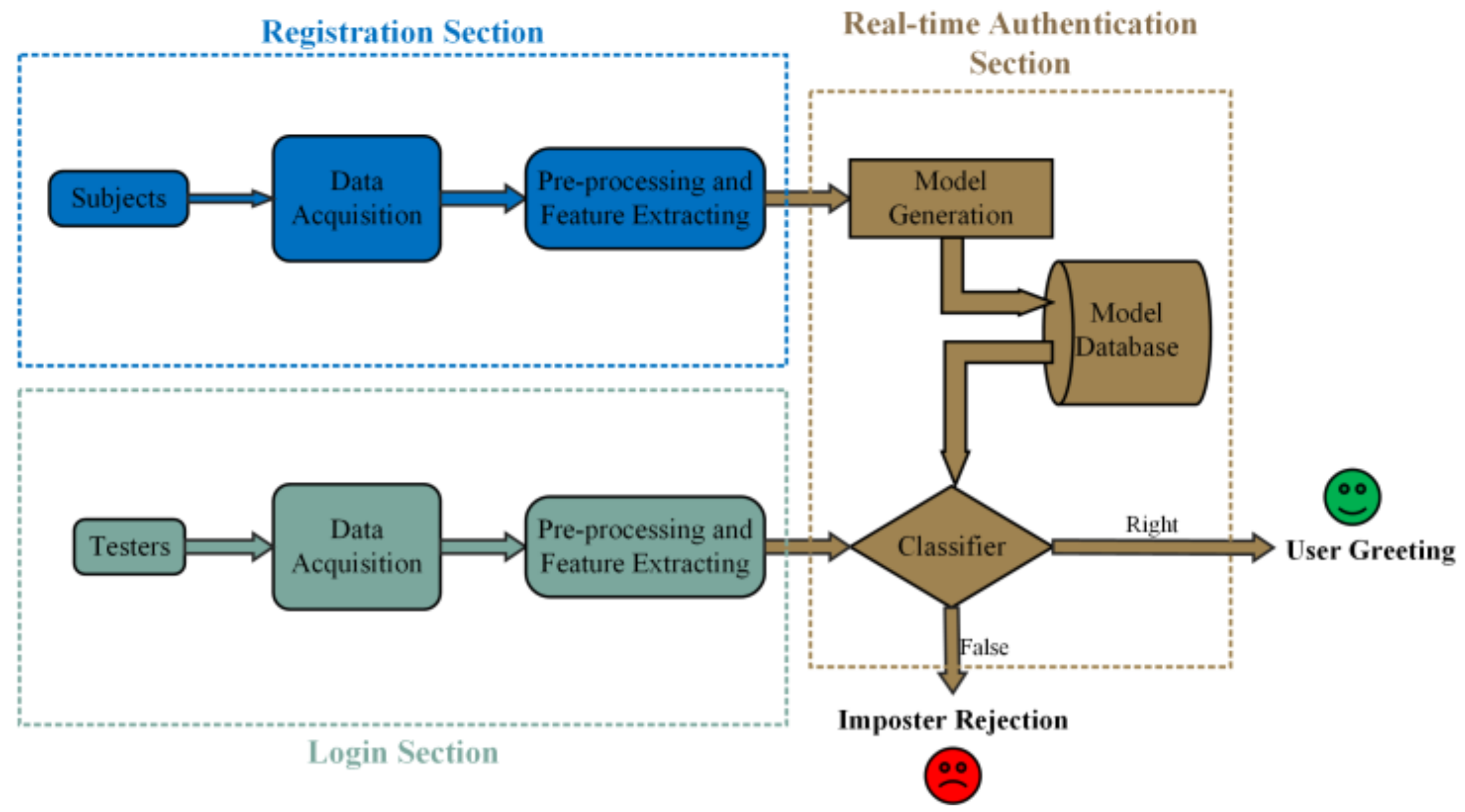

2.1. Main Framework Design

2.1.1. Main Framework of the Authentication System

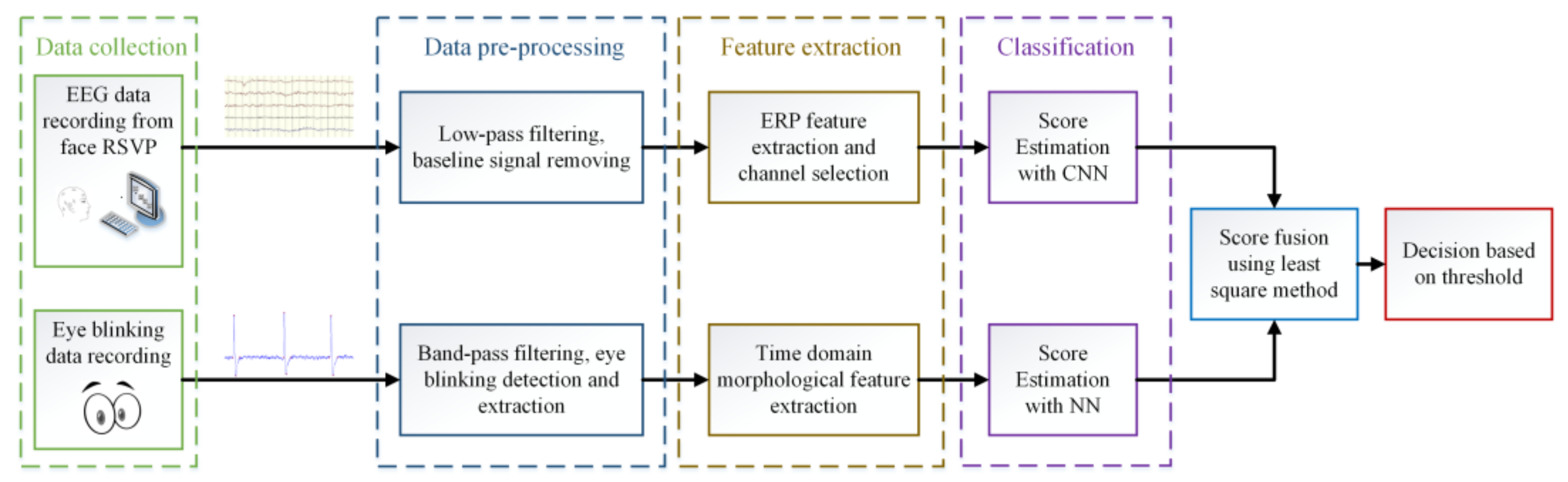

2.1.2. Main Framework of the Multi-task Authentication Method

2.2. Participants

2.3. Data Acquisition

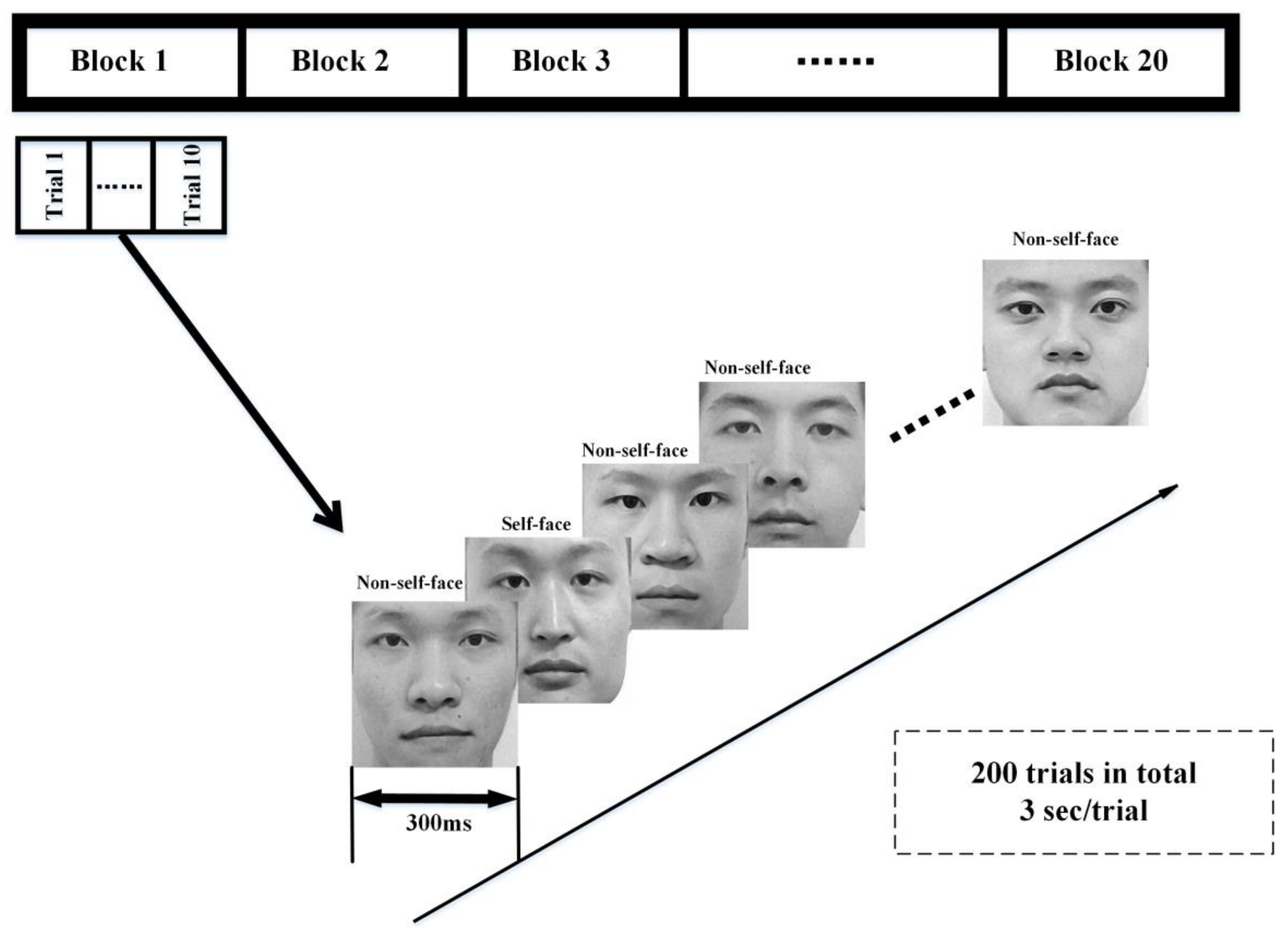

2.3.1. Visual Evoked EEG Acquisition

Self- or Non-Self-Face RSVP Paradigm

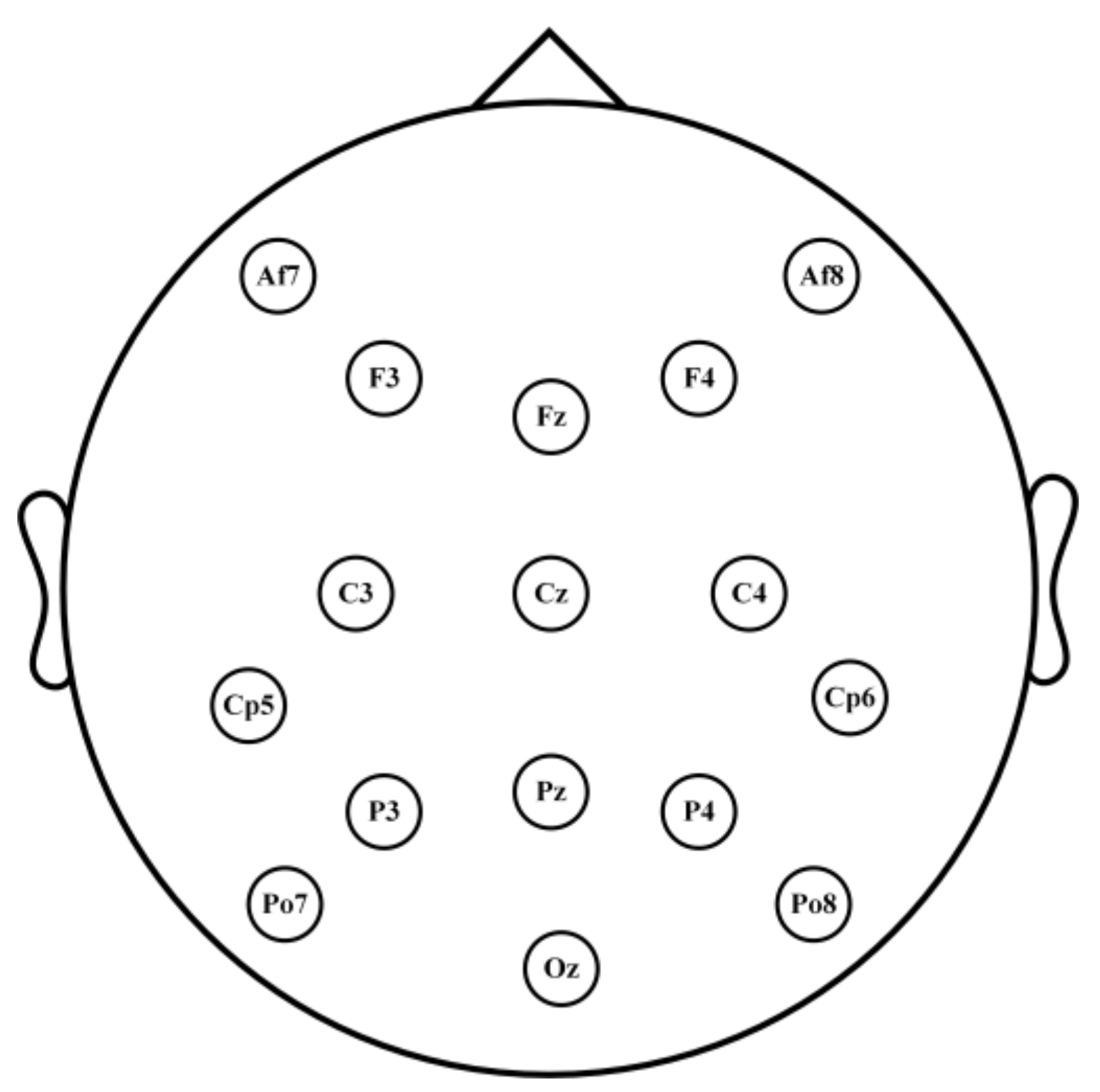

EEG Data Acquisition

2.3.2. Eye Blinking Data Acquisition

2.4. Data Preprocessing

2.4.1. Preprocessing of EEG Signals

2.4.2. Preprocessing of Eye Blinking Signals

2.5. Feature Extraction

2.5.1. Feature Extraction of EEG

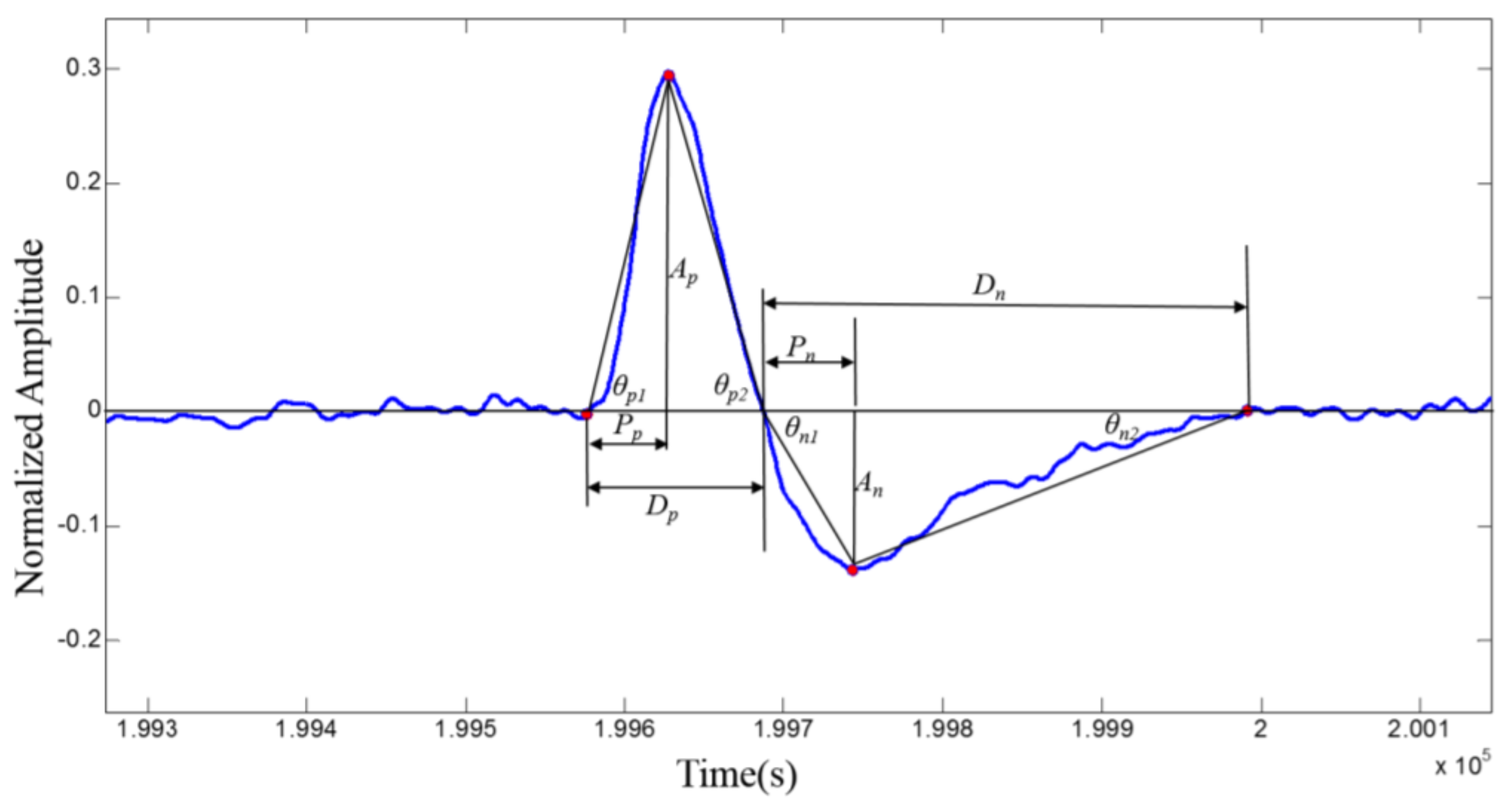

2.5.2. Feature Extraction of Eye Blinking Signals

2.6. Score Estimation with CNN and NN

2.6.1. Score Estimation of EEG Features with CNN

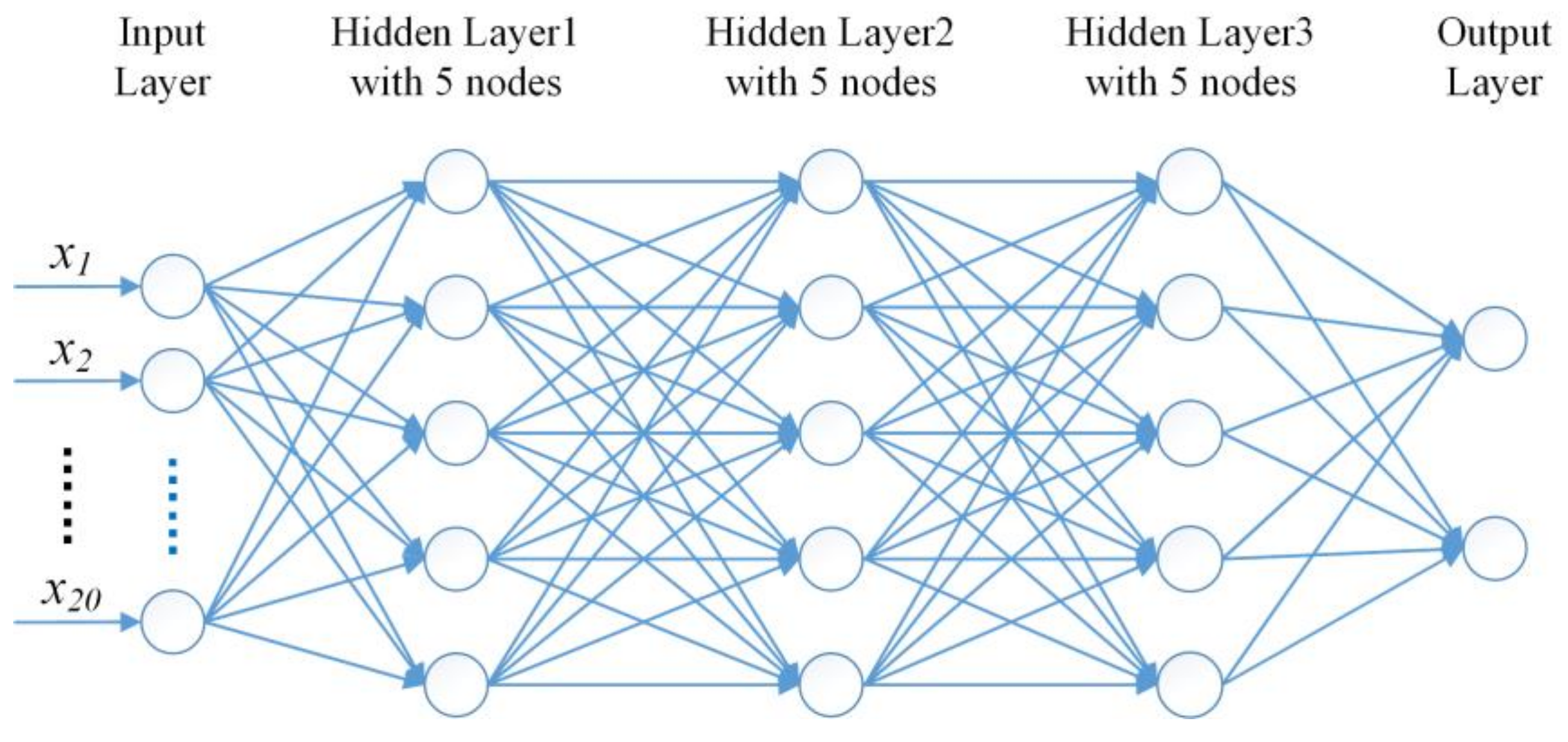

2.6.2. Score Estimation of Eye Blinking Features with NN

2.7. Score Fusion Using the Least Squares Method

3. Results

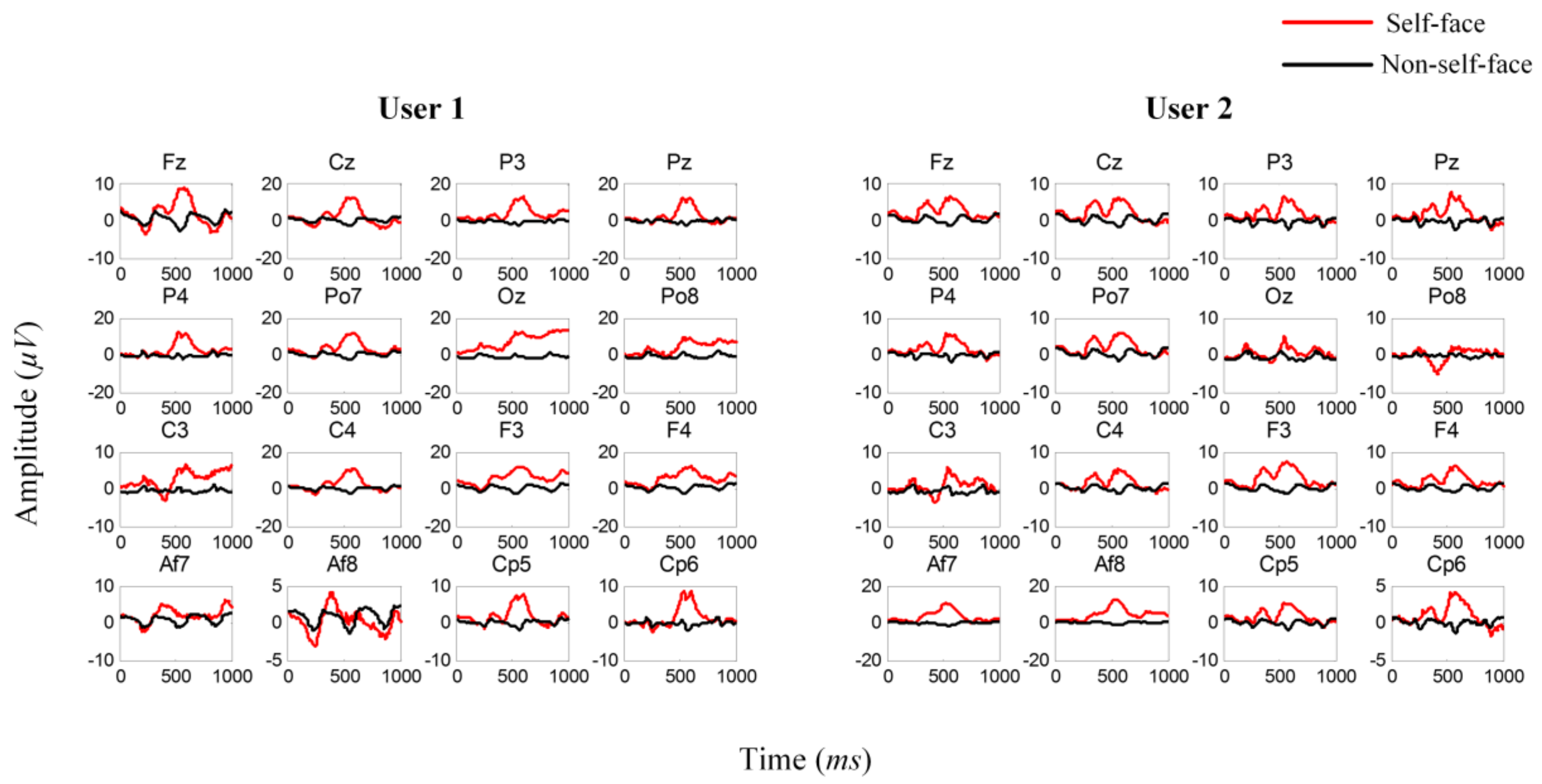

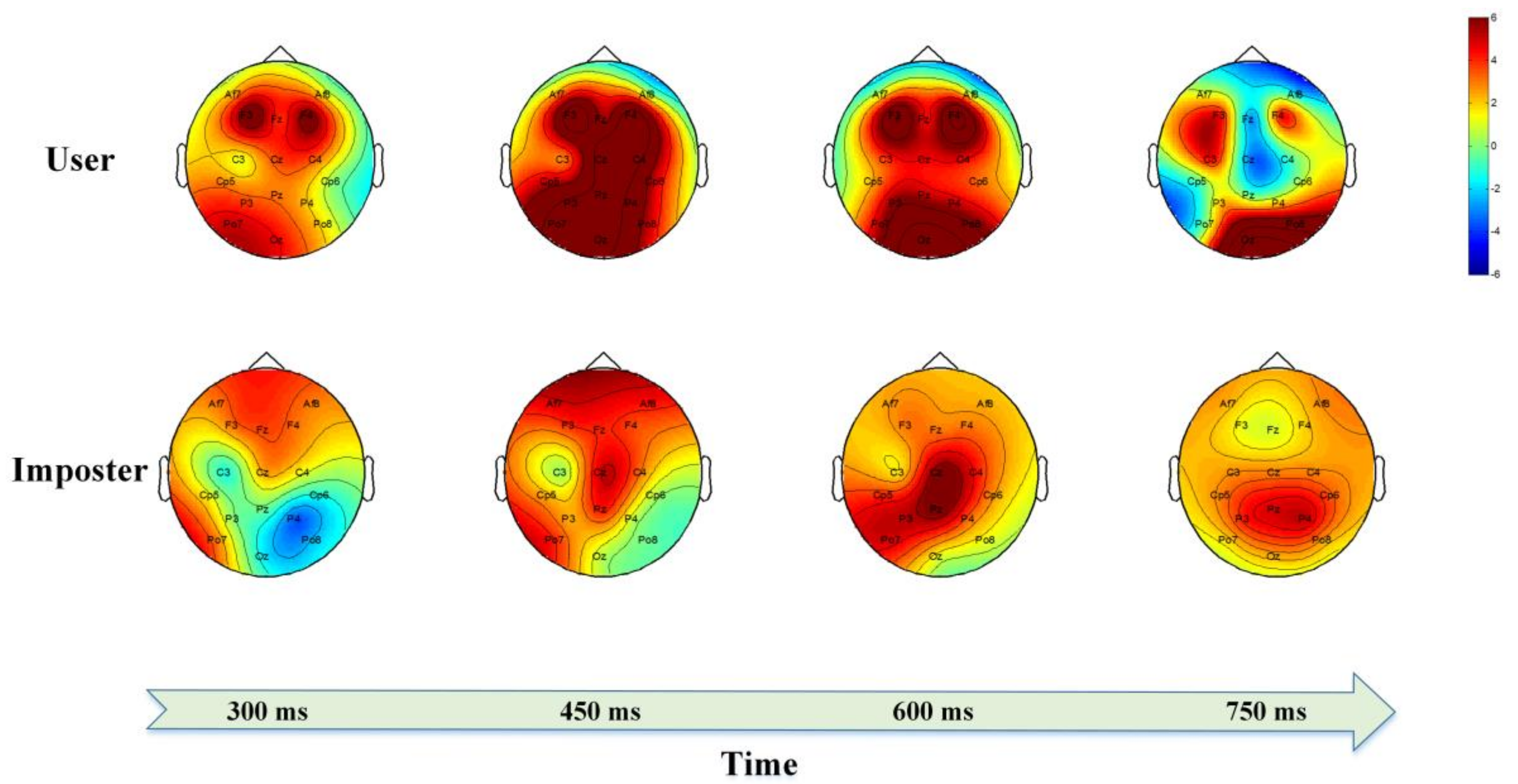

3.1. Average ERPs Analysis

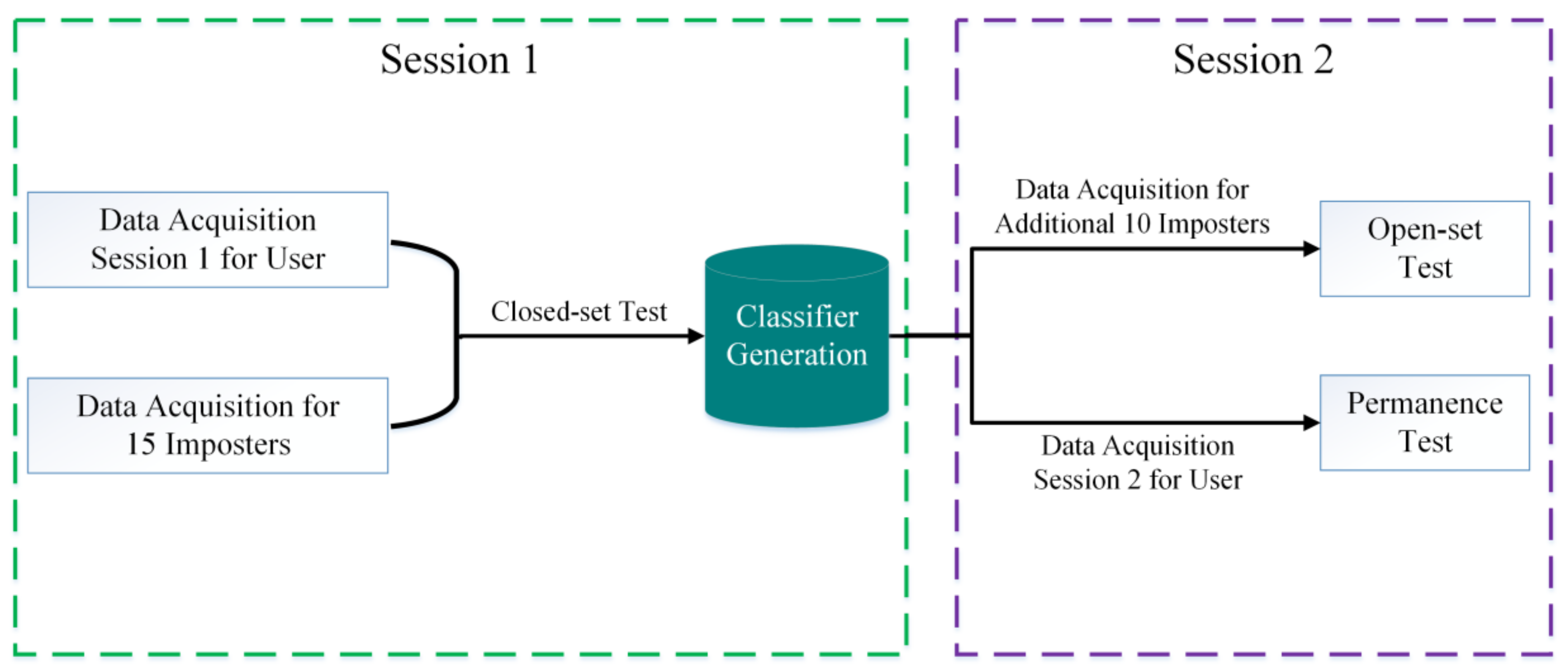

3.2. Closed-Set Authentication Result

- (1) For each sample x in the minority class, calculate its Euclidean distance to all other samples in the minority-class sample set, and obtain its K nearest neighbors.

- (2) Set an oversampling rate N according to the class imbalance ratio. For each minority sample x, randomly select several samples from its K nearest neighbors.

- (3) For each randomly selected neighbor, construct a new sample from that neighbor and the original sample x according to the following formula:
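Steps (1)–(3) describe SMOTE-style oversampling. The formula itself did not survive extraction; the standard SMOTE rule (Chawla et al., 2002) interpolates x_new = x + δ(x̃ − x) with δ drawn uniformly from [0, 1), where x̃ is the selected neighbor. The sketch below assumes that standard rule; the function name `smote`, the default K = 5, and drawing neighbors with replacement are illustrative choices, not details taken from the paper.

```python
import math
import random


def smote(minority, n_rate, k=5, seed=None):
    """Standard SMOTE oversampling (assumed rule, not confirmed as the paper's exact variant).

    minority: list of equal-length feature vectors from the minority class
    n_rate:   synthetic samples generated per minority sample (the rate N)
    k:        number of nearest neighbours (K); 5 is only a common default
    """
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)

    # Step (1): K nearest minority-class neighbours by Euclidean distance
    neighbours = []
    for i, x in enumerate(minority):
        ranked = sorted((math.dist(x, y), j) for j, y in enumerate(minority) if j != i)
        neighbours.append([j for _, j in ranked[:k]])

    synthetic = []
    for i, x in enumerate(minority):
        # Step (2): draw n_rate neighbours at random (with replacement)
        for _ in range(n_rate):
            nb = minority[rng.choice(neighbours[i])]
            # Step (3): x_new = x + delta * (nb - x), delta ~ U[0, 1)
            delta = rng.random()
            synthetic.append([a + delta * (b - a) for a, b in zip(x, nb)])
    return synthetic
```

Because every synthetic point lies on the segment between a minority sample and one of its neighbors, oversampling never leaves the region spanned by the existing minority data; for example, `smote([[0.0], [10.0]], 5, seed=1)` returns ten values between 0 and 10.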

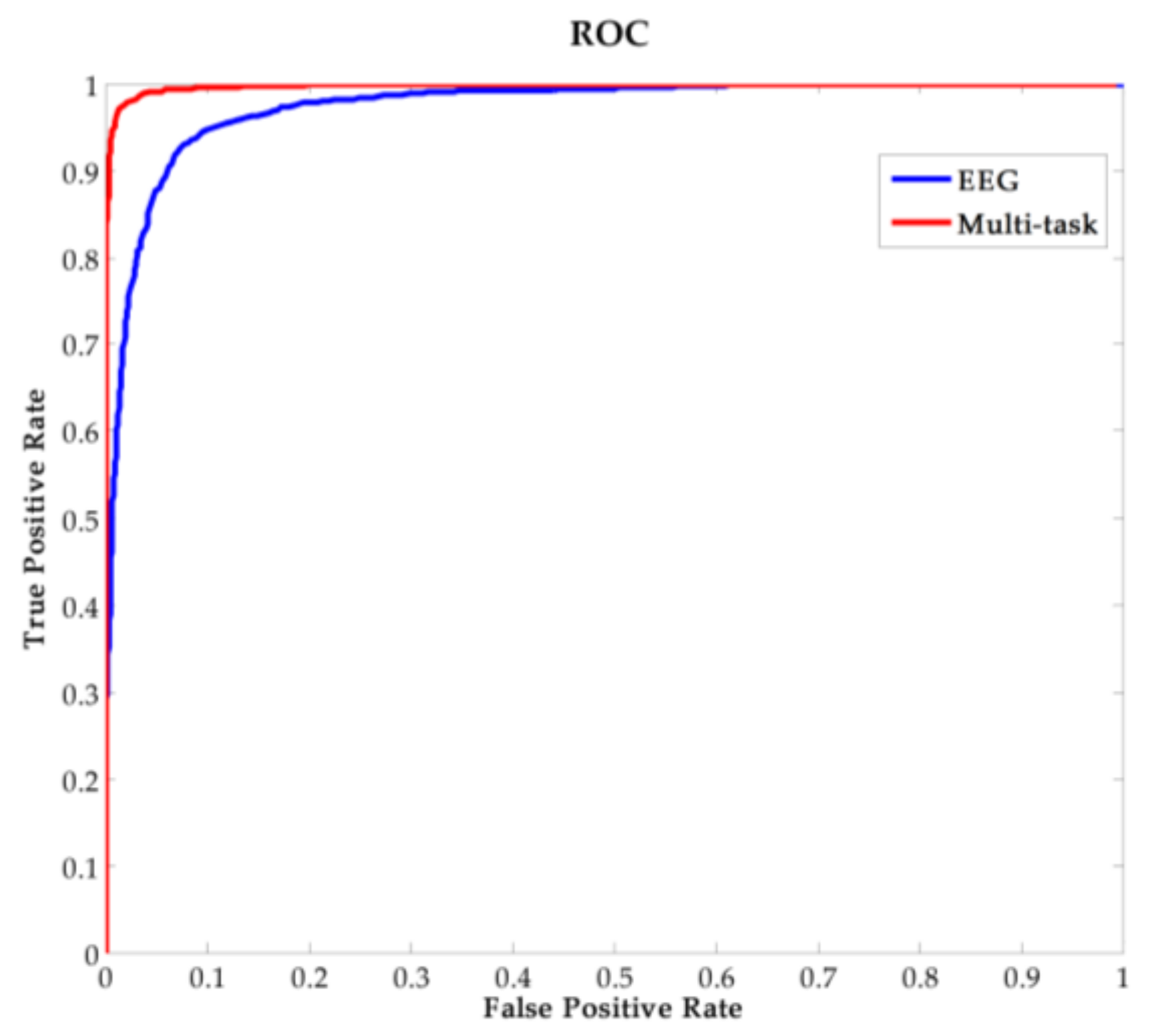

3.3. Open-Set Authentication Result

3.4. Permanence Tests for Users

4. Discussion

4.1. Comparison with the Existing EEG-Based Authentication Systems

4.2. Future Research Directions

5. Conclusions

Acknowledgment

Author Contributions

Conflicts of Interest

References

- Banville, H.; Falk, T.H. Recent advances and open challenges in hybrid brain-computer interfacing: A technological review of non-invasive human research. Brain-Comput. Interfaces 2016, 3, 9–46.

- Weng, R.; Lu, J.; Tan, Y.P. Robust point set matching for partial face recognition. IEEE Trans. Image Process. 2016, 25, 1163–1176.

- Kouamo, S.; Tangha, C. Fingerprint recognition with artificial neural networks: Application to e-learning. J. Intell. Learn. Syst. Appl. 2016, 8, 39–49.

- Gunson, N.; Marshall, D.; Mcinnes, F.; Jack, M. Usability evaluation of voiceprint authentication in automated telephone banking: Sentences versus digits. Interact. Comput. 2011, 23, 57–69.

- Marsico, M.D.; Petrosino, A.; Ricciardi, S. Iris recognition through machine learning techniques: A survey. Pattern Recognit. Lett. 2016, 82, 106–115.

- Abo-Zahhad, M.; Ahmed, S.M.; Abbas, S.N. State-of-the-art methods and future perspectives for personal recognition based on electroencephalogram signals. IET Biom. 2015, 4, 179–190.

- Palaniappan, R.; Mandic, D.P. Biometrics from brain electrical activity: A machine learning approach. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 738–742.

- Bassett, D.S.; Gazzaniga, M.S. Understanding complexity in the human brain. Trends Cognit. Sci. 2011, 15, 200–209.

- Pozo-Banos, M.D.; Alonso, J.B.; Ticay-Rivas, J.R.; Travieso, C.M. Electroencephalogram subject identification: A review. Expert Syst. Appl. 2014, 41, 6537–6554.

- Poulos, M.; Rangoussi, M.; Chrissikopoulos, V.; Evangelou, A. Person identification based on parametric processing of the EEG. In Proceedings of the 6th IEEE International Conference on Electronics, Circuits and Systems, Pafos, Cyprus, 5–8 September 1999; Volume 281, pp. 283–286.

- Ravi, K.V.R.; Palaniappan, R. Leave-one-out authentication of persons using 40 Hz EEG oscillations. In Proceedings of the International Conference on Computer as a Tool, Belgrade, Serbia, 21–24 November 2005; pp. 1386–1389.

- Snodgrass, J.G.; Vanderwart, M. A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. J. Exp. Psychol. Hum. Learn. Mem. 1980, 6, 174–215.

- Sun, S. Multitask learning for EEG-based biometrics. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4.

- Yeom, S.K.; Suk, H.I.; Lee, S.W. Person authentication from neural activity of face-specific visual self-representation. Pattern Recognit. 2013, 46, 1159–1169.

- Miyakoshi, M.; Kanayama, N.; Iidaka, T.; Ohira, H. EEG evidence of face-specific visual self-representation. Neuroimage 2010, 50, 1666–1675.

- Sugiura, M.; Sassa, Y.; Jeong, H.; Horie, K.; Sato, S.; Kawashima, R. Face-specific and domain-general characteristics of cortical responses during self-recognition. Neuroimage 2008, 42, 414–422.

- Sharma, P.K.; Vaish, A. Individual identification based on neuro-signal using motor movement and imaginary cognitive process. Opt. Int. J. Light Electron Opt. 2016, 127, 2143–2148.

- Potter, M.C. Rapid serial visual presentation (RSVP): A method for studying language processing. New Methods Read. Compr. Res. 1984, 118, 91–118.

- Acqualagna, L.; Blankertz, B. Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clin. Neurophysiol. 2013, 124, 901–908.

- Thulasidas, M.; Guan, C.; Wu, J. Robust classification of EEG signal for brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 24–29.

- Wu, Q.; Zeng, Y.; Lin, Z.; Wang, X.; Yan, B. Real-time EEG-based person authentication system using face rapid serial visual presentation. In Proceedings of the 8th International IEEE EMBS Conference on Neural Engineering, Shanghai, China, 25–28 May 2017; pp. 564–567.

- Pham, T.; Ma, W.; Tran, D.; Nguyen, P. Multi-factor EEG-based user authentication. In Proceedings of the International Joint Conference on Neural Networks, Beijing, China, 6–11 July 2014; pp. 4029–4034.

- Patel, V.; Burns, M.; Chandramouli, R.; Vinjamuri, R. Biometrics based on hand synergies and their neural representations. IEEE Access 2017, 5, 13422–13429.

- Abo-Zahhad, M.; Ahmed, S.M.; Abbas, S.N. A novel biometric approach for human identification and verification using eye blinking signal. IEEE Signal Process. Lett. 2014, 22, 876–880.

- Abo-Zahhad, M.; Ahmed, S.M.; Abbas, S.N. A new multi-level approach to EEG based human authentication using eye blinking. Pattern Recognit. Lett. 2016, 82, 216–225.

- Li, Y.; Ma, Z.; Lu, W.; Li, Y. Automatic removal of the eye blink artifact from EEG using an ICA-based template matching approach. Physiol. Meas. 2006, 27, 425.

- Chen, Y.; Atnafu, A.D.; Schlattner, I.; Weldtsadik, W.T.; Roh, M.-C.; Kim, H.J.; Lee, S.-W.; Blankertz, B.; Fazli, S. A high-security EEG-based login system with RSVP stimuli and dry electrodes. IEEE Trans. Inf. Forensics Secur. 2016, 11, 2635–2647.

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113.

- Li, J.; Zhang, Z.; He, H. Implementation of EEG emotion recognition system based on hierarchical convolutional neural networks. In Proceedings of the International Conference on Brain Inspired Cognitive Systems, Beijing, China, 28–30 November 2016; pp. 22–33.

- Armstrong, B.C.; Ruiz-Blondet, M.V.; Khalifian, N.; Kurtz, K.J.; Jin, Z.; Laszlo, S. Brainprint: Assessing the uniqueness, collectability, and permanence of a novel method for ERP biometrics. Neurocomputing 2015, 166, 59–67.

- Marcel, S.; Millan, J.D.R. Person authentication using brainwaves (EEG) and maximum a posteriori model adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 743–752.

- Mu, Z.; Hu, J.; Min, J. EEG-based person authentication using a fuzzy entropy-related approach with two electrodes. Entropy 2016, 18, 432.

| Category | Symbol | Definition |
|---|---|---|
| Energy features | Ap | Amplitude of positive peak of the eye blinking signal |
| | An | Amplitude of negative peak of the eye blinking signal |
| | Ep | Area under positive pulse of the eye blinking signal |
| | En | Area under negative pulse of the eye blinking signal |
| Position features | Pp | Position of positive peak of the eye blinking signal |
| | Pn | Position of negative peak of the eye blinking signal |
| | Dp | Duration of positive pulse of the eye blinking signal |
| | Dn | Duration of negative pulse of the eye blinking signal |
| Slope features | S1 | Slope of the onset of the positive pulse (tan(θp1)) |
| | S2 | Slope of the onset of the negative pulse (tan(θn1)) |
| | S3 | Slope of the offset of the positive pulse (tan(θp2)) |
| | S4 | Slope of the offset of the negative pulse (tan(θn2)) |
| | S5 | Dispersion degree of the positive pulse (σp/meanp) |
| | S6 | Dispersion degree of the negative pulse (σn/meann) |
| Derivative features | D1 | Amplitude of positive peak of first derivative |
| | D2 | Amplitude of negative peak of first derivative |
| | D3 | Position of the positive peak of first derivative |
| | D4 | Position of the negative peak of first derivative |
| | D5 | Number of zero crossings of the first derivative |
| | D6 | Number of zero crossings of the second derivative |
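Several of the tabulated blink features can be read directly off one blink epoch. The sketch below computes the energy, peak-position, and derivative features under assumptions of our own: positions are in seconds from the epoch start, areas use the rectangle rule, En is returned as a positive magnitude, and derivatives are simple finite differences. The duration and slope features (Dp, Dn, S1–S6) are omitted because the pulse onset and offset points are not defined in this text. The names `blink_features` and `zero_crossings` are hypothetical.

```python
def zero_crossings(x):
    """Count strict sign changes between consecutive samples (zeros are not counted)."""
    return sum(1 for a, b in zip(x, x[1:]) if a * b < 0)


def blink_features(signal, fs):
    """Compute a subset of the eye-blink features from one blink epoch.

    Assumed interpretations: positions in seconds, rectangle-rule areas,
    En as a positive magnitude, finite-difference derivatives.
    """
    if len(signal) < 3:
        raise ValueError("need at least three samples for the second derivative")
    dt = 1.0 / fs
    ap, an = max(signal), min(signal)
    d1 = [(b - a) / dt for a, b in zip(signal, signal[1:])]  # first derivative
    d2 = [(b - a) / dt for a, b in zip(d1, d1[1:])]          # second derivative
    return {
        "Ap": ap,                                   # positive peak amplitude
        "An": an,                                   # negative peak amplitude (signed)
        "Ep": sum(v for v in signal if v > 0) * dt, # area under positive pulse
        "En": -sum(v for v in signal if v < 0) * dt,# area under negative pulse
        "Pp": signal.index(ap) * dt,                # position of positive peak
        "Pn": signal.index(an) * dt,                # position of negative peak
        "D1": max(d1),
        "D2": min(d1),
        "D3": d1.index(max(d1)) * dt,
        "D4": d1.index(min(d1)) * dt,
        "D5": zero_crossings(d1),
        "D6": zero_crossings(d2),
    }
```

For a toy blink `[0, 1, 3, 1, 0, -1, -2, -1, 0]` sampled at 1 Hz, this gives Ap = 3 at 2 s, An = −2 at 6 s, Ep = 5, En = 4, and two zero crossings in each derivative.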

| User | ACC, EEG (%) | ACC, Multi-Task (%) | FAR, EEG (%) | FAR, Multi-Task (%) | FRR, EEG (%) | FRR, Multi-Task (%) |
|---|---|---|---|---|---|---|
| 1 | 91.33 | 99.67 | 6.00 | 0.67 | 11.33 | 0.00 |
| 2 | 96.67 | 98.00 | 3.33 | 1.33 | 3.33 | 2.67 |
| 3 | 98.00 | 98.00 | 3.33 | 3.33 | 0.67 | 0.67 |
| 4 | 93.67 | 98.33 | 6.00 | 2.00 | 6.67 | 1.33 |
| 5 | 88.33 | 95.67 | 8.00 | 4.67 | 15.33 | 4.00 |
| 6 | 97.67 | 98.33 | 2.00 | 2.00 | 2.67 | 1.33 |
| 7 | 85.00 | 96.00 | 14.67 | 3.33 | 15.33 | 4.67 |
| 8 | 90.67 | 99.67 | 4.67 | 0.67 | 14.00 | 0.00 |
| 9 | 90.67 | 94.67 | 8.00 | 6.67 | 10.67 | 4.00 |
| 10 | 94.33 | 97.67 | 7.33 | 2.67 | 4.00 | 2.00 |
| 11 | 93.33 | 95.00 | 6.00 | 6.00 | 7.33 | 4.00 |
| 12 | 93.33 | 98.00 | 6.67 | 2.67 | 6.67 | 1.33 |
| 13 | 91.67 | 96.67 | 7.33 | 3.33 | 9.33 | 3.33 |
| 14 | 91.67 | 99.67 | 9.33 | 0.00 | 7.33 | 0.67 |
| 15 | 89.67 | 98.67 | 8.00 | 1.33 | 12.67 | 1.33 |
| Mean (std) | 92.40 (3.50) | 97.60 (1.65) | 6.71 (3.01) | 2.71 (1.93) | 8.49 (4.68) | 2.09 (1.57) |
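Every row of the closed-set table is consistent, to rounding, with ACC = 100 − (FAR + FRR)/2, which is the relation one obtains when the genuine and impostor test sets are the same size (e.g., user 1, EEG: 100 − (6.00 + 11.33)/2 ≈ 91.33). The equal-size interpretation is an inference from the numbers, not something stated in this text. The check below copies the per-user values from the table; the helper names are hypothetical.

```python
def balanced_accuracy(far, frr):
    """ACC (%) implied by FAR and FRR (%) when genuine and impostor trials are equally many."""
    return 100.0 - (far + frr) / 2.0


def max_gap(rows):
    """Largest |reported ACC - ACC implied by FAR and FRR| over (ACC, FAR, FRR) rows."""
    return max(abs(acc - balanced_accuracy(far, frr)) for acc, far, frr in rows)


# (ACC, FAR, FRR) per user, copied from the closed-set table (users 1-15)
EEG = [(91.33, 6.00, 11.33), (96.67, 3.33, 3.33), (98.00, 3.33, 0.67),
       (93.67, 6.00, 6.67), (88.33, 8.00, 15.33), (97.67, 2.00, 2.67),
       (85.00, 14.67, 15.33), (90.67, 4.67, 14.00), (90.67, 8.00, 10.67),
       (94.33, 7.33, 4.00), (93.33, 6.00, 7.33), (93.33, 6.67, 6.67),
       (91.67, 7.33, 9.33), (91.67, 9.33, 7.33), (89.67, 8.00, 12.67)]
MULTI = [(99.67, 0.67, 0.00), (98.00, 1.33, 2.67), (98.00, 3.33, 0.67),
         (98.33, 2.00, 1.33), (95.67, 4.67, 4.00), (98.33, 2.00, 1.33),
         (96.00, 3.33, 4.67), (99.67, 0.67, 0.00), (94.67, 6.67, 4.00),
         (97.67, 2.67, 2.00), (95.00, 6.00, 4.00), (98.00, 2.67, 1.33),
         (96.67, 3.33, 3.33), (99.67, 0.00, 0.67), (98.67, 1.33, 1.33)]

print(f"max gap EEG: {max_gap(EEG):.3f}, Multi-Task: {max_gap(MULTI):.3f}")
```

The largest discrepancy in either column is about 0.005 percentage points, i.e., pure rounding of the two-decimal entries.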

| User | FAR, EEG (%) | FAR, Multi-Task (%) |
|---|---|---|
| 1 | 3.60 | 2.70 |
| 2 | 3.90 | 3.00 |
| 3 | 1.30 | 1.40 |
| 4 | 6.30 | 5.50 |
| 5 | 5.70 | 4.70 |
| 6 | 2.50 | 1.30 |
| 7 | 12.90 | 5.80 |
| 8 | 5.10 | 2.20 |
| 9 | 8.70 | 6.30 |
| 10 | 6.70 | 3.90 |
| 11 | 3.80 | 4.00 |
| 12 | 4.00 | 1.90 |
| 13 | 4.50 | 8.90 |
| 14 | 3.40 | 2.10 |
| 15 | 13.80 | 4.80 |
| Mean (std) | 5.75 (3.57) | 3.90 (2.13) |

| User | FRR, EEG (%) | FRR, Multi-Task (%) |
|---|---|---|
| 1 | 6.00 | 2.00 |
| 2 | 0.00 | 0.00 |
| 3 | 2.00 | 0.00 |
| 4 | 12.00 | 16.00 |
| 5 | 16.00 | 8.00 |
| 6 | 2.00 | 6.00 |
| 7 | 10.00 | 6.00 |
| 8 | 14.00 | 0.00 |
| 9 | 8.00 | 6.00 |
| 10 | 4.00 | 4.00 |
| 11 | 2.00 | 2.00 |
| 12 | 2.00 | 4.00 |
| 13 | 10.00 | 2.00 |
| 14 | 10.00 | 0.00 |
| 15 | 8.00 | 2.00 |
| Mean (std) | 7.07 (4.95) | 3.87 (4.24) |
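The "Mean (std)" rows of the FAR and FRR tables can be reproduced from the per-user values, and they match the sample standard deviation (denominator n − 1) rather than the population one; for the EEG FRR column, for instance, the population value would be about 4.78 instead of the reported 4.95. The sketch below uses Python's standard `statistics` module; the helper name `mean_std` is hypothetical.

```python
import statistics


def mean_std(values):
    """Mean and sample standard deviation (n - 1 denominator), rounded to two decimals."""
    return round(statistics.mean(values), 2), round(statistics.stdev(values), 2)


# Per-user values (users 1-15) copied from the FAR and FRR tables
FAR_EEG = [3.60, 3.90, 1.30, 6.30, 5.70, 2.50, 12.90, 5.10, 8.70, 6.70, 3.80, 4.00, 4.50, 3.40, 13.80]
FAR_MULTI = [2.70, 3.00, 1.40, 5.50, 4.70, 1.30, 5.80, 2.20, 6.30, 3.90, 4.00, 1.90, 8.90, 2.10, 4.80]
FRR_EEG = [6.00, 0.00, 2.00, 12.00, 16.00, 2.00, 10.00, 14.00, 8.00, 4.00, 2.00, 2.00, 10.00, 10.00, 8.00]
FRR_MULTI = [2.00, 0.00, 0.00, 16.00, 8.00, 6.00, 6.00, 0.00, 6.00, 4.00, 2.00, 4.00, 2.00, 0.00, 2.00]

for name, col in [("FAR EEG", FAR_EEG), ("FAR Multi-Task", FAR_MULTI),
                  ("FRR EEG", FRR_EEG), ("FRR Multi-Task", FRR_MULTI)]:
    m, s = mean_std(col)
    print(f"{name}: {m:.2f} ({s:.2f})")
```

Running this prints 5.75 (3.57), 3.90 (2.13), 7.07 (4.95), and 3.87 (4.24), matching the tables.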

| Author | Data Type | Time Cost (s) | ACC (%) | FAR (%) | FRR (%) | Open-Set Test | Permanence Test |
|---|---|---|---|---|---|---|---|
| Armstrong et al. [30] | Text reading related EEG | Not mentioned | 89 | Not mentioned | Not mentioned | None | Yes |
| Yeom et al. [14] | Visual evoked related EEG | 31.5–41 | 86.1 | 13.9 | 13.9 | None | None |
| Marcel et al. [31] | Motor imagery related EEG | 15 | 80.7 | 14.4 | 24.3 | None | None |
| Mu et al. [32] | Visual evoked related EEG | 6.5 | 87.3 | 5.5 | 5.6 | None | None |
| Patel et al. [23] | EEG and hand synergies | 8 | 92.5 | Not mentioned | Not mentioned | None | None |
| Abo-Zahhad et al. [25] | EEG and eye blinking signal | Not mentioned | 98.65 | Not mentioned | Not mentioned | None | None |
| This paper | EEG and eye blinking signal | 7 | 97.6 | 2.71 | 2.09 | Yes | Yes |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wu, Q.; Zeng, Y.; Zhang, C.; Tong, L.; Yan, B. An EEG-Based Person Authentication System with Open-Set Capability Combining Eye Blinking Signals. Sensors 2018, 18, 335. https://doi.org/10.3390/s18020335


Chicago/Turabian Style: Wu, Qunjian, Ying Zeng, Chi Zhang, Li Tong, and Bin Yan. 2018. "An EEG-Based Person Authentication System with Open-Set Capability Combining Eye Blinking Signals." Sensors 18, no. 2: 335. https://doi.org/10.3390/s18020335