1. Introduction

Sensor technologies aim to make human life more convenient and intelligent, and they have advanced considerably in recent years. For example, wearable sensors make health monitoring and early disease classification possible with minimal discomfort [1,2], optical sensors used in clinical diagnosis have made the detection of specific compounds such as calcium easier [3], and motion sensors have turned smartphones from mere communication tools into a means of providing personalized services [4].

Specific sensors make it possible to identify chemical gases. Electronic-Nose (E-Nose) systems, also known as machine olfaction, are one such technology. Combined with pattern recognition techniques, E-Nose systems can classify multiple gases mixed together in different concentrations [5], which has led to wide application in airport and train station checkpoints [6], food security [7], environmental monitoring [8], clinical diagnosis [9] and so on. Despite these applications, E-Nose systems suffer from a major problem: the recognition capability of the sensors degrades over time. In E-Nose systems, the readings X depend on the chemical reactions between gas compounds and the sensor materials, and a recognition mechanism such as machine learning connects the diverse gas types with their corresponding readings. Mathematically, such a connection can be denoted by a function f. Given a properly trained f, the outputs should match all designated gas compounds. In practice, however, even when f is trained perfectly on the collected data, its outputs gradually fail to match the right gases. This phenomenon is called sensor drift.

Currently, it is commonly accepted that sensor drift has two causes. One is the chemical process between the sensor materials and the environment, called first-order drift, and the other is system noise, namely second-order drift [10,11,12]. Researchers have tried to solve the problem through material science, sensor selection strategies and post-processing mechanisms. In material science, durable materials have been developed to prolong the life of sensors [13,14,15,16]. Meanwhile, selecting sensors that are more resilient to drift is another way to achieve the goal [17,18]. From the post-processing perspective, the drift problem can be regarded as a change in the distributions of gas labels over time. To maintain a stable recognition capability, classifier ensemble techniques have relieved the problem to some extent [19,20,21]. However, the learning process of these methods is supervised and requires human effort to label the training set beforehand. Furthermore, these methods assume that the data distributions remain the same for different gases, which is not always true. Component Correction (CC)-based methods [22,23,24] and Sequential Minimal Optimization (SMO)-based methods [25,26] are the most effective supervised ways to adjust the model to the drift. Nevertheless, CC-based methods assume that the drift acts in the same way on diverse gases, which is sometimes not the case, and SMO-based methods sometimes update the model by following a wrong reference label. Another effective and promising approach is transfer learning, namely domain adaptation. Zhang et al. [27] achieved some of the highest accuracies using semi-supervised methods, but their learning mechanism follows an offline training scheme, which makes it hard to process data generated in real time promptly. With the fast development of big data, an enormous amount of sensor data is generated every second, which makes timely processing a great challenge.

To effectively extract information from the collected data, an online learning mechanism is required that can train and update the model in a time-efficient manner without losing classification accuracy. An online learning method uses the current model and newly received data to update the analytical model. In this way, the model does not have to be retrained from scratch, and drift compensation can keep up with data generated in massive amounts. In this paper, we combine the theory of the online sequential extreme learning machine with the Domain Adaptation Extreme Learning Machine (DAELM) [27], because DAELM performs well and has achieved almost 100% accuracy on specific datasets. Our aim is to transform the offline learning version of that work into an online learning version without losing drift compensation performance. The contributions are three-fold:

The selection of representative samples plays an essential part in semi-supervised methods such as DAELM-S and DAELM-T in [27]. Therefore, we analyzed the characteristics of sample selection and provided two online sampling strategies, depending on whether the testing error can be used as feedback.

To preserve the high accuracies of domain adaptation-based methods while saving update time, we combined DAELM-S with the theory of the online sequential extreme learning machine and proposed the Online Source Domain Adaptation Extreme Learning Machine (ODAELM-S). In ODAELM-S, only the source domain and a few labeled samples contribute to the model. When new labeled data are identified, ODAELM-S updates the model in a time-efficient manner.

To balance the effects of labeled and unlabeled samples, we transformed DAELM-T into its online learning version and proposed the Online Target Domain Adaptation Extreme Learning Machine (ODAELM-T). Unlike ODAELM-S, which relies only on the source domain and the labeled set, ODAELM-T incorporates the effects of both the labeled and the unlabeled sets into the model. Based on the changes in the two sets, the update phase is divided into three learning processes, namely unlabeled incremental learning, unlabeled decremental learning and labeled incremental learning.

The remainder of the paper is organized as follows. Section 2 introduces preliminaries on online processing in sensors, domain adaptation and the extreme learning machine to help in understanding the methods in the paper. The methodologies of ODAELM-S and ODAELM-T are detailed in Section 3. Section 4 describes the dataset used in the experiments and the experimental set-up, followed by a detailed analysis of the results. Conclusions are drawn in Section 5. For ease of reference, abbreviations of the frequently used terms are listed after Section 5.

3. Online Domain Adaptation Extreme Learning Machines

To achieve timely processing without losing recognition accuracy under sensor drift, we transform current state-of-the-art batch learning methods into their corresponding online versions. Domain adaptation-based drift compensation has been shown to achieve high accuracies in [27]. The two algorithms, namely DAELM-S and DAELM-T, are based on batch learning and require selecting a group of representative samples beforehand. This selection algorithm relies on the distribution of the entire dataset. In an online processing scenario, however, samples in the target domains arrive in sequence as a data flow, so the original sample selection method is not applicable. Even if we can determine when to select the representative samples, the time and human effort this costs remain a problem. Additionally, the batch learning mechanisms of DAELM-S and DAELM-T compute the classifier from the full dataset. Since the data arrive in sequence, the data used in the next update inevitably overlap with those used in previous updates. The same data are therefore processed repeatedly over time, which costs more time and resources as the target domain grows.

In an online learning model based on either DAELM-S or DAELM-T, we wish to maintain the performance of the two methods while overcoming the obstacles described in the previous paragraph.

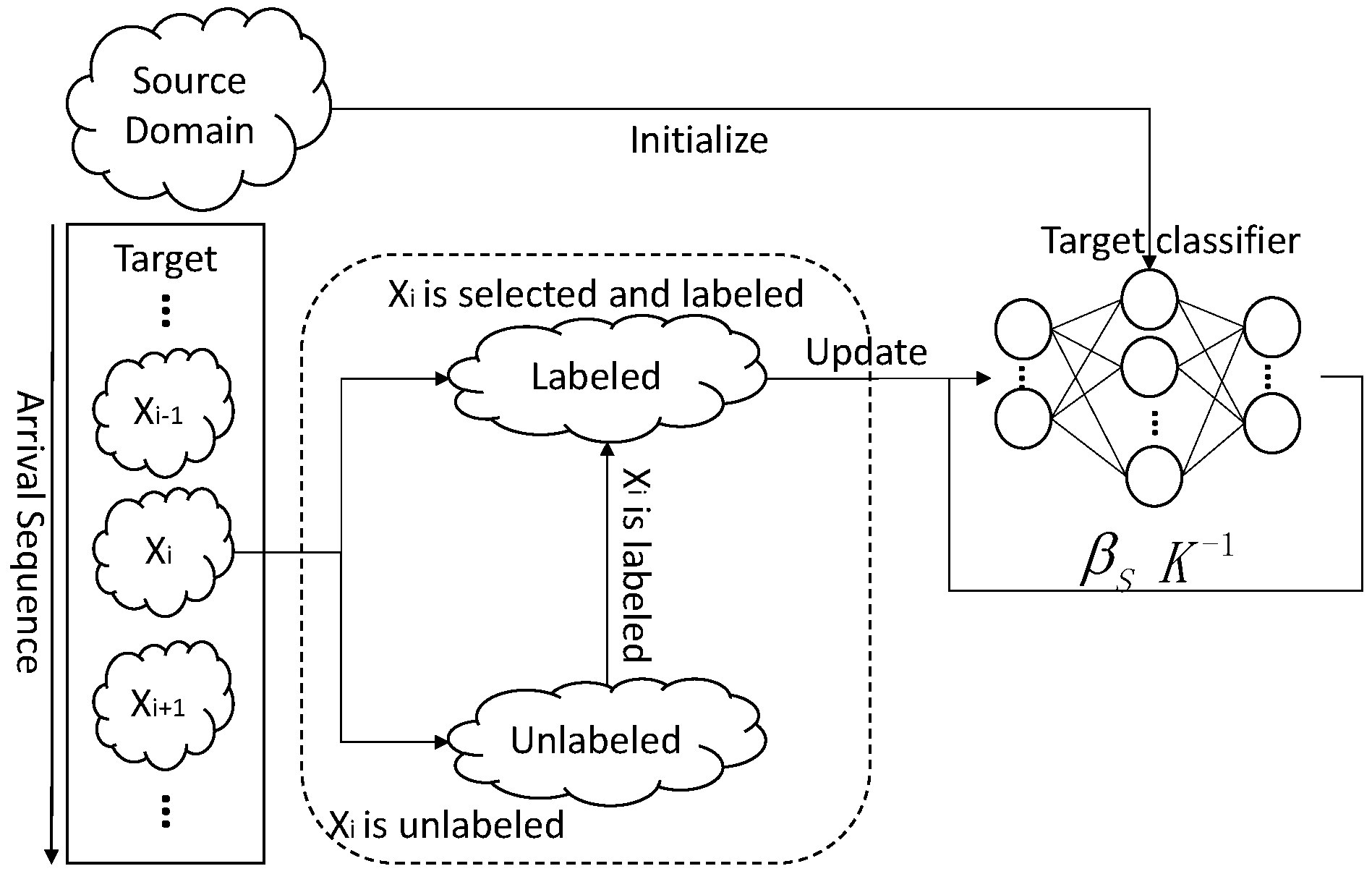

Figure 1 shows how the samples in a target domain change when a new sample arrives. The labeled set refers to the manually labeled samples, and the unlabeled set contains the rest of the samples in the target domain. The arrival of the new sample triggers one of two possible cases, depending on whether a group of samples in the unlabeled set is selected and labeled. When no selection and labeling happens, the new sample is added to the unlabeled set and the labeled set remains unchanged. In this case, the change of samples consists of only one sample, namely the new sample itself. When selection and labeling happen, the situation is more complicated. Given some sample selection algorithm, a group of representative samples is selected from the target samples received to date. Note that, in this case, the unlabeled set includes the new sample. Because the latest selected sample set may overlap with the current labeled samples, the change of samples is the difference set between the latest selected sample set and the current labeled set. The blue dashed circle in the figure represents this difference set. When labeling happens, the difference set is removed from the unlabeled set and added to the labeled set. The sample drawn inside the difference set in the figure stands for any possible selected sample, including the new sample.

In the classic ELM, a variant called OSELM can be applied to reduce the calculation when new samples arrive in sequence. A similar process can be applied to both DAELM-S and DAELM-T through incremental learning. The idea is to transform the original batch learning process into a recursive one. Taking the arrival sequence in Figure 1 as an example, when the new sample arrives, the classifier trained on the data received so far can be written as a function f of the input, determined by the parameter or parameter vector that requires training. The output of f can be the gas labels or the probabilities of the sample belonging to certain gases. In incremental learning, we wish to derive a recursive form of the training process, in which the new parameter is computed from the previous parameter, some intermediate results K and the current increment. In this way, instead of training the new classifier with all the data received to date, we only use the previous result and the current increment for updates. This avoids repeated calculation and yields a time-efficient algorithm for generating new classifiers. In OSELM, the corresponding parameter in Equation (5) serves as the intermediate result. In DAELM-S and DAELM-T, similar terms can be found to achieve the goal. Once the update algorithm is derived, the only remaining challenge is to select the representative samples in an online manner.

In the remainder of this section, we first analyze the sampling strategies for online learning. Then, the corresponding online learning versions of DAELM-S and DAELM-T are described in detail.

3.1. Online Sampling Strategies

Assuming we can determine the time for updates, the only remaining problem is to determine which samples to select. We view the classification model as an intelligent agent and the arrival sequence of the target samples as its perceptions of the environment. Following the description of intelligent agents by Russell and Norvig in [47], the actions of the model, including whether to select and label samples, depend on the entire sequence of samples received to date, but not on anything that has not yet appeared. Therefore, we can apply the Kennard–Stone (KS) algorithm [48,49] to the samples received so far to determine the selected set. Ideally, if KS could be performed whenever the distribution changes, the problem would be solved. However, the distribution may change slowly, which raises another problem: how to define a change in distribution. Although periodic collection of samples may be a compromise, the appropriate collection cycle may vary, which raises the further question of how to decide the cycle beforehand.
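The KS selection step can be sketched as follows. This is a minimal NumPy sketch of the classic Kennard–Stone rule applied to the samples received so far. It starts with the two most distant samples and then repeatedly adds the sample whose distance to its nearest already selected sample is largest. The function name `kennard_stone` and the use of Euclidean distance are our assumptions, not details taken from [48,49] or from the authors' code.

```python
import numpy as np


def kennard_stone(X, n_select):
    """Return the indices of n_select rows of X chosen by the Kennard-Stone rule.

    X holds the target samples received so far, one sample per row.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    if not 2 <= n_select <= n:
        raise ValueError("n_select must lie between 2 and the number of samples")
    # Pairwise Euclidean distances between all received samples.
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # Start from the two samples that are farthest apart.
    i, j = np.unravel_index(np.argmax(dist), dist.shape)
    selected = [int(i), int(j)]
    remaining = [k for k in range(n) if k not in selected]
    while len(selected) < n_select:
        # Distance from every remaining sample to its nearest selected sample.
        nearest = dist[np.ix_(remaining, selected)].min(axis=1)
        # Add the remaining sample that lies farthest from the selected set.
        pick = remaining[int(np.argmax(nearest))]
        selected.append(pick)
        remaining.remove(pick)
    return selected


X = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [0.0, 5.0]])
print(kennard_stone(X, 3))  # [0, 2, 3]
```

Following the description of Figure 1, the group that is actually sent for labeling would then be the part of this selection that is not already in the labeled set.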

Normally, we wish to label enough samples for the model to be precise. However, manual labeling in semi-supervised methods is time-consuming, and labeling more samples contradicts our goal of saving time. Eventually, the problem becomes a trade-off between high classification accuracy and low human effort.

Selecting and labeling samples in a predefined cycle is the easiest approach to apply, although it is not always practical since the proper cycle may vary between datasets. The reason for labeling more samples is to provide more information for tracking changes in the data. In this sense, labeling should be more likely to happen when performance degrades, and vice versa. We therefore treat labeling as a probabilistic event with the following characteristics:

The chance of labeling is inversely proportional to the number of labeled samples;

The chance of labeling is proportional to the total number of samples;

The chance of labeling is proportional to the classification error.

The performance of the model is measured by the classification accuracy of the current model, so the system needs to receive this accuracy as feedback. When the performance of the classification model is assumed to degrade, labeling should happen more frequently, and vice versa. Likewise, labeling should happen more frequently when the number of labeled samples is small, and vice versa. However, when the accuracy is not accessible, the system cannot tell whether it performs well. In this case, the system can only decide on selection and labeling based on the number of samples received so far.

Ideally, if we could determine the representative samples for each target domain as [27] does, we could achieve equally high classification accuracies in an online manner. However, the method used to determine the representative samples in [27] relies on the distribution of the entire target domain, which cannot be acquired in an online scenario. A more feasible way is to label more samples instead of labeling specific ones. Therefore, we use Equation (6) to describe the probability of labeling as a function of the current classification error and of y and x, the numbers of labeled samples and of all samples, respectively. In the experiments, the equation exhibited the aforementioned characteristics while keeping the number of labeled samples relatively small. Note that Equation (6) is a heuristic description of the probability rather than an exact calculation, so more sophisticated methods can be used to replace it.

In the experiments, however, the equation alone was not good enough. One issue is that the number of labeled samples may keep growing even when the accuracy is high, say over 90%. In an online learning scenario, if the manual labeling process labels too many samples, the method becomes impractical because too much time would be spent on labeling. Therefore, we set another criterion for the process, namely the minimum accepted accuracy: when the accuracy is stable and very high, no extra labeling is required. In this paper, the maximum number of KS selections is set to 50 to limit the growth of the labeled samples, and the minimum accepted accuracy is set to 90%. Only when the residual error is larger than 10% does the labeling process take place.
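The labeling decision, including the minimum accepted accuracy, can be sketched as below. Equation (6) is not reproduced in the text, so `labeling_probability` is a hypothetical stand-in and not the paper's formula. It only mimics the three listed characteristics: it grows with the number of received samples, shrinks with the number of labeled samples and scales with the error. The constant `c` and both function names are illustrative assumptions. Passing `error=None` models the strategy without accuracy feedback.

```python
import random


def labeling_probability(n_total, n_labeled, error=None, c=10.0):
    """Hypothetical labeling probability (an illustrative stand-in, NOT Equation (6)).

    Increases with the number of received samples n_total, decreases with the
    number of labeled samples n_labeled and, when feedback is available,
    scales with the current classification error. c is an assumed constant.
    """
    base = n_total / (n_total + c * (n_labeled + 1))
    return base if error is None else error * base


def should_label(n_total, n_labeled, error=None, min_accuracy=0.9, rng=None):
    """Decide whether the arrival of a new sample triggers selection and labeling."""
    rng = rng if rng is not None else random.Random()
    # Minimum accepted accuracy: no extra labeling while the model is accurate enough.
    if error is not None and error <= 1.0 - min_accuracy:
        return False
    # Draw p uniformly in [0, 1) and label with probability P, as in Algorithm 1.
    return rng.random() < labeling_probability(n_total, n_labeled, error)


print(should_label(1000, 0, error=0.05))  # False: accuracy is above 90%
```

Seeding `rng` (e.g. `random.Random(0)`) makes a run reproducible. The cap on KS selections is left out because the text does not specify how it interacts with the probability.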

3.2. Online Source Domain Adaptation Extreme Learning Machine

Similar to OSELM, which uses incoming data to update the model in an online manner, we transformed the training of DAELM-S into an incremental learning procedure and proposed the Online Source Domain Adaptation Extreme Learning Machine (ODAELM-S).

Figure 2 shows the framework of ODAELM-S. In DAELM-S, learning involves only the source domain and the labeled samples from the target domain. Since the source domain remains unchanged during the learning phase, the model is updated only when new samples are labeled. Initially, the target classifier is the source classifier, since there are no labeled samples in the target domain. After the classifier is initialized with source domain samples, it can receive and learn the patterns of the target domain in an online manner. The left rectangle in the figure represents the arrival sequence of the samples in the target domain. The currently arriving sample may end up in either the unlabeled or the labeled set. If no selection happens, it is added to the set of unlabeled samples and the classifier is not updated. When online sample selection happens, the samples for labeling can only be chosen from the unlabeled samples, including the new sample. Note that the new sample may not be chosen by the selection triggered at its arrival, but it may be selected later by another selection process. Whenever labeled samples are selected, the target classifier updates itself through the online learning mechanism described in the following paragraphs.

In DAELM-S, the model is regarded as an extension of the classifier trained on the source domain. The objective function is written as Equation (7), in which the hidden layer outputs of the source domain and of the labeled target samples, together with their labels, are combined into one hidden layer output matrix and one target matrix. To obtain a proper output weight, this objective function is minimized. By calculating the gradient of the objective L with respect to the output weight as in Equation (8), the optimal output weight is obtained by setting the gradient to 0.

Note that, depending on whether the hidden layer output matrix has more rows or more columns, solving for the output weight is either an overdetermined or an under-determined problem. When it is an under-determined problem, we assume that the output weight is a linear combination of the columns of the transposed hidden layer output matrix. Therefore, setting Equation (8) to 0 in each case yields the output weight in Equation (9).
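To make the two cases concrete, the following NumPy sketch computes a batch DAELM-S output weight. It assumes the commonly cited DAELM-S objective 0.5*||beta||^2 + 0.5*C_S*||T_S - H_S beta||^2 + 0.5*C_T*||T_T - H_T beta||^2, written with stacked matrices and a diagonal penalty matrix C. Equations (7)–(9) may differ in notation. Case 1 solves the L x L system given by the zero gradient. Case 2 applies the linear-combination assumption beta = H^T alpha, so only an N x N system is solved. All helper names are hypothetical.

```python
import numpy as np


def stack_domains(H_s, T_s, H_t, T_t, c_s, c_t):
    """Stack source and labeled-target rows; w holds each row's penalty (diagonal of C)."""
    H = np.vstack([H_s, H_t])
    T = np.vstack([T_s, T_t])
    w = np.concatenate([np.full(len(H_s), c_s), np.full(len(H_t), c_t)])
    return H, T, w


def primal_weights(H, T, w):
    """Case 1 (more rows): the zero gradient gives (I + H^T C H) beta = H^T C T."""
    K = np.eye(H.shape[1]) + H.T @ (w[:, None] * H)
    return np.linalg.solve(K, H.T @ (w[:, None] * T))


def dual_weights(H, T, w):
    """Case 2 (more columns): beta = H^T alpha with (C^{-1} + H H^T) alpha = T."""
    K = np.diag(1.0 / w) + H @ H.T
    return H.T @ np.linalg.solve(K, T)


def daelm_s_weights(H_s, T_s, H_t, T_t, c_s=1.0, c_t=1.0):
    """Batch output weight; solves the smaller of the two linear systems."""
    H, T, w = stack_domains(H_s, T_s, H_t, T_t, c_s, c_t)
    if H.shape[0] >= H.shape[1]:
        return primal_weights(H, T, w)
    return dual_weights(H, T, w)


# Usage with random stand-ins for hidden layer outputs and targets.
rng = np.random.default_rng(0)
H_s, T_s = rng.normal(size=(30, 8)), rng.normal(size=(30, 3))
H_t, T_t = rng.normal(size=(5, 8)), rng.normal(size=(5, 3))
beta = daelm_s_weights(H_s, T_s, H_t, T_t, c_s=1.0, c_t=10.0)
print(beta.shape)  # (8, 3)
```

Both branches return the same weight whenever both systems are solvable. The dispatch simply picks the smaller linear system.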

Let the case where the hidden layer output matrix has more rows be case 1 and the other case be case 2. When new samples are labeled, their hidden layer output is appended to the hidden layer output matrix as additional rows. To save calculation, ODAELM-S keeps an intermediate result K, whose definition differs between case 1 and case 2.

For case 1, the intermediate result after the new samples are labeled can be defined as Equation (10). Hence, it can be updated recursively using Equation (11). Subsequently, the output weight can be updated using Equation (12).
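A case 1 update can be sketched with the Woodbury identity. The text does not spell out the definitions behind Equations (10)–(12), so this sketch assumes K = I + H^T C H and a running term R = H^T C T, so that the output weight is K^{-1} R. Newly labeled target rows are absorbed with the penalty `c_t` without revisiting old data. The class name `Case1State` is hypothetical, and the code is not presented as the authors' Equations (10)–(12).

```python
import numpy as np


class Case1State:
    """Case 1 intermediate results under the assumed forms
    K = I + H^T C H and R = H^T C T, so that beta = K^{-1} R."""

    def __init__(self, H, T, w):
        self.K_inv = np.linalg.inv(np.eye(H.shape[1]) + H.T @ (w[:, None] * H))
        self.R = H.T @ (w[:, None] * T)

    def add_labeled(self, dH, dT, c_t):
        """Absorb newly labeled target rows dH (n x L) with labels dT (n x m)."""
        KdHt = self.K_inv @ dH.T                          # L x n
        S = np.eye(dH.shape[0]) / c_t + dH @ KdHt          # n x n, small
        # Woodbury: (K + c_t dH^T dH)^{-1} = K^{-1} - K^{-1} dH^T S^{-1} dH K^{-1}
        self.K_inv = self.K_inv - KdHt @ np.linalg.solve(S, KdHt.T)
        self.R = self.R + c_t * dH.T @ dT
        return self.weights()

    def weights(self):
        return self.K_inv @ self.R


rng = np.random.default_rng(1)
H0, T0 = rng.normal(size=(40, 10)), rng.normal(size=(40, 3))  # source + labeled rows
state = Case1State(H0, T0, np.full(40, 2.0))
dH, dT = rng.normal(size=(4, 10)), rng.normal(size=(4, 3))     # newly labeled rows
beta = state.add_labeled(dH, dT, c_t=5.0)
```

Each update costs one n x n solve for n newly labeled rows, instead of a fresh L x L inversion over all data.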

For case 2, let the intermediate result be defined as Equation (13). Similarly, the updates of the intermediate result and the output weight can be written as Equations (14) and (15), respectively.
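For case 2, a corresponding sketch assumes K = C^{-1} + H H^T and the output weight H^T K^{-1} T. Appending newly labeled rows borders K with new rows and columns, so its inverse can be grown via the Schur complement instead of being recomputed. The class `Case2State` and this bordering scheme are illustrative assumptions and may differ from Equations (13)–(15).

```python
import numpy as np


class Case2State:
    """Case 2 intermediate results under the assumed form K = C^{-1} + H H^T,
    so that beta = H^T K^{-1} T; H and T are kept because K grows with them."""

    def __init__(self, H, T, w):
        self.H, self.T = H.copy(), T.copy()
        self.K_inv = np.linalg.inv(np.diag(1.0 / w) + H @ H.T)

    def add_labeled(self, dH, dT, c_t):
        """Border K with the rows/columns of newly labeled samples dH (n x L)."""
        B = self.H @ dH.T                              # N x n off-diagonal block
        D = np.eye(dH.shape[0]) / c_t + dH @ dH.T      # n x n diagonal block
        KB = self.K_inv @ B
        S_inv = np.linalg.inv(D - B.T @ KB)            # inverse Schur complement
        top_right = -KB @ S_inv
        self.K_inv = np.block([[self.K_inv + KB @ S_inv @ KB.T, top_right],
                               [top_right.T, S_inv]])
        self.H = np.vstack([self.H, dH])
        self.T = np.vstack([self.T, dT])
        return self.weights()

    def weights(self):
        return self.H.T @ (self.K_inv @ self.T)


rng = np.random.default_rng(2)
H0, T0 = rng.normal(size=(5, 30)), rng.normal(size=(5, 3))
state = Case2State(H0, T0, np.full(5, 1.0))
dH, dT = rng.normal(size=(3, 30)), rng.normal(size=(3, 3))
beta = state.add_labeled(dH, dT, c_t=4.0)
```

Because K grows with the labeled set in this form, it is only attractive while the labeled set stays smaller than the number of hidden nodes.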

The pseudo code for ODAELM-S is shown in Algorithm 1. Before the updates begin, ODAELM-S first initializes a base classifier using the source domain data (lines 1–3). Once the base classifier has been created, gas classification is available. When a new sample in the target domain arrives, ODAELM-S calculates the probability of selecting and labeling samples in the target domain (lines 5–6). When labeling is triggered (line 7), a group of unlabeled samples is selected (lines 8–9). Depending on whether the hidden layer output matrix has more rows or more columns, ODAELM-S updates the corresponding hidden layer output and intermediate result (lines 10–15). The process continues until no more samples arrive.

| Algorithm 1 Pseudo Code for ODAELM-S. |
|:---|
| Input: |
| L: the number of hidden layer neurons; |
| the activation function type; |
| the source domain data; |
| 1: Initialize two empty sets as the labeled and unlabeled sets, respectively; |
| 2: Set the activation function and initialize an ELM with L hidden nodes using the source domain data; |
| 3: Let the output weight be defined as in Equation (9); |
| 4: while a new sample x in the target domain arrives do |
| 5: Calculate the probability P for labeling; |
| 6: Generate a random value p between 0 and 1; |
| 7: if p < P then |
| 8: Add x to the unlabeled set; |
| 9: Select a group of samples from the unlabeled set for labeling; |
| 10: if the hidden layer output matrix has more rows then |
| 11: Update the classifier using Equations (11) and (12); |
| 12: else |
| 13: Update the classifier using Equations (14) and (15); |
| 14: end if |
| 15: Move the selected samples from the unlabeled set to the labeled set; |
| 16: else |
| 17: Add x to the unlabeled set; |
| 18: end if |
| 19: end while |

3.3. Online Target Domain Adaptation Extreme Learning Machine

To transform DAELM-T into its online learning version, we propose the Online Target Domain Adaptation Extreme Learning Machine (ODAELM-T). Different from ODAELM-S, ODAELM-T leverages both the labeled and the unlabeled samples in the target domain through Equation (16). In this equation, the output weight matrix of the target classifier is learned and the terms for the labeled target samples are the same as in DAELM-S. Two further terms denote the regularization parameter and the hidden layer output of the unlabeled target samples. The update of the model is therefore more complicated than in ODAELM-S.

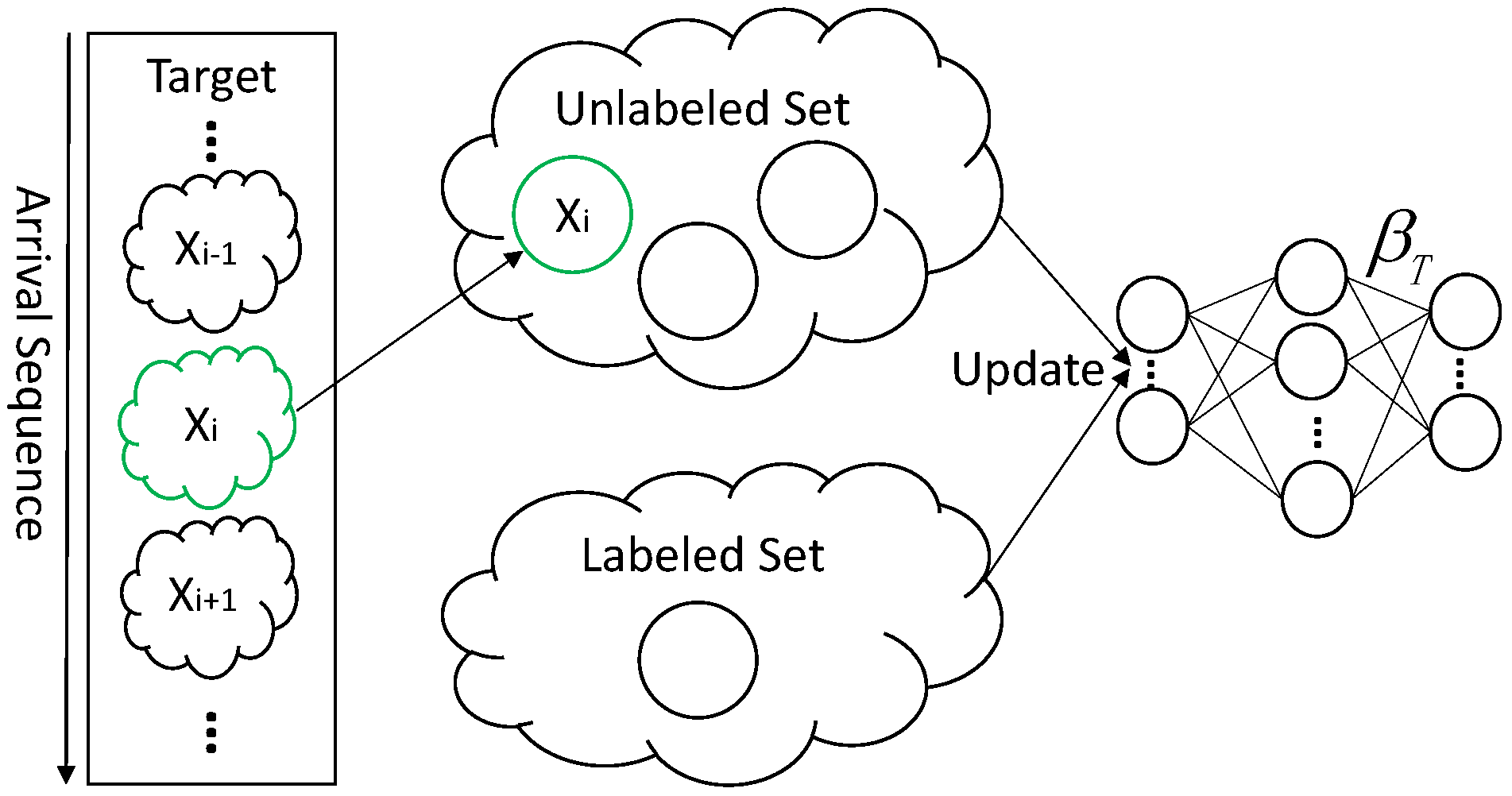

Figure 3 shows the procedure of ODAELM-T. Initially, a source classifier is trained on the source domain. In ODAELM-S, the classifier is built upon both the source domain and the labeled samples in the target domain. In DAELM-T, by contrast, only the output weight matrix of the source classifier contributes to the initialization and updates of the target classifier. In ODAELM-T, solving Equation (16) leads to two different cases depending on the numbers of rows and columns. In [27], when the hidden layer output of the labeled target samples has fewer rows than columns, the Lagrange multiplier method was applied. This is equivalent to assuming that the output weight is a linear combination of the transposed hidden layer outputs of the labeled and the unlabeled target samples. However, since the two cases are determined by the rows and columns of the labeled hidden layer output alone, it is reasonable to assume that the output weight is a linear combination of the transposed labeled hidden layer output only. Therefore, we can rewrite the output weight matrix of DAELM-T as Equation (17).

Based on the appendix of [50], the Gaussian kernel matrix is of full rank in any case. In ELM, the products of the hidden layer output matrices with their transposes are "ELM kernel" matrices [51]. Since the Gaussian kernel is a special Radial Basis Function (RBF) kernel, we can ensure that these matrices are of full rank if the Gaussian function is used as the activation function. Moreover, we can further infer that Lemma A1 holds (see Appendix A), so Equation (17) can be transformed into online learning versions.

When a new sample arrives, the algorithm determines whether to select and label a group of samples in the target domain. When no such process happens, the new sample is added to the unlabeled set. When the process takes place, however, the set of samples to be labeled, described earlier, may consist of one or more samples and may or may not contain the new sample. Because manual labeling is time-consuming, a new sample is first put into the unlabeled set when it arrives. Once the samples to be labeled are determined, ODAELM-T first removes their effects through decremental learning on the unlabeled set. Subsequently, when labeling is finished, ODAELM-T performs incremental learning on the labeled set. Therefore, ODAELM-T has three different learning mechanisms, which keep the classifier up to date during its lifetime.

The pseudo code for ODAELM-T is described in Algorithm 2. ODAELM-T first initializes the labeled and unlabeled sets (line 1) and then generates a source classifier as ODAELM-S does (lines 2–3). When a new sample x in the target domain arrives, ODAELM-T calculates the probability of selecting and labeling samples in the target domain as ODAELM-S does (lines 5–6). When selection and labeling happen, ODAELM-T first adds x to the unlabeled set and selects a group of samples from the target domain for labeling (lines 8–9). Note that, initially, there is no target classifier. Therefore, ODAELM-T initializes an ELM with L hidden nodes when the first group of samples is labeled (lines 11–12). Once the target classifier is initialized, it updates itself based on the changes in the labeled and unlabeled sets. When x is added to the unlabeled set, ODAELM-T performs unlabeled incremental learning (line 14). After a group of samples is chosen, ODAELM-T performs unlabeled decremental learning (lines 15–16). Subsequently, when these samples have been manually labeled, ODAELM-T performs labeled incremental learning (lines 17–18). When no labeling happens, only unlabeled incremental learning takes place (lines 21–22).

| Algorithm 2 Pseudo Code for ODAELM-T. |
|:---|
| Input: |
| L: the number of hidden layer neurons; |
| the activation function type; |
| the source domain data; |
| 1: Initialize the labeled and unlabeled sets as empty sets; |
| 2: Initialize the source classifier of L hidden nodes using the source domain data and the chosen activation function; |
| 3: Define the terms of Equation (17); |
| 4: while a new sample x in the target domain arrives do |
| 5: Calculate the probability P for labeling; |
| 6: Generate a random value p between 0 and 1; |
| 7: if p < P then |
| 8: Add x into the unlabeled set; |
| 9: Select a group of samples in the target domain for labeling; |
| 10: if the labeled set is empty then |
| 11: Set the labeled set to the selected samples; |
| 12: Initialize a target classifier of L hidden nodes using Equation (17); |
| 13: else |
| 14: perform unlabeled incremental learning where the increment is x; |
| 15: remove the selected samples from the unlabeled set; |
| 16: perform unlabeled decremental learning where the decrement is the selected samples; |
| 17: when the labeling process completes, add the selected samples to the labeled set; |
| 18: perform labeled incremental learning where the increment is the selected samples; |
| 19: end if |
| 20: else |
| 21: Add x into the unlabeled set; |
| 22: perform unlabeled incremental learning where the increment is x; |
| 23: end if |
| 24: end while |

3.3.1. Unlabeled Incremental Learning

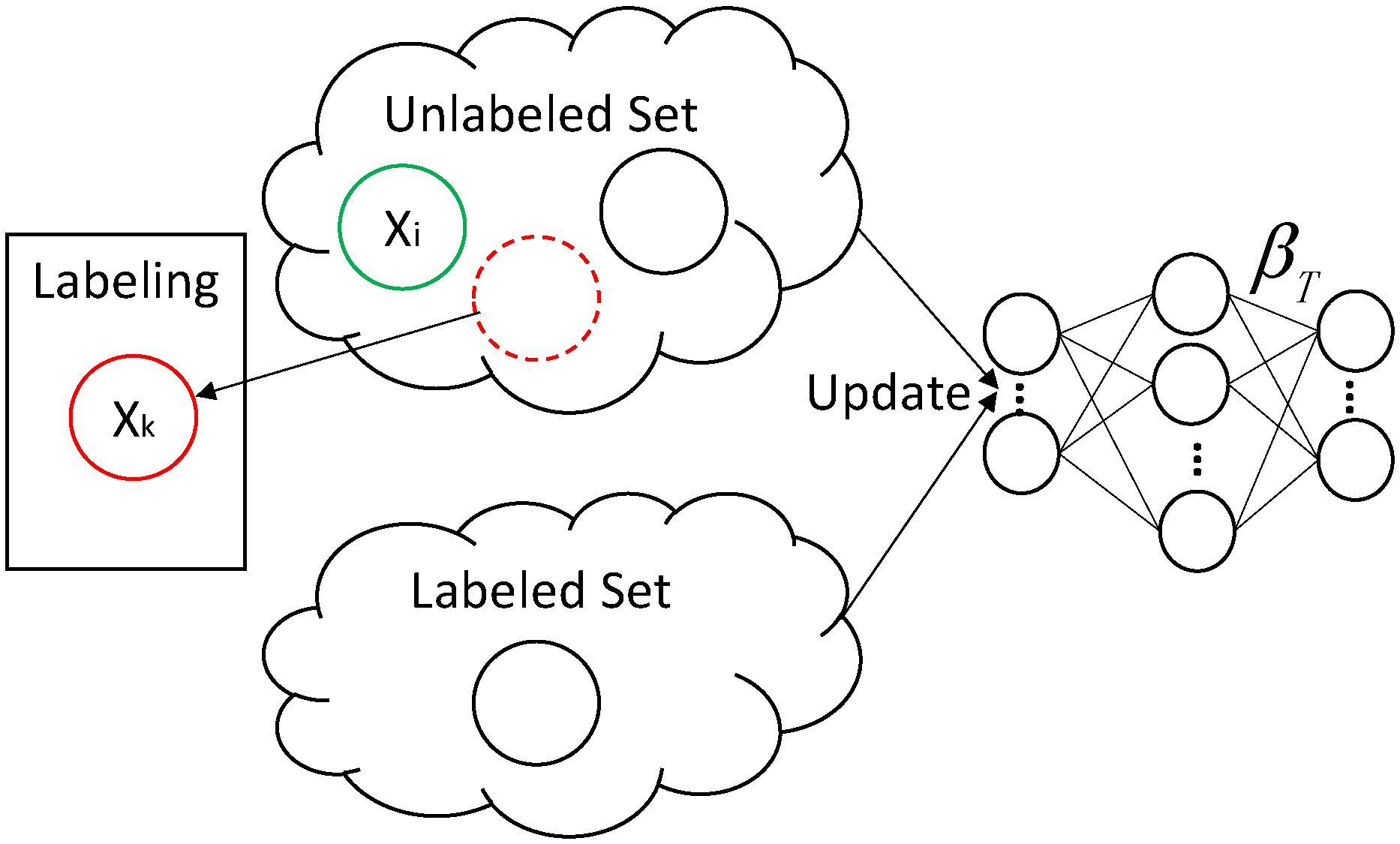

As shown in Figure 4, only the unlabeled set changes in the unlabeled incremental learning phase, as the new sample is added to it. The target classifier is computed from the samples in both the labeled and the unlabeled sets. To provide efficient updates without repeatedly recalculating the unchanged set, we choose an intermediate result from which the current output weight of the classifier can be computed.

For illustration purposes, we divide Equation (17) into two parts. Let K denote the intermediate result of the current ELM, from which the current output weight matrix is computed. When a new sample arrives, its hidden layer output in the target classifier is computed first. Similar to OSELM, the intermediate result for the next update is defined as Equation (18), and the output weight then becomes Equation (19). Based on the Sherman–Morrison–Woodbury formula, the inverse of the updated intermediate result can be obtained as Equation (20). Multiplying Equation (19) by this inverse yields the updated output weight in Equation (21).
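The unlabeled incremental step for case 1 can be sketched as a rank-one update. The sketch assumes the commonly cited case 1 form of the DAELM-T solution, beta_T = (I + C_T H_T^T H_T + C_TU H_TU^T H_TU)^{-1} (C_T H_T^T T_T + C_TU H_TU^T H_TU beta_S), where beta_S is the source output weight. Under this assumption, a new unlabeled sample changes K by a single outer product, which the Sherman–Morrison formula inverts cheaply. The function names and the grouping into K and R are assumptions and may differ from Equations (18)–(21).

```python
import numpy as np


def daelm_t_terms(H_l, T_l, H_u, beta_s, c_l, c_u):
    """Batch case 1 terms under the assumed DAELM-T form (returns K^{-1} and R):
    K = I + c_l H_l^T H_l + c_u H_u^T H_u,  R = c_l H_l^T T_l + c_u H_u^T (H_u beta_s)."""
    K = np.eye(H_l.shape[1]) + c_l * H_l.T @ H_l + c_u * H_u.T @ H_u
    R = c_l * H_l.T @ T_l + c_u * H_u.T @ (H_u @ beta_s)
    return np.linalg.inv(K), R


def add_unlabeled(K_inv, R, h, beta_s, c_u):
    """Unlabeled incremental learning for one new sample with hidden output h (length L)."""
    h = np.asarray(h, dtype=float).reshape(1, -1)
    Kh = K_inv @ h.T                                   # L x 1
    # Sherman-Morrison: (K + c_u h^T h)^{-1} = K^{-1} - Kh Kh^T / (1/c_u + h K^{-1} h^T)
    K_inv = K_inv - (Kh @ Kh.T) / (1.0 / c_u + (h @ Kh).item())
    # The source classifier's output h @ beta_s serves as the pseudo-target.
    R = R + c_u * h.T @ (h @ beta_s)
    return K_inv, R, K_inv @ R


rng = np.random.default_rng(3)
H_l, T_l = rng.normal(size=(20, 8)), rng.normal(size=(20, 3))
H_u, beta_s = rng.normal(size=(15, 8)), rng.normal(size=(8, 3))
K_inv, R = daelm_t_terms(H_l, T_l, H_u, beta_s, c_l=2.0, c_u=0.5)
h_new = rng.normal(size=8)
K_inv, R, beta_t = add_unlabeled(K_inv, R, h_new, beta_s, c_u=0.5)
```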

For the case where the labeled hidden layer output has more columns than rows, the output weight can be written as Equation (22). Let P and Q be defined as Equations (23) and (24), respectively. Similarly, the required inverse can be derived as Equation (25) based on the Sherman–Morrison–Woodbury formula. Consequently, the output weight can be derived as Equation (26).

3.3.2. Unlabeled Decremental Learning

When a group of samples is selected for labeling, ODAELM-T first updates the model by eliminating the effects of these samples. This process is called unlabeled decremental learning. As shown in Figure 5, the k-th sample is selected from the unlabeled set for the labeling process. Note that k can be any index from 1 to i, and more than one sample can be selected for labeling.

Consider the hidden layer output of the selected samples. For the case where there are more rows than columns, K and the associated term are written as Equation (27). For the current update, the intermediate result can be formulated as Equation (28), and, correspondingly, the output weight can be formulated as Equation (29).
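Unlabeled decremental learning for case 1 can be sketched as a Woodbury downdate. The sketch assumes the commonly cited case 1 DAELM-T solution. In that solution, each unlabeled row h contributes c_u * h^T h to K and c_u * h^T (h beta_S) to R, with beta_S the source output weight. Removing the selected rows therefore subtracts these contributions, and the Woodbury identity with a minus sign updates K^{-1}. The names are hypothetical, and the sketch is not presented as the authors' Equations (27)–(29).

```python
import numpy as np


def daelm_t_terms(H_l, T_l, H_u, beta_s, c_l, c_u):
    """Batch case 1 terms under the assumed DAELM-T form (returns K^{-1} and R)."""
    K = np.eye(H_l.shape[1]) + c_l * H_l.T @ H_l + c_u * H_u.T @ H_u
    R = c_l * H_l.T @ T_l + c_u * H_u.T @ (H_u @ beta_s)
    return np.linalg.inv(K), R


def remove_unlabeled(K_inv, R, dH, beta_s, c_u):
    """Unlabeled decremental learning: drop the selected unlabeled rows dH (n x L)."""
    KdHt = K_inv @ dH.T                                # L x n
    S = np.eye(dH.shape[0]) / c_u - dH @ KdHt          # n x n
    # Woodbury with a minus sign:
    # (K - c_u dH^T dH)^{-1} = K^{-1} + K^{-1} dH^T S^{-1} dH K^{-1}
    K_inv = K_inv + KdHt @ np.linalg.solve(S, KdHt.T)
    R = R - c_u * dH.T @ (dH @ beta_s)
    return K_inv, R, K_inv @ R


rng = np.random.default_rng(4)
H_l, T_l = rng.normal(size=(20, 8)), rng.normal(size=(20, 3))
H_u, beta_s = rng.normal(size=(15, 8)), rng.normal(size=(8, 3))
K_inv, R = daelm_t_terms(H_l, T_l, H_u, beta_s, c_l=2.0, c_u=0.5)
selected = [1, 4, 9]  # indices of the unlabeled samples picked for labeling
K_inv, R, beta_t = remove_unlabeled(K_inv, R, H_u[selected], beta_s, c_u=0.5)
```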

For the case where there are more columns than rows, two auxiliary quantities are defined analogously. Since the hidden layer output of the unlabeled set has changed, the corresponding results regarding P and Q can be written as Equation (30), and the terms they involve can be written as Equation (31). Subsequently, the intermediate result and the output weight matrix can be written as Equations (32) and (33), respectively.

3.3.3. Labeled Incremental Learning

After new samples are manually labeled, incremental learning ensures that the model does not need to be recomputed from scratch. As shown in Figure 6, the unlabeled samples remain unchanged in this case, so the changes happen only in the labeled set. The decremental part removed from the unlabeled set in the previous process is added to the labeled set. Note that the single sample shown in the figure is just an example; more than one sample can be added to the labeled set.

Let the increment consist of the newly labeled samples, together with their labels and their hidden layer output. For the case where the labeled hidden layer output has more rows, let the current intermediate result be Equation (34). When the increment arrives, its hidden layer output is appended to the labeled hidden layer output, and the intermediate result can be derived as Equation (35). By using the Woodbury formula, the inverse of the new intermediate result can be formulated as Equation (36). With an auxiliary term defined accordingly, the remaining part of the output weight can be formulated as Equation (37). Substituting Equations (36) and (37) into Equation (17), the current output weight can be formulated as Equation (38).
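Labeled incremental learning for case 1 can be sketched together with the preceding decremental step, since the two run back to back on the same selected samples. The sketch assumes the case 1 DAELM-T solution with K = I + c_l H_l^T H_l + c_u H_u^T H_u and R = c_l H_l^T T_l + c_u H_u^T H_u beta_S, where beta_S is the source output weight. The selected rows are first removed from the unlabeled terms and then added to the labeled terms with their manual labels. A single signed Woodbury helper handles both steps. The names are hypothetical, and the sketch is not presented as Equations (34)–(38).

```python
import numpy as np


def daelm_t_terms(H_l, T_l, H_u, beta_s, c_l, c_u):
    """Batch case 1 terms under the assumed DAELM-T form (returns K^{-1} and R)."""
    K = np.eye(H_l.shape[1]) + c_l * H_l.T @ H_l + c_u * H_u.T @ H_u
    R = c_l * H_l.T @ T_l + c_u * H_u.T @ (H_u @ beta_s)
    return np.linalg.inv(K), R


def woodbury_rows(K_inv, dH, weight):
    """Return (K + weight * dH^T dH)^{-1} from K^{-1}; a negative weight removes rows."""
    KdHt = K_inv @ dH.T
    S = np.eye(dH.shape[0]) / weight + dH @ KdHt
    return K_inv - KdHt @ np.linalg.solve(S, KdHt.T)


def relabel(K_inv, R, dH, dT, beta_s, c_l, c_u):
    """Move selected samples (hidden outputs dH, new labels dT) to the labeled set."""
    # Unlabeled decremental learning: take out the pseudo-target contribution.
    K_inv = woodbury_rows(K_inv, dH, -c_u)
    R = R - c_u * dH.T @ (dH @ beta_s)
    # Labeled incremental learning: add the rows back with their manual labels.
    K_inv = woodbury_rows(K_inv, dH, c_l)
    R = R + c_l * dH.T @ dT
    return K_inv, R, K_inv @ R


rng = np.random.default_rng(5)
H_l, T_l = rng.normal(size=(10, 6)), rng.normal(size=(10, 2))
H_u, beta_s = rng.normal(size=(20, 6)), rng.normal(size=(6, 2))
K_inv, R = daelm_t_terms(H_l, T_l, H_u, beta_s, c_l=2.0, c_u=0.5)
selected, new_labels = [2, 5, 7], rng.normal(size=(3, 2))
K_inv, R, beta_t = relabel(K_inv, R, H_u[selected], new_labels, beta_s, c_l=2.0, c_u=0.5)
```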

For the case where the labeled hidden layer output has more columns, let the intermediate result be Equation (39). Its inverse involves updates of two of its components. Meanwhile, the inverse of the current intermediate result becomes Equation (40), and the two terms it requires can be formulated as Equations (41) and (42), respectively. Computing the result further makes the formula too complex and increases the computational cost. Therefore, in this case, we simply update the output weight matrix with the batch learning version. However, to combine the two cases, we still use Equation (43), with the term in it formulated as Equation (44).

The labeled hidden layer output changes with the arrival of newly labeled samples, so the relation between its numbers of rows and columns may not be static over time. Specifically, a transition happens when the numbers of rows and columns are equal. Initially, the labeled set has few samples, and it grows as labeling happens. Given enough time and samples, the size of the labeled set eventually matches the number of hidden neurons, i.e., the labeled hidden layer output has as many rows as columns. At this point, cases 1 and 2 coincide. To continue performing incremental learning, the intermediate results must be converted from one case to the other.

For the case where there are more rows than columns, the output weight is expressed through the intermediate results formulated in Equation (45). For the case where there are more columns than rows, the output weight is expressed through the intermediate results formulated in Equation (46). When the number of rows equals the number of columns, the two expressions must be equal. In this case, the matrices involved are invertible, so the intermediate results of one case can be expressed through those of the other. Therefore, the intermediate results for the transition between the two cases follow Equations (47) and (48).
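One possible conversion can be sketched under simplifying assumptions. Suppose the case 1 and case 2 intermediate results take the forms K1 = I + H^T C H and K2 = C^{-1} + H H^T, as assumed for the ODAELM-S sketches. Then the push-through identity K1^{-1} H^T C = H^T K2^{-1} holds for any H, so both cases give the same output weight. When H is square and invertible, the identity can be rearranged to convert one stored inverse into the other. This is one way a transition could be realized; the sketch is not claimed to be Equations (47) and (48), and the function names are hypothetical.

```python
import numpy as np


def case1_to_case2(K1_inv, H, w):
    """Given K1^{-1} = (I + H^T C H)^{-1} and a square invertible H, return
    K2^{-1} = (C^{-1} + H H^T)^{-1}, where C = diag(w)."""
    # Push-through: K1^{-1} H^T C = H^T K2^{-1}, hence K2^{-1} = H^{-T} K1^{-1} H^T C.
    return np.linalg.solve(H.T, K1_inv @ H.T @ np.diag(w))


def case2_to_case1(K2_inv, H, w):
    """Inverse conversion: K1^{-1} = H^T K2^{-1} C^{-1} H^{-T}."""
    M = H.T @ K2_inv @ np.diag(1.0 / w)
    # Right-multiplying M by H^{-T} is the same as solving H X^T = M^T.
    return np.linalg.solve(H, M.T).T


rng = np.random.default_rng(6)
H = rng.normal(size=(6, 6)) + 3.0 * np.eye(6)  # as many labeled rows as hidden nodes
w = rng.uniform(0.5, 2.0, size=6)
K1_inv = np.linalg.inv(np.eye(6) + H.T @ np.diag(w) @ H)
K2_inv = np.linalg.inv(np.diag(1.0 / w) + H @ H.T)
```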

5. Conclusions

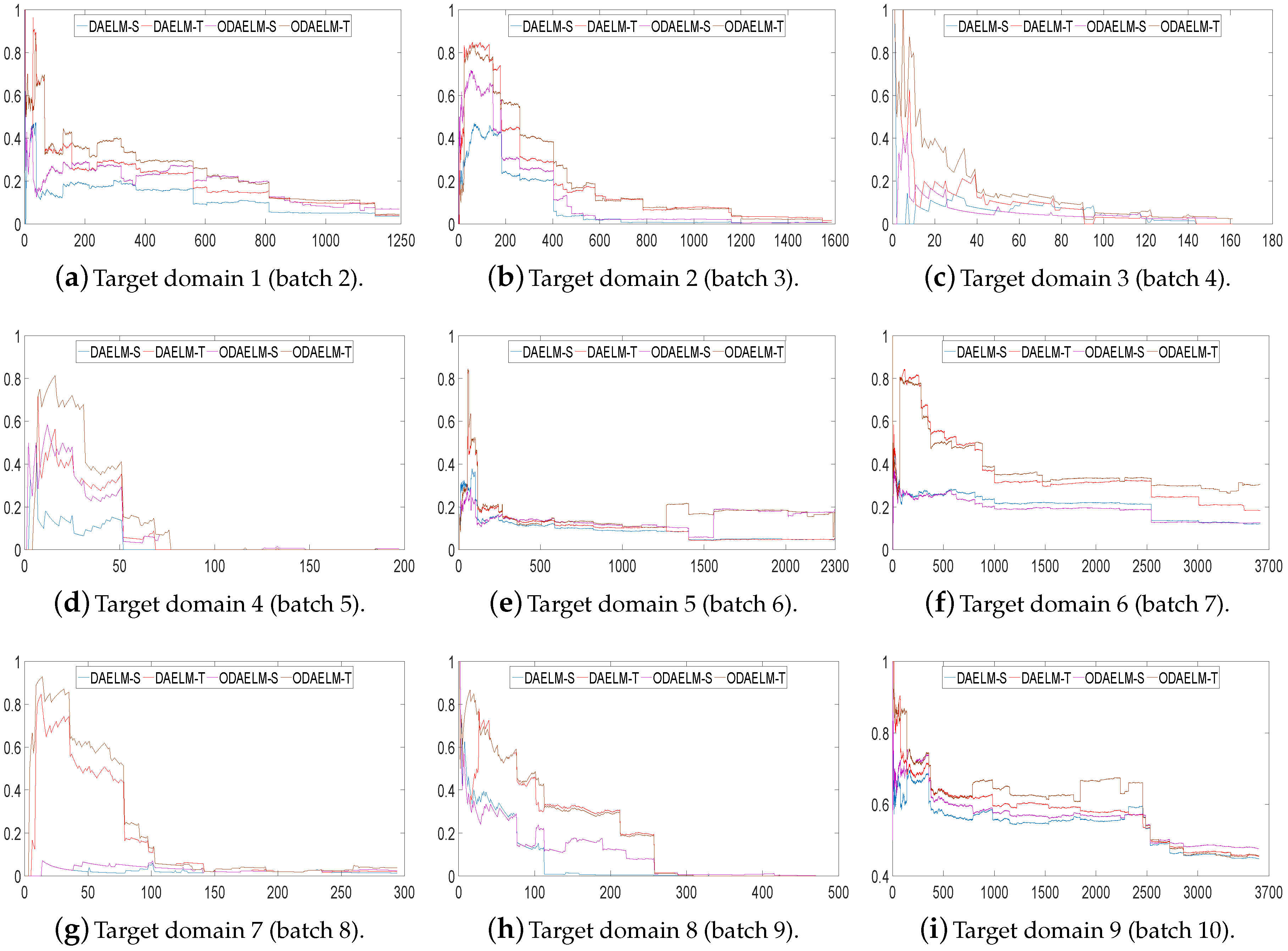

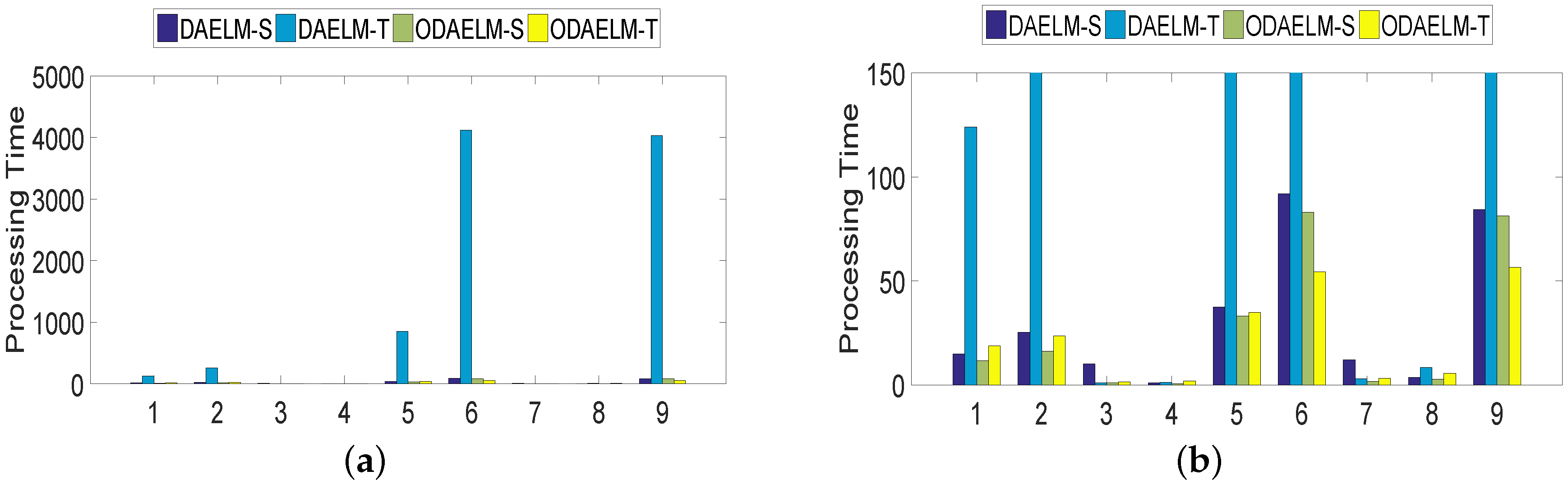

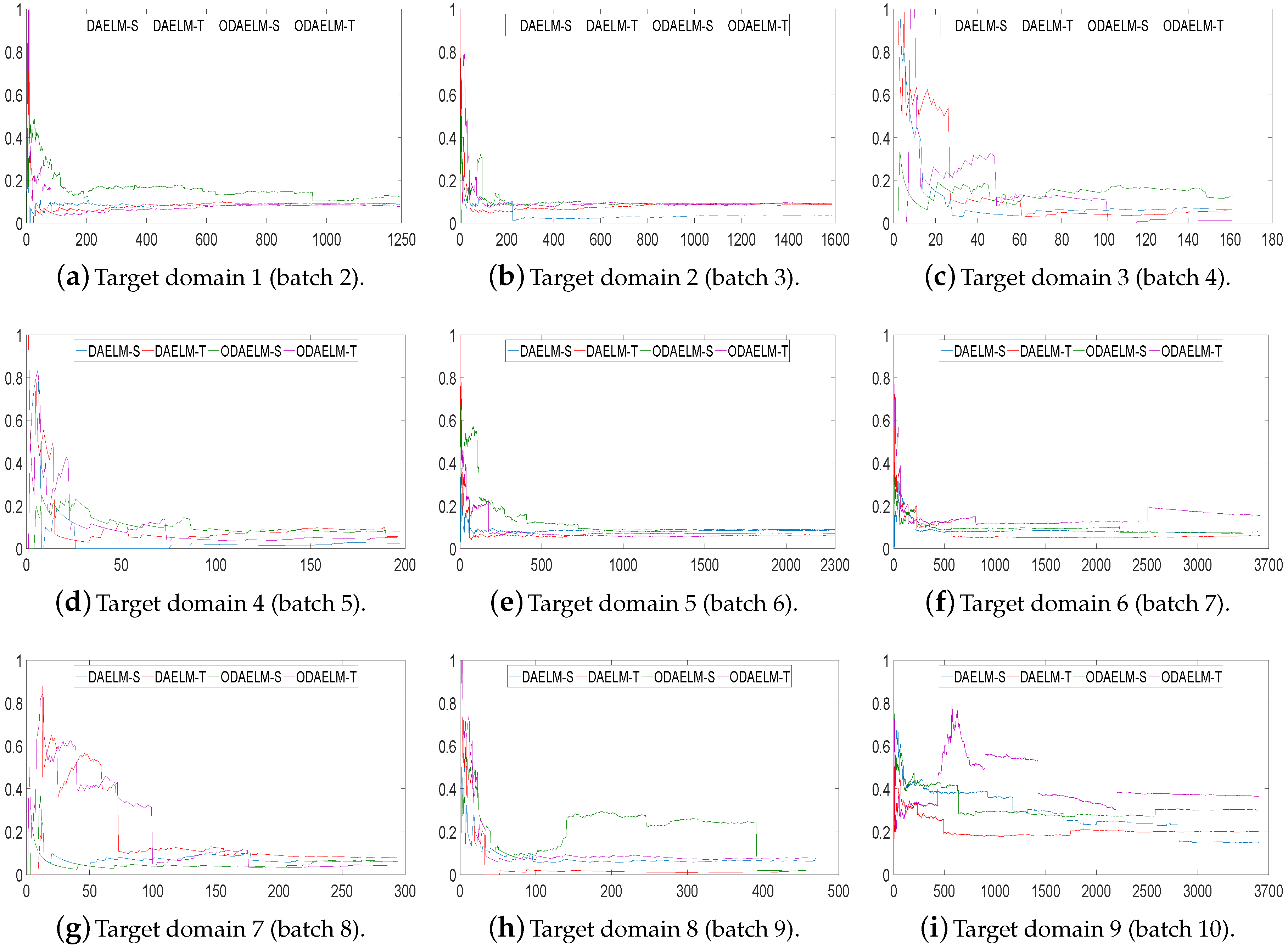

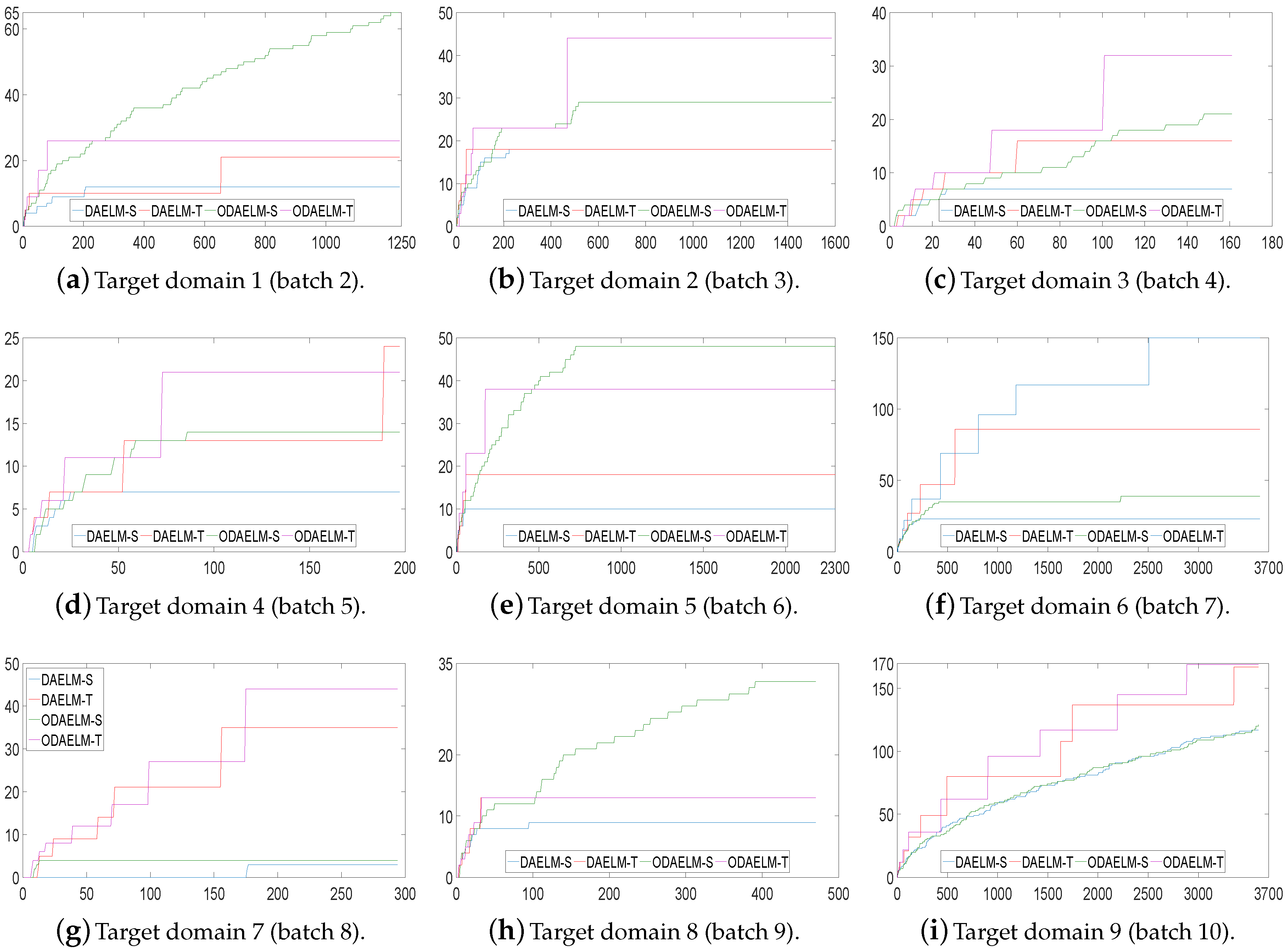

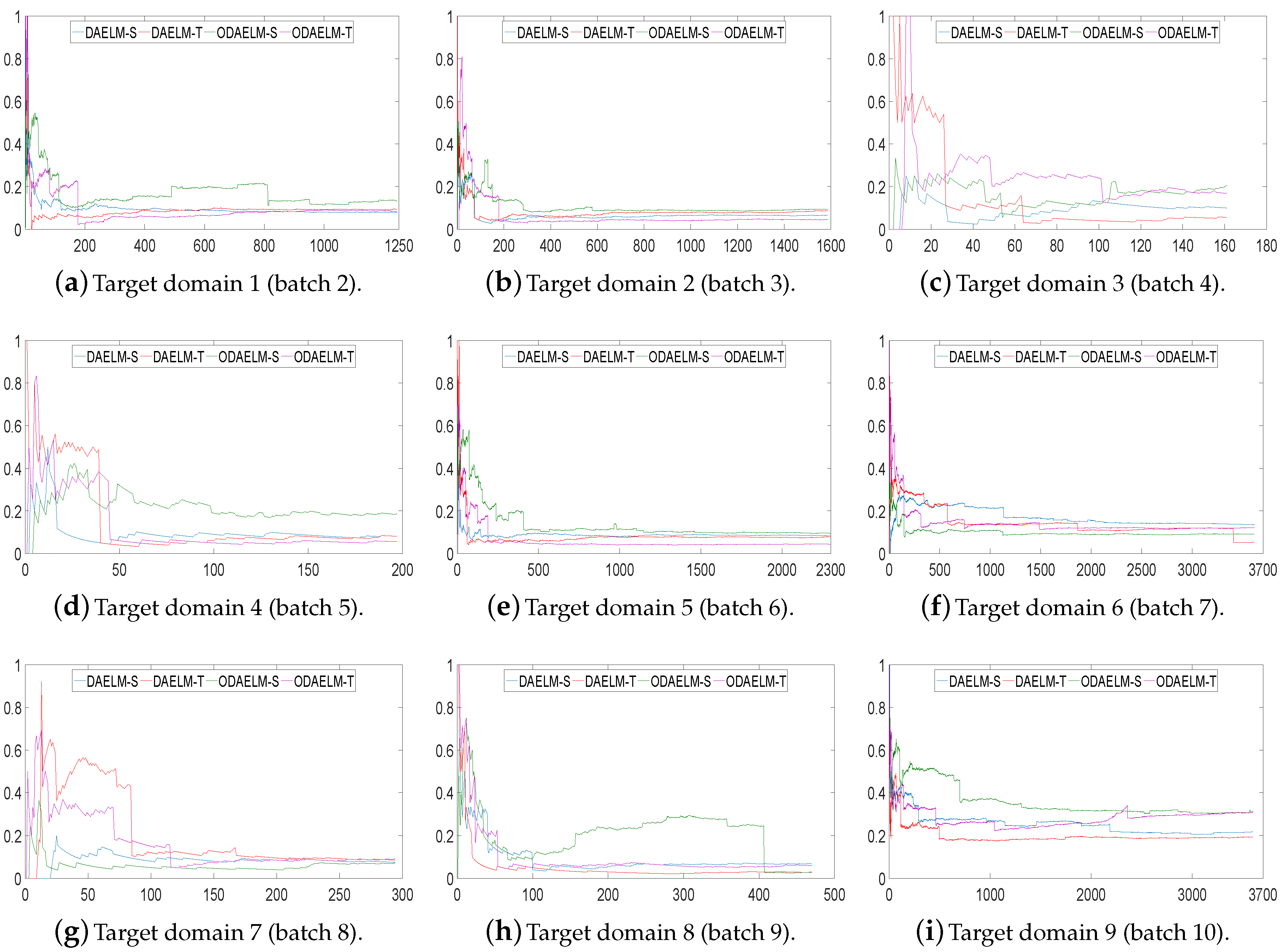

In this paper, we proposed ODAELM-S and ODAELM-T for online sensor drift compensation in E-Nose systems. The proposed methods update the model as new samples arrive, which saves time compared with their batch learning versions. Meanwhile, we proposed two online labeling strategies to accompany the proposed methods.

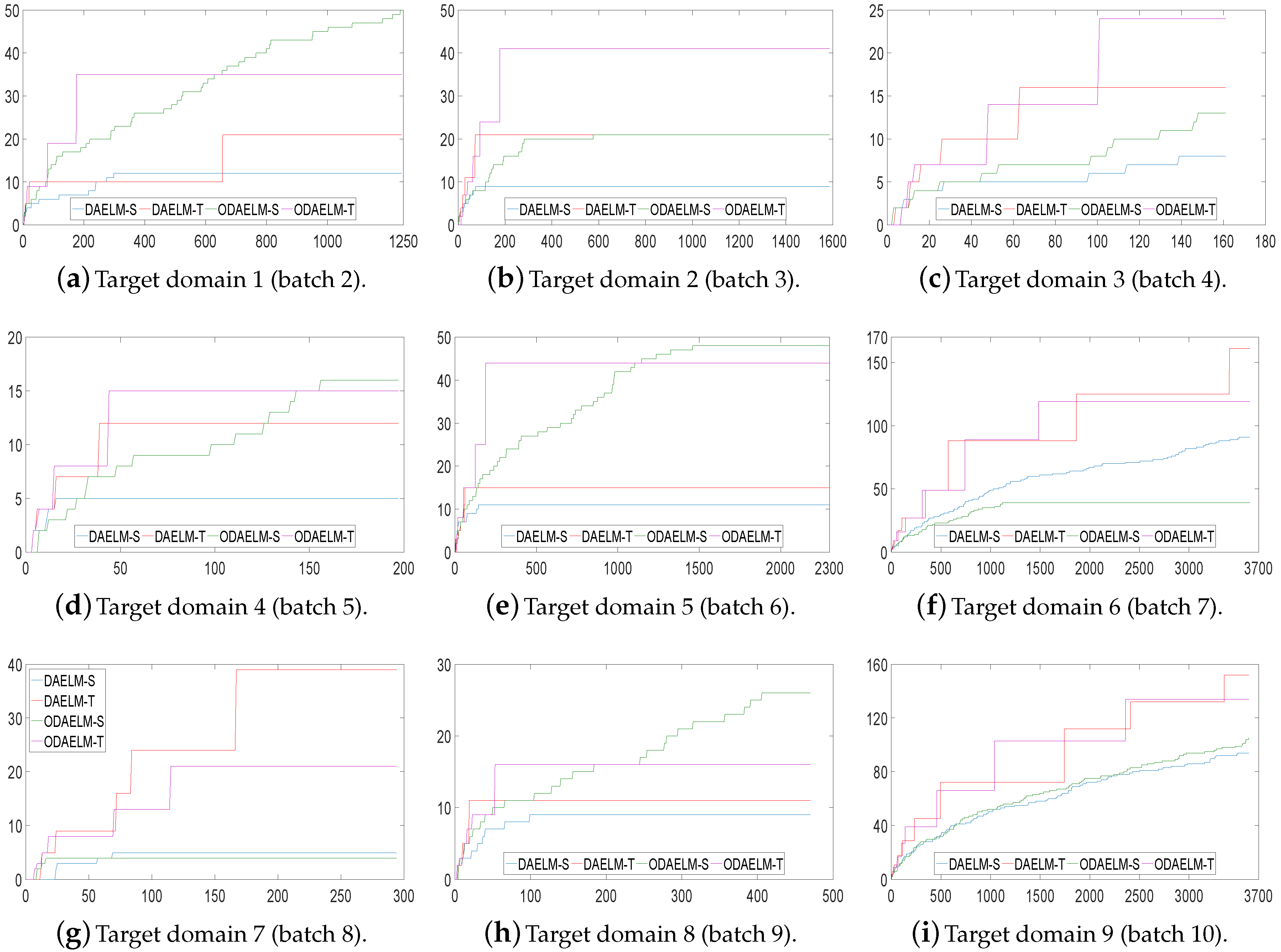

Experiments on a sensor drift dataset of six diverse compounds collected over 36 months demonstrate the effectiveness of the proposed methods in terms of both classification accuracy and processing time. The results show that, under the same sampling and arrival sequences of the target domain, the proposed methods take less time than their batch learning versions without losing classification accuracy. Meanwhile, the results under the two online sampling strategies confirm the effectiveness of the proposed methods, which outperform the other classification algorithms. The two proposed methods eventually reach similar capacities for identifying diverse gases. However, ODAELM-S is more suitable when the target domain is small and only a limited number of samples are labeled. ODAELM-T reaches its maximum capacity when the number of labeled samples is large, and it outperforms ODAELM-S in specific target domains. In general, ODAELM-S is more feasible when labeled samples are limited, while ODAELM-T can replace ODAELM-S for better accuracies as the number of samples increases.

The two online sampling strategies, including the formula for the probability of selecting and labeling samples in the target domain, are the only ones considered in this paper. More sophisticated and accurate sampling models may be considered to improve the selection of representative samples. Meanwhile, human labor is a key factor in semi-supervised methods, and it may also constrain the selection of representative samples, an aspect not covered in this paper. Future work may improve the sampling strategies under more restricted scenarios, and parallel computing may be included to further reduce the processing time.