Study of the Navigation Method for a Snake Robot Based on the Kinematics Model with MEMS IMU

Abstract

1. Introduction

2. Analysis of the Snake Robot’s Motion Characteristics

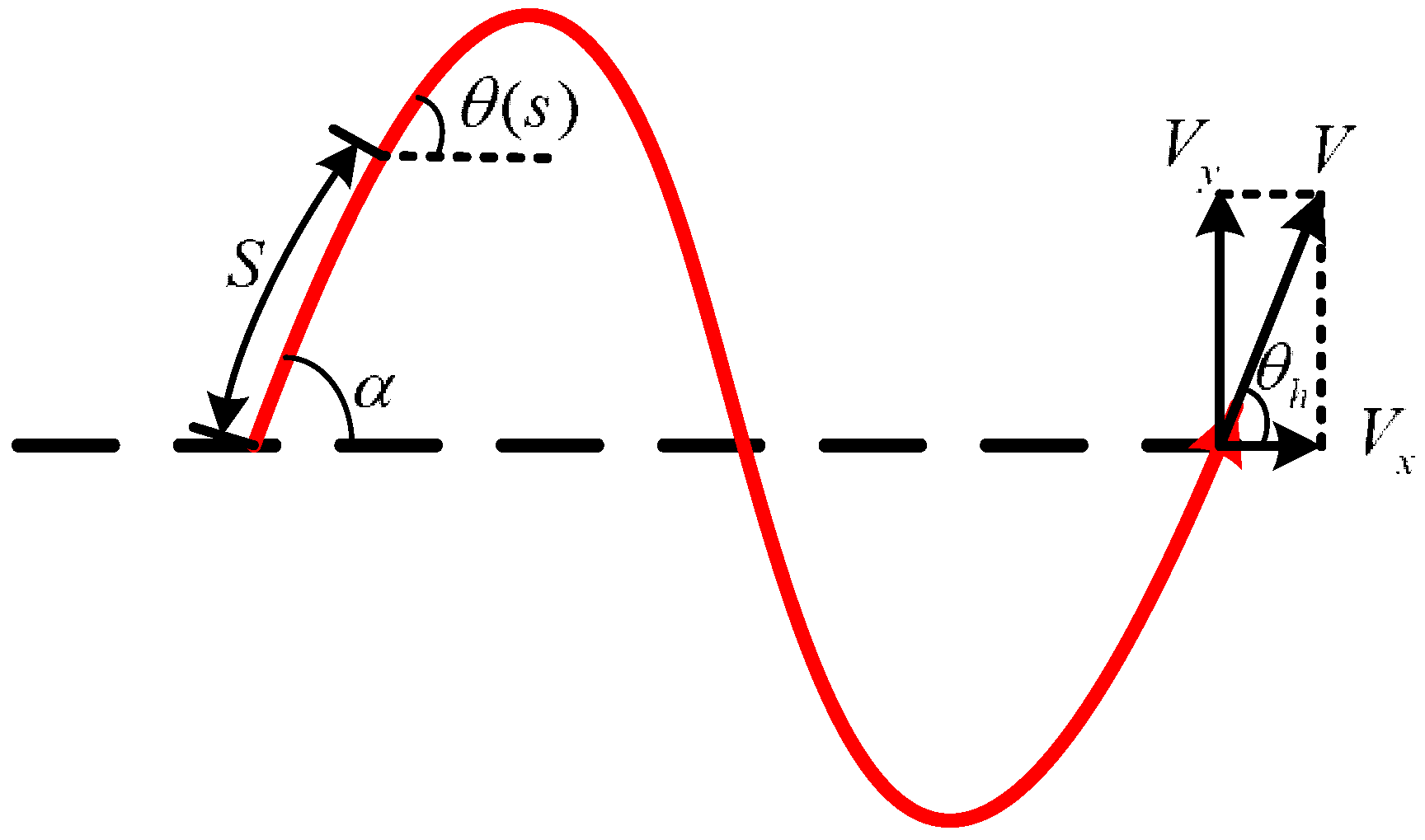

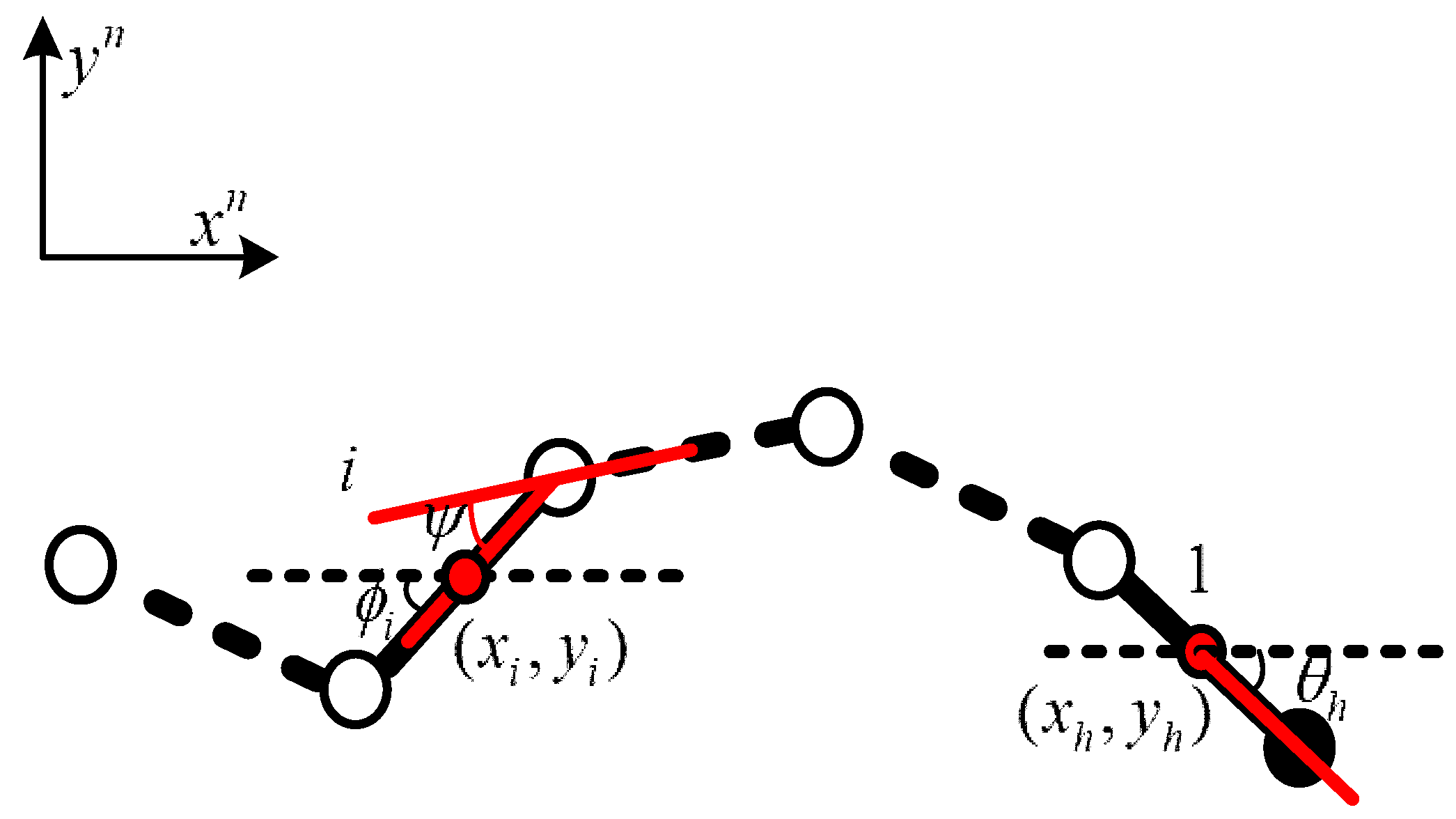

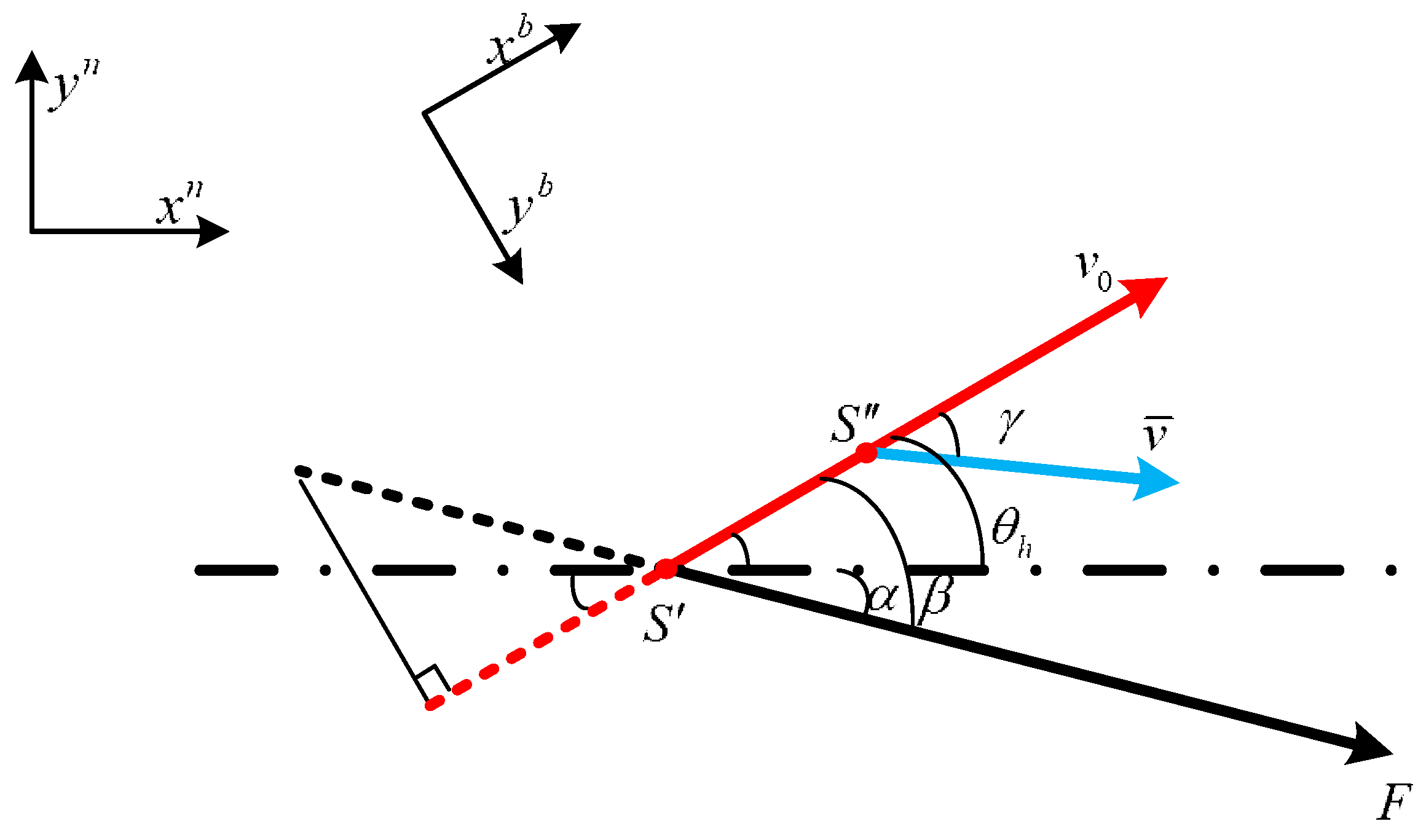

2.1. Snake Robot Kinematics Model

2.2. Analysis of the Snake Robot’s Motion Restraint Characteristics

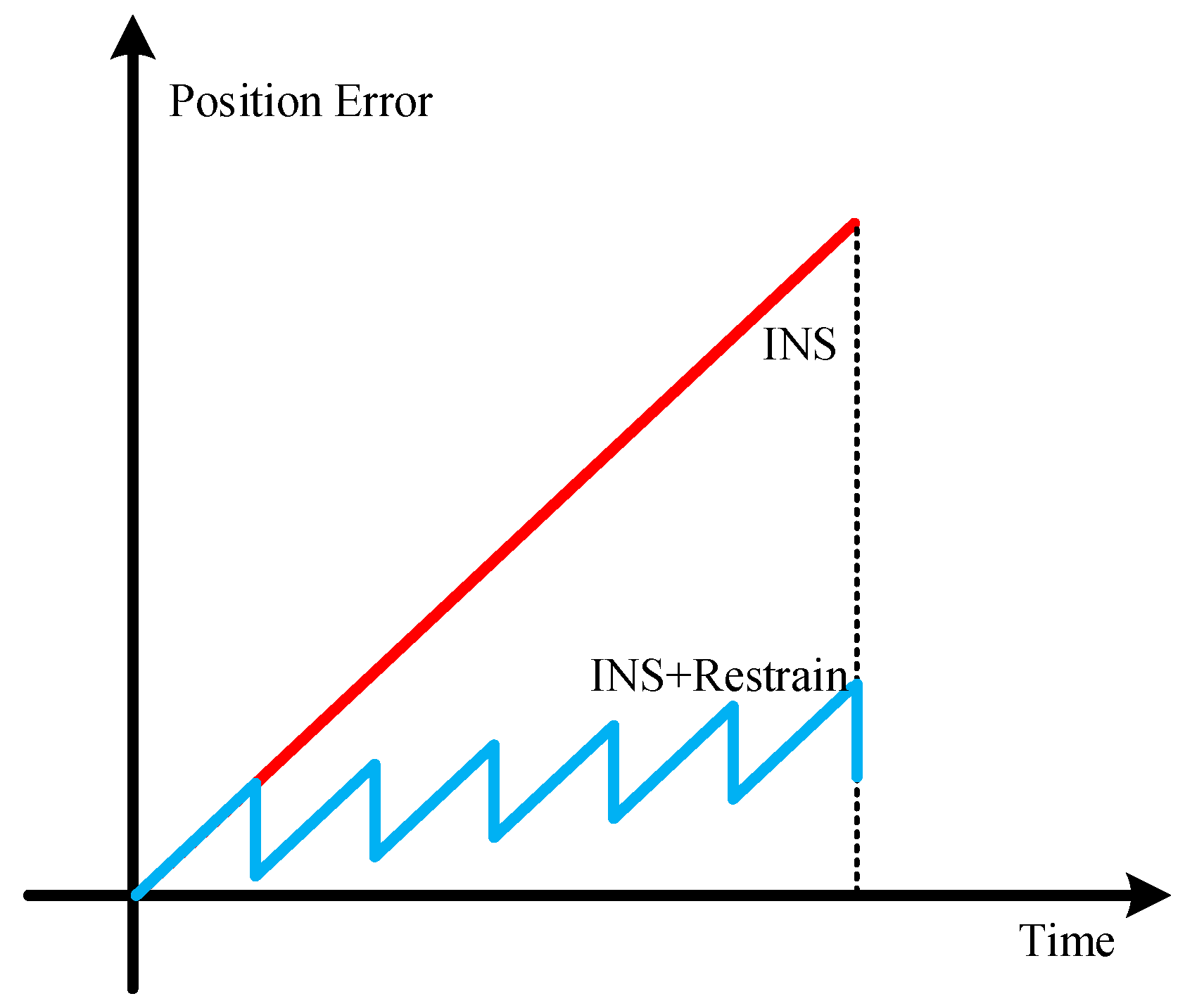

2.3. Error Propagation Properties

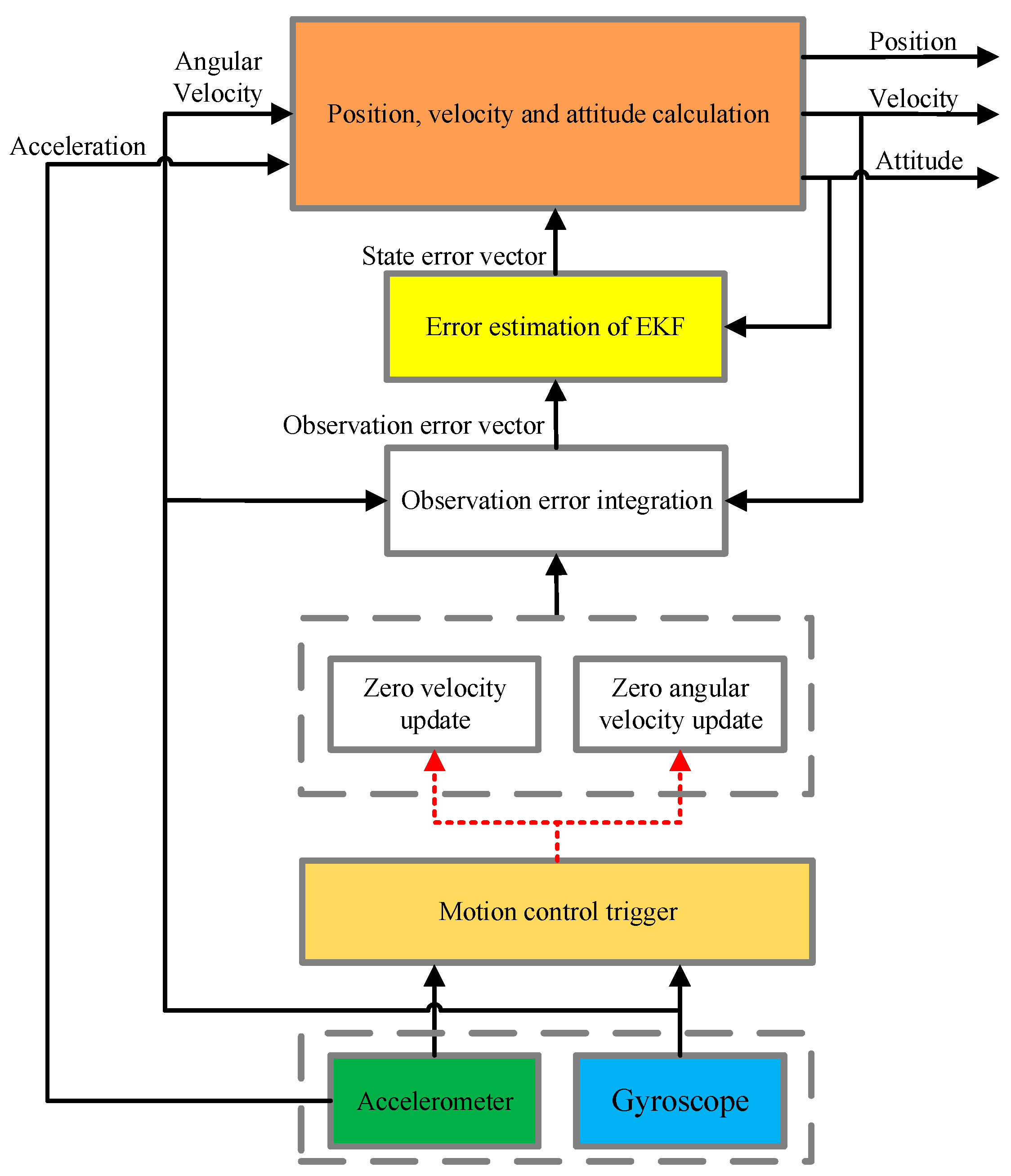

3. Navigation Method under the Constraint of the Snake Robot’s Motion Features

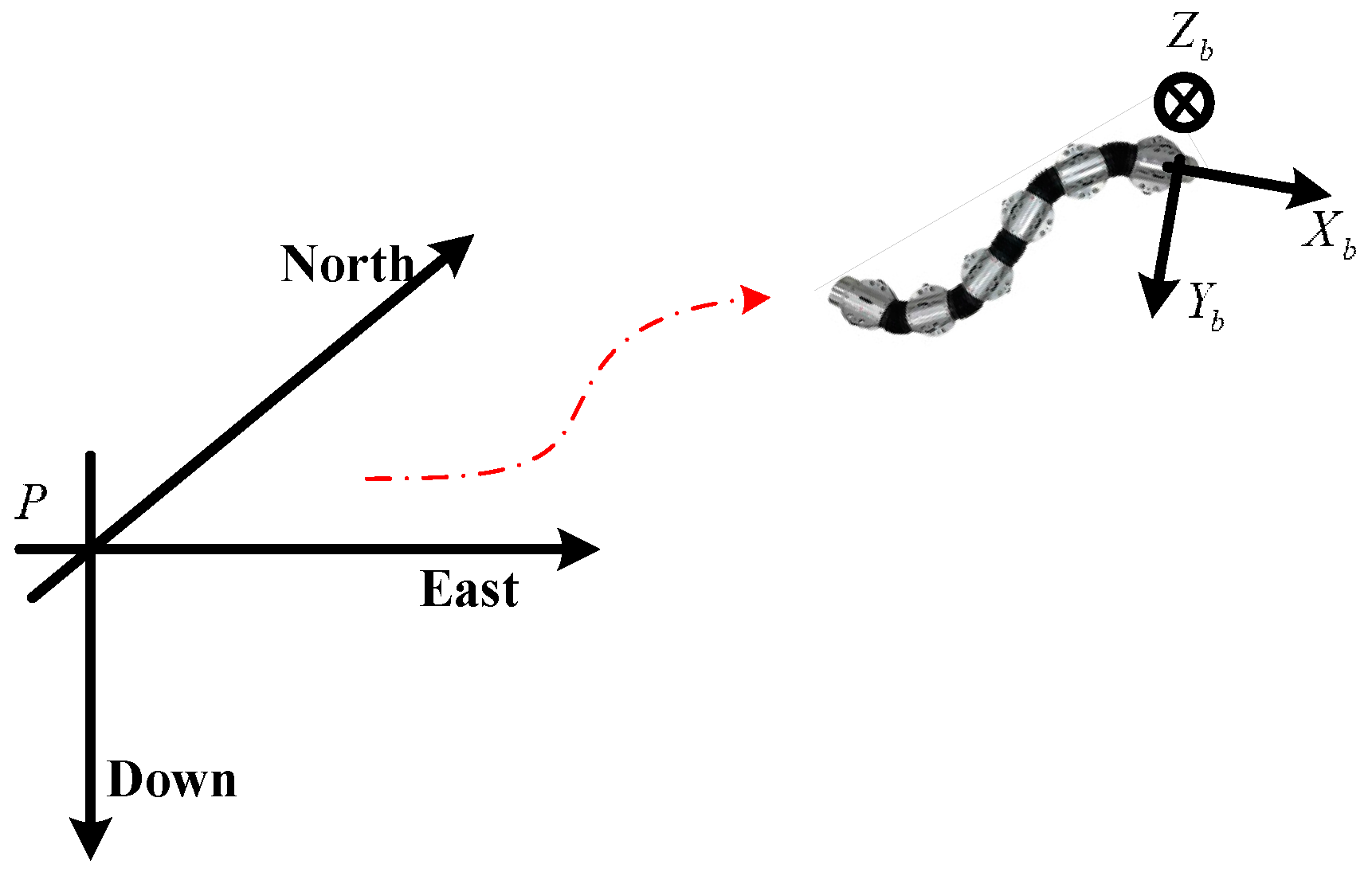

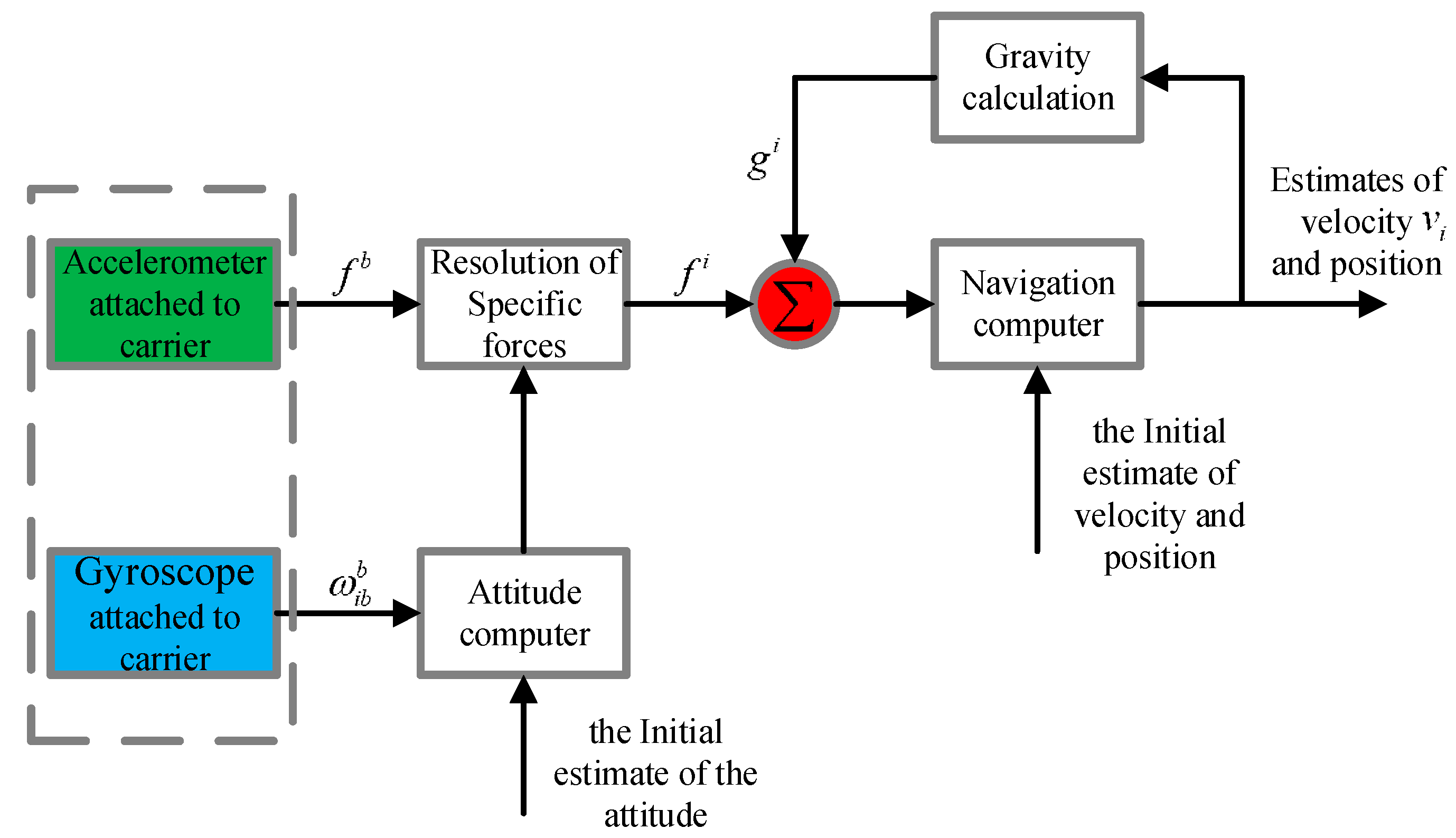

3.1. Mechanical Arrangement of the Strapdown Navigation System

3.2. Position Estimation Filter Design

3.2.1. Establish the State Equation

3.2.2. Extended Kalman Filter

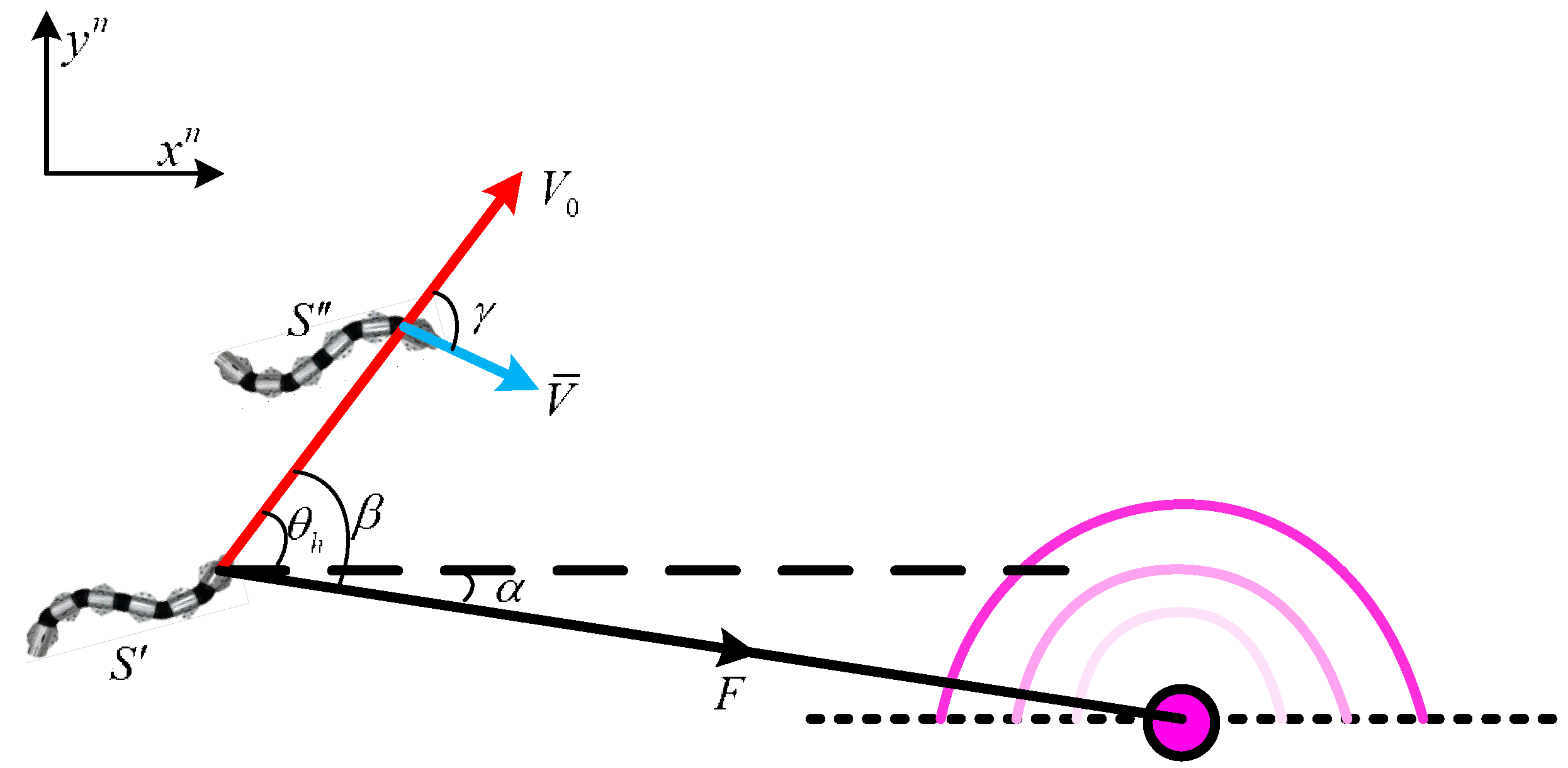

3.3. Analysis of the Trigger Correction Mechanism under the Restriction of Snake Robot Behavior

3.3.1. Velocity-Assisted Correction of the Snake Robot’s Movement

3.3.2. Velocity-Assisted Correction and Fusion Angular Velocity-Assisted Correction

4. Experiment

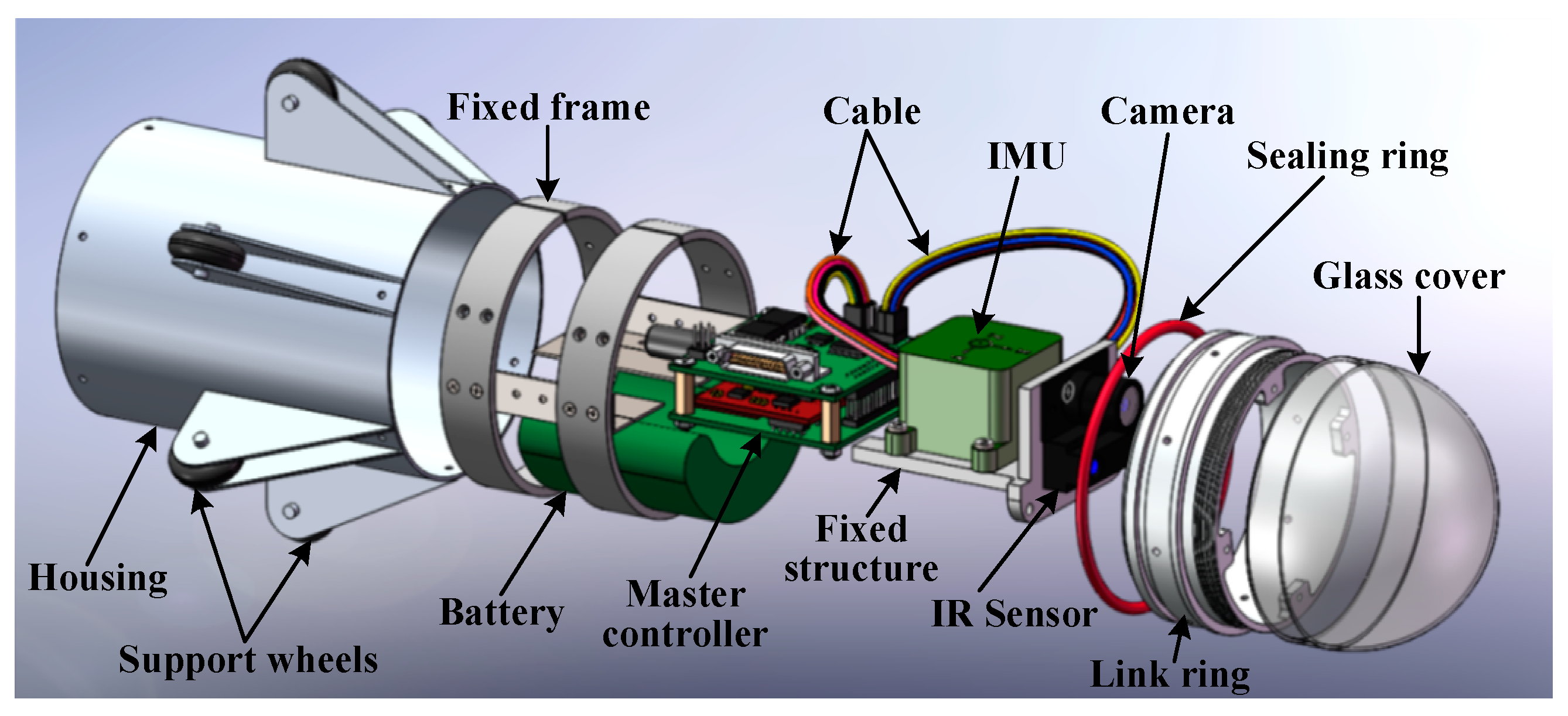

4.1. Prototype Development

4.1.1. Design Process

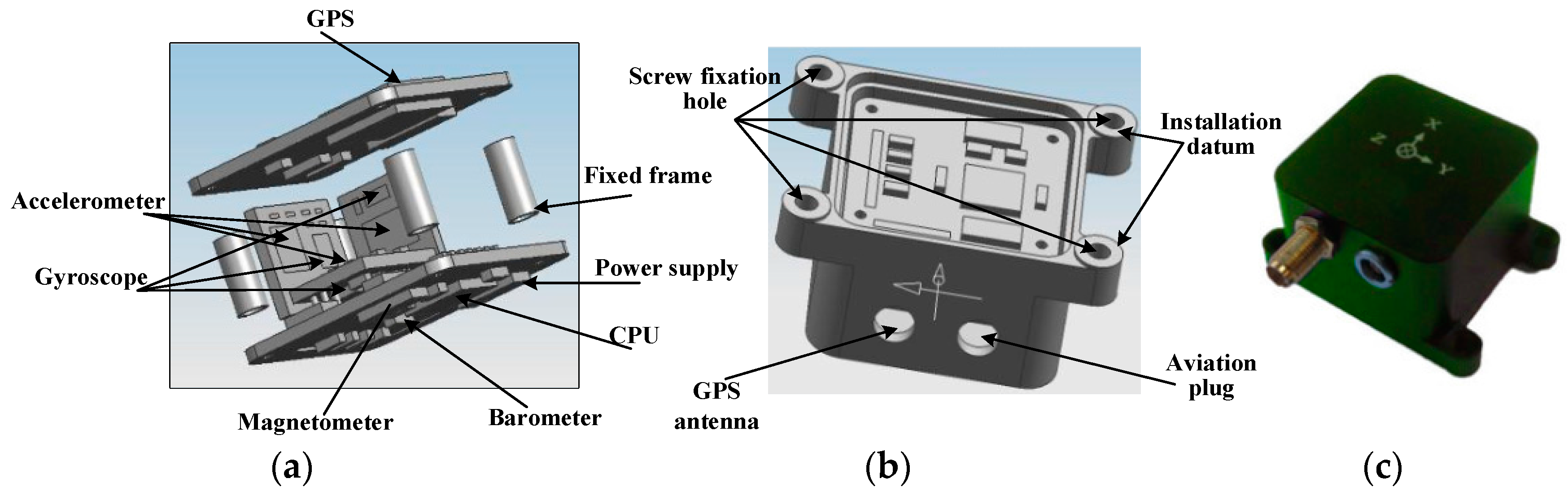

4.1.2. IMU System Sensor

4.2. Experimental Verification

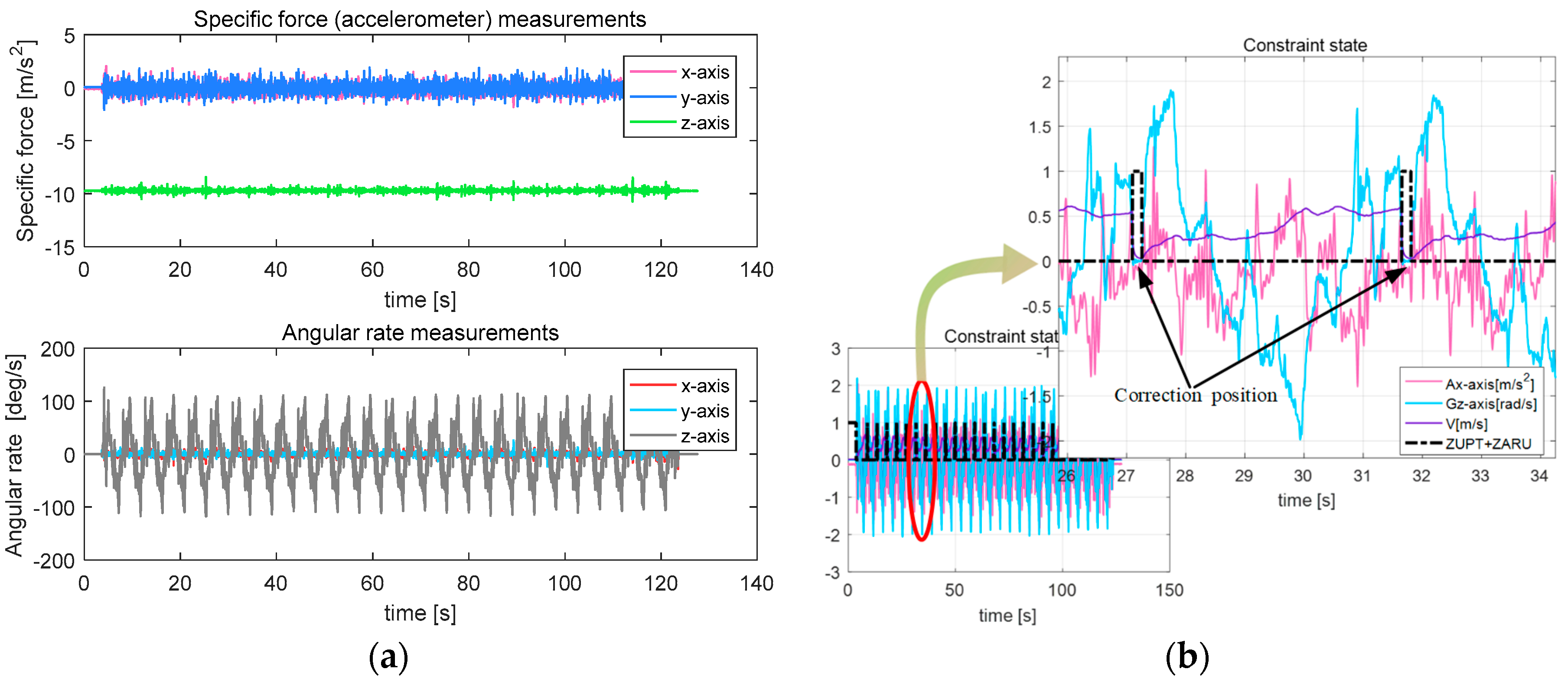

4.2.1. Linear Motion Test

4.2.2. Turn Motion Test

4.2.3. Turn-Back Motion Test

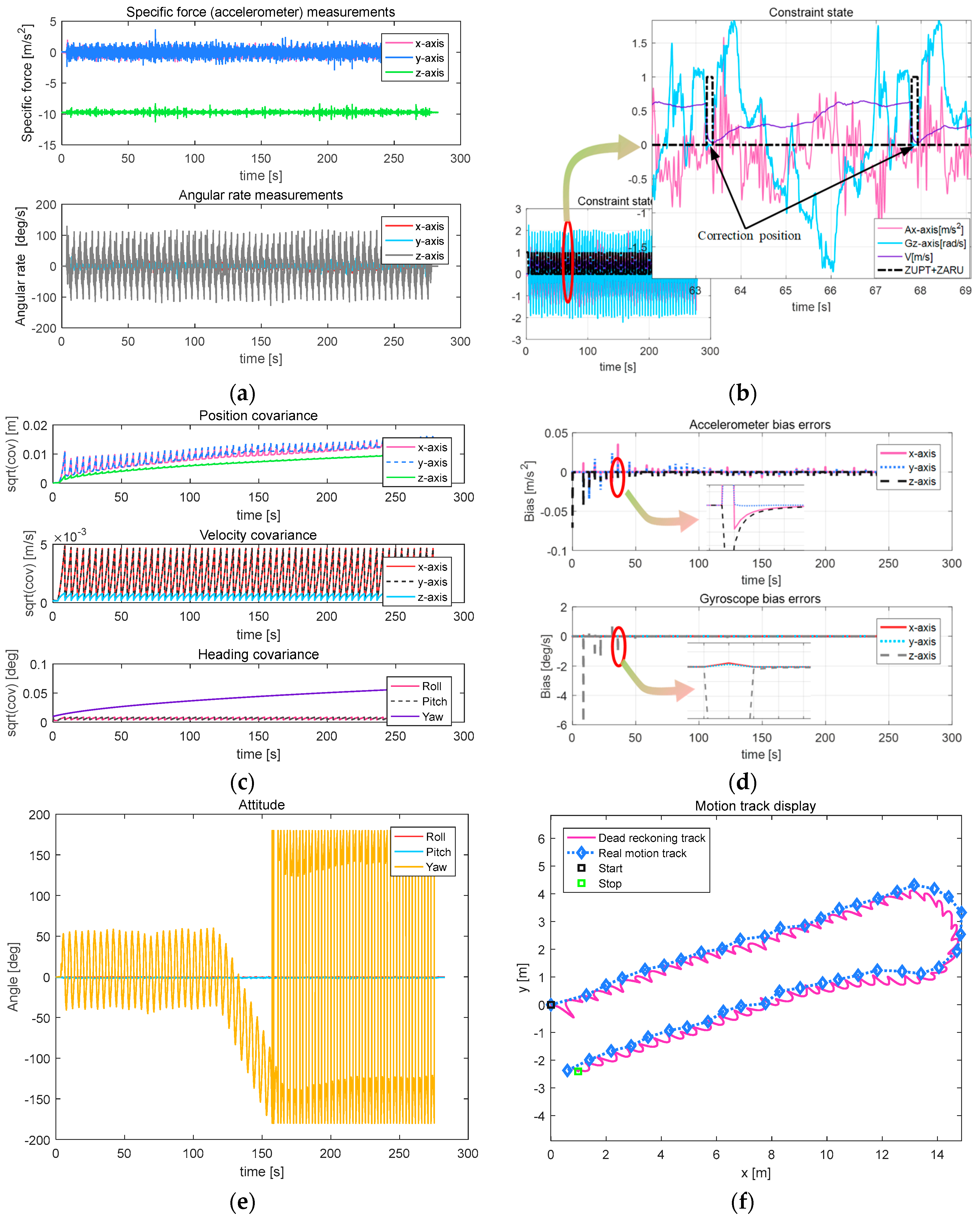

4.2.4. Circular Motion Test

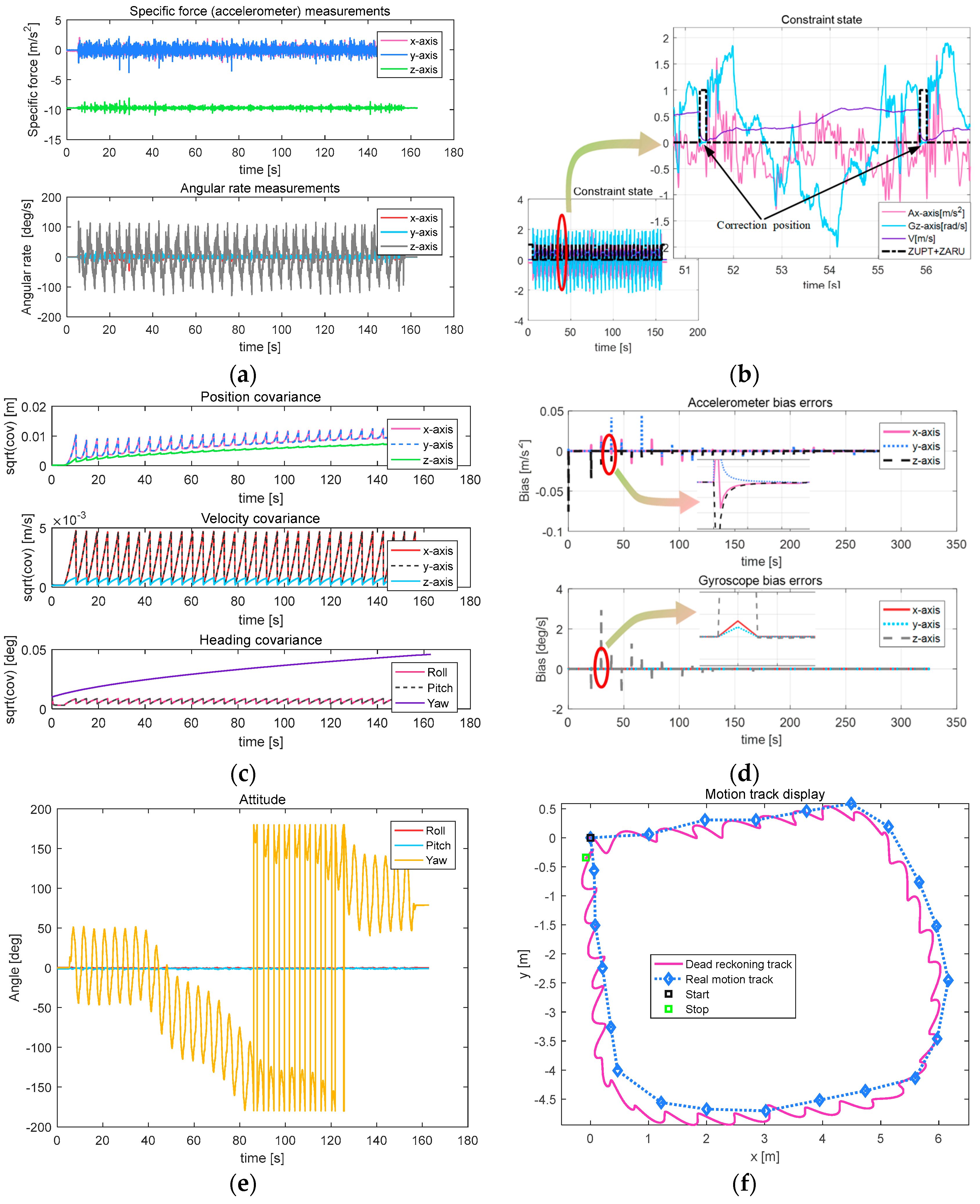

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Component | Specification | Index Value |
|---|---|---|
| Accelerometer | Range | ±1.7 g |
| | Bias instability | 25 mg |
| | Non-linearity | <0.2% |
| | Velocity random walk | <0.75 m/s/√h |
| | Bandwidth | 2500 Hz |
| Gyroscope | Range | ±300°/s |
| | Bias instability | 20°/h |
| | Non-linearity | <0.1% |
| | Angle random walk | <0.02°/√s |
| | Bandwidth | 2000 Hz |
| System | Update time | 5 ms |
| | Power | 0.15 A @ 5 Vdc |
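
To give a feel for what these figures imply for unaided dead reckoning, the following minimal sketch converts three of the tabulated values into a sample rate, a heading drift, and a position drift. It is an illustration only, not the authors' error model: it assumes each bias instability figure acts as a constant, uncompensated bias, which is a deliberate simplification, and the function names are hypothetical.

```python
# Rough drift estimates from the IMU specification table.
# Assumption: each bias instability value is treated as a constant,
# uncompensated bias. This is a simplification for illustration.

G = 9.80665  # standard gravity in m/s^2

# Values copied from the specification table.
UPDATE_TIME_S = 5e-3        # system update time, 5 ms
GYRO_BIAS_DEG_PER_H = 20.0  # gyroscope bias instability, deg/h
ACCEL_BIAS_MG = 25.0        # accelerometer bias instability, mg


def sample_rate_hz(update_time_s: float) -> float:
    """Output rate implied by the update time."""
    return 1.0 / update_time_s


def heading_drift_deg(bias_deg_per_h: float, t_s: float) -> float:
    """Heading error after t_s seconds from integrating a constant gyro bias."""
    return bias_deg_per_h / 3600.0 * t_s


def position_drift_m(bias_mg: float, t_s: float) -> float:
    """Position error after t_s seconds from double-integrating a constant
    accelerometer bias: 0.5 * b * t^2."""
    bias_m_s2 = bias_mg * 1e-3 * G
    return 0.5 * bias_m_s2 * t_s ** 2


print(f"sample rate: {sample_rate_hz(UPDATE_TIME_S):.0f} Hz")
print(f"heading drift after 60 s: {heading_drift_deg(GYRO_BIAS_DEG_PER_H, 60.0):.3f} deg")
print(f"position drift after 10 s: {position_drift_m(ACCEL_BIAS_MG, 10.0):.2f} m")
```

Under this simplified assumption, position error grows quadratically with time, reaching roughly 12 m after 10 s, while heading error grows only linearly. This is consistent with the paper's use of motion constraints to correct the strapdown solution.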

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, X.; Dou, L.; Su, Z.; Liu, N. Study of the Navigation Method for a Snake Robot Based on the Kinematics Model with MEMS IMU. Sensors 2018, 18, 879. https://doi.org/10.3390/s18030879
