1. Introduction

High-precision measurement of the attitude angles of an unmanned aerial vehicle (UAV), i.e., a drone, is of critical importance for the landing process. In general, the attitude angles of a UAV are measured by an internal device [1,2], such as a gyroscope [3,4,5] or a GPS angle measurement system [6,7,8]. However, for small-scale fixed-wing drones, limitations on load capacity and power supply make the use of internal high-precision gyroscopes impractical, and an approach based on external attitude measurement must be adopted instead. External attitude measurement methods determine a drone’s attitude angles by using external measuring devices such as theodolites or cameras. Such methods are divided into two types according to where the measurement device is installed: a visual sensor can either be mounted on the drone or located at some position away from it [9]. Installing the sensor away from the drone provides high accuracy, but is inconvenient for navigating the drone. Therefore, to assist in the landing of small-scale drones, methods employing drone-mounted visual sensors are most commonly used [10,11,12]. As visual sensor technology develops, such sensors could be fitted even to smaller drones in the near future, and both the onboard computational capacity and the camera quality can be expected to improve.

Most methods based on visual techniques to obtain the attitude angles of a UAV require multiple images with a fixed-focus lens. Eynard et al. [13] presented a hybrid stereo system for vision-based navigation composed of a fisheye and a perspective camera. Rawashdeh et al. [14] and Ettinger et al. [15] obtained the attitude angles by detecting changes in the horizon between two adjacent images from a single fisheye camera. Tian and Huang [16], Caballero et al. [17], and Cesetti et al. [18] matched features of sequential images from a single camera to obtain attitude angles by capturing natural landmarks on the ground. Li et al. [19] obtained the pose from uncalibrated multi-view images and the intrinsic camera parameters. Dong et al. [20] matched dual-viewpoint images of a corner target to find the attitude angles. All of these methods obtain the drone’s attitude angles through the use of multiple images. However, fast flying speeds and constant changes in attitude angles lead to an attitude angle delay, with the result that the attitude angles acquired using these methods are not the current angles. In addition, the accuracy of most of these methods based on monocular cameras is dependent on the focal length of the lens employed. For example, with the method proposed by Tian and Huang [16], the accuracy is proportional to focal length. Eberli et al. [21] imaged two concentric circles with a fixed-focus lens and determined the attitude angles from the deformation of these circles. Also using a fixed focal length, Li [22] measured the angle of inclination of a marker line, from which he obtained the attitude angles. Soni and Sridhar [23] proposed a pattern recognition method for landmark recognition and attitude angle estimation, using a fixed-focus lens. In the method proposed by Gui et al. [24], the centers of four infrared lamps acting as ground targets were detected and tracked using a radial constraint method, with the accuracy again being dependent on the focal length of the lens. During landing of a UAV, as the distance to a cooperative target decreases, the view size of the target changes. Thus, in addition to attitude angle delay, most of the existing methods for assisting with the landing of a small fixed-wing drone also suffer from the problem of varying view size of cooperative targets.

In contrast to existing methods, our method determines the UAV attitude angles from just a single captured image containing five coded landmark points using a zoom system. Not only does this method reduce angle delay, but the use of a zoom system also greatly improves the view size of the cooperative target. The remainder of this paper is organized as follows: Section 2 explains the principle of the scheme for obtaining the attitude angles, Section 3 describes a simulation experiment, Section 4 presents the experimental results and a discussion, and Section 5 gives our conclusions.

2. Measurement Scheme

The UAV attitude angles are obtained from a single captured image containing five coded landmark points using the radial constraint method together with a three-dimensional coordinate transformation.

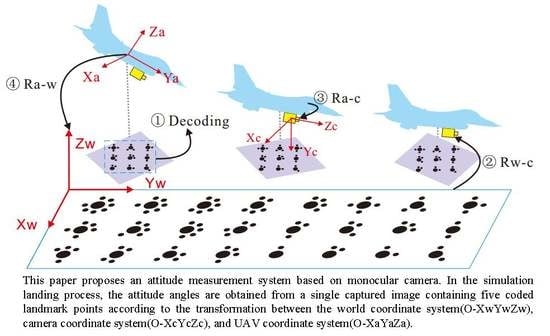

The solution procedure is divided into four steps, as shown in Figure 1. The first step is to decode the coded mark points and obtain the relationship between their coordinates in the image coordinate system and those in the world coordinate system. In the second step, the principal point is calibrated, and the rotation matrix between the world coordinate system and the camera coordinate system is obtained by the radial constraint method. The third step determines the rotation matrix between the UAV coordinate system and the camera coordinate system according to the rules for converting between three-dimensional coordinate systems. In the fourth step, the rotation matrix between the UAV coordinate system and the world coordinate system is obtained from the matrices found in the second and third steps, and is then used to calculate the UAV attitude angles.

2.1. Coded Target Decoding

The marking point used in this paper is a coded marking point with a starting point. The coding method is binary coding. The closest point to the initial point is the starting point of the code. The code is read counterclockwise, and each circle at the outermost periphery represents one bit. Figure 2 shows one of the captured images of the coded target. The relationship between the coordinates in the image coordinate system and the corresponding coordinates in the world coordinate system is obtained through a decoding calculation.

From the 20 successfully decoded points in Figure 2, five are selected to solve for the attitude angles, as shown in Table 1, which lists the coordinates of each selected point in the world coordinate system and in the image coordinate system.
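The decoding rule described above can be illustrated with a minimal Python sketch. It is not the decoder used in this paper: the function names `order_counterclockwise` and `decode_ring` are hypothetical, the points are assumed to be given in a mathematical (y-up) frame, and the first bit read is assumed to be the most significant, which the text does not specify.

```python
import math

def order_counterclockwise(ring_points, centre, initial_point):
    """Order the outer-ring circles counterclockwise, starting from the
    circle closest to the initial point (the start of the code).
    Assumes a y-up coordinate frame; in a y-down image frame the
    apparent sense of rotation is reversed."""
    cx, cy = centre
    start = min(ring_points, key=lambda p: math.dist(p, initial_point))
    start_angle = math.atan2(start[1] - cy, start[0] - cx)

    def angle_from_start(p):
        # Angle measured counterclockwise from the start circle, in [0, 2*pi).
        return (math.atan2(p[1] - cy, p[0] - cx) - start_angle) % (2 * math.pi)

    return sorted(ring_points, key=angle_from_start)

def decode_ring(bits):
    """Convert ring bits (one bit per outer circle, in reading order) to an
    integer ID. Assumption: the first bit read is the most significant."""
    value = 0
    for bit in bits:
        if bit not in (0, 1):
            raise ValueError("each ring position must decode to 0 or 1")
        value = (value << 1) | bit
    return value

# Hypothetical ring of four circles on a unit circle around the origin.
ring = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
ordered = order_counterclockwise(ring, centre=(0.0, 0.0), initial_point=(0.0, 1.5))
print(ordered)
print(decode_ring([1, 0, 1, 1]))
```

Under these assumptions, the decoded integer identifies the landmark, so that its known world coordinates can be paired with the circle center extracted from the image.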

2.2. Solution for the Rotation Matrix between the World and Camera Coordinate Systems

The radial constraint method is used to find the rotation matrix between the camera coordinate system and the world coordinate system. In the ideal camera imaging model shown in Figure 3, a point in the world coordinate system is imaged to a corresponding point in the camera plane coordinate system. It should be noted that none of the equations derived using the radial constraint method involve the effective focal length f. Because it is important to choose an appropriate focal length based on the distance between the camera and the cooperative target, this independence is the main reason why in this paper we choose the radial constraint method to obtain the rotation matrix.

In practical applications, the target is planar, so

. Thus, the relevant formula is

Here,

denotes the actual image coordinates and

denotes the world coordinates. Finally, the unit orthogonality of

is used to determine

,

,

,

, and

[

25]. The rotation matrix

from the world coordinate system to the camera coordinate system is thereby obtained.
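To illustrate why the radial constraint does not involve the focal length, the following Python sketch sets up the linear system for a planar target in the form commonly used for the radial alignment constraint. The symbols `r1`, `r2`, `r4`, `r5`, `Tx`, `Ty`, `Xd`, and `Yd`, the helper names, and the synthetic pose are assumptions made for illustration and are not taken from this paper. For a planar target and image coordinates measured relative to the principal point, the constraint Xd·(r4·xw + r5·yw + Ty) = Yd·(r1·xw + r2·yw + Tx) is linear in the five unknowns r1/Ty, r2/Ty, Tx/Ty, r4/Ty, and r5/Ty, so five points suffice.

```python
import numpy as np

def solve_rac_planar(world_xy, image_xy):
    """Solve the planar radial constraint for
    [r1/Ty, r2/Ty, Tx/Ty, r4/Ty, r5/Ty] by least squares.
    image_xy must be measured relative to the principal point."""
    world_xy = np.asarray(world_xy, dtype=float)
    image_xy = np.asarray(image_xy, dtype=float)
    xw, yw = world_xy[:, 0], world_xy[:, 1]
    Xd, Yd = image_xy[:, 0], image_xy[:, 1]
    A = np.column_stack([Yd * xw, Yd * yw, Yd, -Xd * xw, -Xd * yw])
    solution, *_ = np.linalg.lstsq(A, Xd, rcond=None)
    return solution

def project(world_xy, R, T, f):
    """Ideal pinhole projection of planar points (z_w = 0)."""
    pts = np.column_stack([world_xy, np.zeros(len(world_xy))])
    cam = pts @ R.T + T
    return f * cam[:, :2] / cam[:, 2:3]

# Synthetic pose and target points (hypothetical values, illustration only).
a, b = np.radians(20.0), np.radians(-10.0)
Rx = np.array([[1, 0, 0], [0, np.cos(a), -np.sin(a)], [0, np.sin(a), np.cos(a)]])
Ry = np.array([[np.cos(b), 0, np.sin(b)], [0, 1, 0], [-np.sin(b), 0, np.cos(b)]])
R, T = Ry @ Rx, np.array([0.1, 0.2, 2.0])
world = np.random.default_rng(0).uniform(-0.5, 0.5, size=(5, 2))

sol_12 = solve_rac_planar(world, project(world, R, T, f=12.0))
sol_35 = solve_rac_planar(world, project(world, R, T, f=35.0))
expected = np.array([R[0, 0], R[0, 1], T[0], R[1, 0], R[1, 1]]) / T[1]
print(np.allclose(sol_12, expected), np.allclose(sol_12, sol_35))
```

Changing the focal length scales both sides of every equation by the same factor, so the recovered unknowns are unchanged. The sketch stops at the linear stage; the remaining rotation entries would then follow from the unit orthogonality of the rotation matrix, as described in the text.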

2.3. Solution for the Rotation Matrix between the UAV and Camera Coordinate Systems

The camera is mounted on the drone at a known position, and so the rotation matrix between the UAV coordinate system and the camera coordinate system can be obtained from a three-dimensional coordinate transformation. The

X,

Y, and

Z axes of the UAV coordinate system are rotated through angles

,

, and

around the

,

, and

axes, respectively, of the camera coordinate system. The rotation matrices representing rotations through angles

,

, and

around the

X,

Y, and

Z axes, respectively, are

Therefore, the rotation matrix between the UAV coordinate system and the camera coordinate system is given by the matrix product

The relative positions of the UAV and the camera in the simulation experiment are shown in

Figure 1. The angles between the

X,

Y, and

Z axes of the UAV coordinate system and the

,

, and

axes of the camera coordinate system are

,

, and

, respectively. According to Formula (

5), the rotation matrix between the UAV coordinate system and the camera coordinate system is then given by
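As an illustration of the elementary rotations described in this subsection, the following Python sketch builds the three single-axis rotation matrices in their standard right-handed form and combines them into one rotation matrix. The function names and the multiplication order Rz·Ry·Rx are assumptions; the order and sign convention used in this paper should be taken from its own formulas.

```python
import numpy as np

def rot_x(angle):
    """Rotation through `angle` (radians) about the X axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_y(angle):
    """Rotation through `angle` (radians) about the Y axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_z(angle):
    """Rotation through `angle` (radians) about the Z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def combined_rotation(alpha, beta, gamma):
    """Product of the three elementary rotations.
    Assumed order: about X first, then Y, then Z (i.e., Rz @ Ry @ Rx)."""
    return rot_z(gamma) @ rot_y(beta) @ rot_x(alpha)

# Hypothetical mounting angles, for illustration only.
R_mount = combined_rotation(np.radians(90.0), 0.0, np.radians(-90.0))
print(np.round(R_mount, 6))
```

Whatever order is chosen, the result is orthogonal with determinant 1, so its inverse is simply its transpose. This property is what makes the later combination with the world-to-camera rotation inexpensive.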

2.4. Solution for the Rotation Matrix between the UAV and World Coordinate Systems

The two steps above have provided the rotation matrix

from the world coordinate system to the camera coordinate system and the rotation matrix

from the UAV coordinate system to the camera coordinate system. Given a point

, we denote by

,

, and

the corresponding points in the world, camera, and UAV coordinate systems, respectively. We denote by

and

the translation matrices between the world coordinate system and the camera coordinate system and between the UAV coordinate system and the camera coordinate system, respectively.

Figure 4 shows the relationship between the UAV coordinate system and the world coordinate system.

The coordinates of the point

in the camera coordinate system can be obtained from the relationship between the camera and world coordinate systems as

and from the relationship between the UAV and camera coordinate systems as

Equating these two expressions for

gives

from which we have

The rotation matrix between the UAV coordinate system and the world coordinate system is given by

where

,

, and

now denote the angles through which the x, y, and z axes of the UAV coordinate system are rotated around the x, y, and z axes, respectively, of the world coordinate system. The attitude angles of the UAV are obtained from the following formulas:

According to Formulas (12)–(14), the algorithm proposed in this paper can measure all three attitude angles (yaw, pitch, and roll), and the solution for the attitude angles is independent of the focal length. It should be noted that, in practical applications, the relative positions of the UAV and the camera can be adjusted by a pan/tilt unit. Regardless of whether the drone’s pitch angle is positive or negative, the attitude of the drone can be solved by adjusting the attitude of the camera through the pan/tilt unit. The rotation matrix between the UAV coordinate system and the camera coordinate system must then be re-acquired to match the new relative position.
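The combination of the two rotation matrices and the extraction of the angles can be sketched in Python as follows. The sketch rests on stated assumptions rather than on this paper's formulas: `R_wc` and `R_uc` are hypothetical names for the world-to-camera and UAV-to-camera rotations, the combined rotation is taken to map UAV coordinates into world coordinates, and the angles are extracted for the Z-Y-X convention R = Rz(γ)·Ry(β)·Rx(α). Since a point has camera coordinates R_wc·P_w + T_wc and also R_uc·P_u + T_uc, eliminating the camera coordinates gives a rotation part of R_wc⁻¹·R_uc, and the inverse of a rotation matrix is its transpose.

```python
import numpy as np

def rotation_zyx(alpha, beta, gamma):
    """R = Rz(gamma) @ Ry(beta) @ Rx(alpha), angles in radians."""
    ca, sa = np.cos(alpha), np.sin(alpha)
    cb, sb = np.cos(beta), np.sin(beta)
    cg, sg = np.cos(gamma), np.sin(gamma)
    return np.array([
        [cg * cb, cg * sb * sa - sg * ca, cg * sb * ca + sg * sa],
        [sg * cb, sg * sb * sa + cg * ca, sg * sb * ca - cg * sa],
        [-sb,     cb * sa,                cb * ca],
    ])

def uav_to_world_rotation(R_wc, R_uc):
    """Rotation taking UAV coordinates to world coordinates,
    obtained by eliminating the camera frame (translations drop out)."""
    return R_wc.T @ R_uc

def attitude_angles(R):
    """Recover (alpha, beta, gamma) from R = Rz @ Ry @ Rx.
    Valid away from the singularity at beta = +/-90 degrees."""
    beta = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))
    alpha = np.arctan2(R[2, 1], R[2, 2])
    gamma = np.arctan2(R[1, 0], R[0, 0])
    return alpha, beta, gamma

# Round-trip check with hypothetical angles (illustration only).
true_angles = np.radians([5.0, -12.0, 30.0])
R_true = rotation_zyx(*true_angles)
R_wc = rotation_zyx(*np.radians([170.0, 8.0, -40.0]))  # arbitrary camera pose
R_uc = R_wc @ R_true
recovered = attitude_angles(uav_to_world_rotation(R_wc, R_uc))
print(np.degrees(recovered))
```

Under the common aerospace assignment, α, β, and γ would correspond to roll, pitch, and yaw, but this mapping is also an assumption here. No focal length appears anywhere in the computation, which is consistent with the independence noted above.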

3. Experiment

The platform for the experiment was constructed with a coded target for landing cooperation, as shown in

Figure 5. The quadrocopter model was equipped with an industrial CCD camera and an electronic compass for measuring its attitude angles. The imaging area of the CCD camera was

pixels. The sensor size was 1/3 inch and the pixel size was

m. Four lenses with focal lengths of 12, 16, 25, and 35 mm were used. The electronic compass pitch accuracy, roll accuracy, and heading accuracy were

,

, and

, respectively. Image capture and electronic compass data acquisition were both under computer control. The electronic compass and camera were fixed on the four-axis aircraft model, with the compass in the middle and the camera at the front. The four-axis aircraft model was fixed on a tripod using a pan–tilt–zoom (PTZ) camera. The target was a planar coded target with an initial point, and the distance between target and camera was in the range 50–300 cm.

Determination of the world coordinate system – was based on the four-axis aircraft model. When the pitch, roll, and yaw angles of the four-axis aircraft model were all equal to , the world coordinate system was taken as the coordinate system of the four-axis aircraft model –. The world coordinate system – was not fixed in the horizontal direction. Moving the target in parallel had no effect on the experimental results. After the world coordinate system was determined, the coding target was fixed to the optical experimental platform. It was necessary to ensure that there were at least five coded landmarks, in order to allow measurement of the attitude angles of the four-axis aircraft.

4. Results and Discussion

To validate the accuracy of the attitude angle and the effect of focal length on accuracy, the experiment was designed on the basis of the specification “calibration specification for moving pose measurement system”. In the verification experiment, four groups of experiments were performed for simulating the variation of attitude angles during the landing of the drone in which the pitch angle was changed from to , the roll angle from to and the yaw angle from to . Furthermore, the dependency between the three angles was also added to the discussion.

In the first group of experiments, during the landing process, the distance between the drone and the cooperative target decreased continuously. In order to identify the influences of focal lengths on the attitude angles measurement, lenses of different focal lengths (12, 16, 25, and 35 mm) were used to photograph the target with the drone in the same pose, with a pitch angle of about , a roll angle of about and a yaw angle of about . In the second group of experiments, the yaw angle measurement was identified in the range from to with a pitch angle of about and a roll angle of about . The condition of close landing with a yaw angle of about was also investigated. The roll and pitch angles were changed in increments of from to and to in this condition. Similarly, the roll angles and pitch angles were investigated in the third and fourth group, respectively. In the third group, for roll angles of , , , , , , and , the pitch angle was changed in increments from to with yaw angle at unchanged. In the fourth group, for pitch angles of , , , , , and , the roll angle was changed in increments of from to with yaw angle at unchanged.

4.1. Focal Lengths

During landing of a UAV, as the distance to a cooperative target decreases, the use of a zoom system greatly improves the view size of the cooperative target and thereby the accuracy of the attitude angle measurement. As noted in the measurement scheme section, the proposed algorithm for the UAV attitude angles is independent of the focal length. Lenses of different focal lengths (12, 16, 25, and 35 mm) were used to photograph the target with the drone in the same pose, with a pitch angle of about , a roll angle of about and a yaw angle of about . The verification experiment is as follows.

Table 2 compares the experimental results with the ground truth (electronic compass values) for the attitude angles at different lens focal lengths. The average error in the pitch angle is 0.36°, and the minimum error is 0.04°. Similarly, for the roll and yaw angles, the average errors are 0.40° and 0.38°, and the minimum errors are 0.01° and 0.04°, respectively. The results illustrate that the attitude angles of a UAV can be determined with high accuracy using the proposed method when these angles remain nearly constant during descent and that the accuracy is independent of the focal length of the camera lens. Furthermore, during landing of the drone, the accuracy can be increased by appropriate selection of focal length depending on the distance between the UAV and the cooperative target.
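The minimum, average, and maximum absolute errors quoted in this section can be computed as in the following Python sketch. It is only an illustration: the function names are hypothetical, the input values are placeholders rather than measured data, and wrapping the difference into [−180°, 180°) is an added safeguard for yaw angles near ±180° that may not have been needed in the experiments.

```python
import numpy as np

def wrapped_difference(measured_deg, reference_deg):
    """Signed difference measured - reference, wrapped into [-180, 180) degrees."""
    diff = np.asarray(measured_deg, dtype=float) - np.asarray(reference_deg, dtype=float)
    return (diff + 180.0) % 360.0 - 180.0

def absolute_error_stats(measured_deg, reference_deg):
    """Return (minimum, average, maximum) absolute error in degrees."""
    err = np.abs(wrapped_difference(measured_deg, reference_deg))
    return float(err.min()), float(err.mean()), float(err.max())

# Placeholder values only; these are NOT results from the experiment.
vision_deg = [1.0, -2.0, 179.5]
compass_deg = [1.5, -2.0, -179.5]
print(absolute_error_stats(vision_deg, compass_deg))
```

Here the compass readings play the role of ground truth, as in Table 2, and the statistics are taken over the absolute values of the errors.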

4.2. Yaw Angles

Yaw angle is important for UAV control, especially when a precise and restricted landing direction and location are required to overcome cross-wind components. At earlier stages of the final approach, for instance 200 m out, GPS and altimeters are sufficient given the glide path. At 10 m or less from the landing point, the proposed method can provide the attitude angle information, including pitch, roll, and yaw.

The comparison between the electronic compass data and the experimental data for the yaw angle is presented in

Figure 6 at different yaw angles from

to

. The red line indicates the compass data, and the blue line indicates the experimental data. The yellow histogram shows the error between the experimental data and the compass data. The experimentally determined yaw angles are almost coincident with the actual angles at each measurement point on the graph: the minimum error in the yaw angle is 0.02°, the average error is 0.28°, and the maximum error is 0.8° (errors here are absolute). The yaw angle is measured with high accuracy when it is close to 0°, and the error increases as the yaw angle increases.

Results in

Table 3 compare the yaw angles with the roll angle varying from

to

, in which the minimum error in the yaw angle is 0.05° and the average error is 0.43°. Similarly,

Table 4 compares the yaw angles with the pitch angle varying from

to

, in which the minimum error in the yaw angle is 0.05° and the average error is 0.49°. The yaw angle achieves high accuracy as roll angles vary around 0°

. The experimental results show that the proposed method achieves high accuracy in the yaw angle, and the error of yaw angles is less than

with the variation of pitch angles and roll angles.

4.3. The Pitch and Roll Angles

During the landing of the drone, the pitch angle gradually decreases, while the roll angle varies only slightly (under the influence of cross wind). In the verification experiment, roll and pitch angles were changed in increments of from to and to with a yaw angle of about unchanged.

The experimental results are compared with the electronic compass data for six different locations in

Table 5. In the following discussion, the electronic compass data are taken as giving the true attitude angles for the quadrocopter model. The attitude angles for these six positions were all measured when the roll angle was close to

, which means that the quadrocopter model did not roll during the simulated landing. The pitch angle of the quadrocopter model decreased from

to

. When the roll and pitch angles were approximately

and

, respectively, the accuracies of the experimental results for these angles reached

and

, thus indicating that the method proposed in this paper achieves high accuracy when the roll angle changes little during descent of the drone.

The comparison between the electronic compass data and the experimental data for the roll angle is presented in

Figure 7 at different pitch angles. The red line indicates the compass data, and the blue line indicates the experimental data. The yellow histogram shows the error between the experimental data and the compass data. At each fixed pitch angle, the roll angle was varied from

to

and measured every

to obtain seven sets of roll angle values. For the whole range of pitch angle from

to

, the experimentally determined roll angles are almost coincident with the actual angles at each measurement point on each graph, indicating the high accuracy of the experimental determinations. Similarly,

Figure 8 compares the pitch angle measurement results at different roll angles. In this case, at each fixed roll angle, the pitch angle was varied from

to

and measured every

to obtain six sets of pitch angle values. For the whole range of roll angles from

to

, the experimental pitch angle again coincided with the actual angle at each measurement point on each graph. Thus, overall, the method for attitude angle determination presented in this paper achieves high accuracy over a wide range of angles.

An error analysis for the roll angle is presented in

Figure 9, which shows the maximum, minimum, and average errors (errors here are absolute). It can be seen that the average error in the roll angle is

,

,

,

,

, and

at the different fixed pitch angles. In particular, the low average error in the roll angle of

at a pitch angle of

should be noted. The shooting angle of the camera also affects the accuracy of the drone’s attitude angles to a certain extent.

Figure 10 presents a similar error analysis for the pitch angle. The average error in the pitch angle is

,

,

,

,

,

, and

at the different fixed roll angles. It can be seen that when the roll angle changes from

to

, the average error in the pitch angle increases, reaching

at a roll angle of

. Furthermore, it can be seen from the plots in

Figure 10 that when the actual value of the roll angle is

, the experimentally determined values show clear deviations. Thus, it can be seen that increasing roll angle results in increasing errors in both pitch and roll angles.

The greatest errors in both pitch and roll angles (almost ) occur at a pitch angle of and a roll angle of . From an analysis of the sources of these errors, it appears that the major contribution comes from image processing. Extraction of the center of the coded point is affected by the shooting angle. When this angle is skewed, the center after processing will deviate from the true center, resulting in an error in the extraction of the image center coordinates. This eventually leads to a deviation of the calculated result from the true value. In addition, the quality of the captured image also affects the accuracy of attitude angle determination.

4.4. Way Ahead

The method proposed in this paper is based on visual measurement, which has some limitations at this stage and can be improved in the near future. The quality of the captured image is sensitive to lighting, which depends directly on the weather. In weather with poor lighting, the proposed method may be unable to solve for the attitude angles because the image quality is insufficient. If a precise and restricted landing direction and location are imposed, a navigation and control system becomes essential for the UAV, which raises a new challenge for the processing speed and robustness of the proposed method. The accuracy of the attitude angles can also be improved by rationally designing the target size according to the working distance. It is worth mentioning that the size of the target depends on the camera focal length. A large focal length is required when the shooting distance reaches hundreds of meters. A 200 mm focal length is able to capture a 4-m wide target 100 m away and a 2-m wide target 50 m away. In view of the limitations mentioned above, a series of studies is planned for practical navigation in the near future, covering target design optimization, error model optimization, and the flight control algorithm. To improve robustness under poor lighting, self-illuminating targets that automatically adjust their brightness according to the light intensity will be explored, and the error model of attitude determination, which concerns precise extraction of the center of the cooperative target, should be studied further to improve the accuracy of attitude determination.

The purpose of the method proposed in this paper is to achieve a precise landing of a small fixed-wing UAV by means of a high-precision attitude angle measurement system. At this stage, the attitude angle measurement system proposed in this paper has only been used for static measurement of the attitude angles, simulating their variation at the landing stage. In follow-up work, the measurement system will be applied to a practical small-scale fixed-wing UAV to achieve dynamic measurement of the attitude angles during landing, and the stability of the algorithm will need to be further strengthened to ensure real-time measurement of the attitude angles. Ultimately, high-precision landing of the small-scale fixed-wing UAV will be achieved on the basis of dynamic measurement of the attitude angles, a novel control algorithm, and autonomous control systems. It should be noted that the vision-based measurement method proposed in this paper has the potential to support intelligent navigation, for example automatic obstacle avoidance, when combined with vision information and artificial intelligence technology. Furthermore, the novel attitude angle measurement is applicable not only to UAVs but possibly also to other vehicles, such as underwater vehicles, and these applications will also serve as future research projects for our group.