Haptic Glove and Platform with Gestural Control For Neuromorphic Tactile Sensory Feedback In Medical Telepresence †

Abstract

1. Introduction

1.1. Motivation and Challenge Definition

1.2. Related Work and State of the Art

1.3. Contribution of the Present Study

2. Materials and Methods

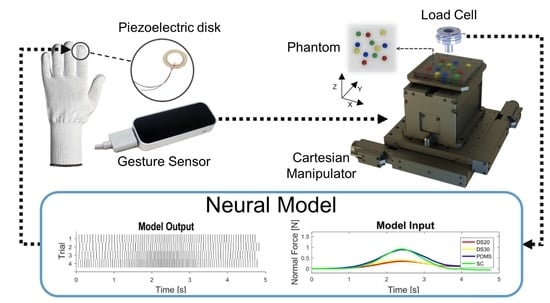

2.1. Experimental Setup

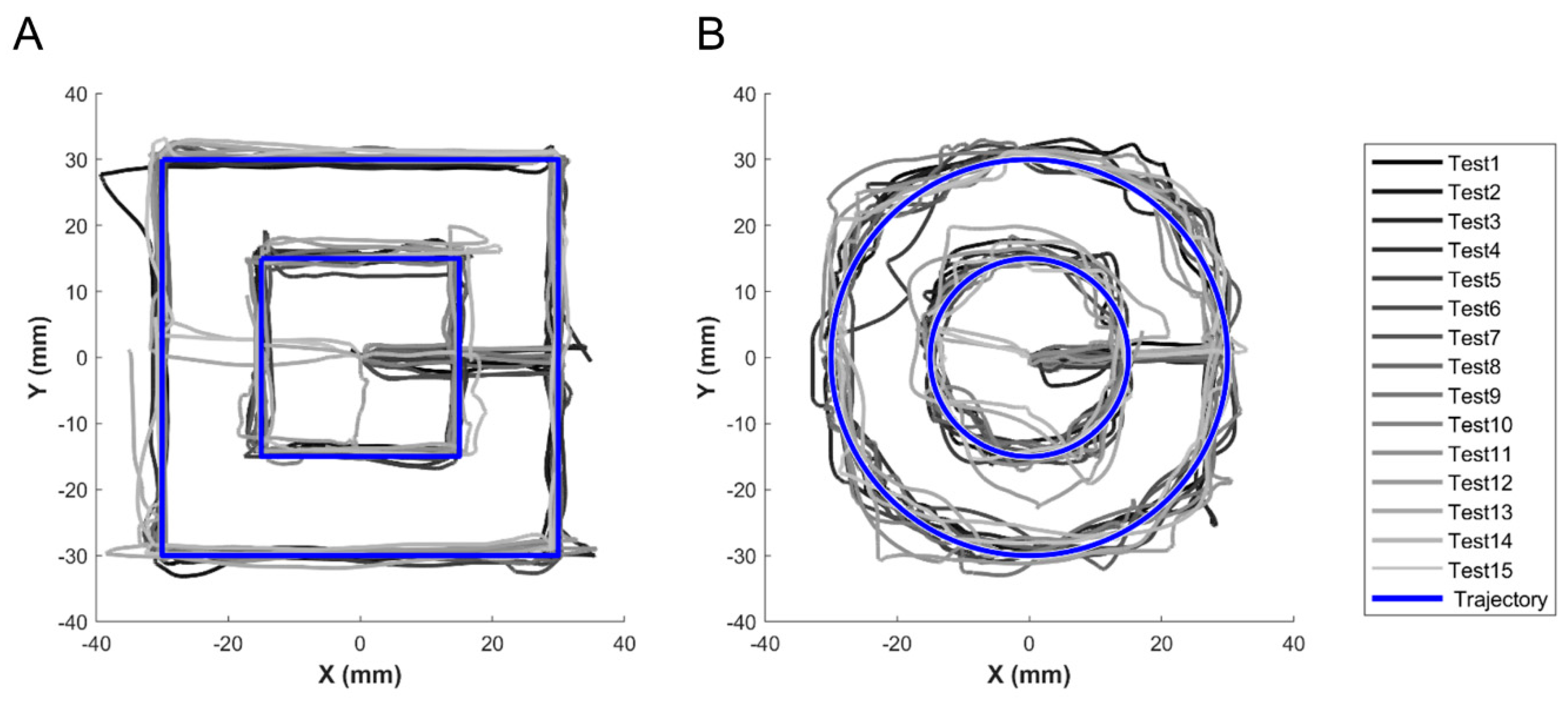

2.2. Platform and Inclusions Characterization Protocols

2.3. Inclusions Identification Experimental Methods

3. Results

3.1. Platform and Inclusions Characterization Results

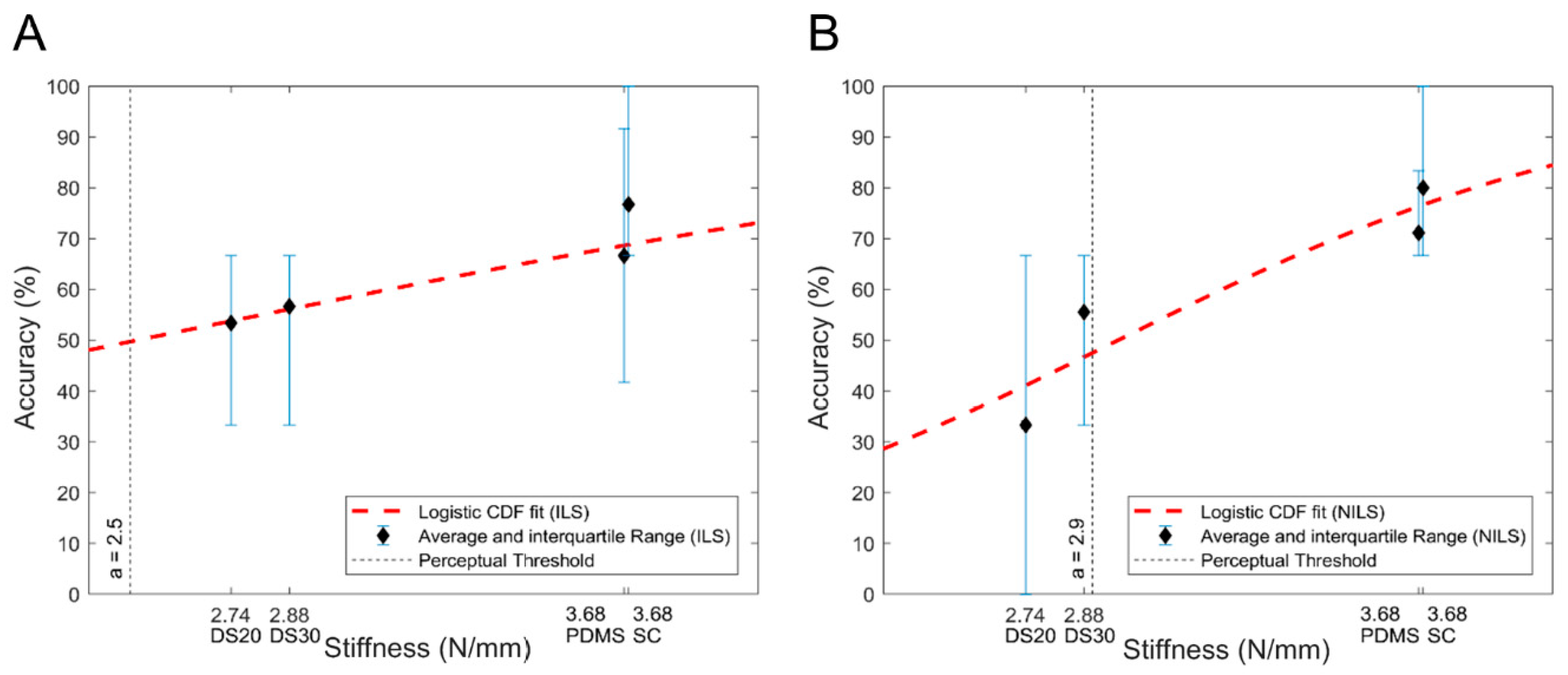

3.2. Inclusions Identification Experimental Results

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| n = 15 | Square (s₁ = 60 mm) | Square (s₂ = 30 mm) | Circle (r₁ = 30 mm) | Circle (r₂ = 15 mm) |
|---|---|---|---|---|
| Target area (mm²) | 3600 | 900 | 2827.43 | 706.86 |
| \|Tracked area − Target area\| (mm²), µ ± σ | 74.05 ± 65.79 | 43.27 ± 40.99 | 143.90 ± 121.28 | 91.74 ± 89.82 |
| Error rate (%), µ ± σ | 2.01 ± 1.83 | 4.81 ± 4.55 | 5.09 ± 4.29 | 12.98 ± 12.71 |
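The table does not define its error rate. A natural reading is that it is the absolute area error expressed as a percentage of the target area (side² for squares, πr² for circles). The sketch below checks the tabulated values under that assumption. The function names are illustrative and do not come from the paper. Because each shape has a fixed target area, both µ and σ scale by the same factor 100/target. Under this assumption, all four σ values and the µ values for the 30 mm square and both circles match the table to two decimals. For the 60 mm square, the ratio gives about 2.06 % rather than the tabulated 2.01 %, so the authors' exact definition may differ.

```python
import math

# Shapes from the characterization table: (kind, size in mm), where size is
# the side length for squares and the radius for circles.
SHAPES = {
    "Square (s1 = 60 mm)": ("square", 60.0),
    "Square (s2 = 30 mm)": ("square", 30.0),
    "Circle (r1 = 30 mm)": ("circle", 30.0),
    "Circle (r2 = 15 mm)": ("circle", 15.0),
}

# Tabulated |tracked area - target area| in mm^2 as (mean, standard deviation).
ABS_ERROR_MM2 = {
    "Square (s1 = 60 mm)": (74.05, 65.79),
    "Square (s2 = 30 mm)": (43.27, 40.99),
    "Circle (r1 = 30 mm)": (143.90, 121.28),
    "Circle (r2 = 15 mm)": (91.74, 89.82),
}


def target_area(kind: str, size_mm: float) -> float:
    """Geometric area of the target shape in mm^2."""
    if kind == "square":
        return size_mm ** 2
    if kind == "circle":
        return math.pi * size_mm ** 2
    raise ValueError(f"unknown shape kind: {kind}")


def error_rate_percent(abs_error_mm2: float, target_mm2: float) -> float:
    """ASSUMED definition: absolute area error as a percentage of the target area."""
    return 100.0 * abs_error_mm2 / target_mm2


if __name__ == "__main__":
    for name, (kind, size) in SHAPES.items():
        area = target_area(kind, size)
        mean_err, std_err = ABS_ERROR_MM2[name]
        # With a fixed target per shape, mean and std scale by the same factor.
        print(
            f"{name}: target {area:.2f} mm^2, "
            f"error rate {error_rate_percent(mean_err, area):.2f} "
            f"± {error_rate_percent(std_err, area):.2f} %"
        )
```

Running the script prints one line per shape, giving the recomputed target area and the error rate as µ ± σ.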

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


D’Abbraccio, J.; Massari, L.; Prasanna, S.; Baldini, L.; Sorgini, F.; Airò Farulla, G.; Bulletti, A.; Mazzoni, M.; Capineri, L.; Menciassi, A.; et al. Haptic Glove and Platform with Gestural Control For Neuromorphic Tactile Sensory Feedback In Medical Telepresence †. Sensors 2019, 19, 641. https://doi.org/10.3390/s19030641
