Object-Based Classification of Ikonos Imagery for Mapping Large-Scale Vegetation Communities in Urban Areas

Abstract

1. Introduction

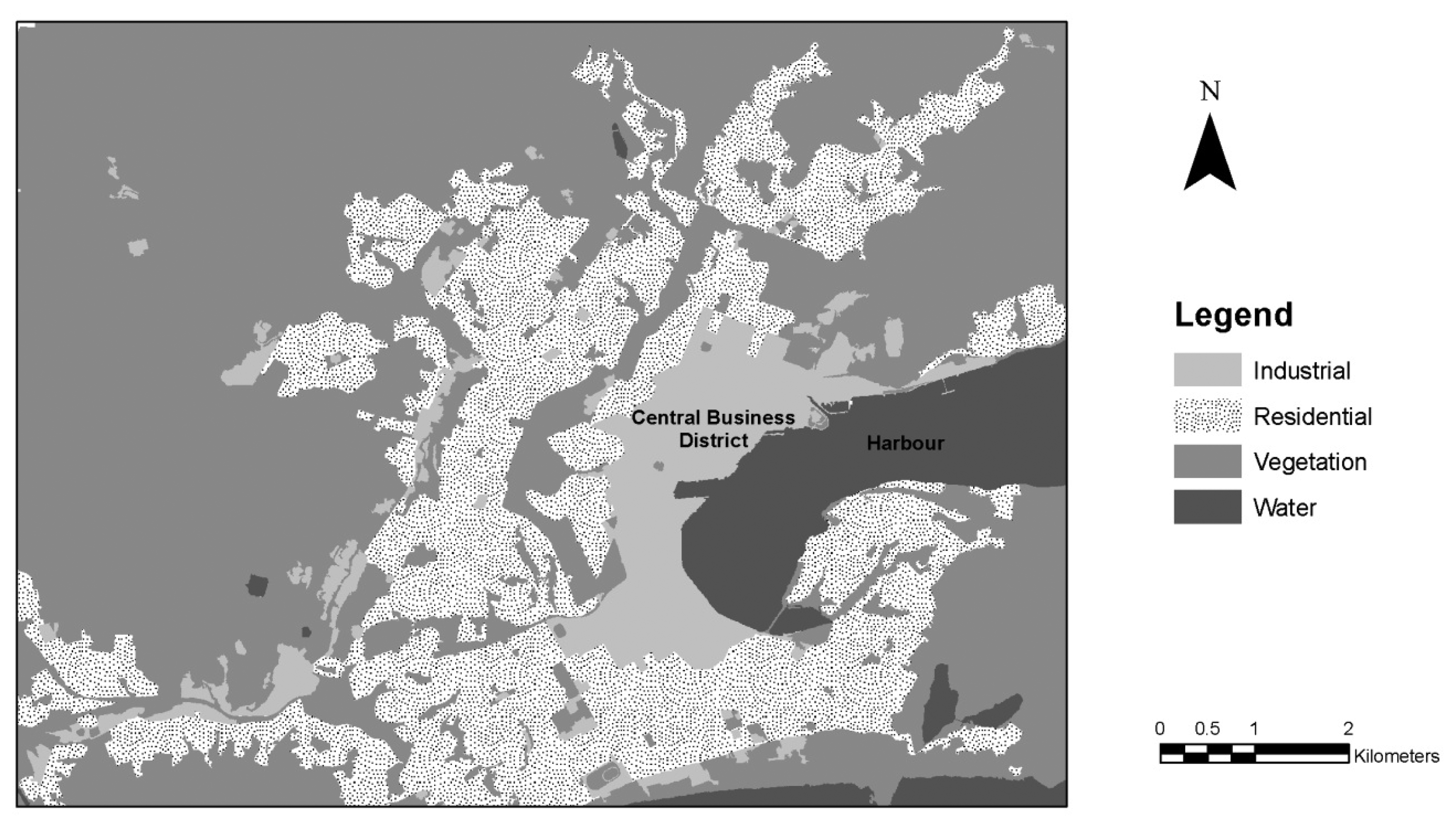

2. Study Area

3. Data and Methods

3.1. Ikonos images and preprocessing

3.2. Multi-scale image segmentation and classification

3.2.1. Image segmentation

3.2.2. Stratification of urban areas

3.2.3. Fine scale vegetation mapping

- Mean spectral value of image objects,

- Standard deviation of spectral values of image objects,

- Ratio of mean spectral value to sum of all spectral layer mean values of image objects,

- Compactness of image objects (length × width / number of pixels).
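The four object features listed above can be sketched in a few lines. This is a minimal illustration, not the software implementation used in the study: the function name `object_features` and the sample pixel values are hypothetical, the standard deviation is taken as the population standard deviation, and length and width are assumed to be the sides of the object's axis-aligned bounding box in pixels.

```python
from statistics import mean, pstdev


def object_features(pixels, coords):
    """Compute per-object features for one image object.

    pixels: list of per-pixel tuples, one value per spectral layer.
    coords: list of (row, col) positions of the same pixels.
    """
    n_bands = len(pixels[0])
    # Mean and standard deviation of each spectral layer within the object.
    band_means = [mean([p[b] for p in pixels]) for b in range(n_bands)]
    band_stds = [pstdev([p[b] for p in pixels]) for b in range(n_bands)]
    # Ratio: layer mean divided by the sum of all layer means.
    total = sum(band_means)
    ratios = [m / total for m in band_means]
    # Compactness: length x width / number of pixels.
    # Assumption: length and width come from the axis-aligned bounding box.
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    length = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    compactness = length * width / len(coords)
    return {
        "mean": band_means,
        "std": band_stds,
        "ratio": ratios,
        "compactness": compactness,
    }


# Hypothetical L-shaped object of three pixels with four spectral layers.
sample_pixels = [(10, 20, 30, 40), (20, 20, 30, 40), (30, 20, 30, 40)]
sample_coords = [(0, 0), (0, 1), (1, 0)]
features = object_features(sample_pixels, sample_coords)
```

Under this bounding-box assumption a perfectly rectangular object has a compactness of 1, and the value grows as the object becomes more irregular.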

- If a plantation is smaller than one hectare, reclassify it as tree group.

- If a forest is smaller than one hectare, reclassify it as tree group.

- If a tree group is larger than one hectare, reclassify it as its second-best class.
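The three size rules above amount to a small post-classification filter. The sketch below is one possible reading of them: the function name `apply_size_rules`, the label strings, and the `second_best` argument are hypothetical, and an object of exactly one hectare is left unchanged because the rules do not say how to treat that case.

```python
ONE_HECTARE = 1.0  # area threshold in hectares


def apply_size_rules(label, area_ha, second_best):
    """Return the class of an object after the area-based rules.

    label: class assigned by the classifier.
    area_ha: object area in hectares.
    second_best: class with the second-highest membership for the object.
    """
    # Small plantations and forests become tree groups.
    if label in ("plantation", "forest") and area_ha < ONE_HECTARE:
        return "tree group"
    # Large tree groups fall back to their second-best class.
    if label == "tree group" and area_ha > ONE_HECTARE:
        return second_best
    return label


# Hypothetical objects: (label, area in ha, second-best class).
objects = [
    ("plantation", 0.4, "forest"),
    ("forest", 2.5, "plantation"),
    ("tree group", 1.8, "forest"),
]
reclassified = [apply_size_rules(*obj) for obj in objects]
```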

3.3. Accuracy assessment

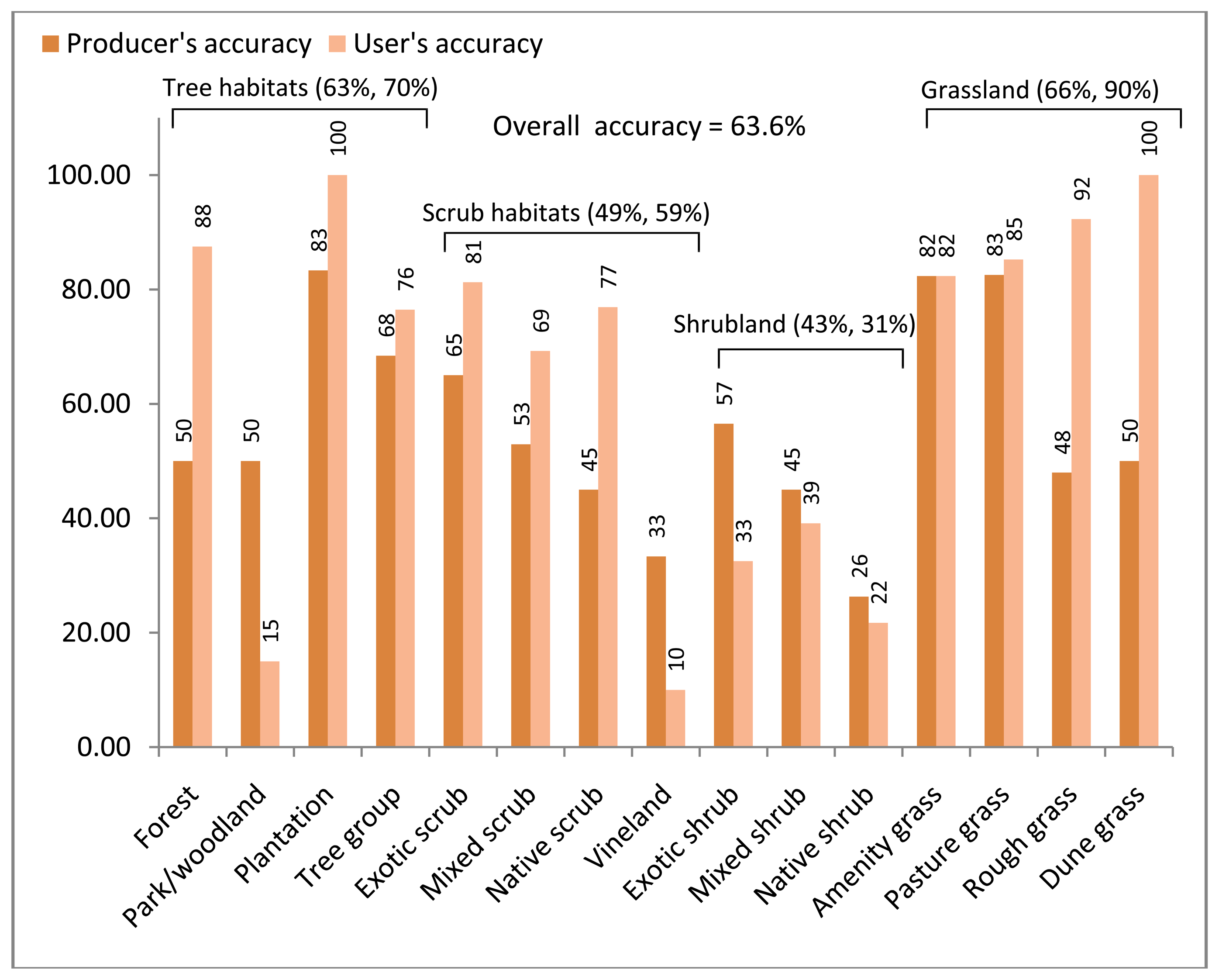

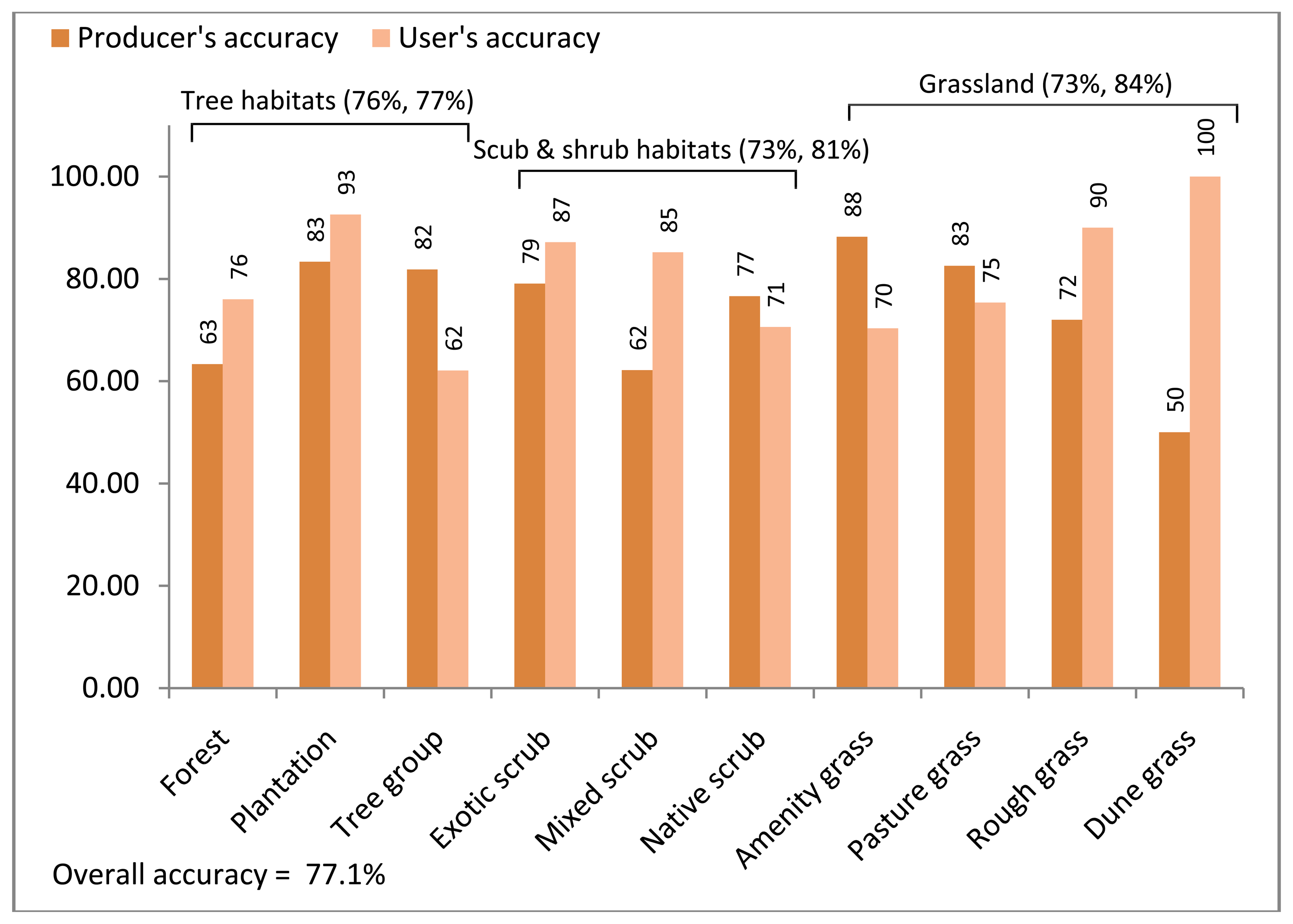

4. Results

- Classification with fifteen classes: κ = 0.52, Z-statistic = 17.5

- Classification with ten classes: κ = 0.74, Z-statistic = 25.2
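As a rough check on the ten-class figure, the overall κ coefficient can be recomputed from the diagonal counts and the row and column totals of the ten-class error matrix (354 reference samples). The sketch below assumes the standard Cohen's κ formula; the names `TEN_CLASS` and `overall_kappa` are illustrative. The Z-statistic is not recomputed here because it requires the variance of κ, which depends on the full matrix.

```python
# (correctly classified, row total, column total) per class,
# read from the ten-class error matrix.
TEN_CLASS = [
    (19, 25, 30),  # Forest
    (25, 27, 30),  # Plantation
    (18, 29, 22),  # Tree group
    (34, 39, 43),  # Exotic scrub
    (23, 27, 37),  # Mixed scrub
    (36, 51, 47),  # Native scrub
    (45, 64, 51),  # Amenity grass
    (52, 69, 63),  # Pasture grass
    (18, 20, 25),  # Rough grass
    (3, 3, 6),     # Dune grass
]


def overall_kappa(cells):
    """Cohen's kappa from diagonal counts and marginal totals."""
    n = sum(row for _, row, _ in cells)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(diag for diag, _, _ in cells) / n
    # Chance agreement: sum of products of matching marginals.
    expected = sum(row * col for _, row, col in cells) / n ** 2
    return (observed - expected) / (1 - expected)


kappa = overall_kappa(TEN_CLASS)
print(f"kappa = {kappa:.2f}")
```

With these counts the result rounds to the reported κ of 0.74.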

5. Discussion

5.1. Classification accuracy

5.2. Object-based approach and urban ecological mapping

6. Conclusion

Acknowledgments

| Level I - habitat type | Level II - class | Description |
|---|---|---|
| Tree habitats (avg. stem dbh > 0.1 m) | Bush and forest | Structure-rich tree stands, height > five meters |
| | Plantation | Exotic tree stands of uniform age, incl. shelterbelts |
| | Park/woodland | Scattered trees over grassland or scrub |
| | Tree group | Isolated group of trees, native and/or exotic, < one ha |
| Scrub habitats (avg. stem dbh < 0.1 m) | Exotic scrub | Closed canopy, non-native species |
| | Mixed scrub | Closed canopy, mixture of non-native & native species |
| | Native scrub | Closed canopy, native species |
| | Vineland | Scrub vegetation heavily covered by woody vines |
| Shrubland (avg. stem dbh < 0.1 m) | Exotic shrub | Open canopy, non-native species |
| | Mixed shrub | Open canopy, mixture of non-native & native species |
| | Native shrub | Open canopy, native species |
| Grassland | Amenity grassland | Intensively managed and regularly mown pasture |
| | Pasture grassland | Intensively managed and regularly grazed pasture |
| | Rough grassland | Irregularly managed grassland, including tussocks |
| | Dune grassland | Grassland on consolidated dunes |
| Non vegetation | House | Including farms (> 0.25 ha) |
| | Bare ground | Including bare soil, gravel, quarry, sand |
| | Road, sealed surface | Concrete (e.g. parking) |
| | Coastal water | |
| | Standing water | |

| Ground references | Tree habitats | Scrub habitats | Shrubland | Grassland | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Classification | |||||||||||||||||

| For | Par | Pla | Tre | Exo | Mix | Nat | Vin | Exo | Mix | Nat | Am | Past | Rou | Du | R | ||

| Tree Habitats | Forest | 14 (50) | 2 (33) | 16 | |||||||||||||

| Park/woodland | 2 (7) | 3 (50) | 2 (7) | 5 (27) | 1 (5) | 1 (6) | 1 (17) | 2 (10) | 1 (5) | 2 (8) | 20 | ||||||

| Plantation | 25 (83) | 25 | |||||||||||||||

| Tree group | 3 (11) | 1 (17) | 13 (68) | 17 | |||||||||||||

| Scrub habitats | Exotic scrub | 13 (65) | 2 (9) | 1 (5) | 16 | ||||||||||||

| Mixed scrub | 9 (53) | 4 (20) | 13 | ||||||||||||||

| Native scrub | 2 (12) | 10 (45) | 1 (17) | 13 | |||||||||||||

| Vineland | 4 (14) | 2 (10) | 2 (12) | 7 (33) | 2 (33) | 1 (4) | 1 (5) | 1 (5) | 20 | ||||||||

| Shrubland | Exotic shrub | 1 (4) | 2 (7) | 3 (15) | 1 (6) | 1 (17) | 13 (57) | 2 (10) | 10 (52) | 1 (2) | 2 (3) | 4 (16) | 40 | ||||

| Mixed shrub | 3 (10) | 1 (3) | 1 (5) | 1 (5) | 2 (9) | 9 (45) | 2 (10) | 1 (2) | 3 (12) | 23 | |||||||

| Native shrub | 1 (4) | 2 (12) | 3 (14) | 1 (17) | 5 (21) | 1 (5) | 5 (26) | 1 (2) | 2 (8) | 2 (33) | 23 | ||||||

| Grassland | Amenity grass | 1 (6) | 42 (82) | 8 (13) | 51 | ||||||||||||

| Pasture grass | 7 (14) | 52 (83) | 2 (8) | 61 | |||||||||||||

| Rough grass | 12 (48) | 1 (17) | 13 | ||||||||||||||

| Dune grass | 3 (50) | 3 | |||||||||||||||

| Column Total | 28 | 6 | 30 | 19 | 20 | 17 | 21 | 6 | 23 | 20 | 19 | 51 | 63 | 25 | 6 | 354 | |

| Ground references | Tree habitats | Scrub & shrub habitats | Grassland | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Classification | ||||||||||||

| Forest | Plantation | Tree group | Exotic scrub | Mixed scrub | Native scrub | Amenity grass | Pasture grass | Rough grass | Dune grass | Row Total | ||

| Tree habitats | Forest | 19 (63) | 1 (4) | 1 (2) | 3 (8) | 1 (2) | 25 | |||||

| Plantation | 25 (83) | 2 (5) | 27 | |||||||||

| Tree group | 5 (17) | 18 (82) | 1 (2) | 1 (3) | 2 (4) | 2 (8) | 29 | |||||

| Scrub & shrub habitats | Exotic scrub | 1 (3) | 34 (79) | 1 (3) | 2 (4) | 1 (2) | 39 | |||||

| Mixed scrub | 2 (7) | 1 (3) | 23 (62) | 1 (2) | 27 | |||||||

| Native scrub | 4 (13) | 2 (7) | 3 (7) | 3 (8) | 36 (77) | 2 (8) | 1 (17) | 51 | ||||

| Grassland | Amenity grass | 3 (14) | 3 (8) | 1 (2) | 45 (88) | 10 (16) | 1 (4) | 1 (17) | 64 | |||

| Pasture grass | 1 (3) | 2 (5) | 2 (5) | 4 (9) | 6 (12) | 52 (83) | 2 (8) | 69 | ||||

| Rough grass | 1 (3) | 18 (72) | 1 (17) | 20 | ||||||||

| Dune grass | 3 (50) | 3 | ||||||||||

| Column Total | 30 | 30 | 22 | 43 | 37 | 47 | 51 | 63 | 25 | 6 | 354 | |

| Level I - habitat type | Level II - class | Conditional κ value | Range * |
|---|---|---|---|
| Tree habitats | Forest | 0.74 | good |
| | Plantation | 0.92 | excellent |
| | Tree group | 0.60 | moderate |
| Scrub & shrub habitats | Exotic scrub | 0.85 | excellent |
| | Mixed scrub | 0.83 | excellent |
| | Native scrub | 0.66 | good |
| Grassland | Amenity grass | 0.65 | good |
| | Pasture grass | 0.70 | good |
| | Rough grass | 0.89 | excellent |
| | Dune grass | 1.00 | excellent |
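The conditional κ values can be reproduced from the ten-class error matrix using only each class's diagonal count, row total (samples classified as that class), and column total (ground references of that class). The sketch below assumes the usual conditional κ formula computed along the classification rows; the function and variable names are illustrative rather than taken from the study.

```python
N_SAMPLES = 354  # total number of reference samples

# class: (correctly classified, row total, column total)
TEN_CLASS = {
    "Forest": (19, 25, 30),
    "Plantation": (25, 27, 30),
    "Tree group": (18, 29, 22),
    "Exotic scrub": (34, 39, 43),
    "Mixed scrub": (23, 27, 37),
    "Native scrub": (36, 51, 47),
    "Amenity grass": (45, 64, 51),
    "Pasture grass": (52, 69, 63),
    "Rough grass": (18, 20, 25),
    "Dune grass": (3, 3, 6),
}


def conditional_kappa(diag, row_total, col_total, n):
    """Conditional kappa for one class, relative to its row total."""
    chance = row_total * col_total
    return (n * diag - chance) / (n * row_total - chance)


for name, (diag, row_total, col_total) in TEN_CLASS.items():
    value = conditional_kappa(diag, row_total, col_total, N_SAMPLES)
    print(f"{name}: {value:.2f}")
```

For Forest, for example, this gives (354 × 19 − 25 × 30) / (354 × 25 − 25 × 30) = 5976 / 8100 ≈ 0.74.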

| Level I - habitat type | Level II - class | Area (ha) | Percent (%) |
|---|---|---|---|
| Tree habitats | Forest | 77.5 | 2.4 |
| | Plantation | 40.0 | 1.2 |
| | Tree group | 281.1 | 8.6 |
| Scrub & shrub habitats | Exotic scrub | 57.8 | 1.8 |
| | Mixed scrub | 112.6 | 3.5 |
| | Native scrub | 385.2 | 11.8 |
| Grassland | Amenity grass | 502.2 | 15.4 |
| | Pasture grass | 390.4 | 11.9 |
| | Rough grass | 31.2 | 1.0 |
| | Dune grass | 6.6 | 0.2 |
| Total area vegetation (a) | | 1884.6 | 57.6 |
| Non vegetation | Built | 1204.8 | 36.8 |
| | Bare ground (Bare soil) | 3.6 | 0.1 |
| | Bare ground (Quarry, Gravel) | 43.7 | 1.3 |
| | Water | 131.8 | 4.0 |
| | Sand | 1.1 | 0.0 |
| Total area other habitats (b) | | 1385.0 | 42.4 |
| TOTAL AREA (a) + (b) | | 3269.6 | 100.0 |
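The subtotals of the area table can be verified with simple arithmetic. The sketch below sums the per-class areas and recomputes the two subtotal percentages; the dictionary names are illustrative.

```python
# Areas in hectares, taken from the table above.
VEGETATION_HA = {
    "Forest": 77.5, "Plantation": 40.0, "Tree group": 281.1,
    "Exotic scrub": 57.8, "Mixed scrub": 112.6, "Native scrub": 385.2,
    "Amenity grass": 502.2, "Pasture grass": 390.4,
    "Rough grass": 31.2, "Dune grass": 6.6,
}
OTHER_HA = {
    "Built": 1204.8, "Bare soil": 3.6, "Quarry, gravel": 43.7,
    "Water": 131.8, "Sand": 1.1,
}

vegetation_total = sum(VEGETATION_HA.values())  # subtotal (a)
other_total = sum(OTHER_HA.values())            # subtotal (b)
grand_total = vegetation_total + other_total

# Share of each subtotal in the mapped area, in percent.
vegetation_pct = round(100 * vegetation_total / grand_total, 1)
other_pct = round(100 * other_total / grand_total, 1)
print(f"vegetation: {vegetation_total:.1f} ha ({vegetation_pct} %)")
print(f"other: {other_total:.1f} ha ({other_pct} %)")
```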

© 2007 by MDPI (http://www.mdpi.org). Reproduction is permitted for noncommercial purposes.

Mathieu, R.; Aryal, J.; Chong, A.K. Object-Based Classification of Ikonos Imagery for Mapping Large-Scale Vegetation Communities in Urban Areas. Sensors 2007, 7, 2860-2880. https://doi.org/10.3390/s7112860