Locating Acoustic Events Based on Large-Scale Sensor Networks

Abstract

1. Introduction

2. Related Work

3. Background

3.1. Distributed Acoustic Source Localization (DSL)

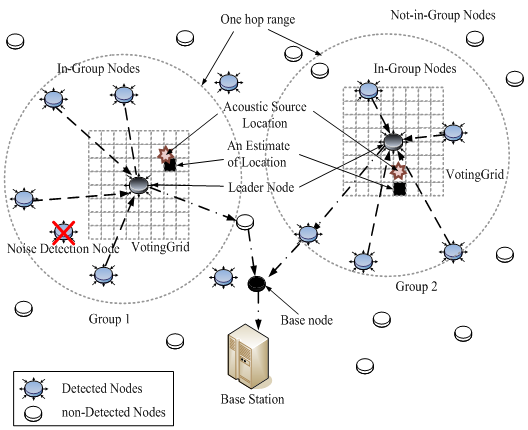

- Acoustic Source Detection: When an acoustic event occurs, all of the nodes that detect the event form a group and exchange information about their positions and detection times.

- Leader Election: The node with the earliest detection time is elected as the leader node. The leader node constructs an Essential Event Region (EER) centered on itself. The EER is a simplified, circular approximation of the Voronoi region, intended for resource-constrained WSN devices, and its diameter W is calculated as in Equation (1).

- Distributed Processing: Each node individually estimates the source location by comparing its position and detection time with those of the other members of the group. For each comparison, the partition line is the perpendicular bisector of the line segment joining the two nodes. The source should lie on the side of the node with the earlier detection time, so every cell of the Voting Grid on that side of the partition line scores a point. After all comparisons are completed, the node sends its voting result to the leader node.

- Confirmation: The leader node aggregates the voting results from other members in the group and confirms the final source location.
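The four steps above can be sketched in a single Python process as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The EER diameter W is passed in as a parameter because Equation (1) is not reproduced here. The Voting Grid resolution (`cell_size`), the tie-breaking rule (averaging the centers of all top-scoring cells) and all function names are hypothetical. A cell counts as lying on a node's side of the partition line when it is strictly closer to that node than to the other one. This is exactly the condition of lying on that node's side of the perpendicular bisector.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One group member's report: position in metres, detection time in seconds."""
    x: float
    y: float
    t: float


def elect_leader(group):
    """Leader Election: the member with the earliest detection time."""
    return min(group, key=lambda d: d.t)


def build_voting_grid(leader, diameter, cell_size):
    """Centers of square cells (hypothetical resolution cell_size) that lie inside
    the EER, modelled as a circle of diameter W centered on the leader."""
    r = diameter / 2.0
    n = math.ceil(r / cell_size)
    cells = []
    for i in range(-n, n + 1):
        for j in range(-n, n + 1):
            cx, cy = leader.x + i * cell_size, leader.y + j * cell_size
            if math.hypot(cx - leader.x, cy - leader.y) <= r:
                cells.append((cx, cy))
    return cells


def on_side_of(p, a, b):
    """True if point p is strictly on a's side of the perpendicular bisector
    of segment ab, i.e. strictly closer to a than to b."""
    da = (p[0] - a.x) ** 2 + (p[1] - a.y) ** 2
    db = (p[0] - b.x) ** 2 + (p[1] - b.y) ** 2
    return da < db


def node_vote(me, group, cells):
    """Distributed Processing: one member compares itself with every other member
    and gives a point to each cell on the earlier detector's side."""
    votes = [0] * len(cells)
    for other in group:
        if other is me or other.t == me.t:
            continue  # skip itself; equal times carry no ordering information
        earlier, later = (me, other) if me.t < other.t else (other, me)
        for k, cell in enumerate(cells):
            if on_side_of(cell, earlier, later):
                votes[k] += 1
    return votes


def confirm(group, diameter, cell_size):
    """Confirmation: the leader sums all members' votes over its Voting Grid."""
    leader = elect_leader(group)
    cells = build_voting_grid(leader, diameter, cell_size)
    totals = [0] * len(cells)
    # In the described scheme each member sends its vote array to the leader;
    # here that exchange is simulated inside one process.
    for member in group:
        for k, v in enumerate(node_vote(member, group, cells)):
            totals[k] += v
    best = max(totals)
    top = [c for c, s in zip(cells, totals) if s == best]
    # Hypothetical tie-break: average the centers of all top-scoring cells.
    return (sum(c[0] for c in top) / len(top), sum(c[1] for c in top) / len(top))


# Example: four nodes at the corners of a 10 m square and a source at (2, 3).
# Detection times assume a 343 m/s speed of sound; W = 10 m is an arbitrary
# stand-in for the value Equation (1) would give.
SPEED_OF_SOUND = 343.0
source = (2.0, 3.0)
group = [
    Detection(x, y, math.hypot(x - source[0], y - source[1]) / SPEED_OF_SOUND)
    for x, y in [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
]
estimate = confirm(group, diameter=10.0, cell_size=0.5)
print("estimated source location:", estimate)
```

Every member compares itself with every other member, so each pair of nodes is voted on twice. This doubles all scores uniformly and does not change which cells win. The winning cells are those whose distance ordering to the nodes matches the order of detection times. The estimate is therefore limited by how well the EER and the node layout constrain that region.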

3.2. Problems

4. Distributed Acoustic Source Localization in Large-Scale Sensor Networks

4.1. System Overview

4.2. Algorithm

4.2.1. Possible Event Region

4.2.2. Noise Removal

4.2.3. Virtual Leader Removal

5. Simulation

6. Experiments

6.1. Experimental Setup

6.2. Detection Time Error

6.3. Localization Accuracy and Response Time

6.4. Results in a Multi-Hop Environment

7. Conclusions

Acknowledgments


© 2009 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Yungeun Kim, Junho Ahn, and Hojung Cha. Locating Acoustic Events Based on Large-Scale Sensor Networks. Sensors 2009, 9(12), 9925–9944. https://doi.org/10.3390/s91209925