1. Introduction

Injection liquid clarity is a vital index in clinical fluid therapy. Visible foreign particles that cannot be metabolized by the human body are prohibited in injection liquids [1]. However, foreign substances such as rubber chips, chemical fibers, glass fragments, calcium carbonate and other crystalline particles [2] appear due to problems with injection bottle quality, packing procedures, collisions, filtration or filling. When injected into a vein, such particles can cause thrombus, phlebitis, tumors, anaphylactic reactions or even death. Foreign particles in injection liquids are therefore a matter of social concern that is often reported in the mass media. It was reported recently that more than 75% of medicine recalls were related to the presence of foreign substances. Pharmaceutical corporations have been taking active measures against this problem to avoid damage to their public image and economic losses caused by recalls. Nevertheless, according to statistics [3] compiled by the China Pharmaceutical Industry Association (CPIA), 99.6% of pharmaceutical corporations in China use a light inspection method carried out by workers [1]: inspectors place the injection bottle under a special lamp housing, rotate and invert it gently to suspend any visible foreign substance present in the transfusion, and then decide whether it is acceptable based on their inspection experience. This method is simple, but its efficiency and repeatability are poor, and omission rates rise as workers tire.

A visible foreign particle inspection system was previously developed and has been used on an actual production line [4,5]. In this system, an injection container such as an ampoule is rotated at high speed and stopped abruptly, so that the liquid inside forms a vortex due to inertia. The moving foreign substances can then be distinguished from stationary scratches on the surface of the ampoule by image processing techniques. Detection in rectangular plastic bottles of medicinal solutions based on real-time image processing is proposed in [6]. Some experiments have been performed on discriminating particles by size and shape [7] and on visualizing the spatial behavior of particles [8]. Developmental research on detecting impurity particles in a water supply is described in [9].

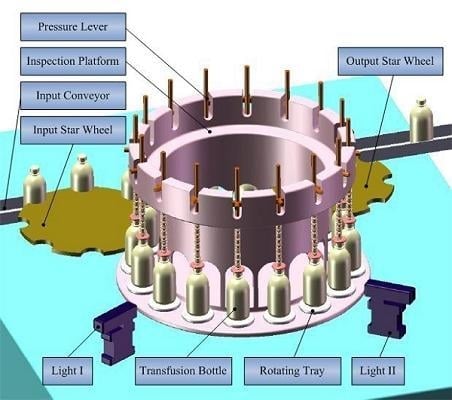

Currently we are developing an Automatic Inspection Machine (AIM) equipped with CCD cameras. Because an ampoule or a vial is small, simple in shape and smooth-surfaced, it is easy to gather any foreign substances at the center by forming a vortex in the solution and thus to distinguish them from scratches and other surface defects of the container. In our machine, however, the detection target is mass transfusion, which is commonly contained in a glass or plastic bottle. The cross section of such a bottle may be circular, oval, rectangular or hexagonal, and its surface may carry embossed symbols and graduations, which makes it difficult to create a vortex and to distinguish foreign particles from uneven surface features.

Hence, image segmentation and recognition of foreign particles is the key module of the AIM. A large number of image segmentation techniques are currently available. Basic algorithms such as thresholding, edge detection and region growing are described in [10]. Although simple to implement, these methods usually have serious shortcomings in practical situations. The most obvious case is thresholding, which can only segment an image if the segments occupy non-overlapping pixel intensity ranges, which is rarely the case. Edge detection and region growing can tolerate some intensity overlap between segments, but can fail if the edges between the segments are not sharp enough. Several newer and more advanced techniques have been proposed to address these problems. One interesting class is the graph-based methods [11], which have yielded several impressive results [12,13].

A pulse coupled neural network (PCNN) is a biologically inspired type of neural network, based on experimental observations of synchronous pulse bursts in the visual cortex of the cat and the monkey [14]. Compared with classical artificial neural network models, a PCNN can be applied to image processing without a training process. However, it requires a number of numerical control parameters to be set properly, and it is usually not known a priori how to select the best values for a given application. The optimum values typically depend on attributes of the images to be segmented, e.g. pixel intensity ranges, contrasts and noise levels. As a consequence, values that are successful for one set of images may not be useful for other images with different intensity ranges, contrasts and noise levels. A number of solutions have been suggested to address this problem, including the use of adaptive connection weights between neighboring neurons [15], automatic adaptation by genetic algorithms [16], reinforcement learning [17] and neural networks [18].

This paper proposes an adaptive segmentation method based on a modified PCNN, in which multiple thresholds are determined by the water region area of the image histogram, so that the control parameters are obtained adaptively. A number of injection images are segmented with this method. The inspection machine then judges whether an injection is acceptable according to the continuity and smoothness of the moving traces of the extracted objects. Furthermore, an improved spin/stop technique for controlling the motors and illumination styles selected through a large number of experiments are applied to reduce the influence of air bubbles. The experimental results show that the inspection machine is superior to proficient workers and can detect visible foreign particles effectively with satisfactory speed and accuracy.

The rest of the paper is organized as follows. Section 2 introduces the architecture of the PCNN model. Section 3 gives an overview of the intelligent inspection machine. Section 4 presents the key algorithms for foreign substance detection. Section 5 is devoted to the experiments and the analysis of their results. Section 6 gives the conclusions.

2. Architecture of the PCNN Model

The PCNN is a biologically inspired type of neural network, which is based on the experimental observations of synchronous pulse bursts in the cat's visual cortex by Eckhorn et al. and was adapted for image processing by Johnson [19].

2.1. PCNN Neuron Model and Parameters Determination

The PCNN is significantly different from other artificial neural network models in both its structure and operation. There is no training phase involved. Each neuron in the processing layer is directly tied to an input, in this case an image pixel or a set of neighboring image pixels. These are the feeding inputs, and they are also linked to nearby neurons, the linking inputs. The feeding inputs are iteratively processed together with the linking inputs, producing a pulse train. The PCNN neuron consists of three parts [19,20]: the dendritic tree, the linking modulation, and the pulse generator, as shown in Figure 1.

The corresponding neuron's functions are:
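For orientation, the PCNN of [19,20] is usually written in the literature in the standard form below, which uses exactly the symbols defined in the list that follows. This is a sketch of the common textbook formulation under that assumption, and the indexing of the threshold term in particular varies between authors.

```latex
\[
\begin{aligned}
F_{ij}[n] &= e^{-\alpha_F} F_{ij}[n-1] + V_F \sum_{k,l} M_{ijkl}\, Y_{kl}[n-1] + S_{ij} \\
L_{ij}[n] &= e^{-\alpha_L} L_{ij}[n-1] + V_L \sum_{k,l} W_{ijkl}\, Y_{kl}[n-1] \\
U_{ij}[n] &= F_{ij}[n]\,\bigl(1 + \beta\, L_{ij}[n]\bigr) \\
Y_{ij}[n] &= \begin{cases} 1, & U_{ij}[n] > \theta_{ij}[n-1] \\ 0, & \text{otherwise} \end{cases} \\
\theta_{ij}[n] &= e^{-\alpha_\theta}\, \theta_{ij}[n-1] + V_\theta\, Y_{ij}[n]
\end{aligned}
\]
```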

where,

Fij - feeding input;

Lij - linking input;

Uij - internal state;

Sij - external stimulus;

θij - dynamic threshold;

M, W - synaptic weight matrices of the neuron;

VF, VL and Vθ - normalizing constants;

αF, αL and αθ - negative decay constants of the leaky integrators;

β - linking strength between neurons;

n - iteration number;

Yij - output.

A PCNN is a two-dimensional non-training neural network in which each neuron in the network corresponds to one pixel in an input image. The neuron receives its input (e.g. intensity) as an external stimulus. However, each neuron also connects with its neighboring neurons, receiving local stimuli from them. These stimuli are combined in an internal activation system, and are accumulated until they exceed a dynamic threshold. This will result in a pulse output, which is called natural fire. Through an iterative process, the algorithm produces a temporal series of binary images as outputs. Due to the linking of neighboring neurons, segments of the image consisting of pixels of similar intensity values tend to pulse together, which is called captured fire. The output of a PCNN can therefore be used for image segmentation by taking the pixels corresponding to synchronously pulsing neurons as segments.

Nevertheless, the performance of PCNN-based image segmentation depends on suitable PCNN parameters. The various threshold parameters of its mathematical model must be adjusted manually to achieve optimum processing. As Karvonen [21] noted, a very large data set would be required to optimize the PCNN parameters, which is infeasible in most applications. Hence, to determine the PCNN parameters adaptively, this paper proposes an adaptive segmentation method based on a modified PCNN, in which multiple thresholds are determined by the water region area of the histogram. The algorithm is computationally simple and can be used for real-time detection of foreign particles in injections. The detailed process is given as follows.

2.2. Multi-Threshold Acquisition Using “Water Region Area” Method

A novel algorithm for automatic threshold detection in image histograms is proposed. Following the intuitive shape of the histogram, its peaks are regarded as watersheds, and each valley consists of two neighboring peaks and one valley bottom point. We call the maximal water capacity of a valley its “water region area”.

Step 1: Compute the image histogram and normalize it.

Step 2: Find all peaks and valley bottom points in the histogram.

Step 3: Calculate the water region area, starting from the leftmost valley bottom point. Define a limit θ within [0.01, 0.05]; the smaller θ is, the more threshold points are obtained. When the water region area is larger than θ, the corresponding valley bottom point is kept in the threshold array Tm and the corresponding left-side peak point is kept in the peak point array Pm. Otherwise the valley is treated as invalid. In that case, compare the heights of the two peaks on either side of the valley:

If the left peak is higher than the right one, it is kept as the new left peak point, the next peak to the right becomes the new right peak point, and the lower of the current and the next valley bottom points becomes the new valley bottom point.

Otherwise, the right peak point, the right valley bottom point and the next right peak point become the new left peak point, the new valley bottom point and the new right peak point respectively. The water region area of the new valley is then calculated again.

Step 4: Repeat Step 3 until all valley bottom points have been processed. This yields the threshold array Tm (m = 1, …, M and T1 < … < TM) and the corresponding peak array Pm (m = 1, …, M+1 and P1 < … < PM+1). Hence, a valid valley Vm includes two neighboring peaks {Pm, Pm+1} and a threshold Tm (Pm < Tm < Pm+1).

Figure 12 in Section 5 shows water regions and corresponding thresholds of images with black and white foreign particles respectively.
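Steps 1 to 4 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, and the "maximal water capacity" of a valley is taken to be the area between the normalized histogram and a water level equal to the lower of the two bounding peaks.

```python
def lowest_bin(h, a, b):
    """Index of the lowest histogram bin strictly between peaks a and b."""
    return min(range(a + 1, b), key=h.__getitem__)


def water_region_thresholds(hist, theta=0.02):
    """Return (thresholds, peaks) as lists of histogram bin indices.

    Assumption: the water region area of a valley is the area that would be
    filled up to the height of the lower of its two bounding peaks.
    """
    total = float(sum(hist))
    h = [v / total for v in hist]          # Step 1: normalize
    n = len(h)

    def is_peak(i):
        left = h[i - 1] if i > 0 else -1.0
        right = h[i + 1] if i < n - 1 else -1.0
        return h[i] > left and h[i] >= right

    peaks = [i for i in range(n) if is_peak(i)]   # Step 2
    if len(peaks) < 2:
        return [], peaks

    thresholds, kept_peaks = [], []
    left, bottom = peaks[0], lowest_bin(h, peaks[0], peaks[1])
    for k in range(1, len(peaks)):                # Steps 3 and 4
        right = peaks[k]
        level = min(h[left], h[right])
        area = sum(max(0.0, level - h[i]) for i in range(left, right + 1))
        valid = area > theta
        if valid:
            thresholds.append(bottom)
            kept_peaks.append(left)
        nxt = lowest_bin(h, right, peaks[k + 1]) if k + 1 < len(peaks) else None
        if valid or h[left] <= h[right]:
            # Start a new valley at the right peak.
            left, bottom = right, nxt
        elif nxt is not None and h[nxt] < h[bottom]:
            # Invalid valley with a higher left peak: merge with the next
            # valley and keep the lower of the two bottoms.
            bottom = nxt
    kept_peaks.append(left)
    return thresholds, kept_peaks
```

With a value of `theta` inside the range given in Step 3, a shallow ripple on top of a histogram mode is merged into its neighbor, while a deep valley between two modes yields one threshold.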

2.3. Modified PCNN Model

Considering the application to foreign particle segmentation in injections, the PCNN model we apply is a modification of the original PCNN [19], adapted slightly from Karvonen's model [21], and is implemented by iteratively applying the equations:

where Tij is a threshold value taken from the set of fixed thresholds Tm (m = 1, …, M) determined by the water region area method described above. The linking weight wijkl is determined by Function (9), which, after comparison over many experiments, was found to agree best with the human vision system (HVS):

where dijkl is the linking distance between neurons (i, j) and (k, l), as shown in Figure 2.

Starting with the largest threshold TM, objects whose mean gray value is larger than TM are picked out in the first iteration. The threshold TM is kept fixed during the following iterations until no further firing occurs. After this first iteration loop, both the naturally firing pixels and the captured firing pixels are collected; they form the first-level PCNN segmented objects, which have the largest gray values. The second-level objects are then obtained by the same algorithm using threshold TM-1. This process is repeated until all thresholds have been processed.
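The level-by-level loop described above can be sketched as follows. This is a simplified illustration, not the paper's model: the feeding input is assumed to equal the pixel intensity, the internal state is assumed to take the usual form U = F(1 + βL), the linking weights are a hypothetical stand-in for Function (9) (inverse squared distance over the 8-neighborhood, normalized so that the largest linking value is 1), and `modified_pcnn_segment` and its arguments are illustrative names.

```python
def modified_pcnn_segment(image, thresholds, betas):
    """Label pixels by the threshold level at which they fire.

    image: 2D list of gray values; thresholds and betas: equal-length lists.
    Returns a 2D list: 0 = never fired, 1 = fired at the largest threshold, ...
    """
    rows, cols = len(image), len(image[0])
    # Hypothetical linking weights (stand-in for Function (9)).
    offsets = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1)
               if (di, dj) != (0, 0)]
    raw = {(di, dj): 1.0 / (di * di + dj * dj) for di, dj in offsets}
    total = sum(raw.values())
    weights = {offset: value / total for offset, value in raw.items()}

    labels = [[0] * cols for _ in range(rows)]
    levels = sorted(zip(thresholds, betas), reverse=True)  # largest T first
    for level, (threshold, beta) in enumerate(levels, start=1):
        fired = [[False] * cols for _ in range(rows)]
        changed = True
        while changed:  # keep the threshold fixed until nothing new fires
            changed = False
            previous = [row[:] for row in fired]
            for i in range(rows):
                for j in range(cols):
                    if labels[i][j] or previous[i][j]:
                        continue
                    # Linking input from neighbors that fired at this level.
                    link = sum(w for (di, dj), w in weights.items()
                               if 0 <= i + di < rows and 0 <= j + dj < cols
                               and previous[i + di][j + dj])
                    if image[i][j] * (1.0 + beta * link) > threshold:
                        fired[i][j] = True  # natural or captured firing
                        changed = True
        for i in range(rows):
            for j in range(cols):
                if fired[i][j]:
                    labels[i][j] = level
    return labels
```

In this sketch a pixel slightly below the threshold is captured only when enough of its neighbors have already fired at the same level, which is the behavior the text calls captured firing.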

Pixels whose intensities are smaller than the peak point Pm ought not to be captured at Tm even if they have the largest linking value of 1, so in the iteration loop at Tm the value of βm is:

Because the smallest peak point Pm may be 0, we set the corresponding βm to a value between 0.1 and 0.3 in this case.
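The constraint on βm can be made explicit with a short derivation. It assumes that the internal state has the usual linking-modulation form U = F(1 + βL) with the feeding input F equal to the pixel intensity, which may differ in detail from the paper's own expression.

```latex
\[
\begin{aligned}
&\text{A pixel of intensity } S_{ij} < P_m \text{ with maximal linking } L_{ij} = 1
  \text{ must not fire at } T_m: \\
&\qquad S_{ij}\,(1 + \beta_m) \le T_m \quad \text{for all } S_{ij} < P_m
  \;\Longrightarrow\; P_m\,(1 + \beta_m) \le T_m , \\
&\text{and taking the largest admissible value gives} \\
&\qquad \beta_m = \frac{T_m}{P_m} - 1 .
\end{aligned}
\]
```

Since Pm < Tm, this βm is positive, and it is undefined for Pm = 0, which is consistent with the fixed fallback range stated above.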

4. Key Algorithms of Foreign Substances Detection

The majority of injection bottles used in China do not strictly meet quality standards. Fingerprints, graduations and dust can sometimes be seen on the surface of the bottles. Even foreign substances or bubbles, shown in Figure 8(a), can be found inside the glass walls, which makes detection much more difficult. In a single image, the foreign particles lack structural information and distinct characteristics; in particular, they are small and usually occupy only a few to a few dozen pixels. It is hard to distinguish them from noise (lights) and disturbances (dust or graduations) on the basis of their gray values alone. Hence, dedicated foreign particle detection algorithms are needed. Foreign substances in liquid have two obvious properties: their moving trace is continuous over time, and there is a certain gray value difference between foreign particles and the background. A sequence image difference algorithm based on spatiotemporal continuity is therefore proposed: it removes static disturbances in the background, extracts objects with the modified PCNN, and makes the judgment according to the trace. A detection flowchart is shown in Figure 9.

4.1. Image Preprocessing

In an image containing foreign objects, the majority of the pixels belong to the background, which forms the slowly varying low-frequency part, while the foreign particles form the high-frequency part and are uncorrelated with the background. Hence, the low-frequency components can be suppressed with a high-pass filter that lets the high-frequency components pass, although some high-luminance noise is retained.

An M×N image can be regarded as composed of a large low-frequency background and some high-frequency parts. Suppose the captured sequence images are:

where p(x, y; ti) is the ideal background and q(x, y; ti) is the image detail, including high-frequency noise and foreign particles. According to the convolution theorem:

where F(u, v; ti) is the Fourier transform of the original image, H(u, v; ti) is the transfer function, and G(u, v; ti) is the Fourier transform of the high-pass filtered output image; applying the inverse transform to G gives the enhanced image. A Butterworth high-pass filter is applied in this article.
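The filtering step can be sketched with NumPy's FFT routines. The sketch assumes the frequency-domain product G = H·F implied by the definitions above and the standard Butterworth high-pass transfer function H(u, v) = 1 / (1 + (D0 / D(u, v))^(2n)), where D is the distance from the zero frequency. The cutoff D0 (`cutoff`) and order n (`order`) are illustrative parameters; the values used by the authors are not stated.

```python
import numpy as np


def butterworth_highpass(image, cutoff=10.0, order=2):
    """Return the high-pass filtered version of a 2D gray image."""
    image = np.asarray(image, dtype=np.float64)
    rows, cols = image.shape
    # Integer frequency indices in the same (unshifted) layout as np.fft.fft2.
    u = np.fft.fftfreq(rows) * rows
    v = np.fft.fftfreq(cols) * cols
    dist = np.sqrt(u[:, None] ** 2 + v[None, :] ** 2)
    # Algebraically equal to 1 / (1 + (cutoff / dist)**(2 * order)),
    # written so that dist == 0 needs no special case (H = 0 there).
    ratio = (dist / cutoff) ** (2 * order)
    transfer = ratio / (1.0 + ratio)
    spectrum = np.fft.fft2(image)                       # F(u, v)
    return np.real(np.fft.ifft2(spectrum * transfer))   # inverse of H * F
```

A uniform background is removed entirely because the transfer function is zero at the zero frequency, while a small bright particle, which is spread over many frequencies, is largely preserved.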

4.2. Sequential Images' Difference

Let P1, P2, …, Pn be the n original frames and T1, T2, …, Tn the filtered sequence. Each frame is subtracted from the frame that follows it, which gives the (n-1)-frame sequence C1, C2, …, Cn-1. Only noise and foreign objects remain in the difference images:

To avoid the case in which a moving object shows only a tiny change of position between two frames, the camera's external asynchronous capture mode is employed. A PLC sends trigger pulses every 200 milliseconds.

Figure 8(d) shows the absolute difference between two successive frames.
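The differencing step is short enough to show in full. The sketch below assumes the absolute difference of successive filtered frames, as in Figure 8(d); `frame_differences` is an illustrative name.

```python
import numpy as np


def frame_differences(filtered_frames):
    """Absolute difference of each filtered frame and the one before it.

    filtered_frames: sequence of n equally sized 2D arrays.
    Returns an array of n - 1 difference images.
    """
    stack = np.asarray(filtered_frames, dtype=np.float64)
    return np.abs(stack[1:] - stack[:-1])
```

A static scratch has the same gray value in every frame and cancels out, whereas a moving particle leaves a response at both its old and its new position.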

As the difference image shows, small particles do exist in the glucose injection liquid. However, injection liquids are chemical liquids that also contain some tiny medicinal powder. Reference [1] defines detailed requirements on the dimensions of small objects that determine whether they are acceptable. To decide whether the bright spots shown in Figure 8(d) are foreign substances, noise or acceptable tiny medicinal powder, more accurate object outlines must be obtained.

4.3. Foreign Substance Segmentation Based on Modified PCNN

The difference images are segmented directly with the modified PCNN, omitting the energy accumulation method described in [22], which improves the detection speed effectively.

4.4. Feature Extraction and Foreign Particles Judgment

To recognize multiple objects (shown in Figure 11), every particle in the sequence images must be labeled and invariant features must be extracted for it. The selected features should be invariant to geometric transformations of the image such as motion, rotation and translation, so that good matching results can be achieved. Three values, calculated in Euclidean space and selected after many experiments, show good invariance:

Ratio of circumference to area, λ1: λ1 = Li/Si, where Li is the circumference and Si is the area of the particle.

Length-width ratio, λ2: for each object, find the minimum circumscribed rectangle Ri with length ai and width bi; then λ2 = ai/bi.

Compactness, λ3: λ3 = Si/(ai*bi).

Furthermore, the coordinates (Cix, Ciy) of the centroids of the particles and of the remaining noise are calculated, where f(x, y) is the gray value:
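The three features and the centroid can be computed from one segmented object as in the sketch below. Several simplifications are assumptions rather than the authors' method: the circumference is counted as the number of exposed pixel edges, the minimum circumscribed rectangle is approximated by the axis-aligned bounding box, and the centroid is taken to be gray-value weighted. `particle_features` is an illustrative name.

```python
def particle_features(pixels, gray):
    """Features of one segmented object.

    pixels: set of (row, col) positions belonging to the object.
    gray: 2D list of gray values f(x, y), indexed as gray[row][col].
    """
    area = len(pixels)
    # Circumference approximated by the number of pixel edges on the outline.
    perimeter = sum((r + dr, c + dc) not in pixels
                    for r, c in pixels
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)))
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    a, b = max(height, width), min(height, width)   # length >= width
    mass = sum(gray[r][c] for r, c in pixels)
    cx = sum(c * gray[r][c] for r, c in pixels) / mass
    cy = sum(r * gray[r][c] for r, c in pixels) / mass
    return {
        "lambda1": perimeter / area,   # circumference / area
        "lambda2": a / b,              # length / width
        "lambda3": area / (a * b),     # compactness
        "centroid": (cx, cy),          # (Cix, Ciy), origin at top left
    }
```

For a rotated elongated particle the axis-aligned box overestimates the circumscribed rectangle, so a rotating-calipers or similar routine would be needed to follow the definition of Ri exactly.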

Because λ1, λ2 and λ3 are nearly invariant, they change very little across the image sequence. Hence, the points with the shortest distance between their feature values in successive images are the best matches. The distance is calculated with Formula (15). We record the coordinates of the corresponding points and connect them into a line:

From the moving trace of an object, we can judge whether it is a foreign substance or an artifact of noise by two principles:

Images are captured while the bottle is kept static. At this moment, foreign particles are falling, so the ordinates of their centroids increase (with the origin of coordinates in the top left corner).

Traces formed by particles are smooth, while those generated by noise are unordered.

So, a smooth trace with increasing ordinates indicates the presence of a foreign particle.
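The matching and the two judgment principles can be sketched as below. The sketch rests on assumptions: the feature distance is taken to be the Euclidean distance in (λ1, λ2, λ3), which may differ from Formula (15), and smoothness is tested with a hypothetical criterion, namely that the heading of the trace changes by less than `max_turn` radians between successive steps.

```python
import math


def match_particle(features, candidates):
    """Index of the candidate whose (lambda1, lambda2, lambda3) is closest."""
    return min(range(len(candidates)),
               key=lambda k: math.dist(features, candidates[k]))


def is_foreign_particle(trace, max_turn=1.0):
    """Judge a trace of (x, y) centroids, with the origin at the top left.

    Returns True when the ordinate increases at every step (the object is
    falling) and the trace is smooth under the assumed heading criterion.
    """
    if len(trace) < 3:
        return False
    steps = [(x2 - x1, y2 - y1)
             for (x1, y1), (x2, y2) in zip(trace, trace[1:])]
    falling = all(dy > 0 for _, dy in steps)
    headings = [math.atan2(dy, dx) for dx, dy in steps]
    smooth = all(abs(b - a) < max_turn
                 for a, b in zip(headings, headings[1:]))
    return falling and smooth
```

A trace such as (10, 5), (11, 9), (12, 14), (12, 20) is accepted, while a trace that moves upward at any step or zigzags sharply from side to side is attributed to noise.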

6. Conclusions

This article describes a real-time, vision-based automatic intelligent inspection system for foreign particles in injection liquids. Obstructions to detection caused by surface unevenness such as scratches, embossed symbols and graduations on the bottle are removed by sequence image processing. The parameters of the PCNN model are obtained adaptively from the water region area of the histogram, and the segmentation results are effective: the method can segment images even when there is considerable overlap in the intensity ranges of adjacent regions. The inspection machine judges whether an injection is qualified according to the continuity and smoothness of the moving traces of the extracted objects.

The correct detection rate, confirmed through a large number of experiments, is about 99.1% or higher. Other tests show that the detection performance of the intelligent inspection machine on injections is superior to that of proficient workers.

Future work may focus on pharmaceutical management guided by the inspection machine and on designing a higher-precision servo drive system to reduce the influence of vibration from the mechanical system. More suitable illumination styles and different image processing algorithms should also be explored on the current inspection platform.