Effects of Scale, Question Location, Order of Response Alternatives, and Season on Self-Reported Noise Annoyance Using ICBEN Scales: A Field Experiment

Abstract

1. Introduction

- Type of contact: The ICBEN recommendation states that both annoyance scales can be used irrespective of the type of contact (either personal, self-administered/by postal mail, or via telephone).

- Type of question and scale: The numeric 11-point scale is believed to provide greater assurance that the scale points are equally spaced and hence to be suitable for linear regression analysis, whereas the 5-point verbal scale is suggested when communication between respondents and policy makers is in the foreground [9]. Nevertheless, in any given study, it is up to the researcher to decide which question’s results (5-point or 11-point) to use for formulating exposure–response relationships for annoyance and for the percentage of highly annoyed persons (%HA), that is, which results are regarded as the “valid” outcome of the study. ICBEN recommends always using both questions. However, it has not yet been investigated whether the sequence in which the two questions are presented (5-point question first, then 11-point question, or vice versa) affects answering behavior.

- Order of response alternatives (scale points): In psychological method studies, the order in which response alternatives are presented has been shown to influence the answering behavior of respondents [10]. While the ICBEN recommendation for the 5-point verbal scale is to present it with the highest intensity (“extremely”) at the top/left, the corresponding International Organization for Standardization (ISO) standard [11] recommends the opposite, namely to put the lowest intensity (“not at all”) at the top/left of the scale. Similarly, to save space on a self-administered questionnaire, the 5-point verbal question/scale is usually presented horizontally, not vertically as recommended by both ICBEN and ISO. It is not yet known whether, and to what degree, such differences in question presentation affect response behavior.

- Location/placement of annoyance questions: The place where questions appear in a questionnaire can affect the way in which respondents interpret and thus answer them [10,12]. ICBEN generally recommends placing the annoyance questions early in the interview/questionnaire. However, to our knowledge, no studies have yet empirically tested the effect of the location of the ICBEN annoyance questions. A questionnaire may contain many other noise-related questions, so it is important for the researcher to know whether respondents answer the annoyance questions differently depending on whether these are asked before or after a range of other noise-related questions.

- Season: There is no clearly preferable time of the year for carrying out noise annoyance surveys that inform general noise policy decisions, but surveys are seldom carried out in the middle of winter or during the peak of the summer holiday season. In most general noise surveys, the idea is that respondents rate their average long-term noise annoyance, i.e., over the preceding one-year period. This is also expressed in the aforementioned ICBEN standard questions, both of which begin with “Thinking about the last 12 months...”. However, despite this clear instruction, meteorological conditions, and possibly other time-of-year-related circumstances unrelated to weather, may still affect annoyance responses at the time of a survey. The available evidence of seasonal effects has been analyzed by Miedema et al. [2], who suggest that previous studies’ estimates of long-term noise annoyance reactions may have been affected by the time of the year when residents were interviewed, with higher annoyance in warmer seasons.

2. Methods

2.1. Overview

2.2. Survey Design

2.2.1. Sample Size Estimation

2.2.2. Sampling Strategy

2.2.3. Noise Exposure Assessment

2.2.4. Factorial Design

2.2.5. Meteorological Data

2.2.6. Questionnaire

2.3. Statistical Analysis

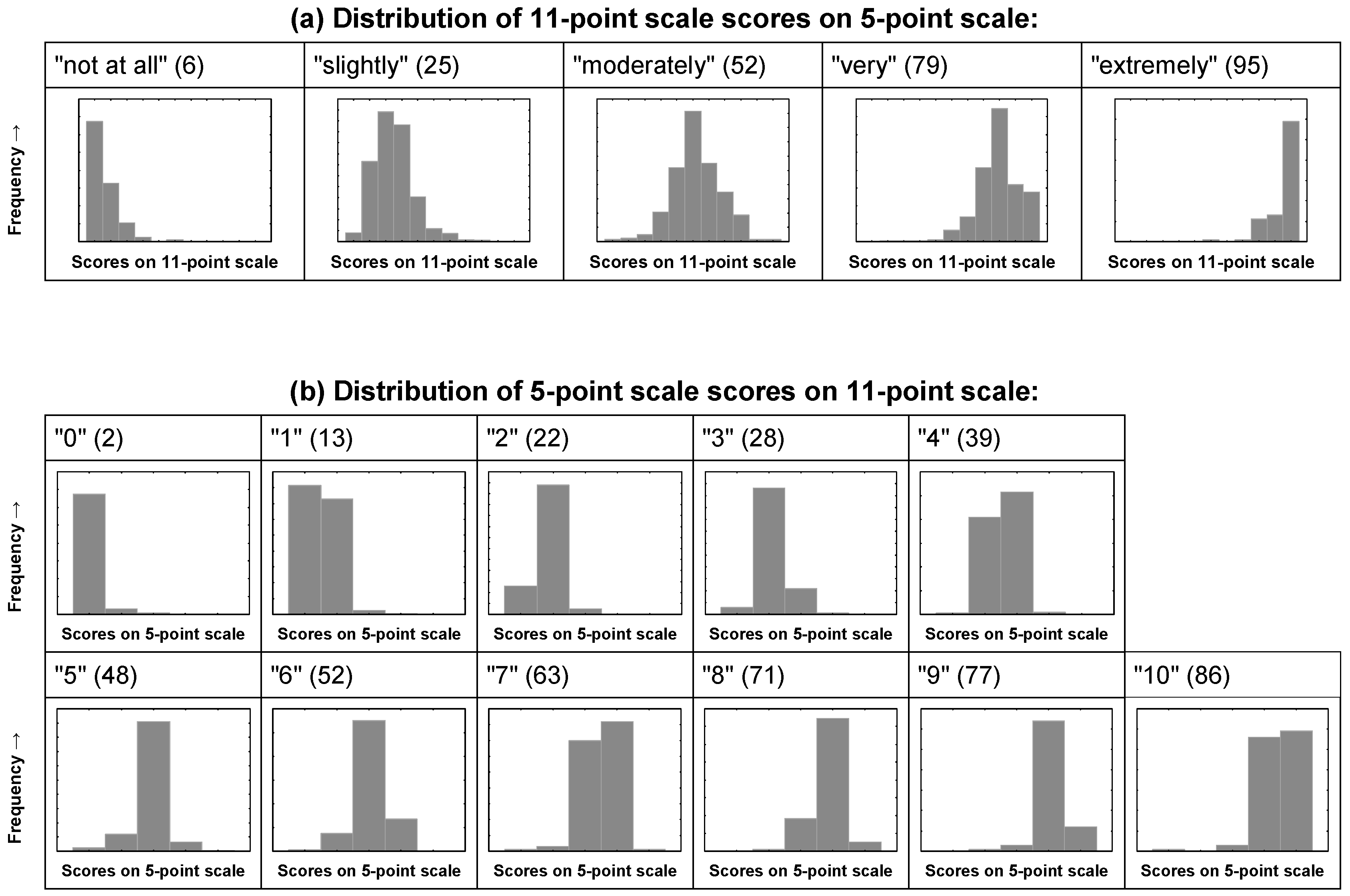

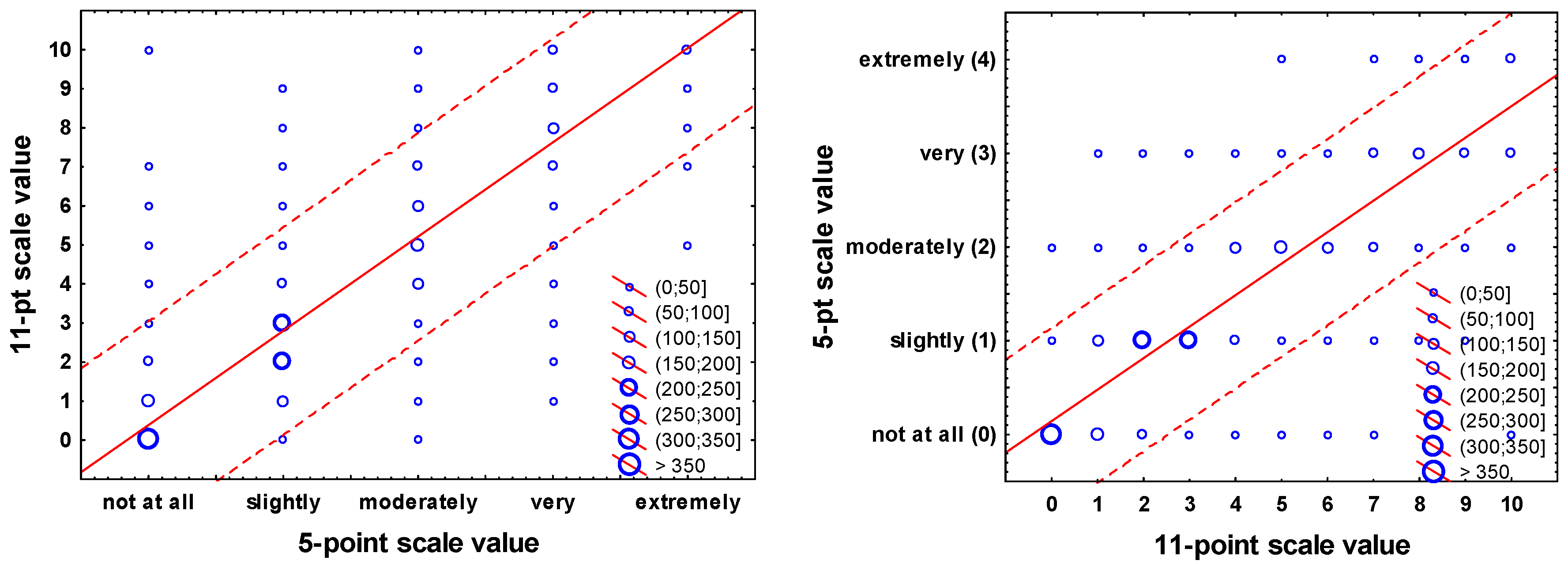

2.3.1. Scale Conversions

2.3.2. Analysis of Agreement and Statistical Modeling

3. Results

3.1. Response Statistics and Sample Characteristics

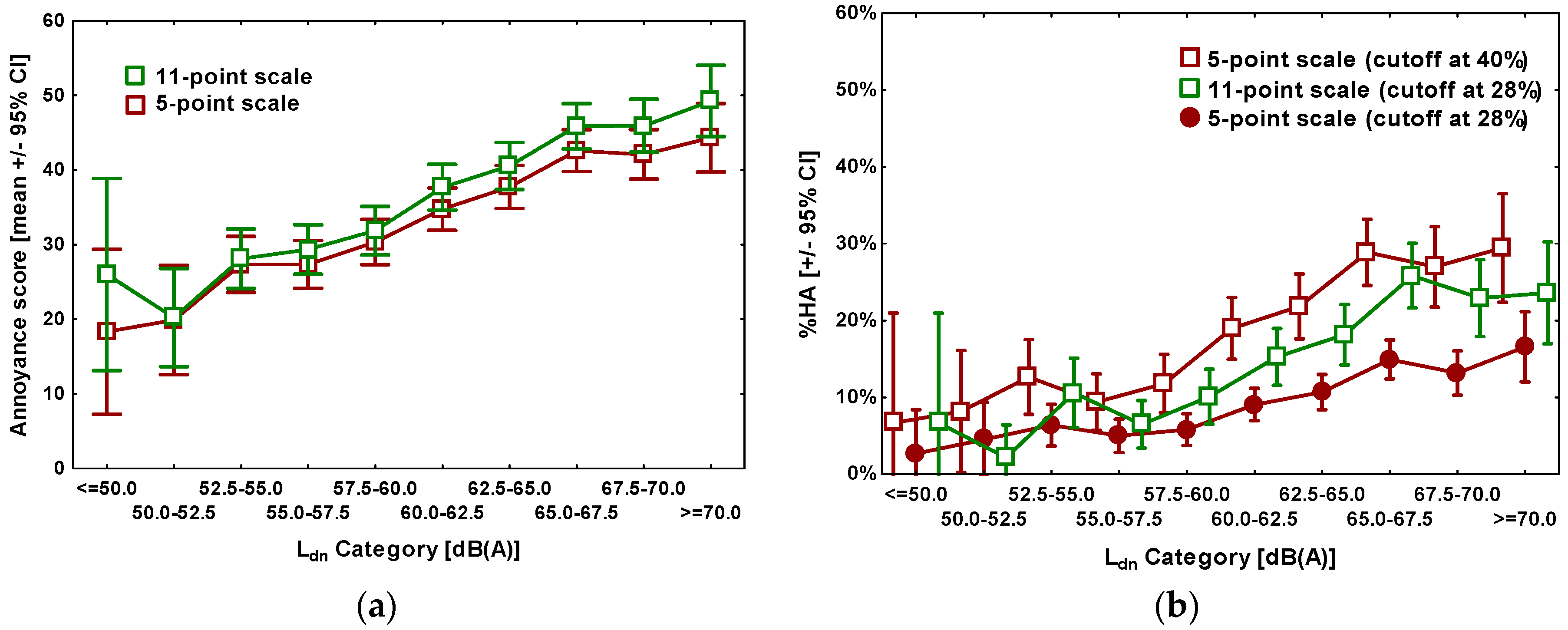

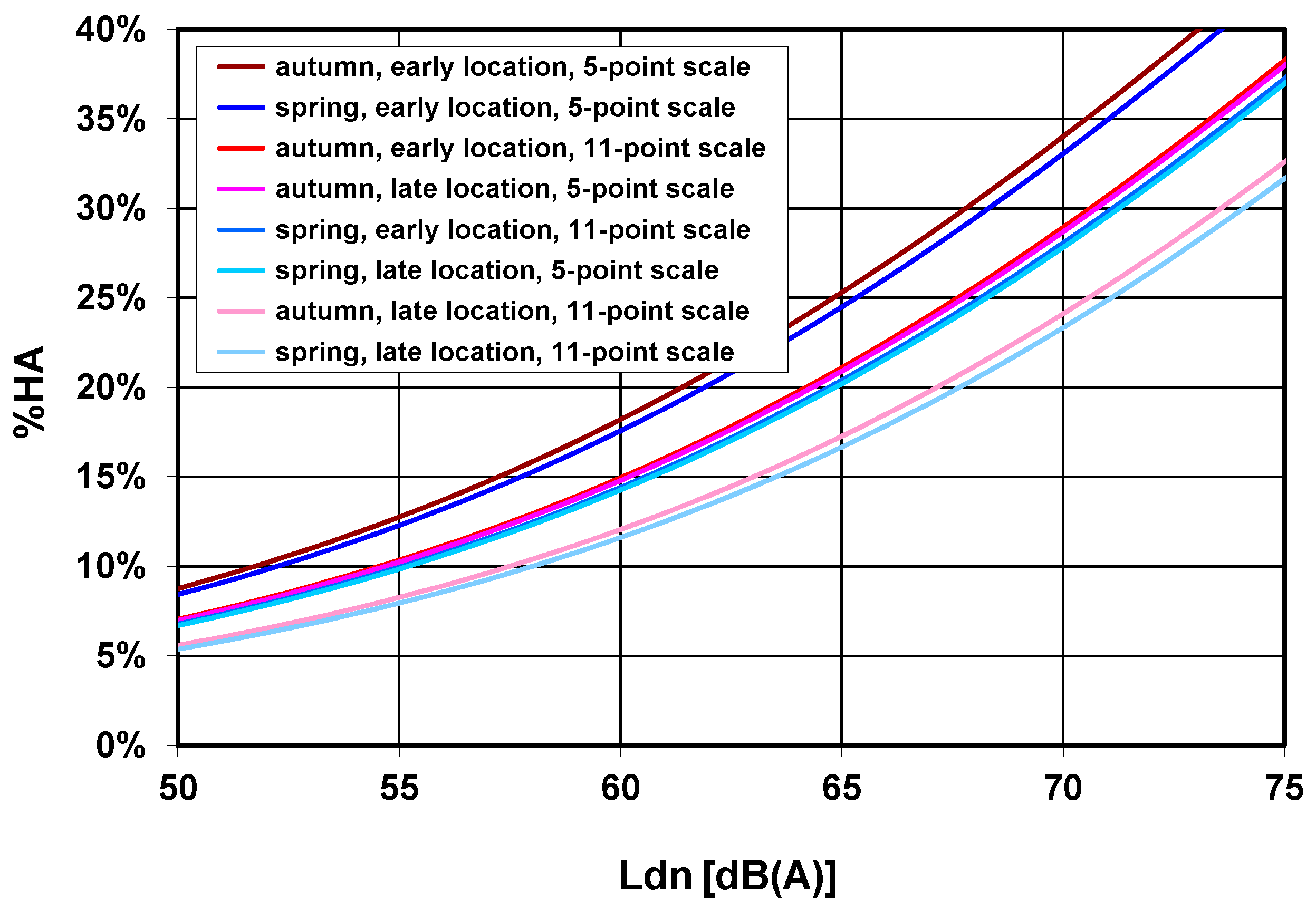

3.2. Exposure-Effect Relationships

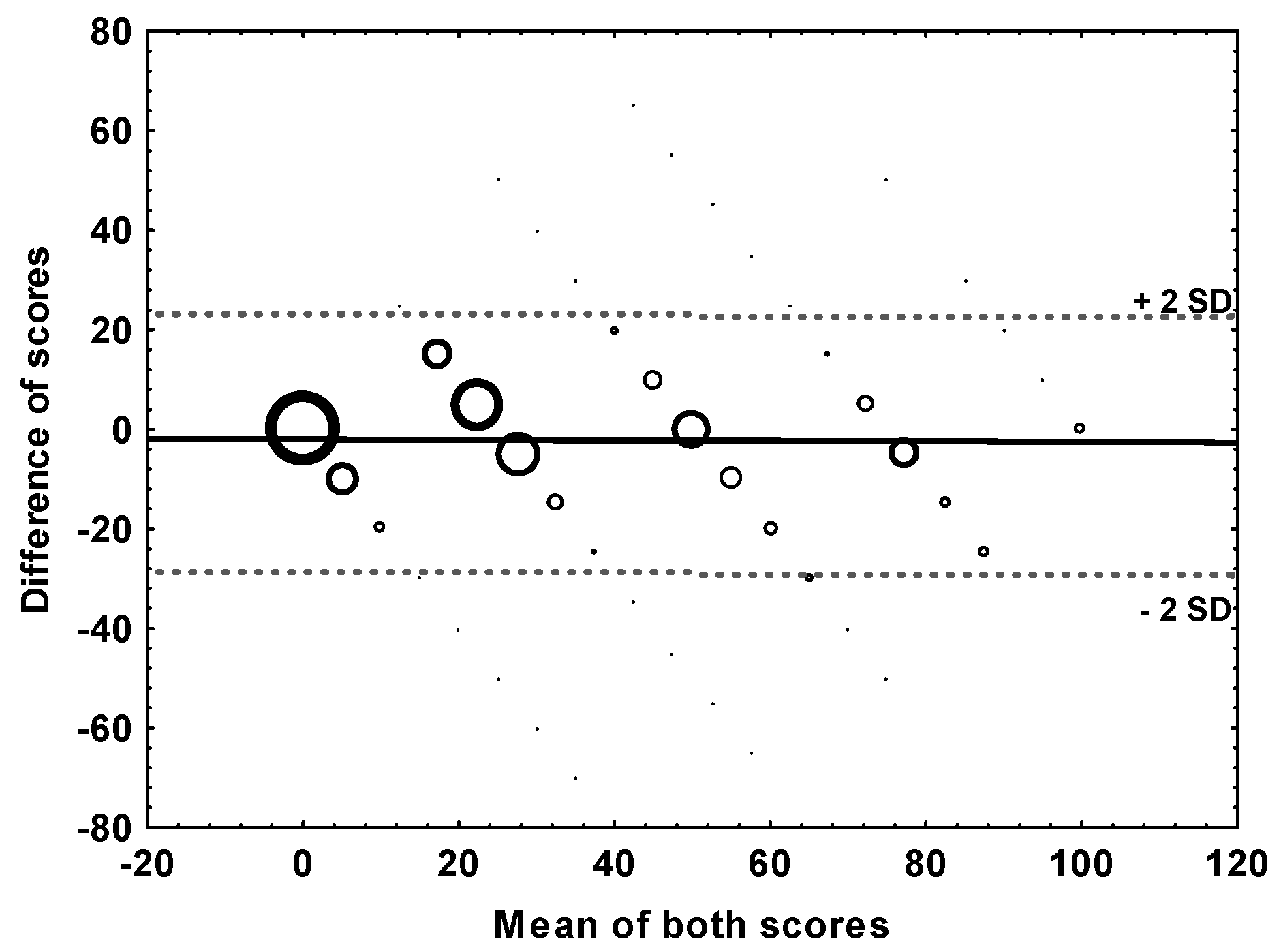

3.3. Degree of Agreement between the Two Scales

3.4. Simple Conversion between the Values on Both Scales

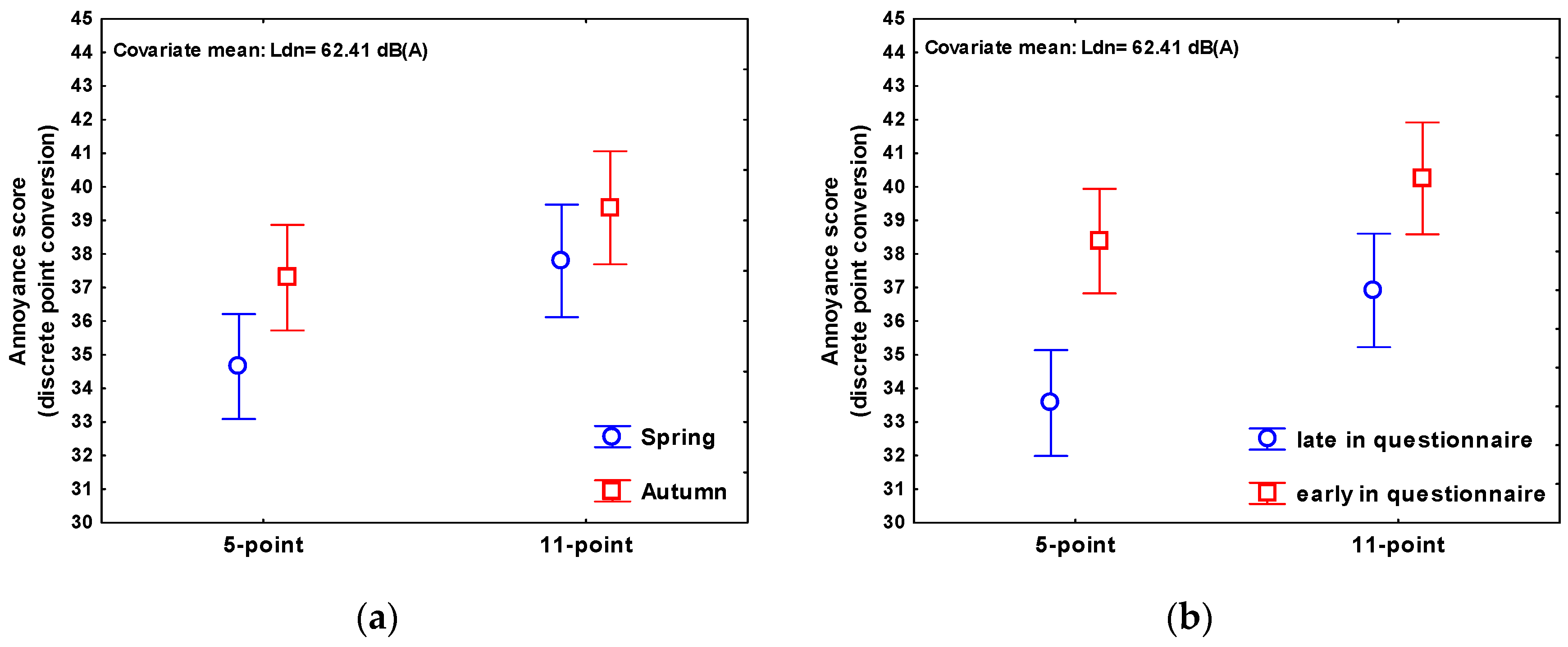

3.5. Effects of Season, Scale Type and Question Presentation Characteristics on Reported Annoyance

3.5.1. Effects on Annoyance Score

- average day temperature in the period 05:40–17:40 h (°C).

- total precipitation in the period 07:00–19:00 h (mm).

- absolute number of sunshine hours within the 24-h period (h).

3.5.2. Effects on the Probability to Be “Highly Annoyed” (PHA)

4. Discussion

4.1. Brief Summary

4.2. Strengths and Limitations

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Sapsford, R. Survey Research; Sage: London, UK, 1999.

- Miedema, H.; Fields, J.M.; Vos, H. Effect of season and meteorological conditions on community noise annoyance. J. Acoust. Soc. Am. 2005, 117, 2853–2865.

- Meyer, T.; Schafer, I.; Matthis, C.; Kohlmann, T.; Mittag, O. Missing data due to a ‘checklist misconception-effect’. Soz. Prav. 2006, 51, 34–42.

- Taylor, A.W.; Dal Grande, E.; Gill, T. Beware the pitfalls of ill-placed questions—Revisiting questionnaire ordering. Soz. Prav. 2006, 51, 43–44.

- Bodin, T.; Bjork, J.; Ohrstrom, E.; Ardo, J.; Albin, M. Survey context and question wording affects self reported annoyance due to road traffic noise: A comparison between two cross-sectional studies. Environ. Health 2012, 11, 14.

- Kroesen, M.; Molin, E.J.E.; van Wee, B. Measuring subjective response to aircraft noise: The effects of survey context. J. Acoust. Soc. Am. 2013, 133, 238–246.

- Brink, M.; Wunderli, J.M.; Bögli, H. Establishing noise exposure limits using two different annoyance scales: A sample case with military shooting noise. In Proceedings of the Euronoise, Edinburgh, UK, 26–28 October 2009.

- Janssen, S.A.; Vos, H.; van Kempen, E.E.M.M.; Breugelmans, O.R.P.; Miedema, H.M.E. Trends in aircraft noise annoyance: The role of study and sample characteristics. J. Acoust. Soc. Am. 2011, 129, 1953–1962.

- Fields, J.M.; De Jong, R.G.; Gjestland, T.; Flindell, I.H.; Job, R.F.S.; Kurra, S.; Lercher, P.; Vallet, M.; Yano, T.; Guski, R.; et al. Standardized general-purpose noise reaction questions for community noise surveys: Research and a recommendation. J. Sound Vib. 2001, 242, 641–679.

- Sudman, S.; Bradburn, N.M.; Schwarz, N. Thinking about Answers: The Application of Cognitive Processes to Survey Methodology; Jossey-Bass Publishers: San Francisco, CA, USA, 1996.

- International Standards Organisation. ISO/TS 15666:2003 (Acoustics-Assessment of Noise Annoyance by Means of Social and Socio-Acoustic Surveys); International Standards Organisation: Geneva, Switzerland, 2003.

- Schuman, H.; Presser, S. Questions and Answers in Attitude Surveys—Experiments on Question Form, Wording, and Context; Sage Publications: Thousand Oaks, CA, USA, 1996.

- Schreckenberg, D.; Meis, M. Effects of Aircraft Noise on Noise Annoyance and Quality of Life around Frankfurt Airport. Final Abbridged Report Bochum/Oldenburg 2006. Available online: http://www.verkehrslaermwirkung.de/FRA070222.pdf (accessed on 12 July 2016).

- Brink, M.; Wunderli, J.-M. A field study of the exposure-annoyance relationship of military shooting noise. J. Acoust. Soc. Am. 2010, 127, 2301–2311.

- Bundesamt für Umwelt. SonBase—The GIS Noise Database of Switzerland; Technical Bases (English Version); Bundesamt für Umwelt: Bern, Switzerland, 2009; Available online: http://www.bafu.admin.ch/laerm/10312/10340/index.html?lang=de (accessed on 12 July 2016).

- Miedema, H.; Vos, H. Exposure-response relationships for transportation noise. J. Acoust. Soc. Am. 1998, 104, 3432–3445.

- Bland, J.M.; Altman, D.G. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet 1986, 327, 307–310.

- Brink, M. (Ed.) A review of explained variance in exposure-annoyance relationships in noise annoyance surveys. In Proceedings of the 11th International Congress on Noise as a Public Health Problem (ICBEN), Nara, Japan, 1–5 June 2014.

- Brooker, P. ANASE: Lessons from ‘Unreliable Findings’ 2008. Available online: https://dspace.lib.cranfield.ac.uk/handle/1826/2510 (accessed on 18 October 2016).

- Department for Transport. Attitudes to Noise from Aviation Sources in England (ANASE) Final Report 2007. Available online: http://www.dft.gov.uk/pgr/aviation/environmentalissues/Anase/ (accessed on 18 October 2016).

- Fidell, S.; Mestre, V.; Schomer, P.; Berry, B.; Gjestland, T.; Vallet, M.; Reid, T. A first-principles model for estimating the prevalence of annoyance with aircraft noise exposure. J. Acoust. Soc. Am. 2011, 130, 791–806.

| Variant | Season | Sequence | Order of Response Alternatives | Location | n Returns (Cooperation Rate) |
|---|---|---|---|---|---|
| A | Autumn | 5-point → 11-point | ascending | early | 161 (31%) |
| B | Autumn | 5-point → 11-point | ascending | late | 157 (30%) |
| C | Autumn | 5-point → 11-point | descending | early | 164 (31%) |
| D | Autumn | 5-point → 11-point | descending | late | 142 (27%) |
| E | Autumn | 11-point → 5-point | ascending | early | 136 (26%) |
| F | Autumn | 11-point → 5-point | ascending | late | 146 (28%) |
| G | Autumn | 11-point → 5-point | descending | early | 152 (29%) |
| H | Autumn | 11-point → 5-point | descending | late | 136 (26%) |
| A | Spring | 5-point → 11-point | ascending | early | 154 (29%) |
| B | Spring | 5-point → 11-point | ascending | late | 146 (28%) |
| C | Spring | 5-point → 11-point | descending | early | 151 (29%) |
| D | Spring | 5-point → 11-point | descending | late | 156 (30%) |
| E | Spring | 11-point → 5-point | ascending | early | 135 (26%) |
| F | Spring | 11-point → 5-point | ascending | late | 150 (29%) |
| G | Spring | 11-point → 5-point | descending | early | 155 (30%) |
| H | Spring | 11-point → 5-point | descending | late | 145 (28%) |
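The eight questionnaire variants in the table above form a full 2 × 2 × 2 crossing of question sequence, order of response alternatives, and location. As a minimal sketch (the variable names are illustrative and not taken from the study), the variant letters can be reproduced by enumerating the three factors in the order the table lists them:

```python
from itertools import product

# Factor levels as they appear in the variant table (labels copied from the table).
sequences = ["5-point → 11-point", "11-point → 5-point"]
orders = ["ascending", "descending"]
locations = ["early", "late"]

# product() varies the last factor fastest, which matches the row order A..H.
variants = dict(zip("ABCDEFGH", product(sequences, orders, locations)))

for letter, (sequence, order, location) in variants.items():
    print(letter, sequence, order, location)
```

Each variant was fielded in both survey waves (autumn and spring), which gives the 16 rows of the table.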

11-Point Numerical Scale and Corresponding Numeric Values on a 0–100 Interval Scale:

| Scale Point Label | “0” | “1” | “2” | “3” | “4” | “5” | “6” | “7” | “8” | “9” | “10” |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Numeric value | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| Discrete point | 0 | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 |
| Midpoint of category | 4.55 | 13.64 | 22.73 | 31.82 | 40.90 | 50.00 | 59.09 | 68.18 | 77.27 | 86.36 | 95.50 |

5-Point Verbal Scale and Corresponding Numeric Values on a 0–100 Interval Scale:

| Scale Point Label | “not at all” (“überhaupt nicht”) | “slightly” (“etwas”) | “moderately” (“mittelmässig”) | “very” (“stark”) | “extremely” (“äusserst”) |
|---|---|---|---|---|---|
| Numeric value | 0 | 1 | 2 | 3 | 4 |
| Discrete point | 0 | 25 | 50 | 75 | 100 |
| Midpoint of category | 10 | 30 | 50 | 70 | 90 |
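The two conversions tabulated above can be sketched as follows. This is an illustrative reading of the table, not the authors' code: the "discrete point" rule is assumed to place the lowest and highest scale points at 0 and 100, and the "midpoint" rule is assumed to divide the 0–100 range into equal-width categories and take each category's centre. The function names are hypothetical.

```python
def discrete_point(value: int, n_points: int) -> float:
    """Evenly spaced points on 0-100, with the end points at 0 and 100."""
    return 100.0 * value / (n_points - 1)


def category_midpoint(value: int, n_points: int) -> float:
    """Centre of the value-th of n_points equal-width categories on 0-100."""
    return 100.0 * (value + 0.5) / n_points


# 5-point verbal scale (numeric values 0..4)
print([discrete_point(v, 5) for v in range(5)])
print([category_midpoint(v, 5) for v in range(5)])

# 11-point numerical scale (numeric values 0..10), midpoints rounded to 2 decimals
print([round(category_midpoint(v, 11), 2) for v in range(11)])
```

Under these assumptions the 5-point values match the table exactly; for the 11-point scale, individual midpoints may differ from the tabulated ones in the last digit because of rounding.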

| | Wave 1 (Autumn) | Wave 2 (Spring) |
|---|---|---|
| Total number of persons individually addressed (initial mail order) | 4200 | 4200 |
| Returned non-empty questionnaires | 1220 | 1211 |
| (thereof number of questionnaires with valid addresses) | (1194) | (1192) |
| Addressee deceased, unable to respond, or language problem a | 5 | 3 |
| Nothing returned | 2917 | 2873 |
| Actively refused by addressee | 8 | 8 |
| Envelope returned undelivered b | 50 | 105 |
| Cooperation Rate | 0.29 | 0.29 |
| Response Rate | 0.28 | 0.28 |
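The counts in the table above can be cross-checked with a few lines of arithmetic. The sketch below first confirms that the five disposition categories add up to the number of persons addressed. It then computes two simple ratios that happen to reproduce the rounded rates in the table; the exact rate definitions are not stated in this section, so the formulas used here (returned questionnaires, or returned questionnaires with valid addresses, divided by all persons addressed) are an assumption.

```python
# Counts copied from the response statistics table.
waves = {
    "autumn": {"addressed": 4200, "returned": 1220, "valid_address": 1194,
               "unable": 5, "nothing": 2917, "refused": 8, "undelivered": 50},
    "spring": {"addressed": 4200, "returned": 1211, "valid_address": 1192,
               "unable": 3, "nothing": 2873, "refused": 8, "undelivered": 105},
}

rates = {}
for wave, c in waves.items():
    # The disposition categories should account for every addressed person.
    total = (c["returned"] + c["unable"] + c["nothing"]
             + c["refused"] + c["undelivered"])
    assert total == c["addressed"], wave

    # ASSUMED definitions, chosen only because they match the rounded table values.
    cooperation = c["returned"] / c["addressed"]
    response = c["valid_address"] / c["addressed"]
    rates[wave] = (round(cooperation, 2), round(response, 2))

print(rates)
```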

| Ldn a Category (dB(A)) | n | % of Sample | % Female | Mean Age (Years) | Mean of Occupancy (Years) | %HA (5-Point) | %HA (11-Point) | MA (5-Point) b | MA (11-Point) b |
|---|---|---|---|---|---|---|---|---|---|
| ≤50 | 15 | 0.63 | 60.00 | 52.20 | 14.67 | 6.67 | 6.67 | 18.33 | 26.00 |
| 50.0–52.5 | 49 | 2.05 | 57.14 | 54.18 | 17.08 | 8.16 | 2.04 | 19.90 | 20.21 |
| 52.5–55.0 | 183 | 7.67 | 56.83 | 53.03 | 14.60 | 12.57 | 10.38 | 27.34 | 28.11 |
| 55.0–57.5 | 247 | 10.35 | 55.47 | 54.28 | 14.09 | 9.31 | 6.48 | 27.35 | 29.35 |
| 57.5–60.0 | 282 | 11.82 | 52.84 | 54.34 | 15.60 | 11.70 | 9.93 | 30.36 | 31.87 |
| 60.0–62.5 | 370 | 15.51 | 54.86 | 52.73 | 14.08 | 18.92 | 15.14 | 34.76 | 37.68 |
| 62.5–65.0 | 373 | 15.63 | 54.96 | 53.48 | 15.34 | 21.72 | 17.96 | 37.74 | 40.54 |
| 65.0–67.5 | 426 | 17.85 | 46.95 | 51.79 | 15.00 | 28.87 | 25.59 | 42.61 | 45.88 |
| 67.5–70.0 | 278 | 11.65 | 58.99 | 50.74 | 14.57 | 26.98 | 22.66 | 42.09 | 45.93 |
| ≥70 | 163 | 6.83 | 54.60 | 52.57 | 14.77 | 29.45 | 23.31 | 44.33 | 49.25 |

| Wave | Survey Period | Averaging Period a | Day Temp. (°C) | Night Temp. (°C) | Precipitation c (mm) | Sunshine Hours (h) |
|---|---|---|---|---|---|---|
| Autumn | 10 October to 28 November 2012 | 1 day b | 11.14 (3.20) | 8.32 (2.80) | 2.00 (3.45) | 2.89 (2.95) |
| | | 7 days | 12.48 (2.30) | 10.18 (2.13) | 4.00 (2.38) | 2.38 (1.45) |
| | | 30 days | 13.89 (1.25) | 10.94 (1.02) | 2.17 (0.51) | 4.16 (0.50) |
| Spring | 11 March to 29 April 2013 | 1 day b | 3.64 (3.69) | 1.07 (3.26) | 0.98 (1.47) | 2.23 (2.47) |
| | | 7 days | 6.36 (2.85) | 3.62 (2.40) | 0.69 (0.57) | 2.82 (0.94) |
| | | 30 days | 1.94 (1.13) | 0.13 (1.05) | 0.30 (0.24) | 2.45 (0.40) |

| Effect | Level | F | B | SE | t | p Value |
|---|---|---|---|---|---|---|
| Between-subject effects | | | | | | |
| Intercept | | 77.831 | −44.426 | 4.875 | −9.113 | 0.000 |
| Noise exposure (Ldn) | | 277.705 | 1.272 | 0.076 | 16.664 | 0.000 |
| Season | autumn a | 6.491 | 2.099 | 0.824 | 2.548 | 0.011 |
| Question sequence | 5-point → 11-point b | 0.121 | 0.286 | 0.824 | 0.347 | 0.728 |
| Order of response alternatives | ascending c | 0.519 | 0.593 | 0.824 | 0.720 | 0.471 |
| Location in questionnaire | early d | 25.758 | 4.182 | 0.824 | 5.075 | 0.000 |
| Within-subject effect | | | | | | |
| Type of scale | 11-point e | 11.198 | 2.763 | 0.826 | 3.346 | 0.001 |
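To illustrate how the coefficients B in the table above combine, the sketch below evaluates the linear predictor for the annoyance score on the 0–100 scale. It assumes that each listed level (for example "early") is coded as a 0/1 indicator against the unlisted level as reference, and it treats the within-subject scale effect as a simple additive term; both are simplifications for illustration. The dictionary keys and the function name are hypothetical.

```python
# Coefficients B copied from the table above.
COEF = {
    "intercept": -44.426,
    "ldn": 1.272,             # per dB(A) of Ldn
    "autumn": 2.099,          # vs. spring
    "seq_5_then_11": 0.286,   # vs. 11-point → 5-point
    "ascending": 0.593,       # vs. descending
    "early": 4.182,           # vs. late
    "scale_11_point": 2.763,  # vs. 5-point scale
}


def predicted_score(ldn: float, **indicators: bool) -> float:
    """Linear predictor; every keyword is an ASSUMED 0/1 indicator for a listed level."""
    score = COEF["intercept"] + COEF["ldn"] * ldn
    for name, is_on in indicators.items():
        if name in ("intercept", "ldn") or name not in COEF:
            raise KeyError(f"unknown indicator: {name}")
        score += COEF[name] * bool(is_on)
    return score


late = predicted_score(60.0)               # all indicators at their reference level
early = predicted_score(60.0, early=True)  # annoyance questions placed early
print(round(late, 3), round(early, 3))
```

Under this reading, moving the annoyance questions from late to early shifts the predicted score by the "early" coefficient, independent of the exposure level.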

| Effect | Level | B | 95% CI of B | exp(B) | CI of exp(B) | p Value |
|---|---|---|---|---|---|---|
| Between-subject effects | | | | | | |
| Intercept | | −7.160 | −8.369 to −5.952 | 0.001 | 0.000 to 0.003 | <0.001 |
| Noise exposure | | 0.084 | 0.066 to 0.103 | 1.088 | 1.068 to 1.109 | <0.001 |
| Season | autumn a | 0.043 | −0.153 to 0.240 | 1.044 | 0.858 to 1.271 | 0.665 |
| Question sequence | 5-point → 11-point b | −0.019 | −0.178 to 0.216 | 1.020 | 0.837 to 1.242 | 0.847 |
| Order of response alternatives | ascending c | 0.109 | −0.087 to 0.306 | 1.115 | 0.916 to 1.358 | 0.276 |
| Location in questionnaire | early d | 0.248 | 0.051 to 0.445 | 1.281 | 1.052 to 1.560 | 0.014 |
| Within-subject effect | | | | | | |
| Type of scale | 11-point e | −0.236 | −0.161 to −0.311 | 1.266 | 1.175 to 1.365 | <0.001 |
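The exp(B) column in the table above is the odds ratio that corresponds to a logit coefficient B. As a minimal sketch, the code below recomputes the odds ratios for noise exposure and question location, and evaluates the probability of being highly annoyed from the logistic function. It assumes a standard logistic model with all other indicators at their reference level (coded 0); the constant and function names are hypothetical.

```python
import math

# Coefficients B copied from the table above.
B_INTERCEPT = -7.160
B_LDN = 0.084    # per dB(A) of noise exposure
B_EARLY = 0.248  # annoyance questions placed early vs. late


def odds_ratio(b: float, delta: float = 1.0) -> float:
    """Odds ratio for a change of `delta` units in a predictor with coefficient b."""
    return math.exp(b * delta)


def prob_highly_annoyed(ldn: float, early: bool = False) -> float:
    """Logistic probability; other factors are ASSUMED to be at their reference level."""
    logit = B_INTERCEPT + B_LDN * ldn + B_EARLY * (1 if early else 0)
    return 1.0 / (1.0 + math.exp(-logit))


print(round(odds_ratio(B_LDN), 3), round(odds_ratio(B_EARLY), 3))
print(odds_ratio(B_LDN, delta=10.0))  # odds ratio for a 10 dB(A) increase
print(prob_highly_annoyed(65.0), prob_highly_annoyed(65.0, early=True))
```

The same `odds_ratio` check can be applied to any other row to compare the tabulated exp(B) with the exponential of the tabulated B.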

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Brink, M.; Schreckenberg, D.; Vienneau, D.; Cajochen, C.; Wunderli, J.-M.; Probst-Hensch, N.; Röösli, M. Effects of Scale, Question Location, Order of Response Alternatives, and Season on Self-Reported Noise Annoyance Using ICBEN Scales: A Field Experiment. Int. J. Environ. Res. Public Health 2016, 13, 1163. https://doi.org/10.3390/ijerph13111163
