1. Introduction

The upsurge in information technology (IT) has brought profound changes to people’s lives. As an important basis of the IT industry, data centres (DCs) have become increasingly prevalent in both the public and private sectors. They are widely used for web hosting, intranets, telecommunications, financial transaction processing, research units, central information depositories of governmental organizations and other fields. The DC market has grown rapidly over the past 40 years [1,2]. The global DC market grew from 14.84 billion dollars to 45.19 billion dollars between 2009 and 2016, while in China it grew from 1.07 billion dollars to 10.53 billion dollars over the same period [3]. In Europe, 1014 colocation DCs are spread across 27 member states, consuming more than 100 TWh of electricity each year [4]. In China, the capacity of DCs had reached 28.5 GW by 2013, with an annual electricity consumption of 549.6 TWh [5,6].
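The market figures above can be condensed into a compound annual growth rate (CAGR), (end / start)^(1 / years) - 1. The short Python sketch below applies this standard formula to the market sizes quoted from [3]; the function name `cagr` is ours, introduced only for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    if start <= 0 or years <= 0:
        raise ValueError("start value and period must be positive")
    return (end / start) ** (1.0 / years) - 1.0


# DC market size in billion dollars, 2009 -> 2016, as quoted in the text [3].
markets = {
    "global": (14.84, 45.19),
    "China": (1.07, 10.53),
}

for name, (size_2009, size_2016) in markets.items():
    print(f"{name}: {cagr(size_2009, size_2016, 2016 - 2009):.1%} per year")
```

Run as written, it reports roughly 17% per year for the global market and roughly 39% per year for China, i.e. in relative terms the Chinese market grew more than twice as fast as the global one over 2009–2016.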

A DC comprises a large amount of Information and Communication Technology (ICT) equipment (e.g., servers, data storage, network devices, redundant or backup power supplies, redundant data communications connections, environmental controls and various security devices) and associated components [7]. The ICT equipment in DCs is energy intensive and must run continuously, 24 hours a day, 365 days a year. The power density of data centres has been shown to range from 120 to 940 W/m² [8], and it continues to increase significantly, reaching up to 100 times the energy demand of commercial office accommodation [9]. In 2006, DCs in the USA consumed 61 billion kWh of electricity, which was 1.5% of the total electricity consumption of the USA in that year [10]. By 2013, DC electricity consumption in the USA had increased to 91 billion kWh, and it is expected to reach 140 billion kWh annually by 2020 [11]. Such high energy consumption indicates a significant energy-saving potential, so energy conservation measures should be taken in data centres.
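The US figures can be cross-checked with simple arithmetic. The sketch below (variable names are illustrative) back-calculates the national total implied by the 1.5% share and the average annual growth between the quoted years. The implied total of roughly 4,070 billion kWh is of the same order as total US electricity generation at the time, which supports reading the 1.5% as a share of electricity rather than of all energy use.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


# US data-centre electricity use in billion kWh, as quoted in the text [10,11].
DC_2006, DC_2013, DC_2020_PROJECTED = 61.0, 91.0, 140.0
SHARE_2006 = 0.015  # DC share of national consumption in 2006

implied_us_total_2006 = DC_2006 / SHARE_2006                      # about 4,067 billion kWh
growth_2006_2013 = cagr(DC_2006, DC_2013, 2013 - 2006)            # about 5.9% per year
growth_2013_2020 = cagr(DC_2013, DC_2020_PROJECTED, 2020 - 2013)  # about 6.3% per year

print(f"implied US total in 2006: {implied_us_total_2006:,.0f} billion kWh")
print(f"growth 2006-2013: {growth_2006_2013:.1%}/yr, 2013-2020: {growth_2013_2020:.1%}/yr")
```

The projection to 2020 thus assumes a slightly faster growth rate than was observed between 2006 and 2013.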

Space cooling (i.e., air conditioning) is a fundamental need in DCs. It removes the tremendous amount of heat dissipated from the IT equipment and maintains an adequate space temperature, and it consumes around 30% to 40% of the energy delivered into the centre spaces [6,12,13,14]. The traditional cooling equipment for DCs is the specialised mechanical vapour compression air conditioner, which is driven by electrical energy and has relatively low efficiency, i.e., a coefficient of performance (COP) of around 2 to 3, leading to energy-inefficient and environmentally unfriendly operation. In recent years, several alternative cooling modes, e.g., adsorption/absorption, ejector, and evaporative types, have appeared on the market, and some of them have been used in DCs. Although these technologies can save some electrical energy, a number of problems prevent their wider application in DCs. The problems of the different technologies are as follows: (1) Evaporative/traditional dew point cooling: low cooling efficiency, low heat transfer capacity, and low efficiency in humid climate regions, which lead to the disadvantages of large size and climate selectivity [15,16]; (2) Ejector cooling: requires a high-temperature heat source, which makes it difficult to utilize the waste heat in DCs, and suffers from unstable cooling output and a low COP (0.4–0.7) [17,18,19], leading to the disadvantages of large size, high cost and difficult integration into DCs; (3) Absorption/adsorption cooling: requires extra heat sources at higher temperatures, has a lower COP (0.6–0.9), and has difficulty utilizing the waste heat in DCs [20,21,22], leading to the disadvantages of large size, high cost and difficult integration into DCs.
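To put the 30–40% cooling share in perspective, it can be translated into the power usage effectiveness (PUE), defined as total facility energy divided by IT equipment energy. The sketch below assumes, as a simplification not taken from the cited studies, that the quoted share is a fraction of total facility energy and that everything else goes to the IT load; any further overheads (lighting, power conversion losses) would only raise the result, so the printed values are lower bounds. The function name is hypothetical.

```python
def pue_from_cooling_share(cooling_share: float, other_overhead_share: float = 0.0) -> float:
    """PUE = total facility energy / IT energy.

    Simplifying assumption: total energy splits into IT load, cooling and an
    optional lumped 'other overhead' term, all given as fractions of the total.
    """
    it_share = 1.0 - cooling_share - other_overhead_share
    if not 0.0 < it_share <= 1.0:
        raise ValueError("shares must leave a positive IT fraction")
    return 1.0 / it_share


for share in (0.30, 0.40):
    print(f"cooling share {share:.0%}: PUE >= {pue_from_cooling_share(share):.2f}")
```

With conventional cooling alone, the PUE therefore cannot fall below roughly 1.43–1.67, which is why reducing the cooling energy is the main lever for improving DC energy performance.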

Evaporative cooling, which absorbs heat through the evaporation of water, has gained growing popularity in air conditioning [23,24], owing to its simple structure and its effective use of the latent heat of water evaporation, a renewable energy source available in the natural environment. Evaporative cooling technologies are of two general types: direct and indirect. Direct evaporative cooling brings the primary (product) air into direct contact with water, causing simultaneous evaporation of the water and a reduction in air temperature. As a result, the vaporised water is added to the air, creating wetter air conditions and potentially causing discomfort for the space occupants. Indirect evaporative cooling (IEC) keeps the primary (product) air in dry channels and the secondary (working) air in separate wet channels, where water is distributed over the surfaces to form thin water films [25]. IEC can lower the temperature of the air while keeping it dry, thus creating better thermal comfort and improved indoor air quality [26,27,28]. Dew point cooling, a type of IEC, modifies the structure of the heat and mass exchanger so that the secondary air is pre-cooled before it enters the wet channels. This allows it to break the wet-bulb limit and lower the air temperature towards its dew point, achieving 20–30% higher cooling efficiency than conventional IECs [29,30,31,32,33].
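The wet-bulb and dew-point limits mentioned above are usually quantified with two effectiveness measures. The definitions below follow the common convention in the evaporative cooling literature; we assume, but the text does not state, that [25] uses the same forms. T_in and T_out are the product-air inlet and outlet dry-bulb temperatures, and T_wb and T_dp are the inlet wet-bulb and dew-point temperatures.

```latex
\varepsilon_{wb} = \frac{T_{in} - T_{out}}{T_{in} - T_{wb}}, \qquad
\varepsilon_{dp} = \frac{T_{in} - T_{out}}{T_{in} - T_{dp}}, \qquad
T_{dp} \le T_{wb} \le T_{in}
\quad\Longrightarrow\quad
\varepsilon_{dp} = \varepsilon_{wb}\,\frac{T_{in} - T_{wb}}{T_{in} - T_{dp}} \le \varepsilon_{wb}
```

Because a conventional IEC cannot cool the product air below the inlet wet-bulb temperature, its wet-bulb effectiveness stays at or below 1, whereas a dew point cooler that delivers air below T_wb reaches a wet-bulb effectiveness above 1 while its dew-point effectiveness remains at or below 1.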

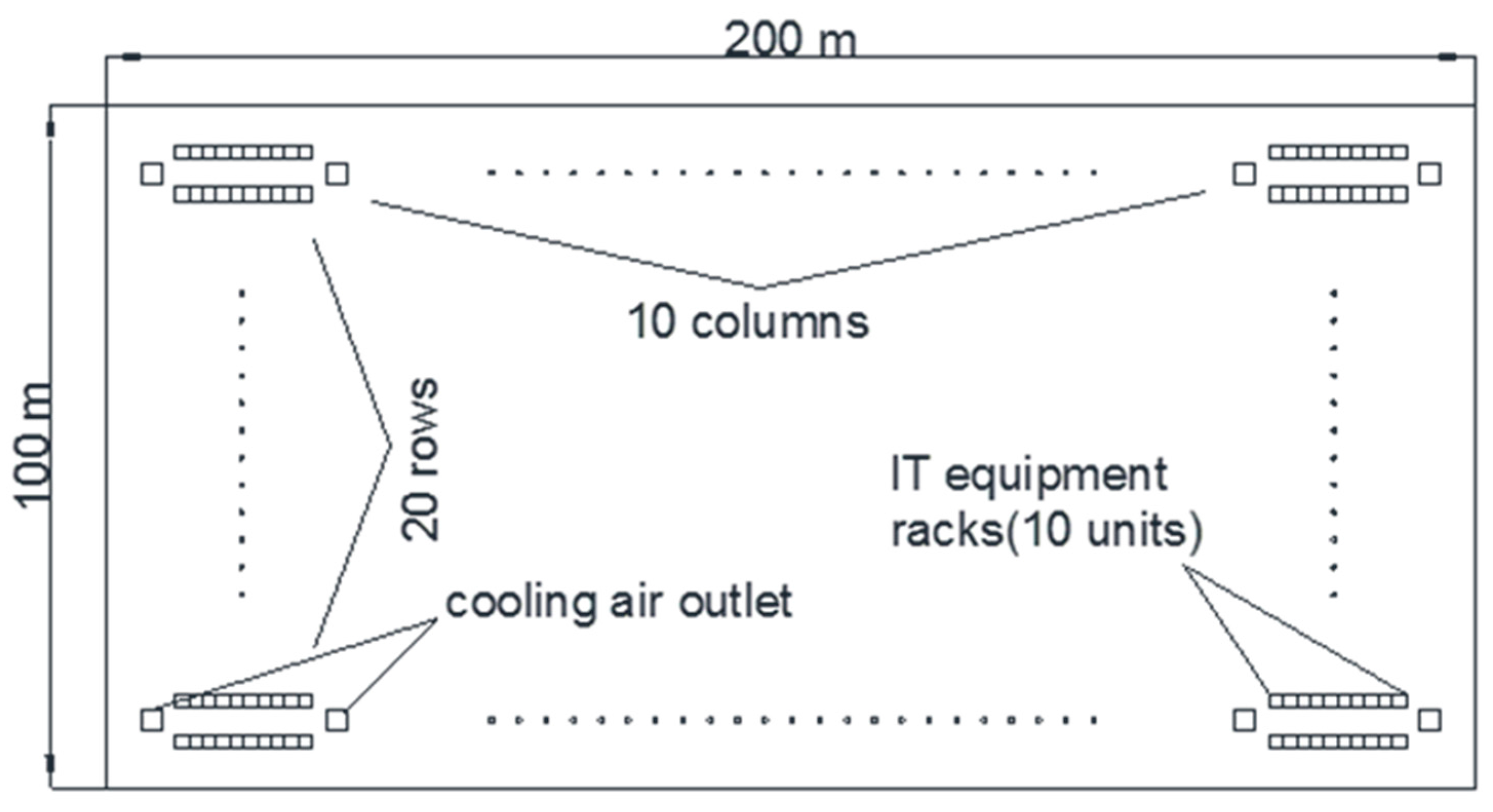

A novel high-efficiency dew point cooling system has recently been developed. The system comprises a highly efficient dew point cooler unit to cool the air, a dehumidifying unit to pre-treat the intake air so that high cooling efficiency is achieved in humid climates, a heat recovery unit to utilize the waste heat in DCs for air dehumidification, a heat storage/exchanger unit and a control system. To explore the feasibility and energy-saving potential of using the novel dew point cooling system in DCs, the detailed conditions of DCs were investigated in this paper. DCs were classified into several types in terms of their IT load capacities and climate conditions. The energy consumption and power usage effectiveness (PUE) of the various types of DCs were investigated through a case study. The energy-saving potential in the various types of DCs was investigated by using a dynamic IT load model to determine the cooling demand and by introducing cooling air supply management. The energy-saving potential of the novel dew point cooling system in DCs was analysed by comparing the annual electricity consumption of DCs using traditional cooling systems with that of DCs using the novel system. The results provide insights into the optimum selection of cooling technologies and cooling supply air management for improving the energy performance of DCs.

2. The Innovative Dew Point Cooling System

A super-performance dew point cooler has recently been developed [25]. Employing a super-performance wet material layer, an innovative heat and mass exchanger and an intermittent water supply scheme, the dew point cooler achieves a 100–160% higher COP and much lower electrical energy usage than existing air coolers of the same type. The novel dew point cooler overcomes the difficulties remaining with existing cooling systems and thus achieves significantly improved energy efficiency. Under the standard test conditions, i.e., a dry-bulb temperature of 37.8 °C and a coincident wet-bulb temperature of 21.1 °C, the prototype cooler achieved a wet-bulb cooling effectiveness of 114% and a dew-point cooling effectiveness of 75%, yielding a remarkably high COP of 52.5 [25]. Although the dew point cooler is best suited to dry climates, it can maintain its high efficiency in humid climates when combined with an air pretreatment system to form an innovative dew point cooling system. The performance of the novel and existing cooling systems is compared in Table 1.
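To see what the reported effectiveness values mean physically, they can be inverted to recover the supply-air temperature and the inlet dew point. The sketch below assumes the conventional definitions, wet-bulb effectiveness = (T_in - T_out) / (T_in - T_wb) and dew-point effectiveness = (T_in - T_out) / (T_in - T_dp); the function names are hypothetical.

```python
def outlet_temperature(t_in: float, t_wb: float, eff_wb: float) -> float:
    """Product-air outlet temperature from eff_wb = (t_in - t_out) / (t_in - t_wb)."""
    return t_in - eff_wb * (t_in - t_wb)


def implied_dew_point(t_in: float, t_out: float, eff_dp: float) -> float:
    """Inlet dew point implied by eff_dp = (t_in - t_out) / (t_in - t_dp)."""
    return t_in - (t_in - t_out) / eff_dp


# Standard test conditions and prototype results quoted in the text (degC).
T_IN, T_WB = 37.8, 21.1
EFF_WB, EFF_DP = 1.14, 0.75

t_out = outlet_temperature(T_IN, T_WB, EFF_WB)
t_dp = implied_dew_point(T_IN, t_out, EFF_DP)
print(f"supply air ~{t_out:.1f} degC, implied inlet dew point ~{t_dp:.1f} degC")
```

This gives a supply-air temperature of about 18.8 °C, roughly 2.3 K below the inlet wet bulb, and an implied inlet dew point of about 12.4 °C. A psychrometric calculation for 37.8 °C dry bulb and 21.1 °C wet bulb at standard sea-level pressure gives a dew point of roughly 12 °C, so the two reported effectiveness values appear mutually consistent.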

Figure 1 shows a schematic drawing of the innovative cooling system. The system comprises five major parts: (1) a dew point air cooler; (2) an adsorbent sorption/desorption cycle containing a sorption bed for air dehumidification and a desorption bed for adsorbent regeneration, the two beds alternating in function; (3) a micro-channels-loop-heat-pipe (MCLHP)-based DC heat recovery system; (4) a heat storage/exchanger; and (5) an internet-based intelligent monitoring and control system.

During operation, a mixture of return air and fresh air will be pretreated within the sorption bed (part of the sorption/desorption cycle), which will create a lower and stabilised humidity ratio in the air, thus increasing its cooling potential. This air will then be delivered into the dew point air cooler. Within the cooler, part of the air will be cooled to a temperature approaching the dew point of its inlet state and delivered to the DC spaces for indoor cooling.

Meanwhile, the remainder of the air will receive the heat transferred from the product air and absorb the moisture evaporated from the wet channel surfaces, becoming hot and saturated before being discharged to the atmosphere. As adsorbent regeneration requires a significant amount of heat while the IT equipment generates heat constantly, an MCLHP-based DC heat recovery system will be implemented. Within this system, the evaporator section of the MCLHP will be attached to the enclosure of the data processing (or computing) equipment to absorb the heat dissipated from the equipment, and the absorbed heat will be released to a dedicated heat storage/exchanger via the MCLHP condenser. The regeneration air will be directed through the heat storage/exchanger, taking heat from it and transferring that heat to the desorption bed for adsorbent regeneration, while the paraffin/expanded-graphite within the storage/exchanger will act as a heat-balancing element that stores or releases heat intermittently to match the heat required by the regeneration air. The heat collected from the DC equipment and/or from solar radiation will be applied jointly or independently to adsorbent regeneration, and the system operation will be managed by an internet-based intelligent monitoring and control system. The individual components are described below:

- (1) A unique high-performance dew point air cooler: the novel dew point evaporative cooler has a complex heat and mass exchanger with an advanced wet material layer, and it implements an intermittent water supply scheme. The cooler achieves a 100–160% higher COP than existing dew point coolers, and its electricity use is 50–70% lower. Under the standard test conditions, i.e., a dry-bulb temperature of 37.8 °C and a coincident wet-bulb temperature of 21.1 °C, the prototype cooler achieved a wet-bulb cooling effectiveness of 114% and a dew-point cooling effectiveness of 75%, yielding a significantly high COP of 52.5.

- (2) An energy-efficient sorption/desorption cycle driven by solar energy and/or DC waste heat: comprising sorption and desorption beds with identical structures, which allows the beds to alternate in function periodically, the sorption/desorption cycle dehumidifies the humid air. Compared with existing adsorption systems, the new sorption/desorption cycle has a number of innovative features [34]: (a) direct interaction between the solar radiation and the moisture held in the bed reduces energy losses to the surroundings, thus increasing the system’s energy efficiency by around 20%; (b) the regeneration air can be at a lower temperature (40–60 °C), creating an opportunity to utilize the waste heat from a DC. This will enable near-zero-energy adsorbent regeneration by making full use of the DC waste heat and/or solar energy.

- (3) A high-efficiency MCLHP-based DC heat recovery system, which will enable direct collection of the heat dissipated from the equipment, thus minimising the space cooling load and maximising the heat recovery rate of the system.

- (4) A high-performance heat storage/exchanger unit that will provide enhanced and accelerated heat storing and releasing processes.
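The balancing role of the paraffin/expanded-graphite storage/exchanger described above can be illustrated with a simple energy bookkeeping model. The sketch below is hypothetical and is not the authors' control logic: it treats the store as an ideal, lossless lumped capacity, uses hourly time steps, and assumes a steady heat supply from the MCLHP against an intermittent regeneration demand; the class name and all numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class HeatStore:
    """Ideal lumped heat store (no losses); capacity in kWh is hypothetical."""
    capacity_kwh: float
    stored_kwh: float = 0.0

    def step(self, supplied_kwh: float, demand_kwh: float) -> float:
        """Balance one time step and return the regeneration heat left unmet."""
        surplus = supplied_kwh - demand_kwh
        if surplus >= 0:
            # Excess recovered heat charges the store, up to its capacity;
            # anything beyond capacity is assumed to be rejected.
            self.stored_kwh = min(self.capacity_kwh, self.stored_kwh + surplus)
            return 0.0
        # Deficit: discharge the store to cover as much of the demand as possible.
        released = min(self.stored_kwh, -surplus)
        self.stored_kwh -= released
        return -surplus - released


store = HeatStore(capacity_kwh=20.0)
recovered = [10.0] * 6                           # hypothetical steady heat recovered by the MCLHP
regeneration = [0.0, 0.0, 0.0, 25.0, 25.0, 0.0]  # hypothetical intermittent regeneration demand
unmet = [store.step(q, d) for q, d in zip(recovered, regeneration)]
print(unmet, store.stored_kwh)
```

In this example the store fully covers the first demand peak but is exhausted during the second, leaving 10 kWh unmet; in the real system that gap could be closed by a larger store or by the solar heat input mentioned above.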

Owing to its significantly enhanced energy efficiency, environmentally friendly operation, small size and good climatic adaptability, this novel dew point cooling system is expected to be applicable to DCs in various climates, improving their energy usage effectiveness and delivering significant energy savings.
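The practical meaning of the COP figures can be shown with the definition COP = cooling output / electrical input. The sketch below compares the electricity needed per kWh of cooling for the vapour compression COP range quoted in the introduction (2 to 3) with the prototype COP of 52.5; the function names are hypothetical.

```python
def electricity_per_kwh_cooling(cop: float) -> float:
    """Electrical input (kWh) needed per kWh of cooling, from COP = Q / W."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return 1.0 / cop


def relative_saving(cop_baseline: float, cop_new: float) -> float:
    """Fractional electricity saving for the same cooling output."""
    return 1.0 - cop_baseline / cop_new


COP_DEW_POINT = 52.5  # prototype COP under standard test conditions
for cop_vc in (2.0, 3.0):  # vapour compression range quoted in the introduction
    print(f"COP {cop_vc}: {electricity_per_kwh_cooling(cop_vc):.3f} kWh per kWh cooling, "
          f"saving with dew point cooler: {relative_saving(cop_vc, COP_DEW_POINT):.1%}")
```

This yields savings of about 94–96% for the cooler alone at standard test conditions. These figures exclude dehumidification, other system auxiliaries and variations with climate and load, which is one plausible reason why annual system-level savings are lower.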

6. Conclusions

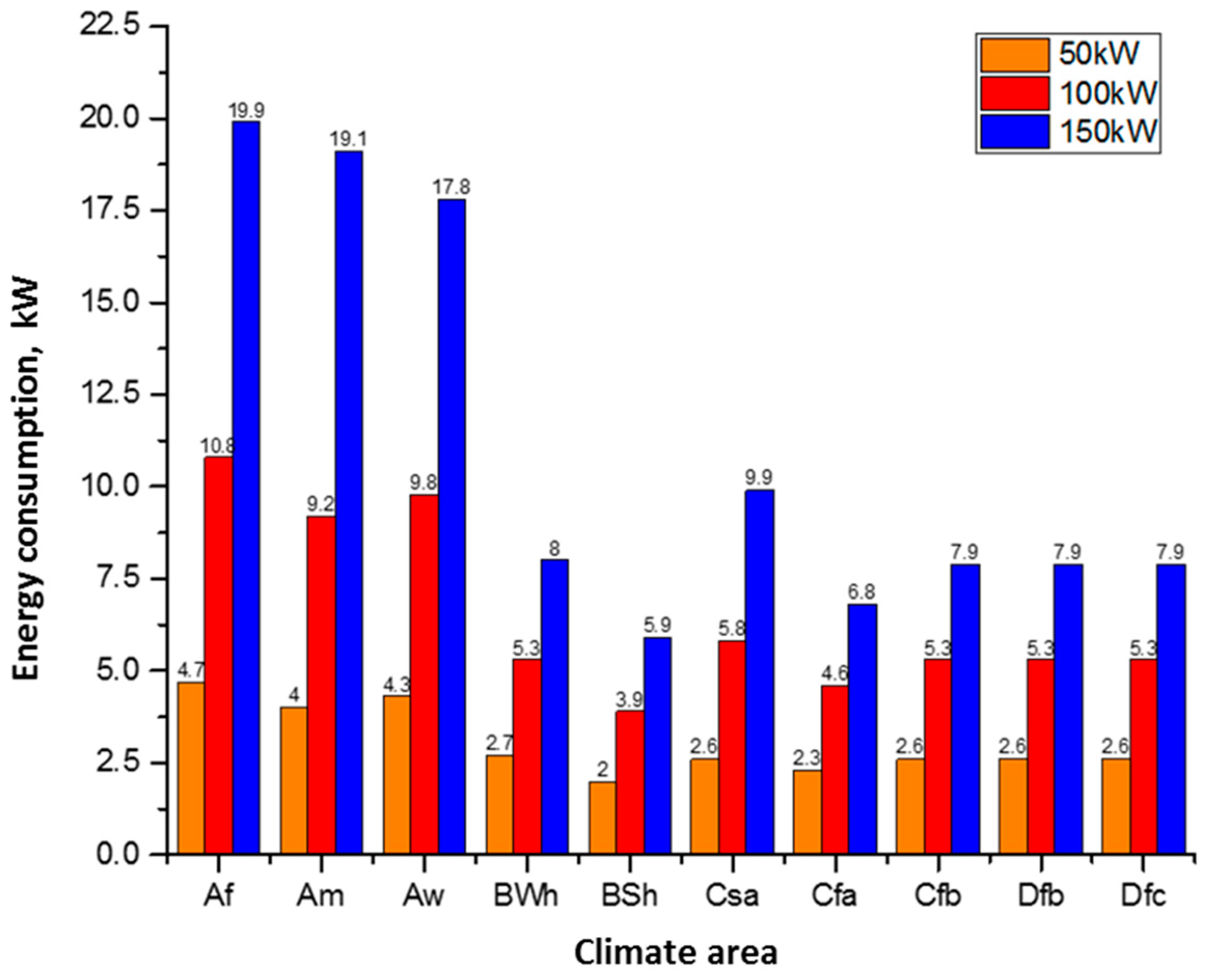

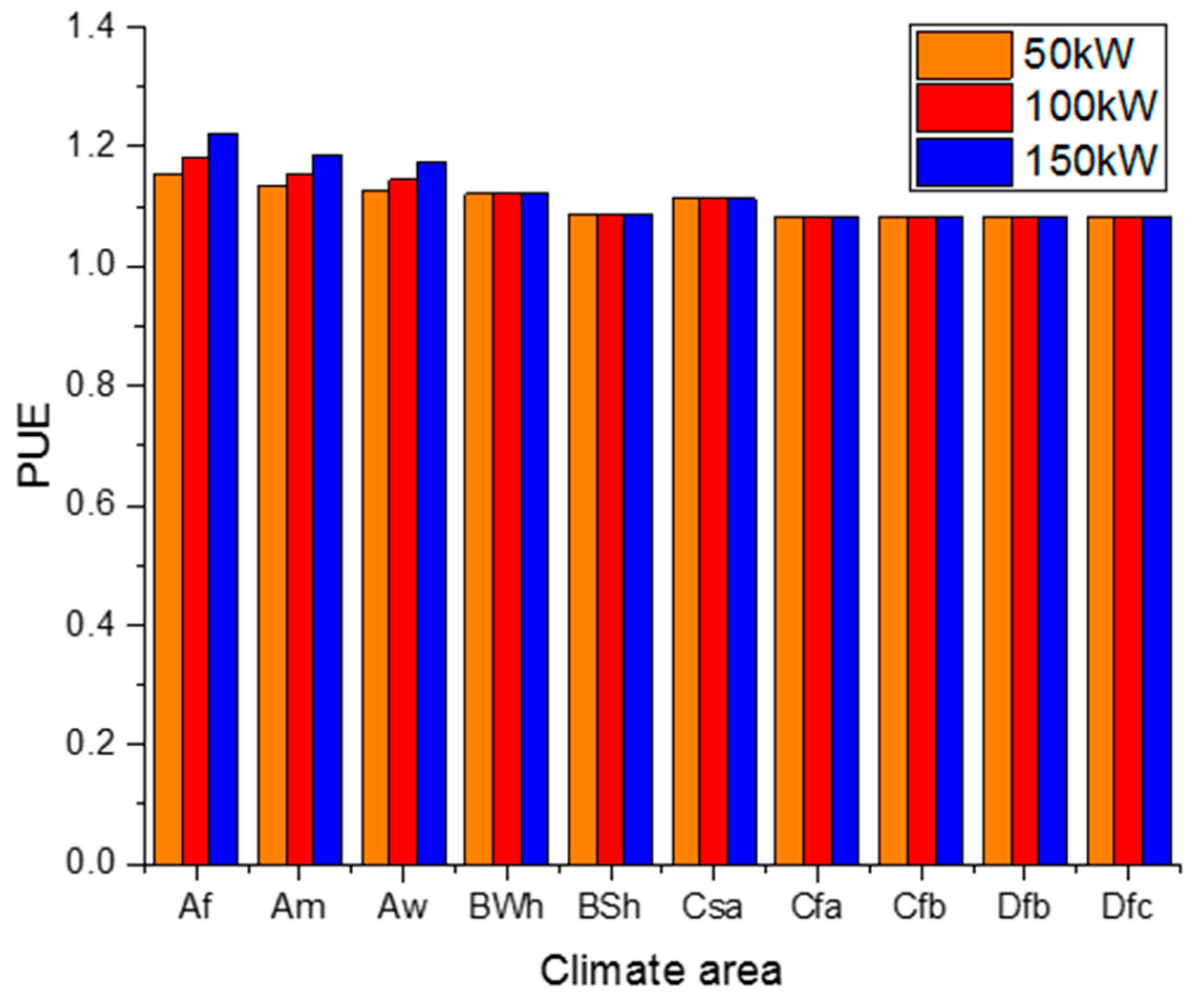

The performance of the novel dew point cooler in 10 typical climates was investigated. The novel dew point cooler was found to be ideal for use in dry climates, and in humid areas it can operate efficiently with the aid of dehumidification systems. In all 10 typical cities, the PUEs of DCs are lower than 1.2. PUEs are relatively higher in the Af, Am, Aw and Csa climate areas, where they increase with cooling load under the same climate conditions because of the dehumidification required in these areas. In the other 6 climate areas (BWh, BSh, Cfa, Cfb, Dfb and Dfc), where dehumidification is not required, the cooling load does not affect the PUE.
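The climate dependence of PUE noted above follows directly from its definition as total facility energy divided by IT equipment energy. The sketch below uses purely hypothetical annual energies, not results from this study, to show how an additional dehumidification term in humid climates raises PUE, whereas in dry climates that term is absent; the function and variable names are illustrative.

```python
def pue(it_energy: float, *overhead_energies: float) -> float:
    """Power usage effectiveness = total facility energy / IT equipment energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return (it_energy + sum(overhead_energies)) / it_energy


# Hypothetical annual energies in MWh, chosen only to illustrate the trend.
IT_LOAD = 1000.0
COOLER_AUXILIARIES = 60.0   # fans and pumps of the dew point cooler (assumed)
DEHUMIDIFICATION = 80.0     # sorption-cycle auxiliaries, humid climates only (assumed)

pue_dry = pue(IT_LOAD, COOLER_AUXILIARIES)                      # no dehumidification
pue_humid = pue(IT_LOAD, COOLER_AUXILIARIES, DEHUMIDIFICATION)  # with dehumidification
print(f"dry climate PUE: {pue_dry:.2f}, humid climate PUE: {pue_humid:.2f}")
```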

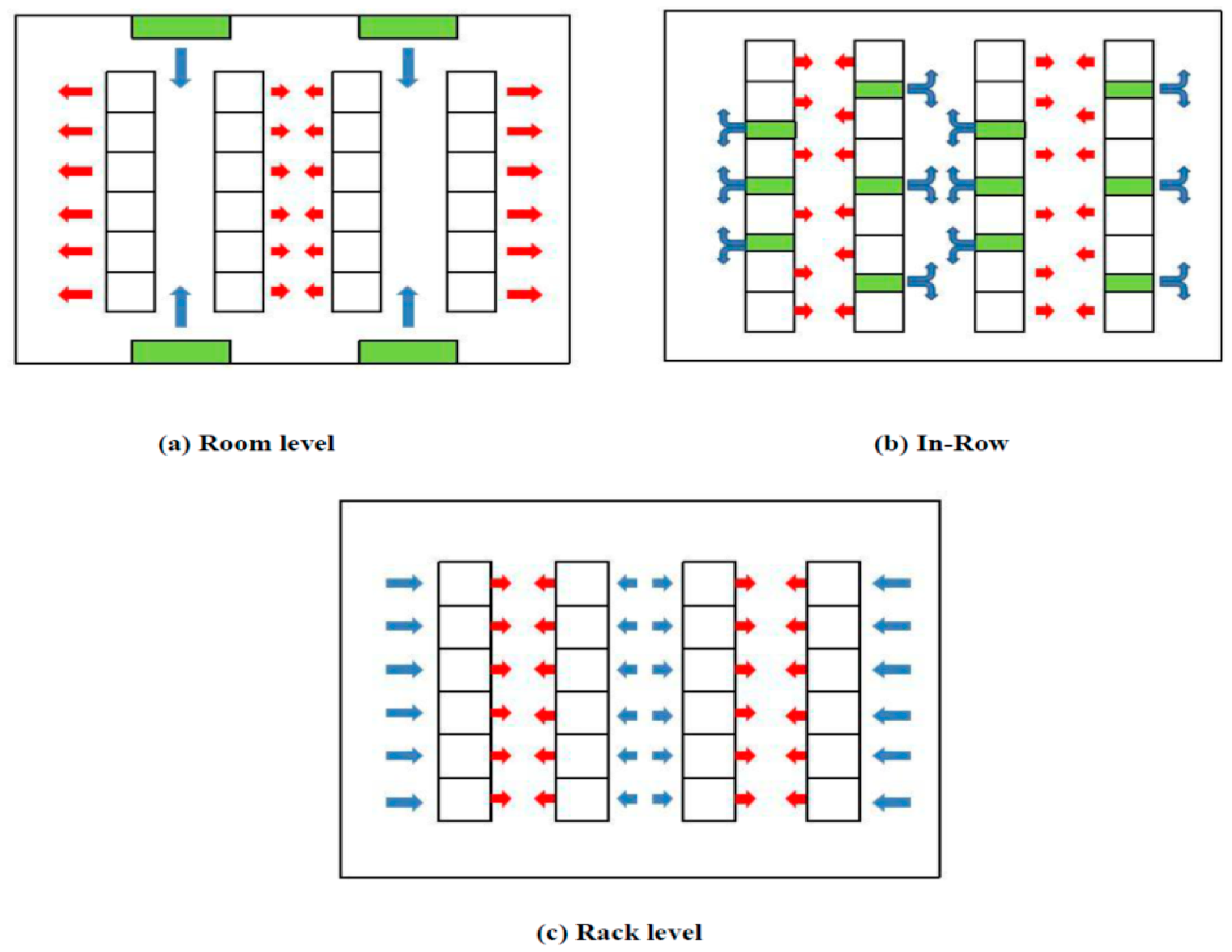

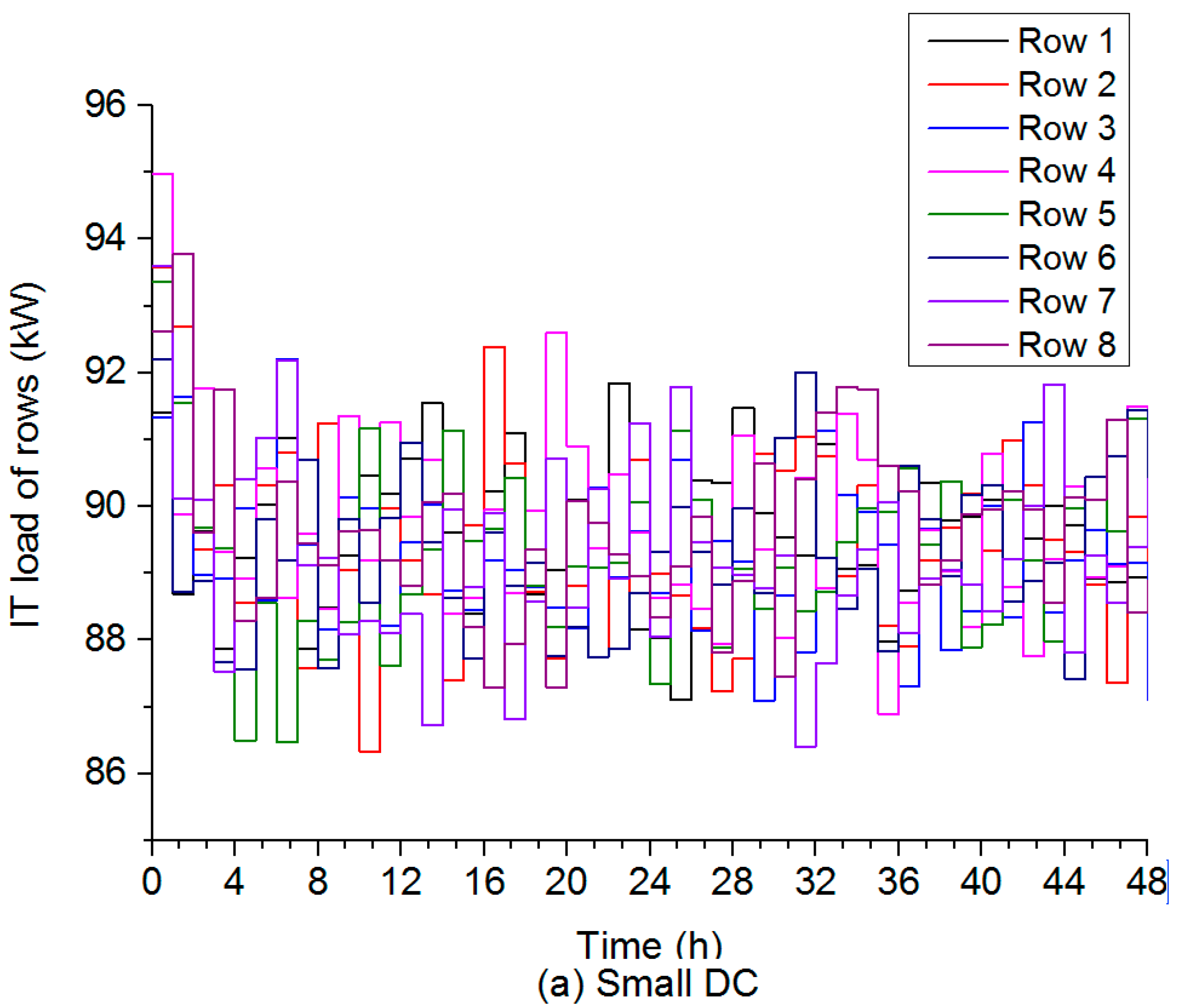

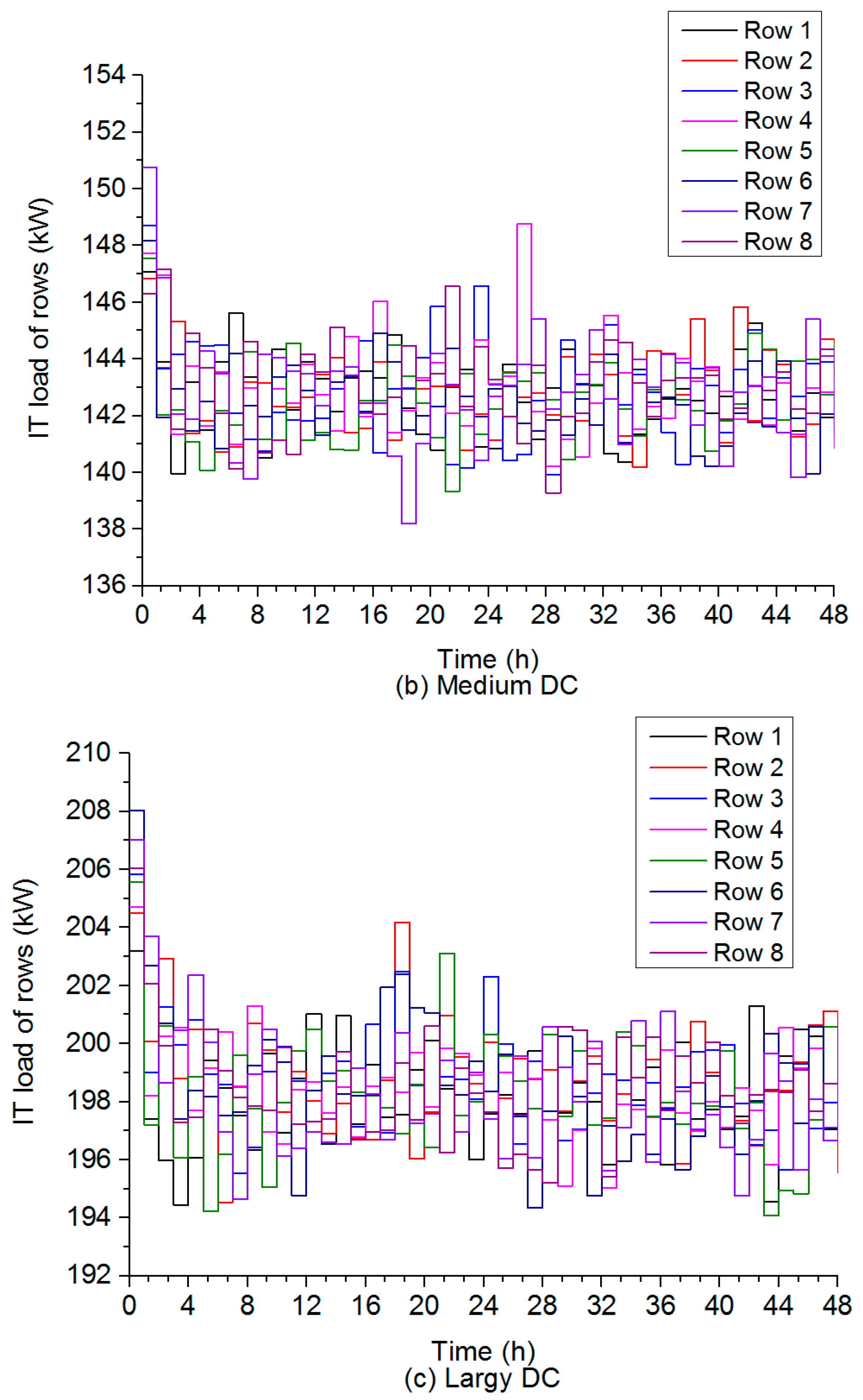

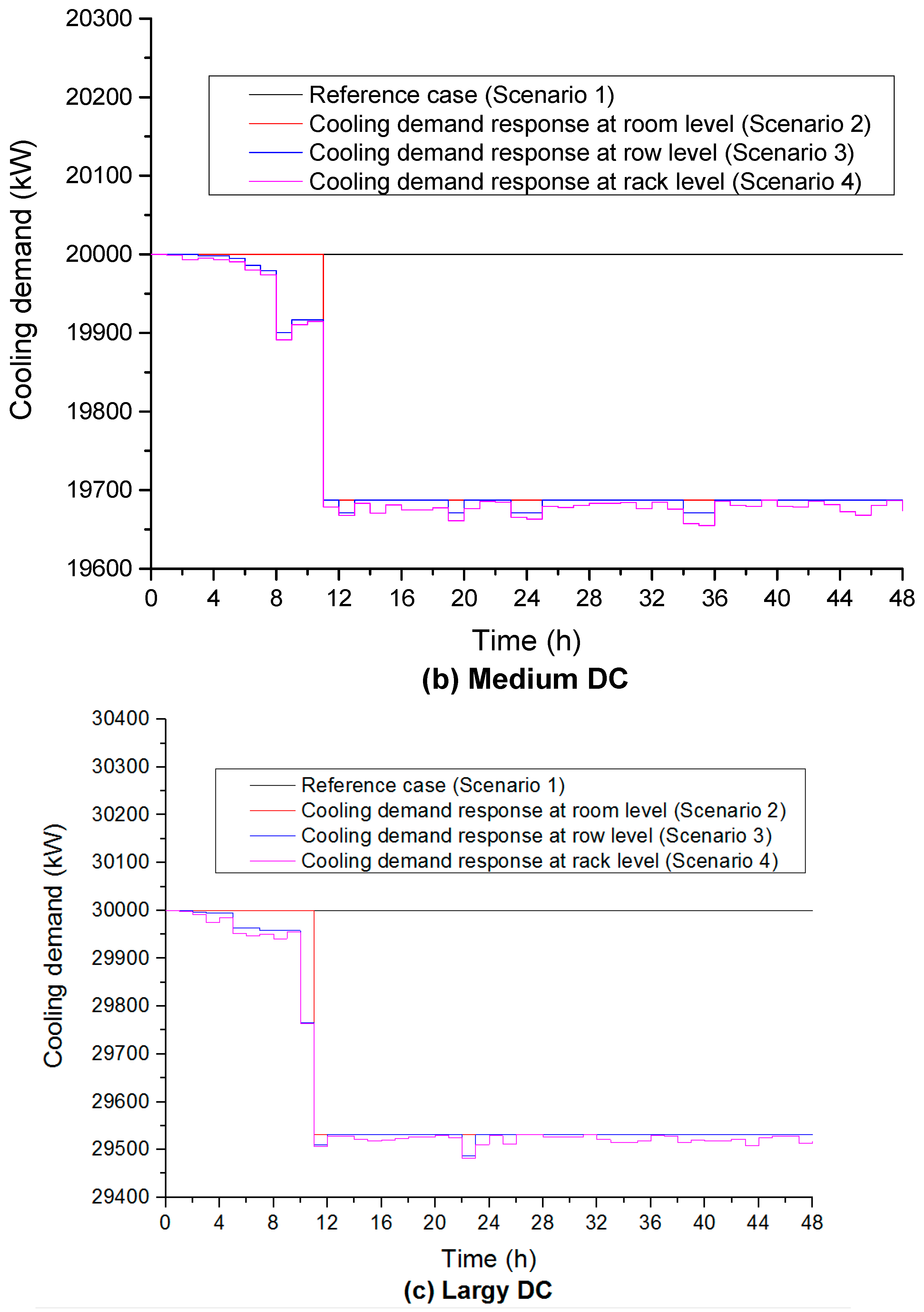

By using the novel dew point cooling system instead of a traditional air conditioning system, electricity consumption savings of 87.7–91.6% can be achieved in the 10 selected cities, which represent 10 typical climatic regions across the world. DCs can be classified into three types in terms of IT equipment load density: large, medium and small. The scale of a DC slightly affects the saving in cooling demand: the smaller the DC load capacity, the higher the proportion of cooling demand that can be saved. Among the three ways of supplying cold air, i.e., at room level, row level and rack level, the saved cooling demand increases from room level to row level to rack level.

Of particular interest is that the dew point cooling system can be used in data centres in various climates with much higher energy efficiency. This creates an opportunity to develop a novel air cooling system for DCs with significantly enhanced energy efficiency and greatly reduced power use, thus extending its applications worldwide. This research will therefore contribute to the realisation of global energy-saving and carbon reduction targets and bring enormous economic, environmental and sustainability benefits.