Development of a General Package for Resolution of Uncertainty-Related Issues in Reservoir Engineering

Abstract

1. Introduction

2. Methodology

2.1. Random Field Generator

2.1.1. Sequential Gaussian Simulation Method

2.1.2. Karhunen–Loève Expansion

2.2. Forward Modeling Methods

2.2.1. Monte Carlo Simulation

2.2.2. Probabilistic Collocation Method

2.3. Inverse Modeling Method

2.4. The Design of GenPack

3. Results and Discussions

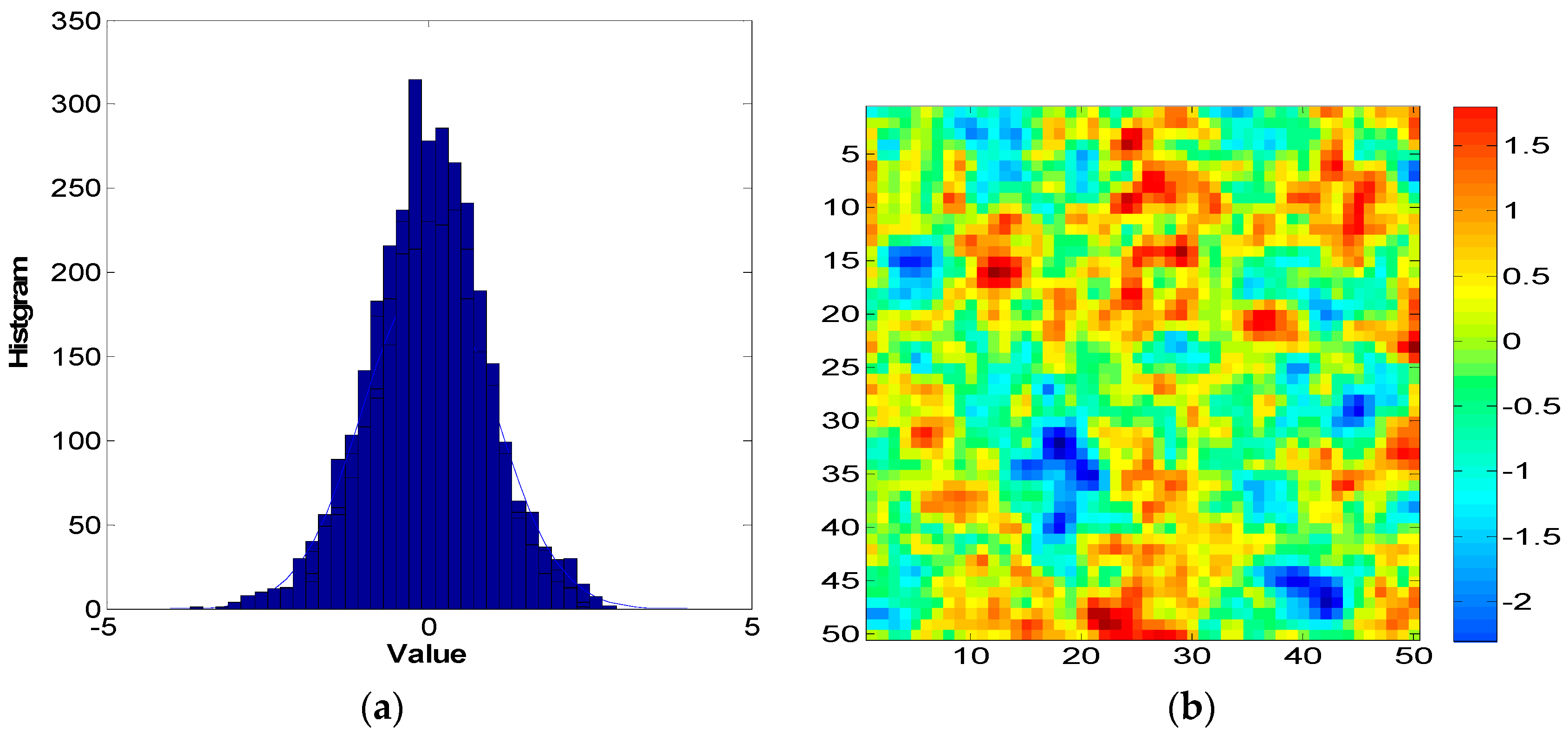

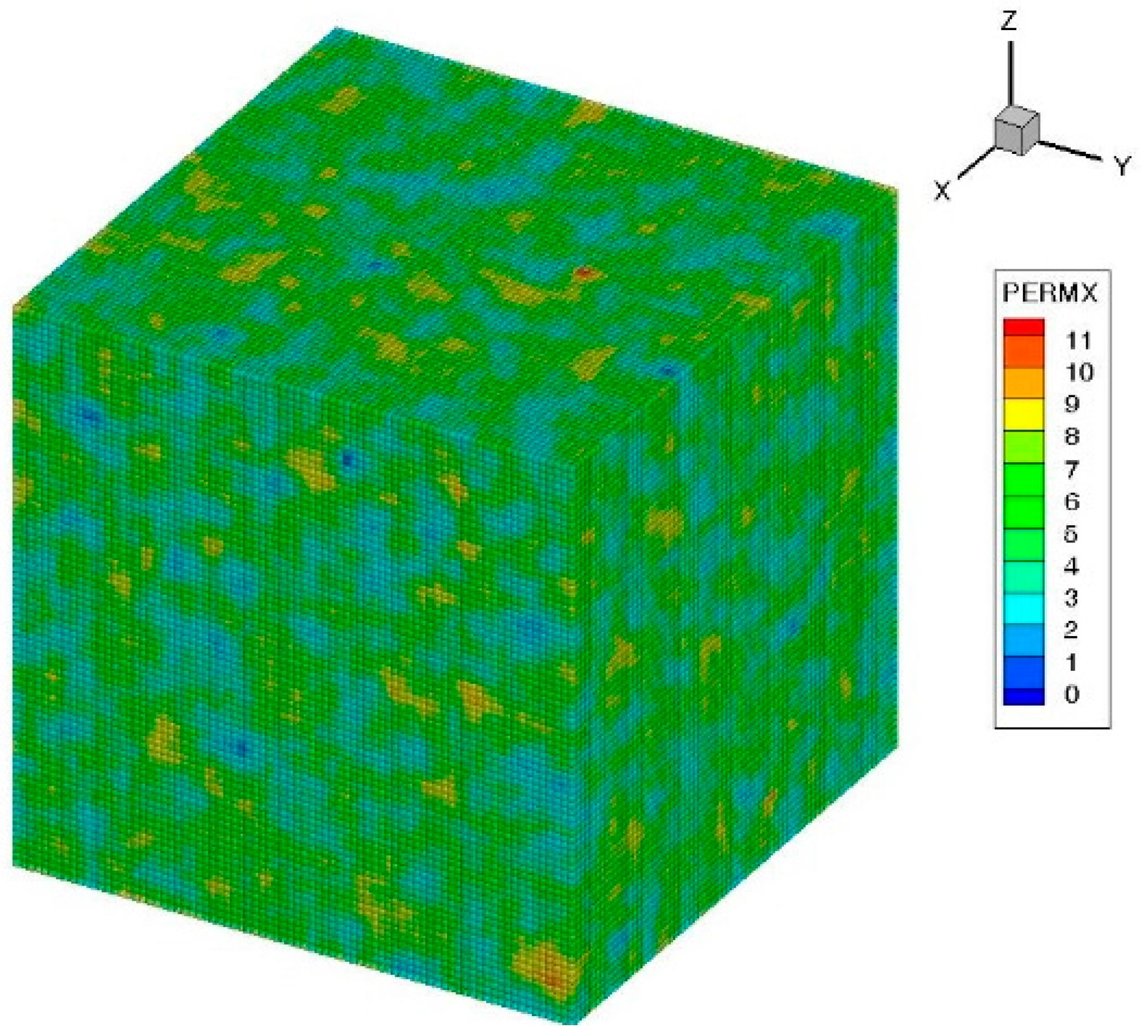

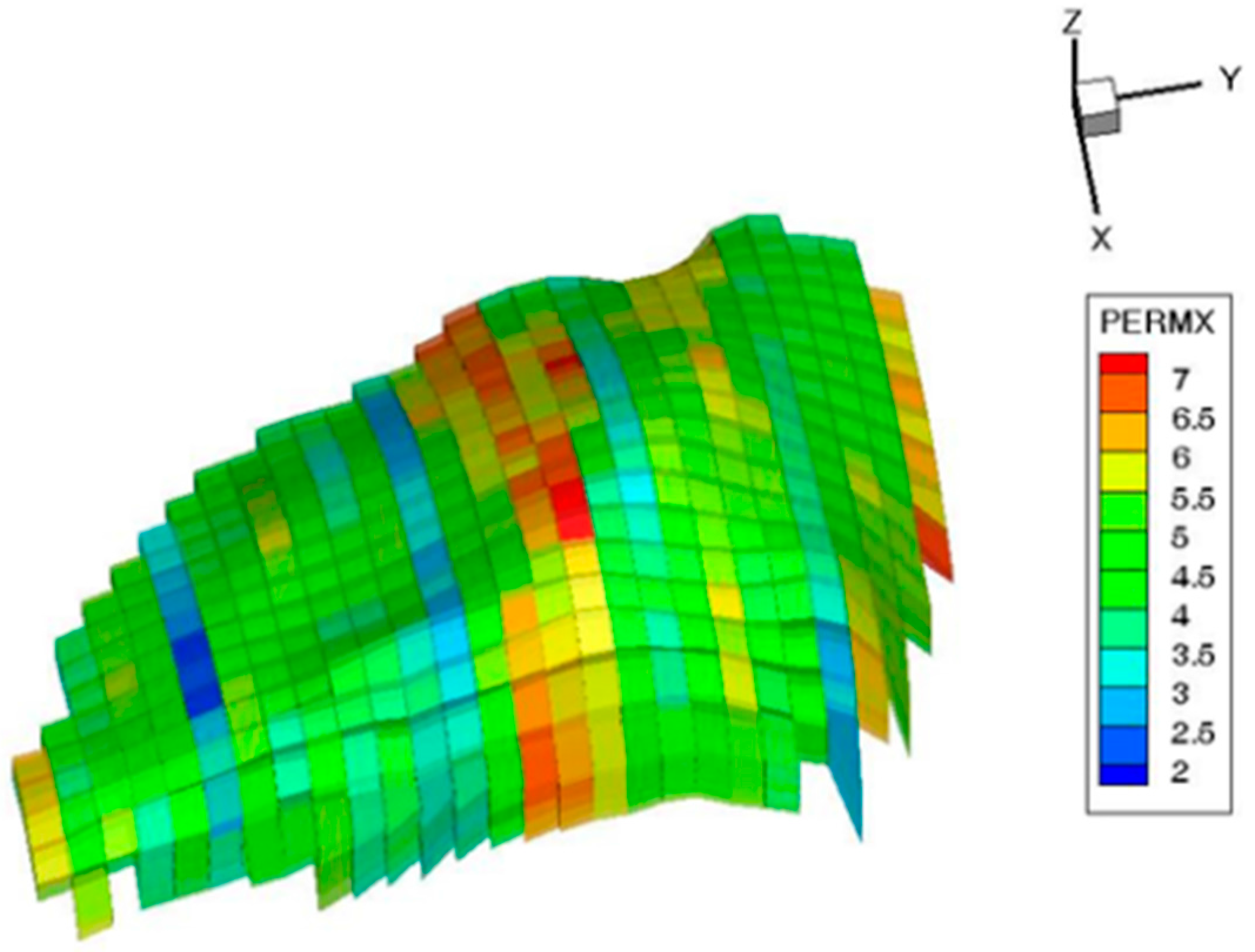

3.1. Random Field Generation

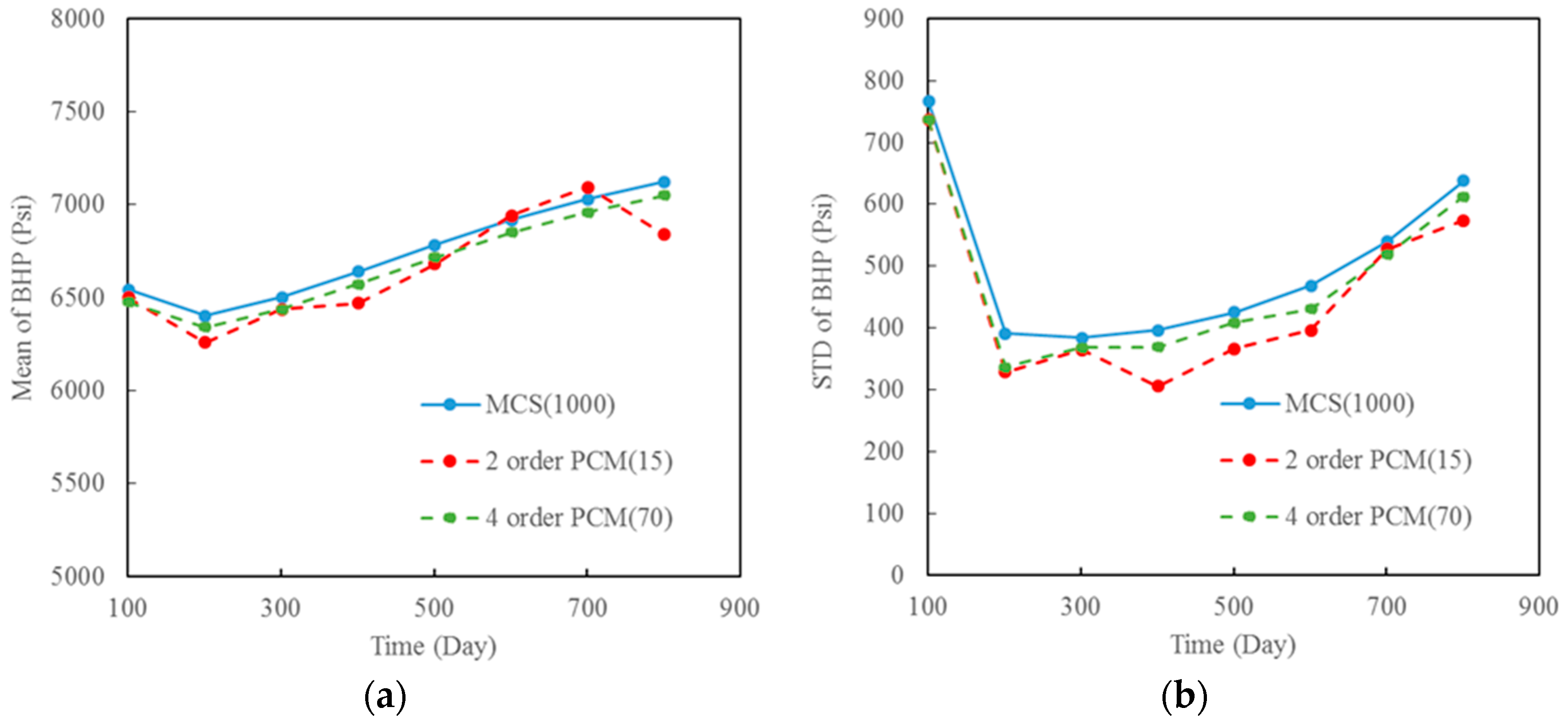

3.2. Uncertainty Quantification

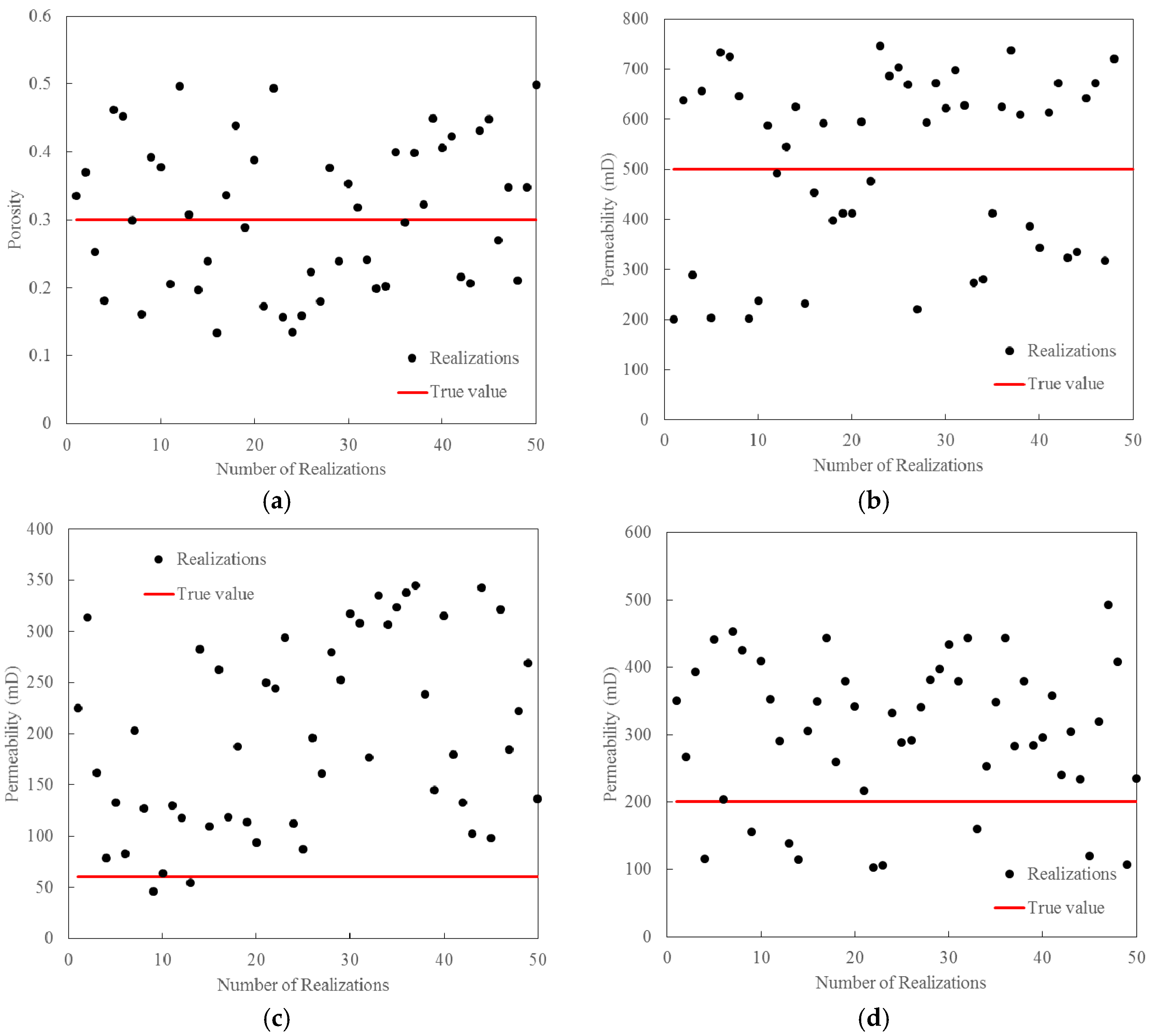

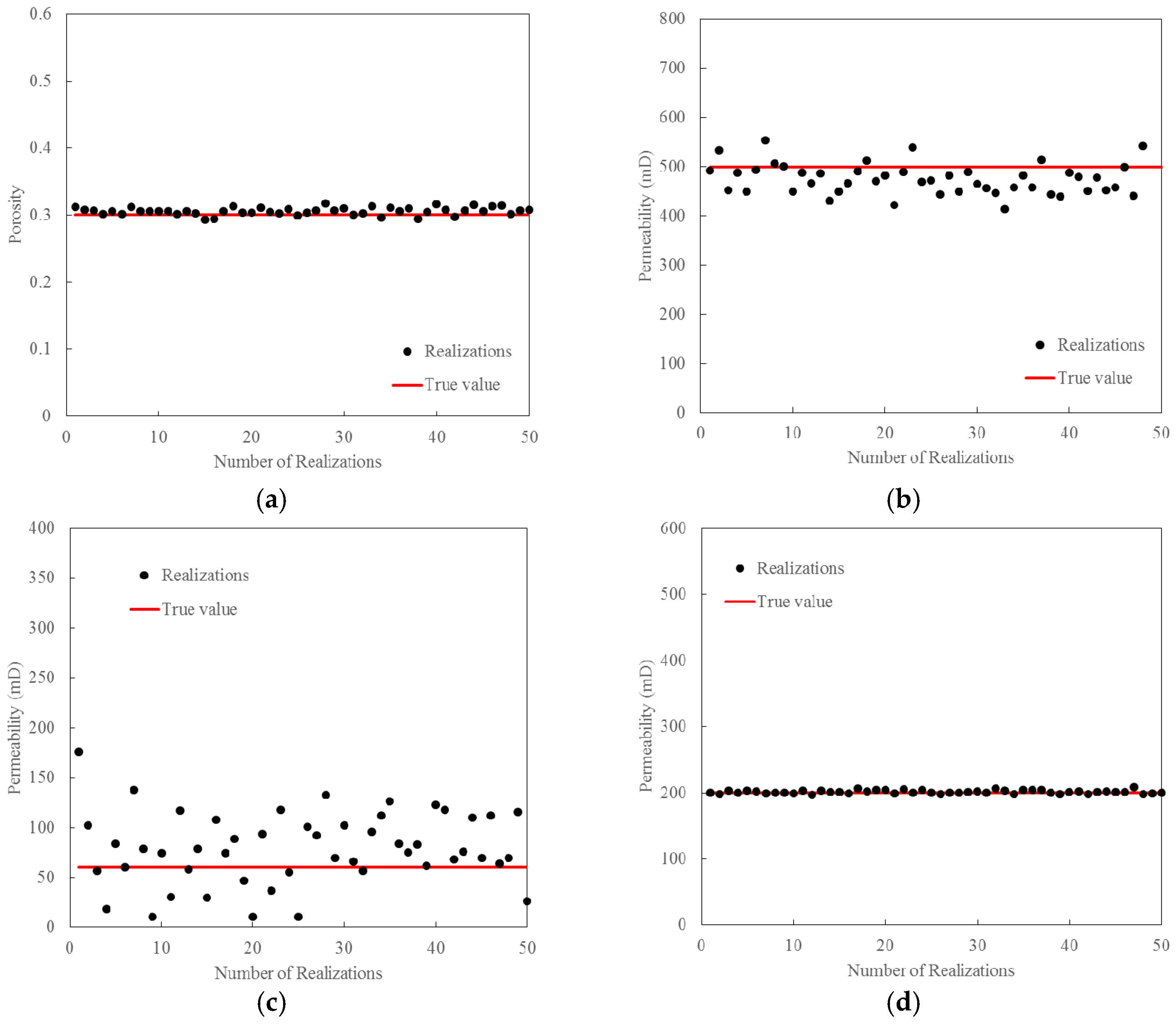

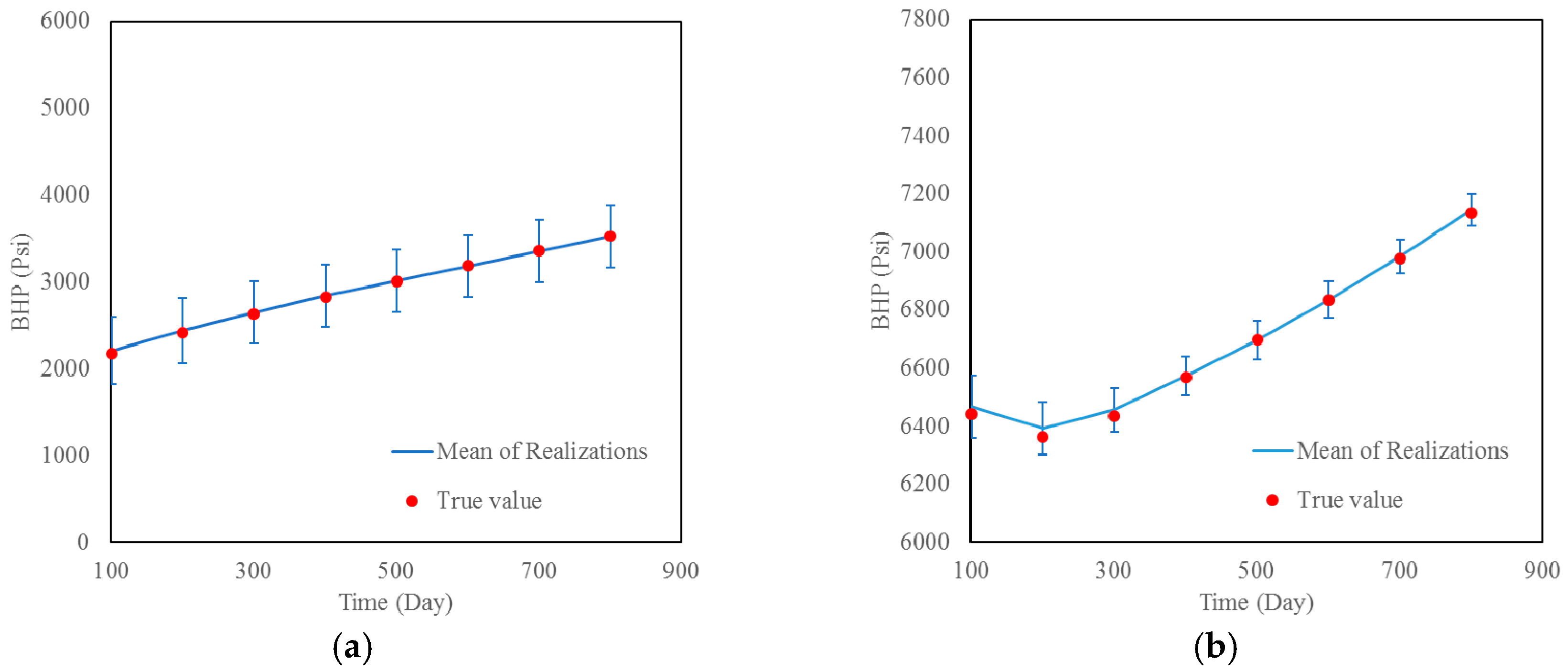

3.3. History Matching

4. Conclusions

- (1) Uncertainty-related issues are extremely important in aiding decision-making in petroleum engineering, and it is imperative to develop an efficient tool to quantify risks and calibrate simulation models. In this study, we designed a comprehensive general package, named GenPack, that integrates uncertainty quantification in forward modeling with uncertainty reduction in inverse modeling. GenPack helps petroleum engineers and researchers investigate uncertainty-related issues effectively in practice. The methods selected for the current version of GenPack are either widely accepted or have performed well in the existing literature. Owing to the modular design of the package, other methods can be incorporated as they become available.

- (2) GenPack allows users to generate Gaussian random fields via either the Karhunen–Loève (KL) expansion or the sequential Gaussian simulation method. To improve computational efficiency, the user should decide how many leading terms to retain in the KL expansion. The sequential Gaussian simulation method was found to be suitable for generating parameter fields in large models with complex geometric boundaries.

- (3) GenPack offers Monte Carlo simulation (MCS) and the probabilistic collocation method (PCM) for uncertainty quantification. MCS is robust, although it requires a large number of samples to guarantee convergence. PCM is an efficient method, and its appropriate order can be investigated during the analysis to balance efficiency and accuracy.

- (4) History matching is an important function of GenPack that allows users to take advantage of observation data. GenPack applies the ensemble Kalman filter (EnKF) method for history matching, and the method was validated with a synthetic case. The results show that, after history matching, prediction accuracy is greatly improved and predictive uncertainty is dramatically reduced.

- (5)

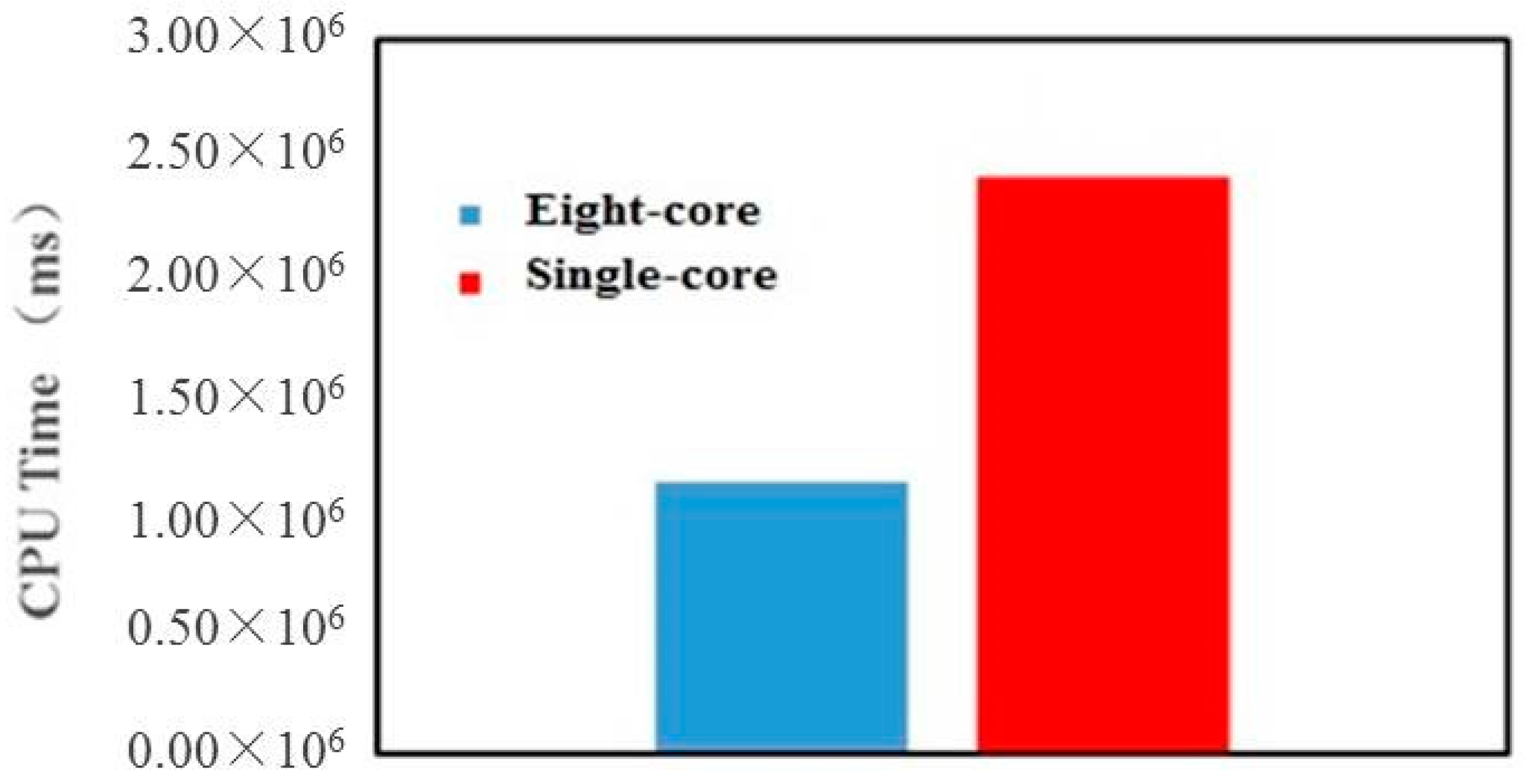

- GenPack is a Monte Carlo-based non-intrusive software package ready to be incorporated with any existing simulator in the petroleum engineering field. Due to the independence characteristics when evaluating each realization in the MC process, the efficiency of the stochastic analysis can be further improved with parallel computing when the required computing source is available.
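Conclusion (2) notes that the number of leading terms retained in the KL expansion governs its cost. A minimal Python sketch of one common way to make that choice follows: keep the smallest number of eigenpairs whose eigenvalues capture a chosen fraction of the total variance. It assumes a discretized 1D domain, an exponential covariance, and a 90% energy threshold; the grid, covariance model, correlation length, mean, and all function names are illustrative assumptions, not GenPack's implementation.

```python
import numpy as np


def kl_basis(x, sigma2=1.0, corr_len=0.2):
    """Eigenpairs of an (assumed) exponential covariance on grid x, largest first."""
    # C_ij = sigma^2 * exp(-|x_i - x_j| / corr_len)
    cov = sigma2 * np.exp(-np.abs(x[:, None] - x[None, :]) / corr_len)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]


def n_terms_for_energy(eigvals, fraction=0.9):
    """Smallest number of leading terms whose eigenvalues sum to `fraction` of the total."""
    cumulative = np.cumsum(eigvals) / np.sum(eigvals)
    return min(int(np.searchsorted(cumulative, fraction)) + 1, len(eigvals))


def kl_realization(eigvals, eigvecs, n_terms, mean=0.0, rng=None):
    """Truncated KL realization: Y = mean + sum_{i < n_terms} sqrt(lambda_i) f_i xi_i."""
    rng = np.random.default_rng(rng)
    xi = rng.standard_normal(n_terms)  # independent standard normal coefficients
    return mean + eigvecs[:, :n_terms] @ (np.sqrt(eigvals[:n_terms]) * xi)


# Hypothetical usage: a log-permeability field on 101 nodes of a unit-length domain
x = np.linspace(0.0, 1.0, 101)
lam, f = kl_basis(x)
m = n_terms_for_energy(lam, 0.9)
y = kl_realization(lam, f, m, mean=np.log(100.0), rng=0)
```

Raising the energy fraction keeps more terms and reproduces the covariance more faithfully at a higher cost; a longer correlation length concentrates the variance in fewer leading terms.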
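The efficiency contrast between MCS and PCM in conclusion (3) can be illustrated on a toy problem. The sketch below assumes a hypothetical one-parameter model y = exp(aξ) with ξ ~ N(0, 1), whose exact mean exp(a²/2) and variance exp(2a²) − exp(a²) are known. In this sketch, PCM places collocation points at the roots of the probabilists' Hermite polynomial one order higher than the expansion and solves for the expansion coefficients so that the expansion matches the model at those points; the model, the value of a, and the function names are assumptions for illustration only.

```python
import math

import numpy as np
from numpy.polynomial import hermite_e as He


def model(xi, a=0.5):
    """Hypothetical forward model: a nonlinear response to one standard normal input."""
    return np.exp(a * xi)


def pcm_moments(order, a=0.5):
    """Mean and variance from a Hermite chaos expansion of degree `order`."""
    # Collocation points: roots of He_{order+1} (the Gauss-HermiteE nodes)
    points, _ = He.hermegauss(order + 1)
    vander = He.hermevander(points, order)  # vander[i, k] = He_k(points[i])
    coeffs = np.linalg.solve(vander, model(points, a))  # expansion matches model at points
    mean = coeffs[0]
    # E[He_k^2] = k! for a standard normal variable
    var = sum(coeffs[k] ** 2 * math.factorial(k) for k in range(1, order + 1))
    return mean, var, order + 1  # last value: number of model runs


def mcs_moments(n_samples, a=0.5, seed=0):
    """Sample mean and variance from plain Monte Carlo simulation."""
    xi = np.random.default_rng(seed).standard_normal(n_samples)
    y = model(xi, a)
    return y.mean(), y.var(ddof=1), n_samples


a = 0.5
exact_mean = math.exp(a**2 / 2)
exact_var = math.exp(2 * a**2) - math.exp(a**2)
pcm_mean, pcm_var, pcm_runs = pcm_moments(3, a)
mcs_mean, mcs_var, mcs_runs = mcs_moments(100_000, a)
```

Raising `order` adds collocation points (model runs) and refines the variance estimate, which mirrors the efficiency-accuracy trade-off described in conclusion (3).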
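Conclusion (4) refers to the EnKF. A minimal sketch of a single stochastic EnKF analysis step (with perturbed observations) applied to a parameter ensemble follows. It assumes that the simulated data for each member have already been obtained from the forward model, and it omits practical features such as localization and inflation; the array layout, names, and the linear toy model in the usage lines are assumptions, not GenPack's code.

```python
import numpy as np


def enkf_update(M, D, d_obs, R, rng=None):
    """One stochastic EnKF analysis step with perturbed observations.

    M     : (n_m, N) ensemble of model parameters
    D     : (n_d, N) simulated data for each ensemble member
    d_obs : (n_d,)   observed data
    R     : (n_d, n_d) observation-error covariance
    """
    rng = np.random.default_rng(rng)
    n_ens = M.shape[1]
    dM = M - M.mean(axis=1, keepdims=True)
    dD = D - D.mean(axis=1, keepdims=True)
    C_md = dM @ dD.T / (n_ens - 1)  # parameter-data cross-covariance
    C_dd = dD @ dD.T / (n_ens - 1)  # data auto-covariance
    # Perturbed observations: d_j = d_obs + eps_j with eps_j ~ N(0, R)
    eps = rng.multivariate_normal(np.zeros(len(d_obs)), R, size=n_ens).T
    innovation = d_obs[:, None] + eps - D
    # M_a = M + C_md (C_dd + R)^{-1} (d_j - D_j), computed with a linear solve
    return M + C_md @ np.linalg.solve(C_dd + R, innovation)


# Hypothetical usage: linear forward model d = G m with noise-free synthetic data
rng = np.random.default_rng(1)
G = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
m_true = np.array([1.0, -0.5])
d_obs = G @ m_true
R = 0.01 * np.eye(3)
M_prior = rng.standard_normal((2, 200))  # prior ensemble drawn from N(0, I)
M_post = enkf_update(M_prior, G @ M_prior, d_obs, R, rng=2)
```

In a reservoir setting, `D` would come from running the simulator on each member up to the current assimilation time, and the update would be repeated as new production data arrive.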
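Conclusion (5) relies on the realizations being independent. The sketch below runs realizations concurrently with Python's `concurrent.futures` and gives each realization its own child seed from `numpy.random.SeedSequence`, so the results do not depend on how many workers are used. The `run_realization` toy response (a harmonic-average permeability of a hypothetical 1D column) and the other names are assumptions standing in for a real simulator run, not part of GenPack.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def run_realization(seed_seq):
    """Hypothetical stand-in for one forward simulation of a sampled realization."""
    rng = np.random.default_rng(seed_seq)
    log_perm = rng.normal(np.log(100.0), 0.5, size=50)  # assumed log-permeability column
    perm = np.exp(log_perm)
    # Toy response: harmonic-average permeability of the column
    return perm.size / np.sum(1.0 / perm)


def monte_carlo(n_realizations, max_workers=4, root_seed=2017):
    """Evaluate independent realizations concurrently; output order matches input order."""
    # One independent child seed per realization, so results do not depend on scheduling
    children = np.random.SeedSequence(root_seed).spawn(n_realizations)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outputs = list(pool.map(run_realization, children))
    return np.array(outputs)


serial = monte_carlo(20, max_workers=1)
parallel = monte_carlo(20, max_workers=4)
```

Threads suffice when each worker mostly waits on an external simulator process; for CPU-bound models written in pure Python, `ProcessPoolExecutor` would be the usual substitute.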

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | Layer | Min | Max |
|---|---|---|---|
| Porosity | 1–3 | 0.1 | 0.5 |
| Permeability | 1 | 200 mD | 750 mD |
| Permeability | 2 | 30 mD | 150 mD |
| Permeability | 3 | 100 mD | 500 mD |

| Parameter | Layer | True Value |
|---|---|---|
| Porosity | 1–3 | 0.3 |
| Permeability | 1 | 500 mD |
| Permeability | 2 | 60 mD |
| Permeability | 3 | 200 mD |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xue, L.; Dai, C.; Wang, L. Development of a General Package for Resolution of Uncertainty-Related Issues in Reservoir Engineering. Energies 2017, 10, 197. https://doi.org/10.3390/en10020197
