1. Introduction

Cloud computing is a large-scale distributed computing paradigm in which customers can access elastic resources on demand over the Internet on a pay-as-you-go basis [1,2,3]. Cloud computing provides services at three levels, namely Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), and supports four deployment models, namely private, community, public, and hybrid clouds [4]. As storage requirements grow, inefficient use of resources leads to high energy consumption [5,6]. Reports show that energy consumption accounts for a significant proportion of the total cost of data centers [7], and energy efficiency has become a challenge in data center management [8,9,10,11]. In cloud computing, virtualization is adopted for abstraction and encapsulation, so that the underlying infrastructure can be unified as a pool of resources and multiple applications can run simultaneously in isolated virtual machines (VMs) on the same server [12]. This allows VMs to be consolidated onto fewer servers and provides an opportunity for energy saving, because an active but idle server often still consumes between 50% and 70% of the power of a fully utilized server [13]. However, servers in data centers operate at 10% to 50% utilization most of the time and rarely reach 100% [14]. This low utilization is often caused by over-provisioning of resources, since data centers are designed to support the peak load [15].
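The energy argument above can be made concrete with a linear power model, in which an active server pays a fixed idle cost plus a part proportional to its utilization. The sketch below is illustrative only: the linear form, the peak power `P_MAX`, and the idle ratio `IDLE_FRACTION` (picked inside the 50% to 70% range cited above) are assumptions, not values from this paper.

```python
# Minimal sketch of a linear server power model. P_MAX and IDLE_FRACTION are
# hypothetical values; IDLE_FRACTION is chosen inside the 50%-70% range above.
P_MAX = 250.0          # peak power of one fully utilized server, in watts
IDLE_FRACTION = 0.6    # assumed ratio of idle power to peak power


def server_power(utilization: float) -> float:
    """Power drawn by an active server at CPU utilization in [0, 1]."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must lie in [0, 1]")
    p_idle = IDLE_FRACTION * P_MAX
    # Idle power is paid as soon as the server is on; the rest scales with load.
    return p_idle + (P_MAX - p_idle) * utilization


def total_power(utilizations: list[float]) -> float:
    """Total power of the active servers; sleeping servers are assumed to draw 0 W."""
    return sum(server_power(u) for u in utilizations)


# The same aggregate load (1.6 servers' worth of work) spread over four
# servers versus consolidated onto two, with the other two put to sleep.
spread = total_power([0.4, 0.4, 0.4, 0.4])
consolidated = total_power([0.8, 0.8])
print(f"spread: {spread:.0f} W, consolidated: {consolidated:.0f} W")
# prints "spread: 760 W, consolidated: 460 W"
```

Under these assumptions, every server that can be switched off saves its whole idle cost, which is why consolidation pays off even though the total work is unchanged.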

When a request arrives, the data center needs to create a VM and decide on which server to place it. However, the VM workload often varies during run time [16]. Two strategies are commonly used: static allocation and dynamic allocation. Static resource allocation keeps the planned assignment unless an exception occurs. Provisioning for the peak workload is simple but inefficient and leads to low resource utilization. To reduce resource waste, Speikamp et al. [17] optimized the assignment based on daily workload cycles. Setzer et al. [18] also modeled the problem as a capacity-constrained optimization and adopted a mathematical method to consolidate the VMs on a minimum number of servers. To increase resource utilization, some researchers have proposed resource reservation methods, in which the resource demand pattern of each VM is modeled and a consolidation algorithm based on the stationary distribution of the VM patterns is then used for assignment. In [19], a Markov chain is first used to capture the burstiness of the workload, and the VMs are then allocated based on their workload patterns. All these static resource allocation methods assume that the workload patterns of servers are known and that the VM set is stable. However, VMs usually present quite different resource demands and execution times [20], so static allocation methods may not work well in these cases.
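To illustrate how a reservation method can build on the stationary distribution of a VM workload pattern, the sketch below models each VM as a two-state (normal/burst) Markov chain and reserves capacity for only as many simultaneous bursts as needed to keep the overflow probability below a bound. The function names, the parameter values, and the assumption that VMs burst independently are hypothetical; this is not the exact procedure of [19].

```python
from math import comb

# Sketch of a reservation-style estimate built on a two-state (normal/burst)
# Markov chain per VM. All names and parameter values are hypothetical, and
# VMs are assumed to burst independently of one another.


def stationary_burst_probability(p_on: float, p_off: float) -> float:
    """Long-run fraction of time a VM spends in the burst state.

    p_on = P(normal -> burst) and p_off = P(burst -> normal) per time step;
    for a two-state chain the stationary probability of "burst" is
    p_on / (p_on + p_off).
    """
    if p_on + p_off <= 0:
        raise ValueError("the chain must be able to change state")
    return p_on / (p_on + p_off)


def bursts_to_reserve(n_vms: int, pi_burst: float, eps: float) -> int:
    """Smallest k such that P(more than k VMs burst at once) <= eps (binomial model)."""
    cdf = 0.0
    for k in range(n_vms + 1):
        cdf += comb(n_vms, k) * pi_burst**k * (1 - pi_burst) ** (n_vms - k)
        if 1.0 - cdf <= eps:
            return k
    return n_vms  # reached only through floating-point round-off


def reserved_capacity(n_vms: int, base: float, peak: float, k: int) -> float:
    """Capacity covering every VM at its base demand plus k simultaneous bursts."""
    return n_vms * base + k * (peak - base)


pi_b = stationary_burst_probability(p_on=0.1, p_off=0.4)   # 0.2
k = bursts_to_reserve(n_vms=10, pi_burst=pi_b, eps=0.05)    # 4
cap = reserved_capacity(10, base=0.1, peak=0.3, k=k)
print(f"reserve {cap:.2f} vs peak provisioning {10 * 0.3:.2f}")
```

The sketch also shows the limitation noted above: `p_on`, `p_off`, and the number of co-located VMs must be known in advance and stay fixed.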

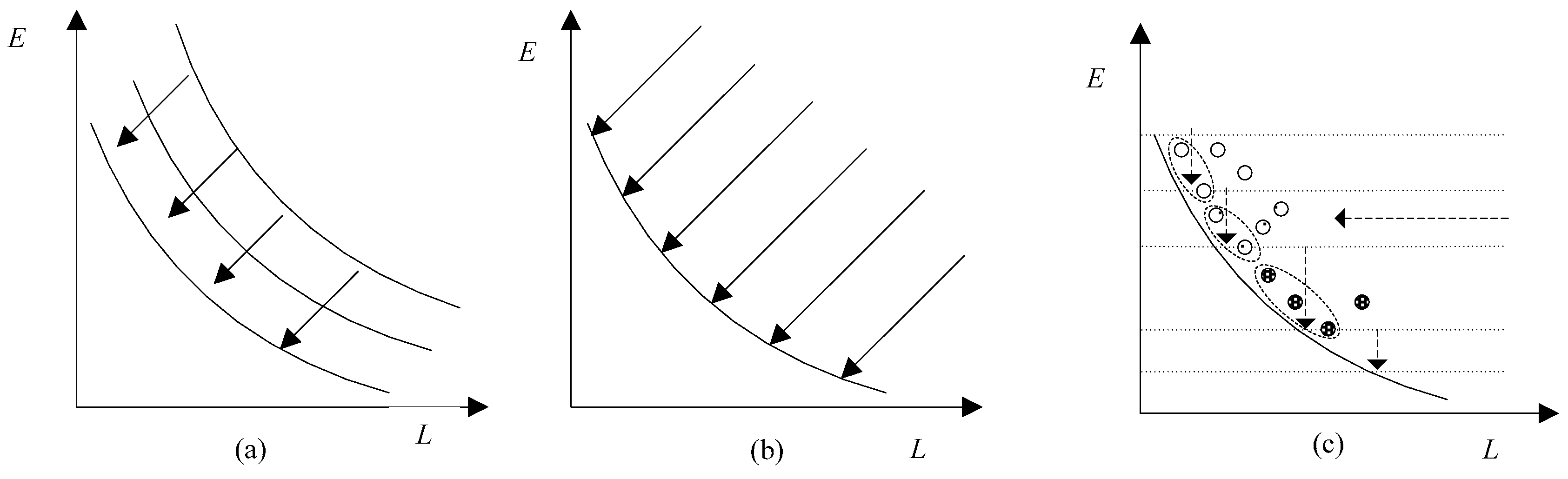

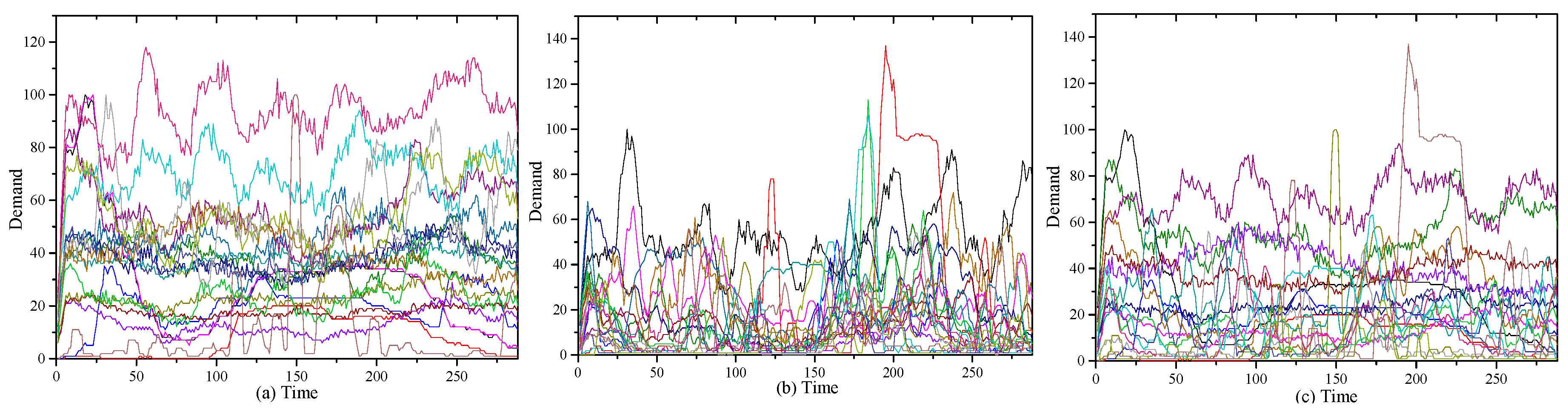

In recent years, the dynamic allocation of VMs with live migration has received significant attention. The VMs are first assigned to servers according to a normal workload, and migrations are then performed when servers become overloaded or underutilized. Idle servers can either respond to incremental workload or be switched to sleep mode. Thus, the VMs are assigned to the minimum number of active servers to reduce energy consumption while meeting QoS requirements. Unlike static resource allocation, dynamic resource allocation methods do not require planning; the number of active servers is adjusted dynamically. The threshold-based method is simple and commonly used. In commercial and open-source approaches such as VMware’s Distributed Resource Management [21], VM migrations are performed when resource utilization violates a predefined threshold. However, static thresholds are not efficient for non-stationary resource usage patterns. Beloglazov et al. [22] proposed an adaptive threshold method based on historical data and adopted a modified best-fit decreasing (BFD) method to dynamically allocate VMs. To reduce the number of unnecessary migrations, prediction methods have been introduced to forecast server loads or VM resource requirements. The workloads of different applications are classified using k-means in [23,24]. Zhang et al. [25] considered the heterogeneity of the workload and implemented resource prediction according to application characteristics; the VMs are then allocated by a standard convex optimization method and a container-based heuristic method. Recently, Verma et al. [26] proposed a dynamic resource prediction method based on features extracted from users’ data and allocated the VMs with a best-fit decreasing algorithm. In addition to the above deterministic algorithms for VM assignment, probabilistic methods have also been proposed for large-scale problems with thousands of servers. In [15], Mastroianni et al. proposed a probabilistic consolidation method, ecoCloud, which performs VM assignment and migration according to the local information of each server.
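A static-threshold trigger combined with best-fit decreasing placement can be sketched as follows. The sketch assumes one-dimensional, normalized CPU demand, a hypothetical fixed upper threshold of 80%, and a hypothetical eviction policy (smallest VMs first). "Best fit" here means the server left with the least slack; the modified BFD of [22] instead selects the server with the least increase in power, so this is not a reproduction of that method.

```python
from dataclasses import dataclass, field

UPPER = 0.8  # hypothetical static upper utilization threshold
TOL = 1e-9   # tolerance for floating-point capacity comparisons


@dataclass
class Server:
    name: str
    capacity: float = 1.0                                 # normalized CPU capacity
    vms: dict[str, float] = field(default_factory=dict)  # VM name -> CPU demand

    def load(self) -> float:
        return sum(self.vms.values())

    def overloaded(self, upper: float = UPPER) -> bool:
        return self.load() > upper * self.capacity + TOL


def best_fit_decreasing(demands: dict[str, float], servers: list[Server],
                        upper: float = UPPER) -> dict[str, str]:
    """Place VMs largest first, each on the feasible server left with the least slack."""
    placement: dict[str, str] = {}
    for vm, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        best, best_slack = None, float("inf")
        for s in servers:
            slack = upper * s.capacity - s.load() - demand
            if -TOL <= slack < best_slack:
                best, best_slack = s, slack
        if best is None:
            raise RuntimeError(f"no server can host {vm}")
        best.vms[vm] = demand
        placement[vm] = best.name
    return placement


def relieve_overloads(servers: list[Server], upper: float = UPPER) -> dict[str, str]:
    """Threshold trigger: evict the smallest VMs from each overloaded server
    until it is back under the threshold, then re-place them with BFD."""
    migrations: dict[str, str] = {}
    for src in servers:
        evicted: dict[str, float] = {}
        while src.overloaded(upper):
            vm = min(src.vms, key=src.vms.get)
            evicted[vm] = src.vms.pop(vm)
        if evicted:
            targets = [s for s in servers if s is not src]
            migrations.update(best_fit_decreasing(evicted, targets, upper))
    return migrations


servers = [Server("A"), Server("B"), Server("C")]
print(best_fit_decreasing({"vm1": 0.5, "vm2": 0.3, "vm3": 0.4, "vm4": 0.2}, servers))
# {'vm1': 'A', 'vm3': 'B', 'vm2': 'A', 'vm4': 'B'}
servers[0].vms["vm1"] = 0.7  # workload burst on server A pushes it past the threshold
print(relieve_overloads(servers))
# {'vm2': 'C'}
```

Note that `relieve_overloads` simply calls the same placement routine used for initial assignment: re-allocating a migrated VM is again a choice of server.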

In addition to traditional heuristic and probabilistic methods, evolutionary algorithms have also been widely used in dynamic virtual machine placement (DVMP) because of their good performance on complex optimization problems [27,28,29]. In [30], Mi et al. proposed a genetic-algorithm-based approach to adaptively adjust the VMs according to time-varying requirements. In [31], Ashraf et al. employed ant colony optimization (ACO) to find a VM migration plan to consolidate VMs on underutilized servers. In [32], Farahnakian et al. first assigned the VMs with a best-fit algorithm and then applied an ant colony system for VM migration when workload variation was observed. A VM migration is allowed only if it reduces the energy consumption and the number of VM migrations. Thus, the method may not work well in situations where many VMs present short-term bursts and a large number of servers are overloaded.

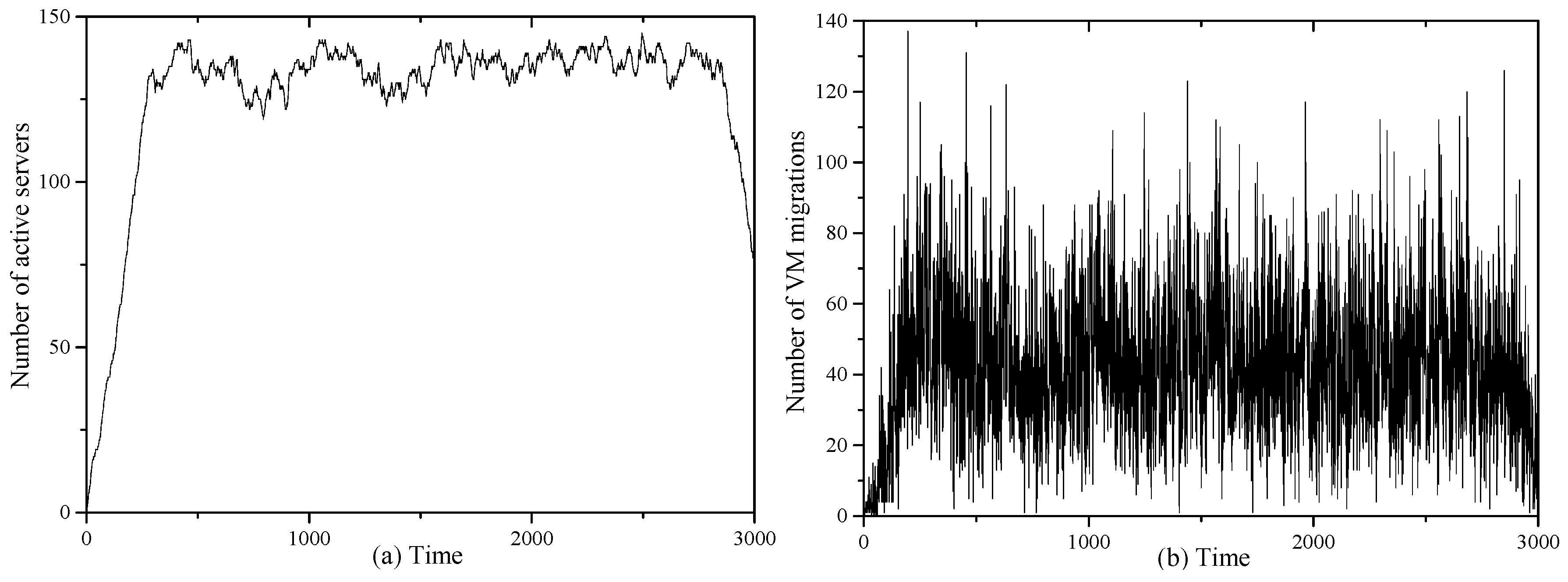

The approaches mentioned above all deal with VM assignment and VM re-allocation separately. However, these methods are not efficient when new application requests and migrations occur simultaneously. Moreover, VM re-allocation amounts to finding a new server on which to place the corresponding VM, which is the same decision as the initial assignment. Thus, a unified algorithm can be used for both the assignment and the re-allocation of VMs. In this paper, we formulate the dynamic virtual machine placement (DVMP) problem as a multiobjective combinatorial optimization problem and design an online algorithm and an ant colony system (ACS)-based unified algorithm, termed UACS, to consolidate VMs, saving energy and reducing the number of migrations while meeting QoS requirements. This work extends our earlier work on VM assignment at a fixed point in time [33]. In UACS, multiple ants construct solutions concurrently under the guidance of pheromone and heuristic information. A dynamic pheromone deposition method is designed to support dynamic VM assignment and migration, and heuristic information is introduced into solution construction to reduce the number of VM migrations. The QoS requirements are formalized via Service Level Agreements (SLAs). Experiments with large-scale random workloads and real workload traces are carried out to evaluate the performance. For comparison, we implement the traditional heuristic approach BFD [22], the probabilistic method ecoCloud [15], and the recently proposed ACS-based algorithm ACS-VM [32]. Compared with these heuristic, probabilistic, and evolutionary algorithms, the proposed UACS achieves competitive performance in terms of energy consumption, the number of VM migrations, and the number of SLA violations.
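How an ant in an ant colony system combines pheromone and heuristic information can be sketched with the standard ACS pseudo-random proportional rule and local pheromone update (from the original ACS of Dorigo and Gambardella), applied here to choosing a server for each VM. The heuristic that prefers servers ending up fuller, the single CPU dimension, and all parameter values are assumptions for illustration; the sketch does not implement the UACS pheromone deposition or its migration-aware heuristic, and it omits the global update and the loop over multiple ants.

```python
import random

# Sketch of the standard ACS state-transition rule applied to choosing a server
# for each VM. The heuristic (prefer servers that end up fuller) and all
# parameter values are hypothetical, not the UACS definitions.


def choose_server(vm, candidates, tau, eta, beta, q0, rng):
    """Pseudo-random proportional rule: exploit with probability q0, else roulette."""
    scores = {s: tau[(vm, s)] * eta[s] ** beta for s in candidates}
    if rng.random() < q0:
        return max(scores, key=scores.get)          # exploitation
    r = rng.random() * sum(scores.values())         # biased exploration
    acc = 0.0
    for s, w in scores.items():
        acc += w
        if r <= acc:
            return s
    return s  # guard against floating-point round-off


def construct_solution(demands, capacity, tau, rng, beta=2.0, q0=0.9,
                       rho=0.1, tau0=0.01):
    """One ant assigns every VM (largest first) to a server with spare capacity."""
    load = {s: 0.0 for s in capacity}
    assignment = {}
    for vm, d in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        feasible = [s for s in capacity if load[s] + d <= capacity[s] + 1e-9]
        if not feasible:
            raise RuntimeError(f"no feasible server for {vm}")
        # Heuristic desirability: utilization the server would reach (favors consolidation).
        eta = {s: (load[s] + d) / capacity[s] for s in feasible}
        s = choose_server(vm, feasible, tau, eta, beta, q0, rng)
        # ACS local pheromone update: tau <- (1 - rho) * tau + rho * tau0.
        tau[(vm, s)] = (1 - rho) * tau[(vm, s)] + rho * tau0
        load[s] += d
        assignment[vm] = s
    return assignment


demands = {"vm1": 0.5, "vm2": 0.3, "vm3": 0.4, "vm4": 0.2}
capacity = {"A": 1.0, "B": 1.0, "C": 1.0}
tau = {(vm, s): 0.01 for vm in demands for s in capacity}   # tau0 everywhere
print(construct_solution(demands, capacity, tau, random.Random(0), q0=1.0))
# {'vm1': 'A', 'vm3': 'A', 'vm2': 'B', 'vm4': 'B'}
```

With `q0=1.0` the ant is fully greedy; lowering `q0` lets it explore alternative servers with probability proportional to pheromone times heuristic, which is what allows a colony of ants to find different consolidations.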

The remainder of this paper is organized as follows. Section 2 describes the DVMP problem, performance metrics, and the ant colony system in detail. Section 3 develops the proposed algorithm, UACS. Experiments are undertaken to evaluate the performance of UACS in Section 4. Finally, conclusions are drawn in Section 5.