1. Introduction

Wind energy is a clean resource, and one that is growing worldwide, since it is the most efficient renewable energy source [1,2]. Wind turbines present structural health problems, mainly in Wind Turbine Blades (WTB) [3]. Delamination is one of the most common problems in composite materials, caused by the separation of their layers or by the detachment of their adhesive bonds. Low-speed impacts under working conditions can create a visible fault on a WTB [4,5]. The microstructural fault grows under cyclic fatigue loads, and it can degrade the rigidity and strength of the composite [6]. Delamination can also be generated by an error in the manufacturing process.

State space models are employed to predict the growth of delamination, using models based on stress/strain, fracture mechanics, cohesive zones, or the extended finite element method [7]. Hoon Sohn et al. used a wavelet-based signal processing technique, together with an active sensing system, to detect delamination in composite structures in near-real-time [8].

Smart blades are currently used in Structural Health Monitoring (SHM) systems applied to WTB, using sensors in the blades to monitor the condition of the WTB. McGugan et al. propose a technique for measuring and evaluating the structural integrity by introducing a new concept of tolerance to damage. It involves the life cycle of a blade, i.e., design, operation, maintenance, repair, and recycling. Pattern recognition is used to establish a “damages map” that evaluates the SHM, allowing an efficient operation of the wind turbine in terms of load relief, limited maintenance, and repairs [9,10]. Dynamic models are also employed in SHM to detect instantaneous structural changes online [11,12]. Sohn et al. propose an approach composed of four steps [13]: operational evaluation; data acquisition and cleansing; feature extraction and data reduction; and statistical model development. Artificial Neural Network (ANN) models produce accurate results in terms of fault diagnosis, with advantages such as speed, simplicity, and robustness [14]. Rasit Ata presents a review of ANN applied in wind energy systems [15]. Bork et al. [16,17], Yam et al. [18], and Su and Ye [19] have proposed different approaches considering Lamb waves and ANN.

Ultrasonic Lamb waves have been employed in this paper to detect and diagnose faults. Lamb waves can detect internal and surface faults in WTB [20,21]. A wide range of potential defects has been studied in the literature using different algorithms [22,23]. In this paper, Discrete Wavelet Transforms (DWT) are applied to filter signals. DWT is effective for signal denoising, filtration, compression, and feature extraction [24]. Correct signal pre-processing, or an appropriate selection of features, can allow a simple classifier to obtain excellent results [25]. The Daubechies wavelet family was employed in this paper. It has been demonstrated that it is sensitive to sudden changes [26]. The normalization of the signals regarding the environmental and operational conditions is a key issue in avoiding false diagnosis [27]. The mean value of the time series is subtracted to remove the direct current offset from the signal. The result is divided by the standard deviation of the signal to normalize varying amplitudes. Gómez et al. developed a similar pattern recognition approach for diagnosing ice on WTB by employing Daubechies wavelets [21,28].

The main purposes of Feature Extraction (FE) and Feature Selection (FS) in this paper are to reduce dimensionality, and to increase computational performance and classifier accuracy. The Auto-Regressive (AR) method is proposed for FE. The AR model has high sensitivity to damage features [29,30,31]. This technique has been employed in time series analysis and predictive models for fault detection [32,33], but it has not been studied enough for pattern recognition. Yao et al. applied statistical pattern recognition algorithms using AR FE techniques, based on the AR spectral model and AR residuals, with acceleration data [34]. Nardi et al. studied delamination by means of AR models [35]. Figueiredo et al. [36] propose an AR-based approach using acceleration time series in order to distinguish variations in characteristics related to damage from those related to operational and environmental effects. In this paper, the number of AR model parameters is selected. A model of too high an order can fit the dataset, but cannot be generalized to other datasets. A model of too low an order may not adequately represent the physical dynamics of the system.

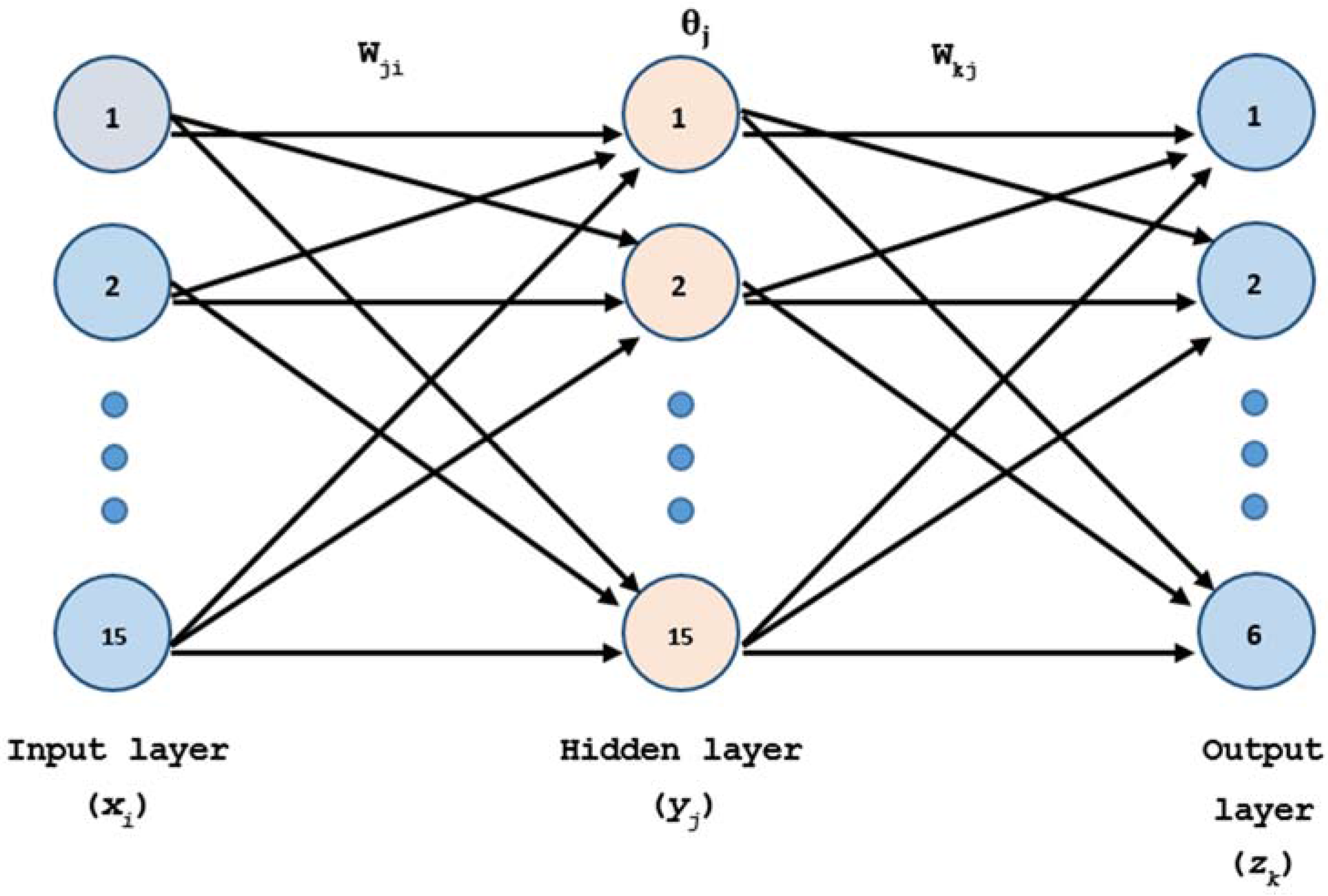

FS is employed to set the architecture of the ANN. The number of inputs corresponds to the number of features selected, the number of outputs to the number of classes (the conditions to be identified), and the number of nodes in the hidden layer depends on the features and classes.

Akaike’s Information Criterion (AIC) [37], the Final Prediction Error (FPE) criterion [38], the Partial Autocorrelation Function (PAF) [39], Root Mean Square (RMS) [40], and Singular Value Decomposition (SVD) [41] have been employed in this paper to obtain the most suitable AR model by choosing the number of parameters. The results of this work suggest that the optimal order is from 15 to 30.

The ultrasonic waves are analyzed by signal processing and classifiers by Machine Learning (ML) and ANN to determine the degree of delamination. The experiments consider six levels of delamination. This study analyses different classifiers of ML and ANN to obtain the best success rate.

The classifiers used to identify the scenarios are quadratic discriminant analysis [

42], k-nearest neighbors [

43], and decision trees [

44]. The confusion matrix is employed to evaluate the classification using the receiver operating characteristic analysis by: recall, specificity, precision and

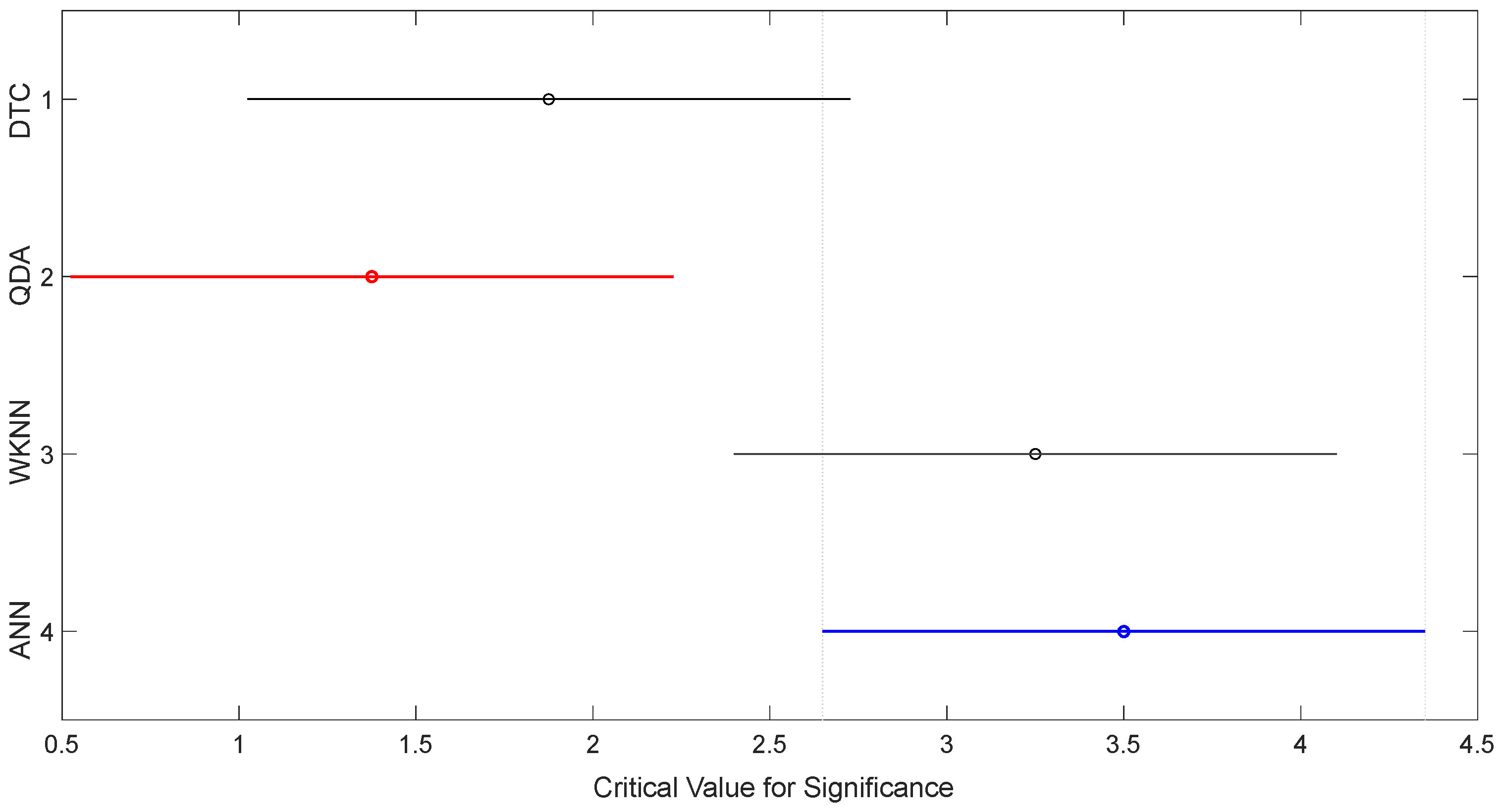

F-score. The conventional methods to establish the average performance in all categories were macro and micro average. The recommendations by Demšar, and Garcia and Herrera have been used to compare the classifiers and analyze their results. The Friedman test has been used to test the null hypothesis that all classifiers achieve the same average. The Bonferroni–Dunn test has been applied to determine significant differences between the top-ranked classifier and the next one. The Holm test was applied to contrast the results. When this approach was used, all the scenarios showed a high level of accuracy.

The main novelty is the application of the abovementioned approach to fault detection and diagnosis in WTB using guided waves, an application that has not been found in the literature for detecting delamination in WTB.

2. Approach for Delamination, Detection, and Diagnosis in WTB

The ultrasonic signal studied should be conditioned and denoised to train the classifiers properly [45]. The wavelet transform has been used in this paper to perform signal denoising [46,47].

The inputs and training patterns are critical in the design of the classifiers, because they determine the performance of the network and its architecture. It is necessary to use a technique that reduces the number of inputs while maintaining the characteristic information of the signal. The feature coefficients of each ultrasonic signal are extracted using the AR model by the Yule–Walker method [48].

The classifiers considered in ML are: Decision Tree (DT), Quadratic Discriminant Analysis (QDA), K-Nearest Neighbors (KNN), and the Multilayer Perceptron (MLP) neural network. The process is shown schematically in Figure 1.

The wavelet threshold denoising method is applied by employing a multilevel 1D wavelet analysis with the Daubechies family [49,50]. The wavelet decomposition structure of the signal is extracted. The threshold for the denoising is obtained by the wavelet coefficients selection rule using a penalization method provided by Birgé–Massart [51,52].
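As a rough illustration of wavelet threshold denoising, the following Python sketch decomposes a signal with the four-tap Daubechies filter, shrinks the detail coefficients, and reconstructs the signal. It is not the procedure used in this paper: the periodic boundary handling, the soft-thresholding rule, and the universal threshold (used here in place of the Birgé–Massart penalization) are assumptions chosen to keep the example short, and all function names are hypothetical.

```python
import math
import statistics

SQRT3 = math.sqrt(3.0)
NORM = 4.0 * math.sqrt(2.0)
# Four-tap Daubechies low-pass filter and its quadrature-mirror high-pass filter.
H = [(1 + SQRT3) / NORM, (3 + SQRT3) / NORM, (3 - SQRT3) / NORM, (1 - SQRT3) / NORM]
G = [H[3], -H[2], H[1], -H[0]]


def dwt_step(x):
    """One decomposition level with periodic extension (assumed boundary rule)."""
    n = len(x)
    approx = [sum(H[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    detail = [sum(G[k] * x[(2 * i + k) % n] for k in range(4)) for i in range(n // 2)]
    return approx, detail


def idwt_step(approx, detail):
    """Inverse of dwt_step; exact because the transform is orthogonal."""
    n = 2 * len(approx)
    x = [0.0] * n
    for i in range(len(approx)):
        for k in range(4):
            x[(2 * i + k) % n] += H[k] * approx[i] + G[k] * detail[i]
    return x


def soft(value, threshold):
    """Soft thresholding: shrink the magnitude towards zero by `threshold`."""
    return math.copysign(max(abs(value) - threshold, 0.0), value)


def denoise(signal, levels=3):
    n = len(signal)
    if n % (2 ** levels) or n // (2 ** (levels - 1)) < 4:
        raise ValueError("length must be a multiple of 2**levels and long enough")
    approx, details = list(signal), []
    for _ in range(levels):
        approx, detail = dwt_step(approx)
        details.append(detail)
    # Noise level estimated from the finest details; universal threshold
    # (an assumption, standing in for the Birge-Massart rule).
    sigma = statistics.median(abs(d) for d in details[0]) / 0.6745
    threshold = sigma * math.sqrt(2.0 * math.log(n))
    for detail in reversed(details):
        approx = idwt_step(approx, [soft(d, threshold) for d in detail])
    return approx
```

With this sketch the approximation coefficients are left untouched and only the detail coefficients are shrunk, which is the usual design choice when the useful content is assumed to be smoother than the noise.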

Each set of ultrasonic signals of every delamination level is averaged and normalized by Equation (1) to avoid false positive damage indications:

$$\hat{y} = \frac{y - \mu_y}{\sigma_y} \qquad (1)$$

where $\hat{y}$ is the normalized signal, $\mu_y$ is the mean, and $\sigma_y$ is the standard deviation of $y$.
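The normalization step can be sketched in a few lines of Python. The function name is hypothetical, and the use of the population standard deviation (dividing by N rather than N - 1) is an assumption, since the text does not state which estimator is used.

```python
import statistics


def normalize(y):
    """Remove the mean (DC offset) and scale to unit standard deviation."""
    mu = statistics.fmean(y)
    sigma = statistics.pstdev(y)  # population estimator: an assumption
    if sigma == 0:
        raise ValueError("a constant signal cannot be normalized")
    return [(value - mu) / sigma for value in y]


signal = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
normalized = normalize(signal)  # zero mean, unit standard deviation
```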

In an AR model of order $p$, the current output is a linear combination of the last $p$ outputs plus a white noise input. The weights on the last $p$ outputs minimize the mean square prediction error of the AR model. If $y(t)$ is the current value of the output, and $e(t)$ represents a zero-mean white noise input, the AR($p$) model can be expressed by Equation (2):

$$y(t) = \sum_{j=1}^{p} \varphi_j\, y(t-j) + e(t) \qquad (2)$$

where $y(t)$ is the time series to be modelled, $\varphi_j$ are the model coefficients, $e(t)$ is white noise, independent of the previous points, and $p$ is the order of the AR model.

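The Yule–Walker method is used for FE. This parametric method calculates the AR parameters $\varphi_j$ from the biased estimate of the autocorrelation function, given by Equation (3),

$$\sum_{j=1}^{p} \varphi_j\, r(|m-j|) = r(m), \qquad m = 1, \dots, p \qquad (3)$$

where $r(m)$ is the biased form of the autocorrelation function. It ensures that the autocorrelation matrix is positive definite. The value of $r(m)$ is given by Equation (4), where $N$ is the number of samples.

$$r(m) = \frac{1}{N} \sum_{t=1}^{N-m} y(t)\, y(t+m), \qquad m \ge 0 \qquad (4)$$

The AR coefficients ($\varphi_j$) are obtained using the Levinson–Durbin algorithm [53].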
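A minimal Python sketch of this feature extraction step is given below, assuming a zero-mean (normalized) signal and the sign convention in which the current sample equals the weighted sum of past samples plus noise. The function names are hypothetical; the recursion is the textbook Levinson–Durbin scheme, not code taken from this work.

```python
def biased_autocorr(y, max_lag):
    """Biased autocorrelation estimate r(0..max_lag): every lag is divided by N."""
    n = len(y)
    return [sum(y[t] * y[t + m] for t in range(n - m)) / n
            for m in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve the Yule-Walker equations recursively.

    Returns (phi, err): the AR coefficients and the prediction error variance.
    """
    phi, err = [], r[0]
    for k in range(1, order + 1):
        acc = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        kappa = acc / err  # reflection coefficient of stage k
        phi = [phi[j] - kappa * phi[k - 2 - j] for j in range(k - 1)] + [kappa]
        err *= 1.0 - kappa ** 2
    return phi, err


def yule_walker(y, order):
    """AR(order) coefficients of y, usable as a feature vector."""
    return levinson_durbin(biased_autocorr(y, order), order)
```

For an exact AR(1) autocorrelation sequence such as `[1.0, 0.5, 0.25]`, `levinson_durbin(r, 2)` returns coefficients `[0.5, 0.0]`, i.e., the second coefficient vanishes as expected.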

Figure 2 shows Yule–Walker Power Spectral Density (PSD) of all delamination levels in the WTB.

Akaike’s Information Criterion (AIC) has been used to reduce the dimensionality of the feature extraction. The AIC is a measure of the goodness-of-fit of an estimated statistical model, based on the trade-off between fitting accuracy and the number of estimated parameters. AIC is given by Equation (5):

$$AIC = N_t \ln(\varepsilon) + 2 N_p \qquad (5)$$

where $N_p$ is the number of estimated parameters, $N_t$ the number of predicted data points, and $\varepsilon = SSR/N_t$ the average sum-of-square residual (SSR) error.
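The order selection can be illustrated with the following Python sketch. It assumes the common form of the criterion, the number of data points times the logarithm of the average squared residual plus twice the number of parameters, and it uses the prediction error variance produced by the Levinson–Durbin recursion as the average squared residual. Both choices, and the function names, are assumptions made for the example.

```python
import math


def aic_by_order(r, n_points, max_order):
    """AIC for AR orders 0..max_order from autocorrelation values r(0..max_order)."""
    phi, err = [], r[0]
    aic = [n_points * math.log(err)]  # order 0: no parameters
    for k in range(1, max_order + 1):
        acc = r[k] - sum(phi[j] * r[k - 1 - j] for j in range(k - 1))
        kappa = acc / err
        phi = [phi[j] - kappa * phi[k - 2 - j] for j in range(k - 1)] + [kappa]
        err *= 1.0 - kappa ** 2  # error variance of the order-k model
        aic.append(n_points * math.log(err) + 2 * k)
    return aic


def best_order(aic):
    """Order with the smallest AIC value."""
    return min(range(len(aic)), key=aic.__getitem__)


# Exact autocorrelation of an AR(1) process with coefficient 0.5.
aic = aic_by_order([1.0, 0.5, 0.25, 0.125], n_points=1000, max_order=3)
order = best_order(aic)  # 1: higher orders only add the 2-per-parameter penalty
```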

3. Classification Procedure

3.1. Machine Learning Approach

A supervised classification is considered in ML, where the same number of signals is set for each group, or population. The cross-validation technique has been employed to estimate the probability of misclassification, and to avoid overfitting in all cases considered.

Decision Tree (DT) is a classifier used to determine if the dataset contains different classes of objects that can be interpreted meaningfully in the context of a substantive theory. DT generates a partition of the feature space from a labelled training set. The objective is to separate the elements of each class into different labelled regions, called leaves, minimizing a local error. Each internal node in the tree is a question, or decision, that determines the branch of the tree that must be taken to reach a leaf. A DT is defined by four elements: how to split the space, called Splitting Rules (SR); the stopping condition for splitting; the labelling function of a region; and the measurement of error.

The purpose of the SR is to minimize the impurity of the node. In this case, SR is based on Gini’s Diversity Index (GDI) [54], given by Equation (7):

$$GDI = 1 - \sum_{i} p^{2}(i) \qquad (7)$$

where the sum is over the classes $i$ at the node, and $p(i)$ is the observed fraction of samples of class $i$ that reach the node. A node with only one class, called a pure node, has GDI = 0; in all other cases GDI > 0. The algorithm stops if the node reaches the pre-set maximum depth; all elements of the node are the same class; there is no empty sub node; or SR does not reach a pre-set value.
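The impurity measure can be checked with a short Python sketch. The function names are hypothetical, and the weighted impurity of a candidate split is included only as an illustration of how a splitting rule could use the index.

```python
from collections import Counter


def gini_diversity_index(labels):
    """One minus the sum of squared class fractions at a node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left, right):
    """Size-weighted impurity of the two children of a candidate split."""
    n = len(left) + len(right)
    return (len(left) * gini_diversity_index(left)
            + len(right) * gini_diversity_index(right)) / n


pure = gini_diversity_index([3, 3, 3, 3])    # 0.0 for a pure node
mixed = gini_diversity_index([0, 0, 1, 1])   # 0.5 for two balanced classes
```

For six equally represented classes, as in the six delamination scenarios, the index takes its maximum value of 5/6.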

DT labels a leaf, or region, when it is already considered as a terminal. The labelling function is set by Equation (8):

where

is the number of elements of class

l,

is the class to label, and

is the labelling cost.

The labelling cost considering all classes is calculated, and

is selected to minimize the error, where a random one is chosen in case of a tie. Equation (9) provides a classification average error:

where

is the error of labelling a class

l as

l’. This error will be solved by splitting the space and assigning a label to each split.

The number of partitions has been adjusted using the Decision Tree Complex (DTC) algorithm. It allows a maximum of 100 partitions to avoid overfitting.

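Quadratic Discriminant Analysis (QDA) is employed to classify each feature vector ($x$) into pre-existing groups, from the information of a set of variables ($x$), called variable classifiers [55]. The information of each variable ($x$) is synthesized in a discriminant function. Each class generates data according to a multivariate Gaussian distribution, so that the dataset follows a Gaussian mixture distribution, given by Equation (10):

$$f_k(x) = \frac{1}{(2\pi)^{d/2}\,|\Sigma_k|^{1/2}} \exp\!\left(-\tfrac{1}{2}(x-\mu_k)^{T}\,\Sigma_k^{-1}\,(x-\mu_k)\right) \qquad (10)$$

where $\mu_k$ and $\Sigma_k$ are the class $k$ ($1 \le k \le K$) population mean vector and covariance matrix, and $d$ is the number of features.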

In QDA, the distance to each class is calculated using the variance–covariance matrix of that class, instead of the pooled global matrix [56], according to Equation (11):

$$d_{ik}^{2} = (x_i - \mu_k)^{T}\,\Sigma_k^{-1}\,(x_i - \mu_k) \qquad (11)$$

where $d_{ik}^{2}$ is the squared distance between sample $i$ and the class $k$ centroid $\mu_k$, and $\Sigma_k$ is the corresponding variance–covariance matrix for that class.

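The maximum of the a posteriori discriminant function $\delta_k(x)$, employing the Bayes rule and natural logs, is given by Equation (12):

$$\delta_k(x) = -\tfrac{1}{2}\ln|\Sigma_k| - \tfrac{1}{2}(x-\mu_k)^{T}\,\Sigma_k^{-1}\,(x-\mu_k) + \ln \pi_k \qquad (12)$$

where $\mu_k$ and $\Sigma_k$ are the mean vector and covariance matrix of class $k$, and $\pi_k$ is its prior probability. A sample is assigned to the class with the largest $\delta_k(x)$.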

K-Nearest Neighbors (KNN). The KNN classifier has been used for pattern classification and ML. The KNN is based on the principle that an unclassified instance within a dataset is assigned to the class of the nearest previously classified instances [57]. Each instance can be considered as a point within an n-dimensional instance space, where each of the n dimensions corresponds to one of the n features that define an instance [58].

The accuracy of KNN classification depends on the metric used to compute distances between different instances. In this case, the best performing classifier is Weighted KNN (WKNN). WKNN assigns weights to neighbors regarding the distance calculated [

59]. Weighted metric can be defined as a distance between an unclassified sample

x, and a training sample

, given by Equation (13):
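The idea of distance-weighted voting can be sketched in Python as follows. The Euclidean metric, the inverse-squared-distance weights, the value of k, and the function name are all assumptions made for illustration; they are not necessarily the weighting defined by Equation (13).

```python
import math
from collections import defaultdict


def wknn_predict(train_x, train_y, x, k=3, eps=1e-12):
    """Classify x by a vote of its k nearest neighbors, weighted by 1 / distance**2."""
    neighbors = sorted(
        ((math.dist(xi, x), yi) for xi, yi in zip(train_x, train_y)),
        key=lambda pair: pair[0],
    )
    votes = defaultdict(float)
    for distance, label in neighbors[:k]:
        votes[label] += 1.0 / (distance ** 2 + eps)  # eps avoids division by zero
    return max(votes, key=votes.get)


# One very close neighbor of class 0 outweighs two distant neighbors of class 1.
train_x = [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)]
train_y = [0, 1, 1]
label = wknn_predict(train_x, train_y, (0.1, 0.0), k=3)  # 0
```

An unweighted majority vote would return class 1 in this example, which is the behavior the weighting is meant to correct.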

3.2. Artificial Neuronal Network (ANN): Multilayer Perceptron (MLP)

In this paper, three layers of processing units are used as the structure (15/15/6). Backpropagation, together with the scaled conjugate gradient algorithm and the cross-entropy performance function [60,61], with “early stopping” to avoid overfitting [62], has been employed as the training mode. ANN is given by Equation (14):

$$h = f(W_h\,x + b_h), \qquad o = f(W_o\,h + b_o) \qquad (14)$$

where $x$ is the input, $h$ the hidden layer output, $o$ the final layer output, $t$ the target output with which $o$ is compared during training, $W_h$ the hidden layer weight, $W_o$ the final layer weight, $b_h$ the hidden layer bias, $b_o$ the final layer bias, and $f(\cdot)$ is the sigmoid-type activation function.

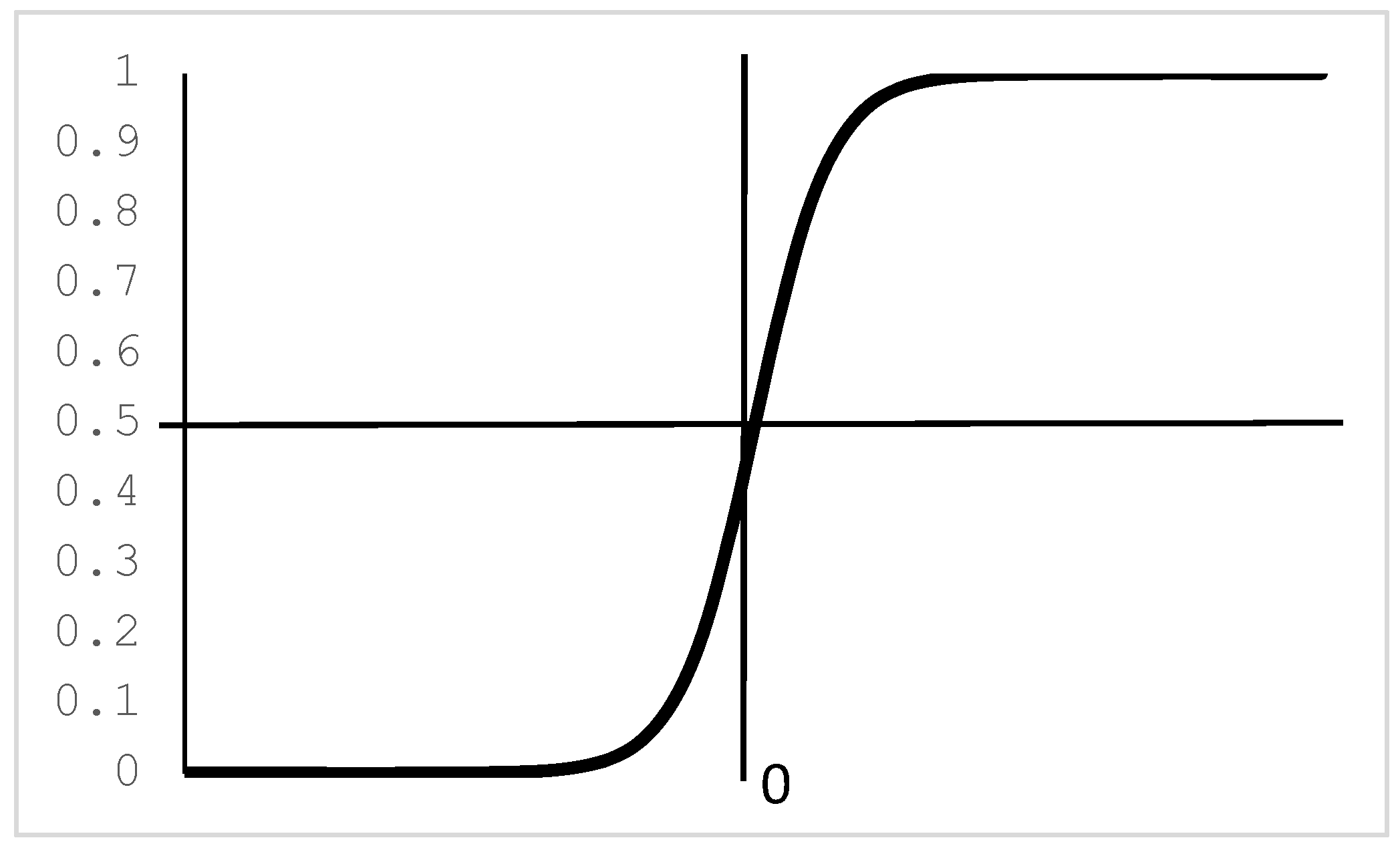

The sigmoid function (Figure 3) is used as the activation function of the ANN, given by Equation (15). It provides an output in the range [0, 1].

$$f(x) = \frac{1}{1 + e^{-x}} \qquad (15)$$
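A forward pass through a 15/15/6 network with sigmoid activations can be sketched in Python as below. The weights are random placeholders rather than trained values, the use of a sigmoid on the output layer is an assumption, and the function names are hypothetical.

```python
import math
import random


def sigmoid(x):
    """Logistic function, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def layer(inputs, weights, biases):
    """Weighted sum plus bias for each neuron, followed by the sigmoid."""
    return [sigmoid(sum(w * v for w, v in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def mlp_forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input -> hidden layer -> output layer."""
    return layer(layer(x, w_hidden, b_hidden), w_out, b_out)


rng = random.Random(0)
n_in, n_hidden, n_out = 15, 15, 6  # 15 AR features, 15 hidden nodes, 6 scenarios
w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]
w_out = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
b_out = [rng.uniform(-1, 1) for _ in range(n_out)]
features = [rng.uniform(-1, 1) for _ in range(n_in)]
scores = mlp_forward(features, w_hidden, b_hidden, w_out, b_out)
predicted_scenario = scores.index(max(scores))  # index of the largest output
```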

The MLP is tested initially with an ANN architecture, and trained with 70% of the experiments. The ANN architecture is chosen according to the best accuracy and performance. The network is validated with different cases (30%) to determine if the learning is correct, and to check if it classifies correctly.

3.2.1. Training Process

Backpropagation (BP) is one of the simplest and most general methods for supervised training of multilayer ANNs. Techniques that pre-process the inputs, such as scaling, standardization, or normalization, have been developed to accelerate BP training. In this paper, BP is used with the scaled conjugate gradient algorithm and the cross-entropy performance function.

The conjugate gradient algorithm, based on gradient descent, uses a second-order analysis of the error to determine the optimal learning rate, extracting the information provided by the second derivatives of the error through the Hessian matrix ($H$), Equation (16).

$$H_{ij} = \frac{\partial^{2} E}{\partial w_i\, \partial w_j} \qquad (16)$$

where $E$ is the error function and $w_i$, $w_j$ are network weights.

The algorithm uses different approaches to avoid high computational costs. The MLP is employed in different stages during the learning process, where the reduction of error can be slow. It is suggested that the Mean Square Error (MSE) replaces the cross-entropy error function, because MSE shows a better network performance.

3.2.2. Architecture of the Network

Different ANN structures have been tested, and the structure that provided the best results was a hidden layer with 15 neurons, based on comparative performance by trial and error. The network architecture set was 15-15-6 (Figure 4).

3.3. Classifier Evaluation

The Receiver Operating Characteristic (ROC) analysis, based on Confusion Matrix (CM), is used to evaluate the classification. CM determines the quality of a classifier and its performance. The main parameters considered in CM are:

TP: True positive is the real success of the classifier.

FP: False positive is the sum of the values of a class in the corresponding CM column, excluding the TP.

FN: False negative is the sum of the values of a class in the corresponding CM row, excluding the TP.

TN: True negative is the sum of all columns and rows, excluding the column and row of the class.

The following equations will be applied to find the main measurement parameters when they are known:

Recall, $R$, known as true positive rate, is the probability of being correctly classified, given by Equation (17).

$$R = \frac{TP}{TP + FN} \qquad (17)$$

Specificity, $S$, also called the true negative rate, measures the proportion of negatives that are correctly identified, given by Equation (18).

$$S = \frac{TN}{TN + FP} \qquad (18)$$
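The four counts and the two rates can be computed from a multi-class confusion matrix as in the Python sketch below. It follows the definitions given above, which imply that rows hold the true classes and columns the predicted classes; the matrix values and function names are made up for illustration.

```python
def class_counts(cm, i):
    """TP, FP, FN, TN of class i; cm[row][col] with rows = true, cols = predicted."""
    tp = cm[i][i]
    fp = sum(row[i] for row in cm) - tp   # column of the class, excluding TP
    fn = sum(cm[i]) - tp                  # row of the class, excluding TP
    tn = sum(map(sum, cm)) - tp - fp - fn
    return tp, fp, fn, tn


def recall(tp, fn):
    return tp / (tp + fn)


def specificity(tn, fp):
    return tn / (tn + fp)


# Hypothetical 3-class confusion matrix (not data from this study).
cm = [[5, 1, 0],
      [2, 6, 1],
      [0, 1, 4]]
tp, fp, fn, tn = class_counts(cm, 0)  # (5, 2, 1, 12)
r0 = recall(tp, fn)                   # 5 / 6
s0 = specificity(tn, fp)              # 12 / 14
```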

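Additional terms associated with ROC curves and CM include precision, $P = TP/(TP + FP)$, the proportion of instances assigned to a class that truly belong to it, and the F-score, $F = 2PR/(P + R)$, the harmonic mean of precision and recall.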

The average performance in all categories is set by two conventional methods [63]:

Macro average ($M$): $R_M$, $P_M$, and $F_M$ are obtained by averaging over all $R_i$, $P_i$, and $F_i$, where $M$ denotes macro average, and $i$ is the scenario. They are calculated for each category, i.e., the precision is evaluated locally, $P_i$, and then globally, $P_M$.

Micro average ($\mu$): $R_\mu$, $P_\mu$, and the $F_\mu$ value are obtained as: (i) $TP_i$, $FP_i$, $FN_i$ values are calculated for each of the scenarios; (ii) the values of TP, FP, and FN are calculated as the sum of $TP_i$, $FP_i$, $FN_i$; and (iii) applying the equation of the measure that corresponds to it.
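The difference between the two averaging schemes can be seen in the following Python sketch, which computes macro- and micro-averaged precision and recall from a confusion matrix with rows as true classes and columns as predicted classes. The matrix is hypothetical and the function names are illustrative only.

```python
def per_class_counts(cm):
    """List of (TP, FP, FN) per class; rows = true classes, columns = predicted."""
    counts = []
    for i in range(len(cm)):
        tp = cm[i][i]
        fp = sum(row[i] for row in cm) - tp
        fn = sum(cm[i]) - tp
        counts.append((tp, fp, fn))
    return counts


def macro_average(cm):
    """Average the per-class precision and recall values."""
    counts = per_class_counts(cm)
    precision = sum(tp / (tp + fp) for tp, fp, _ in counts) / len(counts)
    recall = sum(tp / (tp + fn) for tp, _, fn in counts) / len(counts)
    return precision, recall


def micro_average(cm):
    """Sum TP, FP, FN over the classes first, then apply the formulas once."""
    counts = per_class_counts(cm)
    tp = sum(c[0] for c in counts)
    fp = sum(c[1] for c in counts)
    fn = sum(c[2] for c in counts)
    return tp / (tp + fp), tp / (tp + fn)


cm = [[5, 1, 0],
      [2, 6, 1],
      [0, 1, 4]]
macro_p, macro_r = macro_average(cm)
micro_p, micro_r = micro_average(cm)
```

In a single-label problem such as this one, every misclassification counts once as a false positive and once as a false negative, so the micro-averaged precision and recall coincide with the overall accuracy, while the macro averages give each class the same weight regardless of its size.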

There are several indices that are extracted from the ROC curve to evaluate the efficiency of a classifier. The Area Under Curve (AUC), with a value between 0.5 and 1, is the area between the ROC curve and the negative diagonal [64,65]. AUC ≤ 0.5 indicates that the classifier is invalid, and AUC = 1 indicates a perfect rating, because there is a region in which, for any cut-off point, the value of R and P is 1. The statistical property of AUC is equivalent to the Wilcoxon test of ranks [65]. The AUC is also closely related to the Gini coefficient [54], which is twice the area between the diagonal and the ROC curve.

The recommendations by Demšar, and Garcia and Herrera have been used to compare the different classifiers and analyze their best performance. Firstly, the Friedman Test will be used to test the null hypothesis that all classifiers achieve the same average. The Bonferroni–Dunn test will be applied to determine significant differences between the top-ranked classifier and the next one. The Holm test will be applied to contrast the results.
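The first step of this comparison, the Friedman statistic, can be sketched in Python as follows. Each row of the score table is one evaluation block (a hypothetical stand-in for, e.g., a dataset or a cross-validation fold) and each column is one classifier. The scores are invented for the example, the function names are illustrative, and the critical values needed to accept or reject the null hypothesis are not included.

```python
def average_ranks(scores):
    """Mean rank of each classifier over all rows; rank 1 is the best score."""
    k = len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda c: -row[c])
        pos = 0
        while pos < k:
            end = pos
            while end + 1 < k and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared = (pos + end) / 2 + 1  # tied classifiers share the mean rank
            for c in order[pos:end + 1]:
                totals[c] += shared
            pos = end + 1
    return [total / len(scores) for total in totals]


def friedman_statistic(scores):
    """Friedman chi-square statistic and the average ranks it is based on."""
    n, k = len(scores), len(scores[0])
    ranks = average_ranks(scores)
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4)
    return chi2, ranks


# Hypothetical accuracies of three classifiers on four evaluation blocks.
scores = [[0.95, 0.90, 0.85],
          [0.97, 0.91, 0.80],
          [0.93, 0.92, 0.88],
          [0.96, 0.89, 0.84]]
chi2, ranks = friedman_statistic(scores)  # ranks [1.0, 2.0, 3.0], chi2 = 8.0
```

When all classifiers tie on every block, the average ranks are equal and the statistic is zero, which corresponds to the null hypothesis that all classifiers perform the same.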

4. Case Study

The experiments were carried out in laboratory conditions. According to Reference [66], it is possible to reproduce the test in working conditions by a multi-frequency analysis. Low frequencies are associated with the vibration of the blade, medium frequencies with acoustic emissions, and high frequencies with the ultrasonic excitation signal.

Delamination, perpendicular to the direction of propagation according to Reference [19], has been carried out, with the smallest side perpendicular to the direction of propagation, and the larger side parallel to the propagation direction. The occurrence of multiple delamination and transverse cracking has not been encountered in this study, but, according to [66], it would be affected in a similar way to the case study considered in this paper. Therefore, it would be possible to detect a potential failure with the presented approach.

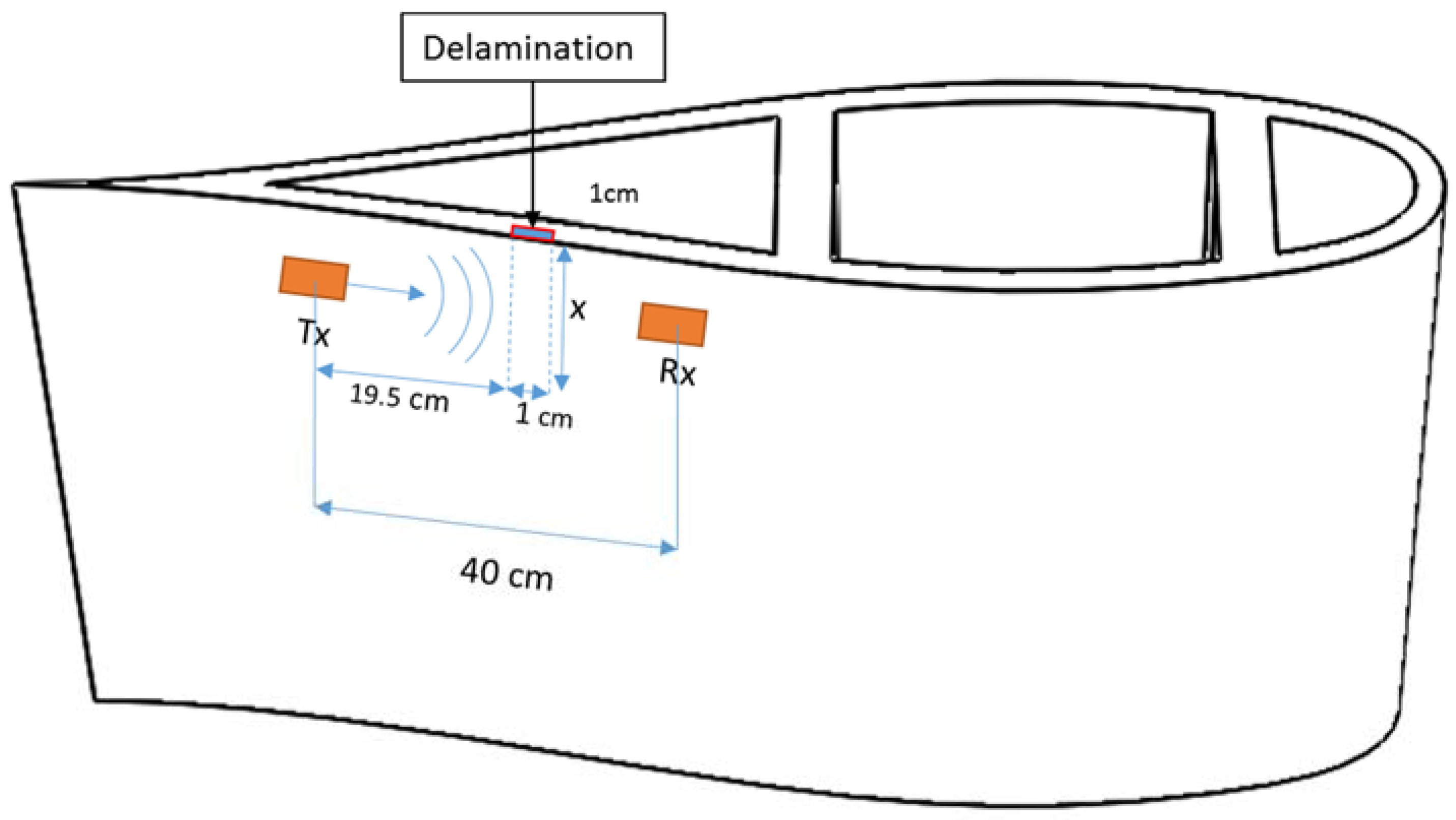

Six different scenarios have been studied. The WTB without delamination (free of fault) was considered in the first scenario. A separation of layers was induced in the second scenario. The dimensions of the separation were one centimeter wide by one centimeter deep. Subsequently, from scenarios three to six, deeper delamination was induced by increasing the depth by one centimeter in each state.

Figure 5 shows the arrangement of the transducers and delamination in the WTB section, and Table 1 shows the depth values (x).

Guided waves were generated in the WTB section using Macro Fiber Composite (MFC) transducers. Figure 6 shows the transducer arrangement on the downwind side of the WTB. The ultrasonic technique used is called “pitch and catch” [67]. A short ultrasonic pulse is emitted by the MFC transmitter (Tx). The signal is collected by the MFC sensor (Rx). The excitation pulse is a six-cycle Hanning pulse [68,69].
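A six-cycle Hanning-windowed tone burst of this kind can be generated with the Python sketch below. The sampling rate of 1 MHz is an assumed value, not one reported in this section, and the function name is hypothetical.

```python
import math


def hann_tone_burst(freq_hz, cycles, fs_hz):
    """Sine burst of `cycles` periods at freq_hz, shaped by a Hanning window."""
    n = round(cycles * fs_hz / freq_hz)  # number of samples in the burst
    return [
        0.5 * (1.0 - math.cos(2.0 * math.pi * i / (n - 1)))  # Hanning window
        * math.sin(2.0 * math.pi * freq_hz * i / fs_hz)      # carrier
        for i in range(n)
    ]


# Six cycles at 55 kHz, sampled at an assumed 1 MHz: 109 samples.
burst = hann_tone_burst(55e3, 6, 1e6)
```

The window brings the amplitude smoothly to zero at both ends of the burst, which concentrates the excitation energy around the chosen center frequency.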

Five different excitation frequencies were tested for each delamination scenario to check how the detection accuracy depends on the frequency: 18 kHz, 25 kHz, 30 kHz, 37 kHz, and 55 kHz. Six hundred signals were collected at each frequency. The best accuracy was found at 55 kHz.

Figure 7 shows signals acquired at 55 kHz in all scenarios.

6. Conclusions

The main novelty presented in this paper has been the application of a guided-wave-based approach for detecting and diagnosing delamination in Wind Turbine Blades (WTB). The signals were obtained experimentally on a real WTB. The signal is filtered by a wavelet transform with the Daubechies family. Both normalized and non-normalized signals are tested, in order to avoid the effects of environmental and operational variations.

Feature extraction is done by the AR Yule–Walker model, and Feature Selection (FS) by Akaike’s information criterion.

The FS is done by the autoregressive model, where the selection process is based on trial and error, specifically for artificial neural network, where several input configurations and neuron nodes in the hidden layer have been tested.

Machine learning and artificial neural networks are used for pattern recognition. Six scenarios of delamination were considered. The approach detected and classified all the scenarios. The classifiers used to identify the scenarios are: quadratic discriminant analysis, k-nearest neighbors, and decision trees. The confusion matrix is used to evaluate the classification, especially the receiver operating characteristic analysis by recall, specificity, precision and F-score. The conventional methods to establish the average performance in all categories were macro and micro average. The recommendations by Demšar, and Garcia and Herrera have been used to compare the different classifiers and analyze their best performance. Firstly, the Friedman test has been used to test the null hypothesis that all classifiers achieve the same average. The Bonferroni–Dunn test has been applied to determine significant differences between the top-ranked classifier and the next one. The Holm test was applied to contrast the results. In this paper, the approach shows a high level of accuracy for the scenarios considered at room temperature, according to the results of the tests.

The diagnostic tests for multi-level detection of delamination indicate a high level of accuracy for the ANN and WKNN classifiers, with ANN being the best classifier.