1. Introduction

Lithium iron phosphate (LiFePO4) batteries have become popular for renewable energy storage devices, electric vehicles [1,2,3] and smart grids [4,5,6] due to their high specific power, high specific energy density, long cycle life, low self-discharge rate and high discharge voltage. However, the batteries are sensitive to the operating ambient temperature and to unforeseen operating conditions such as overcharging or over-discharging, which can shorten their lifespan and degrade their performance. It is therefore vital to obtain the current state of charge (SOC) of the battery in actual applications. The SOC is defined as the ratio of the available capacity to the rated capacity, both in ampere-hours (Ah). Estimating the SOC is complicated because it depends on the type of cell, the ambient temperature, the internal temperature and the application. Many efforts have been made in recent years to improve the accuracy of SOC estimation in battery management systems (BMSs) in order to improve the reliability, extend the lifetime and enhance the performance of batteries [7,8]. An accurate SOC estimate can prevent sudden system failures that damage the battery power system. As a result, SOC estimation has spurred many research and development projects.

The common Ampere-hour method [8] uses the current reading of the battery over the operating period to calculate SOC values. However, the SOC needs to be calibrated on a regular basis because the battery capacity can decline over time. The voltage method, on the other hand, uses the relationship between battery voltage and SOC, or the discharge curve, to determine the SOC. Both methods have disadvantages. The voltage method needs the battery to be disconnected from the circuit and rested for a long time to obtain the open circuit voltage, whereas the Ampere-hour method suffers from noise corruption and cumulative integration errors. In addition, the effect of the operating ambient temperature makes it difficult to estimate the correct SOC value. Another method measures the cell impedance during both discharging and charging with an impedance analyzer; however, it is not commonly used in operating battery systems because it requires external instrumentation for measurement and validation.

Instead, more accurate physical modeling based on an electrochemical model has been used [9,10,11,12]. Electrochemical models ensure that the model parameters have a proper physical meaning, but the required nonlinear partial differential equations increase the complexity and computational effort of the SOC estimation process. As a result, an electrochemical model with fewer parameters was proposed [13], using moving horizon estimation to determine the SOC. Alternatively, the equivalent circuit model (ECM) [14,15,16], which depends on the values of electronic components such as resistors and capacitors, has been used. It is easier to obtain than a solution of the partial differential equations. However, the resistance and capacitance depend on the operating ambient temperature and the type of cell used in the battery. Several nonlinear observer design methods have been applied to derive ECM-based nonlinear SOC estimators, such as the sliding mode observer [17], the adaptive model reference observer [18] and the Lyapunov-based observer [19]. Despite its simplicity and lower computational effort, the ECM cannot represent the actual physical meaning of the model parameters; for example, the ambient temperature was not included in the model for SOC estimation.

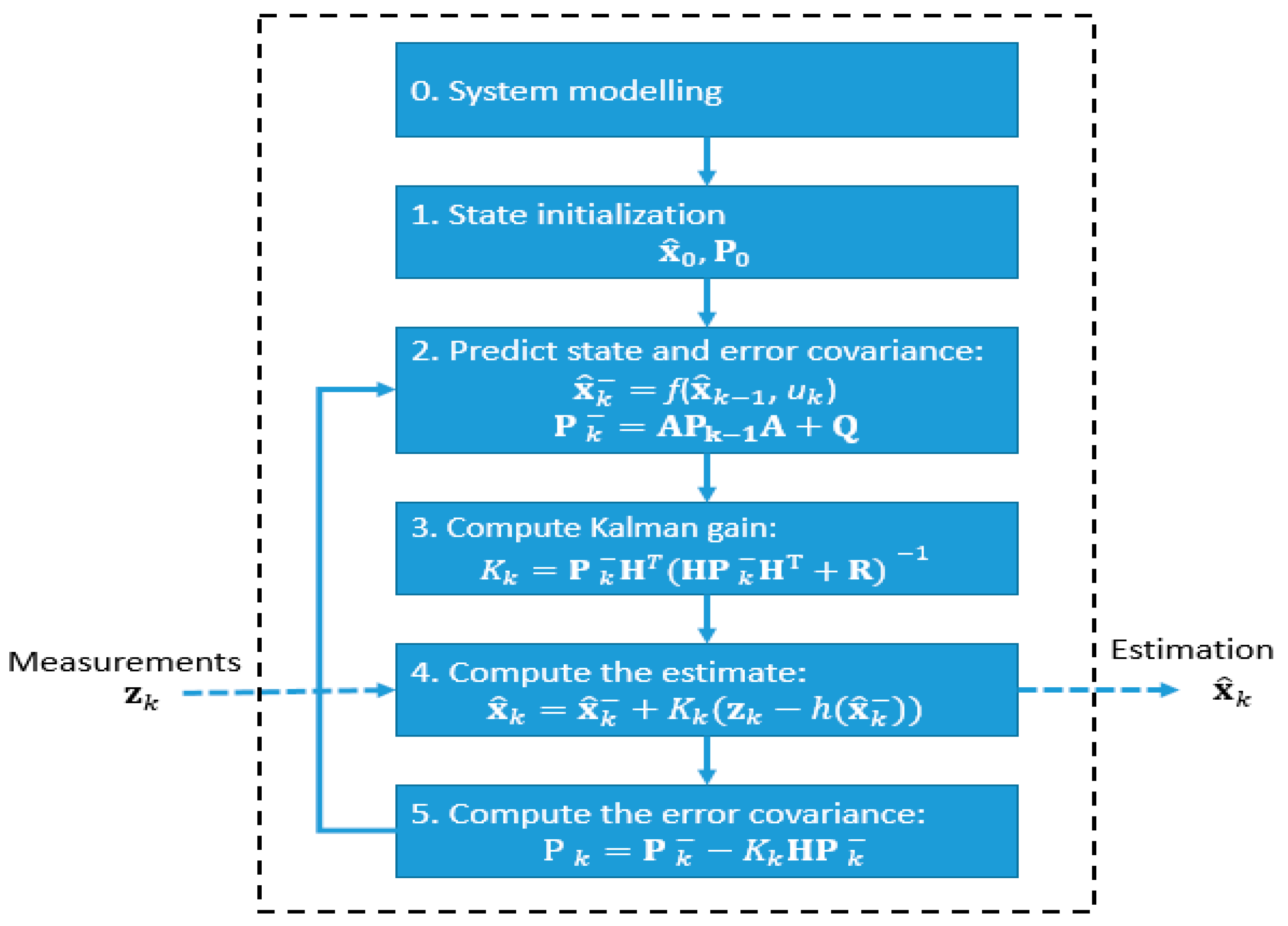

The equivalent circuit model combined with a Kalman filter (KF) [20,21,22,23] is another method employed to estimate the SOC. However, it requires a model of the battery, more computing resources and correct initialization of the parameters. The extended KF (EKF) [20] was applied to estimate the SOC using a nonlinear ordinary differential equation model. The unscented KF was then used [21] to avoid the linearization of the nonlinear equation in the EKF. In addition, a nonlinear SOC estimator [22] was applied to the partial differential equations of the battery model.

The filtering performance can be enhanced using an online time-varying fading gain matrix. Accordingly, a strong tracking extended Kalman filter (STCKF) [23,24,25] that outperforms the EKF was used to track sudden changes of the SOC value accurately. The cubature Kalman filter (CKF) [25] was utilized for higher-dimensional state estimation, followed by a spherical cubature particle filter (SCPF) [26] for predicting the battery’s useful life. However, the SCPF takes time for the samples to converge correctly, and it is difficult to determine the particle filter performance.

For the past few decades, artificial intelligence has been used to model complex systems with uncertainties. Data-driven approaches using neural networks [27,28,29], fuzzy logic [30], neural network-fuzzy systems [31], genetic algorithm-based fuzzy C-means (FCM) clustering to partition the training data [32] and support vector machines (SVMs) [33] have been applied to predict the SOC. These machine learning methods require sufficient data and computation time for training and validation. The machine learning approaches used in the literature are numerous. In this study, the extreme learning machine (ELM) [34] is used to model the state of charge of the battery pack. ELM has become popular due to its good generalization, fast training and universal approximation capability. In contrast to other machine learning algorithms such as backpropagation (BP) [35], the hidden layer parameters of the ELM are randomly generated without tuning. The hidden nodes can be determined from the training samples. Some researchers [23,36,37,38] have shown that single layer feedforward networks (SLFNs) [39,40,41,42,43,44,45,46,47,48,49] retain their universal approximation capability without changing the hidden layer parameters. ELM using regularized least squares can be computed faster than the quadratic programming approach in the gradient method adopted by BP. It also avoids local minima and the instabilities caused by the choice of learning rate, and it does not require a differentiable activation function.

There are numerous ELM learning algorithms, and the list in this paper is not exhaustive; a few selected algorithms are briefly explained here. Basic incremental ELM (I-ELM) [36,50] randomly generates the hidden nodes and analytically computes the output weights of SLFNs. I-ELM does not recompute the output weights of the existing nodes when a new node is appended. In the variant based on a convex optimization method, the output weights of the existing nodes are recalculated each time a new hidden node is randomly added. The learning time of I-ELM is longer than that of ELM because, with n hidden nodes, it computes the n output weights one at a time, whereas ELM computes all n output weights at once. Several methods with different growth mechanisms for the hidden nodes have been adopted, namely enhanced incremental ELM (EI-ELM) [51], error-minimized ELM (EM-ELM) [52] and optimally pruned ELM (OP-ELM) [53], which produce a more compact network and converge faster than the basic I-ELM. Another incremental ELM, bidirectional ELM (B-ELM) [54], in which some hidden nodes are not randomly selected, reduces the error at the initial learning stage at the expense of a longer training time than ELM. Hierarchical ELM (H-ELM) [49] improves on the learning performance of the original ELM through its excellent training efficiency, but its deep feature learning increases the training time.

On the other hand, sequential learning algorithms are quite useful for feedforward networks with radial basis function (RBF) nodes [48,55,56,57,58,59,60]. Some researchers [59,60] have simplified the sequential learning algorithms to reduce the training time, but training remains quite slow since data are handled one at a time instead of in batches. The online sequential extreme learning machine (OS-ELM), which handles additive and RBF nodes in a unified framework derived from the batch learning ELM [36,61,62,63,64,65,66], is implemented in SLFNs. In contrast to other sequential learning algorithms, which use several tuning parameters, OS-ELM requires only the number of hidden nodes to be specified. Newly arrived blocks or single observations (instead of the entire past data) are learned and then discarded once the learning process is complete. The input weights (the connections between the input nodes and the hidden nodes) and biases are randomly generated, and the output weights are analytically computed.

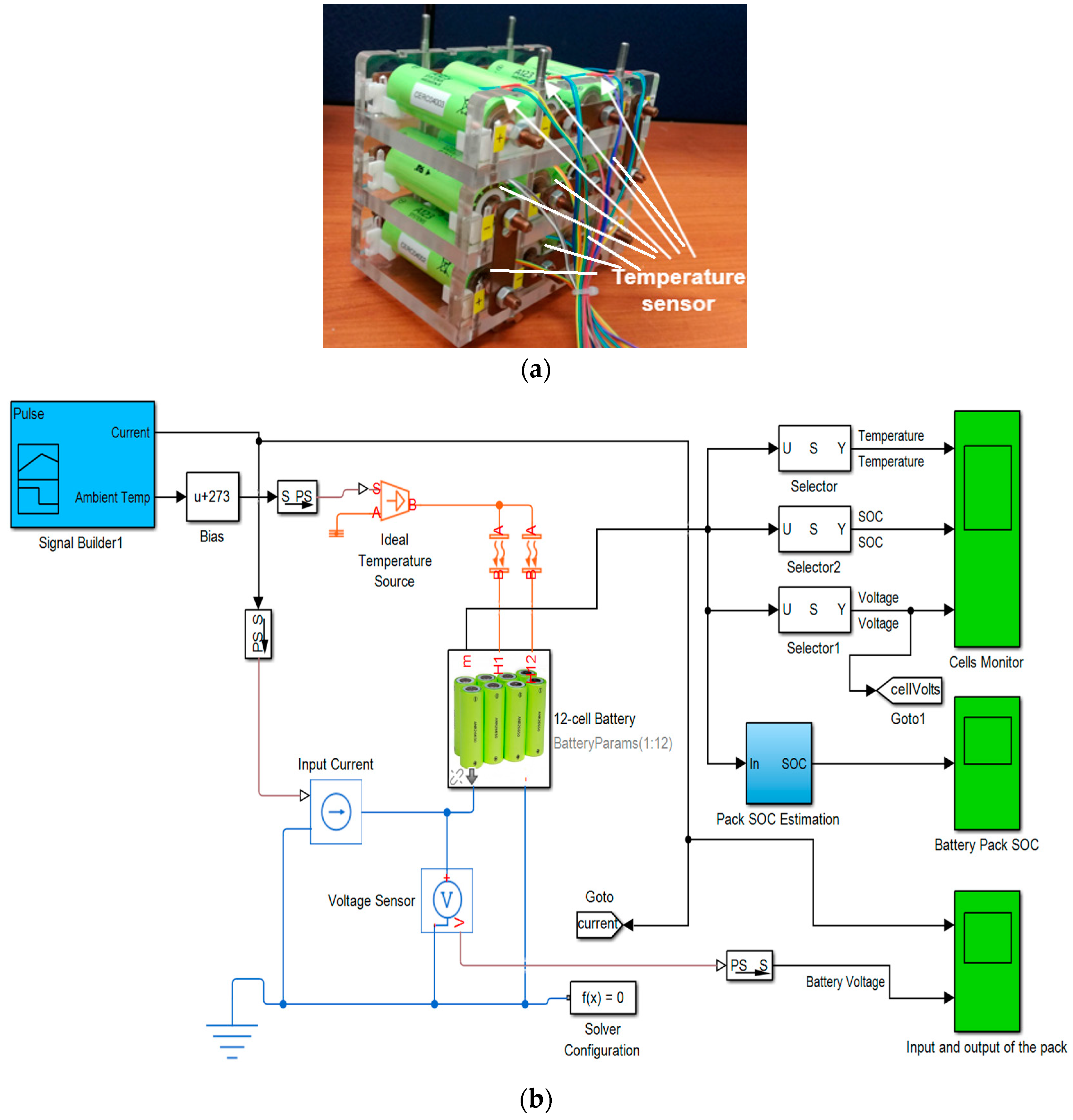

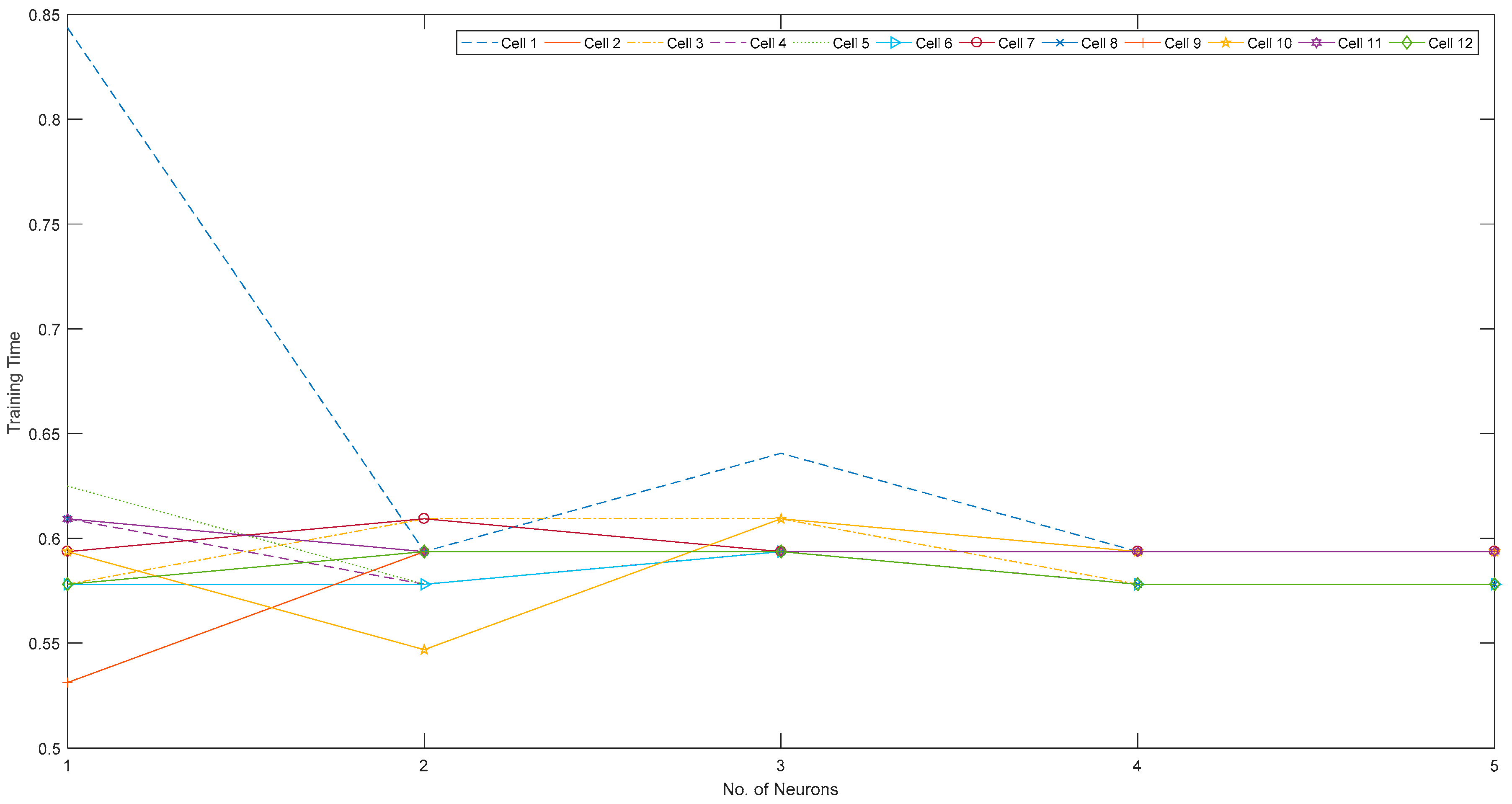

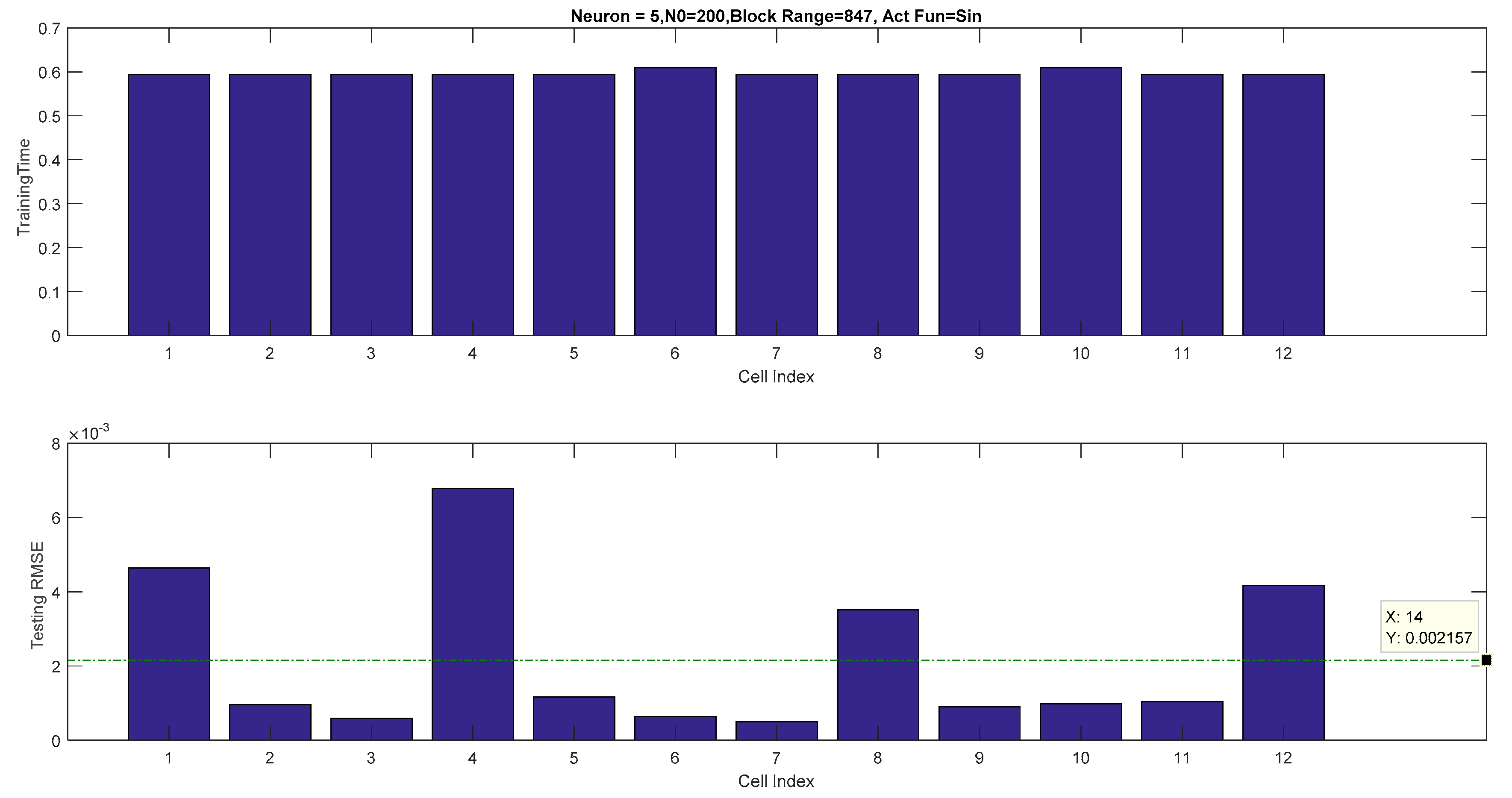

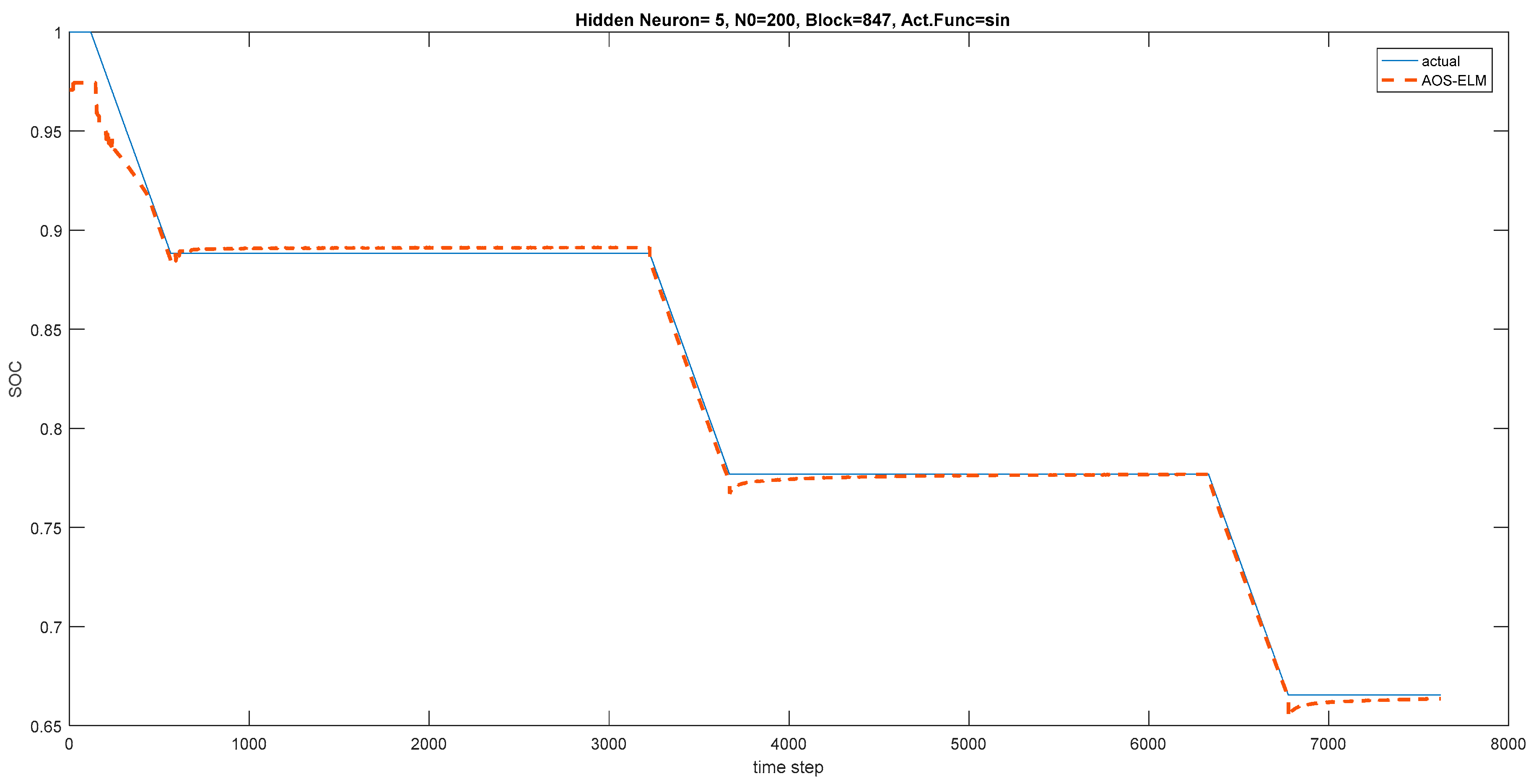

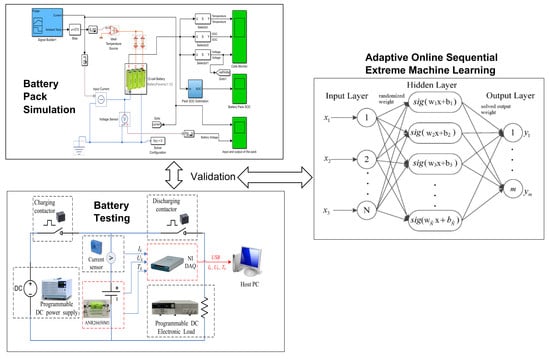

In the current literature, the application of the adaptive online sequential ELM (AOS-ELM) to model the SOC of battery packs at different ambient temperatures has not been discussed. AOS-ELM can converge quickly and sequentially with good generalization during the initial training stage, when the data become progressively available in small batches from different cells. Moreover, the full data set for the design variables is often unavailable during the early design stage because exact information is lacking, which makes sequential ELM-based learning (designed to handle a newly arrived block or single observation) useful. In this paper, the SOC of the 12-cell battery pack is estimated by the Ampere-hour method to provide the output data set, and the root mean square error (RMSE) of the AOS-ELM training and testing is computed at different ambient temperatures. As a result, subsequent SOC estimation of the battery pack can be performed without extensive and time-consuming measurement using the Ampere-hour method (which needs frequent calibration of the cells’ static capacity and parameters from actual experiments).

This paper has the following sections:

Section 2 describes the SOC estimation process under different ambient temperatures.

Section 3 reviews ELM and adaptive online sequential ELM learning for SOC estimation.

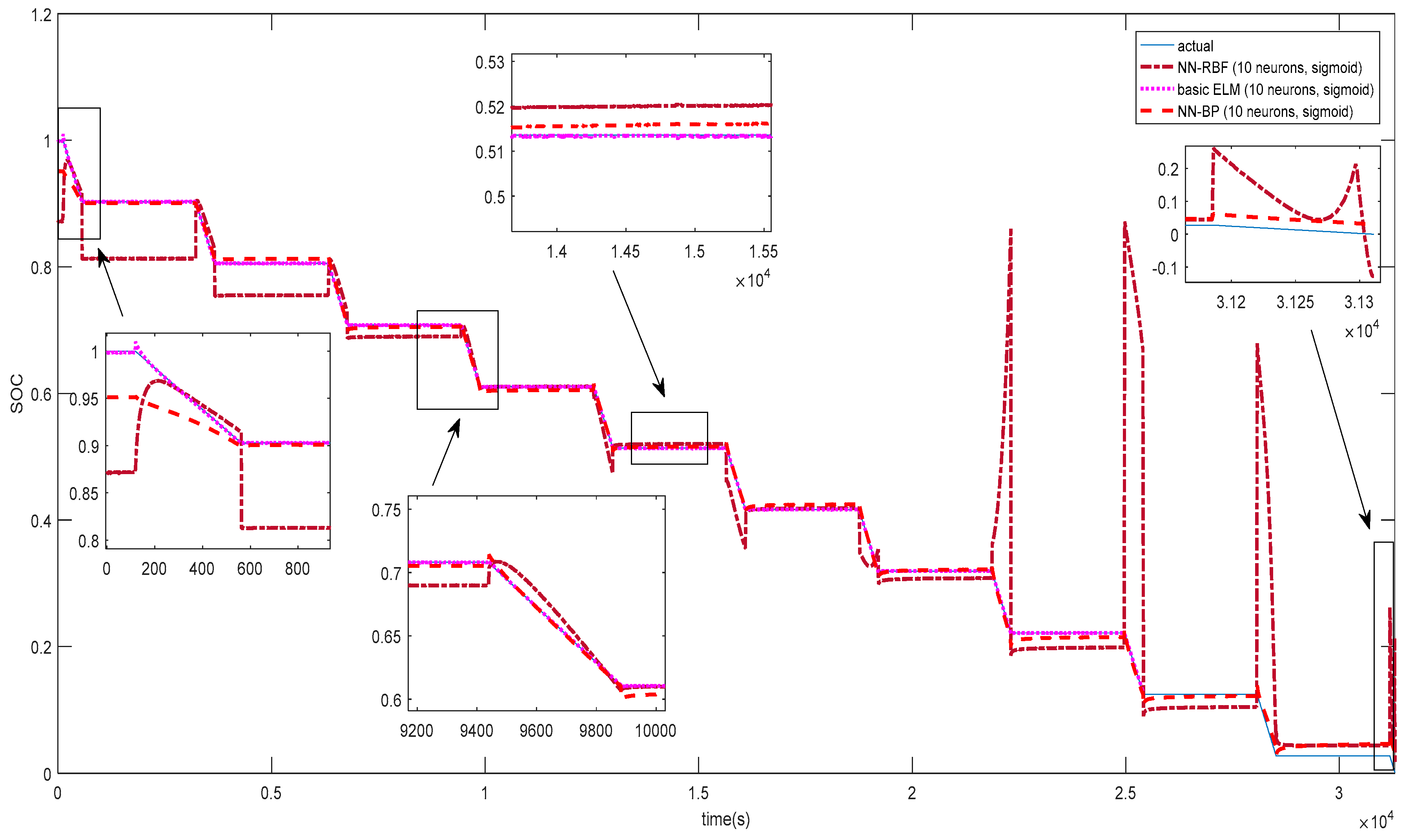

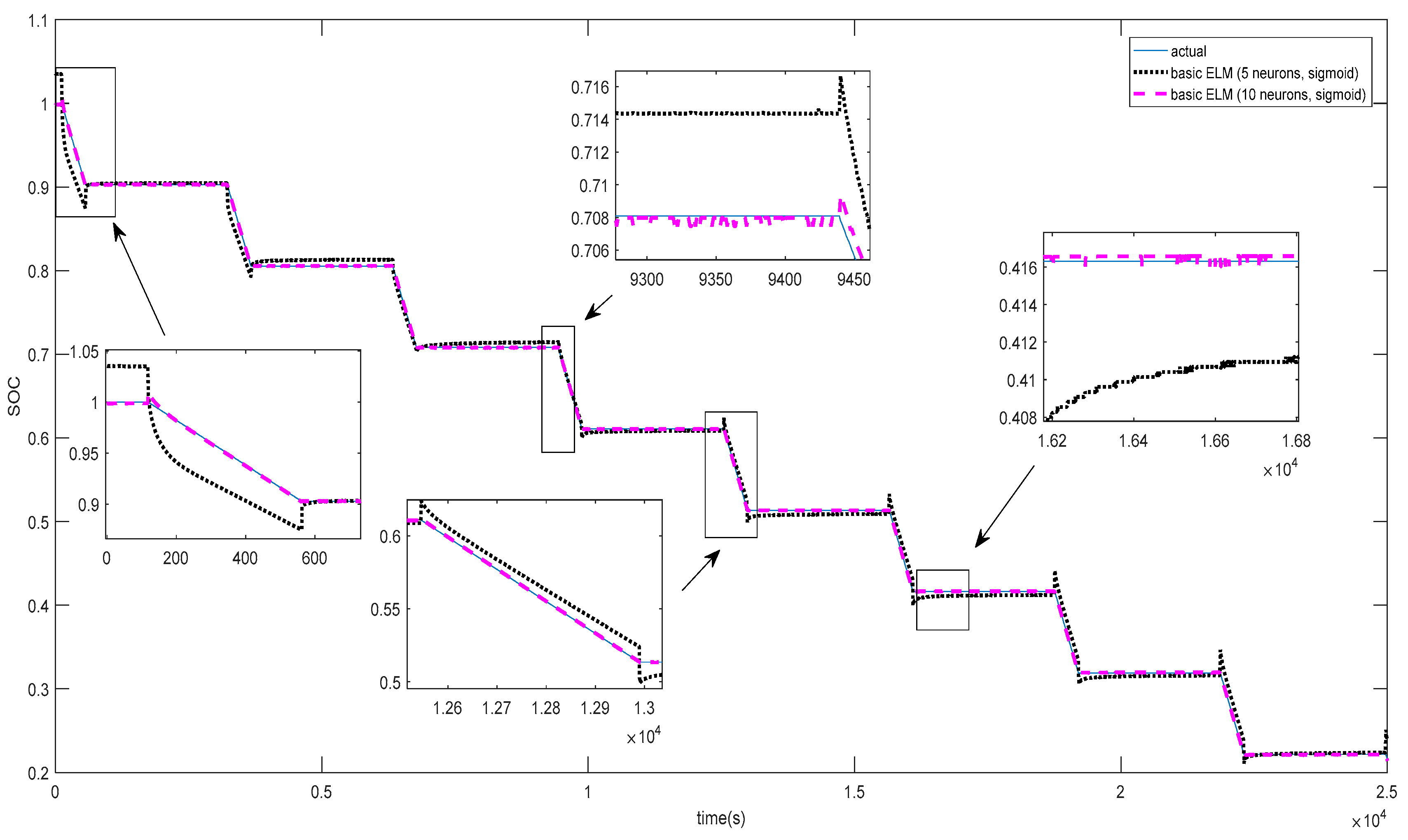

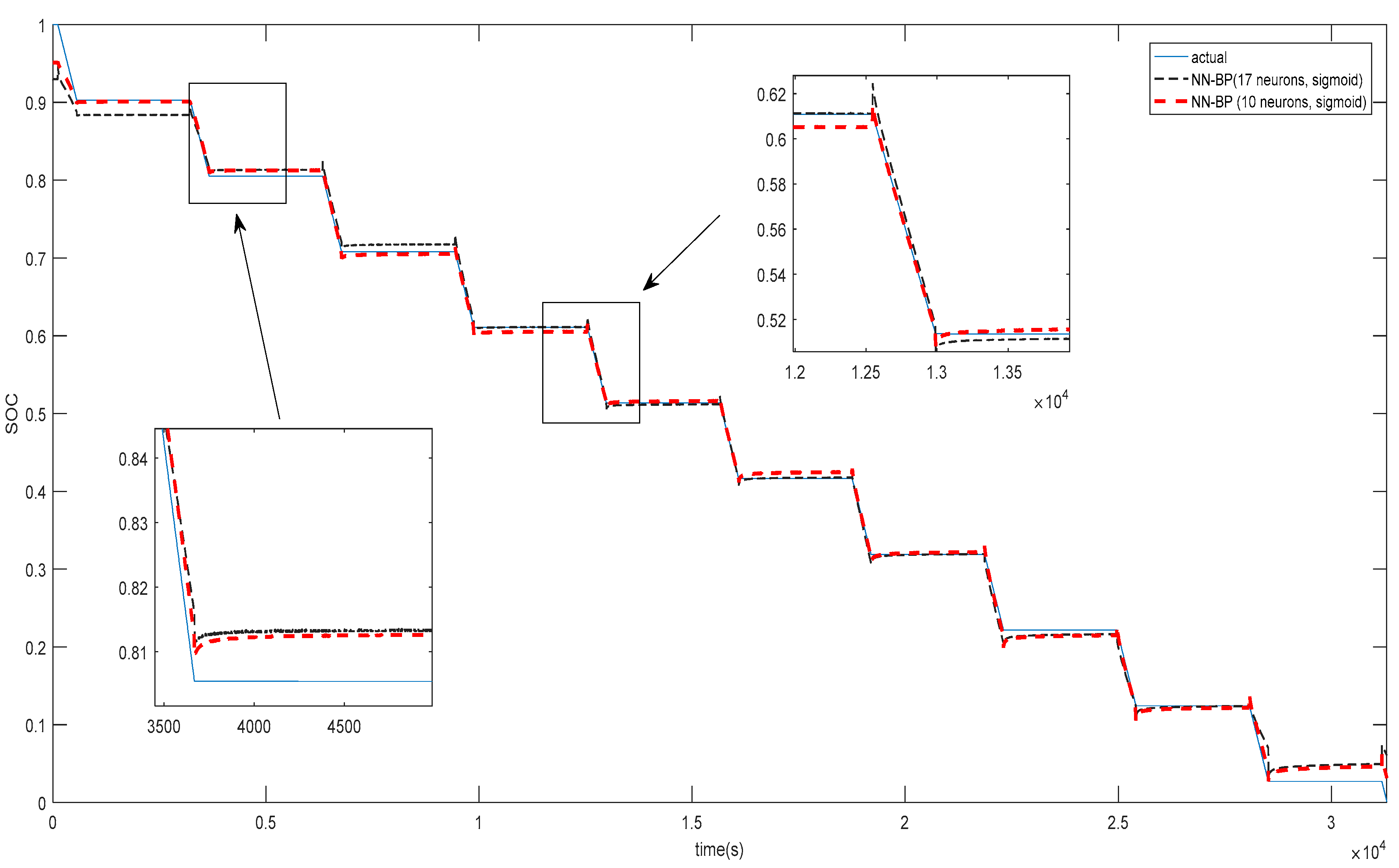

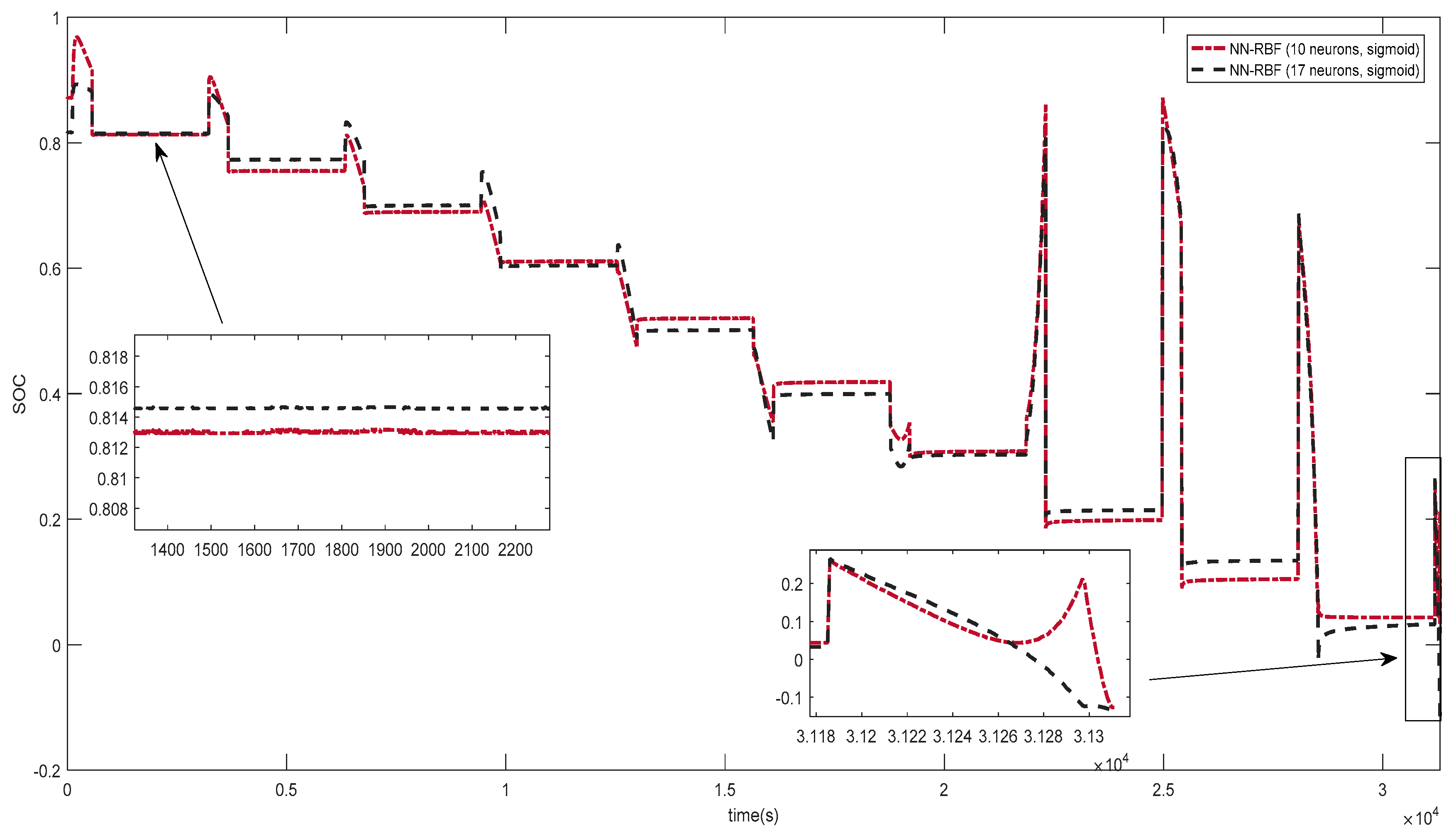

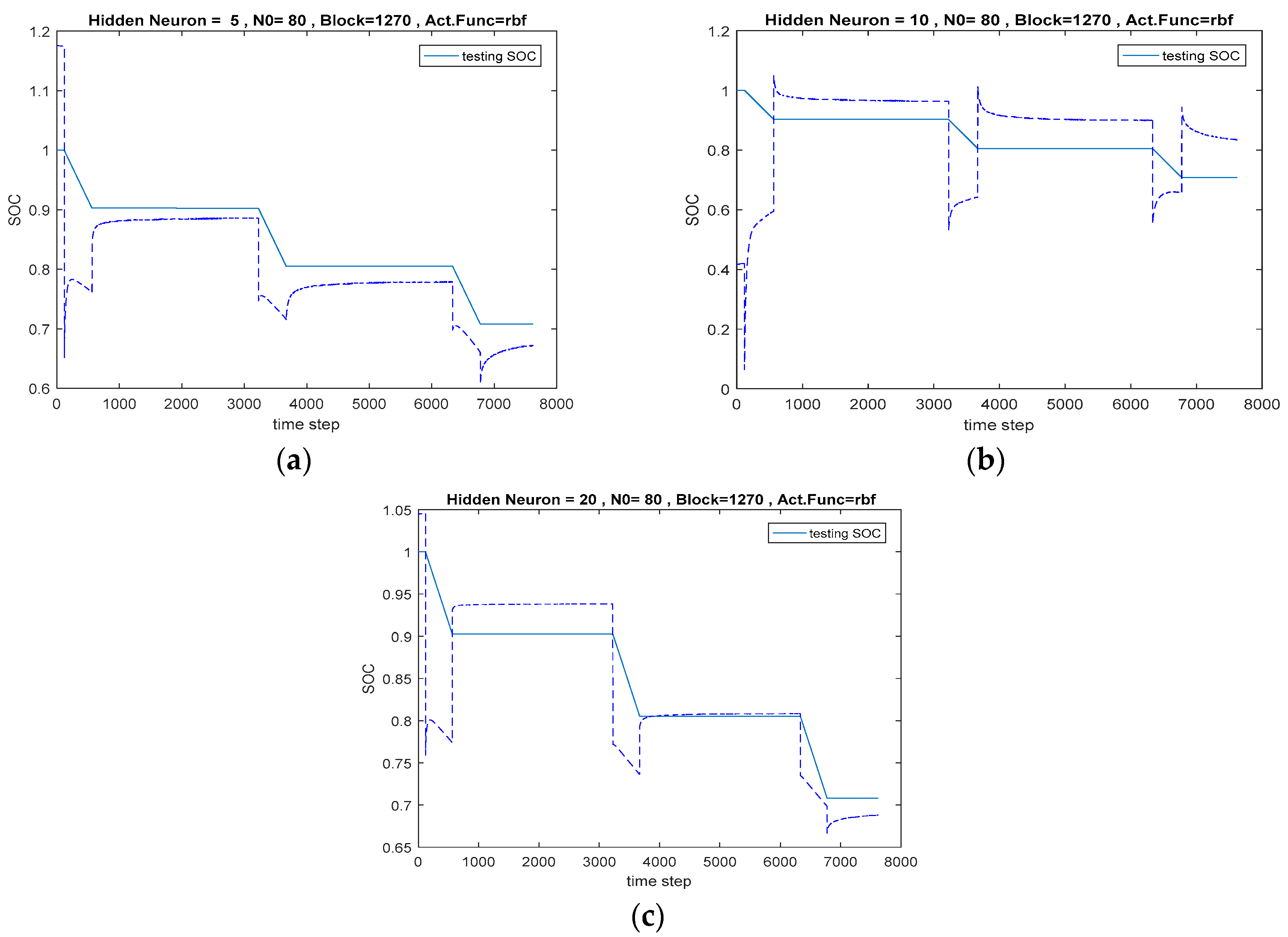

Section 4 evaluates some commonly used EKF-based approaches and other types of neural networks against ELM-based learning.

Section 5 concludes the paper.

3. Adaptive Online Sequential Extreme Learning Machine (AOS-ELM)

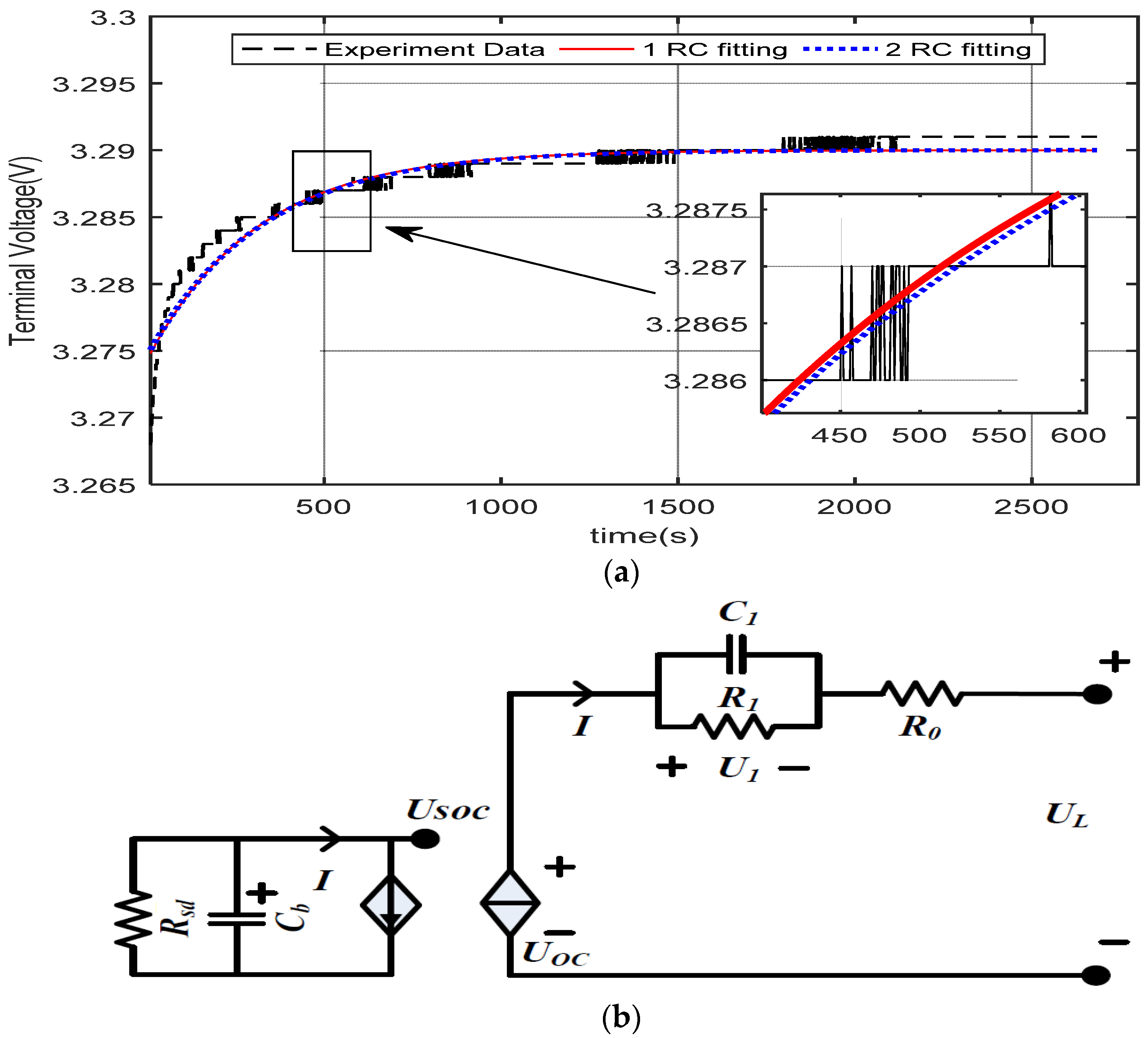

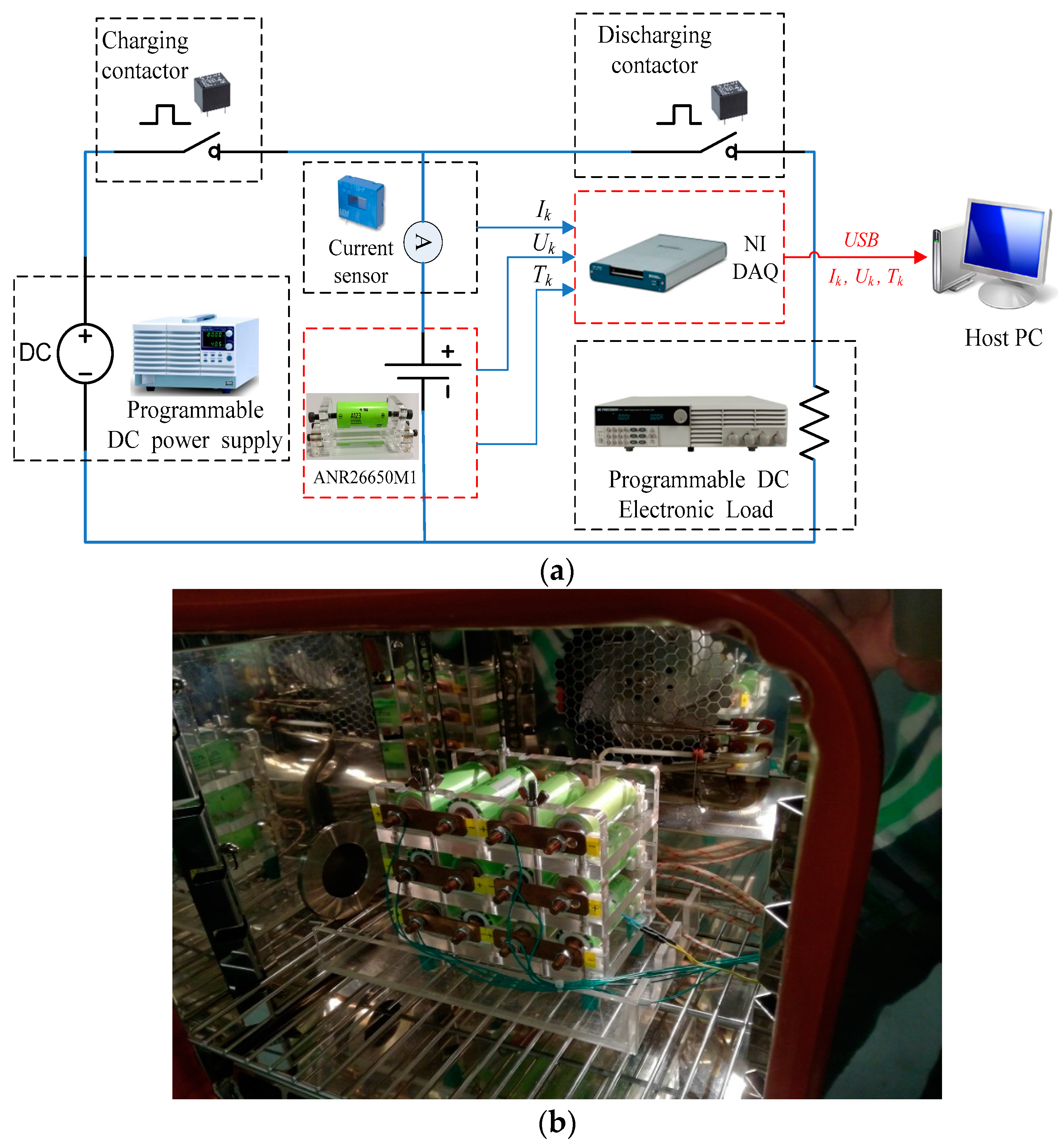

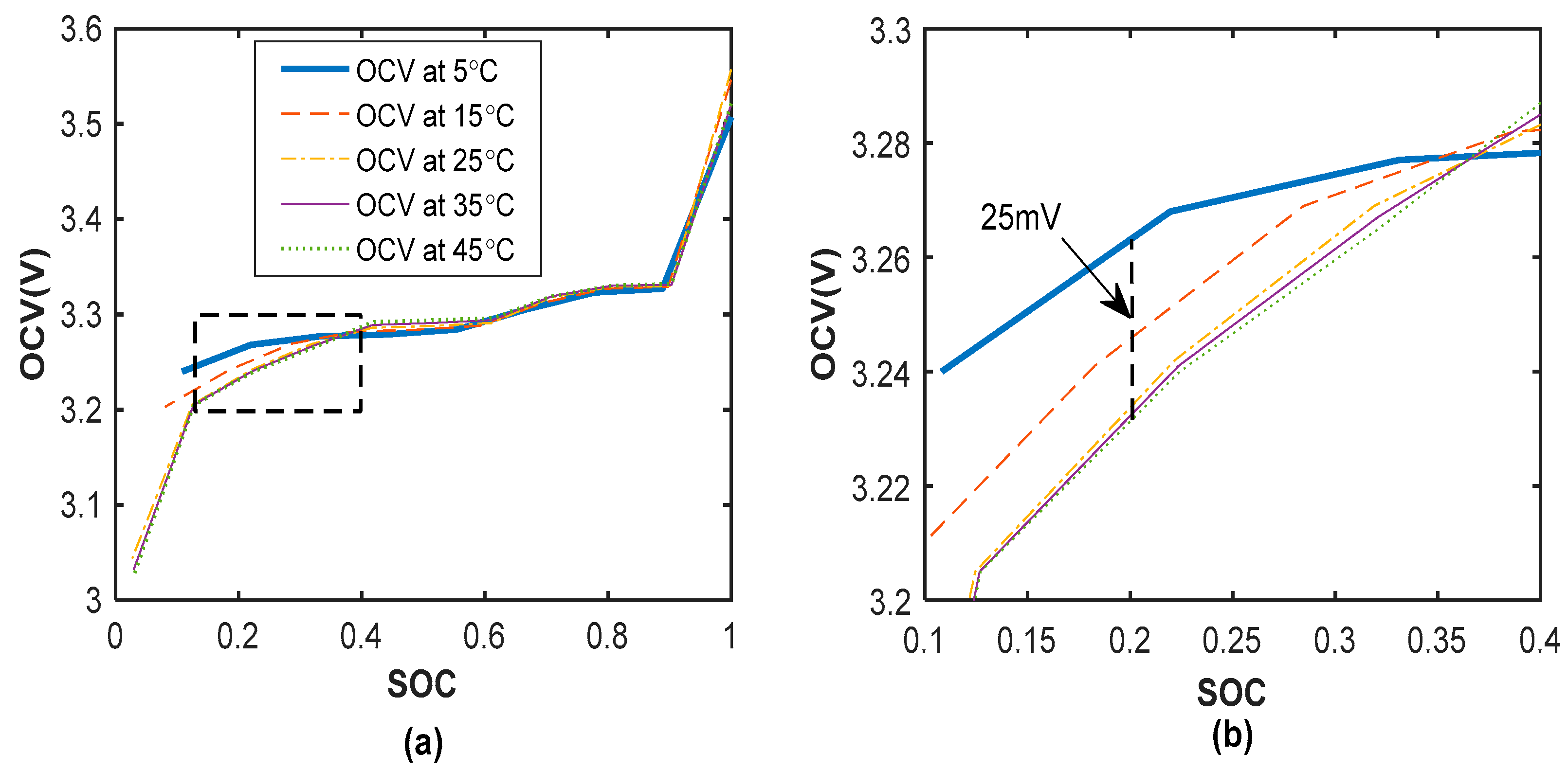

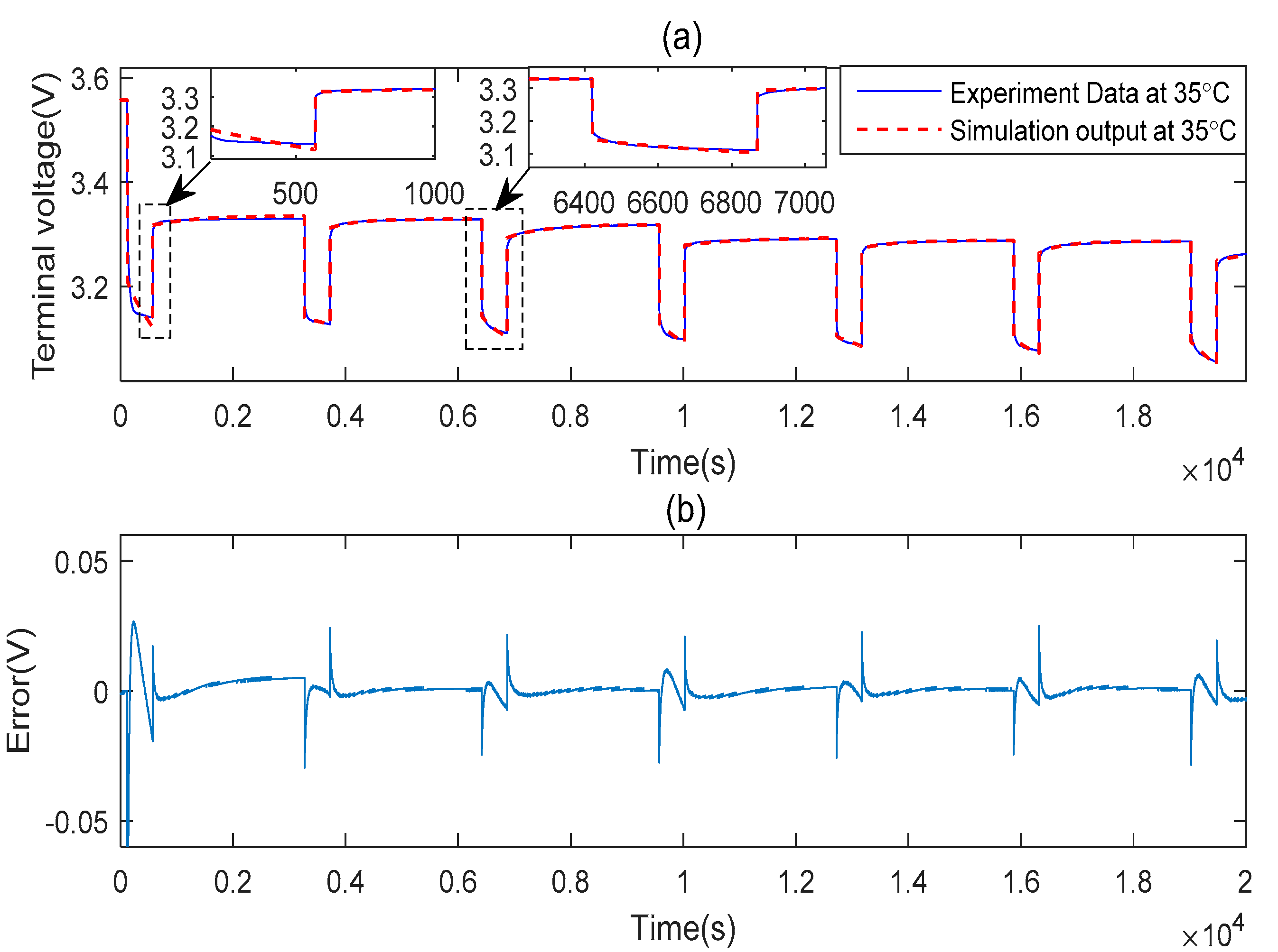

As shown in Section 2, estimating the SOC of the 12-cell battery pack using the ECM is quite time consuming because of the physical measurement of the resistors, capacitor and open circuit voltage (OCV). Moreover, the Ampere-hour method requires regular calibration because the capacity of the cells can change after cycles of charging and discharging. Hence, the ELM is used to estimate the SOC of the 12-cell battery pack after the initial SOC estimation using the ECM obtained in Section 2.

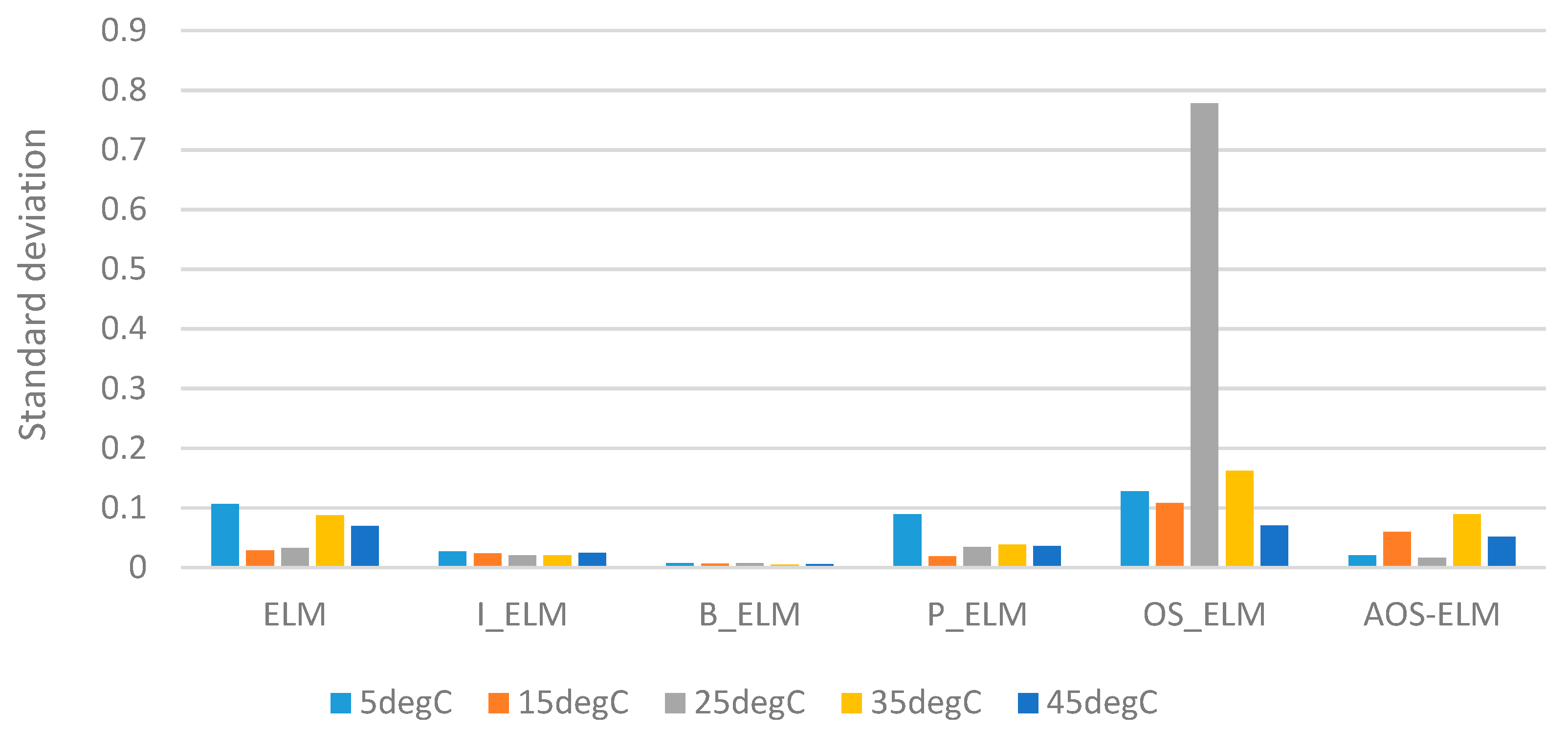

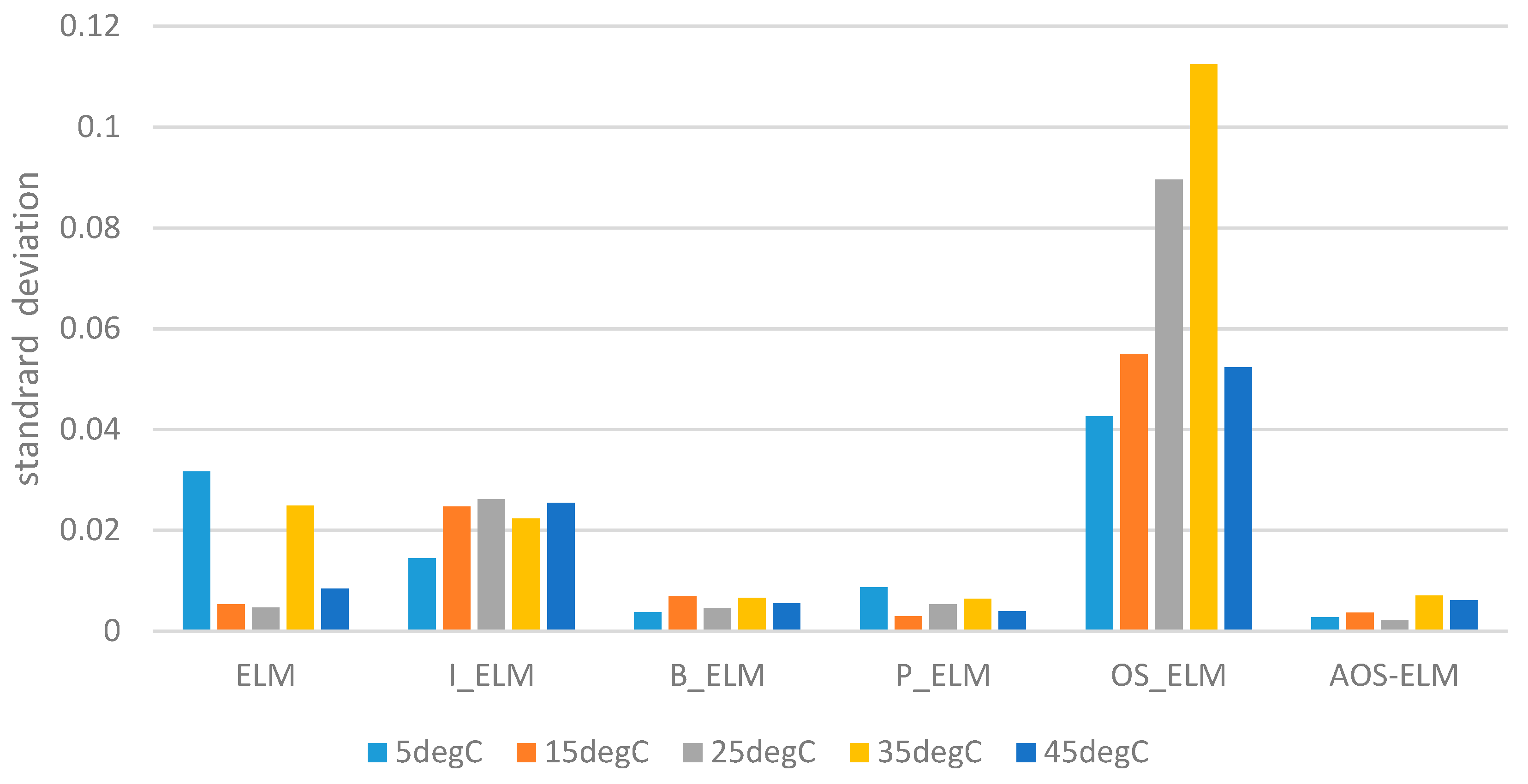

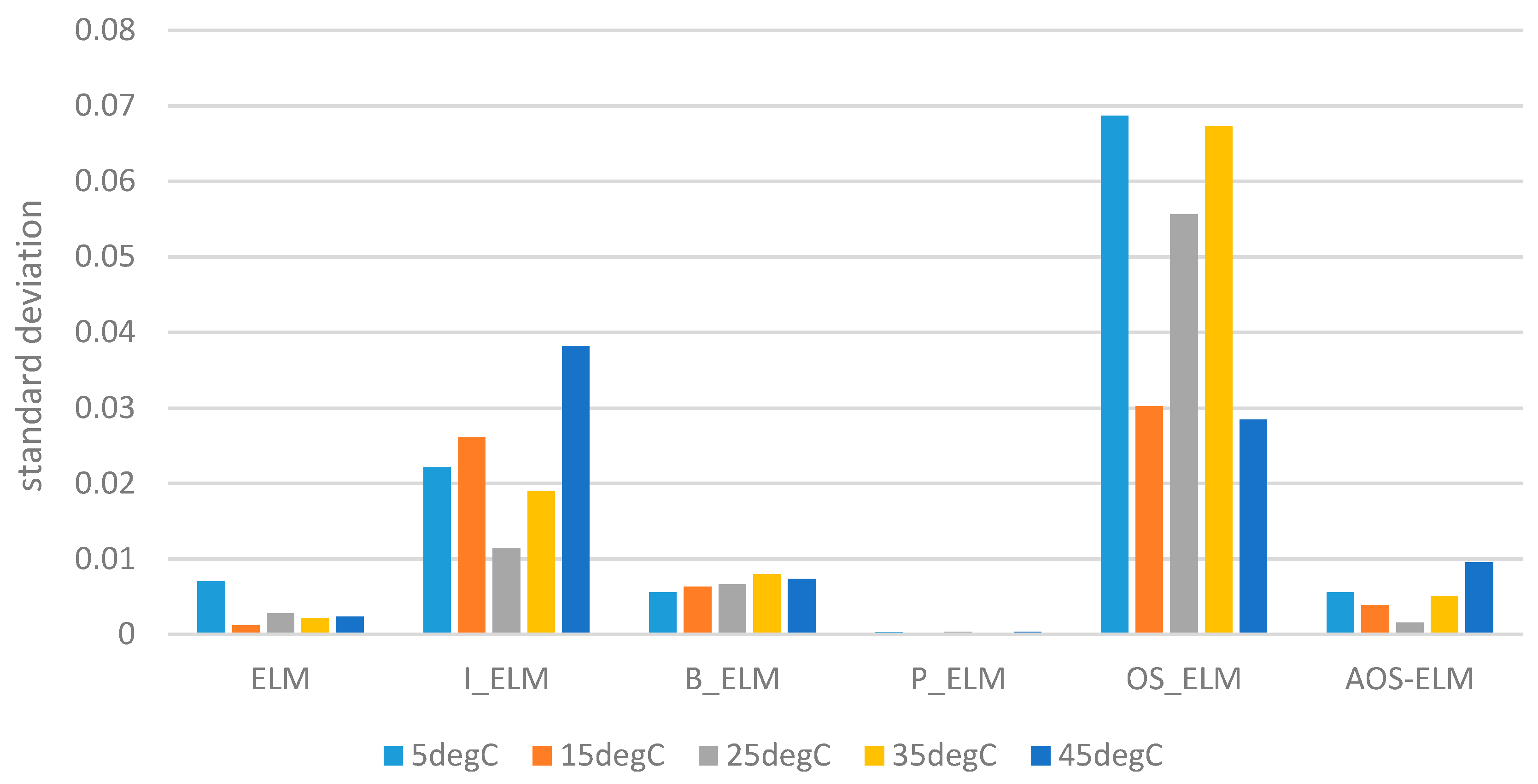

The following input and output parameters are used for training the ELM and the other neural networks. The parameters were obtained at different ambient temperatures (5 °C, 15 °C, 25 °C, 35 °C and 45 °C) for a single cell, followed by the 12-cell battery pack (at 25 °C only):

Input parameters: voltage, current, capacity in Ah

Output parameter: SOC obtained from Ampere-hour method

In general, the dynamic change of SOC is defined by integrating the current over the time step.

where $K_c = \eta \Delta T / C_n$, $C_n$ is the nominal capacity in ampere-hours (Ah), $\eta$ is the Coulombic efficiency and $\Delta T$ is the sampling time.
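As a minimal illustration of the Ampere-hour update, the sketch below assumes the common discrete form $SOC(k+1) = SOC(k) - K_c I(k)$ with discharge current taken as positive. The sign convention, the conversion of $C_n$ from Ah to ampere-seconds (so that $\Delta T$ can be given in seconds), the function name `coulomb_count` and the sample values are assumptions made for illustration, not values from the experiments.

```python
def coulomb_count(soc0, currents_a, dt_s, capacity_ah, eta=1.0):
    """Return the SOC trajectory for a sequence of current samples.

    Assumption: discharge current is positive, so SOC falls when current > 0.
    """
    # K_c = eta * dT / C_n, with C_n converted from Ah to ampere-seconds.
    k_c = eta * dt_s / (capacity_ah * 3600.0)
    soc = [soc0]
    for i_k in currents_a:
        nxt = soc[-1] - k_c * i_k
        # Clamp to the physically meaningful range [0, 1].
        soc.append(min(1.0, max(0.0, nxt)))
    return soc


# Hypothetical example: a 10 Ah cell discharged at 10 A (1C) for 360 s,
# sampled at 1 sample/s, starting from a full charge.
trajectory = coulomb_count(1.0, [10.0] * 360, dt_s=1.0, capacity_ah=10.0)
print(round(trajectory[-1], 3))  # 360 s at 1C removes 10% of the capacity
```

Because each step adds the measured current to a running sum, any sensor bias accumulates over time, which is the cumulative integration error mentioned for this method.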

For series-connected cells, the total capacity of each cell can be written as:

where

is the minimum capacity of the pack that can be charged,

is the minimum capacity of the pack that can be discharged.
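One formulation often used for series-connected cells takes the pack capacity as the smallest charge that can still be discharged from any cell plus the smallest charge that can still be charged into any cell. The sketch below assumes that form and defines the pack SOC as the dischargeable part divided by the pack capacity; the function name, the variable names and the sample values are hypothetical, and the authors' exact expression may differ.

```python
def pack_capacity_and_soc(cell_soc, cell_capacity_ah):
    """Estimate pack capacity (Ah) and pack SOC for series-connected cells.

    Assumption: the pack is limited by the cell that empties first on
    discharge and by the cell that fills first on charge.
    """
    pairs = list(zip(cell_soc, cell_capacity_ah))
    # Charge that can still be drawn from the pack (weakest cell limits it).
    dischargeable = min(s * c for s, c in pairs)
    # Charge that can still be put into the pack (fullest cell limits it).
    chargeable = min((1.0 - s) * c for s, c in pairs)
    pack_capacity = dischargeable + chargeable
    pack_soc = dischargeable / pack_capacity if pack_capacity > 0 else 0.0
    return pack_capacity, pack_soc


# Hypothetical three-cell example with slightly mismatched cells.
capacity, soc = pack_capacity_and_soc([0.8, 0.7, 0.9], [10.0, 10.0, 9.5])
print(f"pack capacity = {capacity:.2f} Ah, pack SOC = {soc:.3f}")
```

With mismatched cells the pack capacity is smaller than any single cell's capacity, which is why the pack SOC cannot simply be taken as the average of the cell SOCs.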

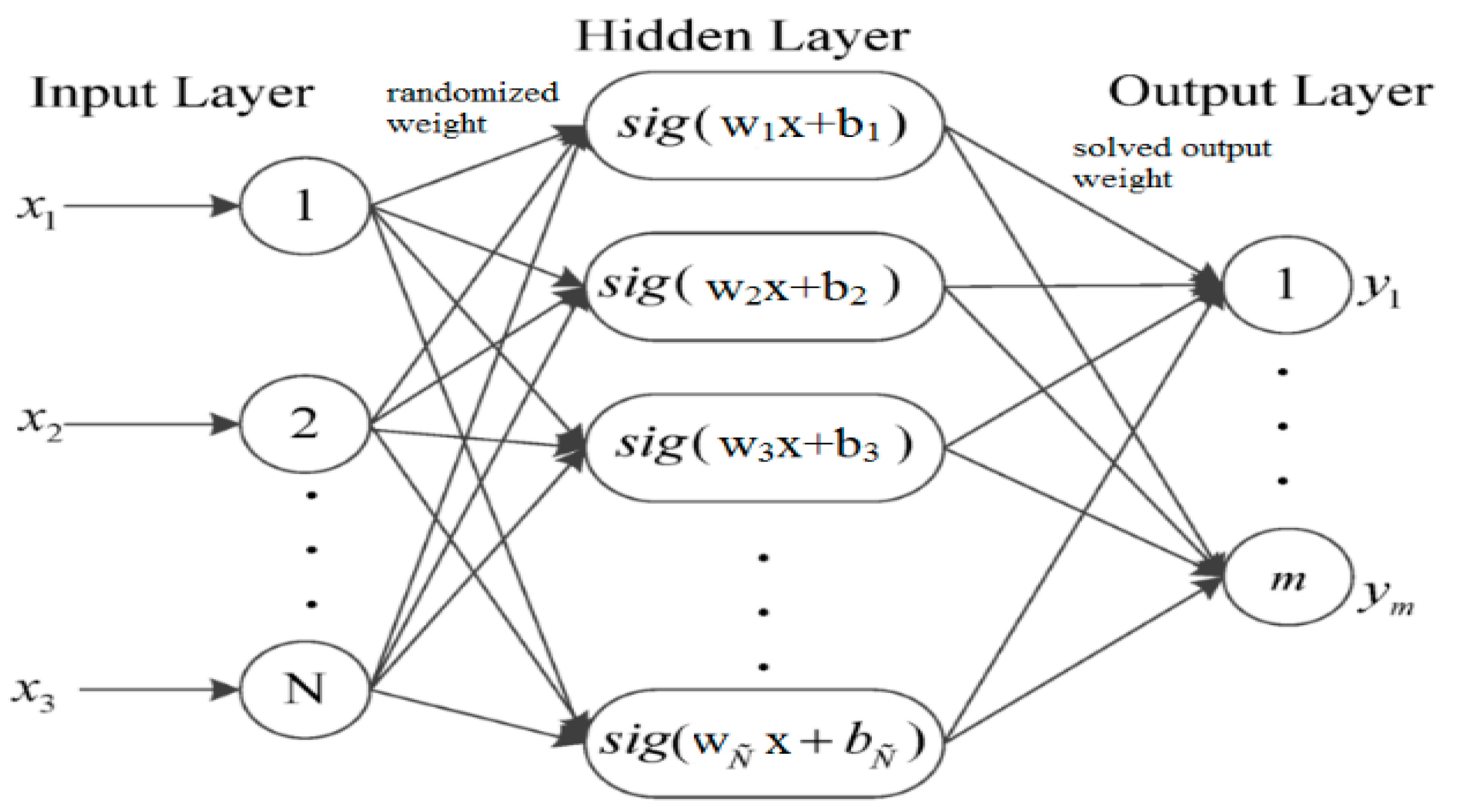

Instead of always estimating the SOC of each cell and then determining the SOC of the pack, a single layer feedforward neural network (SLFN) trained by the extreme learning machine (ELM) is used. For $N$ samples $(x_j, t_j)$, where $x_j$ denotes the input and $t_j$ denotes the output, an SLFN with $\tilde{N}$ hidden neurons and sigmoid activation function $a(x) = 1/(1 + e^{-x})$ is modeled as:

$$\sum_{i=1}^{\tilde{N}} \gamma_i \, a(w_i \cdot x_j + b_i) = o_j, \quad j = 1, \ldots, N$$

where $w_i$ is the weight vector connecting the $i$-th hidden neuron and the input neurons, $\gamma_i$ is the weight vector connecting the $i$-th hidden neuron and the output neurons, and $b_i$ is the bias of the $i$-th hidden neuron. The aim is for the ELM with $\tilde{N}$ hidden neurons and activation function $a(x)$ to approximate the $N$ samples with zero error, $\sum_{j=1}^{N} \lVert o_j - t_j \rVert = 0$. Hence, there exist $\gamma_i$, $w_i$ and $b_i$ so that:

$$\sum_{i=1}^{\tilde{N}} \gamma_i \, a(w_i \cdot x_j + b_i) = t_j, \quad j = 1, \ldots, N \tag{8}$$

Equation (8) can be written compactly as:

$$H \gamma = T$$

where $H$ is called the hidden layer output matrix and $T$ collects the targets $t_j$; the $i$-th column of $H$ is the $i$-th hidden neuron’s output vector with respect to the inputs $x_1, x_2, \ldots, x_N$. Each neuron activation function is sigmoid, as shown in Figure 8.

In ELM, the input weights $w_i$ and biases $b_i$ are randomly chosen. The output weights are analytically computed through the least-squares solution of a linear system:

$$\hat{\gamma} = H^{\dagger} T$$

where $H^{\dagger}$ represents the Moore-Penrose generalized inverse of the matrix $H$. Compared with gradient-based learning algorithms, ELM achieves faster training and better generalization performance (i.e., a small training error and the smallest norm of output weights), avoids local minima, and does not need the activation function of the hidden neurons to be differentiable.
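The batch ELM training described above (random input weights and biases, a sigmoid hidden layer, and output weights obtained from the Moore-Penrose generalized inverse) can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation: the function names `elm_train` and `elm_predict`, the uniform range of the random weights, the number of hidden neurons and the synthetic data (placeholders standing in for voltage, current and capacity features) are all assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def elm_train(X, T, n_hidden, rng):
    """Fit an SLFN by ELM: random hidden layer, least-squares output weights."""
    n_features = X.shape[1]
    # Input weights w_i and biases b_i are drawn once and never tuned.
    W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    H = sigmoid(X @ W + b)          # hidden layer output matrix, shape (N, n_hidden)
    gamma = np.linalg.pinv(H) @ T   # output weights: pseudoinverse of H times targets
    return W, b, gamma


def elm_predict(X, W, b, gamma):
    return sigmoid(X @ W + b) @ gamma


# Synthetic placeholder data (assumed): 3 input features, 1 smooth target.
rng = np.random.default_rng(0)
X = rng.uniform(size=(200, 3))
T = 0.5 * X[:, 0] + 0.3 * X[:, 1] ** 2 - 0.2 * X[:, 2]

W, b, gamma = elm_train(X[:150], T[:150], n_hidden=30, rng=rng)
train_rmse = float(np.sqrt(np.mean((elm_predict(X[:150], W, b, gamma) - T[:150]) ** 2)))
test_rmse = float(np.sqrt(np.mean((elm_predict(X[150:], W, b, gamma) - T[150:]) ** 2)))
print(f"train RMSE = {train_rmse:.5f}, test RMSE = {test_rmse:.5f}")
```

Training reduces to one matrix pseudoinverse, with no learning rate and no iteration, which is the source of the speed advantage claimed over gradient-based learning.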

The term $SOC(k)$ can be approximated using ELM learning. Equation (6) can be rewritten as:

Equation (14) can be expressed in an equivalent form:

where:

and

is the parameter vector.

With measurement data

where $n_t$ is the number of time measurements and the training set

obtained, $SOC(k)$ can be written as:

where $SOC(k) = [SOC_k(2), SOC_k(3), \ldots, SOC_k(n_i)]$ and $H_i = [H_i(1), H_i(2), \ldots, H_i(n_i - 1)]^T$.

By choosing the input weights and bias in the hidden layer nodes randomly, the parameter vector

can be computed as:

Hence, the predicted SOC for the battery pack using the ELM learning can be written as follows:

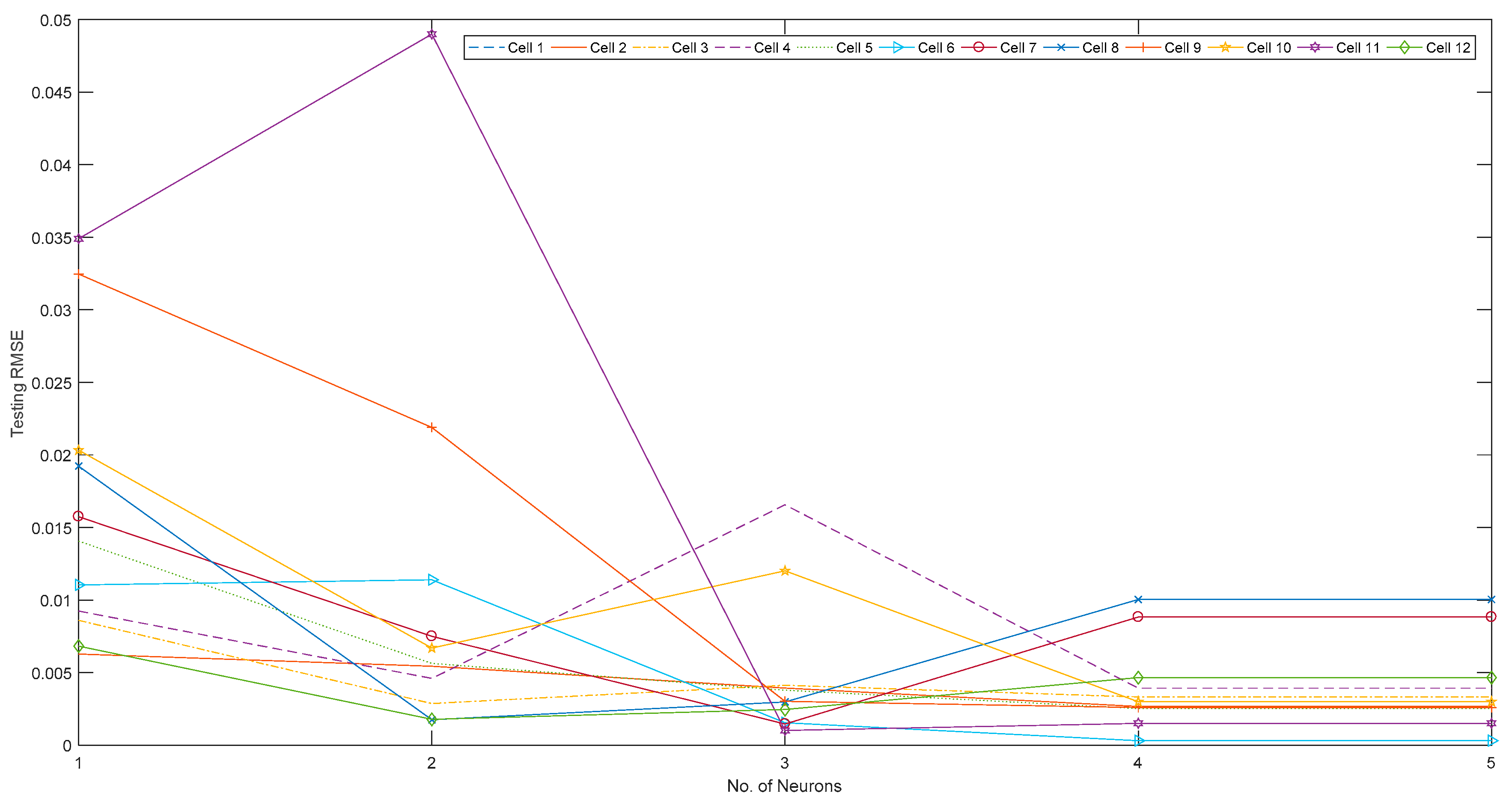

The SOC is first computed from the first set of data obtained from Section 2. Subsequently, ELM learning is used to train and predict the SOC of each cell in (16), followed by the SOC of the battery pack in (18). However, the data arrive at a certain sampling rate, i.e., 1 sample/s, and the basic ELM may not be able to handle such sequential learning needs. As such, the adaptive online sequential ELM (AOS-ELM) is used to learn the data batch by batch, with varying batch sizes. The next set of data is not used until learning on the current set is completed. The AOS-ELM process is as follows:

Step 1: Use a small batch of initial training data from the training set

Step 2: Assign random input weight wi and bi, .

Step 3: Compute the initial hidden layer output matrix, $H_k$:

Step 4: Estimate the initial output weight, where , .

Step 5: Set k = 0.

Step 6: For each i-th cell, compute where and

Step 7: Compute the total SOC for the battery pack, i.e., the 12-cell pack, with $i, j \in \{1, \ldots, N_s\}$.

Step 8: Provide (k + 1)-th batch of new observations as where Nk+1 denotes the number of observations in the (k + 1)-th batch.

Step 9: Compute the partial hidden layer output matrix $H_{k+1}$ for the $(k+1)$-th batch of $N_{k+1}$ observations, as shown:

Step 10: Set target as

Step 11: Compute the output weight $\gamma^{(k+1)}$.

Step 12: Set k = k + 1. Go to Step 6.
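The sequential part of the steps above can be sketched with the standard OS-ELM recursive least-squares update, in which each new batch updates the output weights and is then discarded. This is a hedged sketch rather than the authors' AOS-ELM: the class name `OSELM`, the small ridge term added for numerical stability, the batch sizes and the synthetic data are all assumptions, the per-cell and pack SOC computations of Steps 6 and 7 are omitted, and the "adaptive" aspect is represented only by feeding batches of varying size.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class OSELM:
    """Online sequential ELM sketch; the ridge term is an assumption."""

    def __init__(self, n_features, n_hidden, rng, ridge=1e-3):
        # Step 2: random input weights and biases, fixed after creation.
        self.W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))
        self.b = rng.uniform(-1.0, 1.0, size=n_hidden)
        self.ridge = ridge
        self.P = None       # inverse of the (regularized) Gram matrix
        self.gamma = None   # output weights

    def hidden(self, X):
        return sigmoid(X @ self.W + self.b)

    def init_fit(self, X0, T0):
        # Steps 3-4: initial hidden layer output matrix and output weights.
        H0 = self.hidden(X0)
        self.P = np.linalg.inv(H0.T @ H0 + self.ridge * np.eye(H0.shape[1]))
        self.gamma = self.P @ H0.T @ T0

    def partial_fit(self, X, T):
        # Steps 9-11: update P and the output weights from the new batch only.
        H = self.hidden(X)
        S = np.eye(H.shape[0]) + H @ self.P @ H.T
        self.P = self.P - self.P @ H.T @ np.linalg.solve(S, H @ self.P)
        self.gamma = self.gamma + self.P @ H.T @ (T - H @ self.gamma)

    def predict(self, X):
        return self.hidden(X) @ self.gamma


# Synthetic placeholder data (assumed): 3 input features, 1 smooth target.
rng = np.random.default_rng(0)
X = rng.uniform(size=(140, 3))
T = 0.6 * X[:, 0] + 0.3 * X[:, 1] ** 2 - 0.1 * X[:, 2]

model = OSELM(n_features=3, n_hidden=10, rng=rng)
model.init_fit(X[:40], T[:40])       # Step 1: small initial batch
start = 40
for size in (20, 5, 30, 1, 44):      # Step 8: batches of varying size
    model.partial_fit(X[start:start + size], T[start:start + size])
    start += size                    # Step 12: move on to the next batch

rmse = float(np.sqrt(np.mean((model.predict(X) - T) ** 2)))
print(f"RMSE after sequential learning: {rmse:.4f}")
```

With this update, the weights after the last batch match (up to rounding) the regularized least-squares solution computed on all the data at once, even though no past batch is kept in memory.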