3.1. Study Area

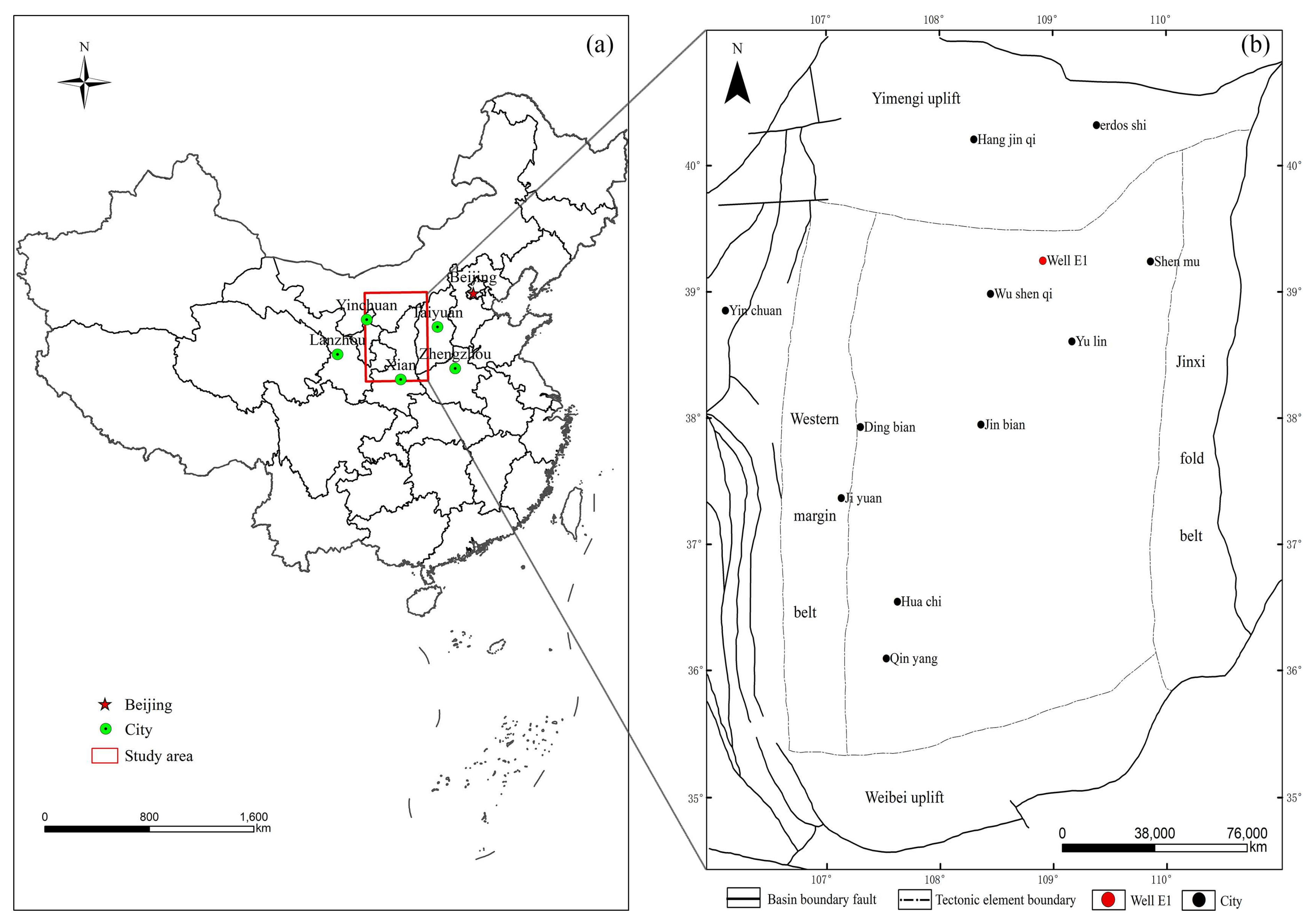

In recent years, PetroChina, Sinopec, Shell, and other companies have carried out extensive research and production activities in the Ordos Basin. Previous studies have shown that the organic matter in most areas of the basin is at the mature stage [40,41,42,43,44]. The vitrinite reflectance (Ro) ranges from 0.85% to 1.20%, the kerogen in the study area is mainly of Types I and II, and the TOC content of the organic-rich shale ranges from 0.23% to 32.86%. The position of the Ordos Basin in China is marked with a red rectangle in Figure 3a, and Well E1 is located in the north-central part of the basin, as shown in Figure 3b.

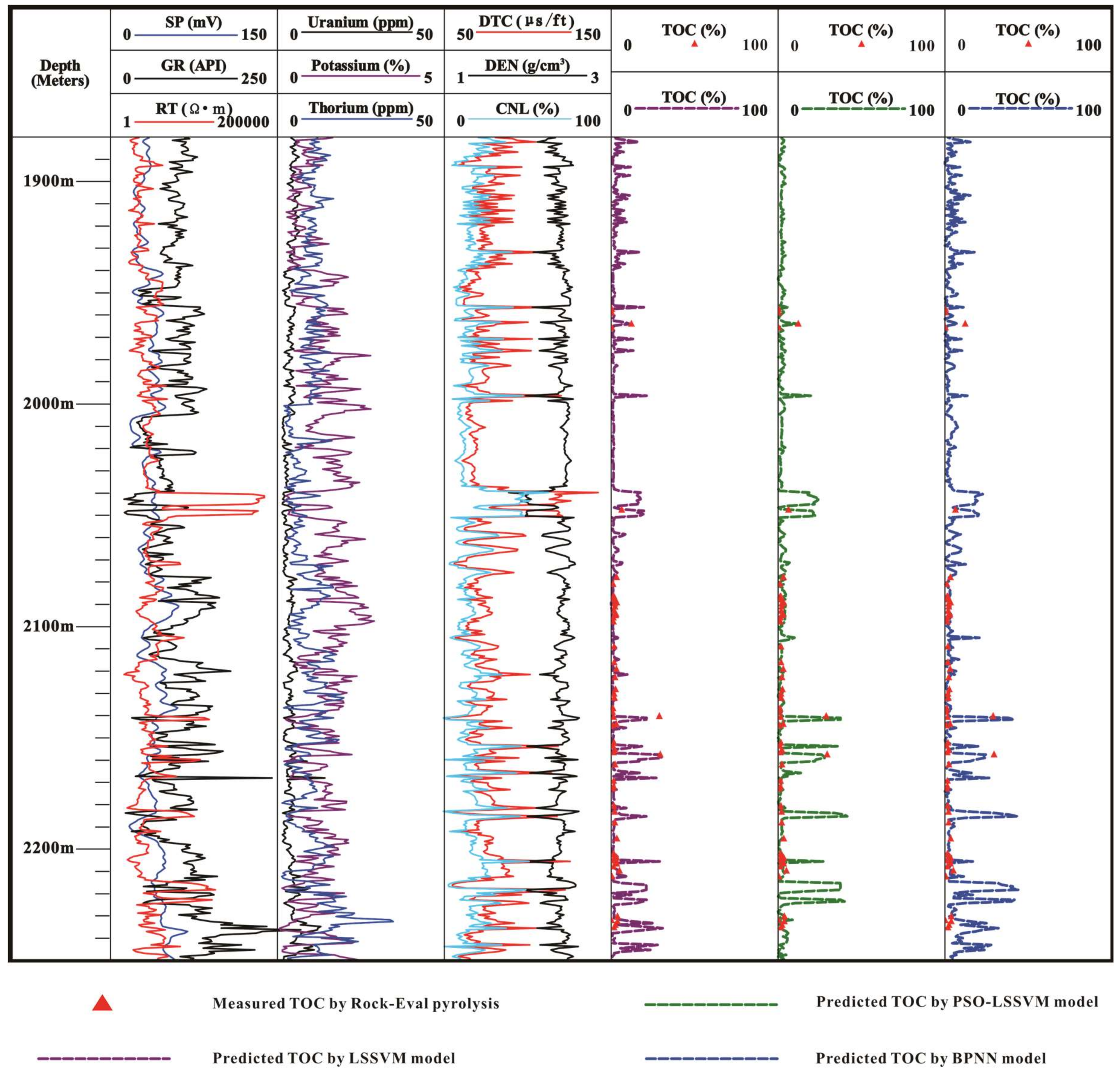

In this study, TOC was measured with a LECO CS-400 carbon-sulfur analyzer (LECO Corp., Saint Joseph, MO, USA) by combustion at temperatures above 800 °C, and a total of 70 TOC data points were obtained from laboratory tests. Well E1, located in Tabudai Village, Wulan County, a suburb of Wushenqi City, was drilled with mud drilling technology starting on 14 September 2015. Drilling was completed on 27 October 2015 at a depth of 2284 m, and the logging operations were carried out on 28 October 2015. The location of the well in the study area is shown in Figure 3b. The logging equipment was the logging system of COSL (China Oilfield Services Limited, Beijing, China). The logs acquired in Well E1 include caliper, spontaneous potential, natural gamma-ray spectroscopy, array acoustic, dual lateral resistivity, lithology density, and neutron porosity logs, all of which were of excellent quality.

Table 1 shows the logging parameters of Well E1 together with the TOC content measured on core samples.

3.2. Data Analysis

The relationship between logging parameters and TOC content differs markedly from area to area, so empirical equations established in previous studies to calculate TOC content cannot be guaranteed to give equally good predictions elsewhere [12,17]. Previous work has likewise found that the functional relationship between logging parameters and TOC content varies between study areas [5,7].

Therefore, to define the relationship between the well logs and TOC content, this study established a simple linear regression between each well log and the TOC content of the core samples through cross-plot analysis. The coefficient of determination (R²) was used as the index to judge whether the correlation between each well log and TOC content was strong.
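The cross-plot regression step can be sketched as follows, assuming an ordinary least-squares fit for the simple linear regression. The helper name `linear_fit_r2` and the toy values are hypothetical and are not data from Well E1.

```python
from statistics import fmean


def linear_fit_r2(x, y):
    """Ordinary least-squares fit y = a*x + b and its coefficient of determination R^2."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx, my = fmean(x), fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx          # slope
    b = my - a * mx        # intercept
    # R^2 = 1 - (residual sum of squares) / (total sum of squares)
    ss_res = sum((yi - (a * xi + b)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return a, b, 1.0 - ss_res / ss_tot


# Toy example: x stands in for one log curve, y for the measured TOC content.
a, b, r2 = linear_fit_r2([1, 2, 3, 4], [1, 3, 2, 4])
print(round(a, 4), round(b, 4), round(r2, 4))
```

Running the fit once per log curve and comparing the R² values reproduces the kind of ranking reported for Figure 4.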

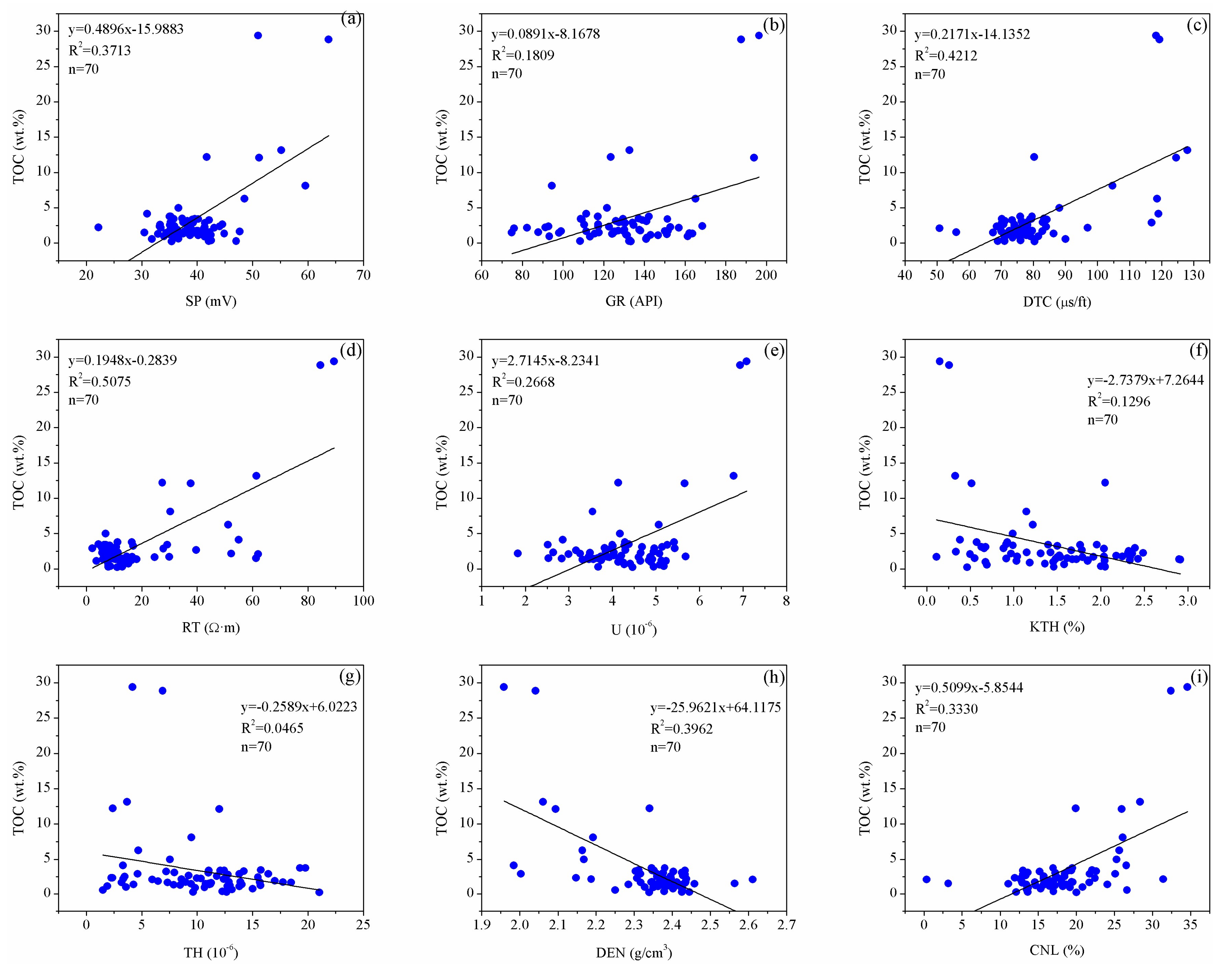

Figure 4 shows the cross plots between the logging parameters and TOC content of Well E1. TOC content is positively correlated with spontaneous potential, gamma ray, acoustic time difference, resistivity, uranium, and neutron porosity, with coefficients of determination of 0.3713, 0.1809, 0.4212, 0.5075, 0.2668, and 0.3330, respectively. By contrast, TOC content is negatively correlated with potassium, thorium, and density, with coefficients of determination of 0.1296, 0.0465, and 0.3962, respectively. Among the simple linear regressions between the well logs and TOC content, the resistivity curve has the largest coefficient of determination and the thorium curve the smallest.

In addition, this study carried out a correlation analysis between the well logs and TOC content to calculate the correlation coefficient between each well log and the TOC content. The calculation equation is as follows:

$$
r = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}}
$$

in which $r$ is the correlation coefficient; $x_i$ and $y_i$ are the corresponding observed values of the two parameters at the $i$th core sample point; $\bar{x}$ and $\bar{y}$ are their average values, respectively; and $n$ is the number of samples.
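A minimal sketch of the correlation coefficient r (the Pearson coefficient), written directly from the three sums it contains; `pearson_r` is a hypothetical helper name. For a simple linear regression, the coefficient of determination equals r², which gives a consistency check on the reported values: the resistivity correlation of 0.7124 in Table 2 squares to about 0.5075, the R² of the resistivity cross plot.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (>= 2)")
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))  # co-variation term
    sxx = sum((xi - mx) ** 2 for xi in x)                      # spread of x
    syy = sum((yi - my) ** 2 for yi in y)                      # spread of y
    return sxy / (math.sqrt(sxx) * math.sqrt(syy))


# Consistency check with the reported RT-TOC values: r**2 should match R^2.
print(round(0.7124 ** 2, 4))  # 0.5075
```

Python 3.10 and later also provide `statistics.correlation`, which computes the same quantity.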

Table 2 shows the calculated correlation coefficient matrix. The correlation coefficient between the resistivity curve and TOC content is the highest (0.7124). In addition, the spontaneous potential, acoustic time difference, density, neutron porosity, uranium, and gamma-ray curves also show relatively high correlation coefficients with TOC content.

In summary, the cross-plot and correlation analyses show that none of the well logs has a one-to-one functional relationship with TOC content, but the sensitivity of the different well logs to TOC content differs significantly. The log response characteristics of TOC content are summarized in Table 3.

3.3. Data Optimization

The 70 sample points of the well were divided into two classes by fuzzy c-means clustering analysis. The first class, called high-quality sample points, comprises the data that best reflect the functional relationship between TOC content and the log curves; the second class, called low-quality sample points, comprises the data that cannot reflect this relationship. The nine log curves mentioned above were used as the classification indices. Because the log data have different dimensions and orders of magnitude, they were first normalized to ensure a good classification result. The normalization equation is as follows:

$$
x'_{nl} = \frac{x_{nl} - x_{l,\min}}{x_{l,\max} - x_{l,\min}}
$$

in which $x'_{nl}$ is the index value after normalization; $x_{nl}$ is the $l$th index of the $n$th sample; and $x_{l,\max}$ and $x_{l,\min}$ are the maximum and minimum values of the $l$th index over all samples, respectively.
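The column-wise min-max normalization can be sketched as follows. `min_max_normalize` is a hypothetical helper; mapping a constant column to 0 is an assumption, because the normalization equation is undefined when the maximum equals the minimum.

```python
def min_max_normalize(samples):
    """Scale each index (column) of the samples (rows) to [0, 1] by min-max normalization."""
    columns = list(zip(*samples))
    mins = [min(col) for col in columns]
    maxs = [max(col) for col in columns]
    normalized = []
    for row in samples:
        normalized.append([
            # Assumption: a constant column (max == min) is mapped to 0.0.
            (value - lo) / (hi - lo) if hi > lo else 0.0
            for value, lo, hi in zip(row, mins, maxs)
        ])
    return normalized


# Three hypothetical samples with two indices of very different magnitudes.
print(min_max_normalize([[10, 200], [20, 100], [30, 300]]))
```

After scaling, every index lies in [0, 1], so no single log curve dominates the distance computations used in clustering.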

Following the normalization, the sample data were classified using a fuzzy

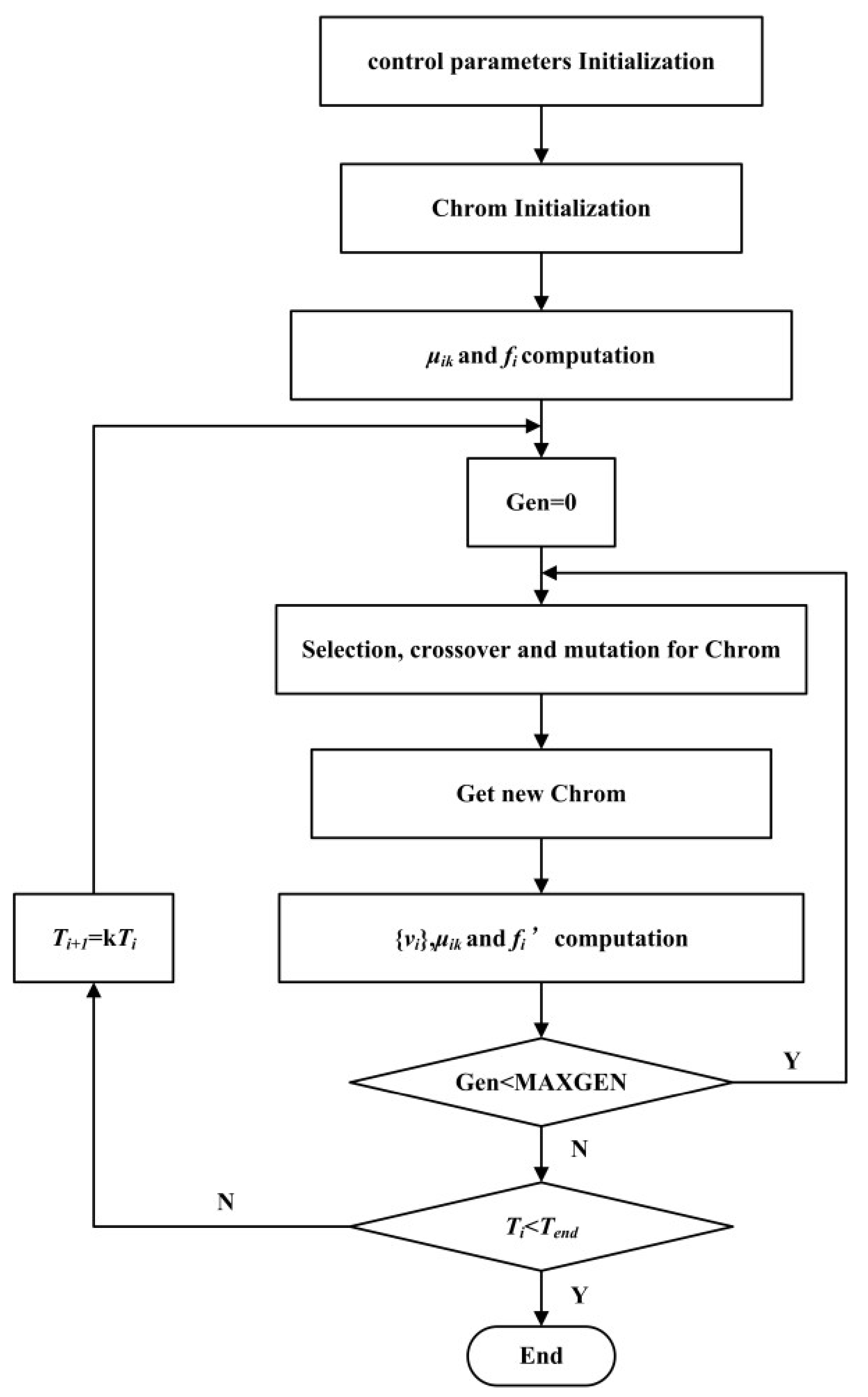

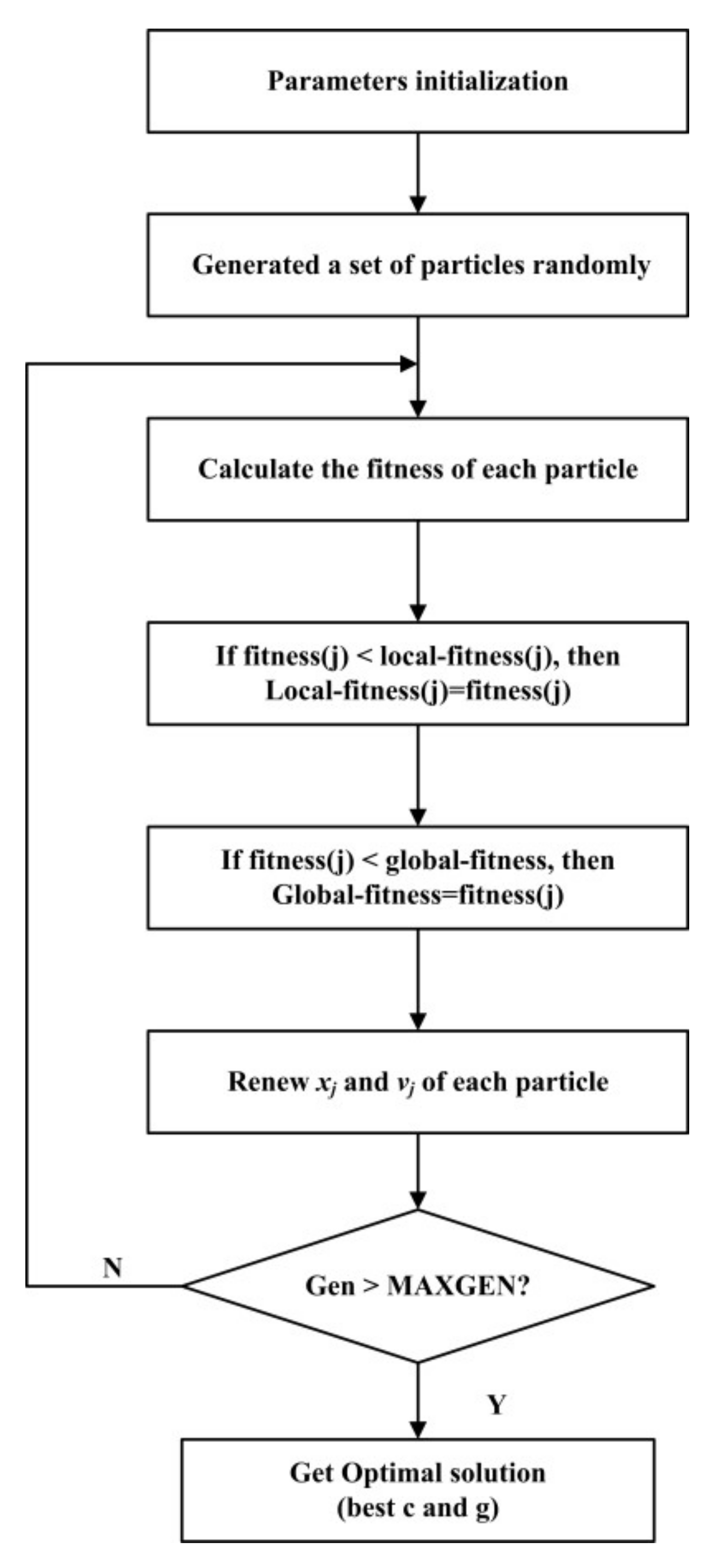

c-means clustering method based on a genetic simulated annealing algorithm. The algorithm in this study involved the control parameters that are shown in

Table 4.

In Table 4, b is the weighting exponent, which controls the distribution of the membership degrees and the fuzziness of the clusters; N is the maximum number of iterations; D is the termination tolerance of the objective function; sizepop is the population size; MAXGEN is the maximum number of generations; Pc is the crossover probability; Pm is the mutation probability; T0 is the initial annealing temperature; k is the cooling coefficient; and Tend is the end temperature.
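To illustrate how T0, k, and Tend interact, the sketch below assumes the common geometric cooling rule T ← k·T and the Metropolis acceptance criterion. The source does not state the exact schedule or give the Table 4 values here, so both the rule and the example numbers are assumptions, and the function names are hypothetical.

```python
import math
import random


def annealing_temperatures(t0, k, t_end):
    """Assumed geometric cooling: T_{m+1} = k * T_m, stopping once T drops below t_end."""
    if not (0.0 < k < 1.0) or t_end <= 0.0 or t0 <= t_end:
        raise ValueError("need 0 < k < 1 and t0 > t_end > 0")
    temps = []
    t = t0
    while t >= t_end:
        temps.append(t)
        t *= k
    return temps


def metropolis_accept(delta, temperature, rng=random):
    """Always accept an improvement (delta <= 0); accept a worse candidate
    with probability exp(-delta / T), which shrinks as T cools."""
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)


# Illustrative values only (not the Table 4 settings): T0 = 100, k = 0.5, Tend = 10.
print(annealing_temperatures(100.0, 0.5, 10.0))
```

With these assumptions, a smaller k or a larger Tend shortens the annealing run, while a larger T0 lets the search accept worse candidates more often early on.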

The samples were classified into high-quality and low-quality classes, and the membership degree of each sample in each of the two classes was calculated. Each sample was then assigned to the class in which it had the larger membership degree.
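A minimal sketch of the clustering and maximum-membership assignment, using plain fuzzy c-means with weighting exponent b, an iteration limit (N), and an objective tolerance (D). It deliberately omits the genetic simulated annealing search (sizepop, MAXGEN, Pc, Pm, T0, k, Tend) that SAGA-FCM uses to optimize the clustering, so it is not the authors' implementation; the function names and toy data are hypothetical.

```python
import random


def fcm(data, c=2, b=2.0, max_iter=100, tol=1e-6, seed=0):
    """Plain fuzzy c-means (no genetic/annealing search).

    data: list of samples (rows), assumed already min-max normalized.
    b: weighting exponent (> 1); max_iter and tol play the roles of N and D.
    Returns (centers, u) where u[k][i] is the membership of sample k in cluster i.
    """
    if b <= 1.0:
        raise ValueError("weighting exponent b must be > 1")
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])

    # Random initial memberships; each sample's memberships sum to 1.
    u = []
    for _ in range(n):
        w = [rng.random() + 1e-9 for _ in range(c)]
        total = sum(w)
        u.append([wi / total for wi in w])

    centers = []
    prev_obj = float("inf")
    for _ in range(max_iter):
        # Cluster centers: means of the samples weighted by u**b.
        centers = []
        for i in range(c):
            wts = [u[k][i] ** b for k in range(n)]
            total = sum(wts)
            centers.append([sum(wts[k] * data[k][d] for k in range(n)) / total
                            for d in range(dim)])

        # Squared Euclidean distances, floored to avoid division by zero.
        d2 = [[max(sum((data[k][d] - centers[i][d]) ** 2 for d in range(dim)), 1e-12)
               for i in range(c)] for k in range(n)]

        # Membership update: u_ki = 1 / sum_j (d2_ki / d2_kj) ** (1 / (b - 1)).
        u = [[1.0 / sum((d2[k][i] / d2[k][j]) ** (1.0 / (b - 1.0)) for j in range(c))
              for i in range(c)] for k in range(n)]

        # Objective J = sum_k sum_i u_ki**b * d2_ki; stop when its change is below tol.
        obj = sum(u[k][i] ** b * d2[k][i] for k in range(n) for i in range(c))
        if abs(prev_obj - obj) < tol:
            break
        prev_obj = obj
    return centers, u


def hard_labels(u):
    """Assign each sample to the cluster with the largest membership degree."""
    return [max(range(len(row)), key=row.__getitem__) for row in u]


# Two well-separated toy groups of normalized samples.
toy = [[0.0, 0.0], [0.05, 0.0], [0.0, 0.05], [1.0, 1.0], [0.95, 1.0], [1.0, 0.95]]
centers, u = fcm(toy)
print(hard_labels(u))
```

Cluster indices returned by fuzzy c-means are arbitrary, so deciding which cluster is the high-quality class is a separate step not shown in this sketch.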

Table 5 shows the membership matrix of the samples: HQ and PQ are the membership degrees of each sample point in the high-quality and low-quality classes, respectively, as calculated by the SAGA-FCM method. If the HQ value is less than the PQ value, the sample is classified as low-quality. As can be seen from Table 5, there are 61 high-quality sample points in total, and the remaining nine sample points are low-quality.

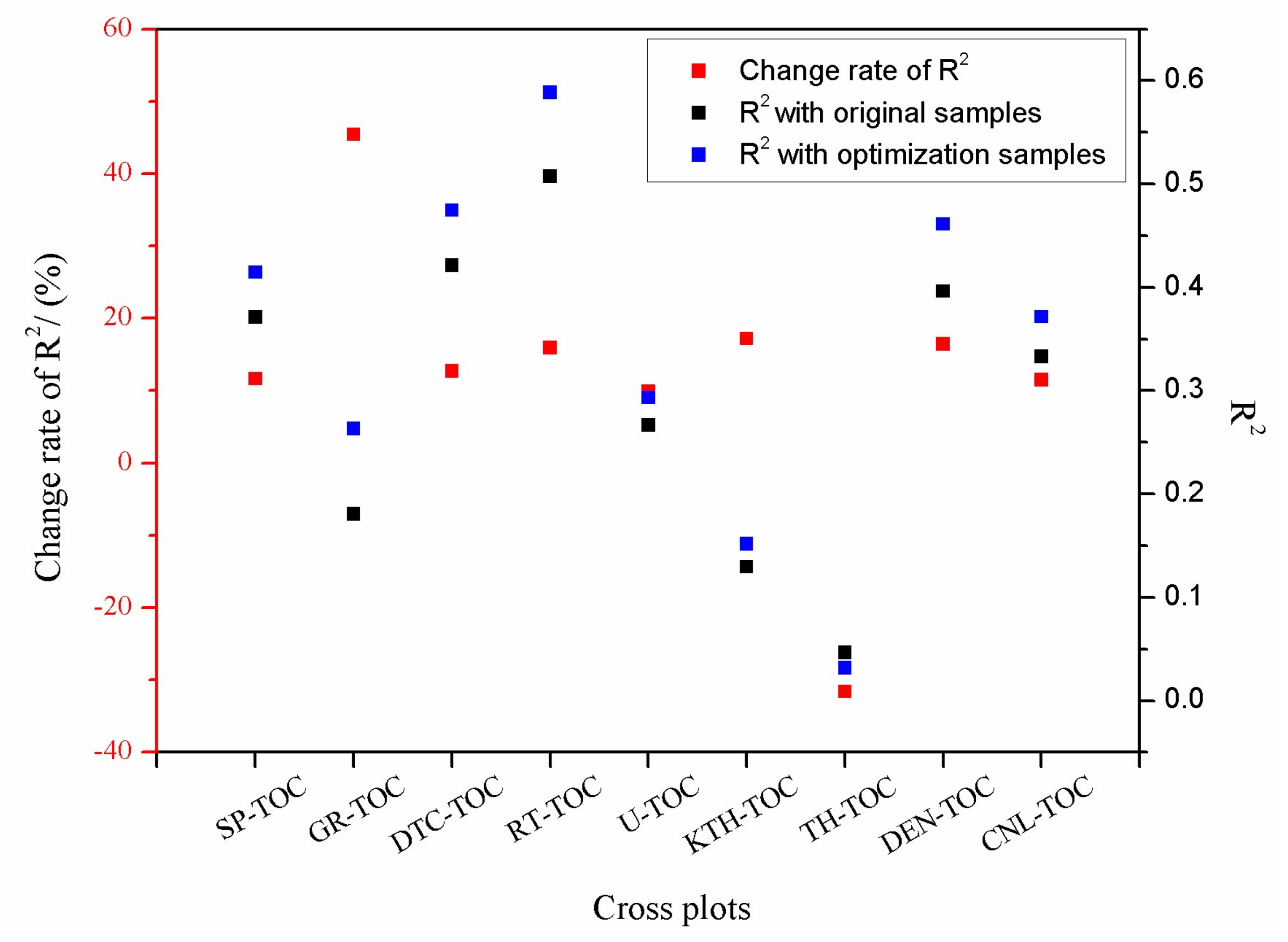

Cross-plot and correlation analyses were then carried out for the 61 high-quality samples, with the results shown in Table 6. Compared with the results for the sample data before optimization (Figure 4 and Table 2), both the coefficients of determination and the correlation coefficients are markedly improved for most logs. To quantify this, the coefficient of determination was compared before and after the optimization of the sample data, and the change rate of R² was calculated by the following equation:

$$
G = \frac{R^2_{\mathrm{opt}} - R^2_{\mathrm{ori}}}{R^2_{\mathrm{ori}}} \times 100\% \tag{18}
$$

in which $R^2_{\mathrm{opt}}$ is the R² of the optimized samples, $R^2_{\mathrm{ori}}$ is the R² of the original samples, and $G$ is the change rate of R² before and after the optimization of the sample data.
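The change rate G can be computed directly; the sketch below reproduces the SP-TOC example reported for Figure 5, where R² rises from 0.3713 to 0.4146. `r2_change_rate` is a hypothetical helper name.

```python
def r2_change_rate(r2_opt, r2_orig):
    """Change rate G (in percent) of R^2 before and after sample optimization, Eq. (18)."""
    if r2_orig <= 0:
        raise ValueError("original R^2 must be positive")
    return (r2_opt - r2_orig) / r2_orig * 100.0


# SP-TOC example: original R^2 = 0.3713, optimized R^2 = 0.4146.
print(f"{r2_change_rate(0.4146, 0.3713):.4f}")  # 11.6617
```

Because G is relative to the original R², a small absolute gain on a weak correlation (such as GR-TOC) can produce a large percentage change.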

A value of G > 0 means that the optimization is effective, whereas G < 0 means that it is ineffective. As shown in Figure 5, the coefficients of determination (R²) of the simple linear regressions between the well logs and TOC content increased markedly after the optimization of the sample data. The natural gamma-ray curve shows the largest change, with a change rate of R² of 45.4395% for GR-TOC. Because there is almost no correlation between the thorium curve and TOC content, its coefficient of determination changed negatively (the change rate of R² for TH-TOC is −31.6129%). The coefficients of determination for the spontaneous potential, acoustic time difference, resistivity, uranium, potassium, density, and compensated neutron curves all increased. Taking SP-TOC in Figure 5 as an example, R² is 0.3713 with the original sample data and 0.4146 with the optimized sample data, so the change rate of R² calculated by Equation (18) is 11.6617%. The change rates of R² for DTC-TOC, RT-TOC, U-TOC, KTH-TOC, DEN-TOC, and CNL-TOC are 12.7018%, 15.9803%, 9.8951%, 17.2068%, 16.4311%, and 11.5315%, respectively. Overall, these results show that the optimization of the sample data is effective.
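Applying the G > 0 rule to the change rates reported in this section gives a quick tally of where the optimization helped. The values are copied from the text; the dictionary layout is only illustrative.

```python
# Change rates of R^2 (%) as reported in the text for each log-TOC pair.
change_rates = {
    "SP": 11.6617, "GR": 45.4395, "TH": -31.6129,
    "DTC": 12.7018, "RT": 15.9803, "U": 9.8951,
    "KTH": 17.2068, "DEN": 16.4311, "CNL": 11.5315,
}

# G > 0: optimization effective for that log; G < 0: ineffective.
effective = [log for log, g in change_rates.items() if g > 0]
ineffective = [log for log, g in change_rates.items() if g < 0]
largest = max(change_rates, key=lambda log: abs(change_rates[log]))

print(f"effective: {len(effective)}/{len(change_rates)}")
print("ineffective:", ineffective)
print("largest |G|:", largest)
```

Eight of the nine log curves have G > 0, with thorium the only exception, which matches the conclusion that the optimization is effective overall.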