Refined Diebold-Mariano Test Methods for the Evaluation of Wind Power Forecasting Models

Abstract

1. Introduction

2. The Evaluation Criteria for Wind Power Forecasting Models

2.1. Traditional Evaluation Criteria and Their Limitations

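| Index | Abbreviation | Specification |
|---|---|---|
| Mean Squared Error | MSE | $\mathrm{MSE} = \frac{1}{T}\sum_{t=1}^{T}(y_t - \hat{y}_t)^2$ |
| Root Mean Squared Error | RMSE | $\mathrm{RMSE} = \sqrt{\mathrm{MSE}}$ |
| Mean Absolute Error | MAE | $\mathrm{MAE} = \frac{1}{T}\sum_{t=1}^{T}\lvert y_t - \hat{y}_t \rvert$ |
| Mean Absolute Percentage Error (%) | MAPE | $\mathrm{MAPE} = \frac{100}{T}\sum_{t=1}^{T}\left\lvert \frac{y_t - \hat{y}_t}{y_t} \right\rvert$ |
| Maximum Error | ME | $\mathrm{ME} = \max_{t} \lvert y_t - \hat{y}_t \rvert$ |
| Theil Inequality Coefficient | TIC | $\mathrm{TIC} = \frac{\sqrt{\frac{1}{T}\sum_{t=1}^{T}(y_t - \hat{y}_t)^2}}{\sqrt{\frac{1}{T}\sum_{t=1}^{T}y_t^2} + \sqrt{\frac{1}{T}\sum_{t=1}^{T}\hat{y}_t^2}}$ |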
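The indices in this table are simple functions of the errors $y_t - \hat{y}_t$. The following is a minimal Python sketch, assuming the standard textbook definitions (division by the sample size T, MAPE in percent, TIC in Theil's U1 form). The function name `evaluation_criteria` is illustrative only.

```python
import math
from typing import Dict, Sequence


def evaluation_criteria(y: Sequence[float], y_hat: Sequence[float]) -> Dict[str, float]:
    """Traditional error indices for one forecast series (illustrative sketch).

    Uses e_t = y_t - y_hat_t and divides by the sample size T.
    MAPE assumes that no actual value y_t is zero.
    """
    if len(y) != len(y_hat) or len(y) == 0:
        raise ValueError("y and y_hat must be non-empty and of equal length")
    n = len(y)  # sample size T
    errors = [actual - fc for actual, fc in zip(y, y_hat)]

    mse = sum(e * e for e in errors) / n
    rmse = math.sqrt(mse)
    mae = sum(abs(e) for e in errors) / n
    mape = 100.0 / n * sum(abs(e / actual) for e, actual in zip(errors, y))
    me = max(abs(e) for e in errors)
    # Theil inequality coefficient: RMSE scaled by the root mean squares of y and y_hat.
    rms_y = math.sqrt(sum(v * v for v in y) / n)
    rms_f = math.sqrt(sum(v * v for v in y_hat) / n)
    tic = rmse / (rms_y + rms_f)
    return {"MSE": mse, "RMSE": rmse, "MAE": mae, "MAPE": mape, "ME": me, "TIC": tic}


metrics = evaluation_criteria([10.0, 12.0, 8.0], [11.0, 10.0, 8.0])
print({name: round(value, 4) for name, value in metrics.items()})
```

Wind power output can be exactly zero (for example, below the cut-in wind speed). In that case MAPE is undefined, and the sketch raises `ZeroDivisionError` instead of silently skipping those points.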

2.2. Diebold-Mariano Test

Let $\{y_t;\ t = 1, \ldots, T\}$ denote the actual series and $\{\hat{y}_{it};\ t = 1, \ldots, T\}$ denote the ith competing h-step forecasting series (i = 1, 2, 3, …, m), where m is the number of forecasting models. The h-step forecasting errors are:

$$e_{it} = y_t - \hat{y}_{it}, \qquad t = 1, \ldots, T.$$

For two competing models i and j and a loss function $g(\cdot)$, the loss differential is $d_t = g(e_{it}) - g(e_{jt})$, and the null hypothesis of equal forecasting accuracy is $H_0: E(d_t) = 0$. The DM statistic is:

$$DM = \frac{\bar{d}}{\sqrt{\hat{V}/T}}, \qquad \bar{d} = \frac{1}{T}\sum_{t=1}^{T} d_t, \qquad \hat{V} = \hat{\gamma}_0 + 2\sum_{k=1}^{h-1}\hat{\gamma}_k,$$

where $\hat{\gamma}_k$ is the sample autocovariance of $d_t$ at lag k, and $\hat{V}$ is a consistent estimator of the asymptotic variance of $\sqrt{T}\,\bar{d}$. The autocovariance terms are included because the loss differentials $d_t$ are serially correlated for h > 1. Since the DM statistic converges to a standard normal distribution under $H_0$, we can reject the null hypothesis at the 5% level if |DM| > 1.96. This condition corresponds to zones A and C in Figure 1. Otherwise, if |DM| ≤ 1.96, we cannot reject $H_0$, and this case corresponds to zone B in Figure 1.
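The test described above can be sketched in a few lines of Python. The sketch assumes the standard Diebold-Mariano construction: errors $e_{it} = y_t - \hat{y}_{it}$, loss differential $d_t = g(e_{1t}) - g(e_{2t})$, and long-run variance $\hat{\gamma}_0 + 2\sum_{k=1}^{h-1}\hat{\gamma}_k$ with autocovariances divided by T. The name `dm_test` is hypothetical. The p-value uses the N(0, 1) limit behind the 1.96 rule, and no small-sample refinement such as Student-t critical values is applied.

```python
import math
from typing import Callable, Sequence, Tuple


def dm_test(
    y: Sequence[float],
    f1: Sequence[float],
    f2: Sequence[float],
    loss: Callable[[float], float] = lambda e: e * e,
    h: int = 1,
) -> Tuple[float, float]:
    """Diebold-Mariano statistic and two-sided normal p-value (sketch).

    d_t = loss(y_t - f1_t) - loss(y_t - f2_t); a negative DM means that
    model 1 has the smaller average loss.
    """
    n = len(y)
    if not (n == len(f1) == len(f2)) or n < 2:
        raise ValueError("series must have equal length >= 2")
    d = [loss(actual - fc1) - loss(actual - fc2) for actual, fc1, fc2 in zip(y, f1, f2)]
    d_bar = sum(d) / n

    def autocov(k: int) -> float:
        # Sample autocovariance of d_t at lag k, divided by n.
        return sum((d[t] - d_bar) * (d[t - k] - d_bar) for t in range(k, n)) / n

    # Estimated asymptotic variance of sqrt(n) * d_bar: gamma_0 + 2 * sum_{k=1}^{h-1} gamma_k.
    v = autocov(0) + 2.0 * sum(autocov(k) for k in range(1, h))
    if v <= 0:
        raise ValueError("non-positive variance estimate; DM statistic undefined")
    dm = d_bar / math.sqrt(v / n)
    p_value = math.erfc(abs(dm) / math.sqrt(2.0))  # two-sided p-value under N(0, 1)
    return dm, p_value


dm, p = dm_test([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0], [2.0, 3.0, 1.0, 4.0])
print(f"DM = {dm:.4f}, p = {p:.4f}")
```

Passing `loss=abs` gives the absolute-error version (DM-AE) and the default gives the squared-error version (DM-SE). With h > 1, the truncated variance estimate can turn negative in small samples. The sketch raises an error in that case rather than guessing a correction.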

2.3. Augmented DM Test

2.4. Asymmetric DM Test

In the Type I asymmetric loss function, p is a positive integer-valued power parameter and a is the asymmetric index parameter. If a = 1, the Type I asymmetric loss function reduces to a symmetric loss function; if, in addition, p = 2, it reduces to the squared-error loss function.
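The Type I specification is not reproduced in this section, so the following is only a hedged illustration. It is one piecewise power loss that weights positive errors by a and satisfies both reductions stated above: symmetric when a = 1, squared error when a = 1 and p = 2. Which sign receives the weight, and the function name, are assumptions rather than the authors' definition.

```python
def type1_asymmetric_loss(e: float, a: float, p: int) -> float:
    """Hypothetical Type I asymmetric loss: a * |e|**p if e > 0, else |e|**p.

    ASSUMPTION: the weight a applies to positive errors. With a = 1 the loss
    is the symmetric |e|**p; with a = 1 and p = 2 it is the squared error.
    """
    if p < 1 or int(p) != p:
        raise ValueError("p must be a positive integer")
    weight = a if e > 0 else 1.0  # asymmetric index applied to positive errors only
    return weight * abs(e) ** p


print(type1_asymmetric_loss(2.0, 3.0, 1), type1_asymmetric_loss(-2.0, 3.0, 1))
```

With a > 1, a positive error costs a times as much as a negative error of the same size. This is one way to encode the heavier penalty on large positive errors discussed in Section 3.6.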

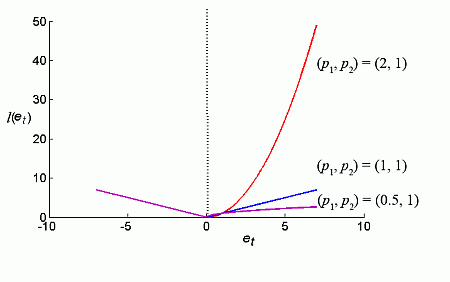

In the Type II asymmetric loss function, p1 and p2 are positive integer-valued asymmetric power parameters. If p1 = p2 = 2, the Type II asymmetric loss function reduces to the squared-error loss function; if p1 = p2 = 1, it reduces to the absolute-error loss function.
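As with Type I, the exact Type II formula is not reproduced here. The sketch below is a hypothetical piecewise form that uses power p1 for positive errors and p2 otherwise. It agrees with the two reductions stated above, but the assignment of p1 and p2 to the two signs is an assumption.

```python
def type2_asymmetric_loss(e: float, p1: int, p2: int) -> float:
    """Hypothetical Type II asymmetric loss: |e|**p1 if e > 0, else |e|**p2.

    ASSUMPTION: p1 applies to positive errors and p2 to non-positive ones.
    p1 = p2 = 2 gives the squared error; p1 = p2 = 1 gives the absolute error.
    """
    for p in (p1, p2):
        if p < 1 or int(p) != p:
            raise ValueError("p1 and p2 must be positive integers")
    return abs(e) ** (p1 if e > 0 else p2)


print(type2_asymmetric_loss(3.0, 2, 1), type2_asymmetric_loss(-3.0, 2, 1))
```

A higher power penalizes errors with |e| > 1 more heavily but errors with |e| < 1 less heavily. The effect of choosing p1 ≠ p2 therefore depends on the scale (units) of the forecasting errors.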

3. Case Study and Results

3.1. Data

3.2. Forecasting Models

3.3. Forecasting Performance

| Models | MAE | MSE |
|---|---|---|
| Model A: GARCH | 1.916871 | 4.315878 |
| Model B: TAR | 2.571685 | 10.08665 |
| Model C: ARMA | 2.614892 | 10.02256 |

3.4. Forecasting Evaluation Based on DM Test

| Statistic | DM test based on Model A and Model B | DM test based on Model B and Model C | DM test based on Model C and Model A |
|---|---|---|---|
| DM-AE | −1.5168 | −0.0852 | 1.7401 |
| p-Value_DM-AE | 0.1429 | 0.9328 | 0.0952 |
| DM-SE | −2.3647 | 0.0213 | 2.5044 |
| p-Value_DM-SE | 0.026861 | 0.9832 | 0.0198 |

- (1) According to the DM test based on the absolute-error loss, |DM-AE| = 1.5168 < 1.96, so the null hypothesis cannot be rejected at the 5% level of significance. That is, the observed difference between the forecasting performance of model A and model B is not significant and might be due to random variation.

- (2) According to the DM test based on the squared-error loss, |DM-SE| = 2.3647 > 1.96, so the null hypothesis is rejected at the 5% level of significance. That is, the observed difference is significant, and the forecasting accuracy of model A is better than that of model B (model A also has a much lower MSE: 4.315878 versus 10.08665).
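The 5% decision rule |DM| > 1.96 can be applied mechanically to the DM-AE and DM-SE values in the table of this section. The sketch below assumes that each statistic is computed from the first model's loss minus the second model's loss, so that a negative value favors the first model. This matches the MSE ranking in Section 3.3. The dictionary and the `decide` helper are illustrative names.

```python
# DM statistics copied from the table above, keyed by model pair in the table's order.
dm_table = {
    "DM-AE": {"A vs B": -1.5168, "B vs C": -0.0852, "C vs A": 1.7401},
    "DM-SE": {"A vs B": -2.3647, "B vs C": 0.0213, "C vs A": 2.5044},
}

CRITICAL_5PCT = 1.96  # two-sided 5% critical value of N(0, 1)


def decide(dm: float, critical: float = CRITICAL_5PCT) -> str:
    """Apply the |DM| > critical rule; the sign identifies the more accurate model."""
    if abs(dm) <= critical:
        return "cannot reject H0"
    # ASSUMPTION: d_t = loss(first model) - loss(second model), so DM < 0 favors the first.
    return "reject H0: first model better" if dm < 0 else "reject H0: second model better"


for loss_name, row in dm_table.items():
    for pair, dm in row.items():
        print(f"{loss_name} {pair}: DM = {dm:+.4f} -> {decide(dm)}")
```

Only the two squared-error comparisons involving model A are rejected. The p-values reported in the table lead to the same conclusions at the 5% level.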

3.5. Forecasting Evaluation Based on Augmented DM Test

3.6. Forecasting Evaluation Based on Asymmetric DM Test

| Statistic | Comparison of model A and model B | Comparison of model B and model C | Comparison of model C and model A |
|---|---|---|---|
| DM-aI | −2.2429 | −0.7747 | 3.1649 |
| p-Value_DM-aI | 0.02966 | 0.4424 | 0.002721 |
| DM-aII | −1.711 | −0.3481 | 2.2932 |
| p-Value_DM-aII | 0.09367 | 0.7293 | 0.02635 |

- (1) Different loss functions can lead to different DM test results. According to DM-AE in Table 3, the forecasting accuracy of model A and model C is not significantly different (|DM-AE| = 1.7401 < 1.96). However, both the DM-aI test (DM = 3.1649, p = 0.002721) and the DM-aII test (DM = 2.2932, p = 0.02635) indicate a significant difference between the two models at the 5% level. Consequently, choosing an appropriate loss function helps to select the better model.

- (2) The asymmetric loss functions can penalize large positive forecasting errors $e_t$ more heavily. If the positive forecasting errors are large enough, the null hypothesis of the DM test based on asymmetric loss tends to be rejected. Model C has several large positive forecasting errors, whereas model A performs well in this respect. The asymmetric DM test therefore indicates that model C is worse than model A.

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Chen, H.; Wan, Q.; Wang, Y. Refined Diebold-Mariano Test Methods for the Evaluation of Wind Power Forecasting Models. Energies 2014, 7, 4185-4198. https://doi.org/10.3390/en7074185

Chen, Hao, Qiulan Wan, and Yurong Wang. 2014. "Refined Diebold-Mariano Test Methods for the Evaluation of Wind Power Forecasting Models." Energies 7, no. 7: 4185-4198. https://doi.org/10.3390/en7074185