3.1. Opposition-Based Learning Population Initialization

Opposition-based learning (OBL) was first proposed by Tizhoosh [13]. OBL simultaneously considers a solution and its opposite solution; the fitter of the two is then chosen as a candidate solution in order to accelerate convergence and improve solution accuracy. It has been used to enhance various optimization algorithms, such as differential evolution [14], particle swarm optimization [15], the firefly algorithm [16], the adaptive fireworks algorithm [17] and the quantum firework algorithm [18]. Inspired by these, OBL is added to FWA to initialize the population.

Definition 1. Assume X = (x1, x2, ..., xd) is a solution with d dimensions, where x1, x2, ..., xd ∈ R and xi ∈ [Li, Ui], i = 1, 2, ..., d. The opposite solution OX = (ox1, ox2, ..., oxd) is defined as follows:

oxi = Li + Ui − xi, i = 1, 2, ..., d. (6)

In fact, according to probability theory, 50% of the time an opposite solution is better. Therefore, based on a solution and an opposite solution, OBL has the potential to accelerate convergence and improve solution accuracy.

In the population initialization, both a random solution and an opposite solution OP are considered to obtain fitter starting candidate solutions.

Algorithm 4 is performed for opposition-based population initialization as follows.

| Algorithm 4: Opposition-Based Population |

| Initialize fireworks P with a size of N randomly |

| Calculate the opposite fireworks OP based on Equation (6) |

| Assess the fitness of all 2 × N fireworks |

| Choose the N fittest individuals from P and OP as initial fireworks |
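The steps of Algorithm 4 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: it assumes a minimization problem, takes the opposite point in its standard OBL form (lower bound plus upper bound minus the point), and the names `obl_initialize` and `sphere` are hypothetical.

```python
import numpy as np

def sphere(x):
    """Example objective (minimization); any callable on a 1-D array works."""
    return float(np.sum(x ** 2))

def obl_initialize(objective, lower, upper, n, rng):
    """Return the n fittest points among n random fireworks and their opposites."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    # Random fireworks P, one row per firework.
    p = rng.uniform(lower, upper, size=(n, lower.size))
    # Opposite fireworks OP (assumed standard OBL form): ox_i = L_i + U_i - x_i.
    op = lower + upper - p
    # Assess all 2 x N candidates and keep the N with the lowest objective value.
    candidates = np.vstack([p, op])
    fitness = np.array([objective(c) for c in candidates])
    keep = np.argsort(fitness)[:n]
    return candidates[keep], fitness[keep]

rng = np.random.default_rng(0)
fireworks, fitness = obl_initialize(sphere, [-10.0, -5.0], [10.0, 20.0], n=5, rng=rng)
```

Because the opposite of a point inside [Li, Ui] also lies inside [Li, Ui], no bound repair is needed at this stage.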

3.2. Analysis and Improvement of Explosion Amplitude

Equation (1) makes the explosion amplitude of a firework smaller the better its fitness value is, which enhances the local search ability of the fireworks. However, if we substitute the optimal firework into Equation (1), the result is as follows.

Since the numerator is the smallest constant that can be represented in the computer, the result of Equation (7) is approximately 0. This is clearly inconsistent with the original design intent of the fireworks algorithm. The algorithm lets the optimal firework generate the largest number of sparks, yet with an amplitude of 0 these sparks all land on the firework's own position; they contribute nothing to the search and only increase the amount of calculation.
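A small numerical illustration of this effect is given below. It assumes that Equation (1) has the standard FWA form, in which each amplitude is proportional to the firework's fitness gap to the best firework plus a tiny constant, and it takes that constant to be machine epsilon; the fitness values and the function name `explosion_amplitudes` are hypothetical.

```python
import sys

def explosion_amplitudes(fitness, a_hat, eps=sys.float_info.epsilon):
    """Amplitude per firework, assuming the standard FWA form of Equation (1)."""
    y_min = min(fitness)
    # Denominator: sum of fitness gaps to the best firework, plus eps.
    total = sum(f - y_min for f in fitness) + eps
    return [a_hat * (f - y_min + eps) / total for f in fitness]

fitness = [0.5, 3.0, 7.5, 12.0]   # hypothetical fitness values (minimization)
amplitudes = explosion_amplitudes(fitness, a_hat=40.0)
best = fitness.index(min(fitness))
print(amplitudes[best])           # vanishingly small compared with the others
print(amplitudes)
```

Under these assumptions the best firework receives an amplitude many orders of magnitude smaller than any other firework, so its sparks are effectively copies of itself.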

To solve this problem, [10] gives the linear decreasing (Equation (8)) and non-linear decreasing (Equation (9)) explosion amplitude strategies as follows.

where T is the maximum number of iterations, t is the current iteration number, and Ainit and Afinal are the initial and final values of the explosion amplitude, respectively.

Although the two strategies effectively prevent the explosion amplitude of the optimal firework from approaching 0, they rely heavily on the maximum number of iterations, which must be set manually. We therefore propose a new method to calculate the explosion amplitude of the optimal firework. This method changes the explosion amplitude dynamically according to the population evolution rate and the population aggregation degree.

Definition 2. Assume the global optimal value of generation t is denoted as Ymin(t) and the global optimal value of generation t − 1 is denoted as Ymin(t − 1). The population evolution rate a(t) is defined as follows.

Because the fireworks algorithm retains the optimal firework in each iteration, the current global optimal value is always better than or equal to the global optimal value of the last iteration. From Equation (10), a large change in a(t) means that the evolution speed is fast. When a(t) stays equal to 1 for several iterations, the algorithm has either stagnated or found the optimal value.

Definition 3. Assume the global optimal value of generation t is denoted as Ymin(t) and the average fitness value of all fireworks in generation t is denoted as Yavg(t). The population aggregation degree b(t) is defined as follows.

From Equation (11), a larger b(t) indicates that the fireworks in the population are more concentrated.

Definitions 2 and 3 together reflect the state of the optimization process in FWA. Adjusting the explosion amplitude of the optimal firework according to the population evolution rate and the population aggregation degree therefore couples the explosion amplitude to the optimization process.

When a(t) is small, the evolution speed is fast, and the algorithm can search in a large space. That is, the optimal firework can be optimized in a large scope; when a(t) is too large, the search is performed in a small scope to find the optimal solution faster.

When b(t) is small, the fireworks are scattered and are less likely to fall into local optima. When b(t) is large, the fireworks are concentrated and more likely to be trapped; in that case it is necessary to increase the explosion amplitude to enlarge the search space and improve the global searching ability of FWA.

To sum up, the explosion amplitude should decrease as the population evolution rate increases, and increase as the population aggregation degree increases. This paper describes this behavior in a simplified way:

Ai = Ai × up, if a(t) ≠ 1 or b(t) = 1; Ai = Ai × low, otherwise. (12)

where Ai is the explosion amplitude, whose initial value is set to the size of the search space of the objective function, up is the enlargement factor, and low is the reduction factor. An overly large up or an overly small low hinders precise search, so up and low should be set to suitable values.

This improvement is discussed case by case below.

When a(t) is not equal to 1, the algorithm has found a better solution than in the last generation, and the explosion amplitude should be enlarged. We emphasize that increasing the explosion amplitude may speed up convergence: assume that the current optimal firework is far from the global optimum. Increasing the explosion amplitude is then a direct and efficient way to help the algorithm move faster towards the global optimum. However, it should also be noted that the probability of finding a better firework decreases as the search space increases (to a large extent, this depends on the function being optimized).

When b(t) is equal to 1, the fireworks are concentrated and the algorithm may have fallen into a local optimum. Increasing the explosion amplitude scatters the fireworks, which helps the algorithm jump out of the local optimum effectively.

When a(t) is equal to 1 and b(t) is not equal to 1, the algorithm has not found a better solution than in the last generation and the fireworks are scattered. In this case, the explosion amplitude of the optimal firework is reduced to narrow the search to a smaller area, thereby enhancing the local development capability of the optimal firework. In general, the probability of finding a better firework increases as the explosion amplitude decreases.

In this paper, the explosion amplitude of the optimal firework is calculated by Equation (12). In contrast, the explosion amplitude of non-optimal fireworks is still calculated by Equation (1). Algorithm 5 is performed for updating the explosion amplitude as follows.

| Algorithm 5: Update Explosion Amplitude |

| Find the optimal firework from all fireworks of t generation : Xbest |

| Calculate the fitness of the optimal firework of t generation : Ymin(t) |

| Calculate the fitness of the optimal firework of last iteration: Ymin(t − 1) |

| Calculate the average fitness value of all fireworks of t generation : Yavg(t) |

| Calculate the population evolution rate : a(t) |

| Calculate the population aggregation degree : b(t) |

| For the optimal firework: |

| If a(t) ≠ 1 or b(t) = 1 then |

| Ai = Ai × up |

| else |

| Ai = Ai × low |

| End if |

| For the non-optimal fireworks: |

| Ai is calculated by Equation (1) |
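The branch of Algorithm 5 that handles the optimal firework can be sketched as below. The update rule itself follows the algorithm; everything else is an assumption made for illustration: a(t) and b(t) are taken to be the ratios Ymin(t) / Ymin(t − 1) and Ymin(t) / Yavg(t) for positive fitness values, equality with 1 is tested with a floating-point tolerance instead of exactly, and the function names are hypothetical.

```python
import math

def evolution_rate(y_min_now, y_min_prev):
    """a(t): assumed here to be the ratio Ymin(t) / Ymin(t - 1)."""
    if y_min_prev == 0.0:
        return 1.0  # guard chosen for this sketch: treat as no further improvement
    return y_min_now / y_min_prev

def aggregation_degree(y_min_now, y_avg_now):
    """b(t): assumed here to be the ratio Ymin(t) / Yavg(t)."""
    if y_avg_now == 0.0:
        return 1.0  # guard chosen for this sketch: treat as fully concentrated
    return y_min_now / y_avg_now

def update_best_amplitude(amplitude, a_t, b_t, up=1.2, low=0.9):
    """Enlarge if a(t) != 1 or b(t) == 1, otherwise reduce (Algorithm 5)."""
    improved = not math.isclose(a_t, 1.0)
    concentrated = math.isclose(b_t, 1.0)
    return amplitude * up if (improved or concentrated) else amplitude * low

amplitude = 200.0                          # e.g. the size of the search space
a_t = evolution_rate(4.0, 5.0)             # a better optimum was found
b_t = aggregation_degree(4.0, 9.0)         # fireworks are still scattered
amplitude = update_best_amplitude(amplitude, a_t, b_t)   # enlarged by up
```

The defaults up = 1.2 and low = 0.9 are the values reported for the Sphere function experiment in this section.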

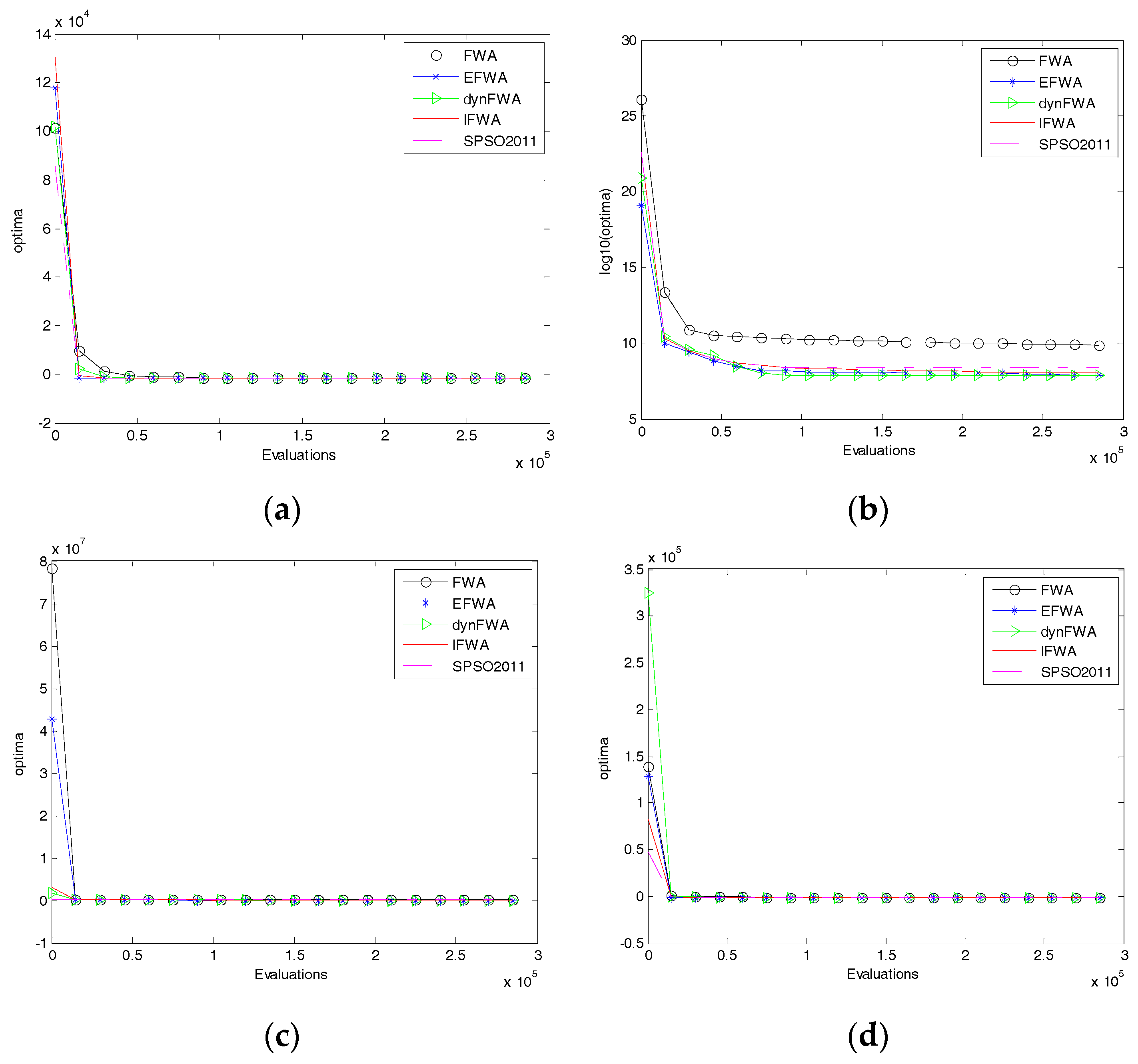

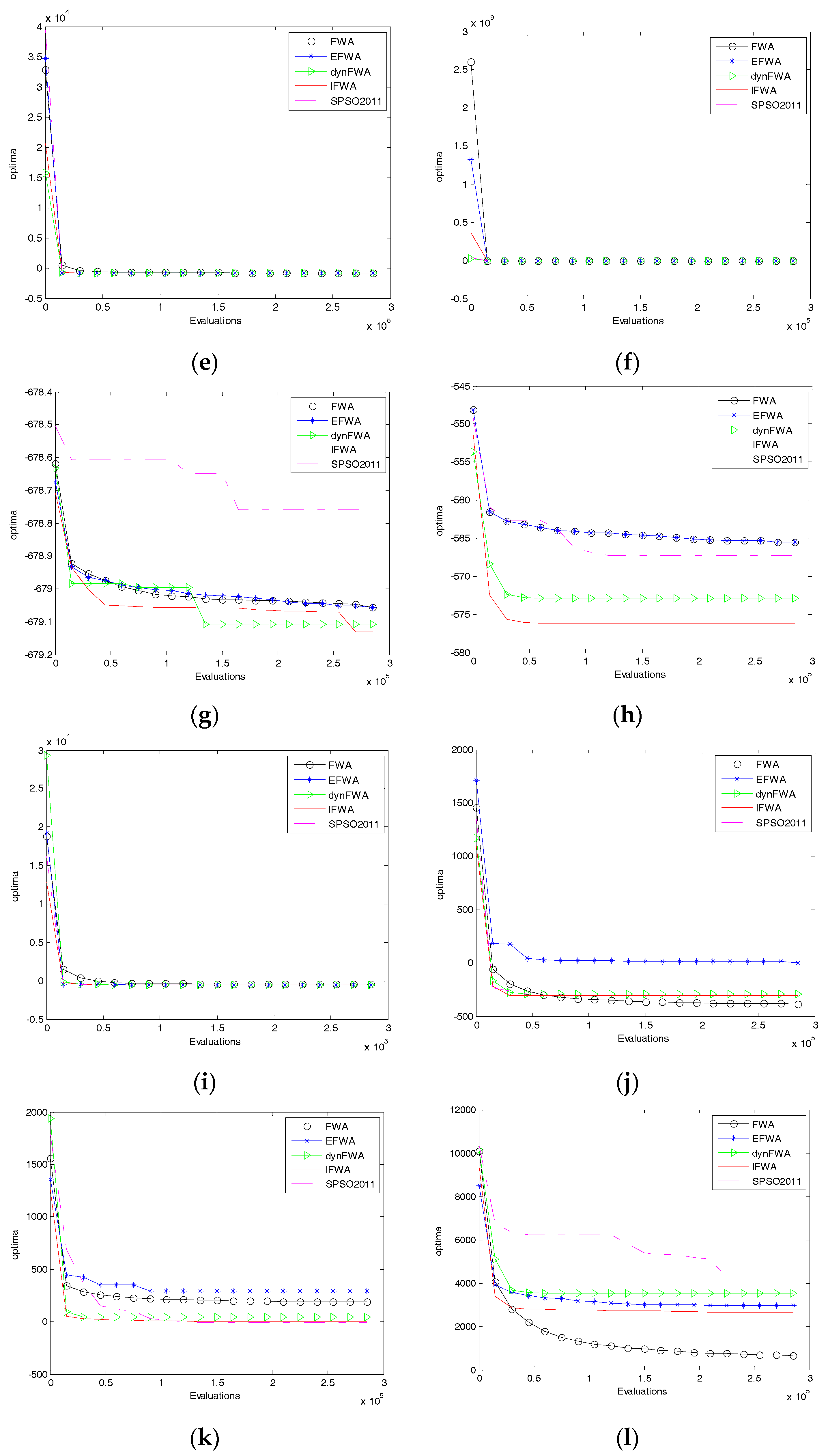

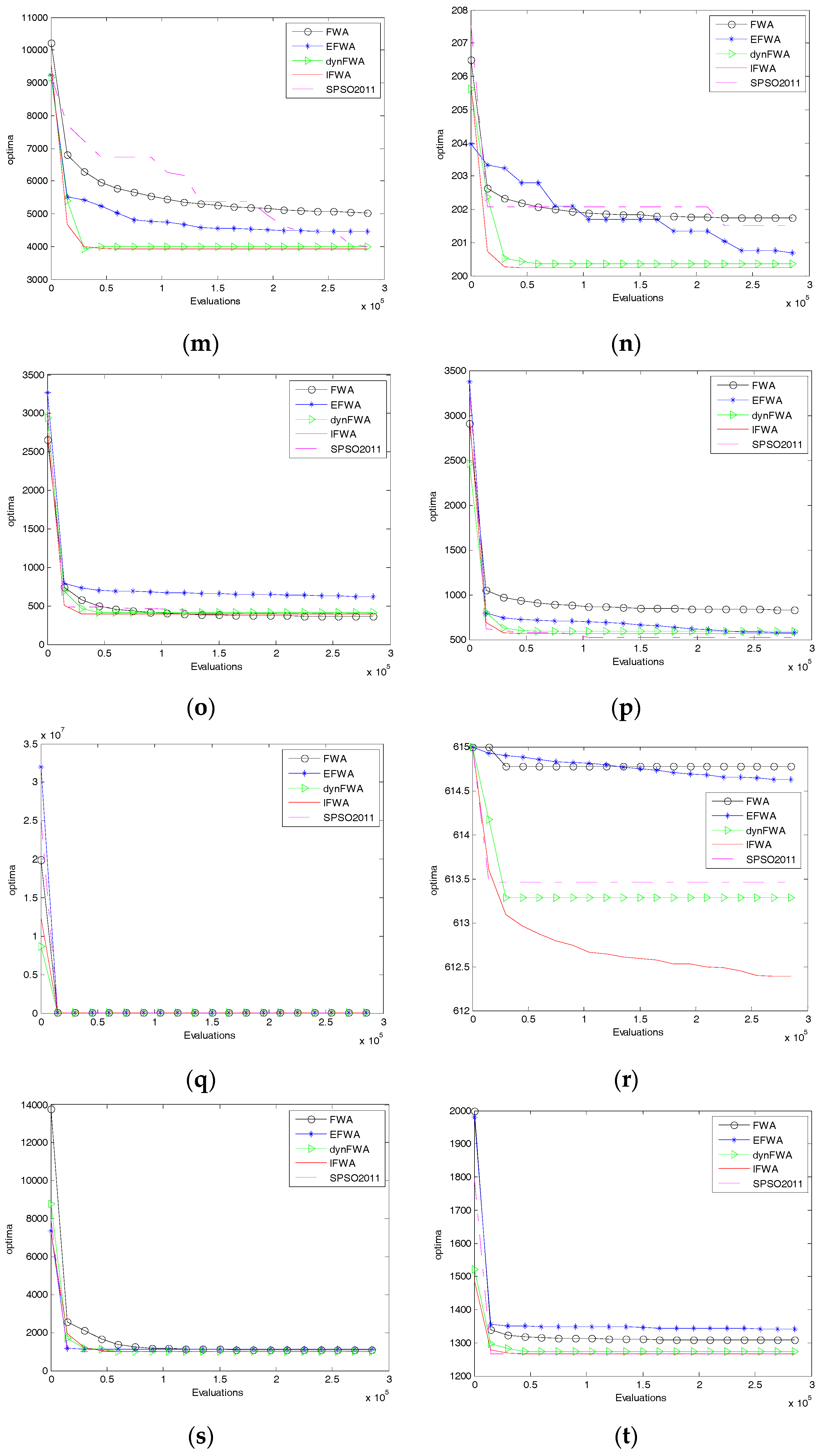

Figure 1 depicts the process of explosion enlargement and reduction during the optimization of the Sphere function. An alternating behavior is noted, with reduction being performed more often, on the one hand because up and low are set to 1.2 and 0.9, and on the other hand because the initial value of the explosion amplitude is set to the size of the search space, which is a considerable initial value.

3.3. Analysis and Improvement of Gaussian Mutation

Zheng pointed out the shortcomings of Gaussian mutation in FWA [10] and proposed a new way of generating the locations of Gaussian sparks, which is calculated as follows.

where g = Gaussian(0,1) and Xbk is the position of the optimal firework in the k dimension of the current fireworks population.

Cauchy mutation has a strong global search ability because of its larger search range, whereas Gaussian mutation has a strong local development ability with a small search range [19]. Therefore, Equation (13) improves the local development capability of the algorithm but does not improve the global search ability in the early stage of the algorithm. Zhou pointed out that t-distribution mutation combines the advantages of Cauchy and Gaussian mutation [20]: it has a strong global search ability in the early stage of the algorithm and a good local development ability in the later stage.

When the optimal firework of the current population is itself selected for Gaussian mutation, substituting it into Equation (13) gives the following.

The optimal firework carries the best information in the current population, but, as Equation (14) shows, Gaussian mutation has no effect on it.
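The substitution can be written out as a short worked step. The form used below for Equation (13) is an assumption: it mirrors the update in Algorithm 6 with g in place of t(n), which matches the symbols g and Xbk defined above, but it is not taken verbatim from the original display.

```latex
% Assumed form of Equation (13): move firework i towards the best firework b
X_{ik} = X_{ik} + (X_{bk} - X_{ik}) \times g, \qquad g \sim \mathrm{Gaussian}(0, 1)

% Substituting the optimal firework itself (i = b): the difference term vanishes
X_{bk} = X_{bk} + (X_{bk} - X_{bk}) \times g = X_{bk}
```

Whatever value g takes, the difference term is zero, so the mutated spark coincides with the optimal firework.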

To sum up, adaptive t-distribution mutation is proposed for non-optimal fireworks to keep a better balance between exploration and exploitation, and elite opposition-based learning is proposed for the optimal firework to help the FWA jump out of local optima and strengthen its global search ability.

3.3.1. Adaptive t-Distribution Mutation

The t-distribution, also known as Student's t-distribution, has n degrees of freedom. When n→∞, t(n) converges to Gaussian(0,1); when n = 1, t(n) is equal to Cauchy(0,1). That is, the Gaussian distribution and the Cauchy distribution are two boundary cases of the t-distribution [20].

Definition 4. Adaptive t-distribution mutation for non-optimal fireworks is used to generate the locations of sparks as follows.

Xjk = Xjk + (Xbk − Xjk) × t(n) (15)

where n is the number of iterations; that is, the current iteration number is used as the degrees of freedom of the t-distribution.

Algorithm 6 performs adaptive t-distribution mutation on non-optimal fireworks to generate the locations of sparks, as follows.

| Algorithm 6: Generating t-Distribution Mutation Sparks |

| Initialize the location of the explosion sparks: Xj = Xi |

| Set z = rand(1,d) |

| For k = 1:d do |

| If k∈z then |

| Xjk = Xjk + (Xbk − Xjk) × t(n) |

| If Xjk out of bounds |

| Xjk = Xmink + |Xjk|% (Xmaxk − Xmink) |

| End if |

| End if |

| End for |
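A possible Python rendering of Algorithm 6 is sketched below. It uses NumPy's `Generator.standard_t` for the t(n) sample. Two points are assumptions of this sketch rather than statements of the paper: the line "z = rand(1,d)" is read as choosing a random non-empty subset of dimensions, and the function name `t_mutation_spark` is hypothetical.

```python
import numpy as np

def t_mutation_spark(x_i, x_best, lower, upper, iteration, rng):
    """Generate one t-distribution mutation spark from a non-optimal firework."""
    x_j = np.array(x_i, dtype=float)          # Xj = Xi (copy, x_i is not modified)
    d = x_j.size
    # Assumed reading of "z = rand(1, d)": a random non-empty subset of dimensions.
    count = rng.integers(1, d + 1)
    z = rng.choice(d, size=count, replace=False)
    for k in z:
        # Degrees of freedom n = current iteration number (must be >= 1).
        x_j[k] += (x_best[k] - x_j[k]) * rng.standard_t(iteration)
        if x_j[k] < lower[k] or x_j[k] > upper[k]:
            # Map an out-of-bounds coordinate back into the range, as in Algorithm 6.
            x_j[k] = lower[k] + abs(x_j[k]) % (upper[k] - lower[k])
    return x_j

rng = np.random.default_rng(1)
lower, upper = np.full(3, -5.0), np.full(3, 5.0)
x_best = np.zeros(3)
spark = t_mutation_spark([1.0, -2.0, 4.0], x_best, lower, upper, iteration=1, rng=rng)
```

With `iteration=1` the samples are Cauchy-distributed and large jumps are common; as the iteration count grows, the samples approach a standard Gaussian and the sparks stay closer to the line between the firework and the best firework.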

In the early stage of the algorithm, the value of n is small and the t-distribution mutation is similar to Cauchy distribution mutation, and it has a good global exploratory ability. In the later stage of the algorithm, the value of n is large, and the t-distribution mutation is similar to Gaussian distribution mutation, and it has a good local development ability. In the mid-run of the algorithm, the t-distribution mutation is between the Cauchy distribution mutation and the Gaussian distribution mutation. Therefore, the t-distribution combines the advantages of Gaussian distribution and Cauchy distribution, balancing the exploration and exploitation.

3.3.2. Elite Opposition-Based Learning

The basic idea of opposition-based learning is as follows: for a feasible solution, we evaluate its opposition-based solution at the same time, and the better of the current feasible solution and the opposition-based solution is passed on to the next generation. Opposition-based learning maintains the diversity of the population, but generating an opposition-based solution for every firework is blind and greatly increases the amount of calculation. Therefore, here we choose only the optimal individual to perform opposition-based learning.

Definition 5. Assume Xbest = (xbest,1, xbest,2, ..., xbest,d) is a solution of the optimal firework with d dimensions, where xbest,1, xbest,2, ..., xbest,d ∈ R and xbest,i ∈ [mini, maxi], i = 1, 2, ..., d. The opposite solution OXbest = (oxbest,1, oxbest,2, ..., oxbest,d) is defined as follows.

oxbest,i = rand × (mini + maxi) − xbest,i (16)

where rand is a random number uniformly distributed on the interval [0, 1], and mini and maxi are the minimum and maximum values of the current search interval on the i dimension.

Because rand takes a different value each time it is drawn, the optimal firework of the current population can produce a number of different elite opposition-based fireworks, which effectively increases the diversity of the population and helps the algorithm avoid becoming trapped in a local optimum.

Algorithm 7 performs elite opposition-based learning on the optimal firework to generate the locations of sparks. The algorithm is executed Nop times (Nop is a constant that controls the number of elite opposition-based sparks).

| Algorithm 7: Generating Elite Opposition-Based Sparks |

| Find the location of optimal firework: Xbest = (xbest,1, xbest,2, ..., xbest,d) |

| For i = 1:d do |

| Find mini and maxi of the current search interval on the i dimension |

| oxbest,i = rand × (mini + maxi) − xbest,i |

| If oxbest,i out of bounds |

| oxbest,i = Xmini + |oxbest,i| % (Xmaxi − Xmini) |

| End if |

| End for |
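Algorithm 7, repeated Nop times, might look like the sketch below. It makes two assumptions that the text does not settle: the "current search interval" on each dimension is taken to be the extent of the current fireworks population, and a fresh rand is drawn for every dimension, following the loop in Algorithm 7 literally. The function name `elite_opposition_sparks` is hypothetical.

```python
import numpy as np

def elite_opposition_sparks(population, best_index, lower, upper, n_op, rng):
    """Generate n_op elite opposition-based sparks from the optimal firework."""
    population = np.asarray(population, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    x_best = population[best_index]
    # Assumed meaning of min_i / max_i: per-dimension extent of the population.
    cur_min = population.min(axis=0)
    cur_max = population.max(axis=0)
    sparks = np.empty((n_op, x_best.size))
    for s in range(n_op):
        r = rng.random(x_best.size)            # one rand per dimension
        ox = r * (cur_min + cur_max) - x_best  # opposite of the optimal firework
        # Repair coordinates that left the global bounds, as in Algorithm 7.
        out = (ox < lower) | (ox > upper)
        ox[out] = lower[out] + np.abs(ox[out]) % (upper[out] - lower[out])
        sparks[s] = ox
    return sparks

rng = np.random.default_rng(2)
population = [[1.0, 2.0], [3.0, -1.0], [0.5, 4.0]]
sparks = elite_opposition_sparks(population, best_index=0,
                                 lower=[-5.0, -5.0], upper=[5.0, 5.0],
                                 n_op=4, rng=rng)
```

Each row of `sparks` is one elite opposition-based spark; the rows differ because rand is redrawn for every spark.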

3.4. Analysis and Improvement of Selection Strategy

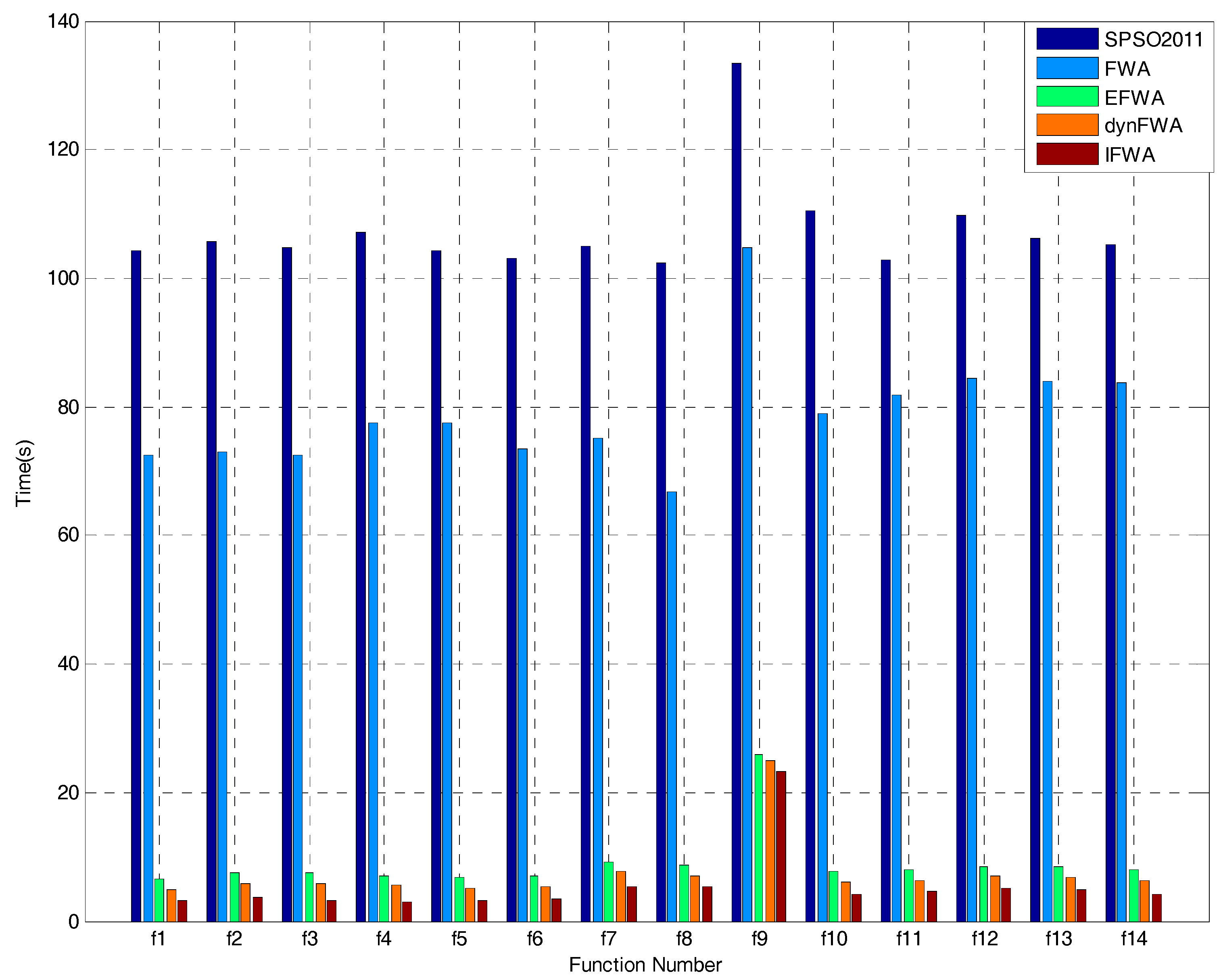

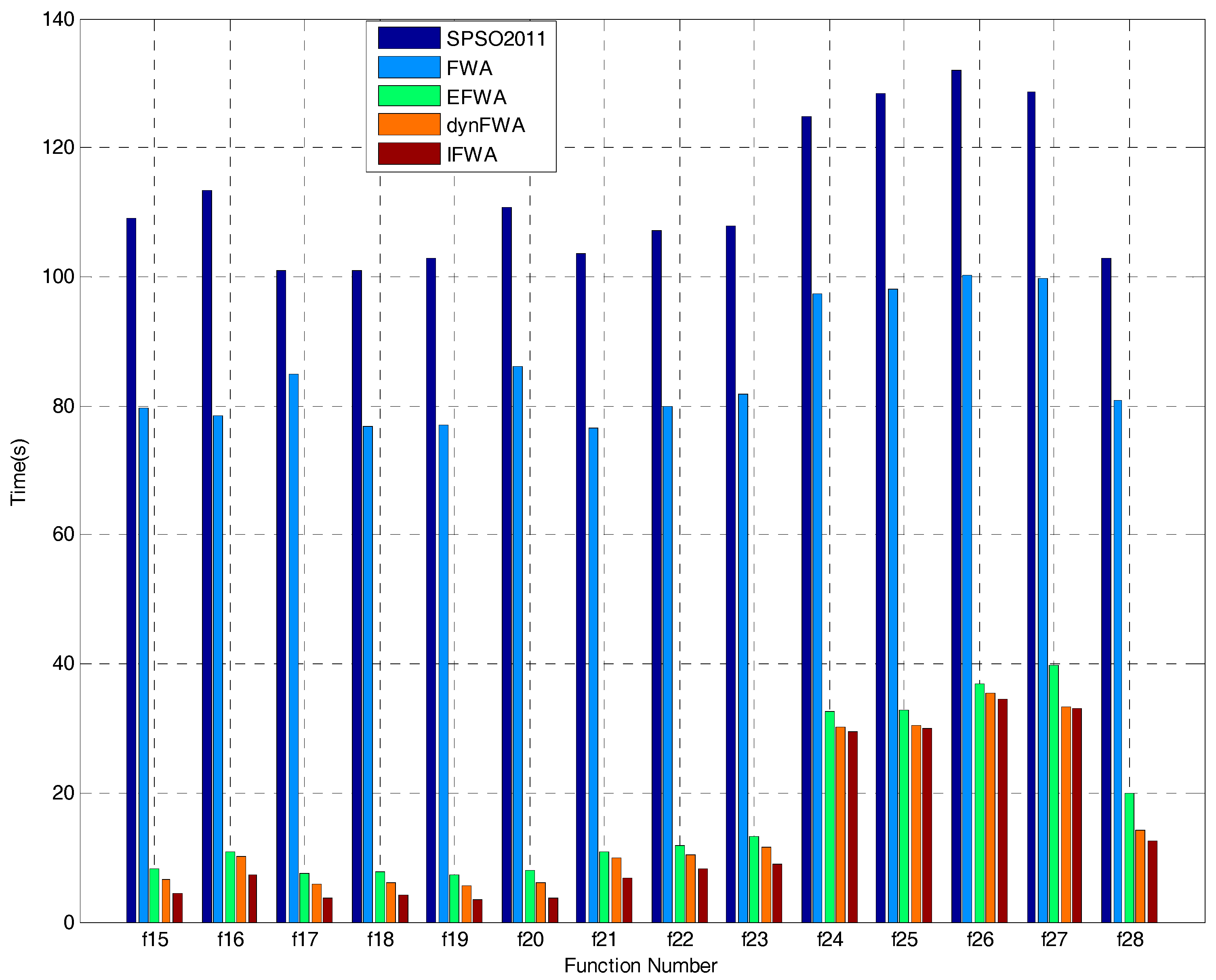

From Equations (4) and (5), the selection strategy in FWA is based on a distance measure. However, this requires computing the Euclidean distance matrix between every pair of points in each generation, which makes the fireworks algorithm time-consuming. For this reason, this paper proposes a new selection strategy: the Elitism-Disruptive selection strategy.

As in FWA, the Elitism-Disruptive selection requires that the current best location is always kept for the next iteration. In order to maintain diversity, the remaining N − 1 locations are selected based on the disruptive selection operator. For location Xi, the selection probability pi is calculated as follows [21]:

where Yi is the fitness value of the objective function, Yavg is the mean of all fitness values of the population in generation t, and SN is the set of all fireworks.

The selection probabilities determined by this method give both good and poor individuals more chances to be selected for the next iteration, while individuals with mid-range fitness values tend to be eliminated. This method not only maintains the diversity of the population, and thus supports better global searching ability, but also greatly reduces the run time compared with FWA.
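The selection step can be illustrated with the following sketch. The probability used here is an assumption based on the description above: each candidate is weighted by the absolute distance of its fitness from the population mean, normalized over all candidates, which is the usual form of a disruptive selection operator. Drawing the N − 1 survivors with replacement and the function name `elitism_disruptive_select` are also choices made for this sketch.

```python
import numpy as np

def elitism_disruptive_select(fitness, n, rng):
    """Return indices of n survivors: the best one plus n - 1 drawn disruptively."""
    fitness = np.asarray(fitness, dtype=float)
    best = int(np.argmin(fitness))             # elitism: always keep the best
    # Assumed probability: p_i proportional to |Y_i - Y_avg| over all candidates.
    weight = np.abs(fitness - fitness.mean())
    if weight.sum() == 0.0:
        # All candidates have the same fitness: fall back to uniform selection.
        prob = np.full(fitness.size, 1.0 / fitness.size)
    else:
        prob = weight / weight.sum()
    chosen = rng.choice(fitness.size, size=n - 1, replace=True, p=prob)
    return np.concatenate(([best], chosen))

rng = np.random.default_rng(3)
fitness = [3.0, 1.0, 2.0, 10.0, 2.1]           # hypothetical fitness values
survivors = elitism_disruptive_select(fitness, n=3, rng=rng)
```

Only the fitness values are needed, so the cost per generation is linear in the number of candidates, whereas the distance-based selection needs all pairwise distances.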