1. Introduction

Nature-inspired optimization algorithms have attracted increasing interest from researchers in the optimization field in recent years [1]. After half a century of development, they have grown into a large family of methods that not only draws on biology, physics, and other basic sciences but also involves fields such as artificial intelligence, artificial life, and computer science.

According to the natural phenomena they imitate, these optimization algorithms can be roughly divided into three types: algorithms based on biological evolution, algorithms based on swarm behavior, and algorithms based on physical phenomena. Typical evolutionary algorithms include Evolution Strategies (ES) [2], Evolutionary Programming (EP) [3], the Genetic Algorithm (GA) [4,5,6], Genetic Programming (GP) [7], Differential Evolution (DE) [8], the Backtracking Search Algorithm (BSA) [9], Biogeography-Based Optimization (BBO) [10,11], and the Differential Search Algorithm (DSA) [12].

In the last decade, swarm intelligence, a branch of intelligent computation, has been gradually rising [13]. Swarm intelligence algorithms mainly simulate the habits and behaviors of living creatures, such as foraging, searching, migration, brooding, and mating. Inspired by these phenomena, researchers have designed many algorithms, such as Ant Colony Optimization (ACO) [14], Particle Swarm Optimization (PSO) [15], Bacterial Foraging (BFA) [16], Artificial Bee Colony (ABC) [17,18,19], Group Search Optimization (GSO) [20], Cuckoo Search (CS) [21,22], Seeker Optimization (SOA) [23], the Bat Algorithm (BA) [24], Bird Mating Optimization (BMO) [25], Brain Storm Optimization [26,27], and the Grey Wolf Optimizer (GWO) [28].

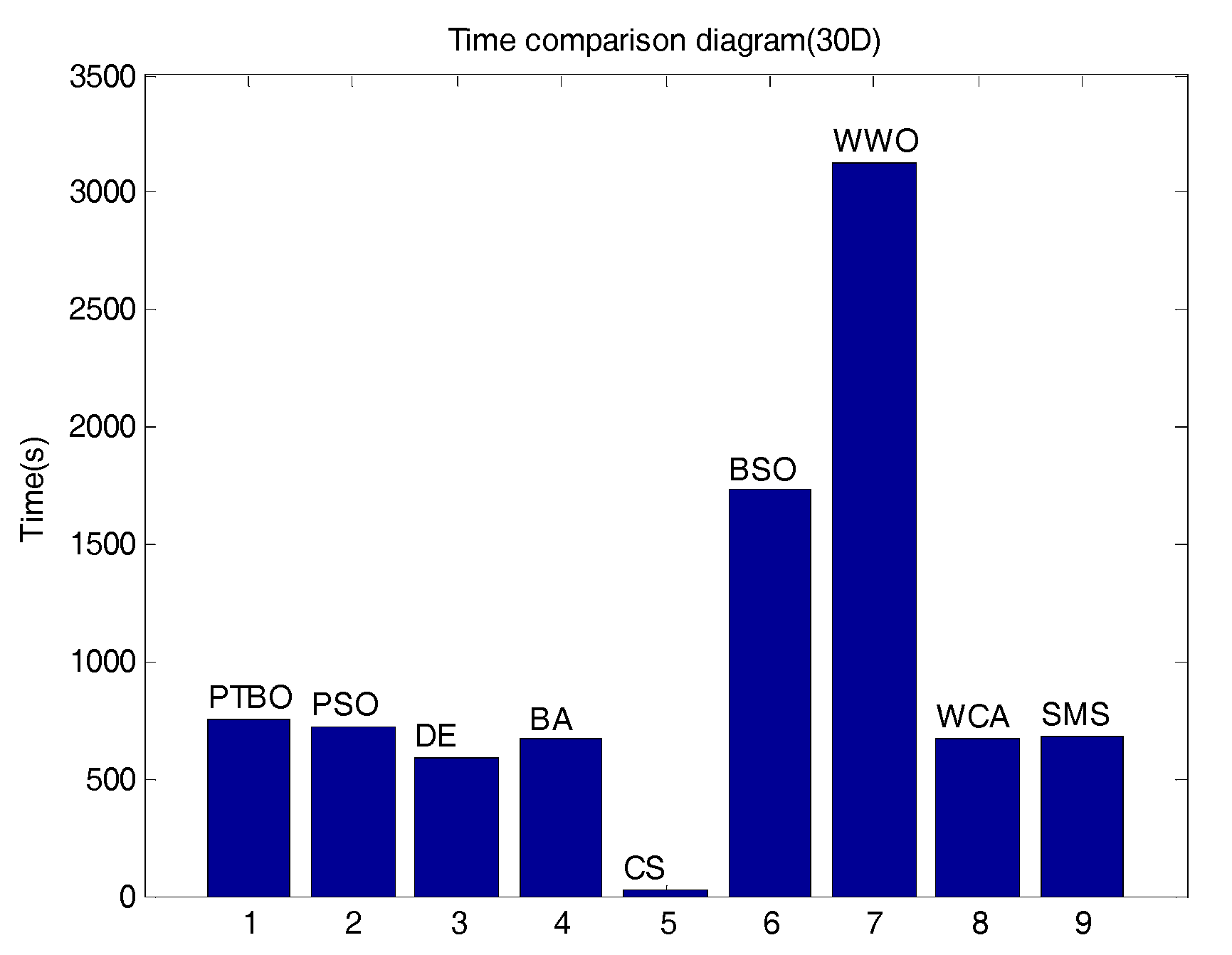

Besides these two kinds of algorithms, algorithms that simulate physical phenomena have also attracted considerable attention from researchers in recent years, such as the Gravitational Search Algorithm (GSA) [29], the Harmony Search Algorithm (HSA) [30], the Water Cycle Algorithm (WCA) [31], the Intelligent Water Drops Algorithm (IWDA) [32], Water Wave Optimization (WWO) [33], and States of Matter Search (SMS) [34]. The classical physics-based optimization algorithm is simulated annealing, which is based on the annealing process of metals [35,36].

Although these meta-heuristic algorithms have many advantages over traditional algorithms, especially on NP-hard problems, and have been applied to many challenging optimization problems in science and industry, no single approach is optimal for all optimization problems [37]. In other words, an approach that suits one class of problems may be unsuitable for another. This is especially true as global optimization problems have grown more complex, from simple unimodal functions to hybrid, rotated, shifted multimodal functions [38]. Hence, new and effective optimization algorithms are continually needed.

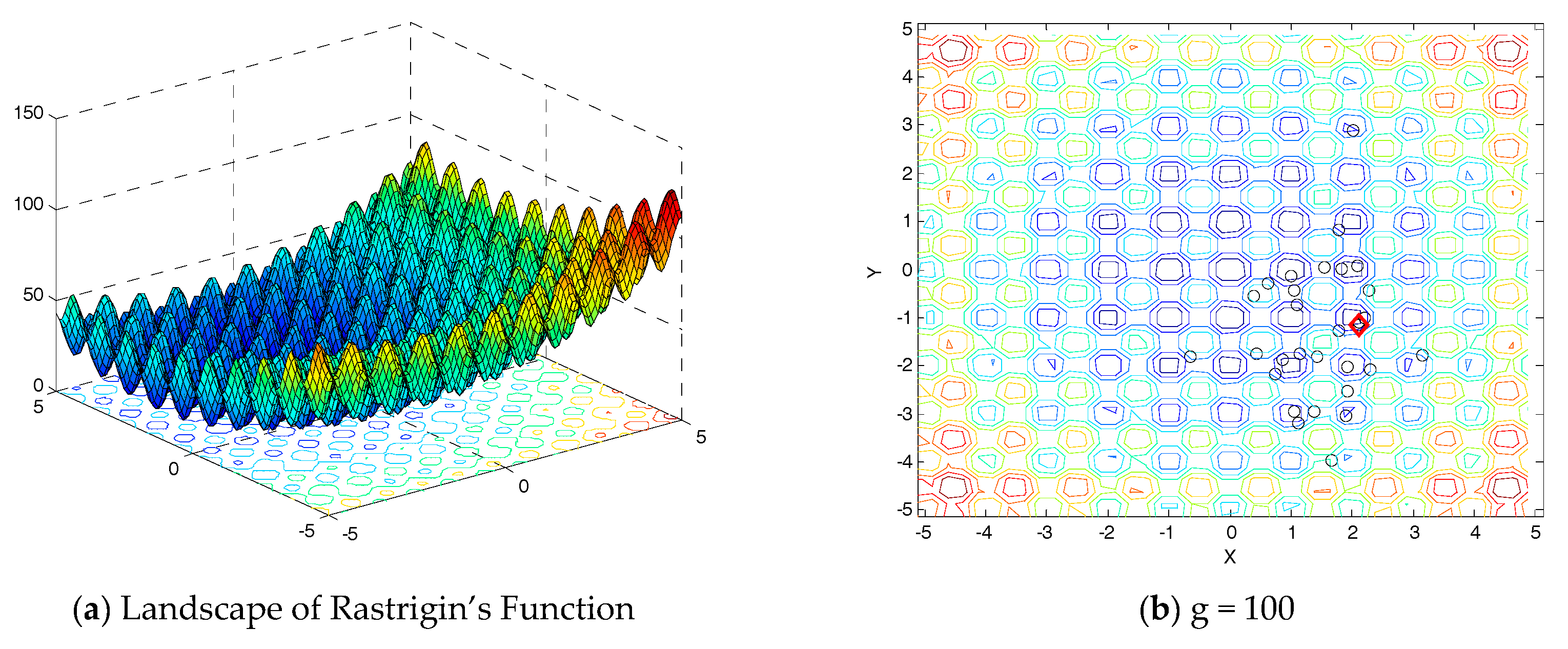

Natural phenomena provide a rich source of inspiration for developing diverse optimization algorithms with different degrees of usefulness and practicability. In this paper, we propose a new meta-heuristic algorithm for continuous global optimization, termed phase transition-based optimization (PTBO), which is inspired by the phase transition of elements in a natural system. From the viewpoint of statistical mechanics, a phase transition is a non-trivial macroscopic form of collective behavior in a system composed of many elements that follow simple microscopic laws [39]. Phase transitions play an important role in the probabilistic analysis of combinatorial optimization problems and have been successfully applied to random graph, satisfiability, and traveling salesman problems [40]. However, few algorithms in the literature use the mechanism of phase transition to solve continuous global optimization problems.

Phase transitions are ubiquitous in nature and in social life. For example, at atmospheric pressure, water boils at a critical temperature of 100 °C: below 100 °C, water is a liquid, while above 100 °C, it is a gas. There are many other examples. Everybody knows that magnets attract nails made of iron. However, the attraction disappears when the temperature of the nail is raised above 770 °C, because the nail then enters a paramagnetic phase. Moreover, mercury is a good conductor because of its low electrical resistance. Nevertheless, its resistance falls abruptly to zero when the temperature drops below 4.2 K. From a macroscopic viewpoint, a phase transition is the transformation of a system from one phase to another, depending on the values of control parameters such as temperature, pressure, and other external influences.

These examples show that each system or substance has different phases, separated by related critical points. All phase transitions also appear to follow a common law: a phase transition can be viewed as the outcome of competition between two tendencies, stable order and unstable disorder. In complex system theory, phase transition is related to the self-organized process by which a system transforms from disorder to order. From this point of view, a phase transition implies an optimization search process. The thermal motion of elements is a source of disorder [41], whereas the interaction between elements is the cause of order.

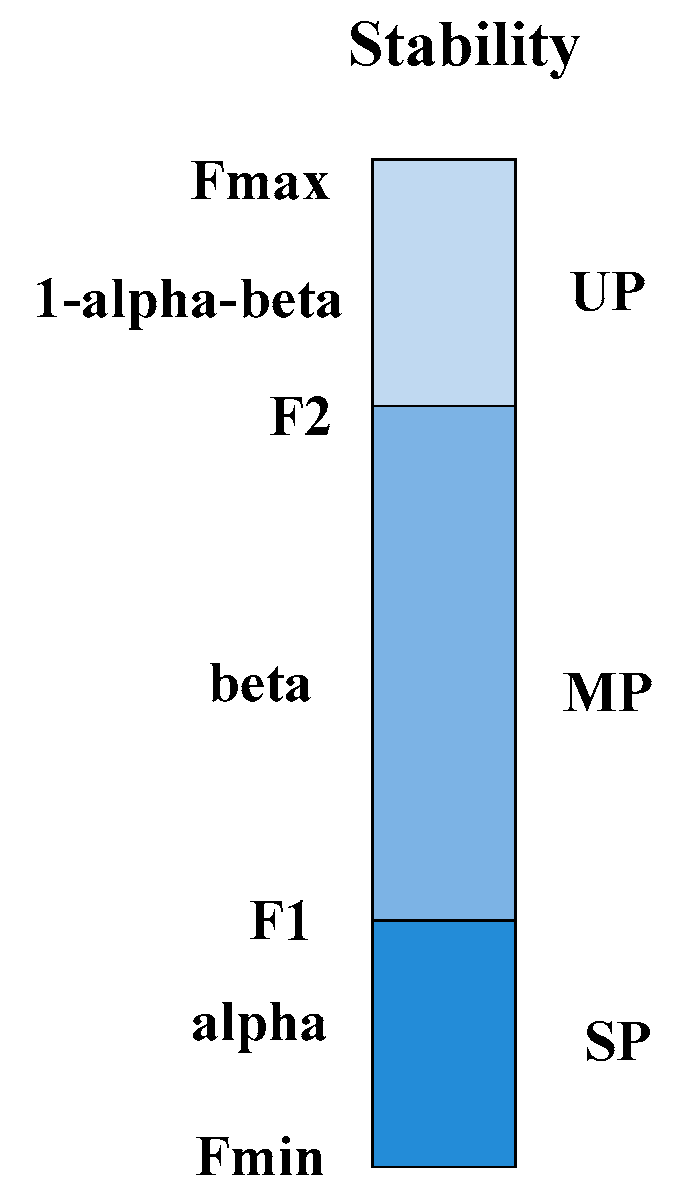

In the proposed PTBO algorithm, the degree of order or disorder is described by stability, and the value of the objective function measures the level of stability. For generality, we distinguish three phases in the transition from disorder to order: an unstable phase, a meta-stable phase [42], and a stable phase. From a microscopic viewpoint, the different motion characteristics of elements in these phases inspire a new meta-heuristic algorithm for solving complex continuous optimization problems.

This paper is organized as follows. Section 2 briefly introduces the preliminaries of the phase transition-based optimization algorithm. Section 3 describes the PTBO algorithm and its operators. Section 4 presents an analysis of PTBO and a comparative study. Section 5 compares the experimental results with those of other state-of-the-art algorithms. Finally, Section 6 draws conclusions.

3. Phase Transition-Based Optimization Algorithm

3.1. Basic Idea of the PTBO Algorithm

In PTBO, the motion of elements from an unstable phase to a relatively more stable phase plays a role similar to natural selection in GA. Repeating such transitions over many iterations can eventually bring an element to absolute stability. The different motion characteristics of elements in the three phases are the core of how PTBO simulates this phase transition process, and one operator is designed for each phase. An appropriate combination of the three operators yields an effective search for the global minimum in the solution space.

3.2. The Correspondence of PTBO and the Phase Transition Process

Based on the basic law of elements transitioning from an unstable phase (disorder) to a stable phase (order), the correspondence between PTBO and the phase transition process is summarized in Table 3.

3.3. The Overall Design of the PTBO Algorithm

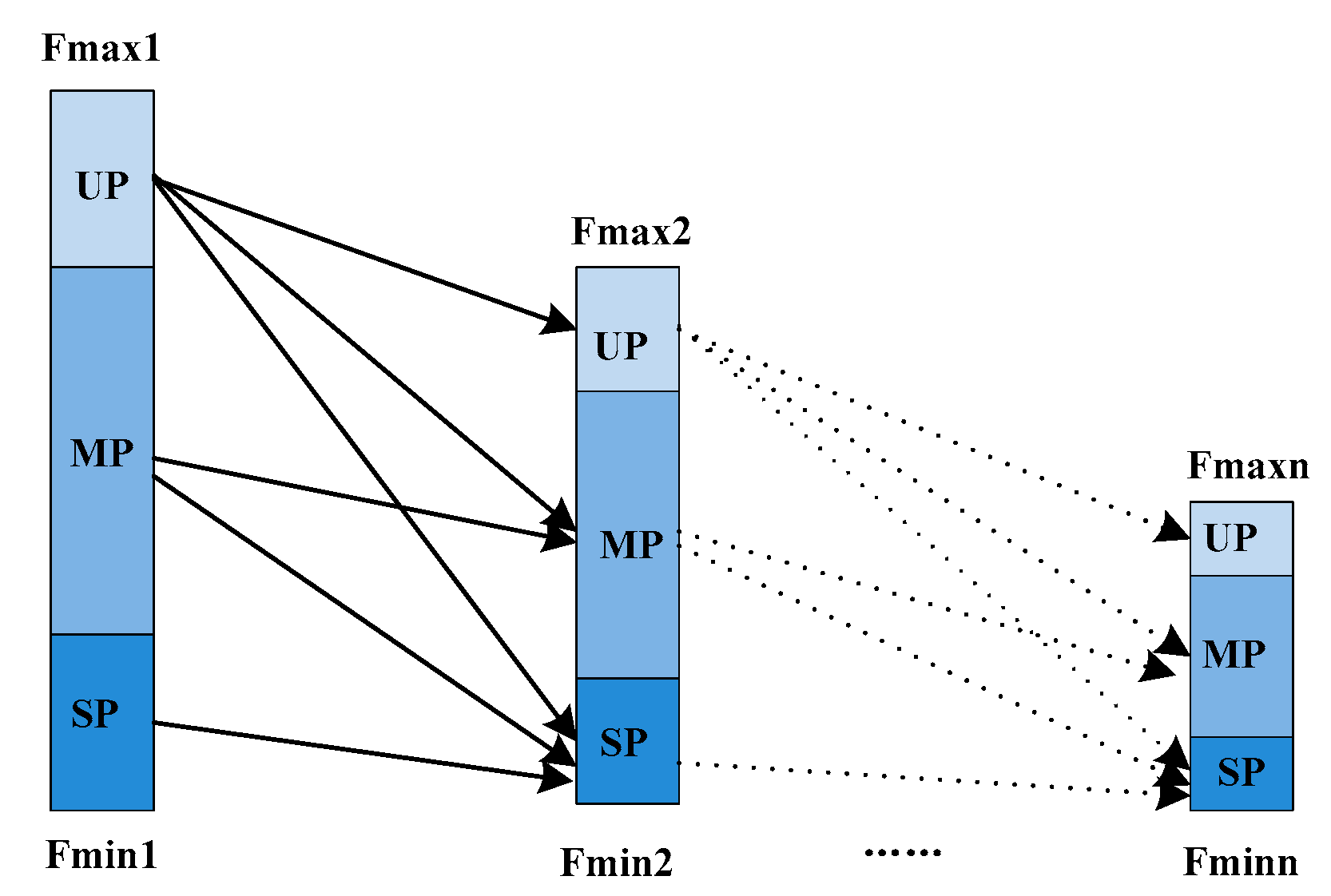

The simplified cyclic diagram of the phase transition process in PTBO is shown in Figure 3. It depicts a complete cycle of phase transition from an unstable phase to a stable phase. First, in the first generation, we calculate the maximum and minimum fitness values of the population and divide the critical intervals of the unstable phase (UP), the meta-stable phase (MP), and the stable phase (SP) according to the rules in Table 2. Second, each element performs the search associated with its own phase. If the new degree of stability is better than the original one, the move is retained; otherwise, it is discarded. Consequently, an element originally in UP may move towards UP, MP, or SP; an element in MP may move towards MP or SP; and an element in SP can only remain in SP. Finally, after many iterations, the elements eventually reach absolute stability.
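The partition step can be pictured with a short Python sketch. Table 2 is not reproduced in this section, so the rule below is an assumption: fitness values are normalized to [0, 1] using the population's minimum and maximum, and the hypothetical cut points `sp_cut` and `mp_cut` separate SP, MP, and UP, with smaller objective values treated as more stable (minimization).

```python
def classify_phases(fitness, sp_cut=0.2, mp_cut=0.6):
    """Assign each element to 'SP', 'MP' or 'UP' from its fitness (minimization).

    Hypothetical rule: fitness is normalized with the population minimum and
    maximum; values up to sp_cut are stable, values up to mp_cut are
    meta-stable, and the rest are unstable. sp_cut and mp_cut are illustrative
    stand-ins for the rules of Table 2, not values from the paper.
    """
    f_min, f_max = min(fitness), max(fitness)
    span = f_max - f_min
    phases = []
    for f in fitness:
        s = 0.0 if span == 0 else (f - f_min) / span  # 0 means most stable
        if s <= sp_cut:
            phases.append("SP")
        elif s <= mp_cut:
            phases.append("MP")
        else:
            phases.append("UP")
    return phases


print(classify_phases([0.0, 1.5, 5.0, 10.0]))  # ['SP', 'SP', 'MP', 'UP']
```

Normalizing by the current range keeps the rule independent of the scale of the objective function, which is one simple way to turn "maximum and minimum fitness" into interval boundaries.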

Broadly speaking, PTBO can be regarded as an algorithmic framework: we define only the general operations that govern the motion of elements during the phase transition. PTBO is therefore flexible, and skilled users can customize it for a specific phase transition scenario.

Following this cycle, the whole PTBO process can be summarized in three procedures: population initialization, iterations of the three operators, and individual selection. The three operators are the stochastic operator, the shrinkage operator, and the vibration operator.

3.3.1. Population Initialization

PTBO is also a population-based meta-heuristic algorithm. Like other evolutionary algorithms (EAs), PTBO starts by initializing a population of N element individuals. The current generation evolves into the next one through the three operators described in Section 3.3.2, and the population continues to evolve generation by generation until the termination condition is met. The j-th dimensional component of the i-th individual is initialized as

$$
x_{i,j} = x_j^{L} + \mathrm{rand}(0,1)\left(x_j^{U} - x_j^{L}\right), \tag{1}
$$

where $\mathrm{rand}(0,1)$ is a uniformly distributed random number between 0 and 1, and $x_j^{U}$ and $x_j^{L}$ are the upper and lower boundaries of the j-th dimension of each individual, respectively.
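A minimal Python sketch of this uniform initialization, assuming the per-dimension bounds are given as lists; the function name `init_population` is illustrative and not taken from the paper.

```python
import random


def init_population(n, lower, upper, rng=None):
    """Return n individuals with x[i][j] = lower[j] + U(0, 1) * (upper[j] - lower[j])."""
    if rng is None:
        rng = random.Random()
    d = len(lower)
    return [
        [lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(d)]
        for _ in range(n)
    ]


pop = init_population(5, [-5.0, 0.0], [5.0, 1.0], random.Random(42))
print(len(pop), len(pop[0]))  # 5 2
```

Passing an explicit `random.Random` instance makes runs reproducible without touching the global random state.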

3.3.2. Iterations of the Three Operators

We now give implementation details of the three operators used in the three phases.

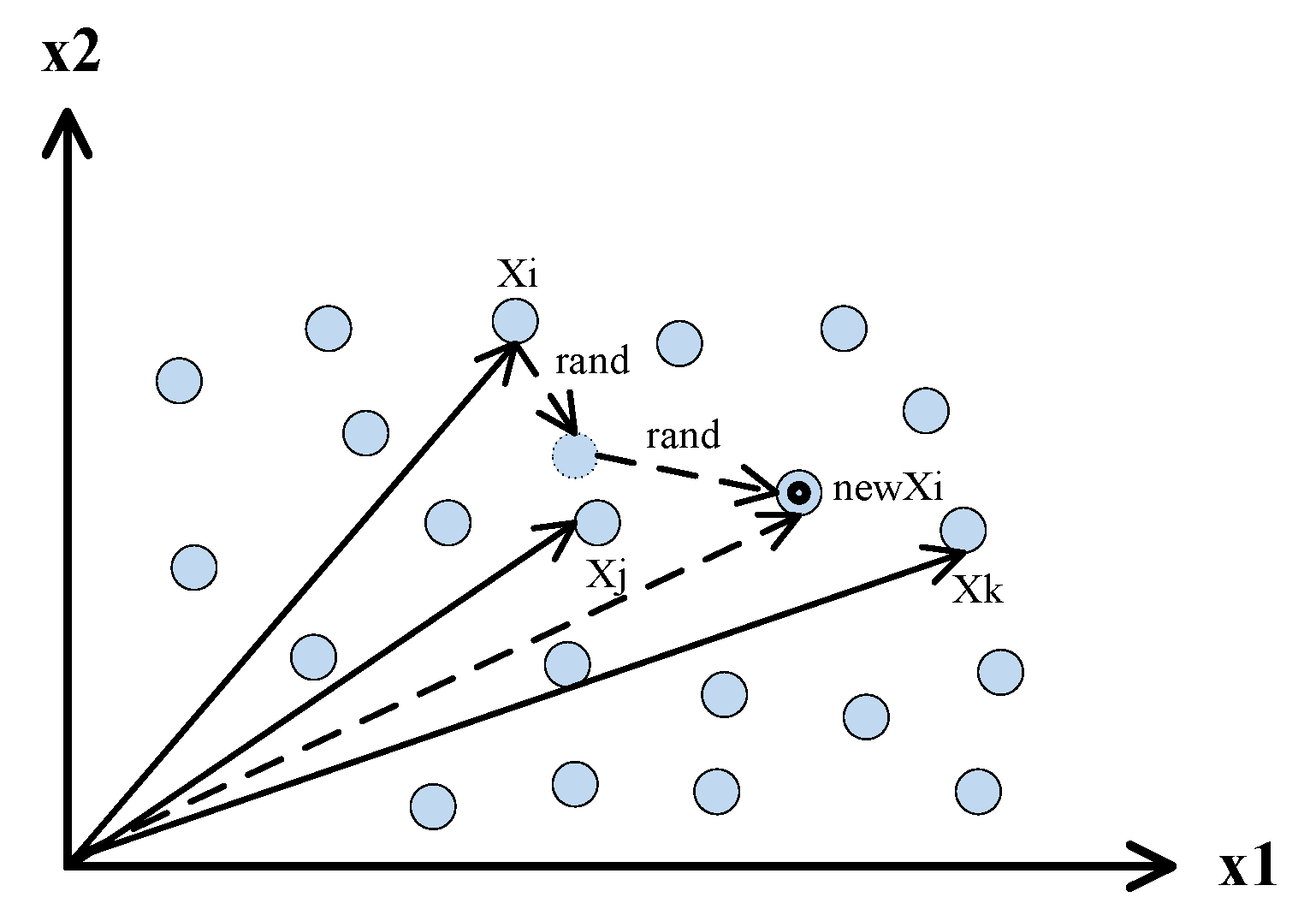

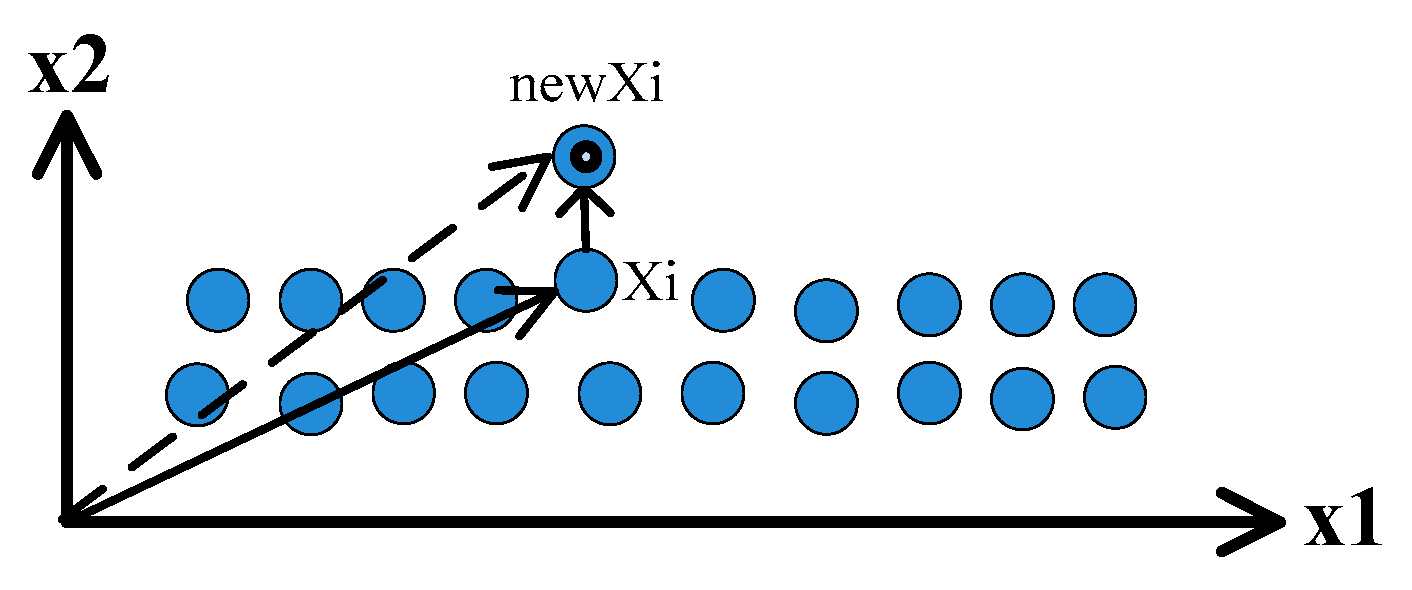

(1) Stochastic operator

Stochastic diffusion is a common process in which elements in an unstable phase move randomly and pass one another. Although the movement of elements is chaotic, it obeys certain laws from a statistical point of view. We use the mean free path [43], the distance an element travels between two successive collisions with other elements, to represent the stochastic motion of elements in an unstable phase. Figure 4 illustrates the free path of elements.

Since the free path of an element is the distance it travels between two collisions with other individuals, the stochastic operator is implemented by Formula (2). In Formula (2), the new position of the i-th element after the stochastic motion is generated from its current position using two random vectors, each component of which is a random number in the range (0, 1), and two other elements with indices j and k, where j and k are mutually distinct integers chosen at random from 1 to N and both differ from i.
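Because the exact form of Formula (2) is not reproduced here, the following Python sketch uses an assumed "two-collision" walk: element i moves a random fraction of the way towards element j, then a random fraction of the step from j towards k. The form and the name `stochastic_move` are hypothetical; only the ingredients (two random vectors and two distinct indices different from i) come from the text. Python's `random()` draws from [0, 1) rather than the open interval (0, 1).

```python
import random


def stochastic_move(pop, i, rng):
    """Hypothetical stochastic operator for element i in the unstable phase.

    Assumed form (an illustration, not the paper's Formula (2)):
        new = x_i + r1 * (x_j - x_i) + r2 * (x_k - x_j)
    where r1 and r2 are drawn independently for every dimension from [0, 1)
    and j, k are distinct indices, both different from i.
    """
    if len(pop) < 3:
        raise ValueError("need at least three elements to pick j and k")
    j, k = rng.sample([q for q in range(len(pop)) if q != i], 2)
    return [
        a + rng.random() * (b - a) + rng.random() * (c - b)
        for a, b, c in zip(pop[i], pop[j], pop[k])
    ]


rng = random.Random(1)
pop = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0], [0.0, 2.0]]
print(stochastic_move(pop, 0, rng))
```

Drawing j and k with `rng.sample` from the indices other than i guarantees the "mutually distinct and different from i" condition without rejection loops.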

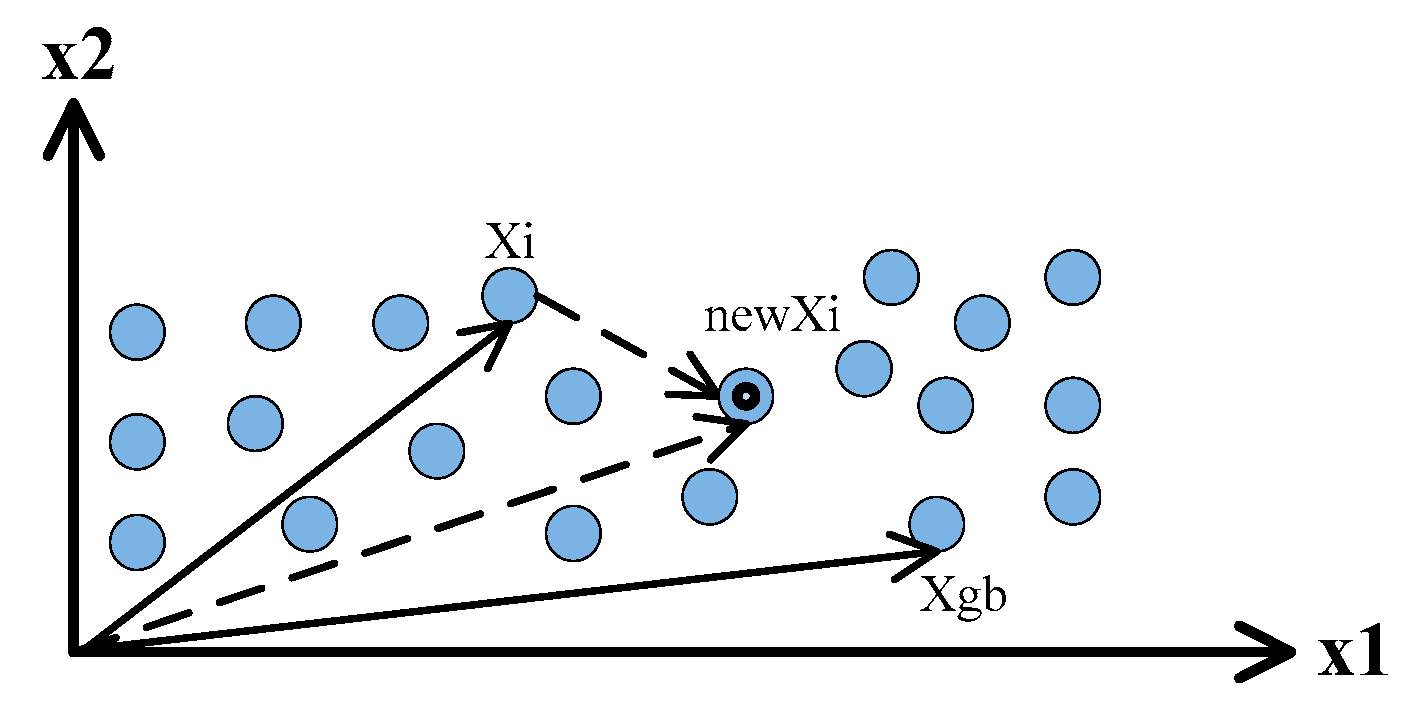

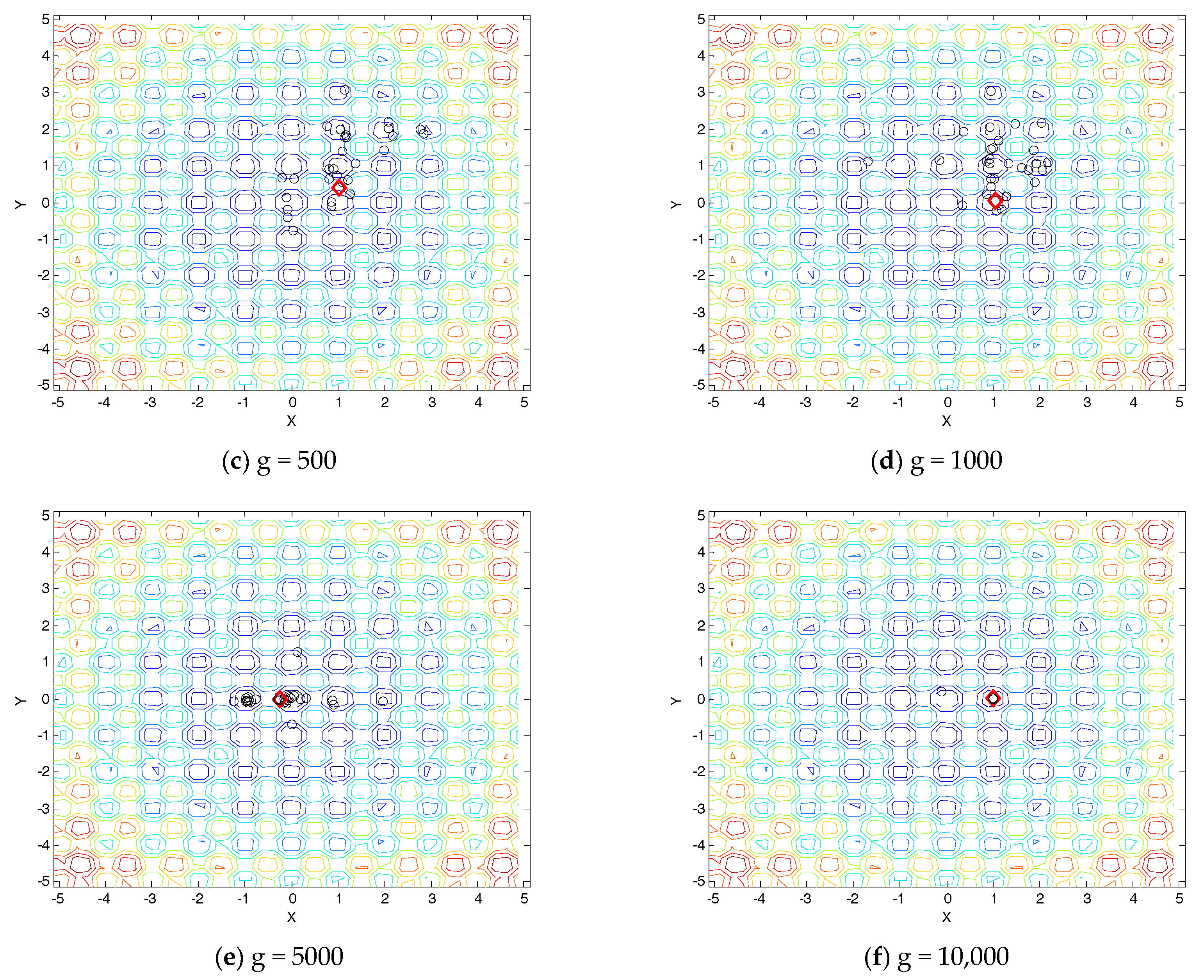

(2) Shrinkage operator

In a meta-stable phase, an element tends to move closer to the optimal one. From a statistical standpoint, the geometric center is an important numerical characteristic and, to a certain degree, represents the shrinkage trend of the elements. Figure 5 illustrates the shrinkage trend of elements towards the optimal point. Hence, gradual shrinkage towards the central position is the most suitable motion for elements in a meta-stable phase. The shrinkage operator is implemented by Formula (3), which computes the new position of the i-th element after the shrinkage operation from its current position, the best individual in the population, and a normally distributed random number with mean 0 and standard deviation 1.
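A hedged Python sketch of a shrinkage step, assuming the move scales the vector from the element to the best individual by a single standard normal number. This is an illustrative form, not necessarily Formula (3), and `shrink_move` is a hypothetical name.

```python
import random


def shrink_move(x, x_best, rng):
    """Hypothetical shrinkage operator for an element in the meta-stable phase.

    Assumed form (not necessarily the paper's Formula (3)):
        new = x + N(0, 1) * (x_best - x)
    One standard normal number is drawn per move and applied to every dimension,
    so the new point lies on the line through x and x_best.
    """
    z = rng.gauss(0.0, 1.0)
    return [a + z * (b - a) for a, b in zip(x, x_best)]


print(shrink_move([0.0, 0.0], [2.0, 4.0], random.Random(7)))
```

With a normal factor, most moves land between the element and the best individual, but some overshoot or move away, which keeps a little exploration in the meta-stable phase.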

(3) Vibration operator

Elements in a stable phase tend only to vibrate about their equilibrium positions. Figure 6 illustrates this vibration.

Hence, the vibration operator is implemented by Formula (4), which computes the new position of the i-th element after the vibration operation from its current position, a uniformly distributed random number in the range (0, 1), and a control parameter that regulates the amplitude of the jitter over the generations. As the phase transition proceeds, the amplitude of vibration gradually decreases. The control parameter is defined by Formula (5) as an exponential function of the maximum number of iterations and the current iteration number.
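The vibration step and its decaying amplitude can be sketched in Python as follows. Both forms are assumptions standing in for Formulas (4) and (5): the amplitude is taken as `exp(-decay * k / k_max)` with a hypothetical `decay` constant, and each coordinate is jittered uniformly within plus or minus that amplitude.

```python
import math
import random


def amplitude(k, k_max, decay=5.0):
    """Hypothetical control parameter: equals 1 at k = 0 and shrinks exponentially.

    Assumed form (not necessarily Formula (5)): R = exp(-decay * k / k_max).
    """
    return math.exp(-decay * k / k_max)


def vibrate(x, k, k_max, rng):
    """Hypothetical vibration about x: new = x + R * (2 * rand - 1) per dimension."""
    r = amplitude(k, k_max)
    return [a + r * (2.0 * rng.random() - 1.0) for a in x]


for k in (0, 50, 100):
    print(k, round(amplitude(k, 100), 4))
print(vibrate([1.0, -2.0], 50, 100, random.Random(0)))
```

An exponential schedule gives large early vibrations for local exploration and very small late ones for fine refinement around the equilibrium position.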

3.3.3. Individual Selection

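As in other EAs, PTBO employs one-to-one greedy selection, which compares each parent individual with its newly generated offspring. Compared with other strategies such as tournament selection and rank-based selection, this greedy selection strategy may increase diversity. The selection operation at the k-th generation is

$$
x_i^{k+1} =
\begin{cases}
x_i^{\mathrm{new}}, & \text{if } f\left(x_i^{\mathrm{new}}\right) < f\left(x_i^{k}\right), \\
x_i^{k}, & \text{otherwise},
\end{cases}
\tag{6}
$$

where $x_i^{k}$ is the i-th individual at the k-th generation, $x_i^{\mathrm{new}}$ is its newly generated offspring, and $f(\cdot)$ is the objective function value of each individual.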
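A small Python sketch of one-to-one greedy selection for minimization over a whole population. Ties keep the parent, matching the "accept if better" wording of the implementation steps; the function name `select_population` is illustrative.

```python
def select_population(parents, parent_fit, offspring, offspring_fit):
    """Apply one-to-one greedy selection element by element (minimization)."""
    survivors, fits = [], []
    for xo, fo, xn, fn in zip(parents, parent_fit, offspring, offspring_fit):
        if fn < fo:
            # The offspring is strictly better: it replaces its parent.
            survivors.append(xn)
            fits.append(fn)
        else:
            # Otherwise the parent survives unchanged.
            survivors.append(xo)
            fits.append(fo)
    return survivors, fits


print(select_population([[0.0], [1.0]], [5.0, 1.0], [[9.0], [8.0]], [2.0, 3.0]))
# ([[9.0], [1.0]], [2.0, 1.0])
```

Because each individual is compared only with its own offspring, the best fitness in the population can never get worse from one generation to the next.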

3.4. Flowchart and Implementation Steps of PTBO

As described above, the main flowchart of the PTBO algorithm is given in Figure 7.

The implementation steps of PTBO are summarized as follows:

Step 1. Initialization: set the algorithm parameters N, D, α, and β, randomly generate the initial population of elements, and initialize the generation counter;

Step 2. Evaluation and interval partition: calculate the fitness values of all individuals, obtain the maximum and minimum fitness values, and divide the critical intervals of UP, MP, and SP according to Table 2;

Step 3. Stochastic operator: use Formula (2) to create new positions of elements;

Step 4. Shrinkage operator: use Formula (3) to update the new positions;

Step 5. Vibration operator: use Formulas (4) and (5) to update the new positions;

Step 6. Individual selection: accept a new position if its objective value is better than that of the current individual;

Step 7. Termination judgment: if the termination condition is satisfied, stop the algorithm; otherwise, increase the generation counter by one and go to Step 3.
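Putting Steps 1 to 7 together, the following Python sketch runs a complete, if simplified, PTBO-style loop on a box-constrained minimization problem. It rests on several assumptions that the text does not fix: the Table 2 rules are replaced by hypothetical cut points `sp_cut` and `mp_cut` applied to the first generation's fitness range (Step 7 returns to Step 3, so the intervals are not recomputed), each element applies only the operator of its current phase, the operator forms standing in for Formulas (2) to (5) are illustrative, candidates are clipped to the bounds, and termination is a fixed number of generations. The name `ptbo_sketch` is hypothetical.

```python
import math
import random


def ptbo_sketch(f, lower, upper, n=20, k_max=200, sp_cut=0.2, mp_cut=0.6, seed=0):
    """Minimize f over the box [lower, upper] with a simplified, hypothetical PTBO loop."""
    rng = random.Random(seed)
    d = len(lower)
    # Step 1: uniform random initialization inside the box.
    pop = [[lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(d)]
           for _ in range(n)]
    # Step 2: evaluate and fix the critical thresholds from the first generation
    # (sp_cut and mp_cut are illustrative stand-ins for the rules of Table 2).
    fit = [f(x) for x in pop]
    f_min, f_max = min(fit), max(fit)
    t_sp = f_min + sp_cut * (f_max - f_min)
    t_mp = f_min + mp_cut * (f_max - f_min)
    for k in range(k_max):
        best = pop[min(range(n), key=fit.__getitem__)]
        amp = math.exp(-5.0 * k / k_max)  # assumed decaying vibration amplitude
        for i in range(n):
            x = pop[i]
            if fit[i] > t_mp:
                # Unstable phase (Step 3): assumed two-collision walk via elements j and m.
                j, m = rng.sample([q for q in range(n) if q != i], 2)
                new = [a + rng.random() * (b - a) + rng.random() * (c - b)
                       for a, b, c in zip(x, pop[j], pop[m])]
            elif fit[i] > t_sp:
                # Meta-stable phase (Step 4): assumed Gaussian-scaled move towards the best.
                z = rng.gauss(0.0, 1.0)
                new = [a + z * (b - a) for a, b in zip(x, best)]
            else:
                # Stable phase (Step 5): uniform jitter of size at most amp about x.
                new = [a + amp * (2.0 * rng.random() - 1.0) for a in x]
            # Clip the candidate to the search box.
            new = [min(max(v, lo), hi) for v, lo, hi in zip(new, lower, upper)]
            # Step 6: one-to-one greedy selection (minimization, strict improvement).
            f_new = f(new)
            if f_new < fit[i]:
                pop[i], fit[i] = new, f_new
    # Step 7: stop after k_max generations and return the best element found.
    i_best = min(range(n), key=fit.__getitem__)
    return pop[i_best], fit[i_best]


def sphere(x):
    return sum(v * v for v in x)


x_best, f_best = ptbo_sketch(sphere, [-5.0, -5.0], [5.0, 5.0])
print(x_best, f_best)
```

The sphere function is used only as a smoke test; because selection is greedy, the best fitness is non-increasing, but no claim is made here about how this sketch compares with the paper's algorithm or results.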